Prior-Driven NeRF: Prior Guided Rendering

Abstract

1. Introduction

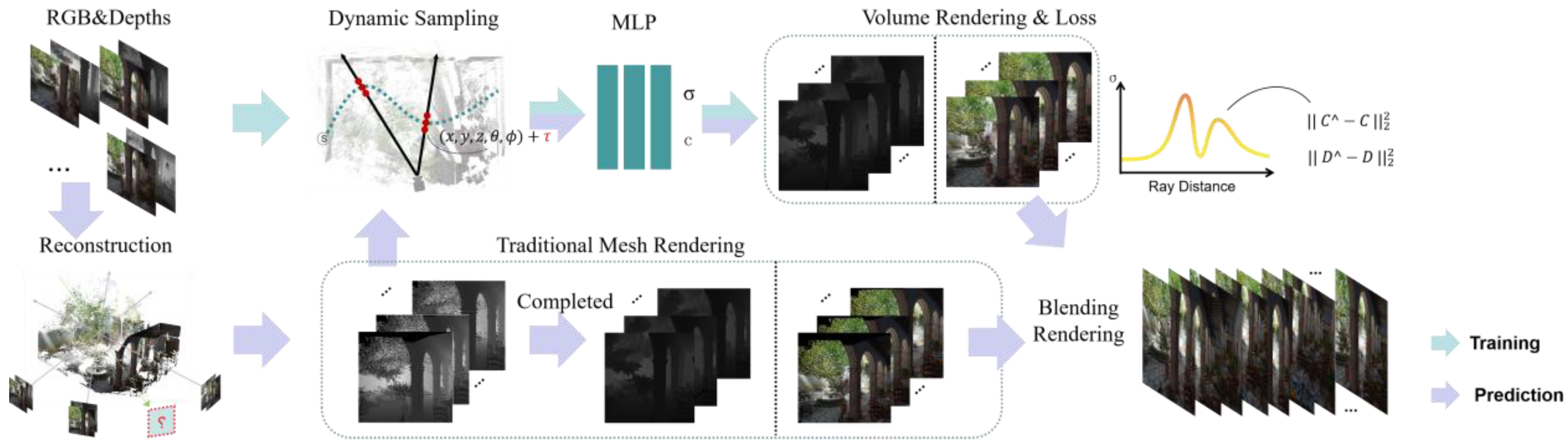

- A dynamic sampling method that eliminates many unnecessary sampling points: during both training and inference, points are sampled only near the surface of the object.

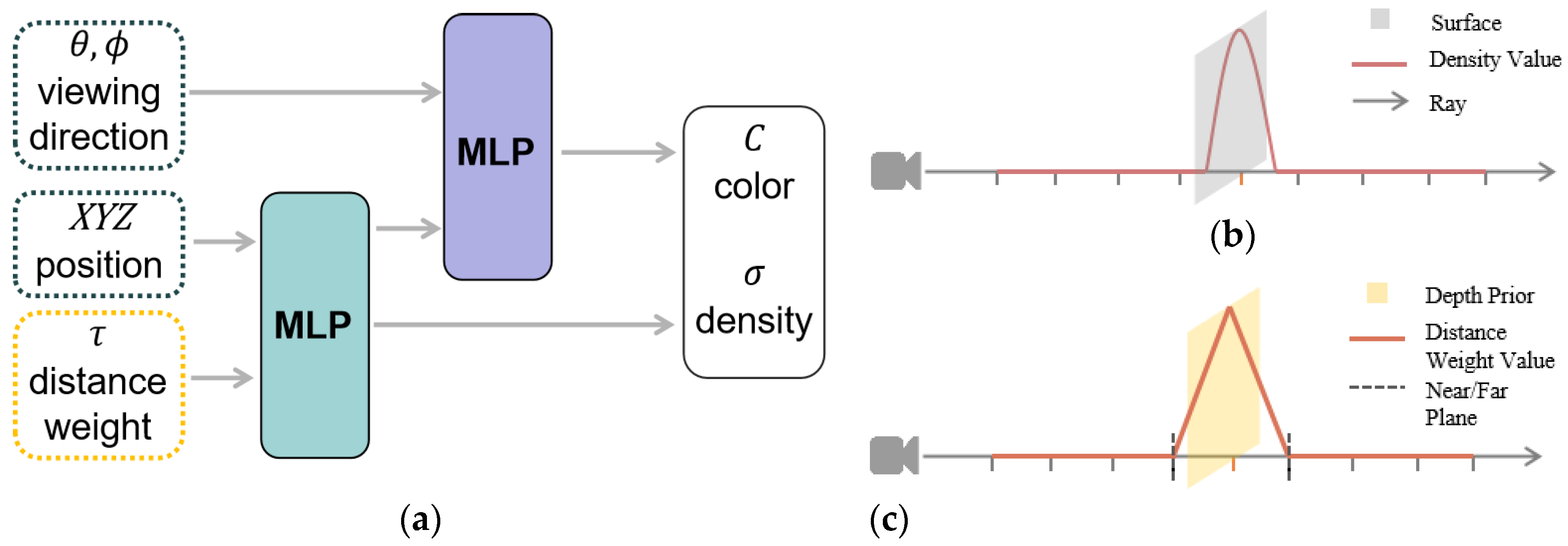

- A novel method that feeds a distance weight directly into the MLP as a depth prior. This additional information helps the model fit the surface more accurately.

- A fast hybrid rendering method that preserves image quality. It accelerates the rendering of novel views by exploiting the speed advantage of the TMRM.

- With sparse input views (11 images per scene) and few sampling points (eight per ray), our method outperforms other methods in terms of PSNR, SSIM, and LPIPS. In particular, it renders a single image nearly 3× faster than the other methods.
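
The first two contributions can be illustrated with a minimal sketch. It assumes a per-ray prior depth is already available, places eight samples in a narrow window around that depth, and attaches a distance weight to each sample as an extra MLP input. The function names, the window `half_width`, the Gaussian form of the weight, and `sigma` are illustrative assumptions, not the authors' formulation.

```python
import math


def sample_near_surface(prior_depth, n_samples=8, half_width=0.1, near=0.0):
    """Evenly spaced depths in a window around the prior depth.

    half_width is a hypothetical window size; the lower bound is
    clamped so that no sample falls in front of the near plane.
    """
    lo = max(near, prior_depth - half_width)
    hi = prior_depth + half_width
    if n_samples == 1:
        return [0.5 * (lo + hi)]
    step = (hi - lo) / (n_samples - 1)
    return [lo + i * step for i in range(n_samples)]


def distance_weights(depths, prior_depth, sigma=0.05):
    """Hypothetical Gaussian weight: 1 at the prior depth, decaying with distance."""
    return [math.exp(-0.5 * ((t - prior_depth) / sigma) ** 2) for t in depths]


def mlp_inputs(origin, direction, prior_depth, n_samples=8):
    """Pair each 3D sample point with its distance weight.

    In a full pipeline the point would be positionally encoded and the
    weight appended as an additional input feature (an assumption here).
    """
    depths = sample_near_surface(prior_depth, n_samples)
    weights = distance_weights(depths, prior_depth)
    points = [tuple(o + t * d for o, d in zip(origin, direction)) for t in depths]
    return list(zip(points, weights))


if __name__ == "__main__":
    for point, weight in mlp_inputs((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), prior_depth=2.0):
        print(point, round(weight, 3))
```

Concentrating the sample budget inside the window is what allows only eight points per ray; the trade-off is that a prior depth far from the true surface would leave the surface outside the sampled interval.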

2. Related Work

2.1. Scene Reconstruction

2.2. Depth and NeRF

2.3. NeRF Accelerations

3. Background

3.1. Volume Rendering Revisited

3.2. Positional Encoding

4. Method

4.1. Dynamic Sampling

4.2. Distance Weight

4.3. Loss Function

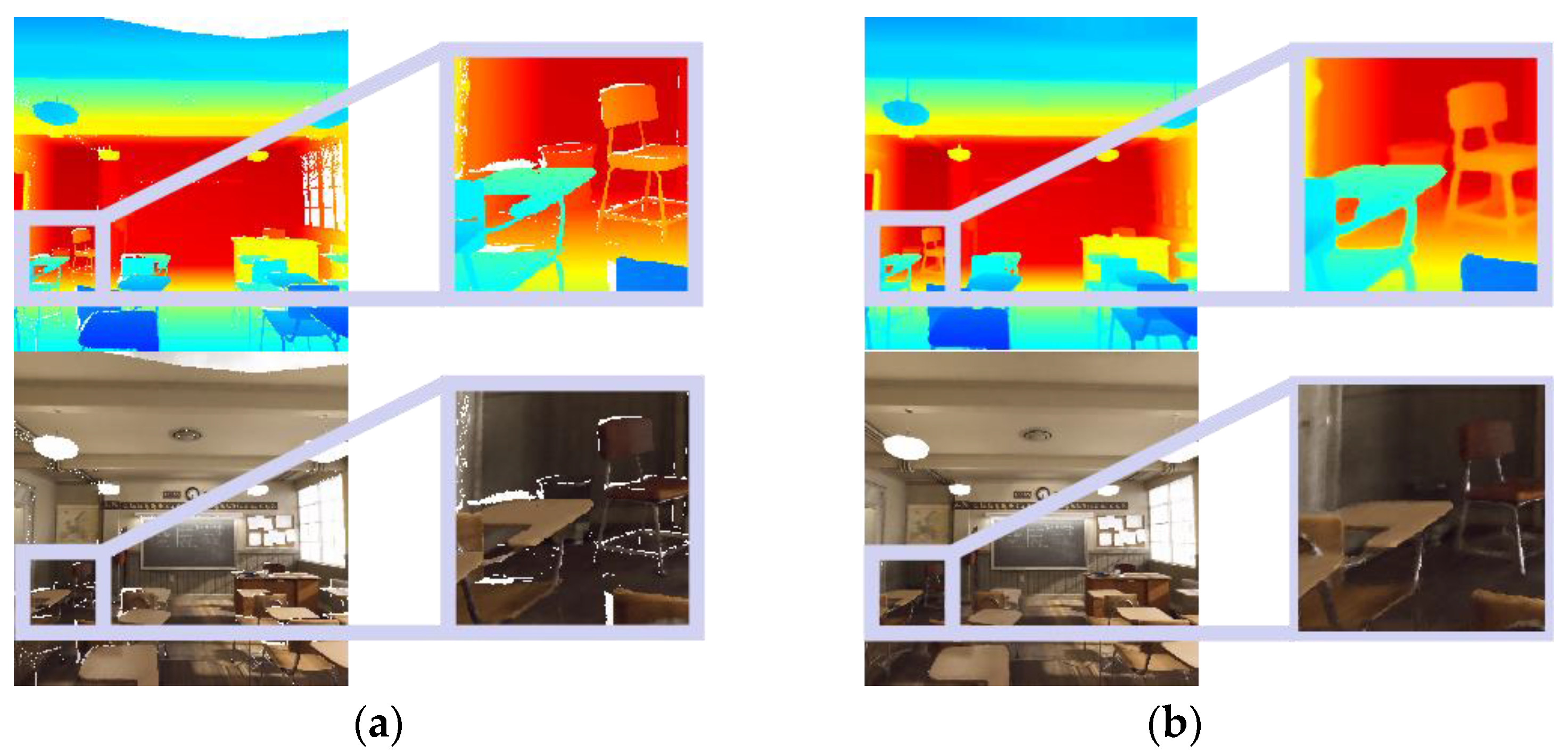

4.4. Depth Prior Acquisition and Hybrid Rendering

5. Results

5.1. Dataset and Set

5.2. Evaluation Metrics

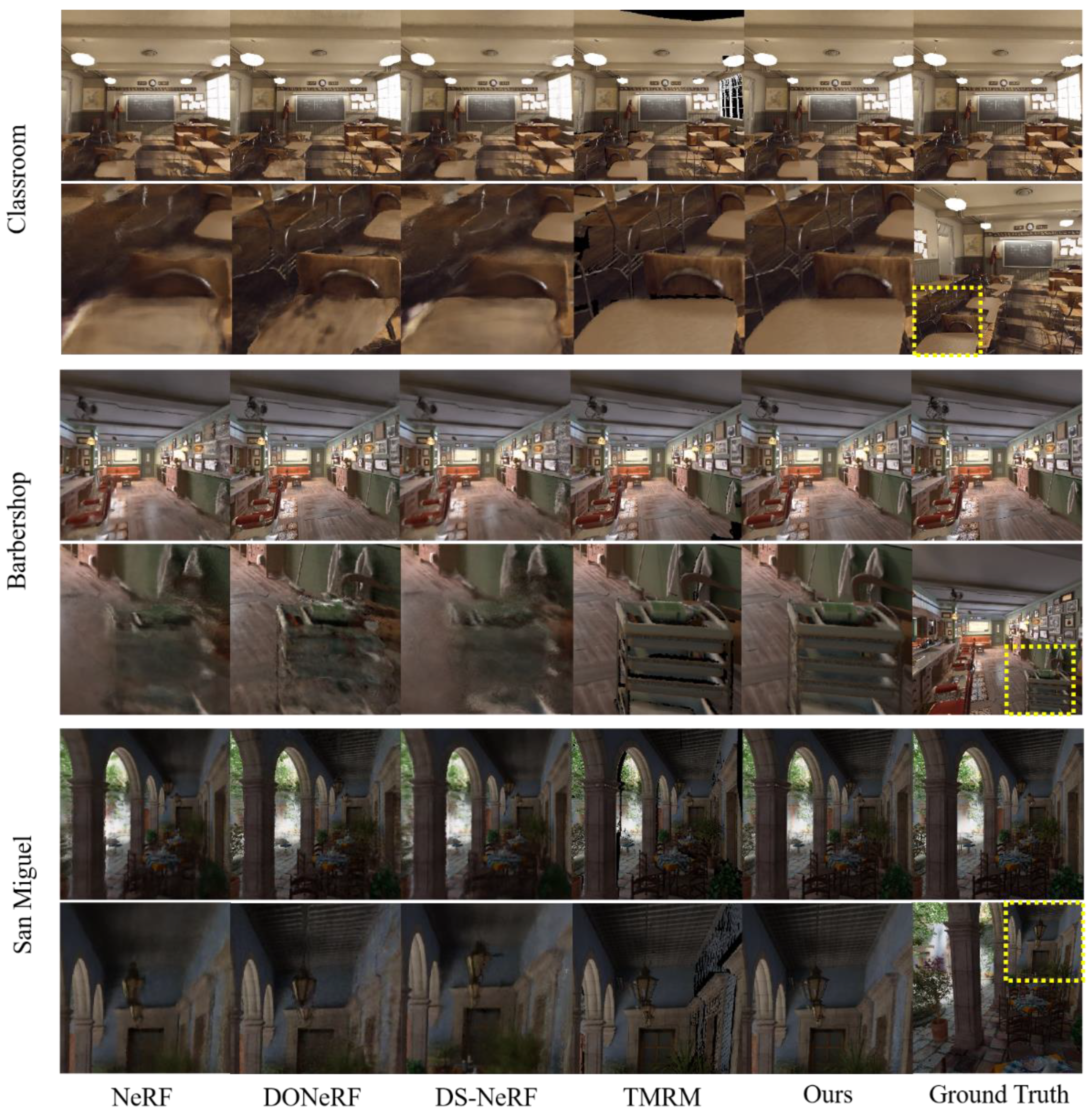

5.3. Comparisons

5.4. Ablation Study

6. Limitations

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| | Classroom | | | Barbershop | | | San Miguel | | | Average | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ | Time (s)↓ |
| NeRF (8 + 8) | 0.78 | 24.42 | 0.25 | 0.74 | 23.03 | 0.29 | 0.59 | 22.13 | 0.40 | 0.70 | 23.19 | 0.31 | 5.7 |
| DONeRF (8 + 8) | 0.83 | 25.11 | 0.16 | 0.80 | 24.04 | 0.20 | 0.68 | 22.97 | 0.27 | 0.77 | 24.04 | 0.21 | 4.8 |
| DS-NeRF (8 + 8) | 0.77 | 24.27 | 0.26 | 0.74 | 23.29 | 0.28 | 0.59 | 22.05 | 0.42 | 0.70 | 23.20 | 0.32 | 5.3 |
| TMRM | 0.80 | 17.92 | 0.15 | 0.81 | 22.61 | 0.14 | 0.74 | 21.44 | 0.15 | 0.78 | 20.66 | 0.15 | 0.6 |
| Ours (Hybrid) | 0.86 | 25.88 | 0.10 | 0.85 | 25.15 | 0.12 | 0.77 | 24.15 | 0.13 | 0.83 | 25.06 | 0.12 | 0.6 + 1.2 |
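
The timing column can be checked against the "nearly 3×" claim with simple arithmetic. The sketch below assumes that the two numbers in `0.6 + 1.2` are sequential stage times that add up to the total per-image cost of the hybrid method; the variable and function names are illustrative.

```python
# Per-image rendering times in seconds, copied from the comparison table.
baseline_times = {
    "NeRF (8 + 8)": 5.7,
    "DONeRF (8 + 8)": 4.8,
    "DS-NeRF (8 + 8)": 5.3,
}

# Assumption: the hybrid method's two stage times are summed.
hybrid_time = 0.6 + 1.2


def speedups(baselines, ours):
    """Ratio of each baseline's time to our time (higher means we are faster)."""
    return {name: t / ours for name, t in baselines.items()}


if __name__ == "__main__":
    for name, ratio in speedups(baseline_times, hybrid_time).items():
        print(f"{name}: {ratio:.2f}x")
```

Under this assumption the ratios fall between roughly 2.7× and 3.2× for the three NeRF-based baselines, while TMRM alone (0.6 s) remains faster than the hybrid method at a much lower PSNR.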

| | Classroom | | | Barbershop | | | San Miguel | | | Average | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Method | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ | SSIM↑ | PSNR↑ | LPIPS↓ |
| Ours (w/o D.S., Hybrid) | 0.75 | 23.85 | 0.28 | 0.72 | 22.84 | 0.29 | 0.51 | 20.52 | 0.47 | 0.66 | 22.40 | 0.35 |
| Ours (w/o D.W., Hybrid) | 0.81 | 25.23 | 0.18 | 0.80 | 24.89 | 0.20 | 0.67 | 23.26 | 0.29 | 0.76 | 24.46 | 0.22 |
| Ours (w/o Hybrid) | 0.83 | 25.78 | 0.16 | 0.80 | 24.95 | 0.18 | 0.68 | 23.32 | 0.27 | 0.77 | 24.68 | 0.20 |
| Ours | 0.86 | 25.88 | 0.10 | 0.85 | 25.15 | 0.12 | 0.77 | 24.15 | 0.13 | 0.83 | 25.06 | 0.12 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jin, T.; Zhuang, J.; Xiao, J.; Ge, J.; Ye, S.; Zhang, X.; Wang, J. Prior-Driven NeRF: Prior Guided Rendering. Electronics 2023, 12, 1014. https://doi.org/10.3390/electronics12041014