A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging

Abstract

1. Introduction

- We have constructed NewsPer, a fully manually annotated dataset of interpersonal relationships in news texts. Manual annotation reduces the impact of noisy data, and the dataset adds a high-quality resource for research on Chinese overlapping entity relation extraction.

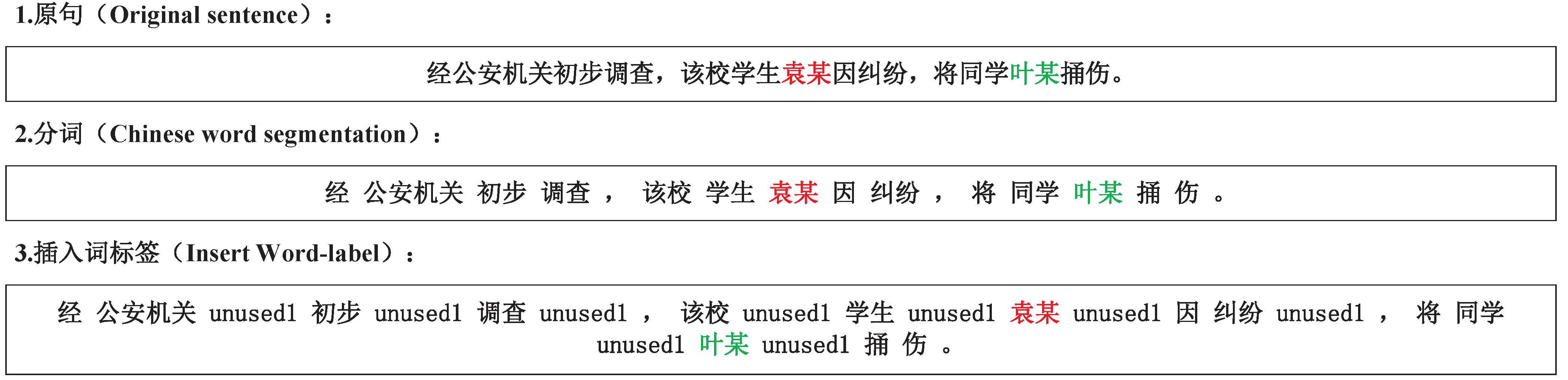

- We propose the Word-label method, which integrates the character features of Chinese text into the dependency graph built over the segmented words, so that the character features and dependency features of Chinese text are combined effectively. This addresses the mismatch between character and word embeddings in Chinese, as well as the feature differences caused by the different grammatical structures of Chinese and English.

- We use a GCN to integrate contextual semantic information with dependency structure information, and we compare the resulting model experimentally with other overlapping entity relation extraction models on the constructed dataset. The results show that the proposed model substantially improves Chinese overlapping entity relation extraction, reaching an F1 score of 0.7455 on NewsPer versus 0.7160 for the strongest baseline, SPN.
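As a rough illustration of how a GCN mixes contextual features with graph structure, the following NumPy sketch applies the widely used propagation rule of Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 H W). Whether the paper uses exactly this normalization is an assumption, as are the 768-dimensional input (a BERT-base hidden size), the random weights, the toy graph, and the helper names `normalize_adjacency` and `gcn_encode`. The two layers and the hidden size of 300 follow Appendix A.3. The final concatenation mirrors the "splicing" of contextual and graph features mentioned in the ablation study, shown here as one plausible fusion.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Symmetric normalization D^-1/2 (A + I) D^-1/2 (Kipf & Welling style)."""
    a_hat = adj + np.eye(adj.shape[0])      # self-loops keep each node's own features
    deg = a_hat.sum(axis=1)                 # node degrees, at least 1 because of self-loops
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_encode(x: np.ndarray, adj: np.ndarray, weights: list) -> np.ndarray:
    """Apply one GCN layer per weight matrix: H <- ReLU(A_norm @ H @ W)."""
    a_norm = normalize_adjacency(adj)
    h = x
    for w in weights:
        h = np.maximum(a_norm @ h @ w, 0.0)
    return h


rng = np.random.default_rng(0)
n_nodes, in_dim, hidden = 5, 768, 300           # 768 is assumed; 300 is from Appendix A.3
x = rng.standard_normal((n_nodes, in_dim))      # stand-in for contextual (BERT) node features
adj = np.zeros((n_nodes, n_nodes))
for i, j in [(0, 1), (1, 2), (1, 3), (3, 4)]:   # toy undirected graph
    adj[i, j] = adj[j, i] = 1.0
weights = [rng.standard_normal((in_dim, hidden)) * 0.02,   # layer 1
           rng.standard_normal((hidden, hidden)) * 0.02]   # layer 2 (2 layers, Appendix A.3)
h = gcn_encode(x, adj, weights)
fused = np.concatenate([x, h], axis=-1)         # splice context features with graph features
print(h.shape, fused.shape)                     # (5, 300) (5, 1068)
```

The symmetric normalization is the usual choice for undirected graphs, which is also the best-performing graph setting in the graph-setting experiment; a directed variant would more commonly use row normalization instead.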

2. Related Work

2.1. Relation Extraction

2.2. Entity Overlapping

2.3. Datasets for Relation Extraction

3. Proposed Method

3.1. Task Formulation

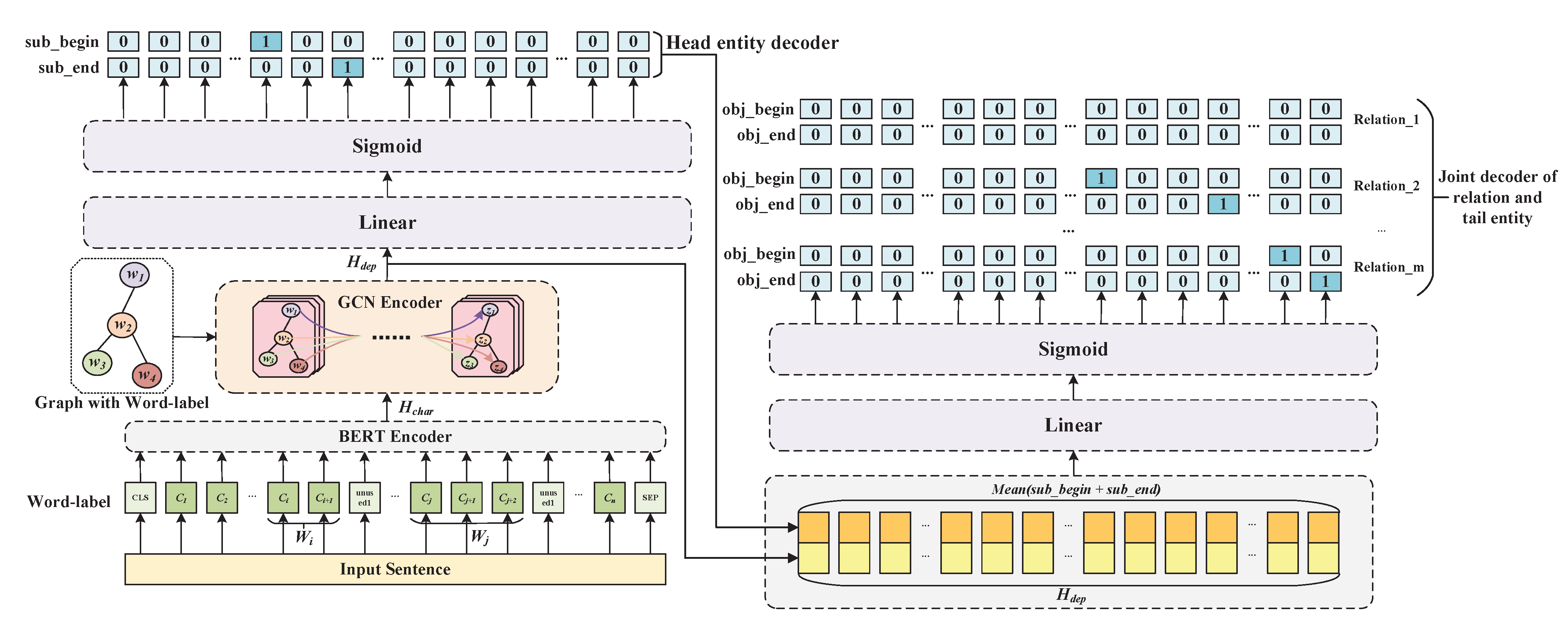

3.2. Encoder

3.2.1. BERT

3.2.2. GCN

3.3. Decoder

3.3.1. Head Entity Decoder

3.3.2. Joint Decoder of Relation and Tail Entity

4. Proposed Dataset

4.1. Data Collection

4.2. Data Processing

4.3. Data Features

4.4. Discussion

5. Experiments

5.1. Experimental Setting

5.2. Comparison Experiment and Result Analysis

- AttBLSTM [38] is a bidirectional LSTM network based on an attention mechanism that can automatically extract important words in text without using additional knowledge or NLP systems.

- CNN is a modification of the method of Zeng et al. [39]: sentence features are extracted with BERT and concatenated with the position features of the entity pair, and the output of the CNN is fed into a softmax classifier to predict the relation between the two tagged entities.

- GCN [40] is a deep learning model for graph-structured data. In this experiment, the text is represented with publicly available Chinese word embeddings (https://github.com/Embedding/Chinese-Word-Vectors; accessed on 10 February 2018); the text feature representation and the adjacency matrix are fed into the GCN, and its output is passed to a softmax classifier to predict the relation between the two tagged entities.

- CopyMTL [41] is a multitask learning framework that uses a conditional random field (CRF) to identify entities and a Seq2Seq model to extract relation triples. Its OneDecoder variant uses a single decoder with shared parameters to predict all triples, whereas MultiDecoder uses separate decoders with unshared parameters, each predicting one triple.

- CasRel [8] is a novel cascade binary tagging framework. In the first stage, it identifies all possible head entities; then, for each identified head entity, relation-specific taggers simultaneously identify all possible relations and the corresponding tail entities.

- SPN [28] formulates joint relation extraction as a set prediction problem. It uses a non-autoregressive Transformer-based decoder as the set generator and, combined with a bipartite matching loss, outputs all relation triples at once.
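The cascade binary tagging scheme of CasRel, on which the proposed model's head entity decoder and joint relation/tail entity decoder build, can be illustrated at decoding time. The sketch below is a simplified, framework-free illustration rather than the authors' code: it assumes the taggers have already produced per-token start and end probabilities, pairs each predicted start with the nearest predicted end at or after it (the heuristic used by CasRel), and uses a threshold of 0.5. `relation_tagger` stands in for the relation-specific tail taggers conditioned on a head span; `toy_tagger` and its probabilities are hypothetical, hand-set values.

```python
from typing import Callable, List, Sequence, Tuple

Span = Tuple[int, int]  # inclusive (start, end) token indices


def decode_spans(start_probs: Sequence[float], end_probs: Sequence[float],
                 threshold: float = 0.5) -> List[Span]:
    """Pair each start above the threshold with the nearest end at or after it."""
    starts = [i for i, p in enumerate(start_probs) if p > threshold]
    ends = [j for j, p in enumerate(end_probs) if p > threshold]
    spans = []
    for s in starts:
        following = [e for e in ends if e >= s]
        if following:
            spans.append((s, following[0]))
    return spans


def cascade_decode(tokens: Sequence[str],
                   head_start: Sequence[float], head_end: Sequence[float],
                   relation_tagger: Callable[[Span], Tuple[list, list]],
                   relations: Sequence[str],
                   threshold: float = 0.5) -> List[Tuple[str, str, str]]:
    """Stage 1 tags head entities; stage 2 tags tail entities per relation for each head."""
    triples = []
    for head in decode_spans(head_start, head_end, threshold):
        head_text = "".join(tokens[head[0]:head[1] + 1])
        # One start row and one end row per relation, conditioned on this head span.
        tail_starts, tail_ends = relation_tagger(head)
        for r, relation in enumerate(relations):
            for ts, te in decode_spans(tail_starts[r], tail_ends[r], threshold):
                triples.append((head_text, relation, "".join(tokens[ts:te + 1])))
    return triples


# Toy usage with hand-set probabilities (no trained model involved).
tokens = list("周星驰主演了功夫")
relations = ["演员", "导演"]


def toy_tagger(head: Span) -> Tuple[list, list]:
    """Hypothetical tagger: relation 0 marks 功夫 (tokens 6-7), relation 1 marks nothing."""
    n = len(tokens)
    starts = [[0.9 if i == 6 else 0.0 for i in range(n)], [0.0] * n]
    ends = [[0.9 if i == 7 else 0.0 for i in range(n)], [0.0] * n]
    return starts, ends


head_start = [0.9] + [0.0] * 7          # head entity 周星驰 starts at token 0
head_end = [0.0, 0.0, 0.9] + [0.0] * 5  # and ends at token 2
print(cascade_decode(tokens, head_start, head_end, toy_tagger, relations))
# [('周星驰', '演员', '功夫')]
```

Because tails are tagged separately for every (head, relation) combination, the same head can yield several triples (SEO) and the same entity pair can appear under several relations (EPO), which is why this scheme suits overlapping triples.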

5.3. Ablation Experiment and Result Analysis

- with regard to Word-label, the Word-label graph is not used; instead, the word-level features encoded by BERT are concatenated with the dependency features encoded by the GCN, and the result serves as the text representation for decoder tagging;

- with regard to the GCN Encoder, removing it reduces the model to CasRel, so CasRel’s results on the NewsPer test set are reported;

- with regard to the BERT Encoder, BERT is replaced by the publicly available Chinese word embeddings, and the text features are encoded with a BiLSTM, from which the decoder tags entity positions.

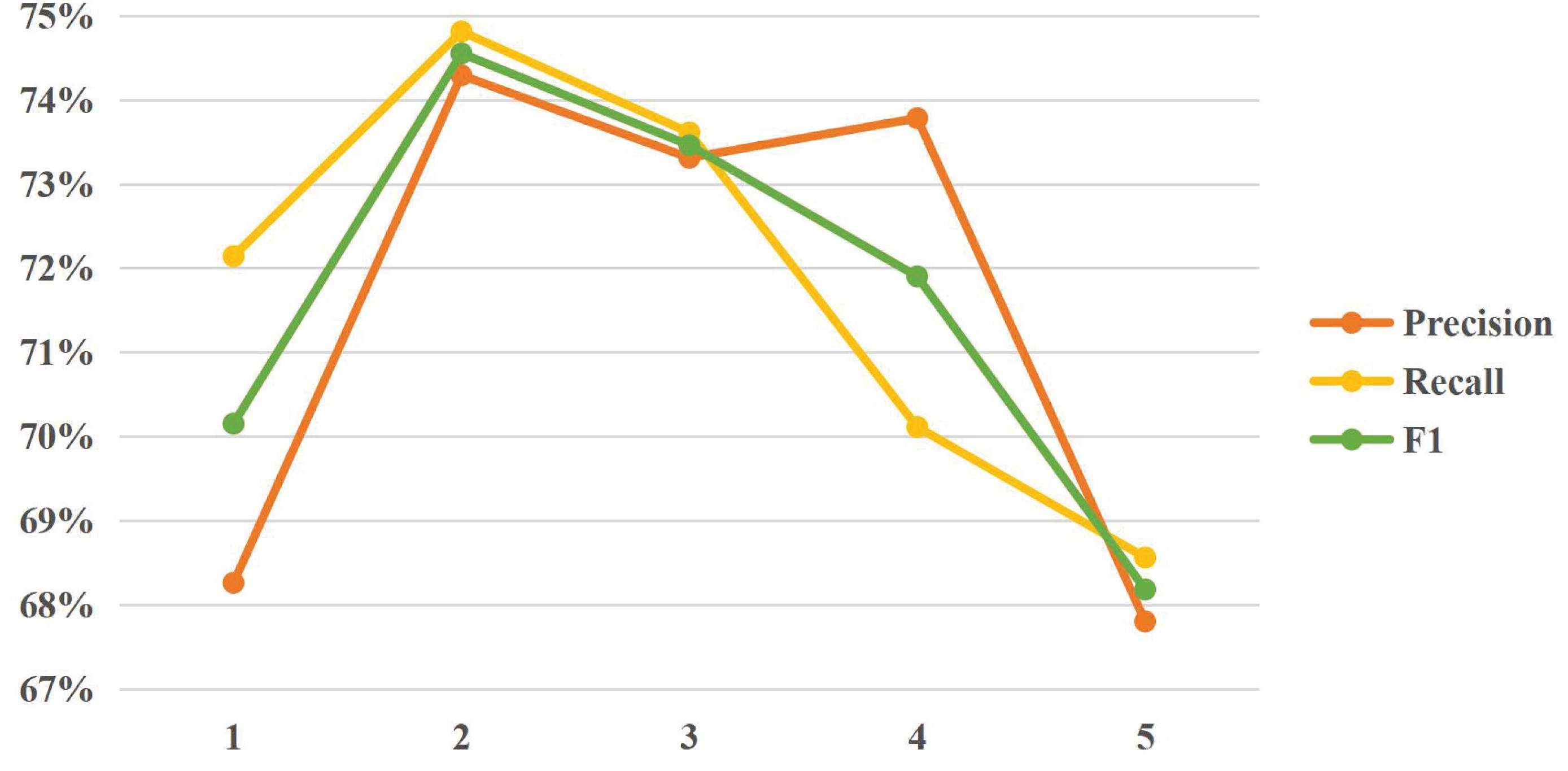

5.4. Effects of Graph Setting

- with regard to the directed graph, character–word edges point from the word node to the character node, and dependency edges point from the head word to the dependent word;

- with regard to the mixed graph, character–word edges point from the word node to the character node, and dependency edges are undirected; and

- with regard to the undirected graph, both character–word edges and dependency edges are undirected edges.
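The three graph settings can be made concrete with a small adjacency-matrix builder. This is an illustrative sketch under assumptions not stated in the text: character nodes come first and word nodes follow them, `adj[i][j] = 1` encodes an edge from node i to node j, and the word segmentation and dependency arcs for the example sentence 周星驰主演了功夫 ("Zhou Xingchi starred in Kung Fu Hustle") are hand-written rather than produced by a segmenter or parser. The function name `build_word_label_graph` is hypothetical.

```python
from typing import List, Sequence, Tuple

SETTINGS = ("directed", "mixed", "undirected")


def build_word_label_graph(words: Sequence[str], arcs: Sequence[Tuple[int, int]],
                           setting: str = "undirected") -> Tuple[List[str], List[List[int]]]:
    """Build a character+word graph.

    words: segmented words; arcs: (head_word_index, dependent_word_index) pairs.
    Nodes 0..n_char-1 are characters; nodes n_char.. are words.
    """
    if setting not in SETTINGS:
        raise ValueError(f"setting must be one of {SETTINGS}")
    chars = [c for w in words for c in w]
    n_char = len(chars)
    n = n_char + len(words)
    adj = [[0] * n for _ in range(n)]

    def add_edge(src: int, dst: int, undirected: bool) -> None:
        adj[src][dst] = 1
        if undirected:
            adj[dst][src] = 1

    # Character-word edges: word node -> each of its characters (both ways if undirected).
    offset = 0
    for w_idx, word in enumerate(words):
        for k in range(len(word)):
            add_edge(n_char + w_idx, offset + k, undirected=(setting == "undirected"))
        offset += len(word)

    # Dependency edges between word nodes: head -> dependent (both ways unless directed).
    for head, dep in arcs:
        add_edge(n_char + head, n_char + dep, undirected=(setting != "directed"))
    return chars, adj


words = ["周星驰", "主演", "了", "功夫"]
arcs = [(1, 0), (1, 2), (1, 3)]  # hand-written parse: 主演 heads the other three words
for s in SETTINGS:
    chars, adj = build_word_label_graph(words, arcs, s)
    print(s, len(adj), sum(map(sum, adj)))  # node count, number of directed edge entries
```

The example graph has 12 nodes (8 characters, 4 words), 8 character–word edges, and 3 dependency arcs, so the three settings yield 11, 14, and 22 nonzero adjacency entries respectively.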

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Types of Named Entities

| Type | Description |
|---|---|
| PEO | Person names, including nicknames and pseudonyms. |
| LOC | Geographic locations, including countries, cities, etc. |
| ORG | Organizations such as businesses, schools, and government departments; bands, groups, and similar entities are excluded. |
| nw | Works such as written books or articles, songs, and performed programs. |

Appendix A.2. Types of Named Relations

| ID | Name | Description |
|---|---|---|
| 0 | Unknown | Relationships that do not belong to any other relation type. |
| 1 | 亲属关系/夫妻 (Kinship/Spouse) | Established by marriage, including husband, wife, fiancé, and fiancée. |
| 2 | 亲属关系/祖孙 (Kinship/Grandparent–grandchild) | Kinship across generations, such as grandfather and grandmother. |
| 3 | 亲属关系/父母 (Kinship/Parent) | A person in the role of father or mother, including father, mother, a spouse’s parents, and adoptive parents. |
| 4 | 亲属关系/兄弟姐妹 (Kinship/Sibling) | Brothers and sisters, including both blood-related and non-blood-related siblings. |
| 5 | 亲属关系/亲戚 (Kinship/Relative) | Relatives on either side of the family, including maternal and paternal relatives, such as uncles and aunts. |
| 6 | 社交关系/情侣 (Social/Couple) | Two people who are attracted to and love each other, including boyfriends, girlfriends, lovers, and cohabiting partners. |
| 7 | 社交关系/前任 (Social/Ex-partner) | A person who previously held a certain position or status, including ex-boyfriends, ex-girlfriends, ex-husbands, and ex-wives. |
| 8 | 社交关系/朋友 (Social/Friend) | People with close friendships, including good friends, close female friends, confidants, etc. |
| 9 | 社交关系/同学 (Social/Schoolmate) | People who attend or attended the same school, including classmates and senior and junior schoolmates. |
| 10 | 社交关系/师生 (Social/Teacher–student) | The collective term for teacher and student; here it covers teachers, coaches, and masters. |
| 11 | 社交关系/合作 (Social/Cooperation) | People who work together, including customers, teammates, colleagues, associates, team members, etc. |
| 12 | 社交关系/竞争 (Social/Competition) | Competing with others for one’s own interests, such as rivals in competitions or at work. |
| 13 | 其他关系/工作于 (Other/Works at) | The place or organization where a person works or once worked. |
| 14 | 其他关系/学习于 (Other/Studies at) | The place or organization where a person studies or studied, such as the college they graduated from, the college they currently attend, or a place where they studied abroad. |
| 15 | 其他关系/出生于 (Other/Born in) | The place where a person was born, such as a country or city. |
| 16 | 其他关系/作品 (Other/Work) | Original intellectual achievements in literature, art, or science that a person produces through creative activity and that can be expressed in some form. |
| 17 | 其他关系/国籍 (Other/Nationality) | The country to which a person belongs. |

Appendix A.3. Implementation Details

| Parameter | Value |
|---|---|
| Batch size | 8 |
| Epochs | 200 |
| GCN layers | 2 |
| GCN hidden dimension | 300 |
| GCN learning rate | 0.0001 |
| BERT learning rate | 0.00001 |

References

- Liu, S.; Li, B.; Guo, Z.; Wang, B.; Chen, G. Review of Entity Relation Extraction. J. Inf. Eng. Univ. 2016, 17, 541–547.

- Aone, C.; Ramos-Santacruz, M. REES: A large-scale relation and event extraction system. In Proceedings of the Sixth Applied Natural Language Processing Conference, Seattle, WA, USA, 29 April–4 May 2000; pp. 76–83.

- Aitken, J.S. Learning Information Extraction Rules: An Inductive Logic Programming Approach. In Proceedings of the 15th European Conference on Artificial Intelligence, ECAI 2002, Lyon, France, 21–26 July 2002; pp. 355–359.

- Schutz, A.; Buitelaar, P. RelExt: A tool for relation extraction from text in ontology extension. In Proceedings of the International Semantic Web Conference, Galway, Ireland, 6–10 November 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 593–606.

- Rink, B.; Harabagiu, S.M. A generative model for unsupervised discovery of relations and argument classes from clinical texts. In Proceedings of the Conference on Empirical Methods in Natural Language Processing 2011, Edinburgh, UK, 27–31 July 2011.

- Thattinaphanich, S.; Prom-On, S. Thai Named Entity Recognition Using Bi-LSTM-CRF with Word and Character Representation. In Proceedings of the 4th International Conference on Information Technology 2019, Bali, Indonesia, 24–27 October 2019.

- Zeng, X.; Zeng, D.; He, S.; Kang, L.; Zhao, J. Extracting Relational Facts by an End-to-End Neural Model with Copy Mechanism. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics 2018, Melbourne, Australia, 15–20 July 2018.

- Wei, Z.; Su, J.; Wang, Y.; Tian, Y.; Chang, Y. A Novel Cascade Binary Tagging Framework for Relational Triple Extraction. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics 2020, Online, 5–10 July 2020.

- Yang, X.; Zhang, S.; Ou-Yang, C. A Comprehensive Review on Relation Extraction. J. Univ. S. China (Sci. Technol.) 2018, 1.

- Socher, R.; Huval, B.; Manning, C.D.; Ng, A.Y. Semantic Compositionality through Recursive Matrix-Vector Spaces. In Proceedings of the Joint Conference on Empirical Methods in Natural Language Processing & Computational Natural Language Learning 2012, Jeju, Republic of Korea, 12–14 July 2012.

- Sun, J.D.; Gu, X.S.; Li, Y.; Xu, W.R. Chinese entity relation extraction algorithms based on COAE2016 datasets. J. Shandong Univ. 2017, 52, 7.

- Gao, D.; Peng, D.L.; Liu, C. Entity Relation Extraction Based on CNN in Large-scale Text Data. J. Chin. Comput. Syst. 2018, 39, 5.

- Miwa, M.; Bansal, M. End-to-End Relation Extraction using LSTMs on Sequences and Tree Structures. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics 2016, Berlin, Germany, 7–12 August 2016.

- Li, F.; Zhang, M.; Fu, G.; Ji, D. A Neural Joint Model for Extracting Bacteria and Their Locations. In Advances in Knowledge Discovery and Data Mining; 2017; Volume 10235, pp. 15–26.

- Zheng, S.; Hao, Y.; Lu, D.; Bao, H.; Xu, J.; Hao, H.; Xu, B. Joint entity and relation extraction based on a hybrid neural network. Neurocomputing 2017, 257, 59–66.

- Chen, Y.J.; Hsu, Y.J. Chinese Relation Extraction by Multiple Instance Learning. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence 2016, Phoenix, AZ, USA, 12–17 February 2016.

- Rönnqvist, S.; Schenk, N.; Chiarcos, C. A recurrent neural model with attention for the recognition of Chinese implicit discourse relations. arXiv 2017, arXiv:1704.08092.

- Zhang, Q.Q.; Chen, M.D.; Liu, L.Z. An effective gated recurrent unit network model for Chinese relation extraction. In Proceedings of the 2017 2nd International Conference on Wireless Communication and Network Engineering, WCNE 2017, Xiamen, China, 24–25 December 2017; pp. 275–280.

- Xu, J.; Wen, J.; Sun, X.; Su, Q. A discourse-level named entity recognition and relation extraction dataset for Chinese literature text. arXiv 2017, arXiv:1711.07010.

- Zhang, Y.; Yang, J. Chinese NER using lattice LSTM. arXiv 2018, arXiv:1805.02023.

- Li, Z.; Ding, N.; Liu, Z.; Zheng, H.; Shen, Y. Chinese Relation Extraction with Multi-Grained Information and External Linguistic Knowledge. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics 2019, Florence, Italy, 28 July–2 August 2019; pp. 4377–4386.

- Wan, H.; Moens, M.F.; Luyten, W.; Zhou, X.; Mei, Q.; Liu, L.; Tang, J. Extracting relations from traditional Chinese medicine literature via heterogeneous entity networks. J. Am. Med. Inform. Assoc. 2016, 23, 356–365.

- Jin, Y.; Zhang, W.; He, X.; Wang, X.; Wang, X. Syndrome-aware herb recommendation with multi-graph convolution network. In Proceedings of the 2020 IEEE 36th International Conference on Data Engineering 2020, Dallas, TX, USA, 20–24 April 2020; pp. 145–156.

- Ruan, C.; Ma, J.; Wang, Y.; Zhang, Y.; Yang, Y. Discovering regularities from traditional Chinese medicine prescriptions via bipartite embedding model. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence 2019, Macao, China, 10–16 August 2019.

- Zeng, X.; He, S.; Zeng, D.; Liu, K.; Liu, S.; Zhao, J. Learning the Extraction Order of Multiple Relational Facts in a Sentence with Reinforcement Learning. In Proceedings of the Empirical Methods in Natural Language Processing 2019, Hong Kong, China, 3–7 November 2019.

- Fu, T.J.; Li, P.H.; Ma, W.Y. GraphRel: Modeling Text as Relational Graphs for Joint Entity and Relation Extraction. In Proceedings of the Meeting of the Association for Computational Linguistics 2019, Florence, Italy, 28 July–2 August 2019.

- Wang, Y.; Yu, B.; Zhang, Y.; Liu, T.; Sun, L. TPLinker: Single-stage joint extraction of entities and relations through token pair linking. arXiv 2020, arXiv:2010.13415.

- Sui, D.; Chen, Y.; Liu, K.; Zhao, J.; Liu, S. Joint entity and relation extraction with set prediction networks. arXiv 2020, arXiv:2011.01675.

- Doddington, G.R.; Mitchell, A.; Przybocki, M.A.; Ramshaw, L.A.; Strassel, S.M.; Weischedel, R.M. The automatic content extraction (ACE) program: Tasks, data, and evaluation. In Proceedings of LREC 2004, Lisbon, Portugal, 26–28 May 2004; pp. 837–840.

- Song, Z.; Maeda, K.; Walker, C.; Strassel, S. ACE 2007 Multilingual Training Corpus. Available online: https://catalog.ldc.upenn.edu/LDC2014T18 (accessed on 15 September 2014).

- Hendrickx, I.; Kim, S.N.; Kozareva, Z.; Nakov, P.; Szpakowicz, S. SemEval-2010 Task 8: Multi-Way Classification of Semantic Relations between Pairs of Nominals. In Proceedings of the Association for Computational Linguistics 2010, Uppsala, Sweden, 11–16 July 2010.

- Zhang, Y.; Zhong, V.; Chen, D.; Angeli, G.; Manning, C.D. Position-aware Attention and Supervised Data Improve Slot Filling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing 2017, Copenhagen, Denmark, 7–11 September 2017.

- Riedel, S.; Yao, L.; McCallum, A.K. Modeling relations and their mentions without labeled text. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, Barcelona, Spain, 20–24 September 2010; pp. 148–163.

- Li, S.; He, W.; Shi, Y.; Jiang, W.; Liang, H.; Jiang, Y.; Zhu, Y. DuIE: A large-scale Chinese dataset for information extraction. In Proceedings of the CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; pp. 791–800.

- Wang, H.; He, Z.; Ma, J.; Chen, W.; Zhang, M. IPRE: A dataset for inter-personal relationship extraction. In Proceedings of the CCF International Conference on Natural Language Processing and Chinese Computing, Dunhuang, China, 9–14 October 2019; pp. 103–115.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. Available online: https://www.cs.ubc.ca/amuham01/LING530/papers/radford2018improving.pdf (accessed on 10 February 2018).

- Zhang, J.L.; Zhang, Y.F.; Wang, M.Q.; Huang, Y.J. Joint Extraction of Chinese Entity Relations Based on Graph Convolutional Neural Network. Comput. Eng. 2021, 47, 103–111.

- Zhou, P.; Shi, W.; Tian, J.; Qi, Z.; Li, B.; Hao, H.; Xu, B. Attention-Based Bidirectional Long Short-Term Memory Networks for Relation Classification. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics 2016, Berlin, Germany, 7–12 August 2016.

- Zeng, D.; Liu, K.; Lai, S.; Zhou, G.; Zhao, J. Relation classification via convolutional deep neural network. In Proceedings of the 25th International Conference on Computational Linguistics 2014, Dublin, Ireland, 23–29 August 2014; pp. 2335–2344.

- Zhang, Y.; Qi, P.; Manning, C.D. Graph convolution over pruned dependency trees improves relation extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 2205–2215.

- Zeng, D.; Zhang, H.; Liu, Q. CopyMTL: Copy Mechanism for Joint Extraction of Entities and Relations with Multi-Task Learning. In Proceedings of the AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 9507–9514.

| Type | Text | Triples |
|---|---|---|
| Normal [7] | 周星驰中学就读于香港圣玛利奥英文书院。 (Zhou Xingchi studied at St. Mary’s English College in Hong Kong as a secondary school student.) | 〈周星驰,就读于,香港圣玛利奥英文书院〉 〈Zhou Xingchi, studied at, St. Mary’s English College in Hong Kong〉 |
| SEO [7] | 周星驰主演了《喜剧之王》和《大话西游》。 (Zhou Xingchi starred in “King of Comedy” and “A Chinese Odyssey”.) | 〈周星驰,演员,喜剧之王〉 〈Zhou Xingchi, actor, King of Comedy〉 〈周星驰,演员,大话西游〉 〈Zhou Xingchi, actor, A Chinese Odyssey〉 |
| EPO [7] | 由周星驰导演并主演的《功夫》于近期上映。 (Directed by and starring Zhou Xingchi, “Kung Fu Hustle” was recently released.) | 〈周星驰,演员,功夫〉 〈Zhou Xingchi, actor, Kung Fu Hustle〉 〈周星驰,导演,功夫〉 〈Zhou Xingchi, director, Kung Fu Hustle〉 |
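The three categories in the table above, following Zeng et al. [7], can be assigned mechanically from a sentence's gold triples. The sketch below is a minimal illustration under two assumptions of its own: entity pairs are compared without regard to direction, and a sentence may be labeled both EPO and SEO. The function name `overlap_types` is hypothetical; the example triples are taken from the table.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

Triple = Tuple[str, str, str]  # (head entity, relation, tail entity)


def overlap_types(triples: Iterable[Triple]) -> Set[str]:
    """Label a sentence's triples as Normal, EPO, SEO, or both EPO and SEO."""
    # Entity pairs compared without direction (an assumption of this sketch).
    pairs = [tuple(sorted((head, tail))) for head, _, tail in triples]
    labels = set()
    # EPO: the same entity pair takes part in more than one triple.
    if len(pairs) != len(set(pairs)):
        labels.add("EPO")
    # SEO: one entity is shared by two different entity pairs.
    entity_counts = Counter(e for pair in set(pairs) for e in set(pair))
    if any(count > 1 for count in entity_counts.values()):
        labels.add("SEO")
    return labels or {"Normal"}


examples = {
    "Normal": [("周星驰", "就读于", "香港圣玛利奥英文书院")],
    "SEO": [("周星驰", "演员", "喜剧之王"), ("周星驰", "演员", "大话西游")],
    "EPO": [("周星驰", "演员", "功夫"), ("周星驰", "导演", "功夫")],
}
for expected, triples in examples.items():
    print(expected, overlap_types(triples))
```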

| Split | Sentences | Single-Relation Sentences | Overlapping-Entity Sentences |
|---|---|---|---|
| Train | 7057 | 5400 | 1657 |
| Dev | 2016 | 1526 | 490 |
| Test | 1009 | 734 | 275 |
| All | 10,082 | 7660 | 2422 |

| Triples per Sentence | Train | Dev | Test | All |
|---|---|---|---|---|
| 1 | 5504 | 1555 | 746 | 7805 |
| 2 | 989 | 275 | 172 | 1436 |
| 3 | 377 | 124 | 56 | 557 |
| 4 | 97 | 23 | 16 | 136 |
| 5 | 39 | 16 | 11 | 66 |
| 6 | 32 | 13 | 7 | 52 |
| ≥7 | 19 | 10 | 1 | 30 |
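The counts in the two dataset statistics tables can be cross-checked with a few lines of arithmetic: single-relation plus overlapping-entity sentences should equal each split's sentence total, the Train, Dev, and Test splits should add up to All, and the triple-count buckets should sum to each split's sentence count. The sketch transcribes the numbers from the tables; the check itself is illustrative and not part of the paper. It treats the last bucket as covering seven or more triples, and also reports the share of overlapping-entity sentences per split.

```python
# Sentence counts per split: (all sentences, single-relation, overlapping-entity)
SPLITS = {
    "Train": (7057, 5400, 1657),
    "Dev": (2016, 1526, 490),
    "Test": (1009, 734, 275),
    "All": (10082, 7660, 2422),
}
# Sentences bucketed by number of triples: (Train, Dev, Test, All); "7+" = seven or more
TRIPLE_BUCKETS = {
    "1": (5504, 1555, 746, 7805),
    "2": (989, 275, 172, 1436),
    "3": (377, 124, 56, 557),
    "4": (97, 23, 16, 136),
    "5": (39, 16, 11, 66),
    "6": (32, 13, 7, 52),
    "7+": (19, 10, 1, 30),
}


def check_tables() -> list:
    """Return a list of inconsistencies found in the transcribed tables (empty if none)."""
    problems = []
    for name, (total, single, overlap) in SPLITS.items():
        if single + overlap != total:
            problems.append(f"{name}: single + overlapping != total")
    for k in range(3):
        if sum(SPLITS[s][k] for s in ("Train", "Dev", "Test")) != SPLITS["All"][k]:
            problems.append(f"splits do not add up in column {k}")
    for bucket, (tr, dv, te, al) in TRIPLE_BUCKETS.items():
        if tr + dv + te != al:
            problems.append(f"bucket {bucket}: splits do not add up")
    for col, split in enumerate(("Train", "Dev", "Test", "All")):
        if sum(row[col] for row in TRIPLE_BUCKETS.values()) != SPLITS[split][0]:
            problems.append(f"{split}: triple buckets do not sum to sentence count")
    return problems


print(check_tables())  # []
for split, (total, _, overlap) in SPLITS.items():
    print(f"{split}: {overlap / total:.1%} overlapping-entity sentences")
```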

| Model | Precision | Recall | F1 |
|---|---|---|---|
| AttBLSTM | 0.4412 | 0.4920 | 0.4652 |
| CNN | 0.5595 | 0.5480 | 0.5537 |
| GCN | 0.5819 | 0.5832 | 0.5825 |
| CopyMTL (OneDecoder) | 0.4296 | 0.3792 | 0.4028 |
| CopyMTL (MultiDecoder) | 0.4673 | 0.4017 | 0.4320 |
| CasRel | 0.7105 | 0.6596 | 0.6841 |
| SPN | 0.7168 | 0.7153 | 0.7160 |
| DepCasRel | 0.7429 | 0.7481 | 0.7455 |
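Assuming F1 is the usual harmonic mean of the reported precision and recall, F1 = 2PR/(P + R), the F1 column of the comparison table can be recomputed from the other two columns as a consistency check. The sketch below transcribes the table; the check is illustrative and is not the paper's evaluation code. The two CopyMTL rows are labeled generically as variants A and B.

```python
# (precision, recall, reported F1) transcribed from the model comparison table
RESULTS = {
    "AttBLSTM": (0.4412, 0.4920, 0.4652),
    "CNN": (0.5595, 0.5480, 0.5537),
    "GCN": (0.5819, 0.5832, 0.5825),
    "CopyMTL variant A": (0.4296, 0.3792, 0.4028),
    "CopyMTL variant B": (0.4673, 0.4017, 0.4320),
    "CasRel": (0.7105, 0.6596, 0.6841),
    "SPN": (0.7168, 0.7153, 0.7160),
    "DepCasRel": (0.7429, 0.7481, 0.7455),
}


def f1(p: float, r: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    return 2 * p * r / (p + r) if p + r else 0.0


for model, (p, r, reported) in RESULTS.items():
    recomputed = f1(p, r)
    print(f"{model:18s} reported={reported:.4f} recomputed={recomputed:.4f} "
          f"diff={recomputed - reported:+.4f}")
```

Every recomputed value agrees with the reported F1 to within rounding of the fourth decimal place.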

| Condition | Precision | Recall | F1 |
|---|---|---|---|
| DepCasRel | 0.7429 | 0.7481 | 0.7455 |
| -Word-label | 0.4684 | 0.6660 | 0.5500 |
| -GCN Encoder | 0.7105 | 0.6596 | 0.6841 |
| -BERT Encoder | 0.6144 | 0.4484 | 0.5185 |

| Condition | Precision | Recall | F1 |
|---|---|---|---|
| Directed graph & Word-label | 0.7244 | 0.7193 | 0.7218 |
| Mixed graph & Word-label | 0.7327 | 0.7291 | 0.7309 |
| Undirected graph & Word-label | 0.7429 | 0.7481 | 0.7455 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tuo, M.; Yang, W.; Wei, F.; Dai, Q. A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging. Electronics 2023, 12, 1013. https://doi.org/10.3390/electronics12041013

Tuo M, Yang W, Wei F, Dai Q. A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging. Electronics. 2023; 12(4):1013. https://doi.org/10.3390/electronics12041013

Chicago/Turabian Style: Tuo, Meimei, Wenzhong Yang, Fuyuan Wei, and Qicai Dai. 2023. "A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging" Electronics 12, no. 4: 1013. https://doi.org/10.3390/electronics12041013

APA Style: Tuo, M., Yang, W., Wei, F., & Dai, Q. (2023). A Novel Chinese Overlapping Entity Relation Extraction Model Using Word-Label Based on Cascade Binary Tagging. Electronics, 12(4), 1013. https://doi.org/10.3390/electronics12041013