Abstract

With the increasing interest in space science exploration, the number of spacecraft in Earth’s orbit has been steadily increasing. To ensure the safety and operational integrity of active satellites, advanced surveillance and early warning of unknown space objects such as space debris are crucial. Traditional threshold-based filters for space object detection rely heavily on manual settings, which leads to poor flexibility, high false alarm rates, and weak detection capability at low signal-to-noise ratios. Detecting faint, small objects against a complex starry background therefore remains a formidable challenge. To address this challenge, we propose a novel, intelligent, and accurate detection method called You Only Look Once for Space Object Detection (SOD-YOLO). Our method includes the following novel modules: Multi-Channel Histogram Truncation (MHT) enhances feature representation; CD-ELAN, based on Central Differential Convolution (CDC), facilitates learning contrast information; the Space-to-Depth (SPD) module replaces the pooling layer to prevent the loss of small object features; a simple, parameter-free attention module (SimAM) expands the receptive field for global contextual information; and Alpha-EIoU optimizes the loss function for efficient training. Experiments on our SSOD dataset show that SOD-YOLO can detect objects with a signal-to-noise ratio as low as 2.08, improves AP by 11.2% compared with YOLOv7, and increases detection speed by 42.7%. Evaluation on the Spot the Geosynchronous Orbit Satellites (SpotGEO) dataset shows that SOD-YOLO performs comparably to state-of-the-art methods, affirming its generalization and precision.

1. Introduction

Over the past few decades, space technology has developed rapidly, and tens of thousands of new space assets are being launched globally [1]. With the expansion of human space exploration, the growing population of Resident Space Objects (RSOs) has become a global threat, and RSO detection has become a critical issue in recent years [2]. According to an analysis of space debris in Earth’s orbit by the US Space Surveillance Network (SSN), there are more than 5000 pieces of space debris larger than 1 m and about 34,000 pieces larger than 10 cm, while only about 2000 tracked objects are normally operating satellites and other payloads. Only 31.3% of the catalogued space objects are spacecraft; about 16.6% are abandoned rocket bodies and other mission-related objects, and the remaining 52.1% are operational debris and fragmentation debris resulting from spacecraft collisions and disintegration [1,3]. Hence, there is an urgent need to establish space surveillance stations that enable the detection, identification, and avoidance of space hazards as an important part of space situational awareness (SSA) systems [4]. As a critical technology, space object detection based on optical imaging characteristics has received wide attention.

Currently, space object detection technology is mainly divided into ground-based detection methods and space-based detection methods [5]. Because space-based observation is costly and technically challenging, ground-based detection methods are more common [6]. However, because of the long observation distance, space objects typically occupy fewer than 20 pixels. Moreover, in astronomical images obtained from ground-based observations, the objects are often relatively dim owing to interference from the celestial background. Additionally, because of the inherent optical and mechanical structure of telescopes, vignetting and stray light inevitably make the image background non-uniform [5]. Space objects at different positions in an image are therefore affected to different degrees, which poses a further challenge to detecting space objects in astronomical images [7].

Several algorithms have been developed to address these challenges. Bertin et al. [8] proposed a method based on high-pass filtering and connected-domain thresholding for space object detection; it relies heavily on manual threshold settings, leading to poor flexibility and high false alarm rates. Sun et al. [9,10] introduced a morphological method and a median filtering algorithm for space debris detection. Nevertheless, these methods may struggle to detect small or faint objects when the signal-to-noise ratio is low, and they depend on the selection of appropriate morphological structuring elements. Pradhan et al. [11] used the 1.3 m aperture Devasthal Fast Optical Telescope (DFOT) to capture images of space objects and successfully identified 50 cm-sized space debris in a 1000 km orbit using long exposures. However, this method requires a large-aperture telescope that may not be easily accessible and may not be suitable for detecting smaller debris. Liu et al. [12] proposed a geometric dual multi-object detection method that is robust to initialization and can detect multiple space objects simultaneously. However, this method may not be suitable for complex environments with overlapping or closely spaced objects. Ohsawa [13] presented the Tracee algorithm, a moving-object detection approach that extracts linearly aligned line segments in three-dimensional space to accelerate track recognition. However, this algorithm may be limited in accurately detecting and tracking objects when the background noise changes sharply.
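To make the role of manual settings concrete, the following is a minimal NumPy sketch of the generic high-pass filtering plus connected-domain thresholding pipeline described above. It is not the implementation of Bertin et al. [8]; the box-filter background estimate, the median-absolute-deviation noise estimate, and the parameter names `k`, `bg_size`, and `min_area` are illustrative assumptions. Each of these parameters has to be tuned by hand, which is the inflexibility noted above.

```python
import numpy as np
from collections import deque


def box_blur(img, size):
    """Mean filter computed with a summed-area table (a simple low-pass filter)."""
    pad = size // 2
    p = np.pad(img, pad, mode="reflect")
    c = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    h, w = img.shape
    s = (c[size:size + h, size:size + w] - c[:h, size:size + w]
         - c[size:size + h, :w] + c[:h, :w])
    return s / (size * size)


def threshold_detect(img, k=5.0, bg_size=15, min_area=2):
    """Hypothetical threshold-based detector returning (row, col, area) per object."""
    img = np.asarray(img, dtype=np.float64)
    hp = img - box_blur(img, bg_size)  # high-pass: remove the smooth sky background
    # Robust noise estimate from the median absolute deviation.
    sigma = 1.4826 * np.median(np.abs(hp - np.median(hp)))
    mask = hp > k * sigma  # manual threshold: k is hand-tuned
    h, w = mask.shape
    seen = np.zeros_like(mask)
    objects = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        # Flood-fill one 8-connected domain of above-threshold pixels.
        seen[y0, x0] = True
        queue, pixels = deque([(y0, x0)]), []
        while queue:
            y, x = queue.popleft()
            pixels.append((y, x))
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
        if len(pixels) >= min_area:  # drop isolated noise pixels
            ys, xs = zip(*pixels)
            objects.append((float(np.mean(ys)), float(np.mean(xs)), len(pixels)))
    return objects


rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:64, 0:64]
sky = 1000.0 + 0.2 * xx + rng.normal(0.0, 5.0, (64, 64))  # sloped, noisy background
sky[30:32, 40:42] += 100.0  # a 2x2 synthetic object
objs = threshold_detect(sky, k=5.0)
```

On this synthetic frame the 2×2 object is recovered at its true centroid. Lowering `k` or `min_area` starts admitting noise peaks as detections, while raising `k` eventually discards fainter objects, so the same settings rarely suit every frame.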

With the rapid development of artificial intelligence technology, filtering-based object detection algorithms are gradually being replaced by deep learning algorithms. Deep learning-based object detection algorithms currently fall into two main categories: two-stage algorithms represented by Spatial Pyramid Pooling Net (SPP-Net) [14] and Faster Region-based Convolutional Neural Network (Faster R-CNN) [15], and one-stage algorithms represented by the You Only Look Once (YOLO) series [16] and the Single Shot MultiBox Detector (SSD) [17]. One-stage algorithms generally offer better real-time performance because a single network both identifies and locates the objects [18]. However, most existing deep learning algorithms are designed for natural images, and their application scenarios lean toward object recognition and classification in high-resolution color images. Their performance is inadequate when detecting small objects in astronomical images. Furthermore, these astronomical images are typically 16-bit grayscale images with pixel values ranging from 0 to 65,535, yet the brightness of the objects is often concentrated within a narrow range. If the data are normalized directly, dim objects may be drowned out.
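The NumPy sketch below illustrates the last point with assumed synthetic values (not taken from SSOD): a flat sky at 1000 ADU, a faint object 60 ADU above it, and one saturated star at 65,535. Min-max scaling to 8 bits maps the faint object to the same gray level as the sky.

```python
import numpy as np


def minmax_to_uint8(img16):
    """Linearly rescale an image to [0, 255] using its own minimum and maximum."""
    img = img16.astype(np.float64)
    lo, hi = img.min(), img.max()
    return np.round((img - lo) / (hi - lo) * 255.0).astype(np.uint8)


frame = np.full((32, 32), 1000, dtype=np.uint16)  # flat sky background (ADU)
frame[16, 16] = 1060  # faint object: +60 ADU above the sky
frame[0, 0] = 65535   # one saturated star at the top of the 16-bit range

out = minmax_to_uint8(frame)
print(out[16, 16], out[5, 5], out[0, 0])  # faint object, sky, star -> prints: 0 0 255
```

With the star setting the upper limit, one 8-bit step corresponds to about 253 ADU, so the 60 ADU contrast of the faint object falls below a single quantization level.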

Therefore, with an in-depth study of the characteristics of astronomical images and deep learning theory, we propose a detection algorithm called SOD-YOLO (You Only Look Once for Space Object Detection) based on local contrast enhancement and global context information extraction. The main contributions of this paper can be summarized as follows:

(1) We introduce a new algorithm, SOD-YOLO, for space object detection. It uses a multi-channel histogram truncation model to process 16-bit astronomical image data and enhance local contrast. To the best of our knowledge, this is the first deep learning model specifically designed for raw astronomical image data, and it offers significant advantages over other deep learning methods for space object detection. We expect this work to facilitate the development and benchmarking of object detection algorithms for astronomical images.

(2) Based on central differential convolution (CDC) [19], we reconstruct the Efficient Layer Aggregation Network (ELAN) of YOLOv7 [20], which significantly improves the network’s ability to extract local contrast information and detect dim objects.

(3) We integrate a simple, parameter-free attention module (SimAM) [21] into our algorithm, enabling the network to exploit global contextual information effectively.

(4) Instead of the average pooling layer, we employ the Space-to-Depth (SPD) module [22] for downsampling, which retains more feature information, particularly for small objects.

(5) We propose Alpha-EIoU, a new bounding box regression loss function, for more efficient model training.

(6) We create a space object dataset called SSOD (Space Small Object Dataset), which comprises 9822 astronomical images captured with professional astronomical CCD cameras mounted on ground-based telescopes. We then perform comprehensive experiments comparing SOD-YOLO with several representative models on SSOD.

To sum up, our paper primarily elucidates how we design and improve a deep learning-based model for dim and small object detection and apply it to the field of astronomy. The subsequent sections of the paper are structured as follows: Section 2 provides a comprehensive overview of the relevant literature. Section 3 presents a detailed description of the overall network structure and its components. The experimental results and performance analysis are presented in Section 4. In Section 5, we conduct ablation experiments to further evaluate the effectiveness of specific modules. Finally, Section 6 gives a brief conclusion.

2. Related Works

Although research on deep learning algorithms for space object detection is limited, there have been numerous investigations into detecting small objects in images, including infrared and Synthetic Aperture Radar (SAR) images. These tasks share similarities with space object detection and offer valuable insights for our work. Therefore, this section mainly introduces relevant research in these fields.

The most commonly used definition of small objects originates from the widely adopted Microsoft Common Objects in Context (MS-COCO) dataset [23], which classifies small objects as those with an area smaller than 32 × 32 pixels. Small object detection is a crucial task in various domains such as remote sensing, surveillance, and astronomy, and numerous approaches have been proposed to address it. One common approach is based on traditional image processing techniques. These methods typically involve pre-processing steps such as image enhancement, noise reduction, and background suppression, followed by detection algorithms such as thresholding, template matching, or edge detection to identify small objects. However, these techniques often struggle with complex backgrounds and low-contrast objects.
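For reference, the MS-COCO size categories can be written as a small helper. This sketch uses bounding box area as a proxy (COCO’s own evaluation uses the area stored with each annotation); the thresholds 32² and 96² are the standard COCO boundaries, and the function names are hypothetical.

```python
def coco_size_category(width, height):
    """Classify a box by MS-COCO area thresholds: small < 32^2 <= medium < 96^2 <= large."""
    area = width * height
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def small_fraction(boxes):
    """Fraction of (width, height) boxes that count as small objects."""
    return sum(coco_size_category(w, h) == "small" for w, h in boxes) / len(boxes)


print(coco_size_category(5, 4), coco_size_category(40, 40), coco_size_category(100, 100))
# prints: small medium large
```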

In recent years, Convolutional Neural Networks (CNNs) have been widely employed to learn discriminative features from images automatically. As one of the most challenging tasks in computer vision, small object detection has received extensive research attention [24]. Lin et al. proposed the Feature Pyramid Network (FPN) [25] for feature map fusion. This approach assigns larger objects to lower-resolution feature maps and smaller objects to higher-resolution feature maps, thereby enhancing the model’s ability to detect small objects. Yi et al. [26] used both long-term and short-term attention to guide neural networks to focus on specific parts of image features, thereby improving the detection of small objects. To solve the problem of multi-scale SAR ship detection in complex environments, Li et al. [27] proposed a multidimensional domain fusion network that fuses low-level and high-level features to improve detection accuracy. Li et al. [28] constructed a channel attention fusion module to acquire multiscale features and improve the accuracy of small object detection. Wu et al. [29] embedded a tiny U-Net into a larger U-Net backbone, enabling multi-level and multi-scale representation learning of infrared objects. Yao et al. [30] proposed a lightweight network that builds on the standard Fully Convolutional One-Stage Object Detection (FCOS) network and incorporates traditional filtering methods, enhancing the response to small infrared objects while suppressing the background response.

We analyze and summarize the differences between traditional filtering methods, existing deep learning methods, and SOD-YOLO in Table 1. First, although traditional filtering methods are designed specifically for astronomical images and run fast, their reliance on manual settings limits their robustness and accuracy, and they are gradually being replaced by deep learning methods. However, the aforementioned deep learning algorithms often incur significant computational complexity, trading decreased processing speed for modest improvements in accuracy. Furthermore, these methods overlook a crucial aspect: the original data, whether infrared or SAR images, are typically captured at 16-bit depth, whereas these algorithms are all designed for images converted to 8 bits. Additionally, current 16-bit astronomical image processing relies predominantly on the traditional filtering algorithms mentioned in the Introduction. When existing neural networks are employed, it is common practice to linearly transform the 16-bit astronomical images directly, or to convert them into 8-bit images using min-max scaling, before feeding them to the network, thereby disregarding the information lost during conversion. Consequently, we argue that network architectures designed specifically for the original 16-bit data can receive more complete image information and therefore have greater potential for improved performance. From this viewpoint, we conduct a thorough investigation and introduce SOD-YOLO, a novel solution that directly processes 16-bit images for network learning.
It is also worth mentioning that SOD-YOLO is competitive on 8-bit astronomical images, as demonstrated by experiments on the public 8-bit astronomical image dataset Spot the Geosynchronous Orbit Satellites (SpotGEO).

Table 1.

Differences between SOD-YOLO and existing methods.

3. Methodology

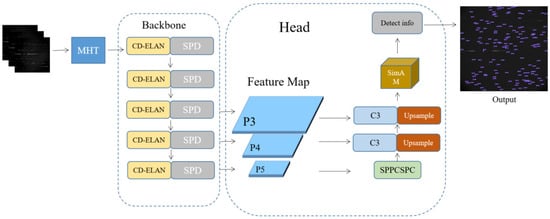

With the aim of detecting space objects while balancing speed and accuracy, we propose SOD-YOLO, an improved version of the widely used one-stage detector YOLOv7. This section describes the baseline YOLOv7 architecture and our modifications to it. The overall process of SOD-YOLO is depicted in Figure 1.

Figure 1.

The network architecture of SOD-YOLO.

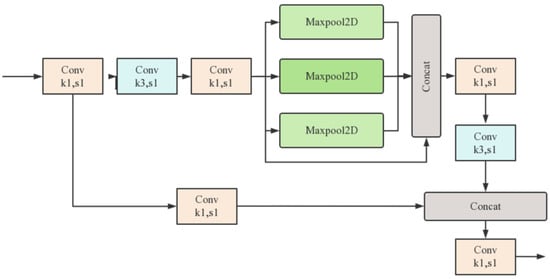

As shown in Figure 1, SOD-YOLO consists of three main parts: a pre-processing module for 16-bit data, a backbone composed of CD-ELAN and SPD modules, and a head that fuses high- and low-level features and includes a SimAM attention module. The C3 module (the CSP bottleneck with three convolutions used in YOLOv5) is built from stacked CBS blocks (Conv + Batch Normalization + SiLU) with identity mappings [31] added. Spatial Pyramid Pooling and Channel-wise Spatial Pyramid Convolution (SPPCSPC) is an improved spatial pyramid pooling module with stronger feature fusion and extraction abilities; its overall process is depicted in Figure 2.

Figure 2.

The architecture of SPPCSPC.

The C3 and SPPCSPC modules are identical to those in previous YOLO versions; the modules that differ from YOLOv7 are detailed in the following sections.

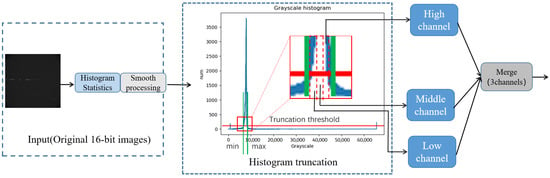

3.1. Multi-Channel Histogram Truncation Module (MHT)

As an effective image processing technique, histogram truncation is commonly used for enhancing image contrast by restricting or eliminating pixel values beyond a specific range. By defining a threshold, the high and low pixel values in the histogram are clipped or truncated, leading to a narrower intensity range. This process accentuates crucial details while mitigating the impact of outliers or extreme values, thereby improving the overall quality and visual appearance of the image.

The original astronomical grayscale data are typically represented in 16 bits, with pixel values ranging from 0 to 65,535. However, as shown in Figure 3, the brightness of the objects is often concentrated within a narrow range, so if the data are normalized directly, dim objects may be drowned out. Histogram truncation is well suited to addressing this issue. Building on histogram truncation, we leverage the autonomous learning capability of neural networks to design a pre-processing module named Multi-Channel Histogram Truncation (MHT), which narrows the intensity range and thereby further enhances processing effectiveness. The processing flow of MHT is shown in Figure 3.

Figure 3.

Processing flow of MHT for 16-bit images. The red line represents the truncation threshold, and the interval between the two green lines corresponds to the normalized range.

Specifically, the steps involved in how MHT enhances the representation of features of space objects in 16-bit astronomical images are as follows:

Firstly, the MHT module performs a histogram analysis on the input image, allowing it to identify the specific range within which the most significant features are concentrated by examining the distribution of grayscale values. It is worth noting that in 16-bit astronomical images, this range is often quite narrow. This range typically encapsulates the crucial information within the image, while extraneous noise and irrelevant details are typically situated outside of this range.

Next, MHT applies a smoothing process to the resulting histogram curve. This process helps reduce any irregularities or noise present in the curve, making it more reliable for subsequent calculations.

Based on the smoothed curve, MHT determines the maximum and minimum values for normalization. A threshold is set on the number of pixels in order to identify the range within which the relevant features are concentrated. This ensures that the normalization process focuses on the most significant information and disregards potentially irrelevant details.

Within the determined truncation range, the grayscale values are further divided into high, medium, and low channels. These channels are normalized separately, allowing for more precise adjustment of their contrast and brightness levels. This division into multiple channels helps to preserve and enhance different aspects of the image, such as fine details, textures, and overall contrast.

Finally, the normalized channels are concatenated along the channel dimension and fed into the subsequent network. By combining the enhanced information from the different channels, MHT provides a comprehensive and refined representation of the space object features in the image.

Overall, the MHT module enhances the representation of space object features in 16-bit astronomical images by identifying the relevant range of grayscale values, smoothing the histogram curve, normalizing the values within the truncation range, and combining the enhanced channels. This process improves the contrast, clarity, and overall visibility of important features in the image, making it easier to distinguish signal from noise.

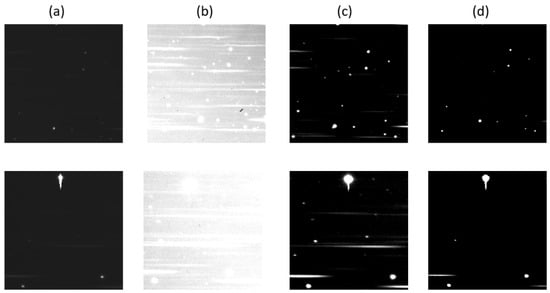

Based on extensive experiments and statistics, we recommend setting the pixel-count threshold to 0.5–1% of the total number of pixels in the original 16-bit image, which enhances space objects while ensuring that they are not truncated away. In this way, the characteristics of dim objects become more obvious and easier for the network to detect. As the MHT results in Figure 4 show, some objects that were originally drowned out in the background, and nearly indistinguishable to the naked eye, become clearly apparent after MHT processing. By learning to select information from the different channels, the network becomes more likely to detect dim space objects.
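The MHT steps above can be sketched in NumPy as follows. This is one illustrative reading, not the authors’ implementation: the moving-average smoothing kernel, the rule that the truncation range spans all histogram bins whose smoothed count reaches the threshold, and the split of that range into three equal-width sub-ranges for the low, middle, and high channels are assumptions. Only the 0.5–1% threshold comes from the text, and the function name `mht` is hypothetical.

```python
import numpy as np


def mht(image, threshold_frac=0.005, bins=65536, smooth_width=5):
    """Hypothetical Multi-Channel Histogram Truncation for a 16-bit image.

    Returns a (3, H, W) array of low/middle/high channels in [0, 1] and the
    truncation range (lo, hi).
    """
    img = np.asarray(image, dtype=np.float64)
    # Step 1: histogram over the full 16-bit range (one bin per gray level by default).
    hist, edges = np.histogram(img, bins=bins, range=(0, 65536))
    # Step 2: smooth the histogram with a moving average (assumed kernel).
    kernel = np.ones(smooth_width) / smooth_width
    smooth = np.convolve(hist, kernel, mode="same")
    # Step 3: keep the bins whose smoothed count reaches 0.5-1% of all pixels.
    above = np.nonzero(smooth >= threshold_frac * img.size)[0]
    if above.size == 0:
        lo, hi = img.min(), img.max()
    else:
        lo, hi = edges[above[0]], edges[above[-1] + 1]
    # Step 4: split [lo, hi] into three equal sub-ranges (assumption), normalize each.
    cuts = np.linspace(lo, hi, 4)
    channels = []
    for a, b in zip(cuts[:-1], cuts[1:]):
        span = max(b - a, 1e-12)
        channels.append(np.clip((img - a) / span, 0.0, 1.0))
    # Step 5: stack the low, middle, and high channels along the channel axis.
    return np.stack(channels, axis=0), (lo, hi)


rng = np.random.default_rng(0)
img = np.clip(rng.normal(1000.0, 20.0, (64, 64)), 0, 65535).astype(np.uint16)
img[32, 32] = 1100  # dim object about 5 sigma above the sky
channels, (lo, hi) = mht(img, threshold_frac=0.005)
```

Because the truncation range hugs the sky distribution, the dim object lies above `hi` and saturates to 1.0 in the high channel while most sky pixels there stay near 0, which is the contrast gain the text describes.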

Figure 4.

Processing effect of MHT (a) original image, (b) low channel, (c) middle channel, and (d) high channel.

3.2. Central Differential-ELAN (CD-ELAN)

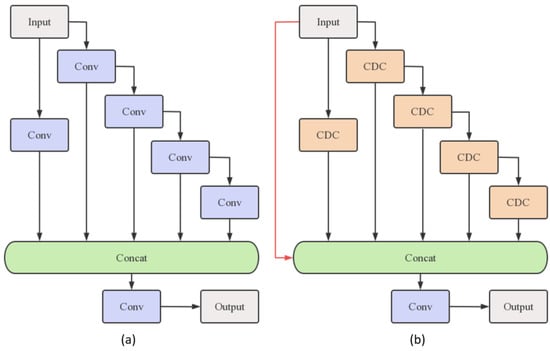

Convolution can effectively extract color, shape, texture, and other information, but such information is scarce in space object detection, which limits the effectiveness of standard convolution. Inspired by the sensitivity of the human visual system to intensity differences and contrast, we reconstruct the ELAN module of YOLOv7 based on central differential convolution to make local contrast information more prominent, and name the new module CD-ELAN. The structures of ELAN and CD-ELAN are shown in Figure 5.

Figure 5.

ELAN module before and after improvement: (a) Structure of ELAN; (b) Structure of CD-ELAN.

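In addition to adding identity mappings, our module replaces the standard convolutions with CDC. CDC introduces additional contrast information by computing the difference between the center point and the other points within the convolution window, and can be described as Equation (1) [19]:

$$y(p_0) = \sum_{p_n \in \mathcal{R}} w(p_n)\,\big(x(p_0 + p_n) - x(p_0)\big) \tag{1}$$

where $x$ is the input feature map, $w$ denotes the convolution weights, $p_0$ is the current location, and $p_n$ enumerates the locations in the local receptive field $\mathcal{R}$.

In our module, CDC is combined with standard convolution to retain part of its general feature extraction capability, and the whole process can be expressed as Equation (2),

$$y(p_0) = \theta \sum_{p_n \in \mathcal{R}} w(p_n)\,\big(x(p_0 + p_n) - x(p_0)\big) + (1 - \theta) \sum_{p_n \in \mathcal{R}} w(p_n)\, x(p_0 + p_n) \tag{2}$$

where the hyperparameter $\theta \in [0, 1]$ determines the relative contribution of CDC and vanilla convolution. The window used to calculate the differences has the same size as the convolution kernel.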
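A single-channel NumPy sketch of the CDC/vanilla mixture in Equation (2) follows, with θ as the mixing hyperparameter. It relies on the identity that θ·Σ w(pₙ)(x(p₀+pₙ) − x(p₀)) + (1 − θ)·Σ w(pₙ)x(p₀+pₙ) equals the vanilla convolution output minus θ·x(p₀)·Σ w(pₙ). The function names, the "valid" border handling, and the default θ = 0.7 are illustrative assumptions; the actual CD-ELAN layers operate on multi-channel tensors inside the network.

```python
import numpy as np


def conv2d_valid(x, w):
    """Plain 2-D cross-correlation (what CNN frameworks call convolution), 'valid' borders."""
    kh, kw = w.shape
    oh, ow = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for i in range(kh):
        for j in range(kw):
            out += w[i, j] * x[i:i + oh, j:j + ow]
    return out


def cdc2d(x, w, theta=0.7):
    """Central difference convolution mixed with vanilla convolution.

    theta * sum w * (x(p0 + pn) - x(p0)) + (1 - theta) * sum w * x(p0 + pn)
    simplifies to vanilla(x)(p0) - theta * x(p0) * sum(w).
    """
    x = np.asarray(x, dtype=np.float64)
    w = np.asarray(w, dtype=np.float64)
    vanilla = conv2d_valid(x, w)
    kh, kw = w.shape
    oh, ow = vanilla.shape
    center = x[kh // 2:kh // 2 + oh, kw // 2:kw // 2 + ow]  # x(p0) for each output
    return vanilla - theta * center * w.sum()


flat = np.full((8, 8), 500.0)  # a featureless patch
kernel = np.arange(9, dtype=np.float64).reshape(3, 3)
print(np.abs(cdc2d(flat, kernel, theta=1.0)).max())  # pure CDC ignores flat regions: 0.0
```

With θ = 1 the layer responds only to local intensity differences, so a uniform sky patch yields zero output, whereas θ = 0 recovers ordinary convolution.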

The backbone network composed of CD-ELAN can extract local contrast information from input images better, exhibiting enhanced sensitivity to pixel grayscale value differences within astronomical images, thereby assisting the network in identifying dim space objects.

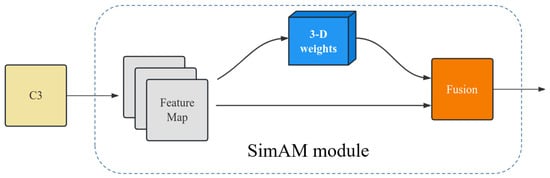

3.3. SimAM Attention Module

The attention mechanism is a crucial concept in neural networks that simulates the selective mechanism of human visual attention. Its core purpose is to filter out noise from cluttered information and focus selectively on the information most relevant to the current task [32]. Popularized by the Transformer architecture proposed by a Google team in 2017 [33], attention has since evolved into many variants and has been widely applied in natural language processing and computer vision. By allocating different weights to different parts of a feature map, attention emphasizes valuable regions while suppressing dispensable ones. This makes it a natural way to highlight small objects that tend to be dominated by background and noise patterns in an image.

Previous studies, such as the Bottleneck Attention Module (BAM) [34] and the Convolutional Block Attention Module (CBAM) [35], combine spatial attention and channel attention in parallel or sequentially. However, the two types of attention in the human brain often work collaboratively. SimAM simulates the spatial inhibition characteristics of brain neurons by constructing an energy function and obtaining the minimum energy of each neuron through an analytical solution. This minimum energy can be expressed as Equation (3),

$$e_t^{*} = \frac{4\left(\hat{\sigma}^2 + \lambda\right)}{\left(t - \hat{\mu}\right)^2 + 2\hat{\sigma}^2 + 2\lambda} \tag{3}$$

where $t$ represents the value of an individual pixel (neuron), $\hat{\mu}$ denotes the mean pixel value of the channel, $\hat{\sigma}^2$ represents the channel variance, and $\lambda$ is a manually set hyperparameter [21].

By evaluating this energy function, the SimAM attention module obtains a three-dimensional weight map for the current feature map and assigns a unique weight to each neuron: a lower energy indicates a neuron that is more distinct from its surroundings, which therefore receives a larger weight. The flowchart of SimAM is shown in Figure 6.
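Because SimAM has a closed-form weighting, it fits in a few lines. The NumPy sketch below follows the formulation of [21]: each neuron’s inverse minimal energy 1/e*ₜ, derived from Equation (3) with the mean and variance taken per channel, is passed through a sigmoid and used to scale the input. The (C, H, W) layout and λ = 1e-4 are assumptions for illustration.

```python
import numpy as np


def simam(x, lam=1e-4):
    """Parameter-free SimAM attention on a (C, H, W) feature map."""
    x = np.asarray(x, dtype=np.float64)
    n = x.shape[1] * x.shape[2] - 1  # number of other neurons in each channel
    d = (x - x.mean(axis=(1, 2), keepdims=True)) ** 2  # (t - mu)^2
    var = d.sum(axis=(1, 2), keepdims=True) / n  # channel variance sigma^2
    inv_energy = d / (4.0 * (var + lam)) + 0.5  # 1 / e_t* from Equation (3)
    return x * (1.0 / (1.0 + np.exp(-inv_energy)))  # sigmoid weighting


fm = np.ones((2, 5, 5))
fm[0, 2, 2] = 10.0  # one salient pixel in channel 0; channel 1 stays constant
weights = simam(fm) / fm
print(weights[0, 2, 2] > weights[0, 0, 0])  # the distinct pixel gets a larger weight: True
```

A constant channel has zero variance, so every neuron in it receives the same weight sigmoid(0.5), while a pixel that stands out from its channel, such as a point-like object, is up-weighted.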

Figure 6.

Illustration of SimAM attention module.

The original head layer in YOLOv7 was composed of stacked ELAN, resulting in a substantial computational burden and limited improvement in the detection capability of small objects. Furthermore, it was not conducive to the integration of attention modules. Therefore, we adopted the same head layer as YOLOv5. The SimAM attention module was inserted after the C3 module. Multiple hierarchical feature maps extracted by the backbone were fused within the C3 module. The SimAM attention module enriches feature representation and refines the extracted feature maps by computing adaptive weights assigned to each pixel on the feature map, thereby complementing the C3 module. This refined information is then used by the subsequent layers for object detection and localization within the YOLOv5-based head layer. The novel head, incorporating the SimAM attention mechanism, exhibits reduced computational complexity and enables better detection of dim and small objects by leveraging global information.

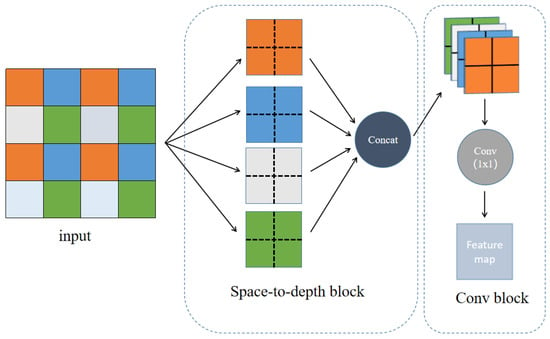

3.4. Space-to-Depth Module (SPD)

Convolutional Neural Networks (CNNs) have achieved significant success in computer vision tasks such as image classification and object detection. However, their performance deteriorates rapidly in more challenging tasks involving low image resolution or small objects. Sunkara et al. [22] argue that the root cause lies in a flawed yet common design of existing CNN architectures: the use of strided convolutions and pooling layers, which leads to the loss of fine-grained information and inefficient learning of feature representations. To solve this problem, they devised the space-to-depth (SPD) module as an alternative to these downsampling layers.

The SPD module consists of a space-to-depth (SPD) layer followed by a non-strided convolution (Conv) layer, as shown in Figure 7, and can be applied to most CNN architectures. The SPD layer performs the downsampling: a feature map $X$ of size $S \times S \times C_1$ is divided into a grid of sub-maps according to the scale factor. With the example scale of 2 shown in Figure 7, this yields four sub-maps $f_{0,0}$, $f_{1,0}$, $f_{0,1}$, and $f_{1,1}$, each of size $\frac{S}{2} \times \frac{S}{2} \times C_1$, that is, one-quarter the spatial size of the original feature map. After the four sub-maps are concatenated along the depth (channel) dimension, the Conv layer compresses the result back to the depth of the original map, completing the downsampling process.

Figure 7.

Illustration of the SPD module.

Because space objects in astronomical images often occupy fewer than 20 pixels, excessive average pooling during downsampling would cause the objects to be overwhelmed by the background. Therefore, we use the SPD module in place of strided convolution and pooling layers to map feature information from the spatial dimensions to the depth dimension. This approach aims to prevent the loss of space object feature information during downsampling.
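The space-to-depth rearrangement itself is a lossless reshuffle of pixels, which the NumPy sketch below makes explicit for a (C, H, W) feature map with scale 2. The following non-strided convolution is reduced here to a 1×1 channel-mixing matrix for brevity; that choice and the function names are assumptions, not the configuration used in SOD-YOLO. The comparison with 2×2 average pooling shows why a single bright pixel survives SPD intact but is diluted by pooling.

```python
import numpy as np


def space_to_depth(x, scale=2):
    """Rearrange (C, H, W) into (C * scale**2, H / scale, W / scale) without discarding pixels."""
    c, h, w = x.shape
    assert h % scale == 0 and w % scale == 0, "H and W must be divisible by scale"
    # Sub-maps f_{i,j} = X[:, i::scale, j::scale], concatenated along the channel axis.
    subs = [x[:, i::scale, j::scale] for i in range(scale) for j in range(scale)]
    return np.concatenate(subs, axis=0)


def spd_conv(x, weight, scale=2):
    """SPD layer followed by a non-strided 1x1 convolution (weight shape: C_out x C*scale**2)."""
    y = space_to_depth(x, scale)
    return np.einsum("oc,chw->ohw", weight, y)


def avg_pool2(x):
    """2x2 average pooling with stride 2, for comparison."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


fmap = np.zeros((1, 4, 4))
fmap[0, 1, 2] = 8.0  # one bright, point-like object pixel
print(space_to_depth(fmap).max(), avg_pool2(fmap).max())  # prints: 8.0 2.0
```

The learned convolution that follows can still combine the four sub-maps, but it starts from every original pixel value rather than from an average that has already diluted the object by a factor of four.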

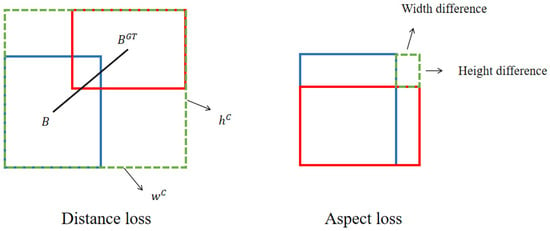

3.5. Alpha-EIoU Loss Function

The choice of loss function plays a critical role in the learning ability of neural networks during the training process. A suitable loss function can help improve the convergence speed and accuracy of the algorithm.

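The Complete-IoU (CIoU) [36] loss function used in YOLOv7 can be expressed as Equations (4)–(6):

$$\mathcal{L}_{CIoU} = 1 - IoU + \frac{\rho^2(\mathbf{b}, \mathbf{b}^{gt})}{c^2} + \beta v \tag{4}$$

$$\beta = \frac{v}{(1 - IoU) + v} \tag{5}$$

$$v = \frac{4}{\pi^2}\left(\arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h}\right)^2 \tag{6}$$

where $\rho(\cdot)$ represents the Euclidean distance between two points, $\mathbf{b}$ and $\mathbf{b}^{gt}$ are the center points of the predicted and ground truth boxes, $c$ is the diagonal length of the smallest box enclosing both, $w$, $h$ and $w^{gt}$, $h^{gt}$ represent the widths and heights of the predicted and ground truth bounding boxes, respectively, and $\beta$ is a positive trade-off parameter.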

In Equation (6), $v$ only reflects the discrepancy in aspect ratio rather than the real relations between $w$ and $w^{gt}$ or $h$ and $h^{gt}$. Namely, all boxes with the property $\{(w = k w^{gt}, h = k h^{gt}) \mid k \in \mathbb{R}^{+}\}$ have $v = 0$, which is inconsistent with reality. In addition, from this equation we have $\frac{\partial v}{\partial w} = -\frac{h}{w}\frac{\partial v}{\partial h}$. Here, $\frac{\partial v}{\partial w}$ and $\frac{\partial v}{\partial h}$ have opposite signs. Thus, at any time, if one of these two variables ($w$ or $h$) is increased, the other will decrease. This is unreasonable, especially when $w < w^{gt}$ and $h < h^{gt}$, or $w > w^{gt}$ and $h > h^{gt}$. Because small object detection is highly sensitive to IoU variations, these deficiencies can lead to slow convergence and low efficiency when training the model on datasets with small objects [24,37]. Therefore, Zhang et al. improved the CIoU loss function and proposed Efficient-IoU (EIoU). Compared with CIoU, EIoU decomposes the aspect-ratio term into separate differences between the predicted and ground truth widths and heights, providing a more accurate representation, which can be expressed as Equation (7):

$$\mathcal{L}_{EIoU} = 1 - IoU + \frac{\rho^2(\mathbf{b}, \mathbf{b}^{gt})}{c^2} + \frac{\rho^2(w, w^{gt})}{C_w^2} + \frac{\rho^2(h, h^{gt})}{C_h^2} \tag{7}$$

In Equation (7), $C_w$ and $C_h$ represent the width and height of the minimum enclosing rectangle of the predicted bounding box and the ground truth bounding box.
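The aspect-ratio deficiency is easy to check numerically. In the plain-Python sketch below (boxes as (cx, cy, w, h); function names are illustrative), a prediction that shares the ground truth’s center and is exactly twice its size gets a zero aspect-ratio penalty v from Equation (6), while the separate width and height terms of EIoU in Equation (7), each normalized by the enclosing box’s width C_w or height C_h, still penalize it.

```python
import math


def ciou_aspect_term(pred, gt):
    """v from Equation (6): squared difference of the boxes' aspect-ratio angles."""
    return 4.0 / math.pi ** 2 * (math.atan(gt[2] / gt[3]) - math.atan(pred[2] / pred[3])) ** 2


def enclosing_wh(pred, gt):
    """Width and height (C_w, C_h) of the smallest box enclosing both (cx, cy, w, h) boxes."""
    left = min(pred[0] - pred[2] / 2, gt[0] - gt[2] / 2)
    right = max(pred[0] + pred[2] / 2, gt[0] + gt[2] / 2)
    top = min(pred[1] - pred[3] / 2, gt[1] - gt[3] / 2)
    bottom = max(pred[1] + pred[3] / 2, gt[1] + gt[3] / 2)
    return right - left, bottom - top


def eiou_wh_terms(pred, gt):
    """EIoU width and height penalties: (w - w_gt)^2 / C_w^2 and (h - h_gt)^2 / C_h^2."""
    cw, ch = enclosing_wh(pred, gt)
    return (pred[2] - gt[2]) ** 2 / cw ** 2, (pred[3] - gt[3]) ** 2 / ch ** 2


gt = (10.0, 10.0, 4.0, 2.0)
pred = (10.0, 10.0, 8.0, 4.0)  # same center and aspect ratio, twice the size
print(ciou_aspect_term(pred, gt), eiou_wh_terms(pred, gt))  # prints: 0.0 (0.25, 0.25)
```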

Additionally, He et al. [38] demonstrated empirically that a bounding box regression loss becomes more robust on datasets with low signal-to-noise ratios (SNRs) when raised to a manually set power $\alpha$. They named this loss function Alpha-IoU, expressed as follows:

$$\mathcal{L}_{\alpha\text{-}IoU} = 1 - IoU^{\alpha} + \mathcal{P}^{\alpha}(B, B^{gt}) \tag{8}$$

The term $\mathcal{P}(B, B^{gt})$ in Equation (8) represents an arbitrary regularization term computed from the predicted box $B$ and the ground truth box $B^{gt}$.

Because astronomical images suffer from severe noise interference and contain small targets, we believe that both of the above improvements to the loss function are highly applicable to space object detection. Therefore, we combine Alpha-IoU and EIoU and propose Alpha-EIoU, a novel loss function for detecting small objects in low-SNR images. The function is expressed as Equation (9).

Alpha-EIoU consists of three components: the Intersection over Union (IoU) loss, the distance loss, and the aspect loss, with a hyperparameter $\alpha$ introduced as the exponent of each term. By combining the advantages of EIoU and Alpha-IoU, this loss enables more precise localization of small objects and better handling of noise interference, which makes it well suited to training models for space object detection. The schematic diagram of Alpha-EIoU is shown in Figure 8.
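The plain-Python sketch below implements one plausible form of Alpha-EIoU consistent with the description above. It is an assumption, not necessarily the authors’ exact Equation (9): the EIoU loss of Equation (7) with the IoU term replaced by IoU^α and each normalized distance, width, and height penalty raised to the power α, i.e. L = 1 − IoU^α + (ρ²(b, b^gt)/c²)^α + (ρ²(w, w^gt)/C_w²)^α + (ρ²(h, h^gt)/C_h²)^α. With α = 1 it reduces to EIoU. Boxes are (cx, cy, w, h); α = 3 and the function names are illustrative choices, not values from the paper.

```python
def box_iou(p, g):
    """IoU of two (cx, cy, w, h) boxes."""
    iw = max(0.0, min(p[0] + p[2] / 2, g[0] + g[2] / 2) - max(p[0] - p[2] / 2, g[0] - g[2] / 2))
    ih = max(0.0, min(p[1] + p[3] / 2, g[1] + g[3] / 2) - max(p[1] - p[3] / 2, g[1] - g[3] / 2))
    inter = iw * ih
    return inter / (p[2] * p[3] + g[2] * g[3] - inter)


def alpha_eiou_loss(p, g, alpha=3.0, eps=1e-9):
    """Assumed Alpha-EIoU: 1 - IoU^a + (center term)^a + (width term)^a + (height term)^a."""
    # Width and height of the smallest enclosing box; c^2 = C_w^2 + C_h^2 is its squared diagonal.
    cw = max(p[0] + p[2] / 2, g[0] + g[2] / 2) - min(p[0] - p[2] / 2, g[0] - g[2] / 2)
    ch = max(p[1] + p[3] / 2, g[1] + g[3] / 2) - min(p[1] - p[3] / 2, g[1] - g[3] / 2)
    center = ((p[0] - g[0]) ** 2 + (p[1] - g[1]) ** 2) / (cw ** 2 + ch ** 2 + eps)
    width = (p[2] - g[2]) ** 2 / (cw ** 2 + eps)
    height = (p[3] - g[3]) ** 2 / (ch ** 2 + eps)
    return 1.0 - box_iou(p, g) ** alpha + center ** alpha + width ** alpha + height ** alpha


gt = (10.0, 10.0, 4.0, 4.0)
near = (11.0, 10.0, 4.0, 4.0)  # shifted by 1 pixel
far = (13.0, 10.0, 4.0, 4.0)   # shifted by 3 pixels
print(alpha_eiou_loss(gt, gt), alpha_eiou_loss(near, gt) < alpha_eiou_loss(far, gt))
```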

Figure 8.

Schematic diagram of Alpha-EIoU.

4. Experiments

4.1. Space Small Object Dataset (SSOD)

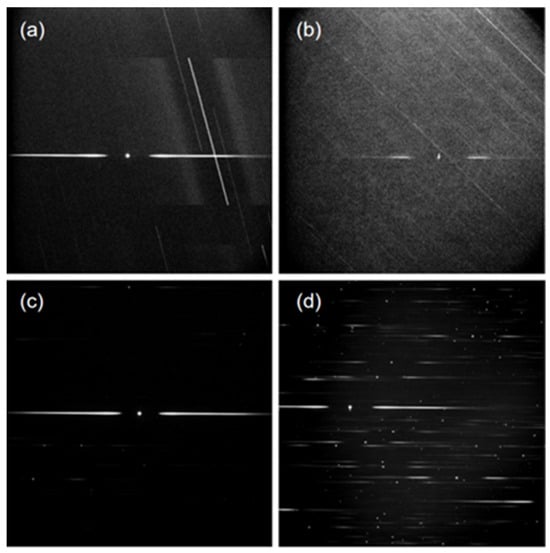

Training a neural network requires an image dataset with ground truth annotations, and the composition, annotation quality, and amount of training data directly determine the model’s performance. Because publicly available datasets of space objects are lacking, we select a large amount of astronomical optical image data obtained from ground-based observations to create the Space Small Object Dataset (SSOD), which consists of 9822 annotated astronomical optical images. Figure 9 shows some example images; all images in the dataset have a uniform size. The dataset covers various scenarios, including noise interference caused by starlight and ground light, trailing of target stars, and overexposure. It contains single stars, multiple stars, and dense star fields, the latter referring specifically to images in which the number of objects exceeds 50.

Figure 9.

Examples of SSOD. (a,b) Single star image with different celestial backgrounds; (c) Multi-star image; (d) Dense star field.

All images are randomly divided into a training set and a test set at a ratio of 8:2. Within the training set, 20% of the images are randomly selected as the validation set, and the split is fine-tuned to ensure that the test set covers various conditions of astronomical images. Basic information about SSOD is shown in Table 2, which also lists the main parameters of the professional astronomical CCD PI1300 used to create the dataset. As an astronomical CCD camera, the PI1300 outperforms standard CCDs for astronomical observation owing to its advanced liquid cooling technology, lower dark current, and lower readout noise. Compared with non-cooled CCDs, the PI1300 maintains a lower operating temperature, which reduces thermal noise and consequently dark current. Additionally, conventional CCDs typically exhibit readout noise of several tens of electrons, whereas the PI1300’s readout noise is only 4.26e−/ADU. These advantages make the PI1300 more sensitive to faint targets and yield images with a higher signal-to-noise ratio, suitable for demanding astronomical research and observation.
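A minimal sketch of the random split described above, using only Python’s standard library. The seed and the rounding rule are assumptions, the function name is hypothetical, and the subsequent manual adjustment of the test set is not modeled.

```python
import random


def split_dataset(items, test_frac=0.2, val_frac=0.2, seed=0):
    """Random 8:2 train/test split, then hold out val_frac of the training part for validation."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_test = round(len(items) * test_frac)
    test, train_full = items[:n_test], items[n_test:]
    n_val = round(len(train_full) * val_frac)
    val, train = train_full[:n_val], train_full[n_val:]
    return train, val, test


train, val, test = split_dataset(range(9822))
print(len(train), len(val), len(test))  # prints: 6286 1572 1964
```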

Table 2.

Details about SSOD.

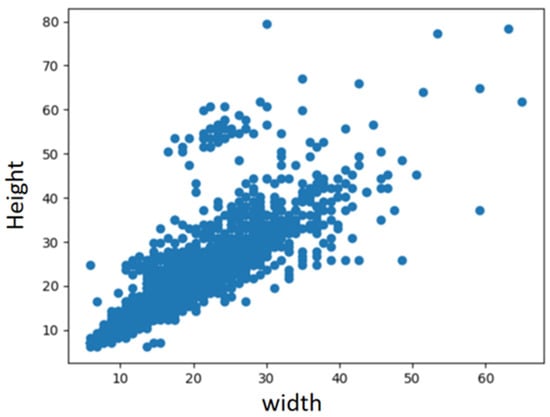

The statistics of object sizes in SSOD are shown in Figure 10. In SSOD, 98.2% of the objects are smaller than 32 × 32 pixels, which meets the MS-COCO definition of small objects [19].
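MS-COCO classifies an object as small when its area is below 32² pixels, medium below 96², and large otherwise. The sketch below applies these buckets to annotated boxes to obtain a small-object fraction like the 98.2% reported for SSOD. It assumes box area (width × height) as a stand-in for COCO's mask area, and the function names and example boxes are illustrative only.

```python
def coco_size_category(width, height):
    """MS-COCO size bucket, approximating object area by box area (pixels)."""
    area = width * height
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def small_object_fraction(box_sizes):
    """Fraction of (width, height) boxes that fall in the COCO 'small' bucket."""
    labels = [coco_size_category(w, h) for w, h in box_sizes]
    return labels.count("small") / len(labels)


boxes = [(6, 6), (12, 9), (31, 33), (40, 40)]
print(small_object_fraction(boxes))  # 0.75
```

Note that the criterion is an area threshold, so a 31 × 33 box (1023 px²) still counts as small even though one side exceeds 32 pixels.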

Figure 10.

Statistics of object sizes in SSOD.

4.2. Experimental Details

All training and testing experiments are conducted on a G448-X2 server running Linux, configured with two 16-core Intel Xeon Gold 6226R CPUs, four 32 GB Samsung memory modules, and eight NVIDIA Quadro RTX A5000 GPUs with 24 GB of VRAM each. The deep learning framework is PyTorch 1.8. The batch size is 4, the learning rate is 0.01, and training runs for 60 epochs. During testing, we set the IoU threshold to 0.7 to assess how accurately each model localizes small objects.

4.3. Evaluation Criteria

In this paper, average precision (AP), giga floating-point operations (GFLOPs), and frames per second (FPS) are used to evaluate the models comprehensively. GFLOPs measures the computational complexity of a model; the higher it is, the more powerful the hardware required. FPS is the number of images processed per second and is used to evaluate running speed. AP is used to evaluate detection ability and is computed with Equations (10)–(12):

$$P = \frac{TP}{TP + FP} \tag{10}$$

$$R = \frac{TP}{TP + FN} \tag{11}$$

$$AP = \int_{0}^{1} P(R)\,\mathrm{d}R \tag{12}$$

where TP and FP denote the numbers of true positives and false positives, respectively, FN denotes the number of false negatives, and P and R denote precision and recall.
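AP is the area under the precision-recall curve, with precision = TP/(TP + FP) and recall = TP/(TP + FN). The sketch below computes it from ranked detections that have already been labelled as true or false positives at a chosen IoU threshold (e.g. 0.7). It assumes all-point interpolation, the convention used by PASCAL VOC 2010 and later; the paper does not state which interpolation rule its evaluation code uses, and the toy inputs are made up.

```python
def average_precision(scores, is_tp, num_gt):
    """AP as the area under the precision-recall curve (all-point interpolation).

    scores: confidence of each detection
    is_tp:  whether each detection matched a ground-truth box
    num_gt: number of ground-truth objects (TP + FN)
    """
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for i in order:
        if is_tp[i]:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)        # R = TP / (TP + FN)
        precisions.append(tp / (tp + fp))  # P = TP / (TP + FP)
    # Precision envelope: make precision non-increasing as recall grows
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    # Sum rectangle areas wherever recall increases
    ap, prev_r = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_r) * p
        prev_r = r
    return ap


print(average_precision([0.9, 0.8, 0.7, 0.6], [True, False, True, True], num_gt=4))
```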

4.4. Comparative Experiments

To evaluate SOD-YOLO, we compare it with other models on SSOD, using X50-CSP (ResNeXt-50 + Cross Stage Partial Network) and R50-CSP (ResNet-50 + Cross Stage Partial Network) as baselines. The results are presented in Table 3.

Table 3.

Comparison of algorithms on SSOD.

As shown in Table 3, SOD-YOLO achieves higher detection accuracy than the other models while keeping a relatively low parameter count. Apart from YOLOv5s, it is also the fastest model, meeting the requirements of practical applications. Compared with X50-CSP, SOD-YOLO improves AP by 19.0% and detection speed by 56.8%; compared with R50-CSP, it improves AP by 18.2% and detection speed by 23.7%; and compared with YOLOv7, it improves AP by 11.2 percentage points (from 73.0% to 84.2%) and detection speed by 42.7%. These results show the advantage of SOD-YOLO over the other algorithms on SSOD.
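Because AP is itself a percentage, an "AP improvement of 11.2%" can be read either as a percentage-point difference or as a relative change. The short sketch below uses the baseline and final AP values from the ablation study (73.0% for YOLOv7 and 84.2% for SOD-YOLO) to show that 11.2 is the percentage-point difference, whereas the relative change is about 15.3%. We assume the FPS gains are relative changes; the function names are illustrative.

```python
def absolute_gain(new, old):
    """Difference in percentage points between two metrics given in percent."""
    return new - old


def relative_gain(new, old):
    """Relative change (new - old) / old, as a fraction of the old value."""
    return (new - old) / old


# AP of the YOLOv7 baseline and of SOD-YOLO on SSOD, in percent
ap_yolov7, ap_sod = 73.0, 84.2
print(round(absolute_gain(ap_sod, ap_yolov7), 1))        # 11.2 (percentage points)
print(round(100 * relative_gain(ap_sod, ap_yolov7), 1))  # 15.3 (percent, relative)
```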

Because space detection tasks place very high demands on localization accuracy, we further investigate the robustness of SOD-YOLO under IoU thresholds other than the high value of 0.7 used in the comparative experiment. We therefore test SOD-YOLO under multiple IoU thresholds; the results are presented in Table 4.

Table 4.

Comparison of SOD-YOLO under different IoU thresholds.

As shown in Table 4, varying the IoU threshold between 0.1 and 0.5 has minimal influence on accuracy. This indicates that SOD-YOLO localizes these objects very accurately, with minimal positional deviation; if localization were poor, lowering the IoU threshold would noticeably increase recall. This result demonstrates the robustness of SOD-YOLO in space object localization. Raising the IoU threshold from 0.7 to 0.8 decreases recall by 6%, indicating that a small portion of the detected objects have IoU values between 0.7 and 0.8. However, even at an IoU threshold of 0.8, both recall and precision remain very high, demonstrating that SOD-YOLO can localize space objects with high precision.
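The threshold sweep in Table 4 depends on how IoU is computed and how predictions are matched to ground truth. The sketch below assumes axis-aligned (x1, y1, x2, y2) boxes and greedy one-to-one matching in descending confidence order, a common convention that is not necessarily the exact rule in the paper's evaluation code. The toy example has one prediction with IoU ≈ 0.91 and one with IoU ≈ 0.77, so recall stays at 1.0 up to a threshold of 0.7 and drops when the threshold reaches 0.8, mirroring the effect discussed above.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def recall_at(preds, gts, thr):
    """Recall when a prediction counts only if its best IoU with a still
    unmatched ground-truth box is >= thr (greedy one-to-one matching;
    preds are assumed to be sorted by descending confidence)."""
    matched = set()
    for p in preds:
        best_iou, best_j = 0.0, None
        for j, g in enumerate(gts):
            if j in matched:
                continue
            v = iou(p, g)
            if v > best_iou:
                best_iou, best_j = v, j
        if best_j is not None and best_iou >= thr:
            matched.add(best_j)
    return len(matched) / len(gts)


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(0, 0, 10, 11), (20, 20, 30, 33)]  # IoUs of 100/110 and 100/130
for thr in (0.5, 0.7, 0.8, 0.95):
    print(thr, recall_at(preds, gts, thr))
```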

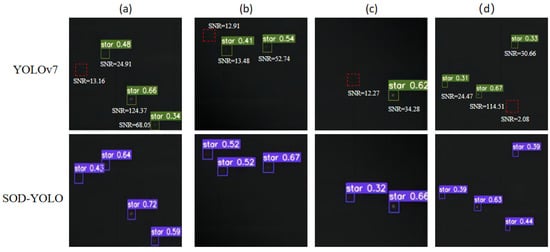

4.5. Visualization Results

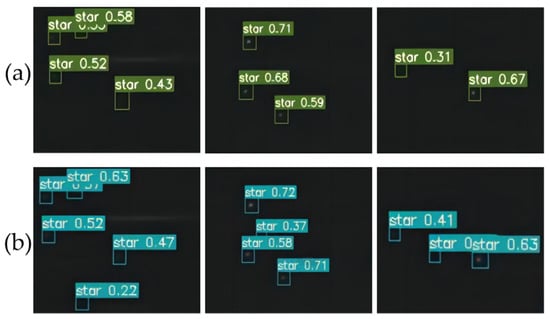

To show the improvements more intuitively, we select a set of astronomical images for a visual comparison between SOD-YOLO and YOLOv7. The results are shown in Figure 11, where red boxes indicate missed objects. We also calculate the SNR of each object using Equation (14) [43] and mark it in Figure 11 to make the comparison quantitative.

Figure 11.

Comparison of detection results between YOLOv7 and SOD-YOLO. (a–d) Detection results of YOLOv7 and SOD-YOLO in different scenarios. Green bounding boxes indicate objects detected by YOLOv7, purple bounding boxes indicate objects detected by SOD-YOLO, and red bounding boxes indicate missed detections.

Equation (14) is the standard CCD signal-to-noise equation:

$$\mathrm{SNR} = \frac{N_{*}}{\sqrt{N_{*} + n_{\mathrm{pix}}\left(N_{S} + N_{D} + N_{R}^{2}\right)}} \tag{14}$$

where $N_{*}$ is the total count of light from the astronomical source within the aperture, $n_{\mathrm{pix}}$ is the number of pixels within the aperture, $N_{S}$ is the celestial background per pixel, $N_{D}$ is the dark current per pixel, and $N_{R}$ is the CCD readout noise. For most CCD systems currently used in astronomical research, the dark current can be neglected [41]. In Figure 11a–d, because the objects are faint and their SNR is extremely low, YOLOv7 misses some of them; the SNRs of the missed objects are 13.16, 12.91, 12.27, and 2.08. In contrast, SOD-YOLO detects all of these objects. Statistical analysis on SSOD shows that, with the confidence threshold set to 0.2, the lowest SNR detectable by YOLOv7 is 13.48 (Figure 11b), whereas the lowest SNR detectable by SOD-YOLO is 2.08 (Figure 11d). These results demonstrate that SOD-YOLO has stronger feature extraction and detection capabilities for weak and small objects than YOLOv7.
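The CCD equation SNR = N* / sqrt(N* + n_pix (N_S + N_D + N_R²)) can be evaluated as in the sketch below. It assumes all quantities are expressed in electrons (values measured in ADU would first be multiplied by the camera gain), and the example numbers are illustrative, not taken from SSOD. Setting the dark term to zero reflects the common simplification mentioned above.

```python
import math


def ccd_snr(n_star, n_pix, n_sky, n_dark, read_noise):
    """Standard CCD equation.

    n_star:     total source counts inside the aperture (electrons)
    n_pix:      number of pixels in the aperture
    n_sky:      sky background per pixel (electrons)
    n_dark:     dark current per pixel (electrons), often negligible
    read_noise: readout noise per pixel (electrons RMS)
    """
    noise = math.sqrt(n_star + n_pix * (n_sky + n_dark + read_noise ** 2))
    return n_star / noise


# Illustrative faint source: 100 e- spread over a 3x3 aperture on a bright sky
print(round(ccd_snr(100, 9, 100, 0, 4), 2))  # 2.96
```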

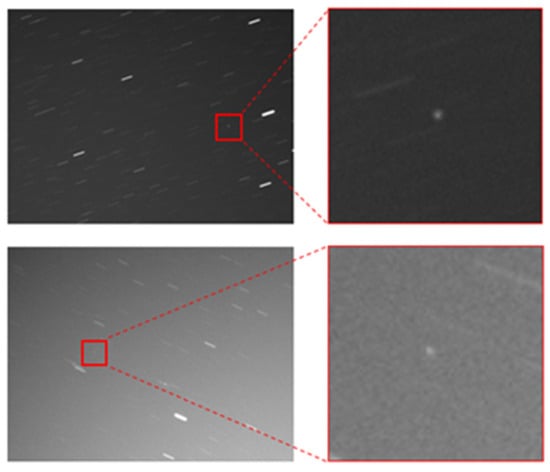

4.6. Test on SpotGEO Dataset

To investigate how well SOD-YOLO generalizes to detecting weak and small space objects in astronomical images, we also conduct a comparative experiment on the public Spot the Geosynchronous Orbit Satellites (SpotGEO) dataset [44]. SpotGEO, developed by the European Space Agency's Advanced Concepts Team, is a dataset for detecting objects in geostationary orbit. It consists of 6400 image sequences, divided into 1280 sequences for training and 5120 for testing. Each sequence contains 5 images with an exposure time of approximately 40 s. During the exposure, background stars and other celestial bodies that are not in geostationary orbit move relative to the Earth and therefore appear as streaks in the images, whereas GEO objects remain nearly stationary and mostly appear as points, as shown in Figure 12.

Figure 12.

Examples of SpotGEO dataset.

The evaluation metric used for the SpotGEO dataset is , where is calculated as Equation (13).

We train SOD-YOLO on the SpotGEO dataset and compare it with the top ten algorithms. The comparison results are shown in Table 5 [42].

Table 5.

Comparison of algorithms in SpotGEO.

The results show that the detection performance of SOD-YOLO on SpotGEO is comparable to that of the SOTA algorithm, with a score only 0.0099 higher, and is significantly better than that of YOLOv7. In addition, SOD-YOLO processes 11.7 images per second on SpotGEO, meeting the requirements of real-time detection.

These comparative experiments on different astronomical optical image datasets show that SOD-YOLO generalizes to the detection of faint space objects in astronomical optical images and can adapt to different observation periods, observation sites, and starry-background characteristics.

5. Discussion

In this section, ablation experiments are conducted on SSOD to evaluate the contribution of each proposed improvement. Throughout this section, “✓” indicates that an improvement is included in the model, and “—” indicates that it is absent.

We first analyze the overall effectiveness of the proposed modules, using YOLOv7 as the baseline network. Table 6 presents the overall quantitative comparison. As Table 6 shows, as the modules are added, AP increases from 73.0% to 84.2%, while the final model requires less computation than the baseline. These results demonstrate the effectiveness of the overall network design.

Table 6.

Overall effectiveness of the modules.

5.1. Influence of CD-ELAN on the Experimental Results

To verify the effectiveness of the proposed CD-ELAN, we conduct ablation experiments on the original network and on the network with CD-ELAN. As Table 7 shows, CD-ELAN improves AP by 6.1% with a negligible increase in computational load.

Table 7.

The ablation experiment results of CD-ELAN.

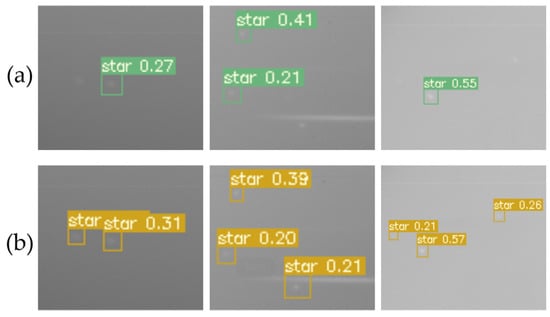

As shown in Figure 13, when objects are relatively weak and dim, the original network misses many of them, whereas the network with CD-ELAN detects more targets with higher precision. This indicates that CD-ELAN clearly improves the detection of weak and dim objects.
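Central difference convolution (CDC), on which CD-ELAN is built, adds to an ordinary convolution a term that subtracts the centre pixel weighted by the kernel sum: y(p0) = Σ w(pn)·x(p0 + pn) − θ·x(p0)·Σ w(pn). The pure-Python sketch below implements that formula for one single-channel "valid" convolution. It is a didactic stand-in, not the CD-ELAN code; θ = 0.7 as the default and the toy inputs are assumptions. With θ = 1, any flat background produces zero response regardless of its brightness, so only local contrast remains, which is the property that makes CDC attractive for faint point sources.

```python
def conv2d_valid(x, w):
    """Plain 2-D cross-correlation (deep-learning 'convolution'), no padding."""
    kh, kw = len(w), len(w[0])
    return [
        [sum(w[i][j] * x[r + i][c + j] for i in range(kh) for j in range(kw))
         for c in range(len(x[0]) - kw + 1)]
        for r in range(len(x) - kh + 1)
    ]


def central_difference_conv(x, w, theta=0.7):
    """CDC: y(p0) = sum_n w(pn) * x(p0 + pn) - theta * x(p0) * sum_n w(pn),
    where p0 is the pixel under the kernel centre (odd-sized kernel assumed)."""
    vanilla = conv2d_valid(x, w)
    w_sum = sum(sum(row) for row in w)
    ch, cw = len(w) // 2, len(w[0]) // 2
    return [
        [vanilla[r][c] - theta * x[r + ch][c + cw] * w_sum
         for c in range(len(vanilla[0]))]
        for r in range(len(vanilla))
    ]


def point_on_flat_background(level, bump):
    """5x5 image at a constant level with one brighter pixel in the middle."""
    img = [[level] * 5 for _ in range(5)]
    img[2][2] = level + bump
    return img


ones = [[1] * 3 for _ in range(3)]
for level in (10, 1000):
    out = central_difference_conv(point_on_flat_background(level, 2), ones, theta=1.0)
    print(level, out[1][1], out[0][0])  # same response for both background levels
```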

Figure 13.

Detection results on SSOD: (a) Original network; (b) CD-ELAN.

5.2. Influence of SimAM on the Experimental Results

We also conduct ablation experiments on SimAM. For a more rigorous evaluation, the ablation includes a comparison with the head layer of YOLOv5. As shown in Table 8, the head layer of YOLOv5 has significantly lower computational complexity than that of YOLOv7 while maintaining the same accuracy. This supports our earlier assertion that stacking deeper network layers does not necessarily improve small object detection. Adding the SimAM module further improves performance notably, with AP increasing from 73.0% to 77.9%.

Table 8.

The ablation experiment results of SimAM.

Figure 14 compares detection results in scenarios with strong interference from ground light or starlight. Despite these challenging conditions, the network equipped with SimAM maintains consistent and stable detection performance, suggesting that SimAM helps filter out noise and accentuate object features even under strong interference.

Figure 14.

Detection results on SSOD: (a) Original network; (b) Head layer of YOLOv5 with SimAM.

The SimAM module is therefore a key factor in maintaining detection stability and performance under adverse conditions. Through its parameter-free adaptive weight computation and feature refinement, the network suppresses the influence of interfering light and enhances the discriminative power of the extracted features. As a result, the network still detects and localizes objects accurately in environments with strong interference.
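SimAM's "adaptive weight computation" has a closed form: each activation x is multiplied by sigmoid((x − μ)² / (4(σ² + λ)) + 0.5), where μ and σ² are the channel mean and variance (σ² computed with n = H·W − 1). The sketch below applies that expression to a single channel in pure Python. λ = 1e-4 follows the SimAM reference code as far as we know and should be treated as an assumption, and the 3 × 3 input is made up. A bright point on a flat background deviates strongly from the channel mean and therefore receives a larger weight than the background, which is how SimAM accentuates point-like objects without learnable parameters.

```python
import math


def simam(channel, e_lambda=1e-4):
    """Parameter-free SimAM weighting for one 2-D channel (list of lists).

    Each value is multiplied by sigmoid(d / (4 * (var + e_lambda)) + 0.5),
    where d = (x - mean)^2 and var = sum(d) / (H*W - 1).
    """
    values = [v for row in channel for v in row]
    mean = sum(values) / len(values)
    n = len(values) - 1
    var = sum((v - mean) ** 2 for v in values) / n
    out = []
    for row in channel:
        new_row = []
        for v in row:
            inv_energy = (v - mean) ** 2 / (4 * (var + e_lambda)) + 0.5
            new_row.append(v / (1 + math.exp(-inv_energy)))  # v * sigmoid(inv_energy)
        out.append(new_row)
    return out


feature = [[1.0, 1.0, 1.0], [1.0, 5.0, 1.0], [1.0, 1.0, 1.0]]
weighted = simam(feature)
print(weighted[1][1] / 5.0, weighted[0][0] / 1.0)  # weight of the point vs. background
```

For a multi-channel feature map, the same function would be applied to each channel independently.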

5.3. Influence of SPD on the Experimental Results

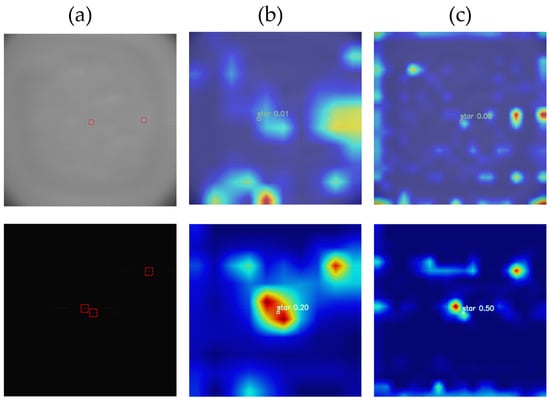

We conduct ablation experiments on the network before and after replacing the average pooling layers and strided convolution layers with the SPD module.

As shown in Table 9, using the SPD module for downsampling in the backbone increases AP by 1.3 percentage points, indicating that it partially addresses the loss of small-object information caused by the original network's heavy use of average pooling for downsampling. To illustrate this, we use Grad-CAM [45] to visualize heatmaps of the backbone outputs before and after the modification. As shown in Figure 15, because of the extensive use of average pooling and strided convolution, the original backbone cannot effectively detect and locate small space objects, which are almost completely drowned out. In contrast, the backbone that uses SPD for downsampling better preserves the features of small objects, making them more prominent in the heatmaps and thereby improving detection accuracy.
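The information-loss argument can be made concrete with a toy feature map containing a single bright pixel. The sketch below contrasts scale-2 space-to-depth with 2 × 2 average pooling: space-to-depth rearranges every pixel into four half-resolution sub-maps (in SPD-Conv these are concatenated along the channel axis and followed by a non-strided convolution, which is omitted here), whereas average pooling dilutes the peak by a factor of four. The sub-map ordering mirrors the slicing used in the SPD-Conv work to the best of our knowledge; the function names and input are illustrative.

```python
def space_to_depth(x):
    """Scale-2 space-to-depth: split an H x W map (H, W even) into four
    (H/2) x (W/2) sub-maps ordered like x[::2, ::2], x[1::2, ::2],
    x[::2, 1::2], x[1::2, 1::2]. Every input pixel is kept."""
    return [
        [row[0::2] for row in x[0::2]],
        [row[0::2] for row in x[1::2]],
        [row[1::2] for row in x[0::2]],
        [row[1::2] for row in x[1::2]],
    ]


def avg_pool_2x2(x):
    """2x2 average pooling with stride 2, for comparison."""
    return [
        [(x[r][c] + x[r][c + 1] + x[r + 1][c] + x[r + 1][c + 1]) / 4
         for c in range(0, len(x[0]), 2)]
        for r in range(0, len(x), 2)
    ]


# 4x4 map with a single bright pixel (a point-like object) at row 1, column 2
fmap = [[0] * 4 for _ in range(4)]
fmap[1][2] = 8

sub_maps = space_to_depth(fmap)
print(max(v for m in sub_maps for row in m for v in row))  # 8: peak preserved
print(max(v for row in avg_pool_2x2(fmap) for v in row))   # 2.0: peak diluted by 4
```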

Table 9.

The ablation experiment results of SPD.

Figure 15.

Comparisons of heatmap outputs from the backbone: (a) Input images, red rectangles indicate manually annotated objects; (b) Outputs from the original backbone; (c) Outputs from backbone with the SPD module.

5.4. Influence of MHT on the Experimental Results

We conduct ablation experiments on the MHT module. As shown in Table 10, using MHT for preprocessing significantly improves detection accuracy, raising AP from 73.0% to 81.7%. This matches our expectations: one of the major challenges in space object detection is the extremely low signal-to-noise ratio, and MHT substantially improves the signal-to-noise ratio of the targets, greatly reducing the difficulty of detection. The processing effect is shown in Figure 4.
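The MHT procedure itself is defined earlier in the paper and is not reproduced here. As a loosely related, hypothetical illustration of why histogram truncation helps with 16-bit data, the sketch below clips an image at several percentile windows and stretches each window linearly onto 0–255, producing one 8-bit channel per window. The percentile windows, the floor-index percentile rule, and the function names are all assumptions, not the authors' MHT parameters.

```python
def truncate_and_stretch(pixels, low_pct, high_pct):
    """Clip 16-bit values to the [low_pct, high_pct] percentile window and
    stretch that window linearly onto 0..255 (hypothetical helper)."""
    s = sorted(pixels)
    lo = s[int(low_pct / 100 * (len(s) - 1))]   # simple floor-index percentile
    hi = s[int(high_pct / 100 * (len(s) - 1))]
    span = max(hi - lo, 1)                       # avoid division by zero
    return [min(255, max(0, round((p - lo) * 255 / span))) for p in pixels]


def multi_window_truncation(pixels, windows=((0.5, 99.5), (1.0, 99.9), (5.0, 99.99))):
    """One 8-bit channel per (hypothetical) percentile window."""
    return [truncate_and_stretch(pixels, lo, hi) for lo, hi in windows]


# Flattened toy "16-bit image": a smooth sky ramp plus one bright pixel
image = list(range(1000, 1100)) + [5000]
channels = multi_window_truncation(image)
print(len(channels), channels[0][0], channels[0][-1])  # 3 0 255
```

Clipping the rare bright outliers lets the 8-bit range be spent on the faint sky and object levels instead of on a few saturated pixels.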

Table 10.

The ablation experiment results of MHT.

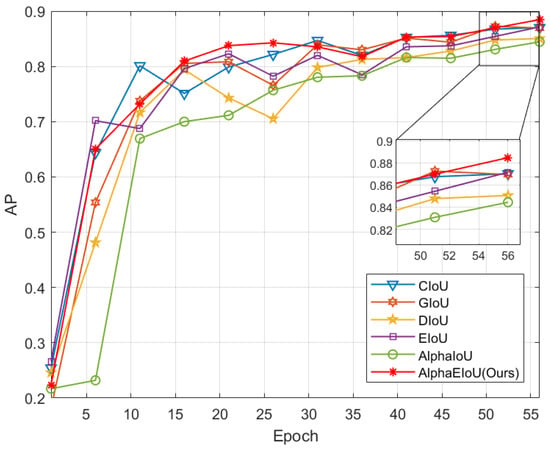

5.5. Comparative Experiment of Loss Function

To further demonstrate the effectiveness of the improved loss function Alpha-EIoU, we compare it with current mainstream loss functions in terms of convergence speed and detection accuracy. YOLOv7 models trained with each loss function are evaluated on SSOD; the detection accuracy results are shown in Table 11.

Table 11.

Influence of different loss functions on detection accuracy.

The convergence process of YOLOv7 with different loss functions is illustrated in Figure 16.

Figure 16.

Influence of different loss functions on convergence rate.

Taking the CIoU loss used in YOLOv7 as the baseline, EIoU is the only one of the mainstream loss functions above that improves the network's detection accuracy for small objects in astronomical images. However, as shown in Figure 16, its convergence is less stable and efficient than that of CIoU. The remaining loss functions all degrade detection performance to varying degrees. Taken together, these results show that, compared with current mainstream loss functions, Alpha-EIoU balances small object detection accuracy and training convergence speed. Although the modified loss function yields only a marginal accuracy gain over the original network, we retain it because it reduces training time without incurring additional cost.
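The exact Alpha-EIoU definition is given in the method section of the paper. For orientation, the sketch below shows one plausible form, assuming the α-IoU power generalization is applied term by term to EIoU: L = 1 − IoU^α + (ρ²(b, b_gt)/c²)^α + (ρ²(w, w_gt)/C_w²)^α + (ρ²(h, h_gt)/C_h²)^α, where c is the diagonal of the smallest enclosing box and C_w, C_h are its width and height. Boxes are (cx, cy, w, h); the default α = 3 follows the setting suggested in the α-IoU work, and both it and the exact form are assumptions. With α = 1 the expression reduces to plain EIoU.

```python
def alpha_eiou_loss(pred, gt, alpha=3.0, eps=1e-9):
    """Power (alpha) form of the EIoU loss for boxes given as (cx, cy, w, h).

    L = 1 - IoU**alpha + (rho2_center / c2)**alpha
          + (rho2_w / Cw**2)**alpha + (rho2_h / Ch**2)**alpha
    """
    px1, py1 = pred[0] - pred[2] / 2, pred[1] - pred[3] / 2
    px2, py2 = pred[0] + pred[2] / 2, pred[1] + pred[3] / 2
    gx1, gy1 = gt[0] - gt[2] / 2, gt[1] - gt[3] / 2
    gx2, gy2 = gt[0] + gt[2] / 2, gt[1] + gt[3] / 2

    inter = (max(0.0, min(px2, gx2) - max(px1, gx1))
             * max(0.0, min(py2, gy2) - max(py1, gy1)))
    union = pred[2] * pred[3] + gt[2] * gt[3] - inter
    iou = inter / (union + eps)

    # Width, height and squared diagonal of the smallest enclosing box
    cw = max(px2, gx2) - min(px1, gx1)
    ch = max(py2, gy2) - min(py1, gy1)
    c2 = cw ** 2 + ch ** 2

    rho2_center = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    rho2_w = (pred[2] - gt[2]) ** 2
    rho2_h = (pred[3] - gt[3]) ** 2

    return (1 - iou ** alpha
            + (rho2_center / (c2 + eps)) ** alpha
            + (rho2_w / (cw ** 2 + eps)) ** alpha
            + (rho2_h / (ch ** 2 + eps)) ** alpha)


gt_box = (5.0, 5.0, 4.0, 4.0)
print(alpha_eiou_loss(gt_box, gt_box))                           # close to 0
print(alpha_eiou_loss((6.0, 5.0, 4.0, 4.0), gt_box, alpha=1.0))  # plain EIoU, 0.4 + 1/41
```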

6. Conclusions

In this paper, we apply the YOLO deep learning detector to space object detection and propose a series of targeted improvements that significantly enhance its ability to detect dim and weak space objects. As a result, our approach achieves efficient, high-precision detection in 16-bit astronomical images.

We use multi-channel histogram truncation to enhance contrast in 16-bit astronomical images and employ the CD-ELAN module, built on central difference convolution, to improve the detection of faint celestial objects. Additionally, we integrate the SimAM module to capture global contextual information and use the SPD module for downsampling to mitigate feature loss for small objects. Finally, we adopt the improved Alpha-EIoU loss function to improve small object detection accuracy and training efficiency. Together, these enhancements significantly improve the algorithm's ability to detect small space objects with low SNR, as demonstrated by experiments on our SSOD dataset and the publicly available SpotGEO dataset.

SOD-YOLO achieves 84.2% AP on SSOD but struggles with objects whose signal-to-noise ratio is below 2. We see several directions for improvement: enhancing the MHT module, incorporating traditional denoising filters, and introducing super-resolution networks could improve accuracy, while lightweight backbone alternatives and fewer network layers could further increase speed. In conclusion, our algorithm shows promise for space object detection in 16-bit astronomical images, and we hope it advances the use of deep learning in this field and ultimately improves space situational awareness.

Author Contributions

Data curation, Y.T.; Writing—original draft, Y.J.; Writing—review & editing, Y.T.; Methodology, Y.J.; Project administration, Y.T.; Software, Y.J.; Formal analysis, Y.J.; Investigation, Y.J.; Funding acquisition, Y.T.; Resources, Y.T.; Conceptualization, Y.J.; Supervision, Y.T.; Validation, C.Y.; Visualization, Y.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Defense Science and Technology Innovation Special Zone Project Foundation of China grant number 19-163-21-TS-001-067-01.

Data Availability Statement

Data available in a publicly accessible repository that does not issue DOIs. Publicly available datasets were analyzed in this study. This data can be found here: [https://github.com/jiangyx123/SSOD-dataset].

Conflicts of Interest

The authors declare no conflict of interest.

References

- NASA Orbital Debris Quarterly News [EB/OL]. (2017-2). Available online: https://directory.eoportal.org/web/eoportal/satellite-missions/content/-/article/orbital-debris (accessed on 24 February 2022).

- Fitzmaurice, J.; Bédard, D.; Lee, C.H.; Seitzer, P. Detection and Correlation of Geosynchronous Objects in NASA’s Wide-Field Infrared Survey Explorer Images. Acta Astronaut. 2021, 183, 176–198.

- European Space Agency. Space Debris [EB/OL]. 2018. Available online: http://m.esa.int/Our_Activities/Operations/Space_Debris/FAQ_Frequently_asked_questions (accessed on 27 May 2023).

- Diprima, F.; Santoni, F.; Piergentili, F.; Fortunato, V.; Abbattista, C.; Amoruso, L. Efficient and Automatic Image Reduction Framework for Space Debris Detection Based on GPU Technology. Acta Astronaut. 2018, 145, 332–341.

- Guo, J.X. Research on the Key Technologies of Dim Space Target Detection Based on Deep Learning. Ph.D. Thesis, University of Chinese Academy of Sciences (Changchun Institute of Optics, Fine Mechanics), Changchun, China, 2023.

- Zhang, D. Dim Space Target Detection Technology Research Based on Ground-Based Telescope. Ph.D. Thesis, University of Chinese Academy of Sciences (Changchun Institute of Optics, Fine Mechanics), Changchun, China, 2020.

- Li, M.Y. Research on Detection Methods for Dim and Small Targets in Complex Space-Based Background. Ph.D. Thesis, University of Chinese Academy of Sciences (Changchun Institute of Optics, Fine Mechanics), Changchun, China, 2021.

- Bertin, E.; Arnouts, S. SExtractor: Software for source extraction. Astron. Astrophys. Suppl. Ser. 1996, 117, 393–404.

- Sun, R.-Y.; Zhan, J.-W.; Zhao, C.-Y.; Zhang, X.-X. Algorithms and applications for detecting faint space debris in GEO. Acta Astron. 2015, 110, 9–17.

- Sun, R.-Y.; Zhao, C.-Y. A new source extraction algorithm for optical space debris observation. Res. Astron. Astrophys. 2013, 13, 604.

- Pradhan, B.; Hickson, P.; Surdej, J. Serendipitous detection and size estimation of space debris using a survey zenith-pointing telescope. Acta Astronaut. 2019, 164, 77–83.

- Liu, D.; Chen, B.; Chin, T.-J.; Rutten, M.G. Topological sweep for multi-target detection of geostationary space objects. IEEE Trans. Signal Process. 2020, 68, 5166–5177.

- Ohsawa, R. Development of a Tracklet Extraction Engine. arXiv 2021, arXiv:2109.09064.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; p. 28.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Zou, Z.; Chen, K.; Shi, Z.; Guo, Y.; Ye, J. Object detection in 20 years: A survey. Proc. IEEE 2023, 111, 257–276.

- Yu, Z.; Zhao, C.; Wang, Z.; Qin, Y.; Su, Z.; Li, X.; Zhou, F.; Zhao, G. Searching central difference convolutional networks for face anti-spoofing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 15–19 June 2020; pp. 5295–5305.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 11863–11874.

- Sunkara, R.; Luo, T. No more strided convolutions or pooling: A new CNN building block for low-resolution images and small objects. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Grenoble, France, 19–23 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 443–459.

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Proceedings, Part V 13; Springer International Publishing: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- Cheng, G.; Yuan, X.; Yao, X.; Yan, K.; Zeng, Q.; Xie, X.; Han, J. Towards large-scale small object detection: Survey and benchmarks. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 13467–13488.

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Yi, K.; Jian, Z.; Chen, S.; Zheng, N. Feature selective small object detection via knowledge-based recurrent attentive neural network. arXiv 2018, arXiv:1803.05263.

- Li, D.; Liang, Q.; Liu, H.; Liu, Q.; Liao, G. A novel multidimensional domain deep learning network for SAR ship detection. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5203213.

- Li, X.; Li, D.; Liu, H.; Wan, J.; Chen, Z.; Liu, Q. A-BFPN: An attention-guided balanced feature pyramid network for SAR ship detection. Remote Sens. 2022, 14, 3829.

- Wu, X.; Hong, D.; Chanussot, J. UIU-Net: U-Net in U-Net for infrared small object detection. IEEE Trans. Image Process. 2022, 32, 364–376.

- Yao, S.; Zhu, Q.; Zhang, T.; Cui, W.; Yan, P. Infrared image small-target detection based on improved FCOS and spatio-temporal features. Electronics 2022, 11, 933.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Liu, J.W.; Liu, J.W.; Luo, X.L. Research progress in attention mechanism in deep learning. Chin. J. Eng. 2021, 43, 1499–1511.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; p. 30.

- Park, J.; Woo, S.; Lee, J.-Y.; Kweon, I.S. A simple and light-weight attention module for convolutional neural networks. Int. J. Comput. Vis. 2020, 128, 783–798.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

- Zhang, Y.-F.; Ren, W.; Zhang, Z.; Jia, Z.; Wang, L.; Tan, T. Focal and efficient IOU loss for accurate bounding box regression. Neurocomputing 2022, 506, 146–157.

- He, J.; Erfani, S.; Ma, X.; Bailey, J.; Chi, Y.; Hua, X.S. alpha-IoU: A Family of Power Intersection over Union Losses for Bounding Box Regression. Adv. Neural Inf. Process. Syst. 2021, 34, 20230–20242.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Yeh, I.H.; Liao, H.Y.M. You only learn one representation: Unified network for multiple tasks. arXiv 2021, arXiv:2105.04206.

- Zeng, K.H.; Peng, Q.Y. Notes on High Precision Aperture Photometry of Stars. Astron. Res. Technol. 2010, 7, 124–131.

- Chen, B.; Liu, D.; Chin, T.J.; Rutten, M.; Derksen, D.; Martens, M.; von Looz, M.; Lecuyer, G.; Izzo, D. Spot the GEO Satellites: From Dataset to Kelvins SpotGEO Challenge. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Montreal, QC, Canada, 10–17 October 2021; pp. 2086–2094.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-cam: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017.

- Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).