Abstract

Augmented reality (AR) offers an effective way to create and integrate additional information into analogue leaflets, flyers and posters, whether cultural or otherwise, which by their nature can hold only limited information. Enriching the information of an analogue leaflet with additional descriptive information (text), multimedia (image, video, sound), 3-D models, etc., gives the end recipient/user a unique experience of approaching and understanding, for example, a music event. Digi-Orch is a research project that aims to create smart printed communication materials (e.g., posters, concert programme booklets) and an accompanying AR application to provide this enhanced audience experience to classical music lovers. The paper presents the ways in which AR can extend and enrich an analogue leaflet/flyer/poster, the features of the developed AR application and how to use it, as well as the components, architecture and functions of the system. It also presents the different versions of the AR application developed within the project, from the laboratory versions, through the pilot versions tested in real conditions (music events), to the final prototype and the educational version of the AR application.

1. Introduction

Augmented reality (AR) refers to the technology that allows digital virtual elements to be added to a real environment, thus providing an experience that combines the real and virtual worlds [1]. Various industries, such as retail, healthcare, real estate, construction, entertainment, academia, tourism, the cultural industry, hospitality, aeronautics and the military, increasingly recognize the need to research, develop and invest in augmented reality in order to increase productivity, and these sectors have already produced excellent AR applications. Mobile devices, both hardware and software, have enabled many sectors to change the way people interact and engage with games, sports, tours and live performances (e.g., a play, a concert), among other activities [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20,21,22].

Augmented reality technology was introduced with the first presentation of the head-mounted display (HMD) in 1968, while researchers started to develop AR applications after 1999, with the introduction of ARToolKit by the HIT Lab [23,24]. In 2005, a mobile phone AR application was presented that used “markers” placed on paper to display 3-D objects on the phone’s screen [25]. Reilly et al., 2006, used RFID (Radio Frequency Identification) devices to connect locations on a map with digital information [26]. In 2009, Morrison et al. presented an innovative markerless AR application for mobile phones, working outdoors, that used printed maps and the user’s coordinates, acquired by the phone’s GPS receiver, to retrieve from a database and display additional information (e.g., images) captured in the field by the user or by other users of the application [27,28].

In 2010, Adithya et al. developed an innovative AR application that obtained a digital 3-D terrain representation and relevant information by identifying the respective locations on an analogue 2-D military map [29]. Billinghurst and Dünser introduced an AR application in schools in 2012 [30], while Billinghurst et al., in 2001, presented an application that overlays virtual 3-D content on book pages and allows users to move seamlessly into a fully immersive virtual reality (VR) environment [31]. Dünser et al., 2012, presented a framework for creating educational AR books that overlay virtual content on real book pages. The framework supports certain types of user interaction, model and texture animations, and an enhanced marker design suitable for educational books [32]. These systems use a web camera or an AR handheld device, markers and a computer.

Currently, AR software and hardware (such as AR smart glasses, tablets and smartphones) allow the creation of dedicated, innovative and realistic AR applications. These applications use the device’s camera, GNSS receiver, mobile network connection and computing capabilities, while receiving, from the computers that create and store it, the content that is projected onto the real world [33,34,35].

A traditional analogue brochure (e.g., with cultural content: the programme of a musical event, a theatre performance, etc.) can accommodate only a limited amount of information (usually a few pages) if it is to remain usable. If this limit is exceeded, the recipient may be unable to assimilate the information because of its volume. Moreover, once printed, an analogue brochure cannot update its content, so updating, upgrading and/or correcting it is impossible.

AR can also be applied to analogue brochures, converting them into “smart” digital brochures through dedicated applications for smartphones and tablets. This allows an analogue brochure to exceed the limited amount of information it can physically accommodate: it is no longer restricted to a static presentation of content but can be supplemented with text, images, video, audio, etc. This additional information can be digitally linked to specific graphic parts of the analogue brochure, e.g., existing images and/or text, without adding QR codes, barcodes, etc. (which would not be consistent with the project’s visual identity). In this way, graphic parts that can be reliably tracked by the camera of a mobile device are designated as “markers”. The information is displayed when the user points the mobile device’s camera at these parts of the analogue brochure, helping the recipient/user better understand the content of the event/action through the new digital information. Ways in which AR can extend and enrich an analogue brochure are:

- Enriched content presentation: Using AR technology, the analogue brochure and/or poster can present enriched content, such as audio clips, videos, live performances of artists and interviews with artists about a specific music event. This can deepen the audience’s engagement with the music and help them experience it more fully.

- Interactive experience: AR allows the audience to interact with the analogue brochure and/or poster via a smartphone or tablet to access additional information, change the presentation of the content or participate in activities.

- Continuous update: Through AR technology, the content of the analogue brochure and/or poster can be updated and enriched in real time. This allows the audience to access new data, news and information related to an event or the featured artists.

1.1. Expected Outcomes

In the framework of the Digi-Orch research project, an AR application was developed for the musical events and activities of the State Conservatory of Thessaloniki (SCT), ensuring effective and integrated presentation of information, interactivity and compatibility with various mobile devices and platforms. The SCT’s analogue brochures are mainly programmes and posters of music events and activities. In general, the application:

- Clearly presents the content of each event via AR.

- Supports content management through a corresponding system (content management system).

- Provides user-friendly interfaces.

The content management system:

- Organizes the content based on the analogue brochure created each time by the SCT for one or more of its activities/events.

- Links each analogue brochure to its corresponding content and, at the same time, defines the content functions. Each function is linked to the content necessary for viewing it.

- Feeds the AR application with the appropriate function documentation for each analogue brochure and/or poster of a music event/action and, at the same time, guides the application through the access paths of the content.
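The organization described above (brochures linked to functions, and functions linked to the content they need) can be illustrated with a minimal in-memory model. This is a sketch under assumptions: the class names `Content`, `Function` and `Brochure`, their fields, the helper `content_for_marker` and the marker name `cellist_photo` are hypothetical and do not come from the Digi-Orch implementation, which stores these entities in a database.

```python
from dataclasses import dataclass, field


@dataclass
class Content:
    """One piece of content stored in the CMS (hypothetical model)."""
    content_id: str
    kind: str  # e.g. "text", "video", "audio", "image", "model3d"
    uri: str


@dataclass
class Function:
    """A function shown in the app, triggered by one marker of the brochure."""
    function_id: str
    marker: str  # graphic element of the brochure that triggers the function
    content_ids: list[str] = field(default_factory=list)


@dataclass
class Brochure:
    """An analogue brochure or poster and the functions attached to it."""
    brochure_id: str
    event: str
    functions: list[Function] = field(default_factory=list)


def content_for_marker(brochure: Brochure, marker: str,
                       library: dict[str, Content]) -> list[Content]:
    """Collect the content linked to every function triggered by `marker`."""
    result = []
    for func in brochure.functions:
        if func.marker == marker:
            result.extend(library[cid] for cid in func.content_ids)
    return result


# Example data (hypothetical identifiers and file paths).
library = {
    "c1": Content("c1", "video", "media/interview.mp4"),
    "c2": Content("c2", "text", "media/biography.txt"),
}
brochure = Brochure("b1", "Musical Kaleidoscope",
                    [Function("f1", "cellist_photo", ["c1", "c2"])])
print([c.kind for c in content_for_marker(brochure, "cellist_photo", library)])
```

Keeping functions as the link between markers and content means the same content item can be reused by several brochures without duplication.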

1.2. Innovation

Elements of innovation of the Digi-Orch are:

- Use of feature matching on existing graphic elements as markers, rather than barcodes.

- A “neutral” content management system (CMS) as a major infrastructure, in which all future applications are implemented simply by introducing the new data.

- “Smart” implementation of the data flows, supporting large data transfers to low-end devices (smartphones) within limited time frames.

- “Intuitive” user interface able to support different applications.
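The first point, feature matching on existing graphics instead of barcodes, can be illustrated with the classic scheme for binary feature descriptors (such as those produced by ORB): brute-force nearest-neighbour matching by Hamming distance, filtered with Lowe's ratio test. This is a simplified, self-contained sketch and not the internal algorithm of the AR framework used in the project; descriptors are modelled as small Python integers, and the `ratio=0.75` and `min_matches=3` thresholds are illustrative assumptions.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(query: list[int], reference: list[int],
                      ratio: float = 0.75) -> list[tuple[int, int]]:
    """Match each query descriptor to its nearest reference descriptor,
    keeping only matches that pass Lowe's ratio test."""
    matches = []
    if len(reference) < 2:
        return matches  # the ratio test needs at least two candidates
    for qi, q in enumerate(query):
        # Rank reference descriptors by Hamming distance to this descriptor.
        dists = sorted((hamming(q, r), ri) for ri, r in enumerate(reference))
        (d1, best), (d2, _) = dists[0], dists[1]
        # Accept only if the best match is clearly better than the runner-up.
        if d1 < ratio * d2:
            matches.append((qi, best))
    return matches


def is_marker_visible(query: list[int], reference: list[int],
                      min_matches: int = 3) -> bool:
    """Treat the marker as recognized once enough good matches are found."""
    return len(match_descriptors(query, reference)) >= min_matches


# Reference descriptors of a marker and noisy copies seen by the camera.
reference = [0b11110000, 0b00001111, 0b10101010, 0b01010101]
query = [0b11110001, 0b00001110, 0b10101011]
print(match_descriptors(query, reference))  # [(0, 0), (1, 1), (2, 2)]
```

The ratio test discards ambiguous descriptors that are equally close to several reference features, which is what makes ordinary printed images and text usable as markers without adding codes to the design.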

1.3. Methodological Steps

The methodology comprises the following steps:

- Personalized management and recording of user access and actions.

- Access to a central database, which is the index of documentation for each version of the application (different prints, different events, etc.).

- Interface with the ability to upload content, but also the ability to connect with external content: Uploading audio, video and 3-D models and mapping them to markers of the analogue brochure, importing hyperlinks and mapping them to objects of the analogue brochure, interfacing with third-party web resources, and finally, tools for mapping different entities to each other (e.g., brochures to events, brochures to functions, functions to content, etc.).

In terms of content presentation, the developed AR application supports:

- Multimedia viewing (text, video, audio and image);

- Grouping of these media to achieve encapsulation of the information;

- Viewing of 3-D models;

- The possibility of viewing the location of the event;

- Virtual reality (VR) tour viewing.

Specifically, the methodology comprises the following:

- System architecture;

- Functional modules and infrastructure;

- AR application user workflows.

2. System Architecture

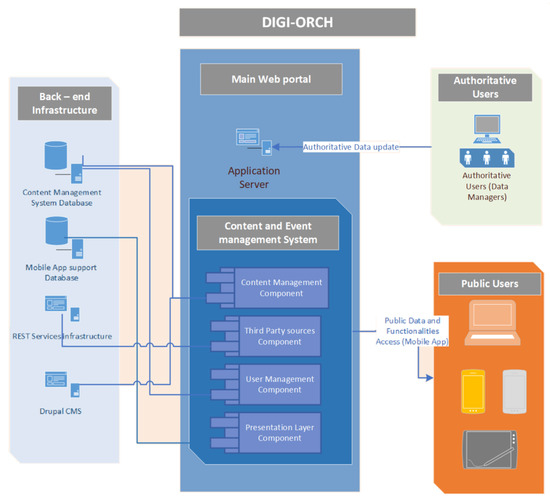

Figure 1 shows the architecture of the Digi-Orch system. The content management system is accessible to the administrators and to the users who organize and manage the content. The AR application also accesses the CMS to update its content and functions.

Figure 1.

System architecture.

According to the system architecture (Figure 1), the main parts of the front-end infrastructure of the content management portal relate to the:

- Content management tools.

- Tools to access third-party content and data sources.

- User management tools.

- Tools to support the viewing application.

Information support infrastructures were created to implement the appropriate tools/functions. Specifically, the following were designed and developed: a database supporting the content management system; a tool for generating the content catalogue of the viewing interface (mobile app); a REST API infrastructure for easy access to the content management database; and infrastructures for accessing the content itself in order to load it into the mobile app.
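To illustrate how a REST API can give the mobile app read access to the CMS data, the sketch below maps resource paths to handler functions and returns JSON. The routes (`/brochures`, `/brochures/{id}/catalogue`), the in-memory `CATALOGUES` store and the chosen status codes are hypothetical; the actual services were built with the server-side technologies described in this paper, and a real deployment would sit behind a web server.

```python
import json
import re

# Hypothetical in-memory data standing in for the CMS database.
CATALOGUES = {
    "b1": {"event": "Musical Kaleidoscope", "markers": ["cover", "cellist_photo"]},
}

# Route table: compiled path pattern -> handler function.
ROUTES = []


def route(pattern):
    """Decorator that registers a handler for a path pattern."""
    def register(handler):
        ROUTES.append((re.compile(pattern), handler))
        return handler
    return register


@route(r"^/brochures$")
def list_brochures():
    return 200, sorted(CATALOGUES)


@route(r"^/brochures/(?P<brochure_id>[\w-]+)/catalogue$")
def get_catalogue(brochure_id):
    if brochure_id not in CATALOGUES:
        return 404, {"error": "unknown brochure"}
    return 200, CATALOGUES[brochure_id]


def dispatch(method: str, path: str) -> tuple[int, str]:
    """Resolve a read-only request to a handler and serialize the result."""
    if method != "GET":
        return 405, json.dumps({"error": "method not allowed"})
    for pattern, handler in ROUTES:
        m = pattern.match(path)
        if m:
            status, body = handler(**m.groupdict())
            return status, json.dumps(body)
    return 404, json.dumps({"error": "not found"})
```

Exposing the data through a small set of resource URLs keeps the mobile app independent of how the CMS database is organized internally.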

3. Functional Modules and Infrastructure

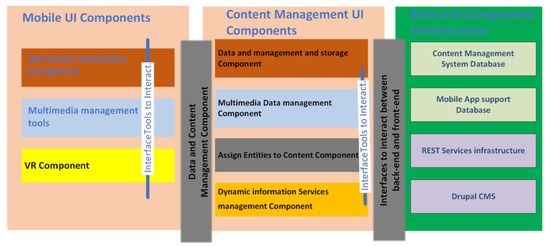

Based on the architecture, specific functional modules have been developed (Figure 2).

Figure 2.

Functional modules and infrastructure.

The first functional module focuses on the infrastructure supporting the content management system. To implement the front-end content management interface in line with the functionality and security requirements, the following back-end components were designed and implemented:

- Content Management System Database: Manages the content that will be uploaded and/or linked, while allowing the system to archive the content and maintain the necessary metadata.

- Mobile App Support Database: Manages the entities related to planning the promotion of content per music event, brochure, function, etc., and supports the workflows for creating both the content and the functions of the app.

- REST API and microservices support infrastructure (REST Services Infrastructure): Infrastructure for the provision of services to the front end in order to easily manage access to the DBs and content. At the same time, it is possible to access the file system infrastructure through the services for uploading content to the mobile application.

- Content Management System (CMS): Provides an initial content management interface to support the web application, so that users can log in by going through the necessary user authentication procedures and then proceed to the tasks they need to implement.
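The CMS requires users to authenticate before working on their tasks, and the methodology calls for recording user access and actions. A minimal sketch of both follows, assuming salted PBKDF2 password hashing from Python's standard library and an in-memory log; the iteration count, the `users` and `access_log` structures and the function names are illustrative assumptions, not the system's actual implementation.

```python
import hashlib
import hmac
import os
from datetime import datetime, timezone

ITERATIONS = 100_000  # illustrative PBKDF2 work factor

users: dict[str, tuple[bytes, bytes]] = {}   # username -> (salt, hash)
access_log: list[tuple[str, str, str]] = []  # (timestamp, username, action)


def _hash(password: str, salt: bytes) -> bytes:
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


def register(username: str, password: str) -> None:
    """Store a per-user random salt and the derived password hash."""
    salt = os.urandom(16)
    users[username] = (salt, _hash(password, salt))


def log_action(username: str, action: str) -> None:
    """Record who did what, with a UTC timestamp."""
    access_log.append((datetime.now(timezone.utc).isoformat(), username, action))


def login(username: str, password: str) -> bool:
    """Verify credentials with a constant-time comparison and log the attempt."""
    if username not in users:
        log_action(username, "login_failed")
        return False
    salt, stored = users[username]
    ok = hmac.compare_digest(stored, _hash(password, salt))
    log_action(username, "login_ok" if ok else "login_failed")
    return ok
```

Logging failed as well as successful attempts supports the per-user recording of access and actions mentioned in the methodology.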

The second functional module deals with the front-end content management interfaces. To support the workflows required to prepare the content and organize it so that it is available in the mobile application, additional interfaces were implemented, including content management and organization forms that serve the requirements of the mobile app. In this context, the following component interfaces were designed and implemented:

- Data Management and Content Storage Component: an interface that enables the user, in a user-friendly environment, to upload, categorize and organize text content, 3-D models and other data, so that they can be accessed by the other interfaces of the system.

- Multimedia Data Management Component: an interface that enables the user, in a user-friendly environment, to upload, categorize and organize multimedia data content so that it can be accessed by the other interfaces of the system.

- Interface for organizing and linking the mobile application’s operating entities to content (Assign Entities to Content Component): an interface that enables the user, in a user-friendly environment, to manage and organize the content available from the other interfaces so that it is linked to events, printed materials, etc., and to feed the mobile application so that it provides the display material dynamically.

- Dynamic Information Services Management Component: an interface to connect with third-party sources and enrich the content with additional information that is available from the web.

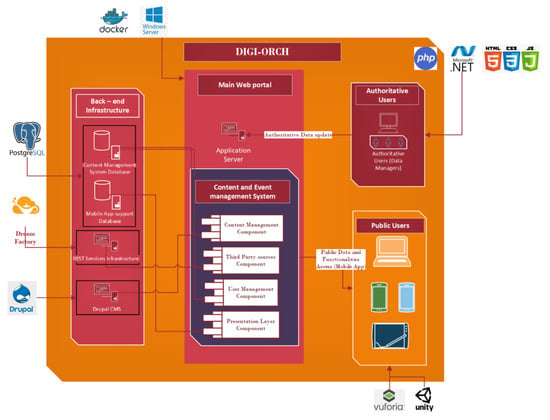

For these interfaces, forms and management tools were developed in PHP and/or ASP.NET for the server-side functionality and in HTML5 and JavaScript for the client-side functionality. The first and second functional modules and the technologies used per component are schematically presented in Figure 3.

Figure 3.

System implementation technologies.

The third functional module is a mobile application for promoting analogue printed material with AR functions and other electronic services. The mobile application consists of content organized so that it is delivered dynamically to the end user. It is updated by the content management system with the material made available per analogue brochure and event/action, which is then loaded for viewing by the end user. In this context, the following components and interfaces for viewing and managing content within the application were designed and implemented:

- AR content management interface and dynamic projection through the camera of the mobile device (AR-Camera Interaction Component): dynamic projection of 3-D objects in space in the AR format, with interaction through the camera and shapes in analogue brochures and/or posters.

- Multimedia and non-content viewing interface (Multimedia Management Tools): the mobile application provides tools for viewing and integrating multimedia data, text material, photos and dynamic content from third-party sources.

- Virtual Reality Content Management Interface (VR Component): manages virtual tour content as well as video and 360° virtual tour content.

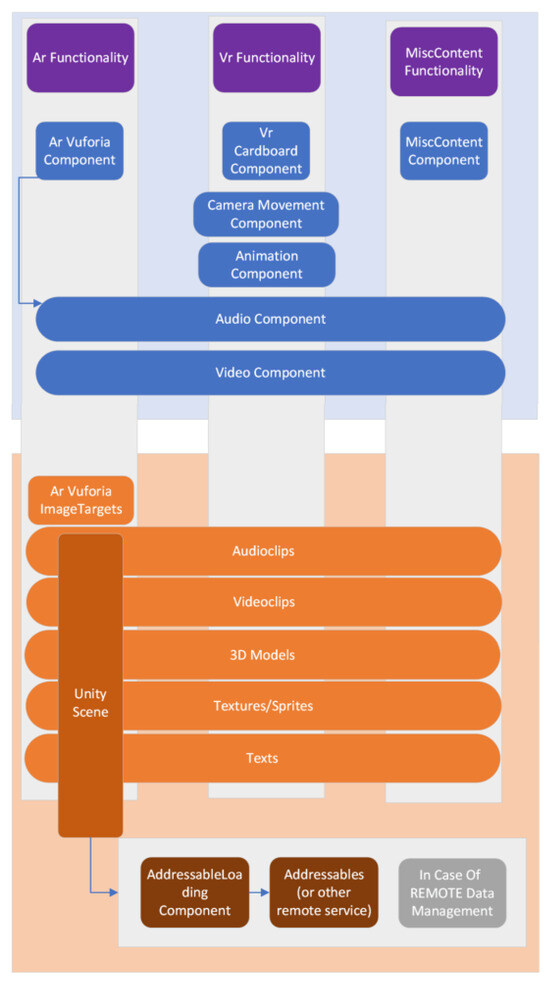

Besides these, the main module of the system is the in-application AR module, which is responsible for image recognition, matching, and the “loading” and presentation of the multimedia material (Figure 4). Using image recognition technology, the system recognizes specific objects/markers (existing images and text) of the analogue brochure and/or poster, each of which corresponds to specific content.

Figure 4.

Finding features suitable for the AR technology application.
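Once the AR module recognizes a marker, its linked content has to be routed to the component that can present it: 3-D objects to the AR-Camera Interaction Component, text and media to the Multimedia Management Tools, and virtual tours to the VR Component. The sketch below shows one way to do that routing, assuming recognition reports a target name and a confidence score; the `MIN_CONFIDENCE` threshold, the content-type strings and the marker names are hypothetical.

```python
from enum import Enum


class Viewer(Enum):
    AR_CAMERA = "AR-Camera Interaction Component"
    MULTIMEDIA = "Multimedia Management Tools"
    VR = "VR Component"


# Hypothetical mapping from content type to the component that presents it.
VIEWER_FOR_TYPE = {
    "model3d": Viewer.AR_CAMERA,
    "text": Viewer.MULTIMEDIA,
    "image": Viewer.MULTIMEDIA,
    "audio": Viewer.MULTIMEDIA,
    "video": Viewer.MULTIMEDIA,
    "vr_tour": Viewer.VR,
}

MIN_CONFIDENCE = 0.6  # illustrative recognition threshold


def route_recognition(target: str, confidence: float,
                      marker_content: dict[str, list[str]]) -> list[tuple[str, Viewer]]:
    """Return (content type, viewer) pairs for a recognized marker, or an
    empty list if recognition is too uncertain or the marker is unknown."""
    if confidence < MIN_CONFIDENCE:
        return []
    return [(kind, VIEWER_FOR_TYPE[kind]) for kind in marker_content.get(target, [])]


# Hypothetical markers: a text marker showing a 3-D cello with its sound,
# and a venue photo opening a 360-degree tour.
marker_content = {"cello_text": ["model3d", "audio"], "venue_photo": ["vr_tour"]}
```

Ignoring low-confidence recognitions avoids flashing content on the screen while the camera is still settling on the page.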

To select the optimal AR solution, several augmented reality frameworks with cross-platform functionality (Android and iOS), supported by the Unity development environment, were tested. The AR framework selected after the evaluation was Vuforia. Figure 5 schematically shows the structure of the programming tools with which the mobile application, and consequently its functionality, were implemented.

Figure 5.

Functional modules and architectural design of the mobile application.

4. AR Application User Workflows

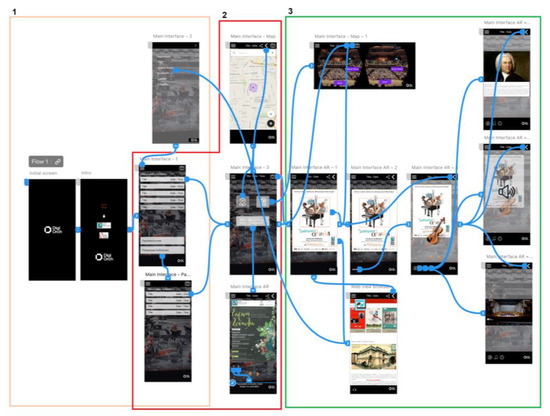

The main workflows relate to the three central modules of the software (Figure 6):

Figure 6.

User workflow diagram.

- The section providing the general information of the application and the event/activity organizer (Figure 6, area 1: square with beige color).

- Information section on current music events, event history and possibly other printed materials (selection of a specific music event, camera function for use directly in a smart brochure, information on the geographical location of the event and index of smart printed material) (Figure 6, area 2: square with red color).

- Event-specific/action-specific information management module and “smart” brochures (Figure 6, area 3: square with green color).

- Function to display external links such as operator websites and information links for specific content.

- Functions of AR objects and the enrichment of these with video, audio, text and other information.

- Multimedia viewing functions.
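The user workflow of Figure 6 can be viewed as navigation between screens. A minimal sketch as a transition table follows, assuming screen and action names that loosely follow the areas listed above (general information, event information, event-specific content); all names are hypothetical and are not the app's actual screen identifiers.

```python
# Allowed transitions: (current screen, user action) -> next screen.
TRANSITIONS = {
    ("home", "about_organizer"): "organizer_info",   # area 1: general information
    ("organizer_info", "back"): "home",
    ("home", "open_events"): "event_list",           # area 2: events
    ("event_list", "select_event"): "event",
    ("event_list", "back"): "home",
    ("event", "show_location"): "map",
    ("event", "open_camera"): "ar_camera",           # area 3: smart brochure
    ("event", "open_link"): "external_link",
    ("event", "back"): "event_list",
    ("ar_camera", "marker_found"): "content_viewer",
    ("ar_camera", "back"): "event",
    ("content_viewer", "back"): "ar_camera",
    ("map", "back"): "event",
    ("external_link", "back"): "event",
}


def navigate(start: str, actions: list[str]) -> list[str]:
    """Replay a sequence of user actions and return the visited screens.
    Raises ValueError if an action is not allowed on the current screen."""
    path = [start]
    for action in actions:
        key = (path[-1], action)
        if key not in TRANSITIONS:
            raise ValueError(f"action {action!r} not allowed on {path[-1]!r}")
        path.append(TRANSITIONS[key])
    return path
```

An explicit transition table makes it easy to check that every screen has a way back and that the camera can only be opened once an event has been selected.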

5. The AR App Versions for Music Events

Initially, demo laboratory versions (Figure 7) of the application were created on previous analogue SCT music events’ brochures and posters, which were evaluated internally by members of the Digi-Orch research project development team.

Figure 7.

Access to the camera of the handheld device and the AR functions. In the left image, a marker (text) is recognized and a 3-D cello is displayed. In the next image, a marker (image) is recognized on an analogue poster, and in the last two images, a marker (text) is recognized and additional informative text is displayed.

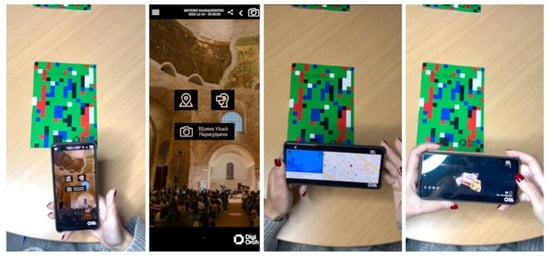

Resolving the issues that emerged in the early stages of development led to the version of the AR application that was evaluated in real-life conditions on 10 December 2022, at a music event held for this purpose at the SCT premises (Figure 8). It was a chamber music event entitled “Musical Kaleidoscope” (Figure 9, Figure 10, Figure 11 and Figure 12), featuring the internationally renowned artists Simos Papanas (violin), Dimos Goundaroulis (cello) and Vassilis Varvaresos (piano), who performed Robert Schumann’s Piano Trio No. 3 in G minor, Op. 110 and Franz Schubert’s Piano Trio No. 2 in E-flat major, Op. 100, D. 929. The music event was attended by more than 100 people. A detailed video of the AR application in use is available on the project’s website at https://digiorch.eu/?portfolio=mousiko-kaleidoskopio-gr (accessed on 22 November 2023).

Figure 8.

The State Conservatory of Thessaloniki (SCT).

Figure 9.

The cover of the music event brochure (10 December 2022) and the poster.

Figure 10.

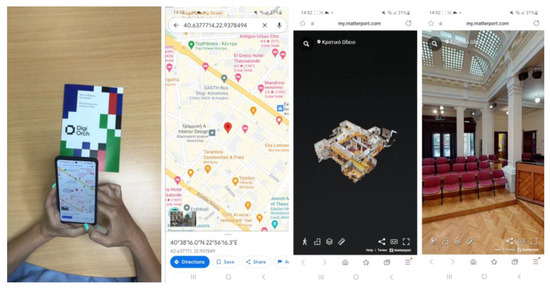

On the left, the cover of the analogue brochure and the smartphone app, showing (in the second image too) the icons of the geospatial location, the 360° VR tour (the event venue) and the transition to the content of the smart brochure. In the third image, the geospatial location of the event is activated, and in the last image, the 360° VR tour of the event venue is activated.

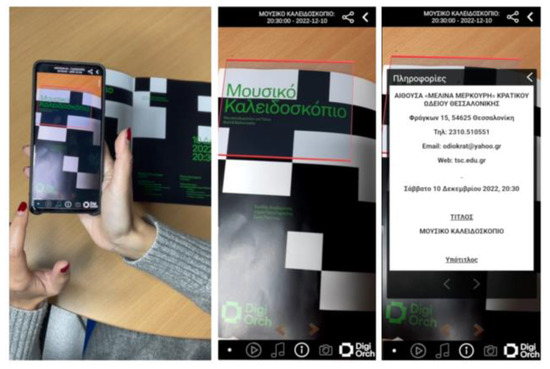

Figure 11.

On the left, the inside of the analogue print and the smartphone app. In the middle image (and also in the left image), the application recognizes the marker (text) and displays a red frame on the screen of the mobile device, through which descriptive information (text, right image) is provided.

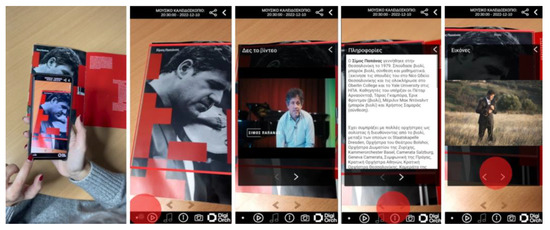

Figure 12.

On the left, the inside of the analogue brochure and the smartphone app. In the second image (and also in the first), the application recognizes the marker (image) and displays a red frame on the screen of the mobile device, through which a video of the artist’s interview about the specific music event (middle image), his biography in text (fourth image) and his photos (last image) are displayed.

A web-based application for questionnaire creation and sharing was used to evaluate the application (Table 1). To give the audience quick and easy access to the questionnaire, it was distributed through a unique link (Web link Share) and a QR code, both of which were integrated into the smart flyer of the event [36].

Table 1.

The questionnaire.

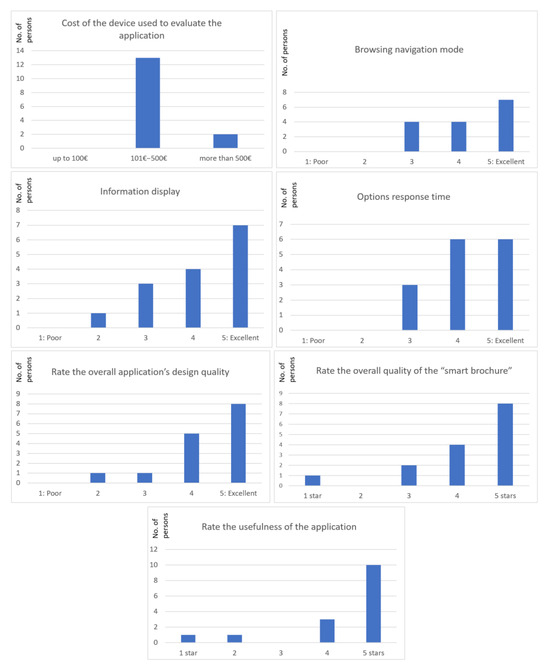

Fifteen (15) users evaluated the application during the break of the music event. The Digi-Orch research project, the AR application and how to use it were presented to the audience just before the start of the event. According to the results of the evaluation, 87% of the users were using mobile devices of average technology, indicating that the application does not need high-end hardware to operate (Figure 13). Of the users, 73% indicated that the browsing navigation mode and the information display are effective. The application’s response time was excellent for 40%, very good for another 40% and good for the remaining 20% of the users. These results indicate an effective application design and an efficient implementation of the algorithms used or developed, which allow its quick response time. Particularly high rates are observed in the scores for the application’s design quality, which 53% rated as excellent and 33% as very good. Of the users, 80% rated the quality of the “smart brochure” as excellent. Finally, thirteen (13) out of the fifteen (15) users who evaluated the application considered it very useful.

Figure 13.

The graphs refer to the evaluation conducted on 10 December 2022.
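With only 15 respondents, each reported percentage for the December 2022 evaluation should correspond to a whole number of users, so the figures can be cross-checked. The sketch back-computes the user counts implied by the reported percentages (rounded half up to the nearest user) and recomputes the rounded shares; the counts are derived from the published percentages, not taken from the raw questionnaire data, and the dictionary keys are shortened labels chosen for illustration.

```python
def implied_count(percent: int, n: int) -> int:
    """Nearest whole number of respondents for a reported percentage
    (round half up, using integer arithmetic to avoid float edge cases)."""
    return (percent * n + 50) // 100


def share(count: int, n: int) -> int:
    """Percentage of n respondents, rounded half up to a whole percent."""
    return (200 * count + n) // (2 * n)


N = 15  # respondents on 10 December 2022
reported = {
    "average-technology device": 87,
    "effective navigation and display": 73,
    "response time excellent": 40,
    "response time very good": 40,
    "response time good": 20,
    "design quality excellent": 53,
    "design quality very good": 33,
    "smart brochure excellent": 80,
}
counts = {k: implied_count(p, N) for k, p in reported.items()}
print(counts["average-technology device"], share(13, N))  # 13 87
```

Every reported percentage maps back to an integer number of users, and the three response-time categories account for all 15 respondents; the 13 of 15 users who found the application very useful likewise correspond to 87%.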

The final prototype was evaluated in real-life conditions on 16 June 2023, at an event held for this purpose at the SCT premises (Figure 14, Figure 15 and Figure 16). It was a chamber music concert entitled “HAYDN, BRAHMS, SHOSTAKOVICH—Chamber Music for Piano Trio” with the internationally renowned artists Andreas Papanikolaou (violin), Angelos Liakakis (cello) and Titos Gouvelis (piano), who performed Joseph Haydn’s Piano Trio in F-sharp minor, Hob. XV:26, Dmitri Shostakovich’s Piano Trio No. 1 in C minor, Op. 8, and Johannes Brahms’s Piano Trio No. 1 in B major, Op. 8. The concert was attended by more than 100 people. On the project’s website, https://digiorch.eu/?portfolio=mousiki-domatiou-trio-gr (accessed on 22 November 2023), readers can watch a detailed video on how to use the AR app, download the brochure and poster of the musical event, and install the app on an iOS or Android mobile device.

Figure 14.

The cover of the music event brochure (16 June 2023) and the poster.

Figure 15.

On the left, the cover of the analogue brochure and the smartphone app, showing (in the second image too) the geospatial location of the music event. In the last two images, the 360° VR tour of the event venue is activated.

Figure 16.

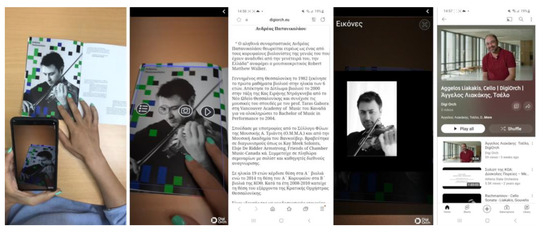

In the left image, the inside of the analogue brochure and the smartphone app. In the second image (and also in the first), the application recognizes the marker and draws a blue frame on the screen of the mobile device, through which descriptive information (text, third image), photos of the artist (fourth image) and an interview with the artist about this particular musical event, or videos of his other musical events (fifth image), are provided.

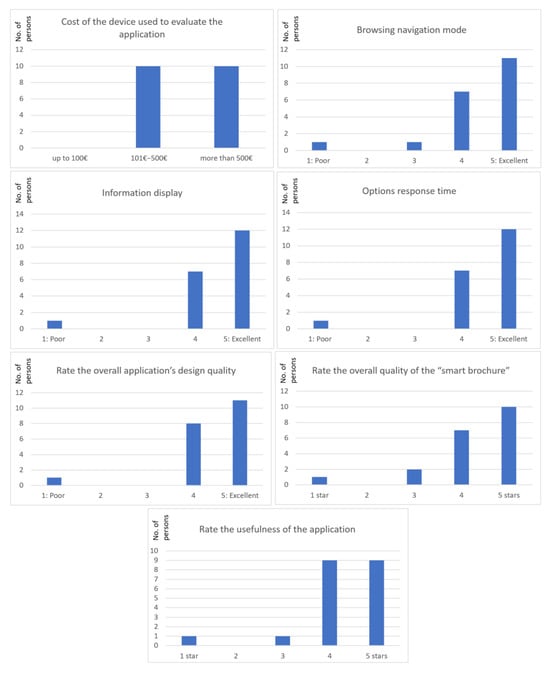

As in the first evaluation, a web-based application for questionnaire creation and sharing was used (Table 1), and the questionnaire was distributed to the audience through a unique link (Web link Share) and a QR code, both integrated into the smart flyer of the event. Twenty (20) users evaluated the application during the break of the concert. The Digi-Orch research project, the AR application and how to use it were presented to the audience just before the start of the event. In addition, the application’s installation links on Google Play and the App Store were shared with the audience so that they could install it on Android or iOS devices. According to the results of the evaluation, 50% of the users were using mobile devices of average technology, indicating that the application does not need high-end hardware to operate (Figure 17). This result is important because one of the research team’s main goals is for the application to run successfully on low-end mobile devices. Of the users, 90% indicated that the browsing navigation mode is effective, while 95% indicated that the information display is also effective. The application’s response time was excellent for 60%, and very good for another 45% of the users. These results indicate an effective application design and an efficient implementation of the algorithms used or developed, which allow its quick response time. Particularly high rates are observed in the scores for the application’s design quality, which 55% rated as excellent and 40% as very good. Of the users, 85% rated the quality of the “smart brochure” as excellent. Finally, eighteen (18) out of the twenty (20) users who evaluated the application considered it very useful.

Figure 17.

The graphs refer to the evaluation conducted on 16 June 2023.

6. Educational Version of the AR Application

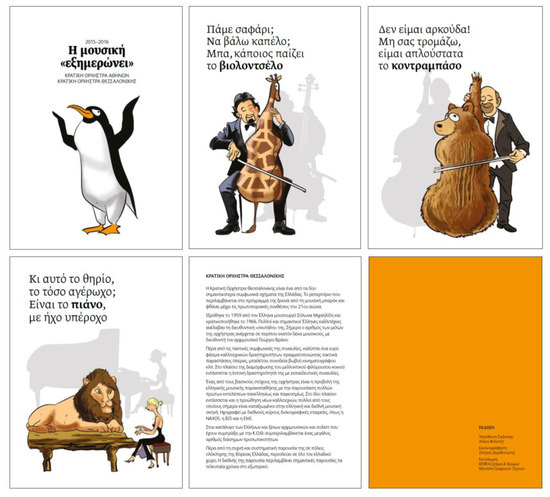

For the educational version of the AR application, the brochure “Music tames” was used, created by the Athens State Orchestra and the Thessaloniki State Orchestra within the framework of the programme “Music Everywhere” (funded by the Stavros Niarchos Foundation). It is intended for children’s musical education: it presents all the musical instruments of the symphony orchestra and draws similarities (with artistic license) between them and well-known members of the animal kingdom (Figure 18).

Figure 18.

Images from the cover, some of the inside pages and the back cover of the brochure “Music tames”.

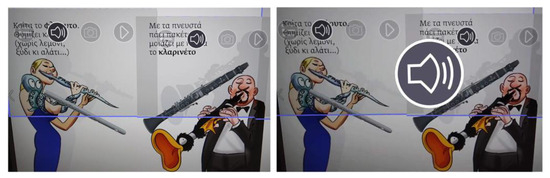

For each instrument of the symphony orchestra in the analogue brochure, the 3-D model of the instrument and its sound were added. This means that the young user, using the AR application, can at the same time see on his/her portable device the form of the musical instrument through a rotating 3-D model of the instrument and also hear its sound (Figure 19 and Figure 20).

Figure 19.

The 3-D model of the viola (and the violin) (left image) and the activation of the viola sound (right image) through the AR application.

Figure 20.

The 3-D model of the clarinet (and also the flute) (left image) and the clarinet sound activation (right image) through the AR application.
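A rotating 3-D model like those in Figures 19 and 20 can be obtained by applying, at each frame, a rotation about the model's vertical axis by an angle proportional to the elapsed time. The sketch shows the underlying math on bare vertex tuples, assuming a y-up coordinate system and an illustrative speed of 30 degrees per second; in the actual app the game engine would perform this transform, so the function names here are hypothetical.

```python
import math

DEGREES_PER_SECOND = 30.0  # illustrative spin speed


def rotate_y(vertex: tuple[float, float, float],
             angle_rad: float) -> tuple[float, float, float]:
    """Rotate a 3-D point (x, y, z) about the vertical y-axis."""
    x, y, z = vertex
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (c * x + s * z, y, -s * x + c * z)


def model_at_time(vertices: list[tuple[float, float, float]],
                  t_seconds: float) -> list[tuple[float, float, float]]:
    """Vertices of the model after spinning for t_seconds (one full turn
    every 360 / DEGREES_PER_SECOND seconds)."""
    angle = math.radians((DEGREES_PER_SECOND * t_seconds) % 360.0)
    return [rotate_y(v, angle) for v in vertices]
```

Because the rotation is about the vertical axis, the height (y) of every vertex is unchanged, so the instrument turns in place without drifting up or down.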

7. Discussion

Culture is clearly one of the fields that can benefit from an enhanced user experience through AR technologies. Information prepared in advance for each event, concert, museum, archaeological site, etc., can be delivered to our mobile devices through AR applications.

Digi-Orch is a research project that produces two products to enhance the experience of classical music audiences: smart printed communication material (e.g., posters, concert programme booklets) and an AR application. A key feature is that users can explore the application and the material at any time and in any order, selecting the information that interests them and the extent to which they want to be informed (personalization of information).

Regarding the addition and retrieval of data, three basic steps are followed: firstly, descriptive information on each event is added by the administrative user. Afterwards, the information is paired with specific digital markers. Lastly, automated processes of the CMS back end produce catalogue files, which are used for the retrieval of information and the dynamic update of the user interface.
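The third step, in which the CMS back end produces catalogue files that the app uses to retrieve information and update its interface, can be sketched as follows. The example assumes a JSON catalogue listing each content item with a SHA-256 digest, so that a low-end device downloads only items that are new or have changed; the file layout, key names and the digest-based update rule are assumptions made for illustration, not the project's documented format.

```python
import hashlib
import json


def build_catalogue(brochure_id: str, items: dict[str, bytes]) -> str:
    """Produce a JSON catalogue listing every content item with its digest."""
    entries = {name: hashlib.sha256(data).hexdigest()
               for name, data in sorted(items.items())}
    return json.dumps({"brochure": brochure_id, "items": entries}, sort_keys=True)


def items_to_download(new_catalogue: str, cached: dict[str, str]) -> list[str]:
    """Names of items that are new or changed compared with the local cache,
    where `cached` maps item name -> digest already stored on the device."""
    items = json.loads(new_catalogue)["items"]
    return sorted(name for name, digest in items.items()
                  if cached.get(name) != digest)
```

Comparing digests instead of timestamps lets the device skip unchanged videos and 3-D models entirely, which keeps update traffic small on mobile connections.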

This approach can make the application attractive to many cultural organizations and, more importantly, to younger audiences: because it uses new technologies, it can help them become more familiar with cultural events.

The system implementation and the developed algorithms can be used by numerous cultural organizations in Greece or abroad. The system demonstrates how technology can modernize the provision of information about classical music by directly connecting classical music with technology. Finally, younger people’s familiarity with new technologies can increase their interest in classical music and make it more accessible and attractive.

Digi-Orch was evaluated at TRL 6 (Technology Readiness Level, as defined by NASA and the EU Horizon programme) during a pilot concert that took place in December 2022. User feedback, together with suggestions from the developers and the other stakeholders, was assessed, and a revised final version of the application was produced for the second pilot concert in June 2023.

The educational version of the app further helps young music lovers understand the instruments of the orchestra through their sounds and their rotating 3-D models presented with AR technology. In this way, modern digital technology combines traditional physical (printed) materials with digital content, which can increase children’s participation in educational events featuring classical music.

Author Contributions

Conceptualization, P.P.; methodology, P.P. and T.R.; software, T.R. and K.K.; formal analysis, P.P., D.K. (Dimitris Kaimaris), T.R. and C.G.; investigation, all Authors; data curation, T.R., D.K. (Dimitris Kaimaris), G.-J.P., K.P. and D.K. (Dimitris Karadimas); writing—original draft preparation, all Authors; writing—review and editing, D.K. (Dimitris Kaimaris); visualization, T.R. and K.K.; supervision, P.P.; project administration, P.P. and D.K. (Dimitris Kaimaris); funding acquisition, P.P., G.-J.P., K.P. and D.K. (Dimitris Karadimas). All authors have read and agreed to the published version of the manuscript.

Funding

This research was carried out in the context of the project Digi-Orch: Development of a Model System for the Visualization of Information of the Cultural Activities and Events of the Thessaloniki State Conservatory (Τ6ΥΒΠ-00416, MIS 5056218). The project is co-financed by Greece and the European Union (European Regional Development Fund) through the Operational Program Competitiveness, Entrepreneurship and Innovation 2014–2020, Special Actions “Aquaculture”—“Industrial Materials”—“Open Innovation in Culture”.

Data Availability Statement

All data are available from the corresponding author upon reasonable request.

Conflicts of Interest

Author Kostas Poulopoulos was employed by the company Beetroot. Author Dimitris Karadimas was employed by the company Vision Business Consultants. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385. [Google Scholar] [CrossRef]

- Parekh, P.; Patel, S.; Patel, N.; Shah, M. Systematic review and meta-analysis of augmented reality in medicine, retail, and games. Vis. Comput. Ind. Biomed. Art 2020, 3, 21.

- Vlahakis, V.; Karigiannis, J.; Tsotros, M.; Gounaris, M.; Almeida, L.; Stricker, D.; Gleue, T.; Christou, I.T.; Carlucci, R.; Ioannidis, N. Archeoguide: First results of an augmented reality, mobile computing system in cultural heritage sites. In Proceedings of the 2001 Conference on Virtual Reality, Archeology, and Cultural Heritage, Glyfada, Greece, 28–30 November 2001; pp. 131–140.

- Wojciechowski, R.; Walczak, K.; White, M.; Cellary, W. Building virtual and augmented reality museum exhibitions. In Proceedings of the Ninth International Conference on 3D Web Technology (Web3D), Monterey, CA, USA, 5–8 April 2004.

- Bekele, M.K.; Pierdicca, R.; Frontoni, E.; Malinverni, E.S.; Gain, J. A Survey of Augmented, Virtual, and Mixed Reality for Cultural Heritage. J. Comput. Cult. Herit. 2018, 11, 1–36.

- González Vargas, J.C.; Fabregat, R.; Carrillo-Ramos, A.; Jové, T. Survey: Using Augmented Reality to Improve Learning Motivation in Cultural Heritage Studies. Appl. Sci. 2020, 10, 897.

- Antoniou, P.; Bamidis, P. 3D printing and virtual and augmented reality in medicine and surgery: Tackling the content development barrier through co-creative approaches. In 3D Printing: Applications in Medicine and Surgery; Papadopoulos, V.N., Tsioukas, V., Suri, J., Eds.; Elsevier: Amsterdam, The Netherlands, 2022; Volume 2, pp. 77–79.

- Acidi, B.; Ghallab, M.; Cotin, S.; Vibert, E.; Golse, N. Augmented Reality in liver surgery. J. Visc. Surg. 2023, 60, 2.

- De Lima, C.B.; Walton, S.; Owen, T. A critical outlook at augmented reality and its adoption in education. Comput. Educ. Open 2022, 3, 100103.

- Grodotzki, J.; Müller, B.T.; Tekkaya, A.E. Introducing a general-purpose augmented reality platform for the use in engineering education. Adv. Ind. Manuf. Eng. 2023, 6, 100116.

- Nikashemi, S.R.; Knight, H.H.; Nusair, K.; Liat, C.B. Augmented reality in smart retailing: A(n) (A)Symmetric Approach to continuous intention to use retail brands’ mobile AR apps. J. Retail. Consum. Serv. 2021, 60, 102464.

- Alesanco-Llorente, M.; Reinares-Lara, E.; Pelegrín-Borondo, J.; Olarte-Pascual, C. Mobile-assisted showrooming behavior and the r(evolution) of retail: The moderating effect of gender on the adoption of mobile augmented reality. Technol. Forecast. Soc. Chang. 2023, 191, 122514.

- Kasapakis, V.; Gavalas, D.; Galatis, P. Augmented reality in cultural heritage: Field of view awareness in an archaeological site mobile guide. J. Ambient Intell. Smart Environ. 2016, 8, 501–514.

- Serravalle, F.; Ferraris, A.; Vrontis, D.; Thrassou, A.; Christofi, M. Augmented reality in the tourism industry: A multi-stakeholder analysis of museums. Tour. Manag. Perspect. 2019, 32, 100549.

- Boboc, R.G.; Gîrbacia, F.; Duguleană, M.; Tavčar, A. A handheld Augmented Reality to revive a demolished Reformed Church from Braşov. In Proceedings of the Virtual Reality International Conference—Laval Virtual, Laval, France, 22–24 March 2017.

- Lavoye, V.; Mero, J.; Tarkiainen, A. Augmented Reality in Retail and E-commerce: A Literature Review: An Abstract. In From Micro to Macro: Dealing with Uncertainties in the Global Marketplace. AMSAC 2020; Pantoja, F., Wu, S., Eds.; Springer: Cham, Switzerland, 2022.

- Laverdière, C.; Corban, J.; Khoury, J.; Ge, S.M.; Schupbach, J.; Harvey, E.J.; Reindl, R.; Martineau, P.A. Augmented reality in orthopaedics: A systematic review and a window on future possibilities. Bone Jt. J. 2019, 101-B, 1479–1488.

- Mystakidis, S.; Christopoulos, A.; Pellas, N. A systematic mapping review of augmented reality applications to support STEM learning in higher education. Educ. Inf. Technol. 2022, 27, 1883–1927.

- Li, C.; Zheng, P.; Li, S.; Pang, Y.; Lee, C.K.M. AR-assisted digital twin-enabled robot collaborative manufacturing system with human-in-the-loop. Robot. Comput. Integr. Manuf. 2022, 76, 102321.

- Franz, J.; Alnusayri, M.; Malloch, J.; Reilly, D. A Comparative Evaluation of Techniques for Sharing AR Experiences in Museums. Proc. ACM Hum. Comput. Interact. 2019, 3, 124.

- Ambre, T.P.; Khalane, P.P.; Kanjiya, S.D.; Kenny, J. Implementation of the 3D Digitalized Brochure using Marker-based Augmented Reality for Real Estates. In Proceedings of the Second International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 15–17 July 2020; pp. 483–487.

- Oke, A.E.; Arowoiya, V.A. An analysis of the application areas of augmented reality technology in the construction industry. Smart Sustain. Built Environ. 2022, 11, 1081–1098.

- Sutherland, I.E. A head-mounted three-dimensional display. In Proceedings of the Fall Joint Computer Conference, Part I, San Francisco, CA, USA, 9–11 December 1968; pp. 757–764.

- Martinez, H.; Skoumetou, D.; Hyppola, J.; Laukkanen, S.; Heikkila, A. Drivers and bottlenecks in the adoption of augmented reality applications. J. Multimed. Theory Appl. 2014, 2, 27–44.

- Henrysson, A.; Ollila, M.; Billinghurst, M. Mobile phone based AR scene assembly. In Proceedings of the MUM, Christchurch, New Zealand, 8–10 December 2005; pp. 95–102.

- Reilly, D.; Rodgers, M.; Argue, R.; Nunes, M.; Inkpen, K. Marked-up maps: Combining paper maps and electronic information resources. Pers. Ubiquitous Comput. 2006, 10, 215–226.

- Morrison, A.; Oulasvirta, A.; Peltonen, P.; Lemmelä, S.; Jacucci, G.; Reitmayr, G.; Näsänen, J.; Juustila, A. Like bees around the hive: A comparative study of a mobile augmented reality map. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 1889–1898.

- Morrison, A.; Mulloni, A.; Lemmelä, S.; Oulasvirta, A.; Jacucci, G.; Peltonen, P.; Schmalstieg, D.; Regenbrecht, H. Collaborative use of mobile augmented reality with paper maps. Comput. Graph. 2011, 35, 789–799.

- Adithya, C.; Kowsik, K.; Namrata, D.; Nageli, S.V.; Shrivastava, S.; Rakshit, S. Augmented reality approach for paper map visualization. In Proceedings of the International Conference on Communication and Computational Intelligence (INCOCCI), Erode, India, 27–29 December 2010; pp. 352–356.

- Billinghurst, M.; Dünser, A. Augmented Reality in the Classroom. IEEE Comput. 2012, 45, 99.

- Billinghurst, M.; Kato, H.; Poupyrev, I. The MagicBook: A transitional AR interface. Comput. Graph. 2001, 25, 745–753.

- Dünser, A.; Walker, L.; Horner, H.; Bentall, D. Creating interactive physics education books with augmented reality. In Proceedings of the 24th Australian Computer-Human Interaction Conference, Melbourne, Australia, 26–30 November 2012; pp. 107–114.

- Grubert, J.; Langlotz, T.; Zollmann, S.; Regenbrecht, H. Towards pervasive augmented reality: Context-awareness in augmented reality. IEEE Trans. Vis. Comput. Graph. 2016, 23, 1.

- Li, X.X.; Yi, W.; Chi, H.L.; Wang, X.; Chan, A.P. A Critical review of virtual and augmented reality (VR/AR) applications in construction safety. Autom. Constr. 2018, 86, 150–162.

- Shakil, A. A review on using opportunities of augmented reality and virtual reality in construction project management. Organ. Technol. Manag. Constr. 2019, 11, 1839–1852.

- Patias, P.; Roustanis, T.; Klimantakis, K.; Kaimaris, D.; Chalkidou, S.; Christoforidis, I. A workflow for validation and evaluation of a Dynamic Content Management System for cultural events. In Proceedings of the International Cartographic Association, Commission on Cartographic Heritage into the Digital, 17th ICA Conference on Digital Approaches to Cartographic Heritage, Thessaloniki, Greece, 24–26 May 2023; pp. 196–207.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).