Design of Sensor Data Processing Software for the ISO 23150 Standard: Application to Autonomous Vehicle Software

Abstract

1. Introduction

2. ISO 23150 Standard in Autonomous Driving Software

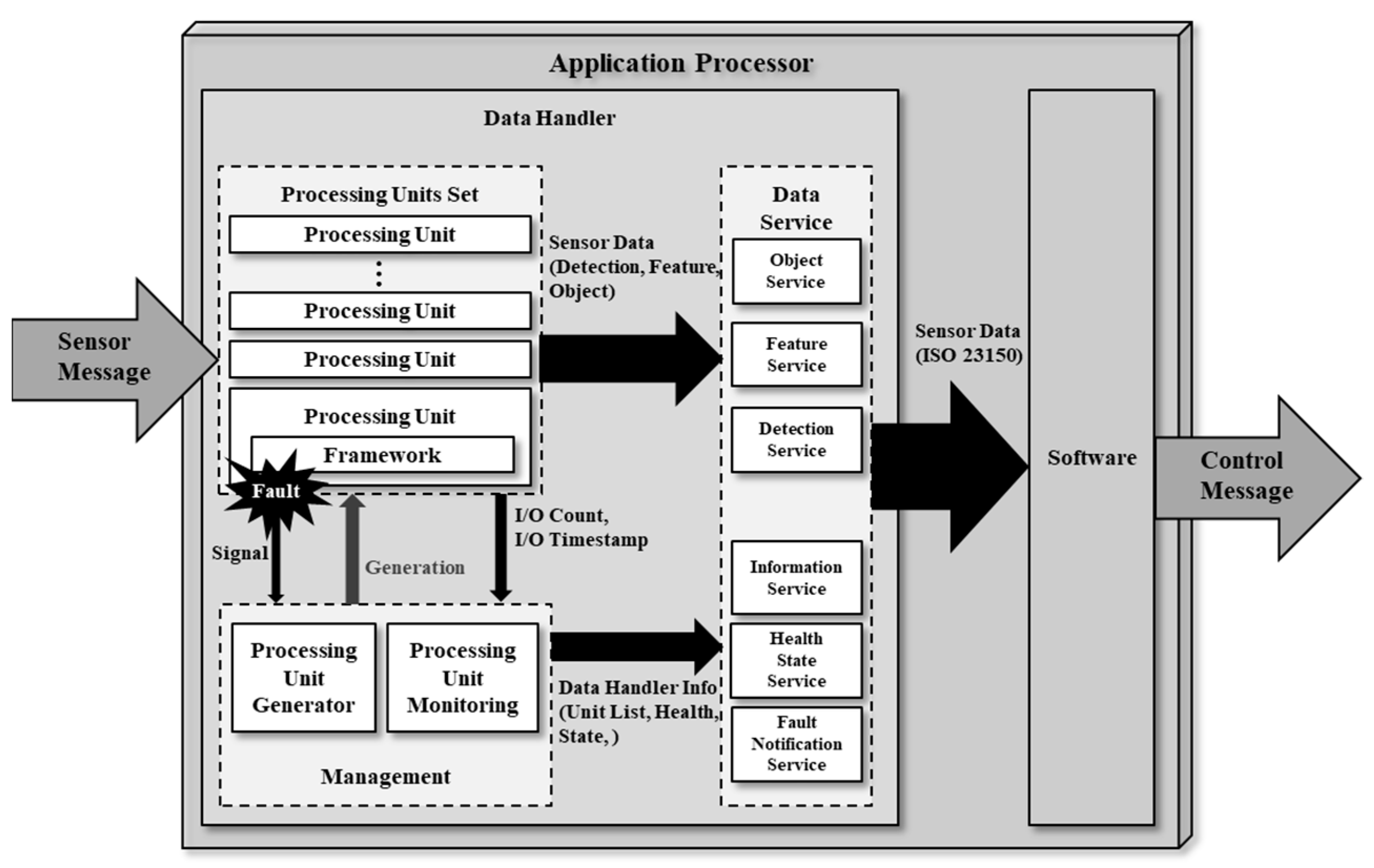

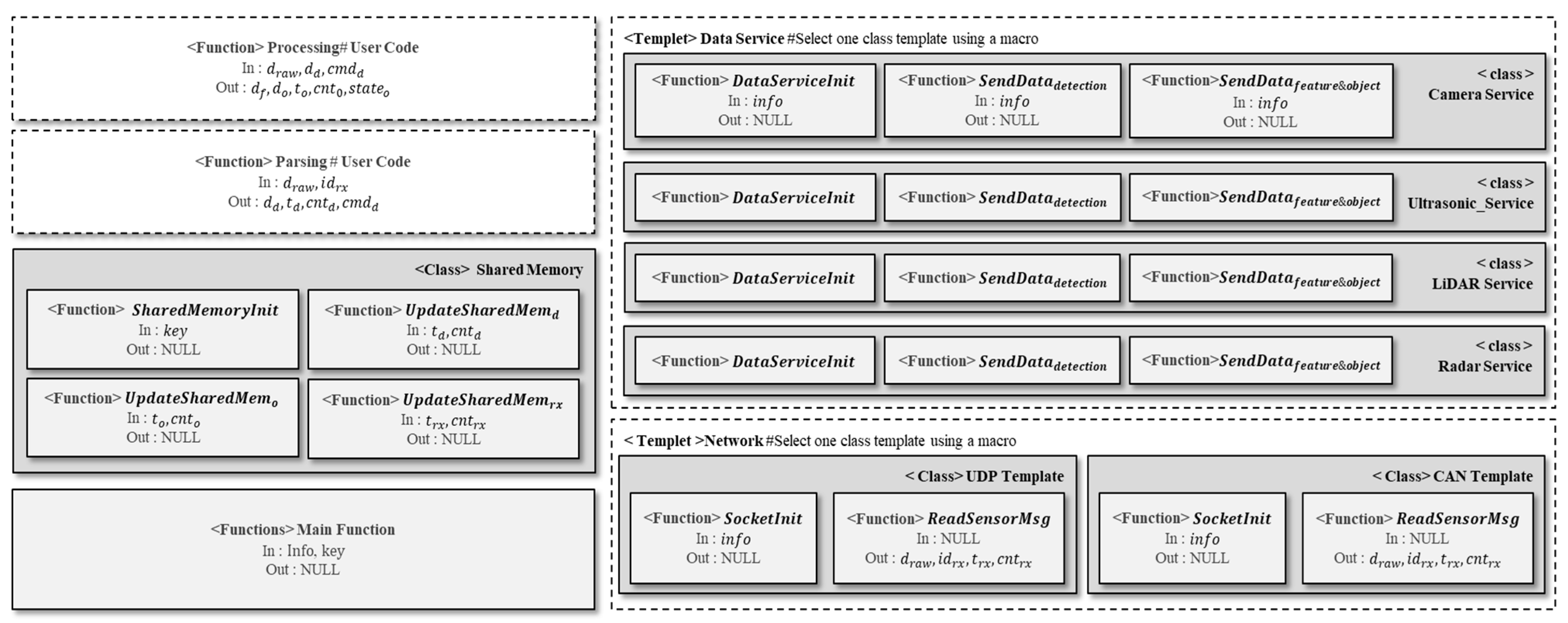

3. Software Architecture Design

3.1. Data Service

3.2. Management Unit

| Algorithm 1. Management Unit | |
|---|---|
| 1: | |
| 2: | /* Read the information of the sensors used for autonomous driving. */ |
| 3: | |
| 4: | /* Initialize the shared memory to receive the status of the Processing Unit. */ |
| 5: | |
| 6: | /* Read sensor information one by one (iterating for the number of sensors). */ |
| 7: | |
| 8: | /* Execute the Processing Unit for a specific sensor. */ |
| 9: | |
| 10: | /* Execute the Fault Monitoring Unit to detect faults in the Processing Unit. */ |
| 11: | |
| 12: | end for |
| 13: | /* Execute the Health Monitoring Unit to receive the status information of the Processing Unit. */ |
| 14: | |
| 15: | |
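The start-up sequence in Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation: the sensor names, the `processing_unit` stand-in, and the use of `multiprocessing` with the `fork` start method are all assumptions made for illustration. Fault monitoring is reduced here to collecting exit codes, and health monitoring to reading the shared status array once.

```python
import multiprocessing as mp

# Hypothetical sensor list; the real configuration format is not given in the text.
SENSORS = ["front_radar", "front_camera", "roof_lidar"]


def processing_unit(index, status):
    """Stand-in processing unit: marks its status slot as alive and exits."""
    status[index] = 1


def management_unit(sensors):
    """Start one process per sensor and return (status slots, exit codes)."""
    ctx = mp.get_context("fork")           # assumes a Unix-like host such as Linux
    status = ctx.Array("i", len(sensors))  # shared status slots, zero-initialized
    units = []
    for index, name in enumerate(sensors):
        # One processing unit per sensor, each in its own process.
        unit = ctx.Process(target=processing_unit, args=(index, status), name=name)
        unit.start()
        units.append(unit)
    for unit in units:
        unit.join()
    # A nonzero exit code would indicate a faulty processing unit.
    exit_codes = {unit.name: unit.exitcode for unit in units}
    return status[:], exit_codes
```

Calling `management_unit(SENSORS)` returns the status slots written by the children together with their exit codes. Running each unit in its own process is what lets a failure in one unit leave the others untouched.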

| Algorithm 2. Fault Monitoring Unit | |
|---|---|
| 1: | |
| 2: | /* Initialize the service for notifying SWC of Processing Unit faults. */ |
| 3: | |
| 4: | /* Configured as an infinite loop to run until a fault occurs. */ |
| 5: | while true do |
| 6: | /* Receive signals sent from the Processing Unit. */ |
| 7: | |
| 8: | /* If the received signal is the SIGCHLD signal, measure the fault detection time and then notify SWC. */ |
| 9: | |
| 10: | |
| 11: | |
| 12: | |
| 13: | end if |
| 14: | end while |
| 15: | return NULL |
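The signal-based detection in Algorithm 2 can be sketched as follows, under several assumptions: the processing unit is a forked child, the monitor receives `SIGCHLD` synchronously with `signal.sigtimedwait` (available on Linux), and `notify` is a hypothetical callback standing in for the SWC notification service. The `elapsed_s` value is only the time from fork to signal receipt, a placeholder for the fault detection time measured in the paper.

```python
import os
import signal
import time


def watch_processing_unit(child_main, notify, timeout_s=5.0):
    """Fork a stand-in processing unit and report its termination via notify()."""
    # Block SIGCHLD so that it stays pending and can be received synchronously.
    old_mask = signal.pthread_sigmask(signal.SIG_BLOCK, {signal.SIGCHLD})
    try:
        started = time.monotonic()
        pid = os.fork()
        if pid == 0:
            # Child process: run the unit, then exit without returning to the caller.
            code = 1
            try:
                code = int(child_main())
            finally:
                os._exit(code)
        # Parent process: wait for a signal sent on behalf of the child.
        info = signal.sigtimedwait({signal.SIGCHLD}, timeout_s)
        if info is None or info.si_signo != signal.SIGCHLD:
            return None  # no termination observed within the timeout
        elapsed = time.monotonic() - started
        _, wait_status = os.waitpid(pid, 0)  # reap the terminated child
        fault = {
            "pid": pid,
            "exit_code": os.waitstatus_to_exitcode(wait_status),
            "elapsed_s": elapsed,
        }
        notify(fault)
        return fault
    finally:
        signal.pthread_sigmask(signal.SIG_SETMASK, old_mask)
```

For example, `watch_processing_unit(lambda: 3, print)` forks a child that exits with code 3 and prints the resulting fault record. Because a processing unit is expected to loop indefinitely, the sketch treats any termination as a fault.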

| Algorithm 3. Health Monitoring Unit | |
|---|---|
| 1: | |
| 2: | /* Initialize the service for providing the status information of processing units to SWC. */ |
| 3: | |
| 4: | while true do |
| 5: | /* Receive the status information of processing units stored in the shared memory. */ |
| 6: | |
| 7: | /* Provide the status information of processing units to SWCs through a data service. */ |
| 8: | |
| 9: | /* Provide the status information of processing units at approximately a 1-second interval. */ |
| 10: | |
| 11: | end while |
| 12: | |
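A minimal sketch of the polling loop in Algorithm 3 is given below. The status record layout (`STATUS_FORMAT`), the `publish` callback that stands in for the data service, and the `cycles` parameter (added only so that a demonstration can terminate) are assumptions and do not come from the paper.

```python
import struct
import time
from multiprocessing import shared_memory

# Hypothetical record layout per processing unit: loop counter and state flag.
STATUS_FORMAT = "<II"
STATUS_SIZE = struct.calcsize(STATUS_FORMAT)


def read_statuses(buf, unit_count):
    """Decode one status record per processing unit from the shared buffer."""
    return [
        struct.unpack_from(STATUS_FORMAT, buf, index * STATUS_SIZE)
        for index in range(unit_count)
    ]


def health_monitor(buf, unit_count, publish, interval_s=1.0, cycles=None):
    """Publish the status of all units every interval_s seconds."""
    done = 0
    while cycles is None or done < cycles:
        publish(read_statuses(buf, unit_count))
        time.sleep(interval_s)  # roughly a 1-second period by default
        done += 1
```

A caller would create the segment with `shared_memory.SharedMemory(create=True, size=unit_count * STATUS_SIZE)` and pass its `buf` attribute to `health_monitor`. With `cycles=None` the loop runs indefinitely, as in the algorithm.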

3.3. Processing Unit

| Algorithm 4. Processing Unit | |
|---|---|
| 1: | |
| 2: | /* Initialize CAN or UDP sockets for receiving sensor data messages. */ |
| 3: | |
| 4: | /* Initialize a SOME/IP-based Data Service for transmitting sensor data compliant with ISO 23150. */ |
| 5: | |
| 6: | /* Initialize Shared Memory for transmitting the status information of the Processing Unit. */ |
| 7: | |
| 8: | while true do |
| 9: | /* Receive sensor messages transmitted from the sensor. */ |
| 10: | |
| 11: | /* Provide the count of received sensor messages and their reception times. */ |
| 12: | |
| 13: | /* Generate detection data. (User code.) */ |
| 14: | |
| 15: | /* Provide the count and timestamps of detection data generation. */ |
| 16: | /* Send detection data using SOME/IP. */ |
| 17: | /* Generate feature data and object data. (User code.) */ |
| 18: | |
| 19: | /* Provide the count and timestamps of feature data and object data generation. */ |
| 20: | /* Send object data and feature data using SOME/IP. */ |
| 21: | end while |
| 22: | |
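The receive, process, and publish loop of Algorithm 4 can be sketched as below. This is an illustration under assumptions rather than the paper's code: `generate_detections` and `generate_objects` are hypothetical placeholders for the user code, the `status` dictionary stands in for the shared memory, and `publish` stands in for the SOME/IP data service. Any datagram socket can be passed in, for example a UDP socket bound to the sensor port.

```python
import socket
import time


def new_status():
    """Status record that a processing unit would expose through shared memory."""
    return {"rx_count": 0, "rx_time": None,
            "detection_count": 0, "detection_time": None,
            "object_count": 0, "object_time": None}


def generate_detections(payload):
    """User code placeholder: treat every payload byte as one detection."""
    return list(payload)


def generate_objects(detections):
    """User code placeholder: summarize the detections as a single object record."""
    return {"count": len(detections)}


def record(status, stage):
    """Update the counter and timestamp of one processing stage."""
    status[stage + "_count"] += 1
    status[stage + "_time"] = time.monotonic()


def processing_unit(sock, publish, status, max_messages=None):
    """Receive sensor messages, run the user code, and publish the results."""
    while max_messages is None or status["rx_count"] < max_messages:
        payload = sock.recv(65535)   # one sensor message per datagram
        record(status, "rx")
        detections = generate_detections(payload)
        record(status, "detection")
        publish("detection", detections)
        objects = generate_objects(detections)
        record(status, "object")
        publish("object", objects)
```

The `max_messages` argument exists only so that the loop can terminate in a demonstration. Leaving it as `None` gives the infinite loop described in the algorithm.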

4. Experiments

4.1. Real-Time Performance Evaluation

4.2. Software Safety Evaluation

5. Conclusions

- Dependence on the ISO 23150 standard: The software is built around the ISO 23150 standard, which constrains its scalability and adaptability. To overcome these limitations, we plan to extend the standard or develop adaptable layers that accommodate diverse requirements.

- Improvements in Linux-based software (Linux kernel 4.19.59-rt24): The performance and security of the Linux-based software need to be enhanced. In future research, we plan to introduce kernel-level optimizations and strengthen security mechanisms to stabilize the software.

- Handling large volumes of sensor data: Further research is required to handle large volumes of sensor data effectively and to optimize their processing for autonomous driving scenarios. We plan to investigate data compression and distributed processing technologies to overcome bottlenecks and enhance real-time processing capabilities.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Data Size | 4 Processing Units | 6 Processing Units | 8 Processing Units | 10 Processing Units |
|---|---|---|---|---|
| 400 | scenario 4-400 | scenario 6-400 | scenario 8-400 | scenario 10-400 |
| 600 | scenario 4-600 | scenario 6-600 | scenario 8-600 | scenario 10-600 |
| 800 | scenario 4-800 | scenario 6-800 | scenario 8-800 | scenario 10-800 |
| 1000 | scenario 4-1000 | scenario 6-1000 | scenario 8-1000 | scenario 10-1000 |
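The scenario names in the table combine the number of processing units with the data size. As a small illustration, the grid can be generated programmatically; the helper name `scenario_names` is hypothetical, and the values are taken from the scenario labels in the table.

```python
from itertools import product

UNIT_COUNTS = [4, 6, 8, 10]         # number of processing units
DATA_SIZES = [400, 600, 800, 1000]  # data sizes used in the scenario labels


def scenario_names(unit_counts, data_sizes):
    """Return names of the form 'scenario <units>-<size>', grouped by data size."""
    return [
        f"scenario {units}-{size}"
        for size, units in product(data_sizes, unit_counts)
    ]
```

Iterating over the returned list visits the table row by row, which is convenient when one test run is launched per scenario.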

| Processing Units Set | Target Loop Count | Test Loop Count (Multiprocess) | Test Loop Count (Multithread) | Note |
|---|---|---|---|---|
| Processing Unit #1 | 10 | 10 | 5 | Normal operation |
| Processing Unit #2 | 10 | 10 | 5 | Normal operation |
| Processing Unit #3 | 10 | 10 | 5 | Normal operation |
| Processing Unit #4 | 10 | 5 | 5 | Runtime error on 5th loop |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, J.-Y.; Park, J.-H.; Kim, H.-J.; Lee, S. Design of Sensor Data Processing Software for the ISO 23150 Standard: Application to Autonomous Vehicle Software. Electronics 2023, 12, 4505. https://doi.org/10.3390/electronics12214505
