In this paper, we design a lightweight detection model for real-time traffic sign detection. The algorithm consists of three main parts: an offline data augmentation method, an improved SEDG-Yolov5 model architecture, and a training method based on response-based knowledge distillation.

3.1. Data Augmentation

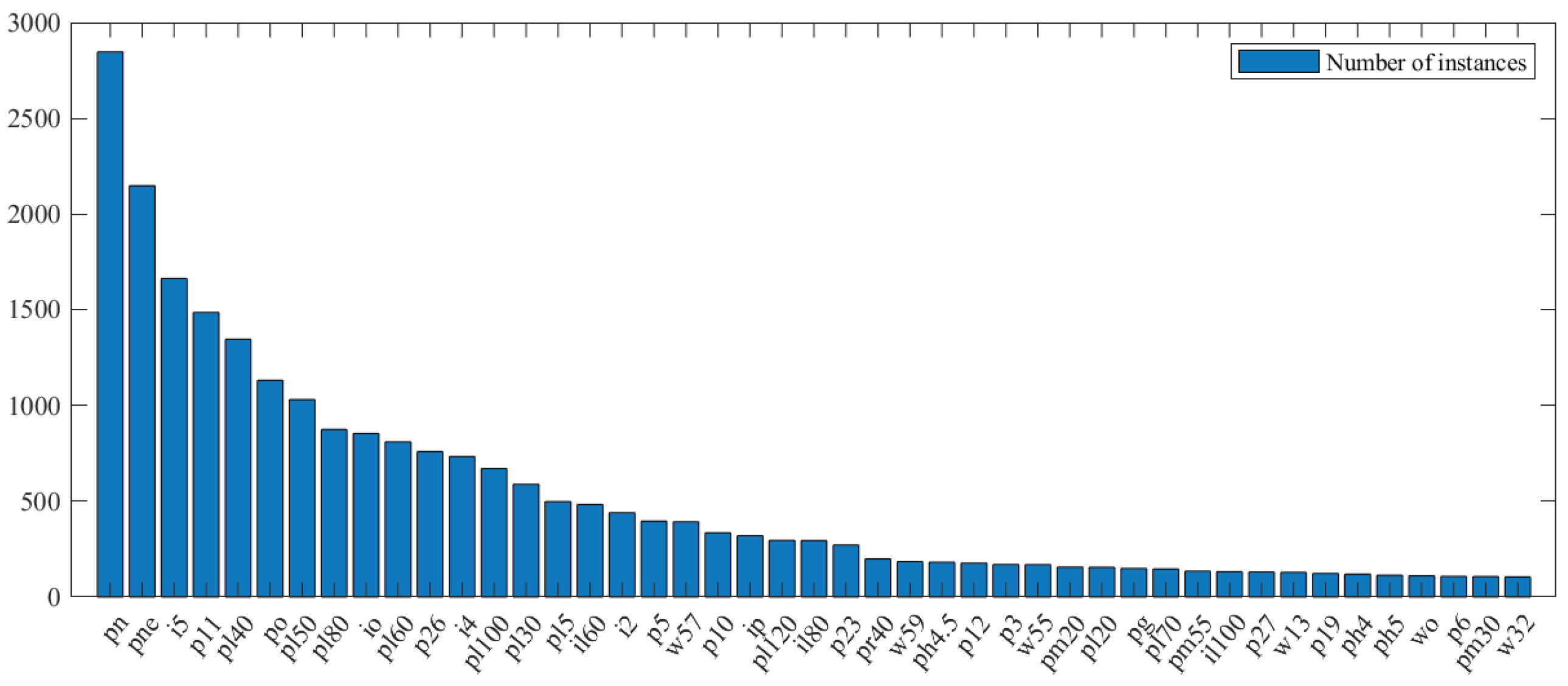

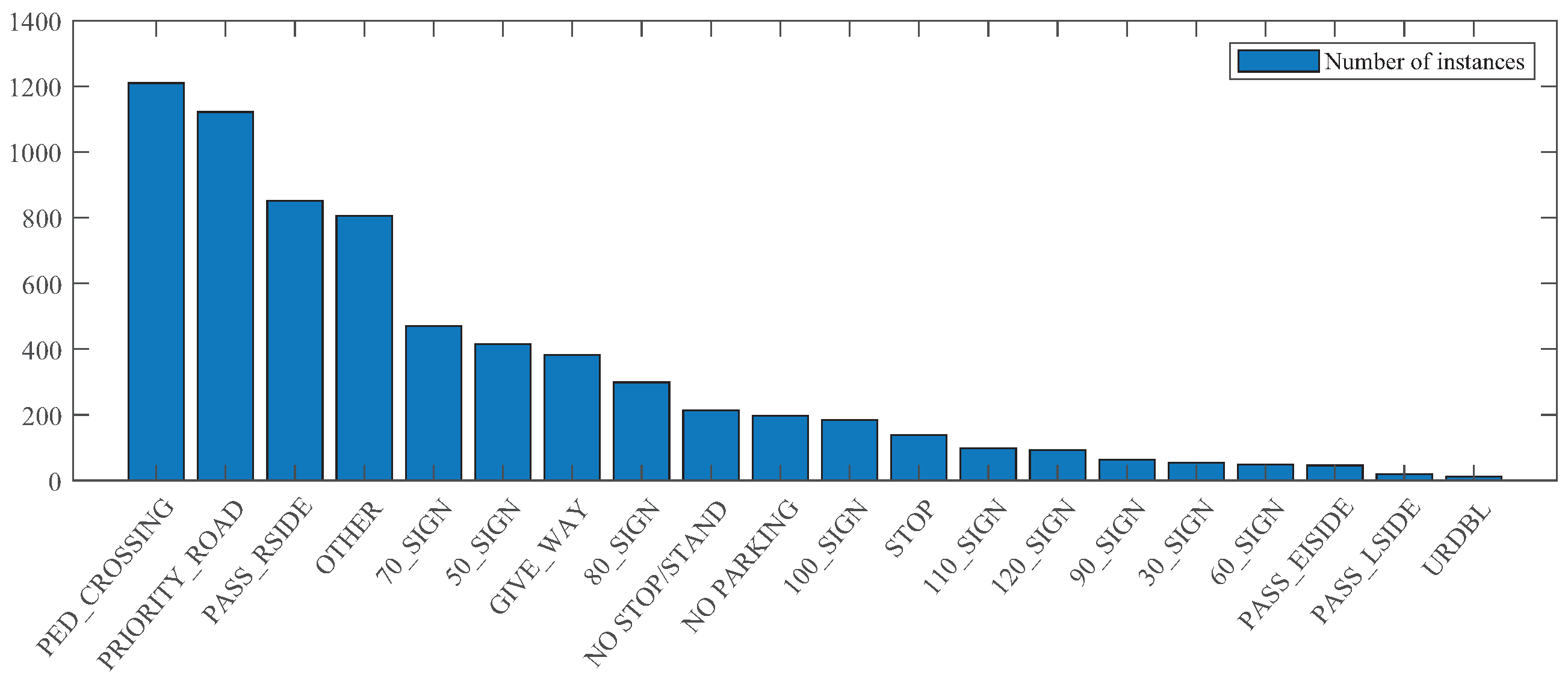

Complex imaging conditions and class imbalance in traffic sign datasets can make the results of a detection model deviate considerably. In addition, frequent missed detections of small targets weaken detection performance so much that the model cannot be applied in practice. Slicing Aided Hyper Inference (SAHI) [36] performs inference on smaller slices of high-resolution images without redesigning or retraining the detection model, and then merges the slice-level predictions back into predictions on the original image.

In this work, the SAHI approach is used as our local offline data augmentation. Specifically, the benchmark images are sliced with an overlap ratio of 0.2 and a sliding window size of . Slicing the original data increases the number of traffic sign instances and retains more useful information, which makes the detection model more robust and improves its detection performance.
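The slicing step can be sketched as follows. This is a minimal illustration and not the SAHI library API: the names `slice_windows` and `remap_boxes`, the 512-pixel window in the usage line, and the `min_visible` threshold for keeping partially cut boxes are hypothetical choices; only the 0.2 overlap ratio comes from the text.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


def _starts(length: int, win: int, step: int) -> List[int]:
    """Window start offsets along one axis; the last window is flush with the border."""
    if length <= win:
        return [0]
    starts = list(range(0, length - win, step))
    starts.append(length - win)
    return starts


def slice_windows(img_w: int, img_h: int, win: int, overlap: float = 0.2) -> List[Box]:
    """Square sliding windows covering the image with the given overlap ratio."""
    step = max(1, int(win * (1 - overlap)))
    return [
        (x, y, min(x + win, img_w), min(y + win, img_h))
        for y in _starts(img_h, win, step)
        for x in _starts(img_w, win, step)
    ]


def remap_boxes(boxes: List[Box], window: Box, min_visible: float = 0.5) -> List[Box]:
    """Clip annotation boxes to a window and express them in slice coordinates.

    Boxes that keep less than `min_visible` of their area (hypothetical threshold)
    are dropped.
    """
    wx0, wy0, wx1, wy1 = window
    kept = []
    for x0, y0, x1, y1 in boxes:
        ix0, iy0 = max(x0, wx0), max(y0, wy0)
        ix1, iy1 = min(x1, wx1), min(y1, wy1)
        if ix1 <= ix0 or iy1 <= iy0:
            continue  # box does not overlap this slice
        visible = (ix1 - ix0) * (iy1 - iy0) / ((x1 - x0) * (y1 - y0))
        if visible >= min_visible:
            kept.append((ix0 - wx0, iy0 - wy0, ix1 - wx0, iy1 - wy0))
    return kept


# Hypothetical 1000 x 600 image sliced with 512-pixel windows and 0.2 overlap.
windows = slice_windows(1000, 600, 512, overlap=0.2)
print(len(windows))  # 6
```

Each slice, together with its remapped boxes, becomes an extra training sample, which is how slicing multiplies the number of small-sign instances seen during training.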

3.2. Lightweight Network Architecture

The Yolov5 [15] detection model is an extended and improved version of the Yolo series of algorithms. The network is organized into four components: the input layer, the backbone network, the feature fusion layer (neck), and the prediction head. Compared with previous versions, the model substantially revises the depth and width of the network. Because it detects quickly while maintaining high detection precision, it has been widely studied as a benchmark model. The input layer generally applies mosaic data augmentation, which stitches four training images into a new sample image, enriching the backgrounds of the targets and strengthening the model's ability to detect weak targets. The feature fusion layer adopts an FPN+PAN structure to merge shallow feature maps, which carry strong localization information, with deep feature maps, which carry rich semantic information. The prediction head makes predictions on the fused feature maps and outputs the category with the highest confidence score together with the bounding box coordinates that locate each target.
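To make the mosaic step concrete, the sketch below computes where each of four images is pasted on a 2s x 2s canvas around a mosaic center (xc, yc), and how its boxes are shifted. It follows the placement logic we understand the public Yolov5 repository to use, but it is a simplified, hypothetical re-implementation: pixel copying, random sampling of the center, and the subsequent random affine crop are omitted, and the function names are our own.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def mosaic_placements(sizes: List[Tuple[int, int]], s: int, xc: int, yc: int):
    """For four images of size (w, h), return (canvas_region, source_region, (padw, padh)).

    Images are placed top-left, top-right, bottom-left and bottom-right of (xc, yc)
    on a 2s x 2s canvas.
    """
    out = []
    for i, (w, h) in enumerate(sizes):
        if i == 0:  # top-left
            x1a, y1a, x2a, y2a = max(xc - w, 0), max(yc - h, 0), xc, yc
            x1b, y1b, x2b, y2b = w - (x2a - x1a), h - (y2a - y1a), w, h
        elif i == 1:  # top-right
            x1a, y1a, x2a, y2a = xc, max(yc - h, 0), min(xc + w, 2 * s), yc
            x1b, y1b, x2b, y2b = 0, h - (y2a - y1a), min(w, x2a - x1a), h
        elif i == 2:  # bottom-left
            x1a, y1a, x2a, y2a = max(xc - w, 0), yc, xc, min(2 * s, yc + h)
            x1b, y1b, x2b, y2b = w - (x2a - x1a), 0, w, min(y2a - y1a, h)
        else:  # bottom-right
            x1a, y1a, x2a, y2a = xc, yc, min(xc + w, 2 * s), min(2 * s, yc + h)
            x1b, y1b, x2b, y2b = 0, 0, min(w, x2a - x1a), min(y2a - y1a, h)
        # Offset that maps source-image coordinates to canvas coordinates.
        out.append(((x1a, y1a, x2a, y2a), (x1b, y1b, x2b, y2b), (x1a - x1b, y1a - y1b)))
    return out


def shift_and_clip(boxes: List[Box], pad: Tuple[int, int], s: int) -> List[Box]:
    """Move boxes onto the canvas, clip them to [0, 2s], and drop boxes that vanish."""
    padw, padh = pad
    lim = 2 * s

    def clip(v: int) -> int:
        return min(max(v, 0), lim)

    moved = []
    for x0, y0, x1, y1 in boxes:
        b = (clip(x0 + padw), clip(y0 + padh), clip(x1 + padw), clip(y1 + padh))
        if b[2] > b[0] and b[3] > b[1]:
            moved.append(b)
    return moved


# Four 640 x 640 images with the mosaic center in the middle of a 1280 x 1280 canvas.
placements = mosaic_placements([(640, 640)] * 4, s=640, xc=640, yc=640)
print(shift_and_clip([(10, 10, 20, 20)], placements[1][2], s=640))  # [(650, 10, 660, 20)]
```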

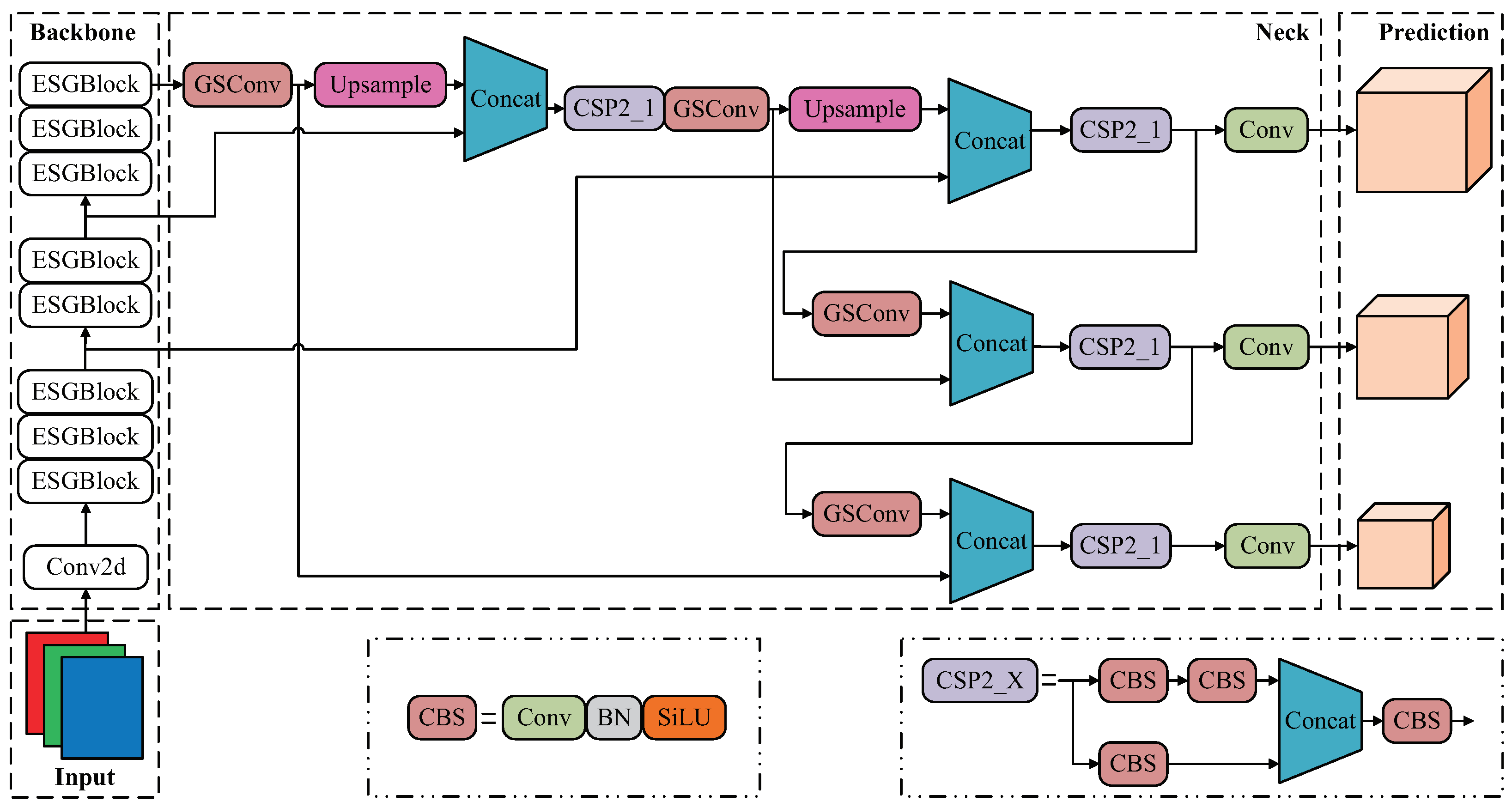

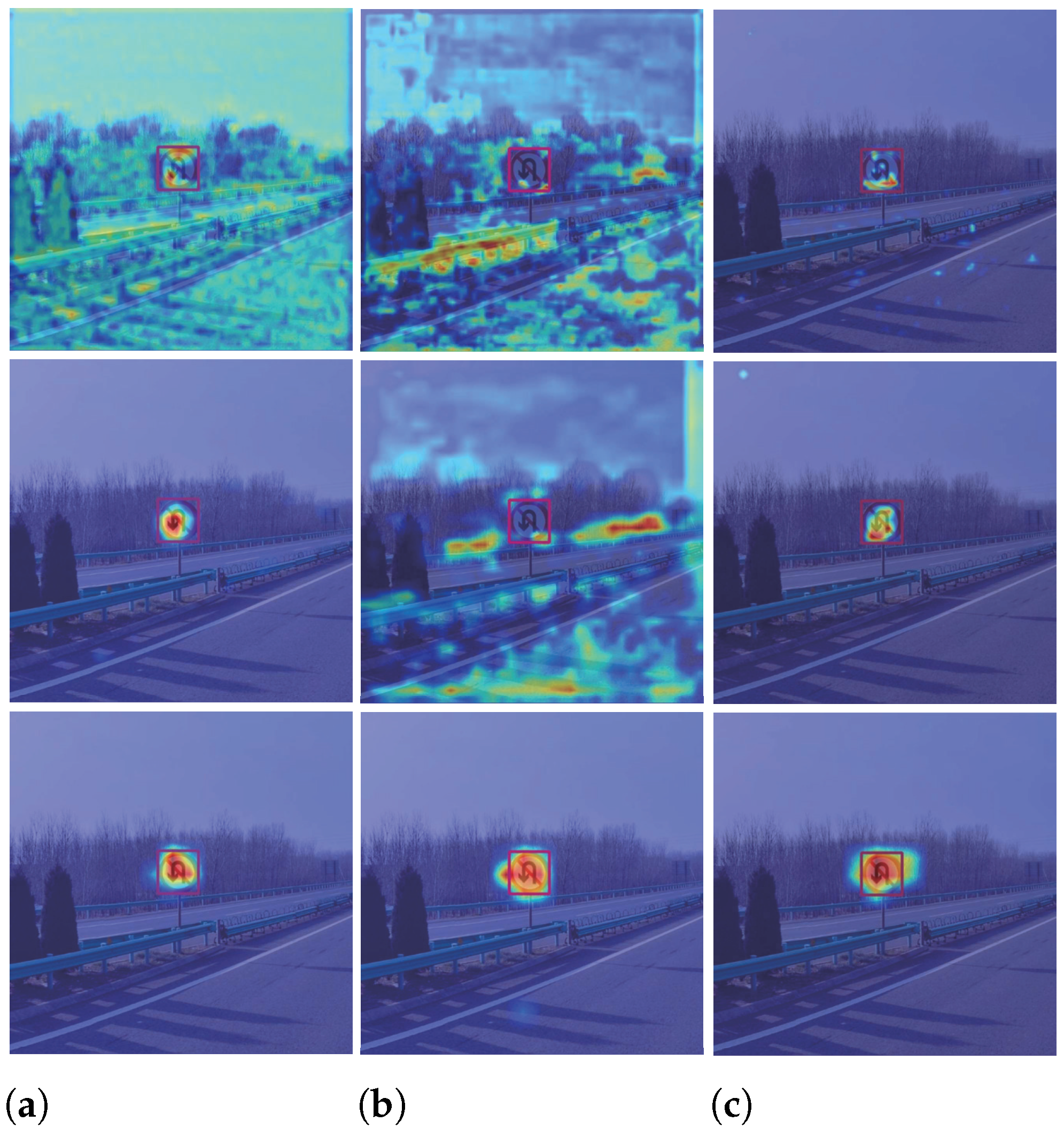

In this section, we present the structure of our proposed SEDG-Yolov5 detection model, shown in Figure 2. First, we propose ESGBlock, an attention-based block used to build a lightweight convolutional neural network that reconstructs the backbone of the detection model. ESGBlock reduces the loss of traffic sign information during feature extraction and allows the sampling process to take better account of the signs' channel information. Second, we adopt GSConv, proposed in [37], to replace part of the standard convolution operations in the feature fusion layer, which further reduces the complexity of the traffic sign detection model and effectively balances detection capability against detection speed.

3.2.1. ESGBlock

Many existing lightweight neural network models either based on manual design or automatic search utilize the inverted residual module as the basic constituent structure. However, its characteristic of inputting low-dimensional features will make it difficult for the model to retain enough valuable information. The Sandglass Bottleneck proposed in [

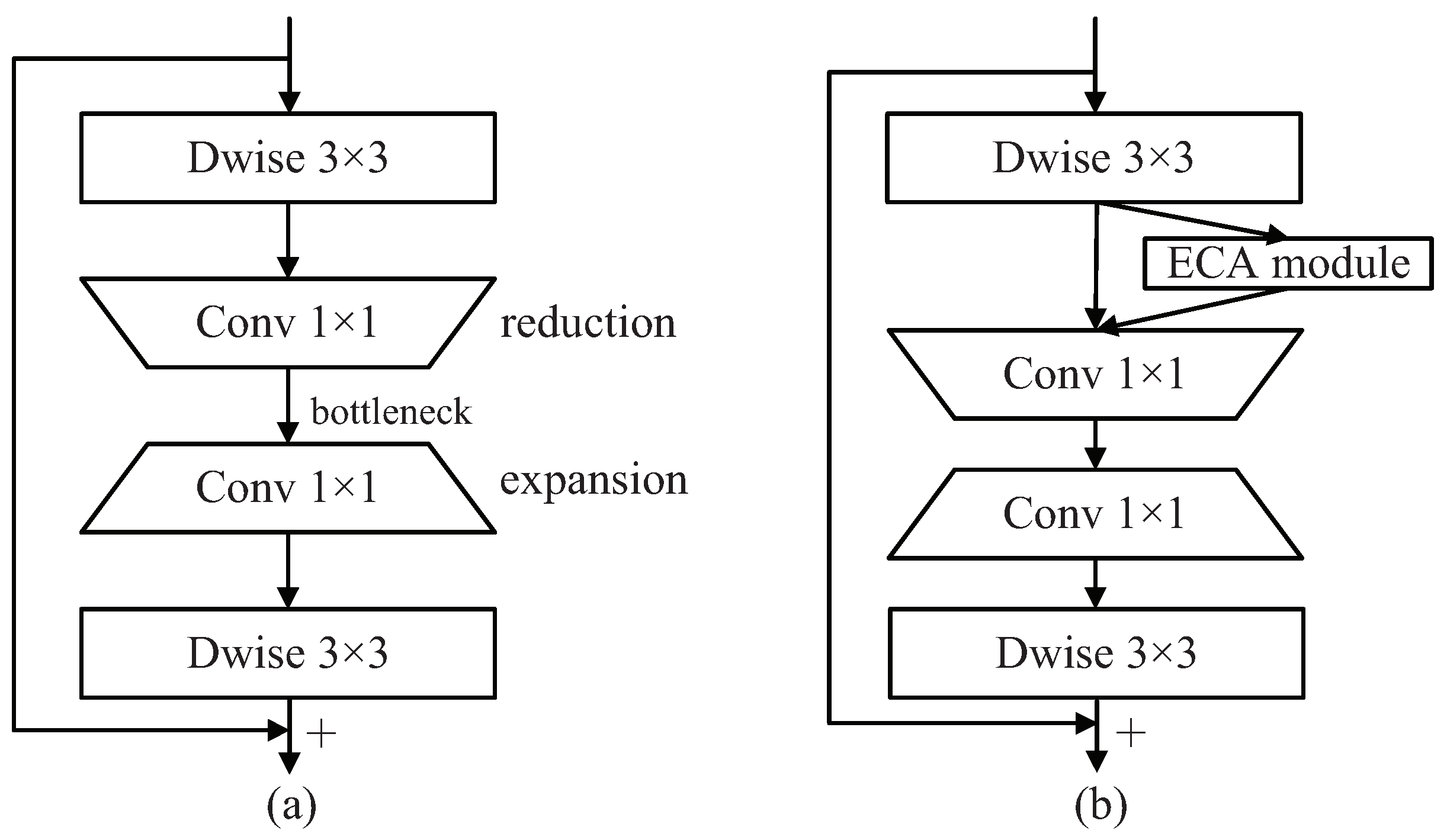

38] places the shortcut from the bottleneck structure between the high-dimensional feature representations based on inverted residual blocks. It applies deep convolution to encode spatial information on the high-dimensional features. The basic structure of the Sandglass Bottleneck is illustrated in

Figure 3a. The

point-by-point convolutional encoding of inter-channel information is used at the bottleneck structure so that the input feature maps are weighted and combined in the depth direction to obtain new feature information. In addition, the two depth separable convolutions at the head and tail retain more spatial information of the target, which contributes to the improvement of the detection performance.
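The main difference between the two blocks is where the shortcut sits. The sketch below, which ignores normalization, activations, and biases, lists the layer shapes of a Sandglass block and of an inverted residual block with the same high and low widths and counts their weights. The `Conv` helper, the function names, and the example widths (96 channels, reduction ratio 6) are illustrative assumptions, not values taken from [38] or from this paper.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Conv:
    kind: str  # "dw" = depthwise, "pw" = pointwise (1 x 1)
    c_in: int
    c_out: int
    k: int = 1

    def params(self) -> int:
        # Weight count only (no bias or BatchNorm parameters).
        if self.kind == "dw":
            return self.c_in * self.k * self.k  # one k x k filter per channel
        return self.c_in * self.c_out * self.k * self.k


def sandglass(c: int, t: int) -> List[Conv]:
    """Depthwise -> reduce -> expand -> depthwise; the shortcut joins the c-channel ends."""
    m = c // t
    return [Conv("dw", c, c, 3), Conv("pw", c, m), Conv("pw", m, c), Conv("dw", c, c, 3)]


def inverted_residual(c: int, t: int) -> List[Conv]:
    """Expand -> depthwise -> project; the shortcut joins the (c // t)-channel ends."""
    m = c // t
    return [Conv("pw", m, c), Conv("dw", c, c, 3), Conv("pw", c, m)]


def block_params(layers: List[Conv]) -> int:
    return sum(layer.params() for layer in layers)


def shortcut_width(layers: List[Conv]) -> int:
    # The input width of a residual block equals the width of its identity shortcut.
    return layers[0].c_in


for name, block in [("sandglass", sandglass(96, 6)), ("inverted residual", inverted_residual(96, 6))]:
    print(name, block_params(block), shortcut_width(block))
# sandglass 4800 96
# inverted residual 3936 16
```

Both blocks have a similar weight budget, but the Sandglass shortcut carries the 96-channel (high-dimensional) tensor rather than the 16-channel bottleneck, which is the property the text relies on.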

The specially tailored structure of the Sandglass Bottleneck allows high-dimensional features information to be transmitted. At the same time, the model requires fewer parameters than the other neural network models of the same type and can achieve better performance at a considerable computational cost. However, it is worth noting that the sign information contained in high-dimensional features and low-dimensional features are complementary to some extent. As shown in

Figure 3b, inspired by some model light-weighting methods, we introduce the Efficient Channel Attention (ECA) module [

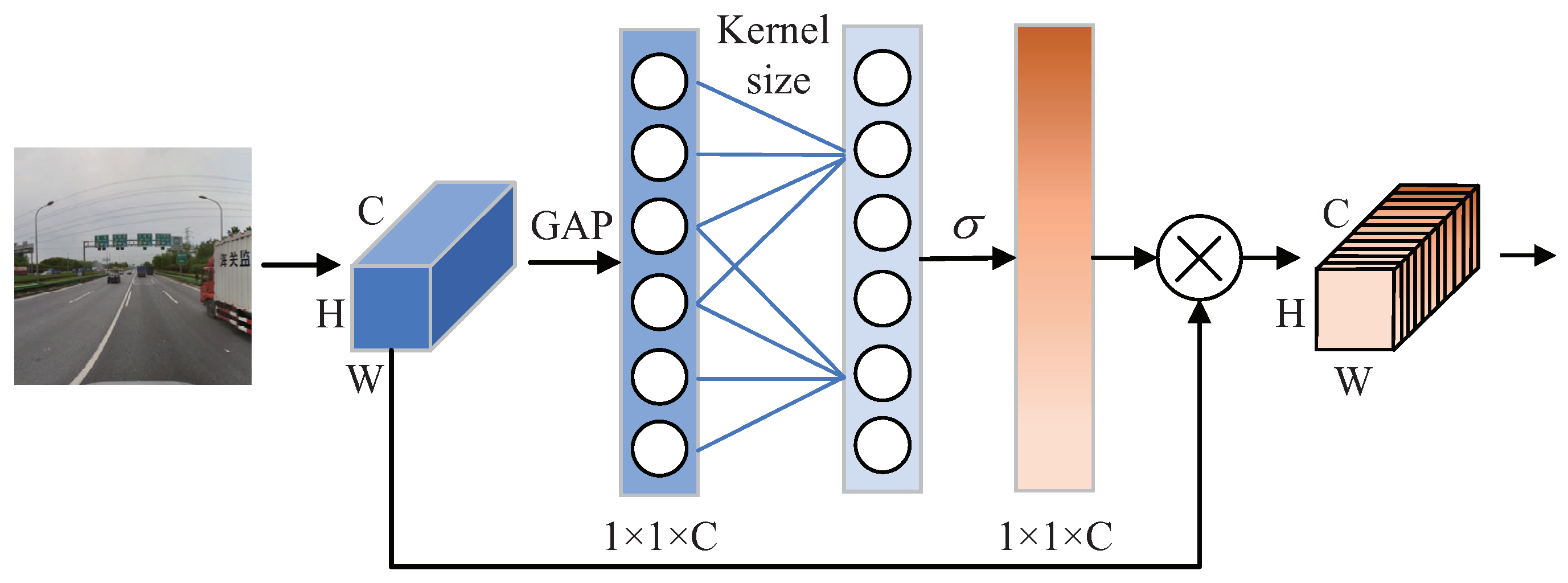

39] in the high-dimensional feature layer to avoid the loss of traffic sign information due to dimensionality reduction in the feature extraction process. The schematic diagram of ECA is shown in

Figure 4. When the feature map of the traffic sign image to be detected is input, it is changed by global average pooling (GAP) to one-dimensional features that retain only channel information. Then, the one-dimensional convolution that replaces the fully connected layer ensures that the information between the channels in each layer interacts. Finally, after sigmoid processing, the feature maps containing more information are calculated by multiplying with the input feature maps.
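The ECA computation described above can be written out on a small nested-list feature map. This is a dependency-free sketch: in a real network the 1D kernel weights are learned, whereas here they are passed in, and the function names are hypothetical. The adaptive kernel-size rule (gamma = 2, b = 1) is the one proposed in the ECA paper [39] as we understand it.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[List[float]]]  # C x H x W


def eca_kernel_size(channels: int, gamma: int = 2, b: int = 1) -> int:
    """Adaptive odd 1D kernel size derived from the channel count."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca(feature: FeatureMap, weights: List[float]) -> Tuple[FeatureMap, List[float]]:
    """Efficient Channel Attention with a given (normally learned) 1D kernel."""
    c_total, k = len(feature), len(weights)
    pad = k // 2
    # 1) Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature]
    # 2) 1D convolution across neighboring channels (zero padding, no bias).
    mixed = []
    for c in range(c_total):
        acc = 0.0
        for j, w in enumerate(weights):
            idx = c + j - pad
            if 0 <= idx < c_total:
                acc += w * pooled[idx]
        mixed.append(acc)
    # 3) Sigmoid turns the mixed descriptor into per-channel weights in (0, 1).
    attn = [1.0 / (1.0 + math.exp(-v)) for v in mixed]
    # 4) Rescale every channel of the input feature map by its weight.
    out = [[[attn[c] * v for v in row] for row in feature[c]] for c in range(c_total)]
    return out, attn


print(eca_kernel_size(64), eca_kernel_size(256))  # 3 5
```

Because the 1D kernel only spans k neighboring channels, the module adds k weights per layer instead of the C^2 / r weights of a fully connected squeeze-and-excitation layer, which is why it suits a lightweight backbone.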

As the core component of the detection network, the backbone extracts information about the targets to be detected and produces feature maps at several downsampling rates, so as to meet the detection needs of targets of different scales and types. In terms of network structure, the Yolov5 model uses the CSPDarknet backbone. Its residual structure contains a large number of convolution kernels, which strengthens the learning ability of the network but also increases its computational cost. In traffic sign detection, this structure generates a large amount of redundant feature information, which consumes additional computation and makes the Yolov5 model run slowly on edge devices with limited computational resources. Therefore, we use the attention-based ESGBlock to build a lightweight convolutional neural network that reconstructs the backbone of the Yolov5 model. This reduces the number of parameters and the computation of the model while keeping the overall network structure unchanged, making the model better suited to traffic sign detection on edge devices.

3.2.2. GSConv Module

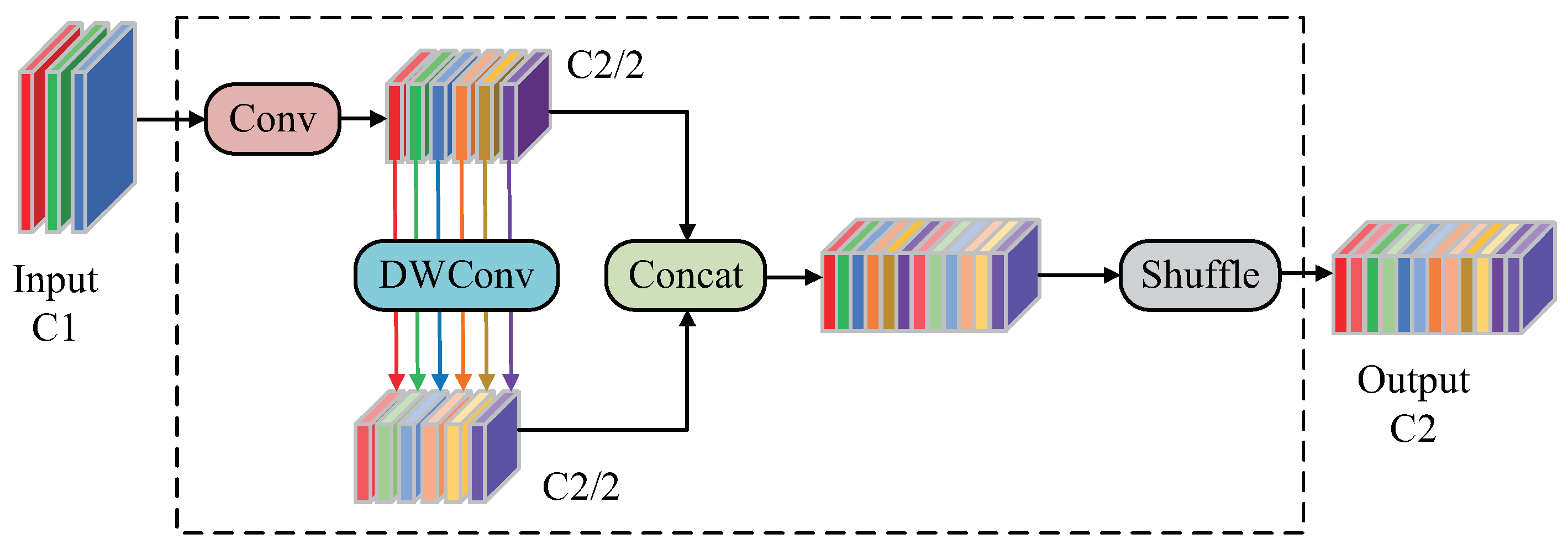

In the feature fusion layer, GSConv [37] replaces the standard convolution structure, which further reduces the computational complexity of the overall detection model. Figure 5 shows the structure of the GSConv module.

As the backbone converts spatial information into channel information, the traffic sign feature maps partially lose semantic information. GSConv preserves the hidden connections between channels as far as possible. Meanwhile, using GSConv in the feature fusion layer avoids deepening the network and maintains the speed at which feature information is propagated, so that detection speed improves effectively while reasonable detection precision is retained.
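The cost saving of GSConv can be illustrated by counting weights. On our reading of [37], GSConv applies a standard convolution that produces half of the output channels, a depthwise convolution on that half, a concatenation, and a channel shuffle. The sketch below is hypothetical: it ignores biases and normalization, assumes a 5 x 5 depthwise kernel, and uses a ShuffleNet-style two-group shuffle, which may differ in detail from the reference implementation.

```python
from typing import List


def conv_params(c_in: int, c_out: int, k: int) -> int:
    return c_in * c_out * k * k  # dense k x k convolution, no bias


def dwconv_params(c: int, k: int) -> int:
    return c * k * k  # depthwise: one k x k filter per channel


def gsconv_params(c_in: int, c_out: int, k: int = 3, dw_k: int = 5) -> int:
    half = c_out // 2
    # Dense conv producing half of the outputs, then a depthwise conv on that half.
    return conv_params(c_in, half, k) + dwconv_params(half, dw_k)


def channel_shuffle(channels: List[str], groups: int = 2) -> List[str]:
    """View the channels as (groups, n // groups), transpose, and flatten."""
    per = len(channels) // groups
    return [channels[g * per + i] for i in range(per) for g in range(groups)]


print(conv_params(128, 256, 3), gsconv_params(128, 256))  # 294912 150656
print(channel_shuffle(["d0", "d1", "w0", "w1"]))  # ['d0', 'w0', 'd1', 'w1']
```

In this example GSConv needs roughly half the weights of the standard convolution, and the shuffle interleaves dense-convolution channels ("d") with depthwise channels ("w") so that information from both branches reaches every subsequent group.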

3.3. Response-Based Objectness Scaled Knowledge Distillation

As the model is made lighter, its detection performance inevitably degrades because the number of parameters shrinks. Knowledge distillation can be used not only for model compression but also for model enhancement; we therefore use response-based knowledge distillation to retrain the lightweight model and compensate for the loss in detection performance.

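The loss function directly determines how well the detection model performs: it guides the optimization direction during training by comparing the model outputs with the target values. For the Yolov5 detection algorithm, the loss function is a weighted sum of the classification loss, the confidence (objectness) loss, and the bounding box loss, which can be expressed as follows:

$$L_{det} = \lambda_{cl}\, f_{cl}\left(p^{gt}, \hat{p}\right) + \lambda_{obj}\, f_{obj}\left(o^{gt}, \hat{o}\right) + \lambda_{bb}\, f_{bb}\left(b^{gt}, \hat{b}\right) \tag{1}$$

where $\hat{p}$, $\hat{o}$, and $\hat{b}$ represent the logit outputs of the category probability, object confidence, and bounding box of the detection model, respectively; the corresponding $p^{gt}$, $o^{gt}$, and $b^{gt}$ indicate the ground truth of the experimental data; $\lambda_{cl}$, $\lambda_{obj}$, and $\lambda_{bb}$ are the weights of the three terms; and $\sigma(\cdot)$ denotes the softmax function.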
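As a worked illustration of the weighted sum in Equation (1), the snippet below combines three per-term loss values. The default gains (classification 0.5, objectness 1.0, box 0.05) are, to our knowledge, those in Yolov5's default training hyperparameter file, but they should be checked against the repository version in use. Yolov5 further rescales these gains (for example by the number of classes and the image size) and balances the objectness term across output scales; those details are omitted, and the function name is hypothetical.

```python
def yolo_total_loss(l_cl: float, l_obj: float, l_bb: float,
                    lam_cl: float = 0.5, lam_obj: float = 1.0, lam_bb: float = 0.05) -> float:
    """Weighted sum of the classification, objectness and box loss terms."""
    return lam_cl * l_cl + lam_obj * l_obj + lam_bb * l_bb


# With every term equal to 1.0, the total is 0.5 + 1.0 + 0.05.
print(round(yolo_total_loss(1.0, 1.0, 1.0), 2))  # 1.55
```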

Among them, the classification loss $f_{cl}$ and the confidence loss $f_{obj}$ are calculated with the binary cross-entropy function:

$$L_{BCE} = -\frac{1}{n}\sum_{i=1}^{n} w_i \left[ y_i \log \delta(x_i) + \left(1 - y_i\right) \log\left(1 - \delta(x_i)\right) \right]$$

where $n$ denotes the number of samples, $w_i$ is the weight adjustment coefficient, $\delta(\cdot)$ represents the sigmoid function, $y_i$ is the data label, and $x_i$ indicates the predicted value. Furthermore, the bounding box loss $f_{bb}$ is calculated with the CIoU method, which handles the case in which the predicted and target boxes do not overlap while preserving convergence speed, making box regression more stable and localization more accurate. It can be expressed as follows:

$$f_{bb} = 1 - IoU + \frac{\rho^{2}\left(b, b^{gt}\right)}{c^{2}} + \alpha v$$

where $\alpha$ is the balance weight coefficient, $v$ measures the consistency of the aspect ratio, $\rho\left(b, b^{gt}\right)$ is the Euclidean distance between the center points of the prediction box $b$ and the target box $b^{gt}$, $c$ denotes the diagonal length of the smallest enclosing rectangle that contains both the prediction box and the target box, and $IoU$ is the intersection over union of the prediction box and the target box.
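Both loss terms can be checked numerically with the dependency-free sketch below. `bce_with_logits` uses the numerically stable form max(x, 0) - x*y + log(1 + exp(-|x|)), which is algebraically equal to -[y log sigmoid(x) + (1 - y) log(1 - sigmoid(x))] for one sample. `ciou_loss` takes boxes as (x_min, y_min, x_max, y_max) and uses the standard CIoU definitions v = (4 / pi^2)(arctan(w_gt / h_gt) - arctan(w / h))^2 and alpha = v / ((1 - IoU) + v). The function names and box format are assumptions for illustration.

```python
import math
from typing import Optional, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def bce_with_logits(logits: Sequence[float], labels: Sequence[float],
                    weights: Optional[Sequence[float]] = None) -> float:
    """Mean weighted binary cross-entropy on raw logits (sigmoid applied internally)."""
    n = len(logits)
    if weights is None:
        weights = [1.0] * n
    total = 0.0
    for x, y, w in zip(logits, labels, weights):
        # Stable form of -[y*log(sigmoid(x)) + (1 - y)*log(1 - sigmoid(x))].
        total += w * (max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x))))
    return total / n


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def ciou_loss(pred: Box, gt: Box, eps: float = 1e-9) -> float:
    i = iou(pred, gt)
    # Squared distance between the two box centers (rho^2).
    rho2 = (((pred[0] + pred[2]) - (gt[0] + gt[2])) ** 2
            + ((pred[1] + pred[3]) - (gt[1] + gt[3])) ** 2) / 4
    # Squared diagonal of the smallest enclosing box (c^2).
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    c2 = cw ** 2 + ch ** 2 + eps
    # Aspect-ratio consistency term v and its trade-off weight alpha.
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    v = (4 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(w / h)) ** 2
    alpha = v / ((1 - i) + v + eps)
    return 1 - i + rho2 / c2 + alpha * v


# Non-overlapping boxes of the same shape: IoU = 0, rho^2 = 4, c^2 = 10, v = 0.
print(round(ciou_loss((0, 0, 1, 1), (2, 0, 3, 1)), 4))  # 1.4
```

The example shows why CIoU still provides a useful gradient when the boxes do not overlap: the IoU term is constant at 1, but the center-distance term keeps decreasing as the prediction moves toward the target.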

Since the teacher network produces dense predictions in its last layer, imitating them directly would lead the student model to learn incorrect bounding boxes. We therefore adopt the objectness scaling strategy [40] to prevent the student network from learning the teacher model's background predictions. In other words, the student model learns the teacher's bounding box regression and category probabilities only when the teacher's confidence value is high; otherwise, the loss is still calculated against the ground truth according to Equation (1). Instead of directly adding the teacher prediction loss to the student loss as in the original method, we propose a weighted sum that balances the share of teacher knowledge in student training and prevents the student model from overfitting. The improved objectness-scaled distillation loss can therefore be written as

$$f_{cl}^{KD} = \left(1 - \beta\right) f_{cl}\left(p^{gt}, \hat{p}\right) + \beta\, \hat{o}^{T} f_{cl}\left(p^{T}, \hat{p}\right)$$

$$f_{obj}^{KD} = \left(1 - \beta\right) f_{obj}\left(o^{gt}, \hat{o}\right) + \beta\, f_{obj}\left(o^{T}, \hat{o}\right)$$

$$f_{bb}^{KD} = \left(1 - \beta\right) f_{bb}\left(b^{gt}, \hat{b}\right) + \beta\, \hat{o}^{T} f_{bb}\left(b^{T}, \hat{b}\right)$$

where $\beta$ is a weighting factor indicating the proportion of the distillation component in the total loss; $p^{T}$, $o^{T}$, and $b^{T}$ denote the logit outputs of the category probability, object confidence, and bounding box of the pre-trained teacher model, respectively; and $\hat{o}^{T}$ is the objectness output of the teacher network, which indicates the probability that each bounding box contains an object. For background boxes, $\hat{o}^{T}$ is small, which effectively prevents the student model from learning the teacher model's background predictions. The teacher-guided terms $f_{cl}\left(p^{T}, \hat{p}\right)$, $f_{obj}\left(o^{T}, \hat{o}\right)$, and $f_{bb}\left(b^{T}, \hat{b}\right)$ measure the similarity between the teacher and student outputs, encouraging the student model to reproduce the output characteristics of the teacher network.
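The objectness scaling can be illustrated per prediction. The sketch below assumes the per-prediction loss values have already been computed and only shows how the teacher objectness gates the classification and box terms, and how a weighting factor `beta` splits ground-truth and teacher supervision. The (1 - beta) / beta split, the averaging over predictions, and all names are assumptions of this illustration rather than details confirmed by the text.

```python
from statistics import fmean
from typing import Dict, List


def objectness_scaled_kd(gt: Dict[str, List[float]], teacher: Dict[str, List[float]],
                         teacher_obj: List[float], beta: float) -> Dict[str, float]:
    """gt / teacher map 'cl', 'obj', 'bb' to per-prediction losses of the student
    against the ground truth / against the teacher; teacher_obj holds the teacher's
    objectness probability for each prediction."""

    def mix(key: str, gate: List[float]) -> float:
        gated = [g * t for g, t in zip(gate, teacher[key])]
        return (1 - beta) * fmean(gt[key]) + beta * fmean(gated)

    ones = [1.0] * len(teacher_obj)
    return {
        "cl": mix("cl", teacher_obj),  # class imitation gated by teacher objectness
        "obj": mix("obj", ones),       # objectness imitates the teacher everywhere
        "bb": mix("bb", teacher_obj),  # box imitation gated by teacher objectness
    }


# Two predictions: the teacher is confident about the first, sees background in the second.
gt = {"cl": [1.0, 1.0], "obj": [0.5, 0.5], "bb": [2.0, 2.0]}
teacher = {"cl": [4.0, 4.0], "obj": [1.0, 1.0], "bb": [3.0, 3.0]}
print(objectness_scaled_kd(gt, teacher, teacher_obj=[1.0, 0.0], beta=0.5))
# {'cl': 1.5, 'obj': 0.75, 'bb': 1.75}
```

The second prediction contributes nothing to the teacher-guided class and box terms, which is exactly the behavior that stops the student from copying the teacher's background predictions.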

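Moreover, we introduce a temperature factor to control the importance of the teacher model's soft targets. With the temperature factor, the teacher-guided classification term is computed on softened class distributions, and the distillation loss is given as follows:

$$f_{cl}^{KD} = \left(1 - \beta\right) f_{cl}\left(p^{gt}, \hat{p}\right) + \beta\, \hat{o}^{T} f_{cl}\left(\sigma\left(p^{T}/T\right), \sigma\left(\hat{p}/T\right)\right)$$

where $T$ is the distillation temperature coefficient and $\sigma(\cdot)$ is the softmax function. A higher temperature softens the probability distribution over the categories, so more of the teacher model's knowledge can be distilled; as $T$ tends to infinity, all categories receive the same probability.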
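The softening effect of the temperature can be seen directly. The sketch below implements a temperature-scaled softmax (with the usual max-subtraction for numerical stability); the function name and the example logits are hypothetical.

```python
import math
from typing import List


def softmax_t(logits: List[float], T: float = 1.0) -> List[float]:
    """Softmax of logits / T; larger T gives a flatter distribution."""
    scaled = [z / T for z in logits]
    m = max(scaled)  # subtract the maximum to avoid overflow in exp
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


teacher_logits = [3.0, 1.0, 0.2]  # hypothetical class logits for one box
for T in (1.0, 4.0, 100.0):
    print(T, [round(p, 3) for p in softmax_t(teacher_logits, T)])
```

At T = 1 most of the mass sits on the top class, while at large T the three probabilities approach 1/3 each, so the student also receives the teacher's relative ranking of the non-target classes.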

Finally, the loss function for distillation training can be summed up as follows:

$$L_{KD} = f_{cl}^{KD} + f_{obj}^{KD} + f_{bb}^{KD} \tag{10}$$

The overall knowledge distillation framework is shown in Figure 6. The values of the weighting factor $\beta$ and the temperature factor $T$ are crucial for the distillation training of the student model, as we demonstrate experimentally in the next section.

Finally, Algorithm 1 summarizes the overall training procedure of the model. The teacher and student model weights are each obtained by training on the augmented dataset, and the distillation model is then obtained by training the student under the supervision of the distillation loss function.

Algorithm 1. The overall algorithm flow

Input: training set, validation set

Output: original model, lightweight model, distillation model

1. Build the traffic sign augmentation dataset from the input source data.
2. Load the pre-trained original model and train it iteratively on the augmented dataset according to Equation (1).
3. After lightweighting, train the lightweight model with the loss of Equation (1) without loading pre-trained weights.
4. Set the original model as the teacher model and the lightweight model as the student model.
5. Assign values to the weighting factor and the temperature factor T.
6. repeat
7. for each training iteration do
8. Randomly sample a mini-batch from the training set.
9. Compute the classification, objectness, and bounding box distillation losses, respectively, according to Equations (7)–(9).
10. Update the student model parameters by Equation (10).
11. end for
12. until convergence on the validation set
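Steps 6 to 12 of Algorithm 1 can be sketched as an outer loop with an explicit stopping rule. The text only says that training repeats until convergence on the validation set; interpreting convergence as "no improvement of a validation score for `patience` consecutive epochs" is our assumption, and `step` and `val_score` are hypothetical callbacks standing in for the distillation update and the validation metric.

```python
import random
from typing import Callable, List, Sequence, Tuple


def run_distillation(train_set: Sequence, val_score: Callable[[], float],
                     step: Callable[[List], None], batch_size: int = 16,
                     iters_per_epoch: int = 100, patience: int = 3,
                     max_epochs: int = 300, seed: int = 0) -> Tuple[float, int]:
    """Repeat epochs of random mini-batch updates until the validation score stalls."""
    rng = random.Random(seed)
    data = list(train_set)
    best, stale, epoch = float("-inf"), 0, 0
    while epoch < max_epochs and stale < patience:              # repeat ... until
        for _ in range(iters_per_epoch):                        # for ... end for
            batch = rng.sample(data, min(batch_size, len(data)))  # random mini-batch
            step(batch)                                         # compute losses, update student
        score = val_score()                                     # check on the validation set
        if score > best:
            best, stale = score, 0
        else:
            stale += 1
        epoch += 1
    return best, epoch


# Dummy callbacks: the validation score stops improving after the second epoch.
scores = iter([0.1, 0.2, 0.2, 0.2, 0.2])
updates = []
print(run_distillation(list(range(50)), lambda: next(scores), updates.append,
                       batch_size=8, iters_per_epoch=2, patience=3))  # (0.2, 5)
```

In a real setup, `step` would run the student and the frozen teacher on the batch, evaluate the distillation loss, and apply one optimizer update, while `val_score` would return a metric such as mAP on the validation set.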