Abstract

Cloud computing gives users on-demand access over the Internet to large pools of configurable computing resources. The services provided by cloud computing environments fall into three basic categories: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). Quality of service (QoS) metrics such as reliability, availability, performance, and cost determine which resources and services are offered in a cloud computing scenario. Performance characteristics specified by the provider and the user, such as rejection rate, throughput, response time, financial cost, and energy consumption, form the basis for QoS. To fulfil the needs of its customers, a cloud provider must deliver its services at the appropriate QoS. A Service Level Agreement (SLA) is a legally enforceable agreement between a service provider and a customer that outlines service objectives, QoS requirements, and any associated financial penalties for falling short. We therefore present “A Proactive Resource Supply based Run-time Monitoring of SLA in Cloud Computing”, which allows for the proactive management of SLAs at run-time via the provisioning of cloud services and resources. Within the proposed framework, SLAs are negotiated between cloud users and providers at run-time using an SLA Manager. Resources are proactively allocated via the Resource Manager to cut down on SLA violations and misdetection costs. As performance metrics, we considered the number of SLA violations and the cost of misdetection. We compared the simulated performance of the proposed PRP-RM-SLA model with that of SCOOTER, a popular existing SLA-based allocation strategy. According to the simulation results, the proposed PRP-RM-SLA model is 25% more effective than SCOOTER at reducing SLA violations and the cost of misdetection.

1. Introduction

The cloud, one of the most popular computing environments, enables businesses to offer their clients computational resources and services [1]. Services offered by cloud computing environments are grouped into three categories: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) [2]. Users of IaaS must build their platforms on the cloud infrastructure, a raw resource used to deliver cloud services [3,4]. Users of PaaS must install their applications on the supplied cloud platforms to access the cloud services [5]. Finally, SaaS offers cloud services in the form of applications based on what the users need. The cloud computing model offers services and resources in a subscription-based setting with a “pay-as-you-use” pricing structure [6,7,8,9].

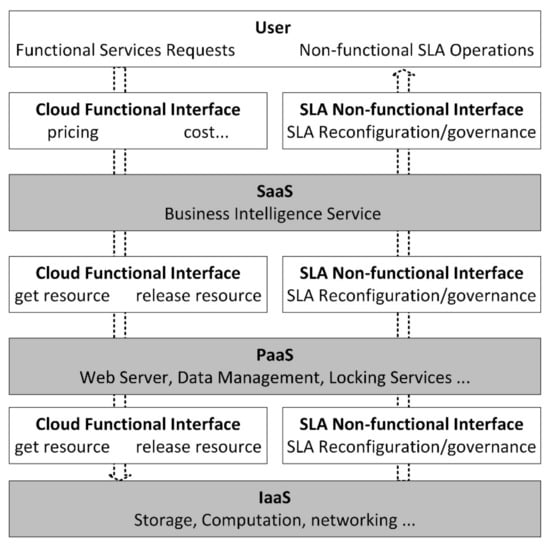

Cloud computing environments base their resources and services on quality of service (QoS) criteria such as availability, dependability, performance, and cost. The capacity to ensure performance depends on characteristics defined by the provider and the users, including rejection rate, response time, energy consumption, throughput, and financial cost [10,11,12]. To fulfil users’ requests, cloud computing services must be provided at the required level of QoS. An SLA is a valid service agreement between a provider and a client that specifies goals, QoS, and the consequences of not meeting those goals [13,14,15]. The overall framework for SLA negotiation for IaaS, PaaS, and SaaS is depicted in Figure 1.

Figure 1. Model for SLA negotiation [10].

An SLA is established between the customer and the service provider to determine QoS and capture particular needs. With the mutual consent of both parties, the cloud customer and the provider sign an agreement based on a set of terms and conditions (such as pricing and violations) [16]. When an SLA is violated, the pricing of services, fines, and commitments are taken into account. If the provider violates the agreement by declining to provide the necessary resources, it is penalized. The same penalty applies if the consumer violates the agreement by using the provided resources excessively. There are also circumstances in which customers use the resources provided by cloud providers to carry out sensitive operations (such as those in the healthcare sector and in scientific applications) [17]. In such cases, the consumer suffers a real loss of money and time when the cloud provider violates the SLA. Similarly, there are circumstances in which cloud providers must keep extra resources available for future use, and users may overuse them, either on purpose or accidentally. When users violate the SLA in this way, the cloud provider suffers significant losses in energy, cost, and time. The cloud user may also incur a monetary loss if the resources are mistakenly overutilized [16,17,18]. To handle all of these issues, the SLA agreed upon by the parties must be managed proactively at run-time.

2. Existing Work

In this section, we review contributions by researchers worldwide on managing SLA violations in cloud computing systems.

A cloud paradigm called SLAaaS (SLA as a Service), which treats QoS requirements and the SLA as the main components of a cloud-based service, was described in [10]. The concept differs from the other cloud-based services offered by SaaS, IaaS, and PaaS platforms. The model has three important parts: (i) providing a unique domain-specific language, (ii) offering SLA-based cloud service management, and (iii) using language and control mechanisms that provide SLA guarantees. All of these are needed to define the SLA-based QoS requirements. The model is a helpful SLA-based service provider model for cloud computing environments, and it might be used to devise new SLA-based resource provisioning strategies.

The authors of [19] described an SLA architecture that uses clustered workloads to self-manage the resources made available by cloud computing. The SCOOTER system, which stands for “Self-management of resources in the cloud for the operation of clustered workloads”, controls and manages the available cloud resources while maintaining the SLA. The SCOOTER architecture takes self-management properties into account and aims for the best attainable QoS values. The framework performs well when dealing with workload clusters and when run-time SLA management and monitoring are not crucial. However, it performs poorly when task-based executions occur in a heterogeneous network and when run-time SLA monitoring and management are crucial for cloud providers and customers.

A resource scheduling system based on particle swarm optimization, known as BULLET, was introduced in [20]. It proposes a resource provisioning and scheduling technique for cloud environments that executes workloads on the available resources more effectively and provides an estimated level of performance. Three key goals served as the foundation for the BULLET method: first, determining the QoS requirements for workloads; second, clustering workloads using workload patterns; and finally, re-clustering workloads using the k-means algorithm after assigning weights to each workload’s quality attributes. In BULLET, the resource provider delivers the resources for clustered jobs while considering the QoS needs. The method, which largely concentrates on clustering the workloads and on QoS needs, does not consider real-time monitoring and management of QoS requirements.

To lessen the effects of SLA violations, the authors in [21] use two user-hidden characteristics: readiness to pay for the provision of service and readiness to pay for assurance. A novel strategy known as the PRA (Proactive Resource Allocation) technique is used to accomplish these objectives, and new auto-learning techniques make it possible to estimate these characteristics. The authors carried out numerical simulations in challenging situations to support their viewpoint. However, the strategy is missing several crucial components, such as a mechanism for handling SLA violations when they occur on either the user or the vendor side.

According to [22,23], a major challenge in cloud computing is deploying allotted cloud-based services so that they guarantee the user’s active QoS requirements and prevent SLA violations. Rapid increases in mobility, variety, and complexity make the cloud system riskier and less reliable. The cloud system requires service self-management to address these problems [24,25,26]. Resource management systems must be implemented so that cloud providers can automatically handle the QoS needs of cloud customers, meet SLAs, and prevent SLA breaches. The authors therefore introduce the STAR approach, one of the “Autonomic Resource Management” SLA-based techniques. The STAR strategy primarily concentrates on lowering the SLA breach rate for optimum delivery of cloud-based assets and services. However, it offers no technique for preventing SLA violations when they are about to occur.

The fourth industrial revolution is driven by cloud computing, which the authors address in [27]. This technology spans from the cloud and the Internet of Things for the telecom industry 4.0 to smart cities. Cloud technology is widely used because it is simple to share and acquire resources using a pay-per-use and elastic provisioning approach. This suggests that cloud-based apps depend on the accuracy of the services offered by cloud platforms and that any potential outage could cause the program provider to suffer a significant loss in both reputation and financial resources. This might also result in legal action being taken against the cloud service provider for breaking the agreed-upon SLA.

The primary goal of the systematic mapping study in [28] is to gain a comprehensive understanding of the most recent thinking on how to manage service-level agreements (SLAs) for cloud services. The authors compiled 328 primary papers on managing SLAs for Internet of Things (IoT) cloud services, which cover a variety of methodologies from different angles. These approaches differ in vocabulary, descriptions, artefacts, and involved activities. Beyond these distinctions, the authors identify techniques that have a lot in common, such as emphasis, objective, and application context. Based on their findings, the authors categorize the research into seven key technical categories: SLA management, SLA definition, SLA modelling, SLA negotiation, SLA monitoring, SLA evolution, and SLA violation and trustworthiness.

According to [29], effective SLA management in the cloud is the key to increasing the level of trust between the provider and the consumer, and the consumer must be equipped with tools for monitoring the SLA. The authors of this study provide a semantic SLA modelling and monitoring approach for cloud computing. Using the advantages of ontological representation, they create an intelligible model for diverse SLA documents and identify QoS violations to assure dependable QoS.

Considering the increased demand for cloud applications, the supply of cloud services increases due to changes in the costs of applications, resources, and configuration as explained in [30]. Numerous elements of cloud computing have received substantial financial investment, including savings in power usage, reconfiguration, and resources. When there is an increase in power demand, cloud service providers incur hefty electricity bills. Consequently, it becomes essential to limit power consumption, which necessitates load balancing.

The authors of [31] have outlined the critical challenges that impede the widespread adoption of cloud computing, particularly in the context of IoT applications. In order to provide QoS for performance-sensitive applications, they have identified problems with the current state of the art and created a system for managing resources across the cloud and the edge. In addition, they have discussed the work that is now being done and what will be done in the future to resolve the issues. The existing and suggested solutions are briefly summarized in Table 1.

Table 1. Comparison table.

Among the fundamental components of the cloud computing environment is the resource provisioning process based on SLAs. During this process, an SLA is established between the cloud user and the provider, after which the cloud provider supplies resources to the user. The literature review covered the existing SLA-based resource provisioning techniques. However, it showed that the existing schemes, such as SCOOTER [19], BULLET [20], PRA [21], and STAR [22], do not satisfactorily explain a mechanism for proactive resource provisioning-based SLA management that reduces the number of SLA violations and the cost of misdetection (the cost incurred when an SLA violation is not detected and handled in time).

The existing solutions have the following flaws.

- There are instances where customers are using the resources provided by cloud providers to carry out a small number of sensitive tasks (such as those related to the healthcare and scientific domains) [9]. The customer will suffer a reasonable loss in terms of money and time in the event that a cloud provider violates an SLA.

- Similar to the previous example, there are some situations where cloud providers are required to make some extra resources available for usage in the future, and users may actively or accidentally overuse the resources. In this instance, users violate the SLA, resulting in considerable losses for the cloud provider in terms of energy use and financial expenditures.

- The cloud user may incur a loss in the form of financial expense if the resources are mistakenly used excessively [7,8,9].

To address the above-mentioned issues, we present “Runtime Management of SLA through Proactive Provisioning of Resources in a Cloud Environment”.

3. System Model

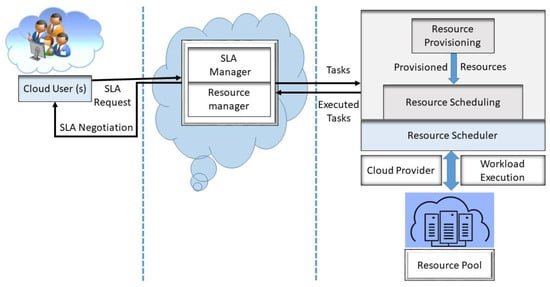

We propose a novel model, Proactive Resource Provisioning-based Runtime Management of SLA in Cloud Computing (PRP-RM-SLA), that proactively combines cloud service provisioning with run-time management of service level agreements (SLAs). Figure 2 illustrates the main idea of the proposed model.

Figure 2. Proposed PRP-RM-SLA model.

The proposed scheme’s primary contributions are SLA management and run-time SLA negotiation between the cloud provider and users through an SLA Manager, together with proactive resource provisioning through a Resource Manager, which is more cost-effective and time-efficient and cuts down on both SLA violations and false positives. The SLA Manager and the Resource Manager are the two primary components of the proposed model. The SLA Manager oversees the overall SLA operations and collaborates with the following subcomponents:

3.1. SLA Negotiator

The SLA Negotiator establishes the SLA between cloud users and providers. During the SLA management process, one or more users send resource requests to the system. The SLA Manager first stores the requests in a repository and obtains resource data from the cloud service provider. It then examines each user request. If the requested resources are less than or equal to the resources that are available, the SLA is positive and an SLA is established between the user and the cloud provider. Otherwise, the user receives an SLA negative message. A positive SLA message indicates that an SLA can be signed between the user and the cloud provider, while a negative SLA message indicates that insufficient resources or unmet user requirements currently prevent the user and the cloud provider from signing an SLA. The user may send another request to restart the procedure once a certain amount of time has passed.

3.2. SLA Monitor and Analyzer

After an SLA has been successfully established between the customer and the cloud provider, the SLA Manager delivers the SLA details to the SLA Monitor and Analyzer component. This component begins monitoring according to the terms and conditions agreed upon by the customer and the cloud provider. It analyzes the monitoring data and compares it with the terms and conditions of the contract. If the analysis shows, according to predetermined threshold values, that the SLA has been violated or is likely to be violated by the cloud provider or the user, an alarm is generated and the SLA Violation Handler component is called.
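The threshold comparison described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the names `SlaStatus` and `analyze`, the single "resource units" measure, and the warning fraction of 0.8 are all assumptions introduced here, since the text does not specify how the predetermined thresholds are expressed.

```python
from enum import Enum


class SlaStatus(Enum):
    OK = "ok"                # usage comfortably within the agreed limit
    AT_RISK = "at_risk"      # violation likely: an alarm should be raised
    VIOLATED = "violated"    # agreed limit already exceeded


def analyze(used_units, agreed_units, warn_fraction=0.8):
    """Compare monitored usage against the agreed SLA limit.

    warn_fraction is a hypothetical threshold: once usage reaches this
    share of the agreed units, a violation is treated as likely.
    """
    if agreed_units <= 0:
        raise ValueError("agreed_units must be positive")
    if used_units > agreed_units:
        return SlaStatus.VIOLATED
    if used_units >= warn_fraction * agreed_units:
        return SlaStatus.AT_RISK
    return SlaStatus.OK


for used in (50, 90, 120):
    print(used, analyze(used, agreed_units=100).value)
```

Both the `AT_RISK` and `VIOLATED` outcomes would hand control to the SLA Violation Handler; only the first leaves room for a proactive response.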

3.3. SLA Violation Handler

Once invoked, the SLA Violation Handler deals with the violation in one of two phases. First, if the threshold indicates that the SLA is about to be violated, an alarm is raised on both the user and the cloud provider sides. Both parties are advised that either the cloud provider should dynamically supply extra resources or the user should complete the task using the resources at hand. Second, if either the user or the cloud provider actually violates the SLA, the party that breached it is subject to the penalty outlined in the SLA’s terms and conditions. After the penalty is imposed, measures are put in place to prevent such violations in the future.

3.4. Resource Manager

The Resource Manager oversees the management of resources. This covers the distribution of resource information and the allocation of resources in accordance with the SLA terms and conditions. When an alarm warns that the SLA is about to be violated, the Resource Manager automatically supplies the additional resources. Algorithm 1 shows the working of the proposed model.
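As a rough illustration of the proactive step, the sketch below tops up an allocation from a reserve pool when an alarm arrives. The class name, the `on_alarm` method, and the idea of a fixed reserve counted in abstract units are assumptions made for this example; the paper does not describe the Resource Manager at this level of detail.

```python
class ResourceManager:
    """Hypothetical manager holding a reserve pool for proactive top-ups."""

    def __init__(self, reserve_units):
        if reserve_units < 0:
            raise ValueError("reserve_units must be non-negative")
        self.reserve_units = reserve_units

    def on_alarm(self, allocated_units, shortfall_units):
        """Grant as much of the shortfall as the reserve allows.

        Returns (new_allocation, granted_units). A grant smaller than the
        shortfall means the user must finish with the resources at hand.
        """
        if shortfall_units < 0:
            raise ValueError("shortfall_units must be non-negative")
        granted = min(shortfall_units, self.reserve_units)
        self.reserve_units -= granted
        return allocated_units + granted, granted


manager = ResourceManager(reserve_units=30)
print(manager.on_alarm(allocated_units=100, shortfall_units=20))
print(manager.on_alarm(allocated_units=50, shortfall_units=25))
print(manager.reserve_units)
```

In this sketch the second alarm is only partly satisfied because the reserve runs out, which corresponds to the case where the user is advised to complete the work with the available resources.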

| Algorithm 1: PRP-RM-SLA Model. |
| --- |
| Input: SLAR = SLA Negotiation Request |
| Output: SLAM = SLA Management |
| 1: procedure PRP-RM-SLA( ) |
| 2: Reqi ← GetRequests() from User(s) ▷ Reqi = Total No. of Requests |
| 3: Resi ← GetResourceInformation() from Resource Information Provider ▷ Resi = Information of all the available Resources |
| 4: for each request (Req1 to Reqn) do |
| 5: if (Reqi <= Availabler) then ▷ Availabler = Available Resources |
| 6: SLA.Positive() |
| 7: else |
| 8: SLA.Negative() |
| 9: end if |
| 10: end for |
| 11: for all (SLA.Positive()) do |
| 12: EstablishSLA() ▷ SLA Establishment |
| 13: if (ThresholdValue(SLA.Positive()) != DefineThresholdValue) then |
| 14: SLAMonitor&Analyzer() |
| 15: if (SLA Violated) then |
| 16: Impose Penalty & Setup Checkpoint |
| 17: else |
| 18: GenerateAlarm() |
| 19: end if |
| 20: else |
| 21: Return SLAManagement() |
| 22: end if |
| 23: end for |
| 24: Return SLAManagement() |
| 25: end procedure |

The input of Algorithm 1 is an SLA negotiation request, and the output is SLA management between the cloud user and the service provider. The algorithm first stores the user requests in a queue. The resource information is obtained from the Resource Information Provider, and each request is checked individually to see whether it can be accommodated by the available resources. If the condition is true, the system reports SLA Positive, indicating that an agreement can be made between the user and the service provider. If the condition is false, the system reports SLA Negative, indicating that no agreement can be made because resources are unavailable. SLAs are then established for the accepted user requests, and a threshold value is defined for each request according to its resource requirements. The SLA Monitor & Analyzer module is invoked to monitor for SLA violations and decide how to respond. If the SLA is violated, a penalty is imposed and a checkpoint is set up to prevent the violation from recurring. If instead resource usage is approaching the threshold and a violation is imminent, an alarm is generated and both the user and the cloud provider receive an alert. Both are advised that either the cloud provider should dynamically supply additional resources or the user should complete the work with the available resources. The procedure ends by returning the results of SLA management for the accepted requests.
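The control flow of Algorithm 1 can be traced with a short Python sketch. It is an interpretation under stated assumptions rather than the authors' code: resources are counted in a single abstract unit, an accepted request is assumed to reserve its units (the algorithm does not say whether the available pool shrinks), and the threshold test of step 13 is modelled as a hypothetical `warn_fraction` of the agreed units. The function name and outcome labels are invented for illustration.

```python
def prp_rm_sla(requests, available, usage, warn_fraction=0.8):
    """Trace Algorithm 1 for one batch of requests.

    requests: dict mapping user -> requested resource units
    available: units reported by the resource information provider
    usage: dict mapping user -> units observed at run-time
    Returns a dict mapping user -> outcome label.
    """
    outcomes = {}
    established = {}

    # Steps 4-10: negotiate each request against the available resources.
    for user, requested in requests.items():
        if requested <= available:
            established[user] = requested  # SLA positive
            # Assumption: an accepted request reserves its units.
            available -= requested
        else:
            outcomes[user] = "sla_negative"

    # Steps 11-23: monitor every established SLA against its threshold.
    for user, agreed in established.items():
        used = usage.get(user, 0)
        if used > agreed:
            outcomes[user] = "penalty_and_checkpoint"  # step 16
        elif used >= warn_fraction * agreed:
            outcomes[user] = "alarm"                   # step 18
        else:
            outcomes[user] = "within_sla"              # step 21
    return outcomes


result = prp_rm_sla(
    requests={"a": 40, "b": 70, "c": 50},
    available=100,
    usage={"a": 10, "c": 48},
)
print(result)
```

Here user `b` is rejected because only 60 units remain after `a` is accepted, `a` stays well within its agreement, and `c` triggers an alarm because its usage is close to the agreed 50 units.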

The proposed PRP-RM-SLA model relies on two mechanisms, as shown in Algorithm 1. The first is the negotiation process, in which the SLA Manager handles run-time SLA management between cloud users and the provider. The second is proactive resource provisioning through the Resource Manager, which helps lower SLA violations and the cost of misdetection.

4. Experimental Results and Discussion

4.1. Simulation Environment

The proposed method is simulated in CloudSim [23], “a toolset for creating and testing virtual environments for cloud computing”, and the relevant performance evaluation parameters [27] are assessed.

4.2. Performance Parameters

We utilize various performance evaluation metrics to evaluate the importance of the proposed model in comparison to previous work.

4.2.1. Cost of Misdetection

The cost of misdetection is the total cost, or penalty, incurred for every false positive that results in an SLA violation [28,29,30]. It is also known as the penalty levied, and it is computed using Equation (1) whether the penalty falls on the cloud provider or on the users.

$$C_{M} = PV \times NVS \quad (1)$$

where $C_{M}$ is the cost of misdetection (penalties), $PV$ is the penalty per violation, and $NVS$ is the number of violations.
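A quick numerical check helps make Equation (1) concrete. The sketch below assumes, as the definitions above suggest, that the cost of misdetection is the penalty per violation multiplied by the number of violations. It uses the violation counts and costs reported for the proposed model in Section 4.4; the penalty of $5 per violation is inferred from those figures and is not stated in the text. The function and variable names are illustrative.

```python
def cost_of_misdetection(penalty_per_violation, violations):
    """Equation (1) as read here: penalty per violation times violation count."""
    if penalty_per_violation < 0 or violations < 0:
        raise ValueError("inputs must be non-negative")
    return penalty_per_violation * violations


# Figures reported in Section 4.4 for the proposed model (jobs -> value).
reported_violations = {500: 28, 1000: 41, 1500: 63, 2000: 76}
reported_cost_usd = {500: 140.0, 1000: 205.0, 1500: 315.0, 2000: 380.0}

# Inferred, not stated in the text: the penalty that fits the 500-job row.
inferred_pv = reported_cost_usd[500] / reported_violations[500]

recomputed = {
    jobs: cost_of_misdetection(inferred_pv, count)
    for jobs, count in reported_violations.items()
}
print(inferred_pv, recomputed == reported_cost_usd)
```

Under this reading, a single penalty value reproduces all four reported costs, which suggests the two metrics in the evaluation are proportional to each other.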

4.2.2. Number of SLA Violations

The number of SLA violations is the total count of SLA violations, whether committed by the user or by the provider [28,29,30]. The provider violates the SLA when it refuses to supply the user with the necessary services. The user violates the SLA by consuming the agreed resources excessively or by failing to release them in a timely manner. Equation (2) is used in the calculation.

In Equation (2), the terms stand for the overall number of SLA violations, the resources that are currently available, the resources that have been requested, and the resources that have been allotted.

4.3. Experimental Setup

Below is a detailed explanation of the experimental setup:

4.3.1. Resource Modeling

The most pertinent simulation toolkit, CloudSim, is used for our evaluation environment [14,15]. To implement the proposed work, we modified CloudSim to account for SLA violations [19,31]. The VM specifications and their possible settings are shown in Table 2.

Table 2. The resource experimental specification.

4.3.2. Application Modelling

In our experiment, we consider a single user who independently submitted 500, 1000, 1500, and 2000 jobs [32,33,34,35]. We assume that the amount of memory needed for each job and each VM is equal. The precise number of jobs with the necessary setups is shown in Table 3.

Table 3. The application experimental specification.

4.4. Results and Discussion

We simulated 500 virtual machines (VMs) on 100 hosts with several job arrivals. The workload was then varied across 500, 1000, 1500, and 2000 million instructions (MIPS). The objective of this scenario is to determine the cost of misdetection and the number of SLA violations for the different workloads while keeping the number of hosts and VMs constant. The simulation environment was run for 24 h. We compared the results of our proposed PRP-RM-SLA model with those of the baseline technique SCOOTER [19], using numerical values and graphical representations of the specified performance metrics.

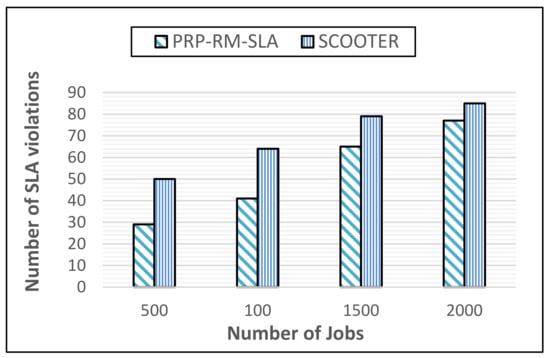

4.4.1. Number of SLA Violations

The proposed PRP-RM-SLA model results in an average of 28 SLA violations for 500 jobs, 41 for 1000 jobs, 63 for 1500 jobs, and 76 for 2000 jobs. The existing work SCOOTER [19] results in 51 SLA violations for 500 jobs, 65 for 1000 jobs, 78 for 1500 jobs, and 85 for 2000 jobs. Figure 3 compares the SLA violation results of the proposed PRP-RM-SLA model with those of SCOOTER [19], revealing that in every instance the proposed model resulted in fewer SLA violations. The proposed PRP-RM-SLA model produces fewer violations because it employs SLA violation detection and management: when a system is about to breach the SLA, the proposed technique dynamically allocates substitute resources from the pool of available resources to prevent the breach.
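The relative improvement implied by these counts can be worked out directly. The following sketch computes the percentage reduction in SLA violations for each workload and over all four workloads combined, using only the numbers quoted above; the helper name `reduction_pct` and the choice to aggregate by summing the counts are assumptions made for this illustration.

```python
# SLA violation counts quoted in the text (jobs -> violations).
prp_rm_sla = {500: 28, 1000: 41, 1500: 63, 2000: 76}
scooter = {500: 51, 1000: 65, 1500: 78, 2000: 85}


def reduction_pct(baseline, proposed):
    """Percentage by which `proposed` is lower than `baseline`."""
    return 100.0 * (baseline - proposed) / baseline


per_workload = {
    jobs: round(reduction_pct(scooter[jobs], prp_rm_sla[jobs]), 1)
    for jobs in prp_rm_sla
}
overall = round(
    reduction_pct(sum(scooter.values()), sum(prp_rm_sla.values())), 1
)

print(per_workload)  # {500: 45.1, 1000: 36.9, 1500: 19.2, 2000: 10.6}
print(overall)       # 25.4
```

Summed over the four workloads, the reduction is about 25.4%, which appears consistent with the 25% improvement reported for the model, while the per-workload figures show the gap narrowing as the number of jobs grows.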

Figure 3. Comparison of results in respect of number of SLA violations.

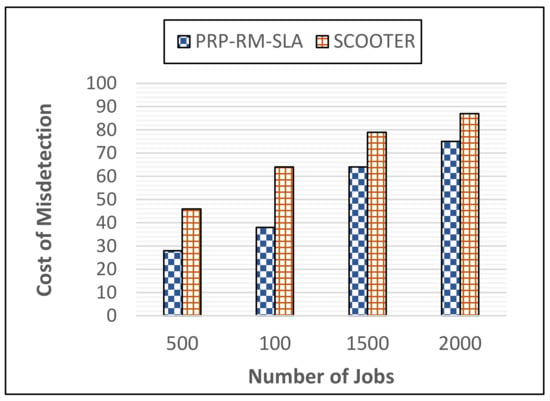

4.4.2. Cost of Misdetection

The proposed PRP-RM-SLA model’s average cost of misdetection is $140 for 500 jobs, $205 for 1000 jobs, $315 for 1500 jobs, and $380 for 2000 jobs. For the existing work SCOOTER [19], the cost of misdetection is $255 for 500 jobs, $325 for 1000 jobs, $390 for 1500 jobs, and $425 for 2000 jobs. Figure 4 compares the cost of misdetection of the proposed PRP-RM-SLA model with that of SCOOTER [19].

Figure 4. Comparison of results in respect of misdetection costs.

Figure 4 demonstrates that the proposed PRP-RM-SLA model has a lower cost of misdetection for every workload considered. The cost is lower than that of the existing work SCOOTER [19] because impending violations are detected and remedied by assigning reserved resources before the SLA is actually violated.

Increasing the number of jobs shows that beyond a certain point, between 1500 and 2000 jobs, the comparison between the two models becomes symmetric, so there is no need to raise the number of jobs further. The creators of the existing SCOOTER model conducted studies with workloads of 1000, 2000, 3000, 4000, and 5000 jobs; however, they do not report outcomes for smaller workloads, such as 500 jobs. We therefore started at a small scale with 500 jobs. Because the simulated comparison is already symmetric at 1500 to 2000 jobs, we believed the results would be similar for higher workloads and chose not to replicate the 3000, 4000, and 5000 workloads.

To summarize, the proposed research work, a Proactive Resource Provisioning-based Runtime Management of SLA in Cloud Computing, provides a mechanism for managing SLA at run-time. The suggested research activity also includes proactive cloud service and resource provisioning. The SLA is negotiated between cloud users and providers via SLA Manager, which includes run-time SLA management. Resource Manager is used to provision resources proactively to reduce SLA violations and the cost of misdetection. The performance evaluation metrics are the number of SLA violations and the cost of misdetection. The proposed PRP-RM-SLA model’s output is compared to the well-known SLA-based resource allocation method SCOOTER [19]. The simulation was carried out in the CloudSim simulation environment, and the findings demonstrate that the proposed PRP-RM-SLA model is 25% more effective than the present work SCOOTER at decreasing SLA breaches and the cost of misdetection. The proposed technique produced superior outcomes because it controlled the SLA between cloud provider and the user at run time. With dynamic provisioning of cloud resources, the suggested technique detects and handles SLA violations.

5. Conclusions and Future Work

We proposed a novel proactive approach to run-time SLA management based on resource provisioning in cloud settings. We discussed run-time SLA management, which includes proactive provisioning of cloud services and resources. Such management is needed given the meteoric rise of cloud computing as a means for companies to acquire IT infrastructure as a service. The main services that cloud computing platforms offer are IaaS, PaaS, and SaaS. Quality of service (QoS) metrics such as availability, reliability, performance, and cost affect the resources and services offered by cloud computing environments. QoS is defined by the performance standards that a service provider and its customers establish together, such as response time, rejection rate, throughput, energy use, and cost. To meet their customers’ expectations, cloud service providers must deliver the level of service their clients need. A Service Level Agreement (SLA) is a legally binding contract between a service provider and its customers that specifies the parameters by which the service quality will be measured. An SLA Manager is used to negotiate SLAs between cloud users and providers at run-time. The resources are provisioned proactively through the Resource Manager to cut down on SLA violations and misdetection costs.

The proposed model offers a method for managing the SLA at run-time. The proactive provisioning of cloud resources and services is another feature of the proposed work. The SLA is negotiated between cloud users and the provider through the SLA Manager, which includes run-time SLA management. To lower SLA violations and misdetection costs, the resources are provisioned proactively through the Resource Manager. The number of SLA violations and the cost of misdetection were used as performance metrics.

We compared the results of the proposed PRP-RM-SLA model with those of SCOOTER, a popular existing SLA-based resource allocation strategy. According to the simulation results, the proposed PRP-RM-SLA model is 25% more effective than SCOOTER at reducing SLA violations and the cost of misdetection. Because the proposed approach uses dynamic provisioning of cloud resources to detect and respond to SLA violations, it also serves as an SLA manager between the user and the cloud provider. In future work, we hope to integrate the run-time management of the SLA with an energy-efficient resource provisioning model.

Author Contributions

S.N., S.K.u.Z., A.D.A. and N.u.A. conceived and designed the experiments; A.K., J.I., M.A.K. and Z.A. performed the simulations; M.A.K., S.N., N.u.A. and J.I. analyzed the results; Z.A., S.K.u.Z., H.E. and S.N. wrote the paper, H.E., A.D.A., N.u.A. and Z.A. technically reviewed the paper. All authors have read and agreed to the published version of the manuscript.

Funding

Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R51), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No data were used to support this study. We conducted simulations to evaluate the performance of the proposed protocol. Queries about the research conducted in this paper are welcome and can be directed to the author (Muhammad Amir Khan).

Acknowledgments

The authors sincerely appreciate the support from Princess Nourah bint Abdulrahman University Researchers Supporting Project number (PNURSP2023R51), Princess Nourah bint Abdulrahman University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wu, C.; Buyya, R.; Ramamohanarao, K. Cloud Pricing Models. ACM Comput. Surv. 2020, 52, 1–36.

- Mansouri, Y.; Toosi, A.N.; Buyya, R. Data Storage Management in Cloud Environments. ACM Comput. Surv. 2018, 50, 1–51.

- Luong, N.C.; Wang, P.; Niyato, D.; Wen, Y.; Han, Z. Resource Management in Cloud Networking Using Economic Analysis and Pricing Models: A Survey. IEEE Commun. Surv. Tutor. 2017, 19, 954–1001.

- Rodriguez, M.A.; Buyya, R. Budget-driven scheduling of scientific workflows in IaaS clouds with fine-grained billing periods. ACM Trans. Auton. Adapt. Syst. 2017, 12, 1–22.

- Pahl, C. Containerization and the PaaS Cloud. IEEE Cloud Comput. 2015, 2, 24–31.

- Palos-Sanchez, P.R.; Arenas-Marquez, F.J.; Aguayo-Camacho, M. Cloud Computing (SaaS) Adoption as a Strategic Technology: Results of an Empirical Study. Mob. Inf. Syst. 2017, 2017, 2536040.

- Amato, F.; Moscato, F. Exploiting Cloud and Workflow Patterns for the Analysis of Composite Cloud Services. Future Gener. Comput. Syst. 2017, 67, 255–265.

- Zhou, N.; Lin, W.; Feng, W.; Shi, F.; Pang, X. Budget-deadline constrained approach for scientific workflows scheduling in a cloud environment. Clust. Comput. 2020, 1, 1–15.

- Dimitri, N. Pricing cloud IaaS computing services. J. Cloud Comput. 2020, 9, 1–11.

- Serrano, D.; Bouchenak, S.; Kouki, Y.; de Oliveira, F.A., Jr.; Ledoux, T.; Lejeune, J.; Sopena, J.; Arantes, L.; Sens, P. SLA guarantees for cloud services. Future Gener. Comput. Syst. 2016, 54, 233–246.

- Shivani; Singh, A. Taxonomy of SLA violation minimization techniques in cloud computing. In Proceedings of the 2018 Second International Conference on Inventive Communication and Computational Technologies (ICICCT), Coimbatore, India, 20–21 April 2018; pp. 1845–1850.

- Kaur, S.; Bagga, P.; Hans, R.; Kaur, H. Quality of Service (QoS) Aware Workflow Scheduling (WFS) in Cloud Computing: A Systematic Review. Arab. J. Sci. Eng. 2019, 44, 2867–2897.

- Zeng, X.; Garg, S.; Barika, M.; Zomaya, A.Y.; Wang, L.; Villari, M.; Chen, D.; Ranjan, R. SLA Management for Big Data Analytical Applications in Clouds. ACM Comput. Surv. 2021, 53, 1–40.

- Gupta, A.; Bhadauria, H.S.; Singh, A. SLA-aware load balancing using risk management framework in cloud. J. Ambient Intell. Humaniz. Comput. 2021, 12, 7559–7568.

- Viegas, E.; Santin, A.; Bachtold, J.; Segalin, D.; Stihler, M.; Marcon, A.; Maziero, C. Enhancing service maintainability by monitoring and auditing SLA in cloud computing. Clust. Comput. 2021, 24, 1659–1674.

- García, A.G.; Espert, I.B.; García, V.H. SLA-driven dynamic cloud resource management. Future Gener. Comput. Syst. 2014, 31, 1–11.

- Maurer, M.; Emeakaroha, V.C.; Brandic, I.; Altmann, J. Cost–benefit analysis of an SLA mapping approach for defining standardized Cloud computing goods. Future Gener. Comput. Syst. 2012, 28, 39–47.

- Pop, F.; Iosup, A.; Prodan, R. HPS-HDS: High Performance Scheduling for Heterogeneous Distributed Systems. Future Gener. Comput. Syst. 2018, 78, 242–244.

- Gill, S.S.; Buyya, R. Resource Provisioning Based Scheduling Framework for Execution of Heterogeneous and Clustered Workloads in Clouds: From Fundamental to Autonomic Offering. J. Grid Comput. 2019, 17, 385–417.

- Gill, S.S.; Buyya, R.; Chana, I.; Singh, M.; Abraham, A. BULLET: Particle Swarm Optimization Based Scheduling Technique for Provisioned Cloud Resources. J. Netw. Syst. Manag. 2018, 26, 361–400.

- Morshedlou, H.; Meybodi, M.R. Decreasing Impact of SLA Violations: A Proactive Resource Allocation Approach for Cloud Computing Environments. IEEE Trans. Cloud Comput. 2014, 2, 156–167.

- Singh, S.; Chana, I.; Buyya, R. STAR: SLA-aware Autonomic Management of Cloud Resources. IEEE Trans. Cloud Comput. 2020, 8, 1040–1053.

- Calheiros, R.N.; Ranjan, R.; Beloglazov, A.; de Rose, C.A.F.; Buyya, R. CloudSim: A toolkit for modeling and simulation of cloud computing environments and evaluation of resource provisioning algorithms. Softw. Pract. Exp. 2011, 41, 23–50.

- Buyya, R.; Ranjan, R.; Calheiros, R.N. Modeling and simulation of scalable cloud computing environments and the cloudsim toolkit: Challenges and opportunities. In Proceedings of the 2009 International Conference on High Performance Computing and Simulation, HPCS, Leipzig, Germany, 21–24 June 2009; pp. 1–11.

- Xiao, P.; Liu, D. A Novel Cloud Monitoring Framework with Enhanced QoS Supporting. Int. J. e-Collab. 2019, 15, 31–45.

- De Carvalho, C.A.B.; de Castro Andrade, R.M.; de Castro, M.F.; Coutinho, E.F.; Agoulmine, N. State of the art and challenges of security SLA for cloud computing. Comput. Electr. Eng. 2017, 59, 141–152.

- Cinque, M.; Russo, S.; Esposito, C.; Choo, K.-K.R.; Free-Nelson, F.; Kamhoua, C.A. Cloud Reliability: Possible Sources of Security and Legal Issues. IEEE Cloud Comput. 2018, 5, 31–38.

- Mubeen, S.; Asadollah, S.A.; Papadopoulos, A.V.; Ashjaei, M.; Pei-Breivold, H.; Behnam, M. Management of Service Level Agreements for Cloud Services in IoT: A Systematic Mapping Study. IEEE Access 2017, 6, 30184–30207.

- Labidi, T.; Mtibaa, A.; Gaaloul, W.; Tata, S.; Gargouri, F. Cloud SLA Modeling and Monitoring. In Proceedings of the 2017 IEEE International Conference on Services Computing (SCC), Honolulu, HI, USA, 25–30 June 2017; pp. 338–345.

- Vanitha, M.; Marikkannu, P. Effective resource utilization in cloud environment through a dynamic well-organized load balancing algorithm for virtual machines. Comput. Electr. Eng. 2017, 57, 199–208.

- Shekhar, S.; Gokhale, A. Dynamic Resource Management Across Cloud-Edge Resources for Performance-Sensitive Applications. In Proceedings of the 17th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGRID), Madrid, Spain, 14–17 May 2017; pp. 707–710.

- Safi, A.; Ahmad, Z.; Jehangiri, A.I.; Latip, R.; Zaman, S.K.U.; Khan, M.A.; Ghoniem, R.M. A Fault Tolerant Surveillance System for Fire Detection and Prevention Using LoRaWAN in Smart Buildings. Sensors 2022, 22, 8411.

- Jehangiri, A.I.; Maqsood, T.; Umar, A.I.; Shuja, J.; Ahmad, Z.; Dhaou, I.B.; Alsharekh, M.F. LiMPO: Lightweight mobility prediction and offloading framework using machine learning for mobile edge computing. Clust. Comput. 2022, 1–19.

- Ahmad, Z.; Jehangiri, A.I.; Ala’anzy, M.A.; Othman, M.; Latip, R.; Zaman, S.K.U.; Umar, A.I. Scientific workflows management and scheduling in cloud computing: Taxonomy, prospects, and challenges. IEEE Access 2021, 9, 53491–53508.

- Jehangiri, A.I.; Maqsood, T.; Ahmad, Z.; Umar, A.I.; Shuja, J.; Alanazi, E.; Alasmary, W. Mobility-aware computational offloading in mobile edge networks: A survey. Clust. Comput. 2021, 24, 2735–2756.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).