ACPA-Net: Atrous Channel Pyramid Attention Network for Segmentation of Leakage in Rail Tunnel Linings

Abstract

1. Introduction

- We propose the ACPA module, a lightweight channel attention module that captures longer-distance interactions between channels. The ablation study shows that it helps ACPA-Net learn stronger semantic feature representations.

- We propose ACPA-Net for segmenting leakage regions in rail tunnel images. ACPA-Net uses a U-shaped network as its basic structure and integrates the proposed ACPA module.

- We apply the combined model, trained with a deep supervision strategy, to the Crack500 [21] dataset and to our tunnel leakage dataset. It achieves the highest F1-score and IoU among the compared methods on both datasets, which demonstrates its effectiveness for rail tunnel leakage segmentation.
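
To make the idea of "longer-distance channel interaction" concrete, the following is a minimal sketch, not the authors' implementation. It assumes an ECA-style [36] design: global average pooling produces one descriptor per channel, several 1D convolutions with different dilation (atrous) rates slide over that channel vector, their outputs are summed, and a sigmoid turns the result into per-channel weights. The 3-tap kernels, the dilation rates (1, 2, 4, 8), the summation, and all function names are assumptions made for illustration.

```python
import math


def global_avg_pool(feature_map):
    """Squeeze a C x H x W nested list into C per-channel means."""
    return [
        sum(sum(row) for row in channel) / (len(channel) * len(channel[0]))
        for channel in feature_map
    ]


def dilated_conv1d(signal, kernel, dilation):
    """Zero-padded, same-length 1D cross-correlation with a dilation rate."""
    half = len(kernel) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j, weight in enumerate(kernel):
            idx = i + (j - half) * dilation  # taps sit `dilation` channels apart
            if 0 <= idx < len(signal):
                acc += weight * signal[idx]
        out.append(acc)
    return out


def atrous_channel_attention(feature_map, kernels, dilations=(1, 2, 4, 8)):
    """Assumed pyramid: one dilated branch per (kernel, dilation) pair, fused by summation."""
    descriptor = global_avg_pool(feature_map)
    fused = [0.0] * len(descriptor)
    for kernel, dilation in zip(kernels, dilations):
        branch = dilated_conv1d(descriptor, kernel, dilation)
        fused = [f + b for f, b in zip(fused, branch)]
    # Sigmoid gate: one weight in (0, 1) per channel.
    weights = [1.0 / (1.0 + math.exp(-v)) for v in fused]
    # Rescale every channel of the input by its weight.
    rescaled = [
        [[w * value for value in row] for row in channel]
        for channel, w in zip(feature_map, weights)
    ]
    return rescaled, weights


# Hypothetical usage: 16 channels of 2 x 2 features, four 3-tap branches.
features = [[[float(c), float(c)], [float(c), float(c)]] for c in range(16)]
kernels = [[0.1, 0.2, 0.1]] * 4
rescaled, weights = atrous_channel_attention(features, kernels)
```

In this sketch a 3-tap branch with dilation 8 mixes channels up to eight positions apart, whereas an undilated 3-tap kernel only reaches adjacent channels, and each branch still adds only three weights.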

2. Related Work

3. Method

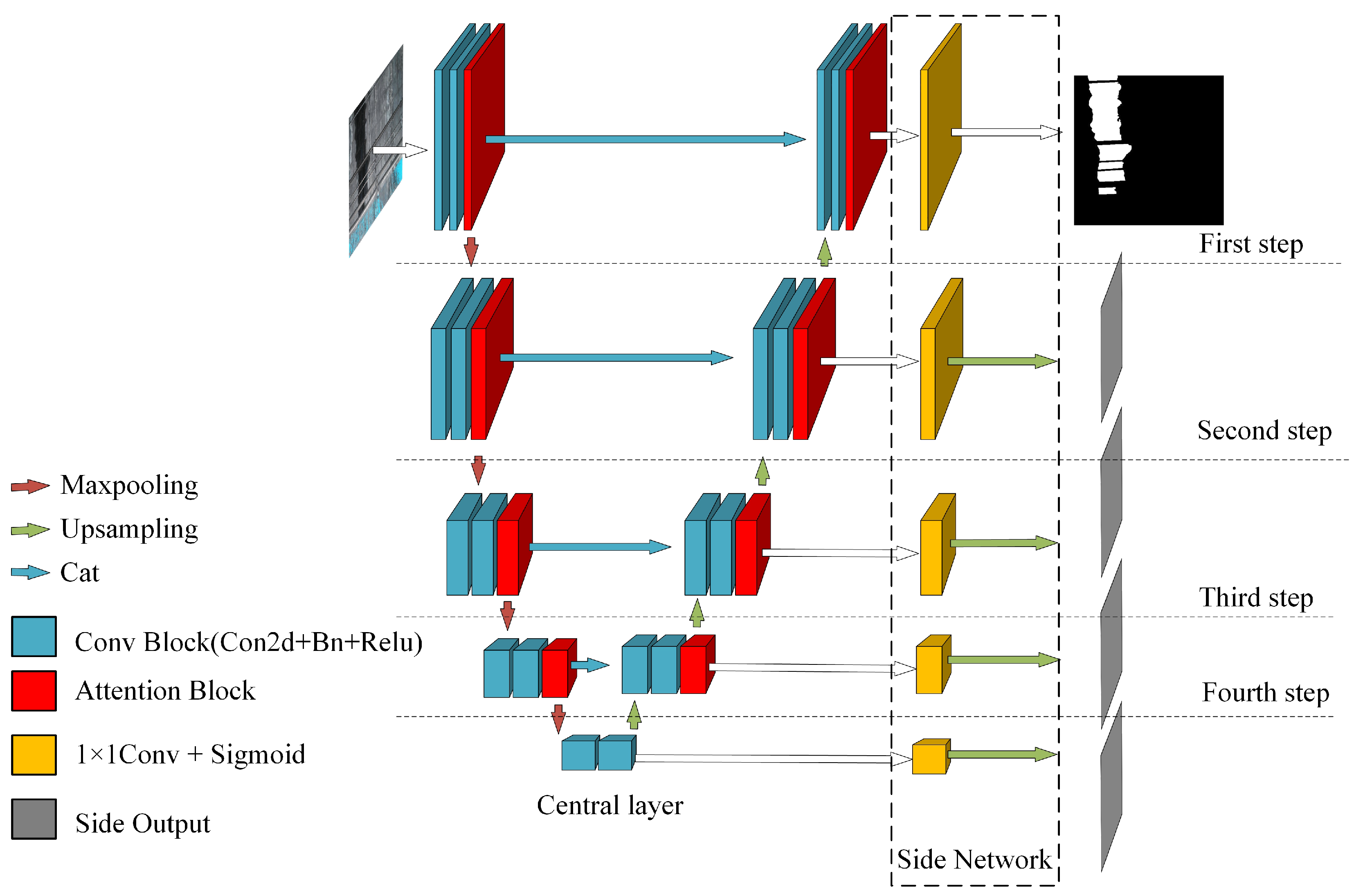

3.1. Network Architecture

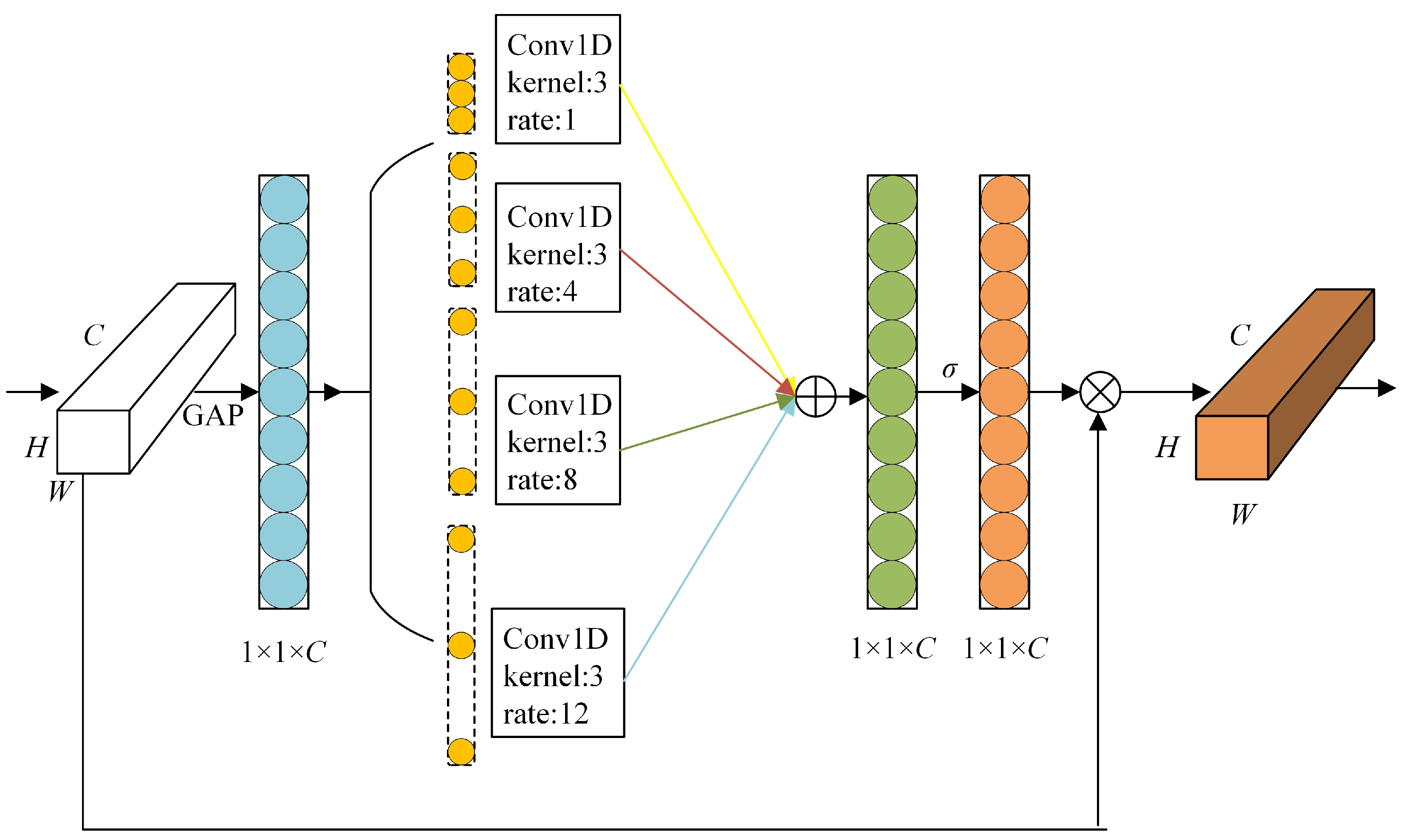

3.2. Atrous Channel Pyramid Attention

3.3. Deep Supervision

4. Experiment

4.1. Implementation Details

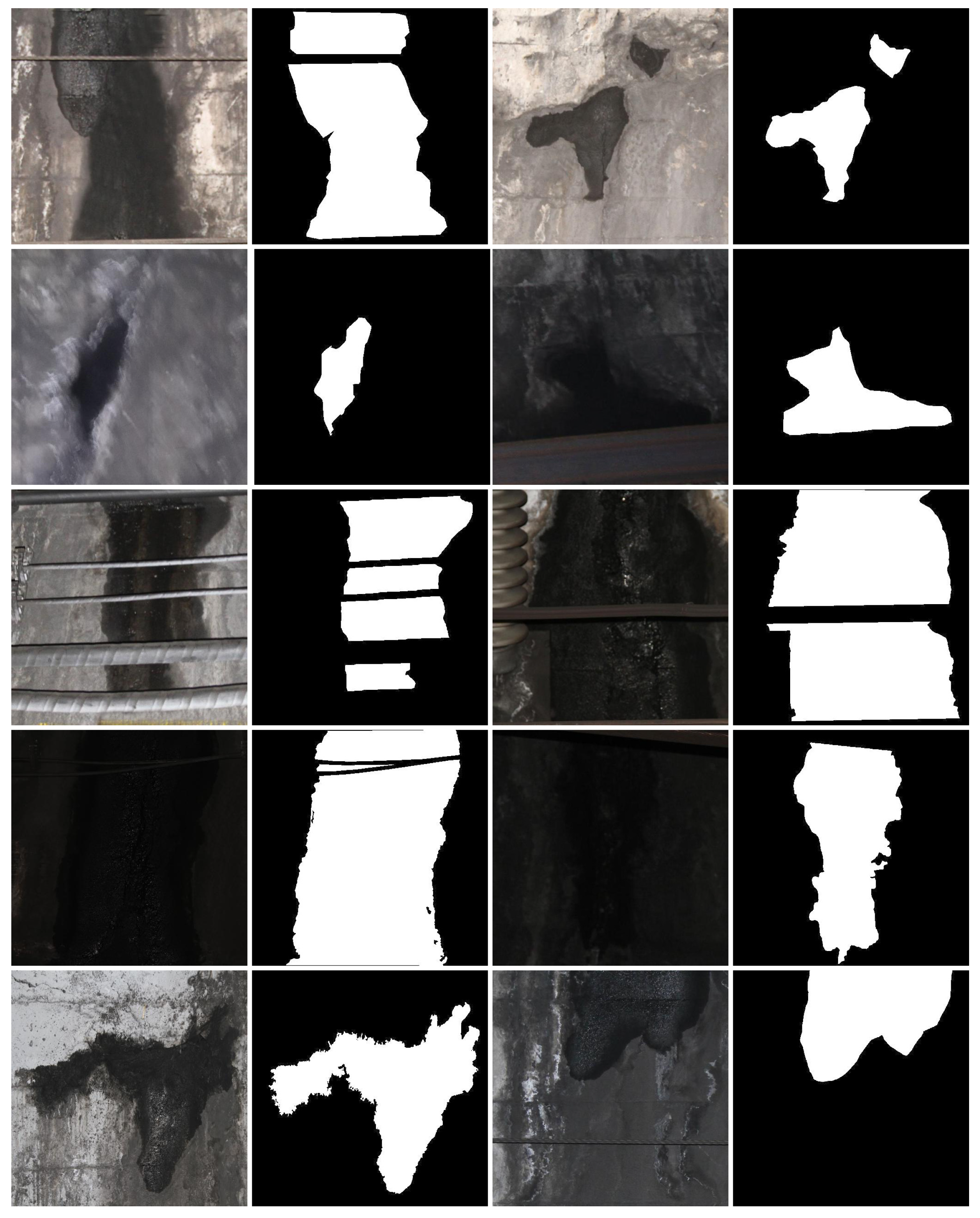

4.2. Datasets

4.3. Evaluation Metrics

4.4. Ablation Studies

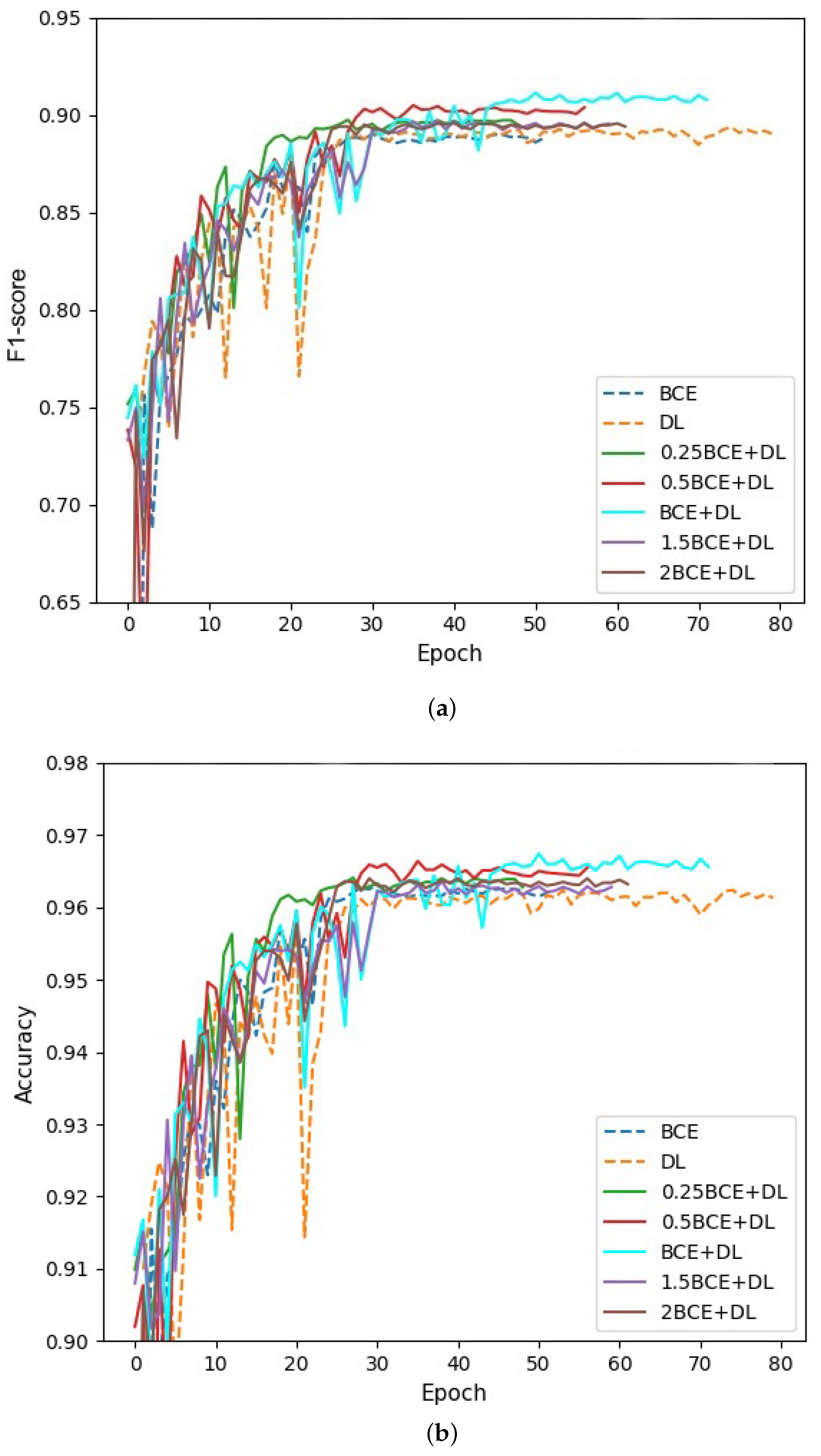

4.4.1. The Effect of Hybrid Loss

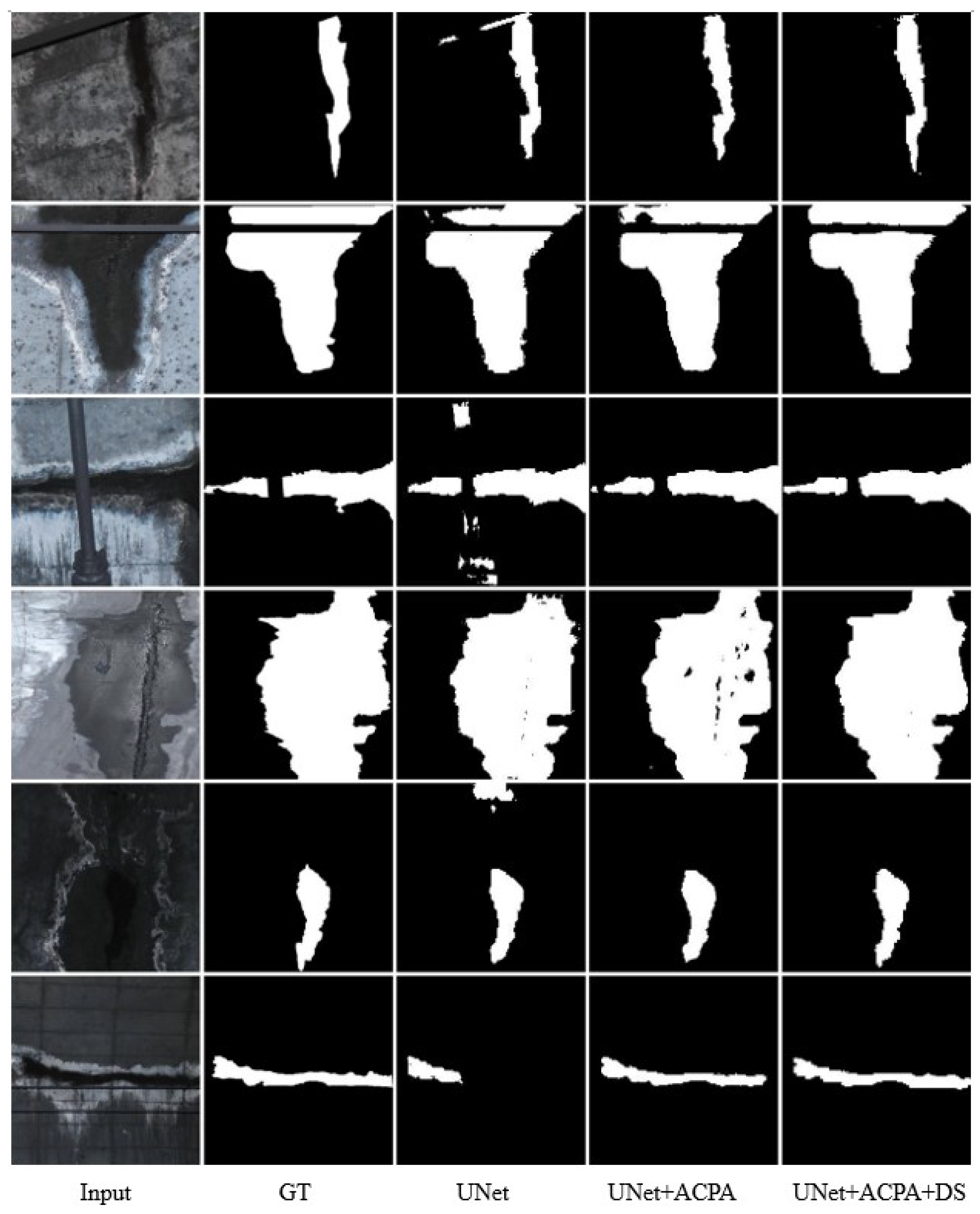

4.4.2. The Effect of the ACPA Module and Deep Supervision

4.4.3. Comparison with Other Attention Modules

4.5. Comparisons with Existing Methods

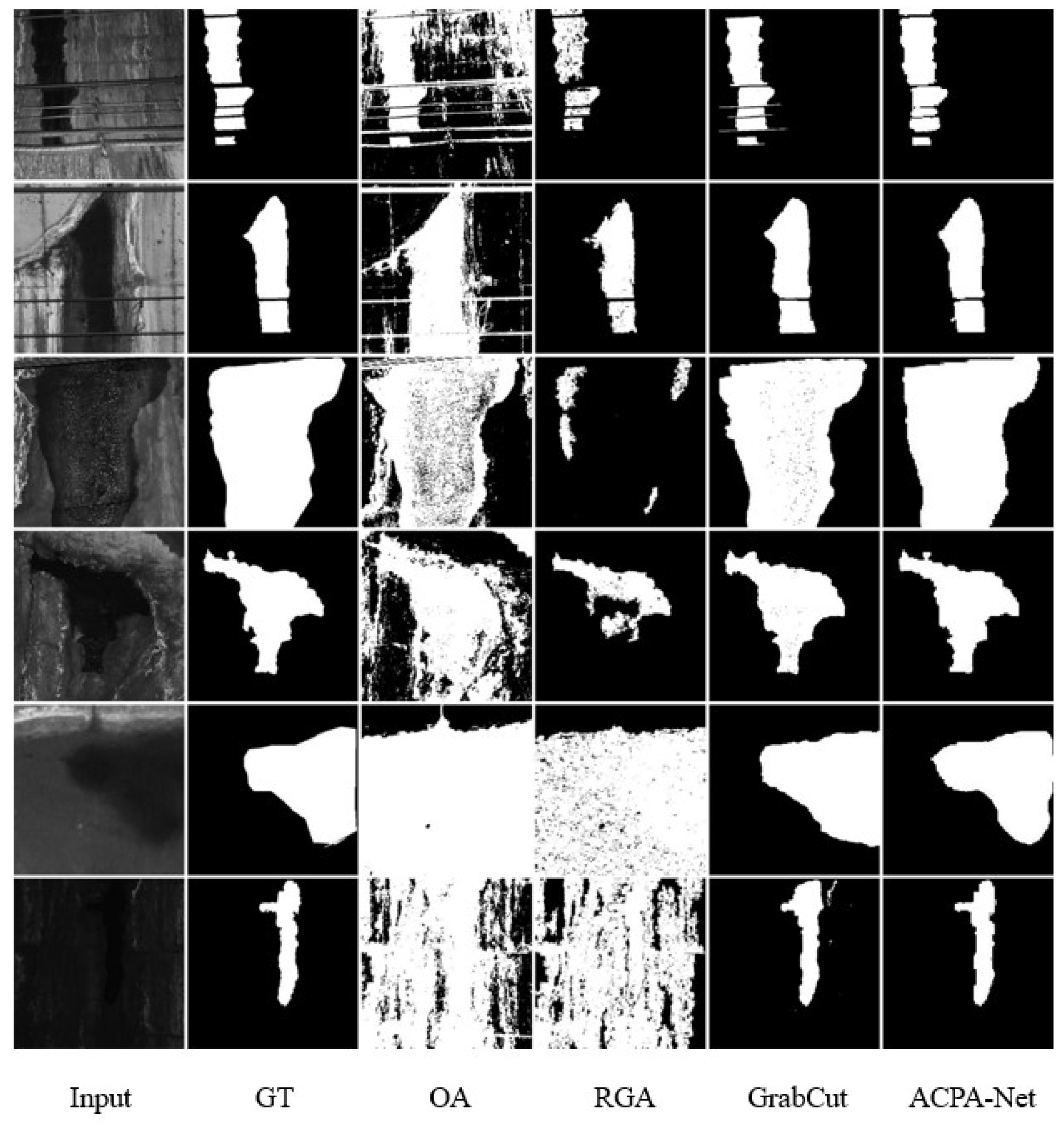

4.5.1. Comparisons with Traditional Methods

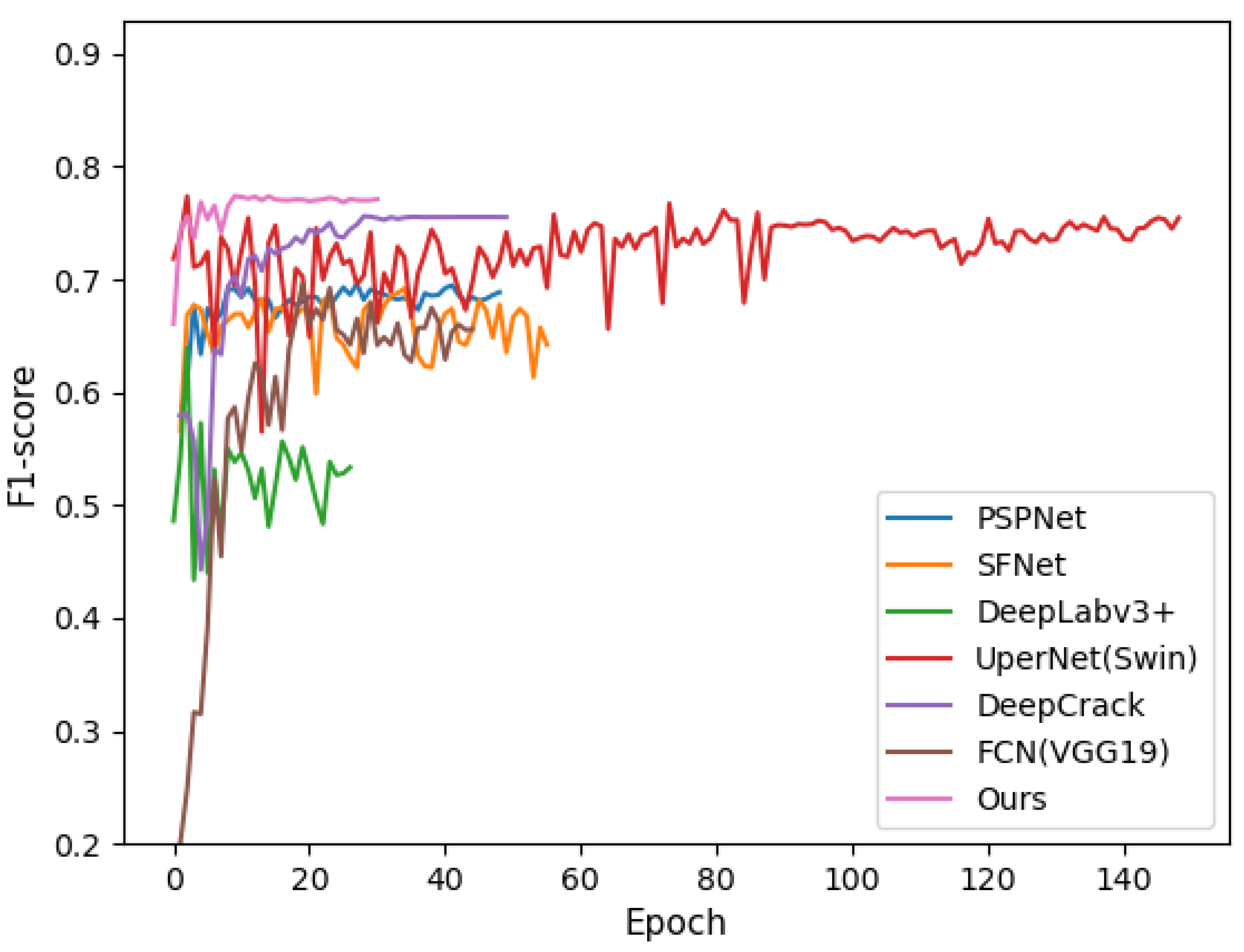

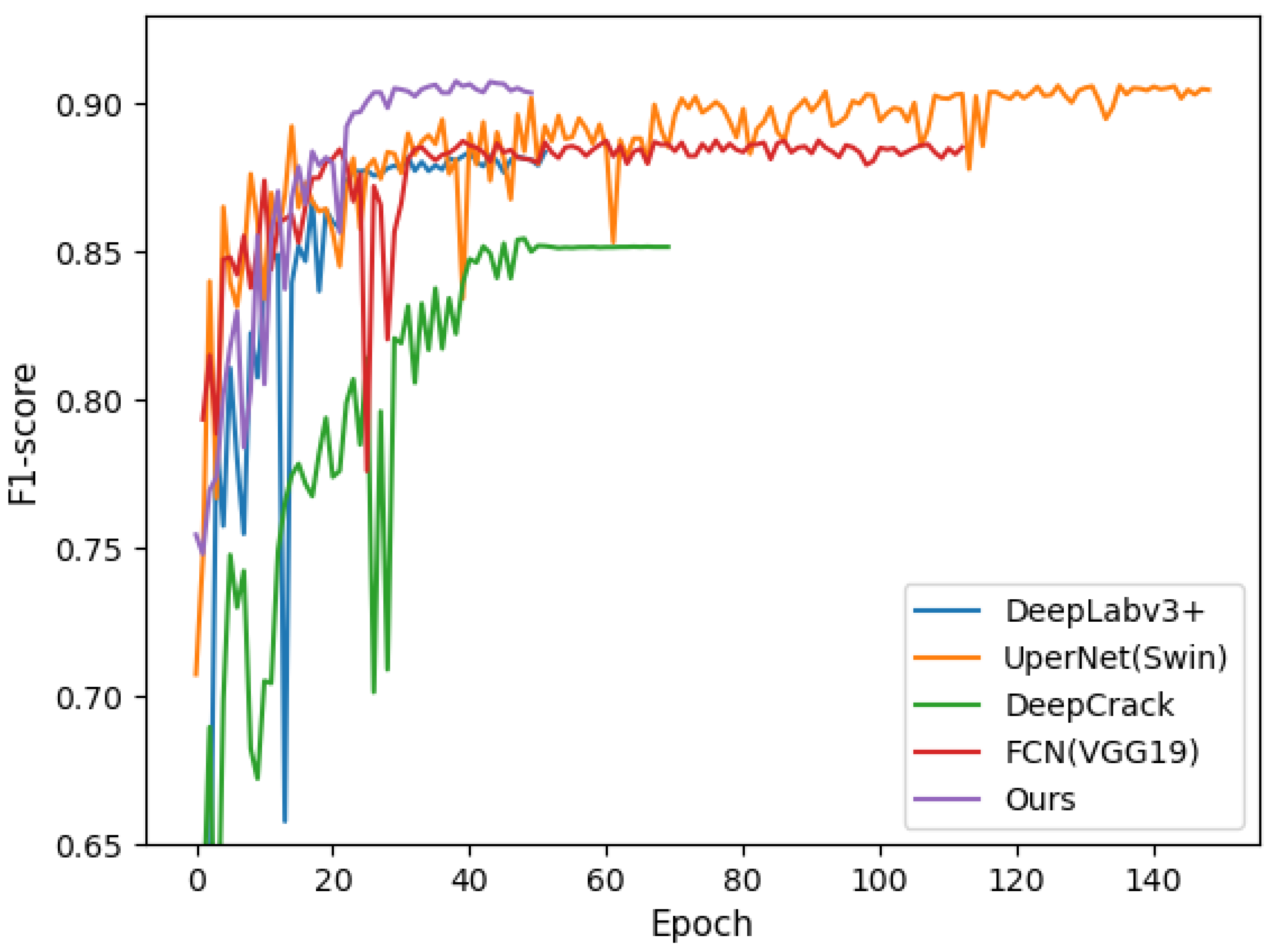

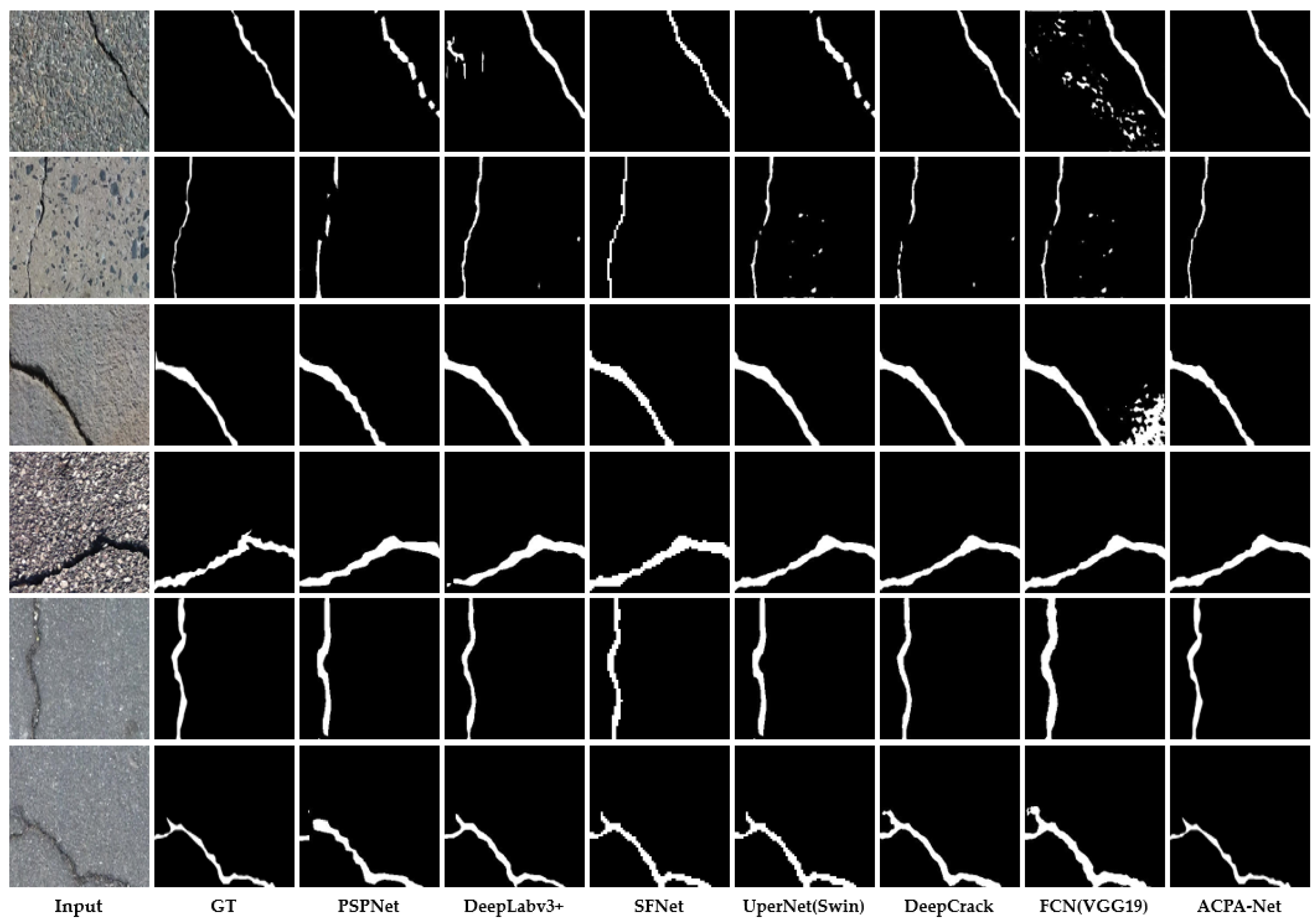

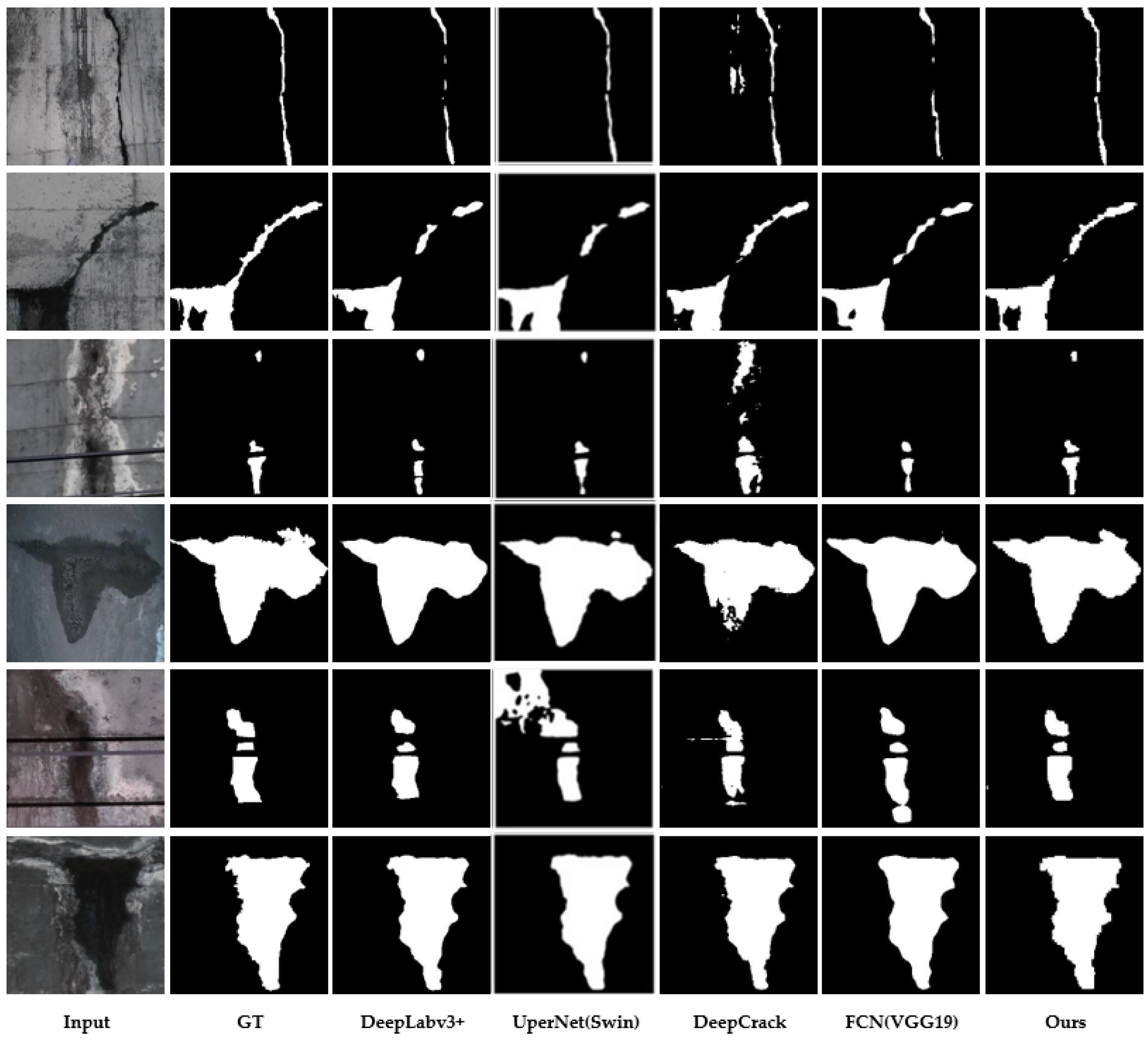

4.5.2. Comparison with Deep Learning Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Huang, H.W.; Li, Q.T.; Zhang, D.M. Deep learning based image recognition for crack and leakage defects of metro shield tunnel. Tunn. Undergr. Space Technol. 2018, 77, 166–176.

2. Yuan, Y.; Jiang, X.; Liu, X. Predictive maintenance of shield tunnels. Tunn. Undergr. Space Technol. 2013, 38, 69–86.

3. Zhao, D.P.; Tan, X.R.; Jia, L.L. Study on investigation and analysis of existing railway tunnel diseases. In Applied Mechanics and Materials; Trans Tech Publication: Zurich, Switzerland, 2014; Volume 580, pp. 1218–1222.

4. Wu, Y.; Hu, M.; Xu, G.; Zhou, X.; Li, Z. Detecting Leakage Water of Shield Tunnel Segments Based on Mask R-CNN. In Proceedings of the 2019 IEEE International Conference on Architecture, Construction, Environment and Hydraulics (ICACEH), Xiamen, China, 20–22 December 2019; pp. 25–28.

5. Zhao, S.; Zhang, D.M.; Huang, H.W. Deep learning–based image instance segmentation for moisture marks of shield tunnel lining. Tunn. Undergr. Space Technol. 2020, 95, 103156.

6. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

7. Gao, X.; Jian, M.; Hu, M.; Tanniru, M.; Li, S. Faster multi-defect detection system in shield tunnel using combination of FCN and faster RCNN. Adv. Struct. Eng. 2019, 22, 2907–2921.

8. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

9. Zhao, S.; Shadabfar, M.; Zhang, D.; Chen, J.; Huang, H. Deep learning-based classification and instance segmentation of leakage-area and scaling images of shield tunnel linings. Struct. Control. Health Monit. 2021, 28, e2732.

10. Xue, Y.; Jia, F.; Cai, X.; Shadabfare, M. Semantic Segmentation of Shield Tunnel Leakage with Combining SSD and FCN. In Proceedings of the SAI Intelligent Systems Conference, London, UK, 3–4 September 2020; Springer: Cham, Switzerland, 2020; pp. 53–61.

11. Xue, Y.; Cai, X.; Shadabfar, M.; Shao, H.; Zhang, S. Deep learning-based automatic recognition of water leakage area in shield tunnel lining. Tunn. Undergr. Space Technol. 2020, 104, 103524.

12. Cai, Z.; Vasconcelos, N. Cascade r-cnn: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.

13. Xiong, L.; Zhang, D.; Zhang, Y. Water leakage image recognition of shield tunnel via learning deep feature representation. J. Vis. Commun. Image Represent. 2020, 71, 102708.

14. Cheng, X.; Hu, X.; Tan, K.; Wang, L.; Yang, L. Automatic Detection of Shield Tunnel Leakages Based on Terrestrial Mobile LiDAR Intensity Images Using Deep Learning. IEEE Access 2021, 9, 55300–55310.

15. Zou, Q.; Zhang, Z.; Li, Q.; Qi, X.; Wang, Q.; Wang, S. Deepcrack: Learning hierarchical convolutional features for crack detection. IEEE Trans. Image Process. 2018, 28, 1498–1512.

16. Li, H.; Zong, J.; Nie, J.; Wu, Z.; Han, H. Pavement crack detection algorithm based on densely connected and deeply supervised network. IEEE Access 2021, 9, 11835–11842.

17. Hong, Y.; Lee, S.J.; Yoo, S.B. AugMoCrack: Augmented morphological attention network for weakly supervised crack detection. Electron. Lett. 2022, 58, 651–653.

18. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

19. Huyan, J.; Ma, T.; Li, W.; Yang, H.; Xu, Z. Pixelwise asphalt concrete pavement crack detection via deep learning-based semantic segmentation method. Struct. Control. Health Monit. 2022, e2974.

20. Han, C.; Ma, T.; Huyan, J.; Huang, X.; Zhang, Y. CrackW-Net: A novel pavement crack image segmentation convolutional neural network. IEEE Trans. Intell. Transp. Syst. 2021, 23, 22135–22144.

21. Yang, F.; Zhang, L.; Yu, S.; Prokhorov, D.; Mei, X.; Ling, H. Feature pyramid and hierarchical boosting network for pavement crack detection. IEEE Trans. Intell. Transp. Syst. 2019, 21, 1525–1535.

22. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

23. Fan, T.; Wang, G.; Li, Y.; Wang, H. Ma-net: A multi-scale attention network for liver and tumor segmentation. IEEE Access 2020, 8, 179656–179665.

24. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual attention network for scene segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3146–3154.

25. Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164.

26. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

27. Li, H.; Yue, Z.; Liu, J.; Wang, Y.; Cai, H.; Cui, K.; Chen, X. SCCDNet: A Pixel-Level Crack Segmentation Network. Appl. Sci. 2021, 11, 5074.

28. Roy, A.G.; Navab, N.; Wachinger, C. Concurrent spatial and channel ‘squeeze & excitation’ in fully convolutional networks. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Granada, Spain, 16–20 September 2018; pp. 421–429.

29. Lau, S.L.H.; Chong, E.K.P.; Yang, X.; Wang, X. Automated Pavement Crack Segmentation Using U-Net-Based Convolutional Neural Network. IEEE Access 2020, 8, 114892–114899.

30. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

31. Zhou, Z.; Zhang, J.; Gong, C.; Ding, H. Automatic identification of tunnel leakage based on deep semantic segmentation. Yanshilixue Yu Gongcheng Xuebao/Chin. J. Rock Mech. Eng. 2022, 41, 2082–2093.

32. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7794–7803.

33. Zhang, Z.; Wen, Y.; Mu, H.; Du, X. Dual Attention Mechanism Based Pavement Crack Detection. J. Image Graph. 2022, 27, 2240–2250.

34. Chu, H.; Wang, W.; Deng, L. Tiny-Crack-Net: A multiscale feature fusion network with attention mechanisms for segmentation of tiny cracks. Comput. Aided Civ. Infrastruct. Eng. 2022, 37, 1914–1931.

35. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11531–11539.

36. Yu, C.; Wang, J.; Peng, C.; Gao, C.; Yu, G.; Sang, N. Bisenet: Bilateral segmentation network for real-time semantic segmentation. In Proceedings of the European conference on computer vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 325–341.

37. Lee, C.Y.; Xie, S.; Gallagher, P.; Zhang, Z.; Tu, Z. Deeply-supervised nets. In Proceedings of the Artificial Intelligence and Statistics, PMLR, San Diego, CA, USA, 9–12 May 2015; pp. 562–570.

38. Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1395–1403.

39. Huang, H.; Lin, L.; Tong, R.; Hu, H.; Zhang, Q.; Iwamoto, Y.; Han, X.; Chen, Y.W.; Wu, J. Unet 3+: A full-scale connected unet for medical image segmentation. In Proceedings of the ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Barcelona, Spain, 4–8 May 2020; pp. 1055–1059.

40. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: Redesigning skip connections to exploit multiscale features in image segmentation. IEEE Trans. Med Imaging 2019, 39, 1856–1867.

41. Qin, X.; Zhang, Z.; Huang, C.; Gao, C.; Dehghan, M.; Jagersand, M. Basnet: Boundary-aware salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7479–7489.

42. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on imagenet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago Condes, Chile, 7–13 December 2015; pp. 1026–1034.

43. Torralba, A.; Russell, B.C.; Yuen, J. Labelme: Online image annotation and applications. Proc. IEEE 2010, 98, 1467–1484.

44. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

45. Rother, C.; Kolmogorov, V.; Blake, A. Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2012, 23, 3.

46. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

47. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

48. Li, X.; You, A.; Zhu, Z.; Zhao, H.; Yang, M.; Yang, K.; Tan, S.; Tong, Y. Semantic flow for fast and accurate scene parsing. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 775–793.

49. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv 2021, arXiv:2103.14030.

| Methods | F1-Score (%) | IoU (%) | Accuracy (%) |
|---|---|---|---|
| UNet (Baseline) | 86.85 | 77.86 | 95.43 |
| +ACPA | 88.40 (1.55↑) | 79.96 (2.10↑) | 95.80 (0.37↑) |
| +ACPA+DS | 90.75 (3.90↑) | 83.62 (5.76↑) | 96.68 (1.25↑) |
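
The parenthesised gains in the table above are percentage-point differences from the UNet baseline row, where DS denotes deep supervision. The short sketch below recomputes them from the reported scores; the dictionary layout and the function name `gains_over_baseline` are illustrative choices, not part of the original work.

```python
# Scores copied from the ablation table: (F1-score, IoU, accuracy), all in percent.
SCORES = {
    "UNet (Baseline)": (86.85, 77.86, 95.43),
    "+ACPA": (88.40, 79.96, 95.80),
    "+ACPA+DS": (90.75, 83.62, 96.68),
}


def gains_over_baseline(scores, baseline="UNet (Baseline)"):
    """Return the percentage-point gain of every other row over the baseline row."""
    base = scores[baseline]
    return {
        name: tuple(round(value - ref, 2) for value, ref in zip(row, base))
        for name, row in scores.items()
        if name != baseline
    }


gains = gains_over_baseline(SCORES)
for name, (f1_gain, iou_gain, acc_gain) in gains.items():
    print(f"{name}: F1 +{f1_gain:.2f}, IoU +{iou_gain:.2f}, accuracy +{acc_gain:.2f}")
```

Subtracting the two rows from each other shows that adding deep supervision on top of ACPA accounts for a larger share of the IoU gain (3.66 points) than ACPA alone (2.10 points).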

| Methods | F1-Score (%) | IoU (%) | Accuracy (%) | Param |
|---|---|---|---|---|
| UNet_DS (Baseline) | 87.81 | 79.20 | 95.77 | – |
| +SE | 89.68 (1.87↑) | 81.76 (2.56↑) | 96.17 (0.40↑) | 13.95 K |
| +CBAM | 89.50 (1.69↑) | 81.69 (2.49↑) | 96.36 (0.59↑) | 30.28 K |
| +scSE | 88.14 (0.33↑) | 79.77 (0.57↑) | 95.64 (0.13↓) | 110.30 K |
| +ECA | 89.92 (2.11↑) | 82.39 (3.19↑) | 96.49 (0.72↑) | 24 |
| +ACPA | 90.75 (2.94↑) | 83.62 (4.42↑) | 96.68 (0.91↑) | 96 |

| Methods | F1-Score (%) | Recall (%) | Precision (%) | IoU (%) | Accuracy (%) |
|---|---|---|---|---|---|
| OA | 48.69 | 97.67 | 34.32 | 33.84 | 63.30 |
| RGA | 63.09 | 73.76 | 63.64 | 48.40 | 82.11 |
| GrabCut | 89.29 | 90.68 | 88.75 | 81.34 | 96.25 |
| Ours | 90.75 | 90.47 | 91.56 | 83.62 | 96.68 |
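
For readers who want to reproduce the columns of the comparison tables, the sketch below computes F1-score, recall, precision, IoU, and accuracy from a binary prediction and its ground-truth mask using the standard pixel-wise definitions. It is an illustration under assumptions: the function name and the flat 0/1 input format are hypothetical, and how the scores are aggregated over a dataset (per image or over all pixels at once) is not specified here, so numbers obtained this way need not match the tables exactly.

```python
def segmentation_metrics(pred, target):
    """Pixel-wise metrics for flat sequences of 0/1 labels (1 = leakage)."""
    tp = fp = fn = tn = 0
    for p, t in zip(pred, target):
        if p and t:
            tp += 1      # leakage pixel correctly detected
        elif p and not t:
            fp += 1      # background predicted as leakage
        elif t:
            fn += 1      # leakage pixel missed
        else:
            tn += 1      # background correctly rejected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"f1": f1, "recall": recall, "precision": precision,
            "iou": iou, "accuracy": accuracy}


# Hypothetical 8-pixel example: 2 true positives, 1 false positive, 1 false negative.
metrics = segmentation_metrics(
    pred=[1, 1, 0, 0, 1, 0, 0, 0],
    target=[1, 0, 0, 0, 1, 1, 0, 0],
)
```

These definitions also explain the OA row: a recall of 97.67% combined with a precision of 34.32% means that almost all leakage pixels are found, but most pixels labelled as leakage are actually background.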

| Methods | F1-Score (%) | Recall (%) | Precision (%) | IoU (%) | Accuracy (%) |
|---|---|---|---|---|---|
| PSPNet | 71.69 | 77.27 | 68.80 | 57.40 | 97.18 |
| SFNet | 75.83 | 79.29 | 74.52 | 62.38 | 97.58 |
| DeepLabv3+ | 76.76 | 80.13 | 76.11 | 63.76 | 97.69 |
| UperNet(Swin) | 75.45 | 72.21 | 80.05 | 65.82 | 95.44 |
| DeepCrack | 75.60 | 80.13 | 74.94 | 62.75 | 97.66 |
| FCN(VGG19) | 69.64 | 75.28 | 63.03 | 55.74 | 96.61 |
| Ours | 79.17 | 82.30 | 77.52 | 66.62 | 97.88 |

| Methods | Param (M) | F1-Score (%) | Recall (%) | Precision (%) | IoU (%) | Accuracy (%) | FLOPs (G) | FPS |
|---|---|---|---|---|---|---|---|---|
| DeepLabv3+ | 12.33 | 88.37 | 89.89 | 87.98 | 79.82 | 95.83 | 4.58 | 124.74 |
| UperNet(Swin) | 59.83 | 90.60 | 92.24 | 89.20 | 82.98 | 96.52 | 59.64 | 38.23 |
| DeepCrack | 30.91 | 85.17 | 85.14 | 82.88 | 69.13 | 93.38 | 136.92 | 5.26 |
| FCN(VGG19) | 171.46 | 88.74 | 82.72 | 87.28 | 80.63 | 92.96 | 36.43 | 34.85 |
| Ours | 4.32 | 90.75 | 90.47 | 91.56 | 83.68 | 96.68 | 10.10 | 76.66 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Geng, P.; Tan, Z.; Luo, J.; Wang, T.; Li, F.; Bei, J. ACPA-Net: Atrous Channel Pyramid Attention Network for Segmentation of Leakage in Rail Tunnel Linings. Electronics 2023, 12, 255. https://doi.org/10.3390/electronics12020255
