CLICK: Integrating Causal Inference and Commonsense Knowledge Incorporation for Counterfactual Story Generation

Abstract

1. Introduction

- Inspired by causal graph modeling of how conditions shape story endings, we propose CLICK, a counterfactual generation framework based on CausaL Inference in event sequences and Commonsense Knowledge incorporation, which improves the interpretability of generative reasoning.

- We investigate causal invariance by analyzing the causal relationships among event sequences to pinpoint where modifications are necessary. In addition, CLICK uses commonsense knowledge to strengthen the causal continuity between the ending tokens and the counterfactual condition.

- We conduct experiments on the TIMETRAVEL dataset. The experimental results demonstrate that the CLICK framework outperforms previous state-of-the-art models under unsupervised settings. Ablation experiments further validate the effectiveness of considering causal relationships among event sequences and incorporating structural knowledge.

2. Related Work

2.1. Knowledge-Enhanced Text Generation

2.2. Causal Inference and NLP

3. Preliminaries

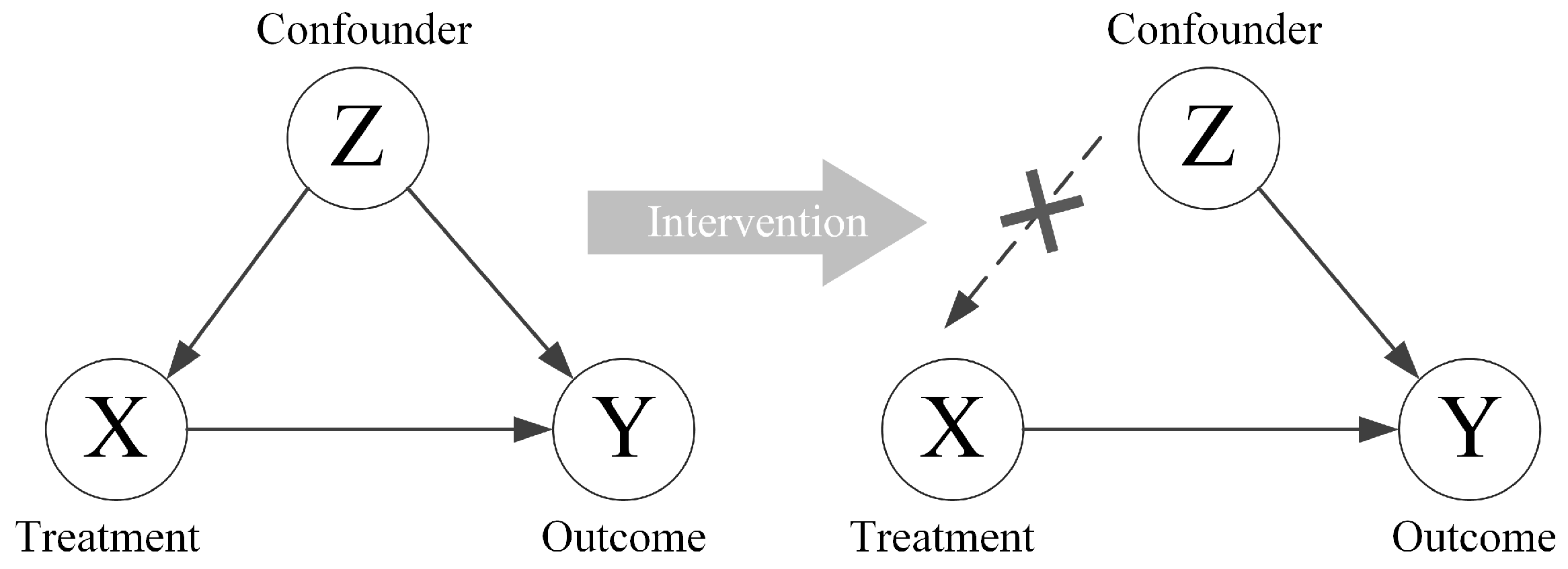

3.1. Causal Graph

3.2. Causal Intervention

4. Methodology

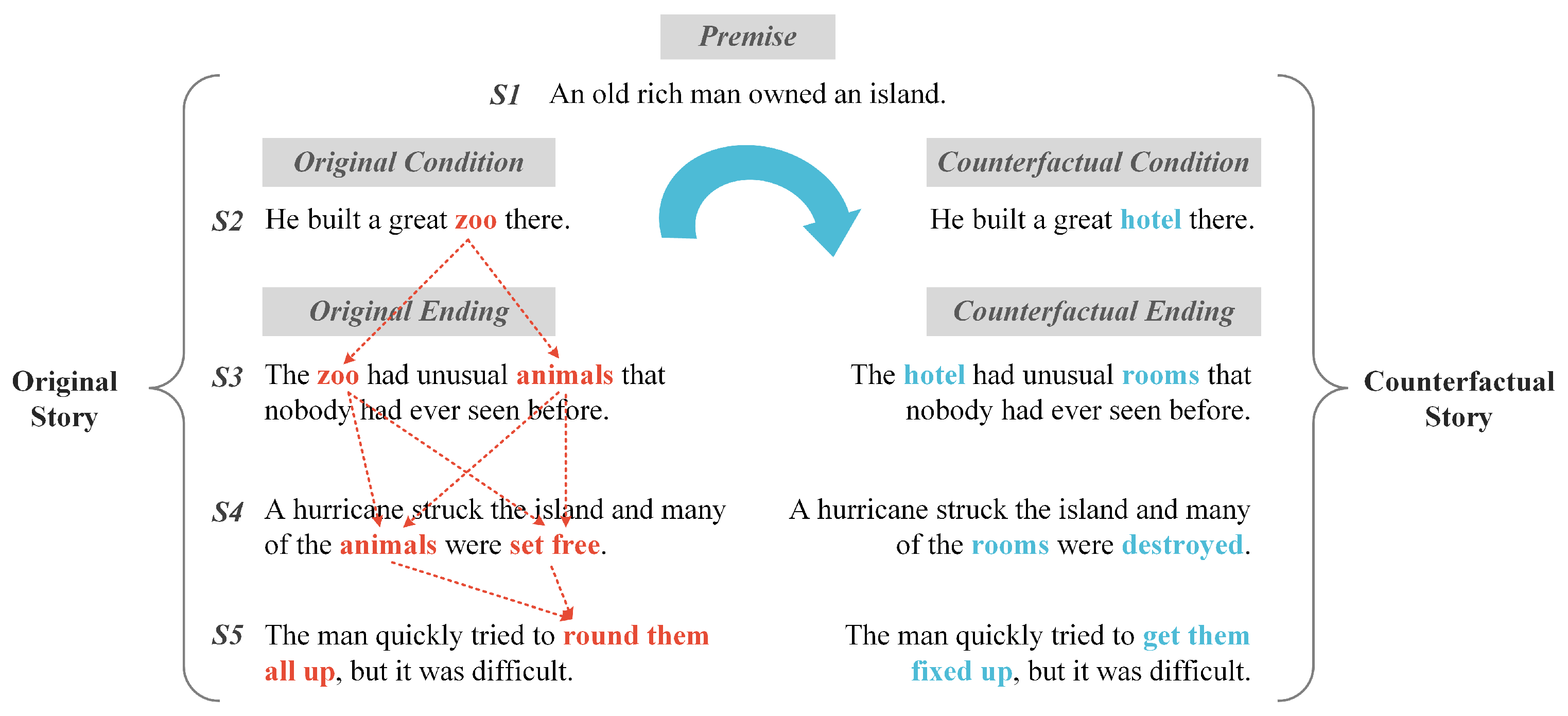

4.1. Task Formulation

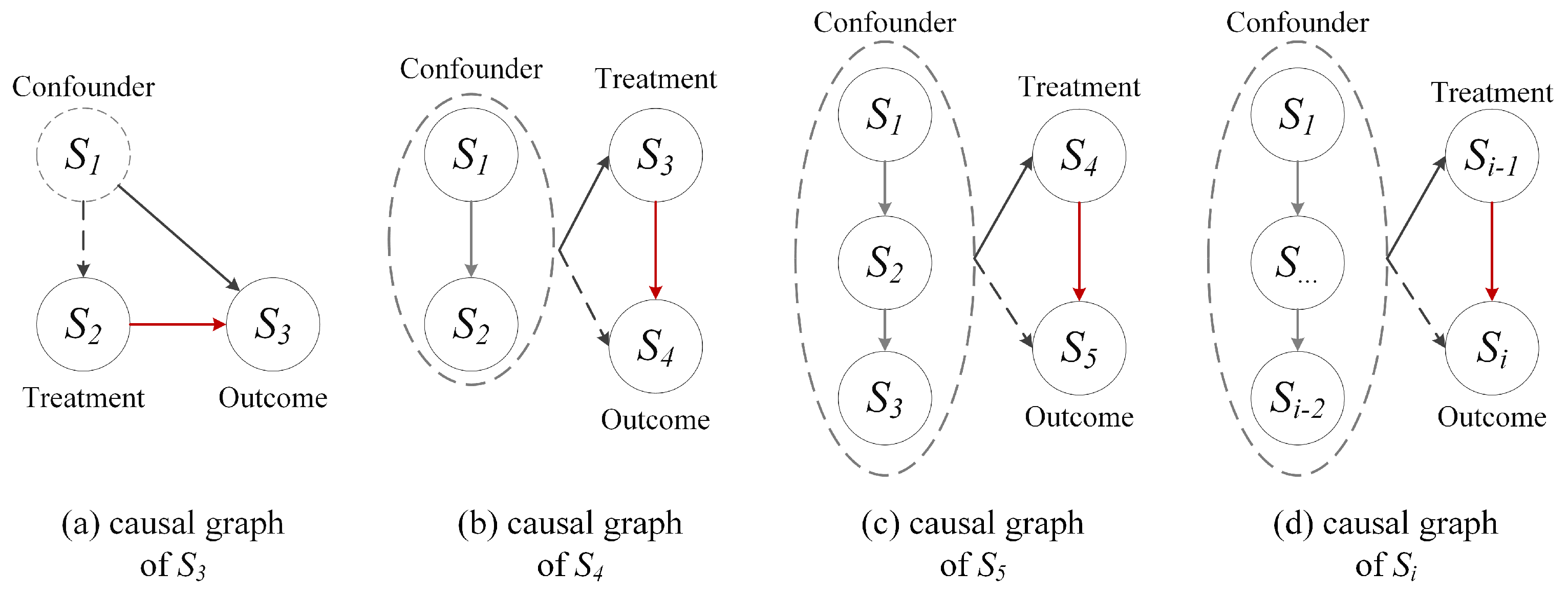

4.2. Causal Graph and Causal Path Analysis

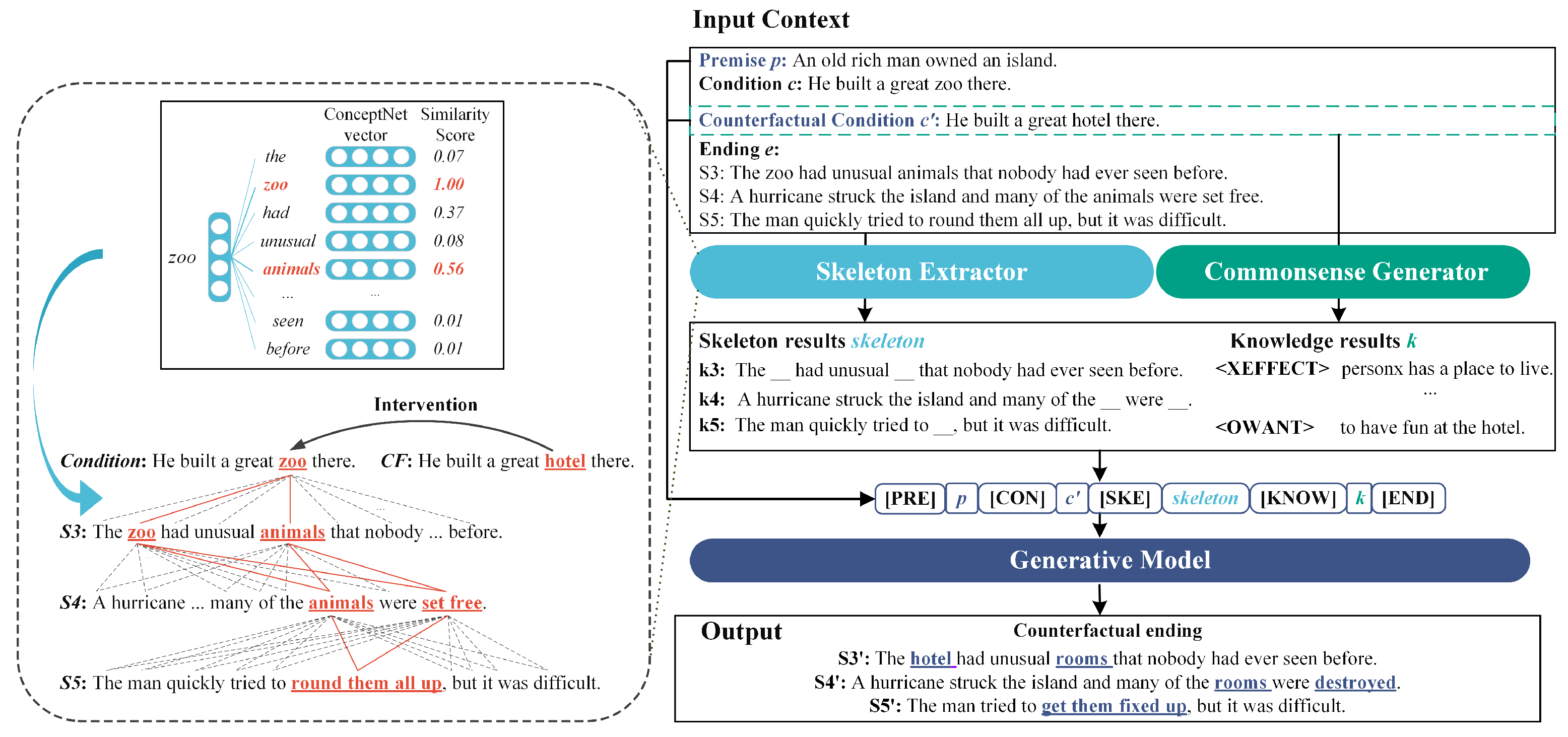

4.3. Model Overview

4.4. Skeleton Extractor with Narrative Chain Guidance

4.4.1. Condition-Guided Intervention Selection

- (1) Word substitution: By modifying only a subset of words in the original condition, a new counterfactual condition is obtained. Thus, by comparing the two conditions, the modified content in c is the intervention.

- (2) Word deletion and addition: By deleting or adding words to the original condition, a new counterfactual condition is obtained. In this case, all words in c are considered the intervention.
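
The two cases above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `select_intervention`, the whitespace tokenization, and the use of equal token counts to detect the substitution case are all assumptions made for illustration, as is the reading that the intervention words are taken from the counterfactual condition.

```python
from typing import List


def tokenize(text: str) -> List[str]:
    """Lowercase, split on whitespace, and strip surrounding punctuation (assumed tokenizer)."""
    tokens = [t.strip(".,!?;:").lower() for t in text.split()]
    return [t for t in tokens if t]


def select_intervention(original_condition: str, counterfactual_condition: str) -> List[str]:
    """Return the intervention words for a pair of conditions (hypothetical helper)."""
    orig_tokens = tokenize(original_condition)
    cf_tokens = tokenize(counterfactual_condition)
    if len(orig_tokens) == len(cf_tokens):
        # Case (1), word substitution: keep only the words that changed position-wise.
        return [cf for o, cf in zip(orig_tokens, cf_tokens) if o != cf]
    # Case (2), word deletion and addition: every word counts as the intervention.
    return cf_tokens


print(select_intervention("He boiled some water.", "He boiled some pasta."))
print(select_intervention("Mom, James, and Renee went to the park.", "Rain started to fall."))
```

The first call falls under word substitution and yields only the changed word; the second call has a different length, so all words of the new condition are returned.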

4.4.2. Sequence-Aware Correlation Calculation

Algorithm 1. Sequence-aware correlation computation

Input: initial intervention words; the three sentences in the ending; the threshold used to determine correlation.

Output: the causal word set in the i-th sentence.
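
Only the inputs and output of Algorithm 1 survive in this text, so the following Python sketch is one plausible reading rather than the published procedure. It assumes that correlation is measured by cosine similarity between word vectors, that a word is causal when its similarity to any active word reaches the threshold, and that causal words found in one sentence are added to the active set for the next sentence (the "sequence-aware" part). The names `causal_words` and `embed`, the toy vectors, and the threshold value are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors; 0.0 if either has zero norm."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def causal_words(
    intervention: List[str],
    sentences: List[List[str]],
    threshold: float,
    embed: Dict[str, Tuple[float, ...]],
) -> List[Set[str]]:
    """Return one causal word set per ending sentence (assumed propagation scheme)."""
    active = set(intervention)
    result = []
    for sentence in sentences:
        found = set()
        for word in sentence:
            if word not in embed:
                continue
            # A word is causal if it correlates with any currently active word.
            if any(cosine(embed[word], embed[a]) >= threshold for a in active if a in embed):
                found.add(word)
        result.append(found)
        # Assumption: causal words carry over to later sentences.
        active |= found
    return result


# Toy 2-d vectors, chosen only to show the propagation step.
embed = {"rain": (1.0, 0.0), "umbrella": (0.9, 0.1), "dry": (0.6, 0.8), "park": (0.0, -1.0)}
ending = [["umbrella", "park"], ["dry"], ["park"]]
print(causal_words(["rain"], ending, 0.65, embed))
```

In this toy run, "dry" is below the threshold with respect to "rain" alone but is picked up through "umbrella", which was marked causal in the first sentence.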

4.4.3. Skeleton Acquisition

4.5. Knowledge-Alignment Commonsense Generator

- If-Event-Then-Mental-State: Defines three relations relating to the mental pre- and post-conditions of an event, including XIntent (why does X cause the event), XReact (how does X feel after the event), and OReact (how do others feel after the event). Our focus lies on the knowledge of events related to explicitly mentioned participants, specifically within the categories of XIntent and XReact.

- If-Event-Then-Event: Defines five relations relating to events that constitute probable pre- and post-conditions of a given event, including XNeed (what does X need to do before the event), XEffect (what effects does the event have on X), XWant (what would X likely want to do after the event), OWant (what would others likely want to do after the event), and OEffect (what effects does the event have on others). Our focus is on the knowledge of events that are related to explicitly mentioned participants, encompassing the categories of XNeed, XEffect, and XWant.

- If-Event-Then-Persona: Defines a stative relation that describes how the subject of an event is described or perceived, including XAttr (how would X be described).
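
The relation taxonomy above can be written down as a small lookup table. The sketch below is illustrative only: the names `ATOMIC_RELATIONS` and `participant_relations` are hypothetical, and selecting relations by the `X` prefix is an assumption that happens to reproduce the focus stated above (XIntent, XReact, XNeed, XEffect, XWant) and keeps XAttr, the only persona relation.

```python
from typing import Dict, List

# The nine relations grouped by category, as listed in the text.
ATOMIC_RELATIONS: Dict[str, List[str]] = {
    "If-Event-Then-Mental-State": ["XIntent", "XReact", "OReact"],
    "If-Event-Then-Event": ["XNeed", "XEffect", "XWant", "OWant", "OEffect"],
    "If-Event-Then-Persona": ["XAttr"],
}


def participant_relations(relations: Dict[str, List[str]] = ATOMIC_RELATIONS) -> Dict[str, List[str]]:
    """Keep only relations about the explicitly mentioned participant X (assumed prefix rule)."""
    return {
        category: [name for name in names if name.startswith("X")]
        for category, names in relations.items()
    }


for category, names in participant_relations().items():
    print(category, names)
```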

4.6. Commonsense-Constrained Generative Model

5. Experiments

5.1. Dataset

5.2. Evaluation Metrics

5.3. Implementation Details

5.4. Compared Approaches

- GPT2-M [12]: Uses the pre-trained GPT-2 model for story ending rewriting. The method receives the story premise and counterfactual condition as input, without any training on the dataset.

- GPT2-M + FT [12]: Fine-tunes the pre-trained GPT-2 model to maximize the log-likelihood of the stories in the ROCStories corpus. The premise and the counterfactual condition are provided as input.

- DELOREAN [71]: An unsupervised decoding algorithm that flexibly incorporates both past and future contexts using only off-the-shelf, left-to-right language models. The method receives the story premise and counterfactual condition as input, without any training on the dataset.

- EDUCAT [20]: An editing-based unsupervised approach for counterfactual story rewriting, which includes a target position detection strategy and a modification action.

- Human: One of the three ground-truth counterfactual endings edited by humans. The results are from [20].

- CLICK-w/o-kno: A version of CLICK that does not use commonsense knowledge. This variant receives the story premise, counterfactual condition, and skeleton as input. The correlation threshold in the skeleton extractor module is set to 0.2.

- CLICK-w/o-ske: A version of CLICK that does not use the skeleton in the experiment. The variant method receives the story premise, counterfactual condition, and commonsense knowledge as input.

- CLICK: The full version of our method.

5.5. Main Results

5.6. Analysis and Discussion

5.6.1. Ablation Study

- w/o skeleton means removing the skeleton extractor module;

- w/o knowledge means removing the commonsense generator module;

- w/o ske w/o kno means removing both the skeleton extractor module and the commonsense generator module.

5.6.2. Effect of Skeleton

5.6.3. Effect of Commonsense Knowledge

5.7. Case Study

6. Conclusions

7. Limitations

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A. Causal Graph

References

- Cornacchia, G.; Anelli, V.W.; Biancofiore, G.M.; Narducci, F.; Pomo, C.; Ragone, A.; Sciascio, E.D. Auditing fairness under unawareness through counterfactual reasoning. Inf. Process. Manag. 2023, 60, 103224.

- Tian, B.; Cao, Y.; Zhang, Y.; Xing, C. Debiasing NLU Models via Causal Intervention and Counterfactual Reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 22 February–1 March 2022; Volume 36, pp. 11376–11384.

- Jaimini, U.; Sheth, A.P. CausalKG: Causal Knowledge Graph Explainability Using Interventional and Counterfactual Reasoning. IEEE Internet Comput. 2022, 26, 43–50.

- Huang, Z.; Kosan, M.; Medya, S.; Ranu, S.; Singh, A.K. Global Counterfactual Explainer for Graph Neural Networks. In Proceedings of the Sixteenth ACM International Conference on Web Search and Data Mining, Singapore, 27 February–3 March 2023; pp. 141–149.

- Stepin, I.; Alonso, J.M.; Catalá, A.; Pereira-Fariña, M. A Survey of Contrastive and Counterfactual Explanation Generation Methods for Explainable Artificial Intelligence. IEEE Access 2021, 9, 11974–12001.

- Temraz, M.; Keane, M.T. Solving the class imbalance problem using a counterfactual method for data augmentation. Mach. Learn. Appl. 2022, 9, 100375.

- Calderon, N.; Ben-David, E.; Feder, A.; Reichart, R. DoCoGen: Domain Counterfactual Generation for Low Resource Domain Adaptation. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022.

- Howard, P.; Singer, G.; Lal, V.; Choi, Y.; Swayamdipta, S. NeuroCounterfactuals: Beyond Minimal-Edit Counterfactuals for Richer Data Augmentation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022.

- Wang, X.; Zhou, K.; Tang, X.; Zhao, W.X.; Pan, F.; Cao, Z.; Wen, J. Improving Conversational Recommendation Systems via Counterfactual Data Simulation. arXiv 2023, arXiv:2306.02842.

- Filighera, A.; Tschesche, J.; Steuer, T.; Tregel, T.; Wernet, L. Towards Generating Counterfactual Examples as Automatic Short Answer Feedback. In Proceedings of the Artificial Intelligence in Education—23rd International Conference, Durham, UK, 27–31 July 2022; Volume 13355, pp. 206–217.

- Liu, X.; Feng, Y.; Tang, J.; Hu, C.; Zhao, D. Counterfactual Recipe Generation: Exploring Compositional Generalization in a Realistic Scenario. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 7354–7370.

- Qin, L.; Bosselut, A.; Holtzman, A.; Bhagavatula, C.; Clark, E.; Choi, Y. Counterfactual Story Reasoning and Generation. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, Virtual, 6–12 December 2020.

- Chowdhery, A.; Narang, S.; Devlin, J.; Bosma, M.; Mishra, G.; Roberts, A.; Barham, P.; Chung, H.W.; Sutton, C.; Gehrmann, S.; et al. PaLM: Scaling Language Modeling with Pathways. arXiv 2022, arXiv:2204.02311.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language models are unsupervised multitask learners. OpenAI blog 2019, 1, 9.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. J. Mach. Learn. Res. 2020, 21, 5485–5551.

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual Denoising Pre-training for Neural Machine Translation. Trans. Assoc. Comput. Linguistics 2020, 8, 726–742.

- Hao, C.; Pang, L.; Lan, Y.; Wang, Y.; Guo, J.; Cheng, X. Sketch and Customize: A Counterfactual Story Generator. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 2–9 February 2021; Volume 35, pp. 12955–12962.

- Chen, J.; Gan, C.; Cheng, S.; Zhou, H.; Xiao, Y.; Li, L. Unsupervised Editing for Counterfactual Stories. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtually, 22 February–1 March 2022; Volume 36, pp. 10473–10481.

- Bisk, Y.; Holtzman, A.; Thomason, J.; Andreas, J.; Bengio, Y.; Chai, J.; Lapata, M.; Lazaridou, A.; May, J.; Nisnevich, A.; et al. Experience Grounds Language. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, Virtually, 16–20 November 2020; pp. 8718–8735.

- Tan, B.; Yang, Z.; Al-Shedivat, M.; Xing, E.P.; Hu, Z. Progressive Generation of Long Text with Pretrained Language Models. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 4313–4324.

- Dziri, N.; Madotto, A.; Zaïane, O.; Bose, A.J. Neural Path Hunter: Reducing Hallucination in Dialogue Systems via Path Grounding. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 2197–2214.

- Bosselut, A.; Rashkin, H.; Sap, M.; Malaviya, C.; Celikyilmaz, A.; Choi, Y. COMET: Commonsense Transformers for Automatic Knowledge Graph Construction. In Proceedings of the 57th Conference of the Association for Computational Linguistics, Florence, Italy, 28 July–2 August 2019.

- Zhang, H.; Song, H.; Li, S.; Zhou, M.; Song, D. A Survey of Controllable Text Generation using Transformer-based Pre-trained Language Models. J. ACM 2023, 37, 111:1–111:37.

- Ling, Y.; Liang, Z.; Wang, T.; Cai, F.; Chen, H. Sequential or jumping: Context-adaptive response generation for open-domain dialogue systems. Appl. Intell. 2023, 53, 11251–11266.

- Chen, Z.; Liu, Z. Fixed global memory for controllable long text generation. Appl. Intell. 2023, 53, 13993–14007.

- Yang, L.; Shen, Z.; Zhou, F.; Lin, H.; Li, J. TPoet: Topic-Enhanced Chinese Poetry Generation. ACM Trans. Asian Low-Resour. Lang. Inf. Process. 2023, 22, 1–15.

- Mao, Y.; Cai, F.; Guo, Y.; Chen, H. Incorporating emotion for response generation in multi-turn dialogues. Appl. Intell. 2022, 52, 7218–7229.

- He, X. Parallel Refinements for Lexically Constrained Text Generation with BART. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021.

- Xu, F.; Xu, G.; Wang, Y.; Wang, R.; Ding, Q.; Liu, P.; Zhu, Z. Diverse dialogue generation by fusing mutual persona-aware and self-transferrer. Appl. Intell. 2022, 52, 4744–4757.

- Mo, L.; Wei, J.; Huang, Q.; Cai, Y.; Liu, Q.; Zhang, X.; Li, Q. Incorporating sentimental trend into gated mechanism based transformer network for story ending generation. Neurocomputing 2021, 453, 453–464.

- Spangher, A.; Hua, X.; Ming, Y.; Peng, N. Sequentially Controlled Text Generation. arXiv 2023, arXiv:2301.02299.

- Chung, J.J.Y.; Kim, W.; Yoo, K.M.; Lee, H.; Adar, E.; Chang, M. TaleBrush: Sketching Stories with Generative Pretrained Language Models. In Proceedings of the CHI ’22: CHI Conference on Human Factors in Computing Systems, New Orleans, LA, USA, 29 April–5 May 2022.

- Wang, J.; Zou, B.; Li, Z.; Qu, J.; Zhao, P.; Liu, A.; Zhao, L. Incorporating Commonsense Knowledge into Story Ending Generation via Heterogeneous Graph Networks. In Proceedings of the Database Systems for Advanced Applications—27th International Conference, Virtual, 11–14 April 2022; Springer: Berlin/Heidelberg, Germany, 2022; Volume 13247, pp. 85–100.

- Chen, J.; Chen, J.; Yu, Z. Incorporating Structured Commonsense Knowledge in Story Completion. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Liu, R.; Zheng, G.; Gupta, S.; Gaonkar, R.; Gao, C.; Vosoughi, S.; Shokouhi, M.; Awadallah, A.H. Knowledge Infused Decoding. In Proceedings of the Tenth International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Wei, K.; Sun, X.; Zhang, Z.; Zhang, J.; Zhi, G. Trigger is not sufficient: Exploiting frame-aware knowledge for implicit event argument extraction. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, Virtual, 1–6 August 2021.

- Wei, K.; Sun, X.; Zhang, Z.; Jin, L.; Zhang, J.; Lv, J.; Zhi, G. Implicit Event Argument Extraction With Argument-Argument Relational Knowledge. IEEE Trans. Knowl. Data Eng. 2023, 35, 8865–8879.

- Wei, K.; Yang, Y.; Jin, L.; Sun, X.; Zhang, Z.; Zhang, J.; Zhi, G. Guide the Many-to-One Assignment: Open Information Extraction via IoU-aware Optimal Transport. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023.

- Guan, J.; Huang, F.; Huang, M.; Zhao, Z.; Zhu, X. A Knowledge-Enhanced Pretraining Model for Commonsense Story Generation. Trans. Assoc. Comput. Linguistics 2020, 8, 93–108.

- Xu, P.; Patwary, M.; Shoeybi, M.; Puri, R.; Fung, P.; Anandkumar, A.; Catanzaro, B. MEGATRON-CNTRL: Controllable Story Generation with External Knowledge Using Large-Scale Language Models. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing, Online, 16–20 November 2020; pp. 2831–2845.

- Speer, R.; Chin, J.; Havasi, C. ConceptNet 5.5: An Open Multilingual Graph of General Knowledge. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4444–4451.

- Martin, L.J.; Ammanabrolu, P.; Wang, X.; Hancock, W.; Singh, S.; Harrison, B.; Riedl, M.O. Event Representations for Automated Story Generation with Deep Neural Nets. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32, pp. 868–875.

- Martin, L.J.; Sood, S.; Riedl, M.O. Dungeons and DQNs: Toward Reinforcement Learning Agents that Play Tabletop Roleplaying Games. In Proceedings of the Joint Workshop on Intelligent Narrative Technologies and Workshop on Intelligent Cinematography and Editing Co-Located with 14th AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, INT/WICED@AIIDE 2018, Edmonton, AB, Canada, 13–14 November 2018.

- Glymour, M.; Pearl, J.; Jewell, N.P. Causal Inference in Statistics: A Primer; John Wiley & Sons: Chichester, UK; Hoboken, NJ, USA, 2016.

- Feder, A.; Keith, K.A.; Manzoor, E.; Pryzant, R.; Sridhar, D.; Wood-Doughty, Z.; Eisenstein, J.; Grimmer, J.; Reichart, R.; Roberts, M.E.; et al. Causal Inference in Natural Language Processing: Estimation, Prediction, Interpretation and Beyond. Trans. Assoc. Comput. Linguistics 2022, 10, 1138–1158.

- Yao, L.; Chu, Z.; Li, S.; Li, Y.; Gao, J.; Zhang, A. A Survey on Causal Inference. ACM Trans. Knowl. Discov. Data 2021, 15, 74:1–74:46.

- Zhang, Y.; Feng, F.; He, X.; Wei, T.; Song, C.; Ling, G.; Zhang, Y. Causal Intervention for Leveraging Popularity Bias in Recommendation. In Proceedings of the SIGIR ’21: The 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, 11–15 July 2021; pp. 11–20.

- Wang, W.; Feng, F.; He, X.; Zhang, H.; Chua, T. Clicks can be Cheating: Counterfactual Recommendation for Mitigating Clickbait Issue. In Proceedings of the SIGIR ’21: The 44th International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual, 11–15 July 2021; pp. 1288–1297.

- Qian, C.; Feng, F.; Wen, L.; Ma, C.; Xie, P. Counterfactual Inference for Text Classification Debiasing. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, Virtually, 1–6 August 2021.

- Zhang, W.; Lin, H.; Han, X.; Sun, L. De-biasing Distantly Supervised Named Entity Recognition via Causal Intervention. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, Virtually, 1–6 August 2021.

- Li, S.; Li, X.; Shang, L.; Dong, Z.; Sun, C.; Liu, B.; Ji, Z.; Jiang, X.; Liu, Q. How Pre-trained Language Models Capture Factual Knowledge? A Causal-Inspired Analysis. In Proceedings of the Findings of the Association for Computational Linguistics, Dublin, Ireland, 22–27 May 2022; pp. 1720–1732.

- Zhu, Y.; Sheng, Q.; Cao, J.; Li, S.; Wang, D.; Zhuang, F. Generalizing to the Future: Mitigating Entity Bias in Fake News Detection. In Proceedings of the SIGIR ’22: The 45th International ACM SIGIR Conference on Research and Development in Information Retrieval, Madrid, Spain, 11–15 July 2022.

- Li, B.; Su, P.; Chabbi, M.; Jiao, S.; Liu, X. DJXPerf: Identifying Memory Inefficiencies via Object-Centric Profiling for Java. In Proceedings of the 21st ACM/IEEE International Symposium on Code Generation and Optimization, Montreal, QC, Canada, 25 February–1 March 2023.

- Li, B.; Xu, H.; Zhao, Q.; Su, P.; Chabbi, M.; Jiao, S.; Liu, X. OJXPERF: Featherlight Object Replica Detection for Java Programs. In Proceedings of the 44th IEEE/ACM International Conference on Software Engineering, Pittsburgh, PA, USA, 25–27 May 2022.

- Xu, G. Finding reusable data structures. In Proceedings of the 27th Annual ACM SIGPLAN Conference on Object-Oriented Programming, Systems, Languages, and Applications, Tucson, AZ, USA, 21–25 October 2012.

- Li, B.; Zhao, Q.; Jiao, S.; Liu, X. DroidPerf: Profiling Memory Objects on Android Devices. In Proceedings of the 29th Annual International Conference on Mobile Computing and Networking, ACM MobiCom 2023, Madrid, Spain, 2–6 October 2023; pp. 1–15.

- Liu, C.; Gan, L.; Kuang, K.; Wu, F. Investigating the Robustness of Natural Language Generation from Logical Forms via Counterfactual Samples. In Proceedings of the 2022 Conference on Empirical Methods in Natural Language Processing, Abu Dhabi, United Arab Emirates, 7–11 December 2022.

- You, D.; Niu, S.; Dong, S.; Yan, H.; Chen, Z.; Wu, D.; Shen, L.; Wu, X. Counterfactual explanation generation with minimal feature boundary. Inf. Sci. 2023, 625, 342–366.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the 1st International Conference on Learning Representations, ICLR 2013, Scottsdale, AZ, USA, 2–4 May 2013.

- Pennington, J.; Socher, R.; Manning, C.D. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; A Meeting of SIGDAT, a Special Interest Group of the ACL. ACL: Doha, Qatar, 2014; pp. 1532–1543.

- Lison, P.; Tiedemann, J. OpenSubtitles2016: Extracting Large Parallel Corpora from Movie and TV Subtitles. In Proceedings of the Tenth International Conference on Language Resources and Evaluation LREC 2016, Portorož, Slovenia, 23–28 May 2016.

- Speer, R.; Lowry-Duda, J. ConceptNet at SemEval-2017 Task 2: Extending Word Embeddings with Multilingual Relational Knowledge. In Proceedings of the 11th International Workshop on Semantic Evaluation (SemEval-2017), Vancouver, BC, Canada, 3–4 August 2017; pp. 85–89.

- Yu, W.; Zhu, C.; Li, Z.; Hu, Z.; Wang, Q.; Ji, H.; Jiang, M. A Survey of Knowledge-enhanced Text Generation. ACM Comput. Surv. 2022, 54, 1–38.

- Sap, M.; Bras, R.L.; Allaway, E.; Bhagavatula, C.; Lourie, N.; Rashkin, H.; Roof, B.; Smith, N.A.; Choi, Y. ATOMIC: An Atlas of Machine Commonsense for If-Then Reasoning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019.

- Fan, A.; Lewis, M.; Dauphin, Y.N. Hierarchical Neural Story Generation. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018.

- Mostafazadeh, N.; Chambers, N.; He, X.; Parikh, D.; Batra, D.; Vanderwende, L.; Kohli, P.; Allen, J.F. A Corpus and Evaluation Framework for Deeper Understanding of Commonsense Stories. arXiv 2016, arXiv:1604.01696.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W. Bleu: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Zhang, T.; Kishore, V.; Wu, F.; Weinberger, K.Q.; Artzi, Y. BERTScore: Evaluating Text Generation with BERT. In Proceedings of the 8th International Conference on Learning Representations, Addis Ababa, Ethiopia, 26–30 April 2020.

- Qin, L.; Shwartz, V.; West, P.; Bhagavatula, C.; Hwang, J.D.; Le Bras, R.; Bosselut, A.; Choi, Y. Back to the Future: Unsupervised Backprop-based Decoding for Counterfactual and Abductive Commonsense Reasoning. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 794–805.

- Devlin, J.; Chang, M.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

| Event | Type of Relations | Inference Examples | Inference dim. |
|---|---|---|---|
| She and her brother watched the whole film. | If-Event-Then-Mental-State | to be entertained | XIntent |
| | | happy | XReact |
| | If-Event-Then-Event | to go to the theater | XNeed |
| | | learns something new | OEffect |
| | If-Event-Then-Persona | interested | XAttr |
| Suddenly, she woke up in pain. | If-Event-Then-Mental-State | to feel better | XIntent |
| | | hurt | XReact |
| | | worried | OReact |
| | If-Event-Then-Event | to have had a nightmare | XNeed |
| | | cries | XEffect |
| | | to go to the bathroom | XWant |
| | | to make sure she is ok | OWant |
| | If-Event-Then-Persona | hurt | XAttr |

| Method | Single Metric | | | Overall Metric |
|---|---|---|---|---|
| | BLEU | BERT | ENTScore | HMEAN |
| Human | 64.76 | 78.82 | 80.56 | 71.8 |
| GPT2 | 1.39 | 47.13 | 54.21 | 2.7 |
| GPT2+FT | 3.9 | 53.00 | 52.77 | 7.26 |
| DELOREAN | 23.89 | 59.88 | 51.4 | 32.62 |
| EDUCAT | 44.05 | 74.06 | 32.28 | 37.26 |
| CLICK(−0.2−w/o−kno) | 62 | 78.2 | 29.35 | 39.84 |
| CLICK(−w/o−ske) | 2.5 | 51.3 | 55.1 | 4.8 |
| CLICK(Final) | 46.7 | 73.2 | 36.7 | 41.1 |
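
The definition of the overall metric does not survive in this text, but the HMEAN values reported above are reproduced, up to rounding, by the harmonic mean of BLEU and ENTScore. The Python sketch below checks this under that assumption; the function name `hmean` and the `rows` dictionary are illustrative, and the numbers are copied from the results table.

```python
def hmean(bleu: float, ent_score: float) -> float:
    """Harmonic mean of BLEU and ENTScore (assumed definition of HMEAN)."""
    total = bleu + ent_score
    return 2.0 * bleu * ent_score / total if total else 0.0


# (BLEU, ENTScore, reported HMEAN) taken from the results table.
rows = {
    "Human": (64.76, 80.56, 71.8),
    "DELOREAN": (23.89, 51.4, 32.62),
    "EDUCAT": (44.05, 32.28, 37.26),
    "CLICK(Final)": (46.7, 36.7, 41.1),
}

for method, (bleu, ent, reported) in rows.items():
    print(f"{method}: computed {hmean(bleu, ent):.2f}, reported {reported}")
```

Because the harmonic mean is dominated by the smaller of its two inputs, a method with high BLEU but low ENTScore (or the reverse) still receives a low overall score.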

| Ablation | Single Metric | | | Overall Metric |
|---|---|---|---|---|
| | BLEU | BERT | ENTScore | HMean |
| Full CLICK | 46.7 | 73.2 | 36.7 | 41.1 |
| w/o skeleton | 2.5 (↓ 44.2) | 51.3 | 55.1 | 4.8 |
| w/o knowledge | 46.1 | 72.8 | 35.46 (↓ 1.24) | 40.07 |
| w/o ske w/o kno | 1.39 | 47.13 | 54.21 | 2.7 (↓ 38.4) |

| Knowledge Type | Relation | Single Metric | | | Overall Metric |
|---|---|---|---|---|---|
| | | BLEU | BERT | ENTScore | HMean |
| w/o knowledge | | 46.1 | 72.8 | 35.46 | 40.07 |
| If-Event-Then-Mental-State | xIntent | 46.49 | 73.20 | 35.72 | 40.40 |
| | xReact | 45.70 | 73.00 | 35.10 | 39.70 |
| | xIntent+xReact | 45.93 | 73.00 | 37.10 | 41.00 |
| If-Event-Then-Event | xEffect | 47.21 | 73.53 | 34.37 | 39.81 |
| | xWant | 44.10 | 72.20 | 35.84 | 39.51 |
| | xNeed | 45.62 | 72.96 | 36.72 | 40.69 |
| | xEffect+xWant+xNeed | 46.00 | 73.17 | 36.93 | 40.96 |
| If-Event-Then-Others | oReact | 46.70 | 73.00 | 34.90 | 40.00 |
| | oEffect | 46.70 | 73.40 | 35.90 | 40.60 |
| | oWant | 47.00 | 73.30 | 35.50 | 40.30 |
| | oReact+oEffect+oWant | 46.10 | 73.20 | 34.73 | 39.60 |
| If-Event-Then-Persona | xAttr | 46.70 | 73.20 | 36.70 | 41.10 |
| Nine kinds of knowledge | | 46.67 | 72.90 | 36.15 | 40.74 |

| Case 1 | |
|---|---|
| Premise | The day was sunny and warm, a perfect day for a picnic. |
| Orig Condition | Mom, James, and Renee went to the park. |
| CF condition | Rain started to fall. |
| Orig Ending | First they went for a walk. Then they had a picnic by the river. They all had a good time. |
| CF Ending | They found a covered seating area. Then they had a picnic there. They all had a good time. |
| Methods | Generated Counterfactual Ending |
| EDUCAT | So I went for a walk. Yes, I had a picnic by the river. I had a great time. (Logical Incoherence) |
| Sketch&Customize | Rain was then followed by a thunderstorm. All the picnic food was soaked. Then it was a cold day. (Overediting) |
| CLICK | First they found a rainbow. Then they had a great lunch by the window. They all had a good time. |

| Case 2 | |
|---|---|
| Premise | Tom was making some pasta. |
| Orig Condition | He boiled some water. |
| CF condition | He took all of the ingredients out of the pantry. |
| Orig Ending | He left the kitchen to answer an important phone call. When he came back there was water all over the ground. He turned off the stove and cleaned up the kitchen. |
| CF Ending | He left the kitchen to answer an important phone call. When he came back the dog had knocked everything over. He picked up the food and cleaned up the kitchen. |
| Methods | Generated Counterfactual Ending |
| EDUCAT | He left the kitchen to take an urgent phone call. When he got home, there was water all over the ground. He turned off the water and left the kitchen. (Low Counterfactual Consistency) |
| Sketch&Customize | He sat down to answer an important phone call. When he came back he was the ground. Tom turned off the oven to start it up. (Logical Incoherence) |
| CLICK | He left the kitchen to answer an important phone call. When he came back he found there was mold all over the pasta. He cleaned off the mess and cleaned up the kitchen. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, D.; Guo, Z.; Liu, Q.; Jin, L.; Zhang, Z.; Wei, K.; Li, F. CLICK: Integrating Causal Inference and Commonsense Knowledge Incorporation for Counterfactual Story Generation. Electronics 2023, 12, 4173. https://doi.org/10.3390/electronics12194173