Abstract

To achieve an acceptable level of security on the web, the Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA) was introduced as a tool to prevent bots from performing destructive actions such as downloading or signing up. Smartphones have small screens, and, therefore, using the common CAPTCHA methods (e.g., text CAPTCHAs) on these devices raises usability issues. To introduce a reliable, secure, and usable CAPTCHA that is suitable for smartphones, this paper introduces a hand gesture recognition CAPTCHA based on applying genetic algorithm (GA) principles on a Multi-Layer Perceptron (MLP). The proposed method improves the performance of MLP-based hand gesture recognition. It has been trained and evaluated on 2201 videos of the IPN Hand dataset, and the MSE and RMSE benchmarks report index values of 0.0018 and 0.0424, respectively. A comparison with the related works shows a minimum of 1.79% fewer errors, and experiments produced a sensitivity of 93.42% and an accuracy of 92.27%, which are improvements of 10.25% and 6.65%, respectively, compared to the MLP implementation. The range of the supported hand gestures can be a limit for the application of this research, as a limited range may result in a vulnerable CAPTCHA. Also, the processes of training and testing require significant computational resources. In the future, we will optimize the method to run with high reliability in various illumination conditions and with different skin colors and tones. The next development plan is to use augmented reality and create unpredictable random patterns to enhance the security of the method.

1. Introduction

The Internet has turned into an essential requirement of any modern society, and almost everybody around the world uses it for daily life activities, e.g., communication, shopping, research, etc. The growing number of Internet applications, the large volume of the exchanged information, and the diversity of services attract the attention of people wishing to gain unauthorized access to these resources, e.g., hackers, attackers, and spammers. These attacks highlight the critical role of information security technologies to protect resources and limit access to only authorized users. To this end, various preventive and protective security mechanisms, policies, and technologies are designed [1,2].

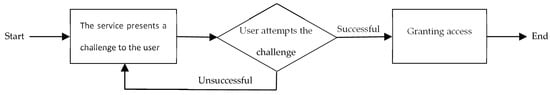

Approximately a decade ago, the Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA) was introduced by Ahn et al. [3] as a challenge-response authentication measure. The challenge, as illustrated in Figure 1, is an example of Human Interaction Proofs (HIPs) to differentiate between computer programs and human users. The CAPTCHA methods are often designed based on open and hard Artificial Intelligence (AI) problems that are easily solvable for human users [4].

Figure 1.

The structure of the challenge-response authentication mechanism.

As attempts for unauthorized access increase every day, the use of CAPTCHA is needed to prevent the bots from disrupting services such as subscription, registration, and account/password recovery, running attacks like spamming blogs, search engine attacks, dictionary attacks, email worms, and block scrapers. Online polling, survey systems, ticket bookings, and e-commerce platforms are the main targets of the bots [5].

The critical usability issues of initial CAPTCHA methods pushed the cybersecurity researchers to evolve the technology towards alternative solutions that alleviate the intrinsic inconvenience with a better user experience, more interesting designs (i.e., gamification), and support for disabled users. The emergence of powerful smartphones motivated the researchers to design gesture-based CAPTCHAs that are a combination of cognitive, psychomotor, and physical activities within a single task. This class is robust, user-friendly, and suitable for people with disabilities such as hearing and in some cases vision impairment. A gesture-based CAPTCHA works based on the principles of image processing to detect hand motions. It analyzes low-level features such as color and edge to deliver human-level capabilities in analysis, classification, and content recognition.

Recently, outstanding advances have been made in hand gesture recognition. The image processing techniques perform segmentation and feature extraction on the input images and detect the hand pose, fingertips, and hand palm in real-time. During the past twenty years, the researchers used color or wired gloves equipped with sensors to detect hand poses. However, the skin-color-based analysis does not require a paper cover or sensors in order to detect fingers and works fast, accurately, and in real-time.

To detect the hand gesture, the algorithm should first transform the video frames into two-dimensional images, and then apply segmentation and skin filter functions. In this area, the researchers have presented various methods that utilize machine learning techniques such as support vector machines (SVM) [6], artificial neural networks (ANN) [7], fuzzy systems [8], deep learning (DL) [9], and metaheuristic methods [10].

The main contribution of this paper is the development of a novel hand-gesture-controlled CAPTCHA for smartphones, called Gestural CAPTCHA (GAPTCHA), that is easy to use even for people with visual challenges. Choosing a set of easy-to-imitate hand gestures enhances the usability of the method and minimizes the number of reattempts. The originality of the method is extended by detecting the bending angle of human fingers by applying genetic algorithm (GA) principles on a Multi-Layer Perceptron (MLP). The steps include detecting the palm center and fingertips, measuring the distance between the palm center and each fingertip, and calculating the bending degree of the fingers based on these distances, from which the hand gesture is recognized. The target application of GAPTCHA is a secure method for differentiating bots from genuine users on smartphones.

In what follows, Section 2 reviews the literature of different CAPTCHA classes: visual, non-visual, and miscellaneous methods. Section 3 presents the abbreviations and notations used throughout the paper. Section 4 elaborates on the structure of the proposed method, including the processes of hand detection based on skin color and of detecting the hand pose, palm, fingertips, and fingers’ bend angle. Section 5 covers implementation and experiments. Analysis, comparison, and discussion are located in Section 6, and the work is concluded in Section 7.

2. Background and Related Works

The increasing popularity of the web has made it an attractive ground for hackers and spammers, especially where there are financial transactions or user information [1]. CAPTCHAs are used to prevent bots from accessing the resources designed for human use, e.g., login/sign-up procedures, online forms, and even user authentication [11]. The general applications of CAPTCHAs include preventing comment spamming, accessing email addresses in plaintext format, dictionary attacks, and protecting website registration, online polls, e-commerce, and social network interactions [1].

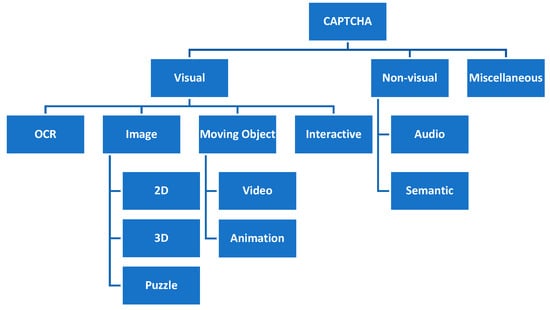

Based on the background technologies, CAPTCHAs can be broadly classified into three classes: visual, non-visual, and miscellaneous [1]. Each class then may be divided into several sub-classes, as illustrated in Figure 2.

Figure 2.

CAPTCHA classification.

The first class is visual CAPTCHA, which challenges the user on its ability to recognize the content. Despite the usability issues for visually impaired people, this class is easy to implement and straightforward.

Text-based or Optical Character Recognition (OCR)-based CAPTCHA is the first sub-class of visual CAPTCHAs. While the task is easy for a human, it challenges the automatic character recognition programs by distorting a combination of digits and letters. Division, deformation, rotation, color variation, size variation, and distortion are some examples of techniques used to make the text unrecognizable for the machine [12]. BaffleText [12], Pessimal Print [13], GIMPY and Ez–Gimpy [14], and ScatterType [15] are some of the proposed methods in this area. Google reCAPTCHA v2 [16], Microsoft Office, and Yahoo! Mail are famous examples of industry-designed CAPTCHAs [17]. Bursztein et al. [18] designed a model to measure the security of text-based CAPTCHAs. They identified several areas of improvement and designed a generic solution.

Due to the complexity of object recognition tasks, the second sub-class of visual CAPTCHAs is designed on the principle of the semantic gap [19]: people can understand more from a picture than a computer can. Among the various designed image-based CAPTCHAs, ESP-Pix [3], Imagination [20], Bongo [3], ARTiFACIAL [21], and Asirra [22] are the more advanced ones. The image-based CAPTCHAs have two variations: 2D and 3D [23]. The general design of the methods in this sub-class is 2D; however, some methods utilize 3D images (of characters), as 3D images are easier for humans to recognize. Another type of image-based CAPTCHA is a puzzle game designed by Gao et al. [24]. The authors used variations in color, orientation, and size, and they could successfully enhance the security. They show that puzzle-based CAPTCHAs need less time than text-based ones. An extended version of [24] that uses animation effects was later developed in [25].

Moving object CAPTCHA is a relatively new trend that shows a video and asks the users to type what they have perceived or seen [26]. Despite being secure, this method is very complicated and expensive compared to the other solutions.

The last sub-class of visual CAPTCHAs, interactive CAPTCHA, tries to increase user interactions to enhance security. This method requires more mouse clicks, drag, and similar activities. Some variations request the user to type the perceived or identified response. Except for the users that have some sort of disabilities, solving the challenges is easy for humans but hard for computers. The methods designed by Winter-Hjelm et al. [27] and Shirali-Shahreza et al. [28] are two interactive CAPTCHA examples.

The second CAPTCHA class contains non-visual methods in which the user is assessed based on audio and semantic tests. The audio-based methods are subjected to speech recognition attacks, while the semantic-based methods are very secure, and it is much harder for them to be cracked by computers. Moreover, semantic-based methods are relatively easy for users, even the ones with hearing or visual impairment. However, the semantic methods might be very challenging for users with cognitive deficiencies [1].

The first audio-based CAPTCHA method was introduced by Chan [29], and later other researchers such as Holman et al. [30] and Schlaikjer [31] proposed more enhanced methods. A relatively advanced approach in this area is to ask the user to repeat the played sentence. The recorded response is then analyzed by a computer to check if the recorded speech is made by a human or a speech synthesis system [28]. Limitations of this method include requiring equipment such as a microphone and speaker and being difficult for people with hearing and speech disabilities.

Semantic CAPTCHAs are a relatively secure CAPTCHA sub-class, as computers are far behind the capabilities of humans in answering semantic questions. However, they are still vulnerable to attacks that use computational knowledge engines, e.g., search engines or Wolfram Alpha. The implementation cost of semantic methods is very low as they are normally presented in a plaintext format [1]. The works by Lupkowski et al. [32] and Yamamoto et al. [33] are examples of semantic CAPTCHAs.

The third CAPTCHA class utilizes diverse technologies, sometimes in conjunction with visual or audio methods, to present techniques with novel ideas and extended features. Google’s reCAPTCHA is a free service [16] for safeguarding websites from spam or unauthorized access. The method works based on adaptive challenges and an advanced risk analysis system to prevent bots from accessing web resources. In reCAPTCHA, the user first needs to click on a checkbox. If it fails, the user then needs to select specific images from the set of given images. Google, in 2018, released the third version, i.e., reCAPTCHA v3 or NoCAPTCHA [34]. This version monitors the user’s activities and reports the probability of them being human or robot without needing the user to click the “I’m not a robot” checkbox. However, NoCAPTCHA is vulnerable to some technical issues such as erasing cookies, blocking JavaScript, and using incognito web browsing.

Yadava et al. [35] designed a method that displays the CAPTCHA for a fixed time and refreshes it until the user enters the correct answer. The refreshment only changes the CAPTCHA, not the page, and the defined time makes cracking it harder for the bots.

Wang et al. [36] put forward a graphical password scheme based on CAPTCHAs. The authors claimed that it can strengthen security by enhancing the capability to resist brute force attacks, withstand spyware, and reduce the size of password space.

Solve Media presented an advertisement CAPTCHA method in which the user should enter a response to the shown advertisement to pass the challenge. This idea can be extended to different areas, e.g., education or culture [1].

A recent trend is to design personalized CAPTCHAs that are specific to the users based on their conditions and characteristics. In this approach, an important aspect is identifying the cognitive capabilities of the user that must be integrated into the process of designing the CAPTCHA [37]. Another aspect is personalizing the content by considering factors such as geographical location to prevent third-party attacks. As an example, Wei et al. [38] developed a geographic scene image CAPTCHA that combines Google Map information and an image-based CAPTCHA to challenge the user with information that is privately known.

Solving a CAPTCHA on a small screen of a smartphone might be a hassle for the users. Jiang et al. [39] found that wrong touches on the screen of a mobile phone decrease the CAPTCHA performance. Analysis of the user’s finger movements in the back end of the application can help in differentiating between a bot and a human [40]. For example, by analyzing the sub-image dragging pattern, their method can detect whether the action is “BOTish” or “HUMANish”. Some similar approaches, like [41,42], challenge the user to find segmentation points in cursive words.

In the area of hand gesture recognition (HGR), the early solutions used a data glove, hand belt, and camera. Luzhnica et al. [43] used a hand belt equipped with a gyroscope, accelerometer, and Bluetooth for HGR. Hung et al. [44] acquired the required input data from hand gloves. Another research work used the Euclidean distance for analyzing twenty-five different hand gestures and employed an SVM for classification and controlling tasks [45]. In another effort [46], the researchers converted the captured red, green, and blue (RGB) images to grayscale, applied a Gaussian filter for noise reduction, and fed the results to a classifier to detect the hand gesture.

Chaudhary et al. [47] used a normal Windows-based webcam for recording user gestures. Their proposed method extracts the region of interest (ROI) from frames and applies a hue-saturation-value (HSV)-based skin filter on RGB images in particular illumination conditions. To help the fingertip detection process, the method analyzes hand direction according to pixel density in the image. It also detects the palm based on the number of pixels located in a 30 × 30-pixel mask on the cropped ROI image. The method has been implemented by a supervised neural network based on the Levenberg–Marquardt algorithm and uses eight thousand samples for all fingers. The architecture of the method has five input layers for five input finger poses and five output layers for the bent angle of the fingers. Marium et al. [48] extracted the hand gestures, palms, fingers, and fingertips from webcam videos using the functions and connectors in OpenCV.

Simion et al. [49] researched finger pose detection for mouse control. Their method, in the first step, detects the fingertips (except the thumb) and palm hand. Its second step is to calculate the distances between the pointing and middle fingers to the center of the palm and the distance between the tips of the pointing and middle fingers. The third step is to compute the angles between the two fingers, between the pointing finger and the x-axis, and between the middle finger and the x-axis. The researchers used six hand poses for mouse movements including right/left click, double click, and right/left movement.

3. Abbreviations and Notations

This research uses various equations and a substantial number of abbreviations in the areas of image processing and machine learning. In order to keep the language consistent and clear throughout the paper, Table 1 lists and briefly explains the abbreviations and notations used.

Table 1.

Abbreviations and notations.

4. The Proposed Method

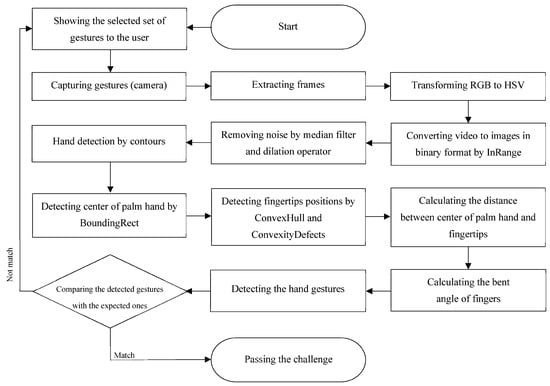

Gestural CAPTCHA, hereafter called GAPTCHA, shows a set of simple hand poses to the user and then requests the user to imitate these gestures in front of the camera. As illustrated in Figure 3, in this method, the essence of recognizing the gestures is calculating the fingers’ bent angles. The method preprocesses and applies a skin filter on the input 2D image. The employed segmentation method extracts the hand pose from an image even if the background contains skin-colored objects. It focuses only on the extracted areas of interest in the image for faster and more accurate analysis. The method first detects the fingertips and palm in the segmented image and then calculates the distance between the center of the palm and each fingertip. It calculates the bending angle of fingers based on the distances and detects fingers, palm, and angles with no errors. The proposed method can work with a normal quality camera for capturing the user’s hand movements, and no marked gloves, wearable sensors, or even a long sleeve is required. It also has no limitation for the camera angle.

Figure 3.

The GAPTCHA process.

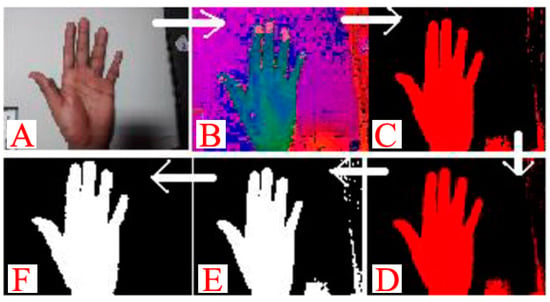

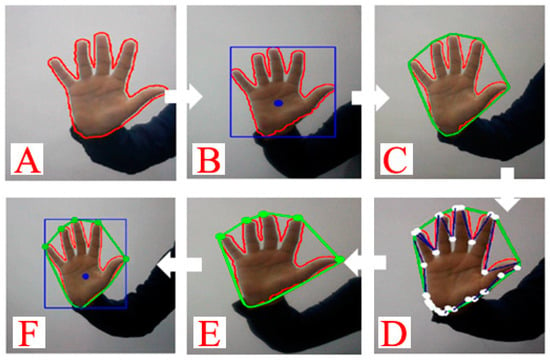

The most famous segmentation method in HGR systems is skin color as it is invariant to changes like size, rotation, and movements. The captured webcam images are in an RGB color system, and the involved parameters in this format are highly correlated and sensitive to illumination. However, the HSV system separates the color and illumination information. Therefore, in skin-color-based HGR systems, the captured RGB images are first converted to the HSV system and then segmented to generate the binary version of the image. Each pixel of a binary image is stored in one bit, and due to the skin-colored pixels in the background, these images normally carry some noise. As shown in Figure 4, this noise creates unwanted spots in the image. A median filter then removes the isolated spots and a dilation operator fills the regions.
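The segmentation pipeline described above (RGB to HSV conversion, skin thresholding into a binary image, median filtering, and dilation) can be illustrated with a minimal sketch. The paper's implementation uses OpenCV in C++; the plain-Python version below is only an illustration, and the HSV thresholds (`H_MAX`, `S_MIN`, `V_MIN`), the 3 × 3 window, and all function names are assumptions rather than values taken from the paper.

```python
import colorsys

# Hypothetical skin range in HSV (assumed for illustration, not from the paper).
H_MAX = 50 / 360   # hue upper bound, as a fraction of the color wheel
S_MIN = 0.15       # minimum saturation
V_MIN = 0.35       # minimum brightness

def skin_mask(rgb_image):
    """Binary mask (1 = skin-colored) for rows of (r, g, b) pixels in 0..255."""
    mask = []
    for row in rgb_image:
        out = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            out.append(1 if (h <= H_MAX and s >= S_MIN and v >= V_MIN) else 0)
        mask.append(out)
    return mask

def _window(mask, y, x):
    """Values in the 3x3 neighborhood of (y, x), clipped at the image border."""
    height, width = len(mask), len(mask[0])
    return [mask[j][i]
            for j in range(max(0, y - 1), min(height, y + 2))
            for i in range(max(0, x - 1), min(width, x + 2))]

def median_filter(mask):
    """Replace each pixel by the median of its neighborhood (removes isolated spots)."""
    result = []
    for y in range(len(mask)):
        row = []
        for x in range(len(mask[0])):
            values = sorted(_window(mask, y, x))
            row.append(values[len(values) // 2])
        result.append(row)
    return result

def dilate(mask):
    """Set a pixel to 1 if any pixel in its neighborhood is 1 (fills regions)."""
    return [[max(_window(mask, y, x)) for x in range(len(mask[0]))]
            for y in range(len(mask))]
```

Applying `dilate(median_filter(skin_mask(image)))` follows the order of operations given in the text: isolated skin-colored background pixels are removed first, and the remaining hand region is then filled.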

Figure 4.

An example of producing a binary image: (A) original images, (B) HSV conversion, (C) filtered image, (D) smooth image, (E) binary image, and (F) noise-free image [50].

The proposed method supports freedom of angle for the camera and user, and the only requirement is that the palm faces the camera. The method uses contours to extract the hand image from the binary image. A contour is a list of the points that represent a curve in the image, and its main application is in the analysis and detection of shapes. Here, FindContours in OpenCV is used to find the largest contour in the binary image, i.e., the hand shape shown in Figure 5A. Finding the center of the contour helps in finding the center of the palm (Figure 5B). To this end, using the BoundingRect function in OpenCV, a bounding box is applied to the hand shape to find the palm center.
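As a rough illustration of this step, the sketch below keeps the largest connected foreground region of a binary mask and takes the center of its bounding box as the palm center estimate. It is a simplified stand-in for the FindContours and BoundingRect calls named in the text, not the paper's code; the function names and the 4-connectivity choice are assumptions.

```python
from collections import deque

def largest_region(mask):
    """Return the pixels (y, x) of the largest 4-connected foreground region."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    best = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from this unvisited foreground pixel.
            region, queue = [], deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    return best

def bounding_box_center(region):
    """Center (x, y) of the axis-aligned bounding box around the region."""
    ys = [p[0] for p in region]
    xs = [p[1] for p in region]
    return ((min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2)
```

Keeping only the largest region mirrors the text's choice of the largest contour, which discards smaller skin-colored blobs that survive the noise filtering.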

Figure 5.

(A) Hand contour, (B) palm hand, (C) ConvexHull polygon, (D) ConvexityDefects, (E) fingertips, and (F) palm hand and fingertips.

The ConvexHull algorithm is employed to identify fingertips. It returns a set of polygons in which the corners of the largest one represent the fingertips (Figure 5C). To automate the process, the ConvexityDefects function approximates the gaps between the contour and the polygon by straight lines. The output of this function is multiple records of four fields: (i) the starting defect point, (ii) the ending defect point, (iii) the middle (farthest) defect point that connects the starting and ending points, and (iv) the approximate distance to the farthest point. A sample output of this step is shown in Figure 5D. Each record results in two lines: a line from the starting point to the middle point, and a line from the middle point to the endpoint. However, the function may return more points than the number of fingertips. The steps for filtering the detected points and finding the correct location of fingertips include (i) checking that the internal angle of a defect area lies within a certain range, (ii) checking that the angle between the starting point and the contour center lies within a certain range, and (iii) checking that the line length remains below the defined threshold. The green points in Figure 5E represent the results of this step.
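To make the role of the convex hull concrete, the following sketch computes the hull of a small point set with Andrew's monotone chain algorithm. This is a generic textbook algorithm used here for illustration only; it is not claimed to be the algorithm behind OpenCV's ConvexHull function, and the sample coordinates are invented.

```python
def cross(o, a, b):
    """Z-component of the cross product of vectors OA and OB."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def convex_hull(points):
    """Convex hull of 2D points, in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower = []
    for p in pts:
        # Drop points that would make a clockwise (or straight) turn.
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper = []
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other.
    return lower[:-1] + upper[:-1]

# Invented outline: four outer corners and two inner "valley" points.
outline = [(0, 0), (4, 0), (4, 4), (0, 4), (2, 2), (2, 3)]
hull = convex_hull(outline)
```

In a hand contour, points such as fingertips lie on the hull, while the valleys between fingers fall inside it; those inner gaps are what the convexity defects describe.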

Equations (1)–(3) are utilized to calculate the lengths of the vectors produced from the start, middle, and end points, and Equation (4) computes the angle from these three lengths. The start, middle, and end points are those returned by the ConvexityDefects function.
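A common way to obtain an angle from three such lengths is the law of cosines, and the sketch below assumes that this is what the angle computation amounts to; the exact form of Equations (1)–(4) is not reproduced in this text, so the function name, argument order, and the choice of the angle at the middle point are assumptions.

```python
import math

def defect_angle(start, far, end):
    """Angle in degrees at the middle (farthest) point of a convexity defect.

    Assumes the law of cosines applied to the triangle start-far-end.
    """
    a = math.dist(start, end)   # side opposite the angle at the middle point
    b = math.dist(start, far)   # line from the start point to the middle point
    c = math.dist(far, end)     # line from the middle point to the end point
    cos_theta = (b * b + c * c - a * a) / (2 * b * c)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))
```

Under this assumption, a narrow angle at the middle point corresponds to the gap between two adjacent fingers, while a wide angle suggests a defect that is not a finger valley.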

Calculating the angle between the first point of the defect area and the center of the contour is an essential step for removing the non-fingertip areas. The subsequent step is to keep only the points whose angle lies between −30 and +160 degrees. Equation (5) returns the angle between the first point of the defect area and the center of the contour, where center denotes the center of the contour, i.e., the center of the palm. Equation (6) calculates the Euclidean distance, i.e., the vector length, between the first and the middle points. The whole process from finding the hand contour to detecting the palm and fingertips is illustrated in Figure 5.
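The filtering described here can be sketched as follows. The −30 to +160 degree range comes from the text; the use of `atan2`, the flipped image y-axis, the function names, and the `max_length` threshold value are assumptions made only for illustration.

```python
import math

def angle_from_center(point, center):
    """Angle in degrees of `point` as seen from `center`.

    Image rows grow downward, so dy is flipped to make "up" positive
    (an assumed convention).
    """
    dx = point[0] - center[0]
    dy = center[1] - point[1]
    return math.degrees(math.atan2(dy, dx))

def is_fingertip_candidate(start, far, center, max_length):
    """Keep a defect start point if its angle and line length pass the filters."""
    angle_ok = -30.0 <= angle_from_center(start, center) <= 160.0
    # Euclidean distance between the first and the middle points.
    length_ok = math.dist(start, far) <= max_length
    return angle_ok and length_ok
```

With the palm facing the camera and the fingers pointing upward, points below the palm center, such as those on the wrist, fall outside the angular range and are discarded.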

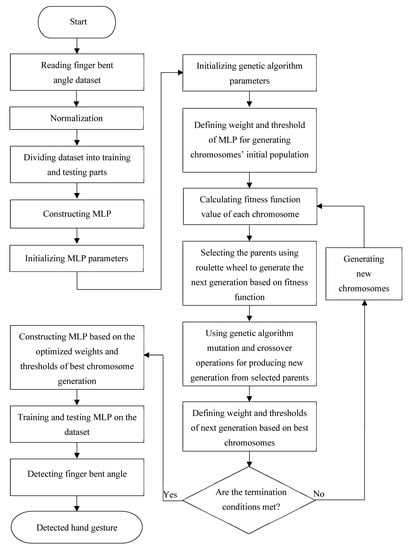

As already explained, this research uses a combination of MLP and GA to predict the bent angle of fingers. The used MLP has a flexible structure in which neurons are located in hidden layers and each neuron can influence its input and produce the desired output. To this end, a weight applies to the input value of each neuron and the result passes into an activation function together with a bias value. Reducing classification errors and correct prediction of the pose in an artificial neural network depends on selecting optimum weight and bias values. Figure 6 illustrates the detailed structure of the proposed method for applying GA principles on MLP to accurately detect the finger’s bent angle and accordingly recognize the hand gesture.
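The weighted-sum, bias, and activation mechanism described above can be sketched for a single hidden layer as follows. The sigmoid activation and four hidden neurons match the configuration reported later in the paper; the single output neuron, the argument layout, and the function names are assumptions.

```python
import math

def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the activation."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def mlp_forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    """Forward pass through one hidden layer and a single output neuron."""
    hidden = [neuron(inputs, w, b) for w, b in zip(hidden_weights, hidden_biases)]
    return neuron(hidden, output_weights, output_bias)
```

The weights and biases passed to `mlp_forward` are exactly the values that the genetic algorithm searches over in the proposed method.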

Figure 6.

The overall design process for hand gesture recognition by applying GA principles on MLP.

In GAPTCHA, each chromosome encodes the weights and biases of the neural network. A chromosome lists the weight of the ith entry into the jth neuron of the hidden layer, the output, and the threshold values of the hidden and output layers. Upon defining the encoding system and the method of converting each answer to a chromosome, the next step is to produce the initial chromosome population. Normally, generating the initial population is a random process; however, heuristic algorithms can accelerate and optimize it. GAPTCHA uses a roulette wheel mechanism for selecting parents in mutation and crossover processes. In this mechanism, the probability of selecting a chromosome depends on how suitable it is for the evaluation function. In other words, the higher the quality of the chromosome, the higher the chance of being selected for producing the next generation, and vice versa. Equation (7) calculates the chance of selecting a chromosome in the roulette wheel.

$$p_i = \frac{f_i}{\sum_{j=1}^{N} f_j} \qquad (7)$$

In the above equation, the probability $p_i$ of selecting the ith chromosome is the ratio of the fitness function value $f_i$ of chromosome i to the sum of the fitness function values of all $N$ chromosomes. A single-point crossover is used to apply crossover in this method. It chooses a random point in the chromosomes and swaps the information in the remaining parts. Mutation is then applied in the following steps:

- Choose a random value between 0 and 1 for the chromosome;

- Go to the next step and mutate if the number is bigger than the mutation threshold (a value between 0 and 1), otherwise skip mutation;

- Choose a random number that indicates one of the chromosome genes and make a numerical mutation on that gene.
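The three genetic operators described in this section (roulette wheel selection, single-point crossover, and threshold-based mutation) can be sketched as below. Chromosomes are represented as flat lists of weights and biases; the Gaussian perturbation, its `scale`, and all function names are assumptions, and the mutation condition follows the wording of the steps above.

```python
import random

def roulette_select(population, fitnesses, rng):
    """Pick one chromosome with probability proportional to its fitness."""
    pick = rng.uniform(0, sum(fitnesses))
    running = 0.0
    for chromosome, fitness in zip(population, fitnesses):
        running += fitness
        if pick <= running:
            return chromosome
    return population[-1]  # fallback for floating-point rounding

def single_point_crossover(parent_a, parent_b, rng):
    """Swap the tails of two equal-length chromosomes at a random point."""
    point = rng.randint(1, len(parent_a) - 1)
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b

def mutate(chromosome, threshold, rng, scale=0.1):
    """Perturb one random gene when the drawn number exceeds the threshold."""
    child = list(chromosome)
    if rng.random() > threshold:
        gene = rng.randrange(len(child))
        child[gene] += rng.gauss(0.0, scale)  # assumed form of numerical mutation
    return child
```

Because the method minimizes an error, a fitness such as `1 / (1 + mse)` would be one possible way to turn a lower error into a higher selection probability; this mapping is an assumption and is not stated in the paper.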

5. Implementation and Experiments

This research uses OpenCV functions and C++ for preprocessing, segmentation, and feature extraction from a video. The functions help to detect the center of the palm, the fingertips, and the bent angle of fingers. Matlab is the chosen platform due to its rich programming interface and metaheuristic libraries. The system specifications for running the experiments are an Intel Core i7 12th generation CPU, 16 GB RAM, and Windows 11 OS. Applying GA principles to MLP helps the method to enhance the prediction of fingers’ bent angles. The following are the steps taken to implement the proposed method:

- Defining the initial parameters such as population, number of iterations, mutation rate, and crossover rate of GA;

- Preprocessing and normalizing part of input data to reduce learning errors and enhance performance in hand movement detection;

- Dividing the dataset into training and evaluation parts;

- Producing chromosomes for MLP and applying mutation and crossover on the chromosomes to find the optimum weight and bias and reduce the error rate;

- Evaluating the method according to the metrics in Section 5.2.

5.1. Dataset

The selected dataset for training and evaluation of the proposed method is “IPN Hand”, produced by Benitez et al. [51], which consists of 5649 RGB videos recorded in the resolution of 640 × 480 at 30 fps. The dataset has two parts: 1431 non-gestural and 4218 gestural videos. Since the gestural section contains gesture categories that are not easy to imitate, considering the usability requirement of GAPTCHA, some video categories were removed from the selected dataset.

After filtering the videos, the resulting subset contains 200 videos for each of the following gestures: click with one finger, click with two fingers, throw up, throw left, throw right, open twice, double click with one finger, double click with two fingers, zoom in, and zoom out. It also contains 201 videos of throw down, giving a total of 2201 videos.

5.2. Evaluation Metrics

In the proposed method, each hand pose is considered as a class and is defined by a set of features. Therefore, this research addresses a multiclass optimization problem. The proposed method classifies each given sample into its corresponding class and tries to minimize the average learning and classification errors. The Mean Square Error (MSE) and Root Mean Square Error (RMSE) are the chosen goal test measures and can be calculated using Equations (8) and (9). MSE is a measure to check how close the estimates are to the actual values, and RMSE indicates how far the model’s predicted values lie from the observed data points. In these equations, n represents the number of samples, and the real and predicted values of an instance with index i are shown by $y_i$ and $\hat{y}_i$, respectively.

$$\mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2 \qquad (8)$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2} \qquad (9)$$
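The two error measures follow their standard definitions and can be computed as in the short sketch below; the function and argument names are illustrative only.

```python
import math

def mse(actual, predicted):
    """Mean of the squared differences between real and predicted values."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Square root of the mean squared error."""
    return math.sqrt(mse(actual, predicted))
```

Since RMSE is the square root of MSE, the two reported values can be cross-checked: the square root of the reported MSE of 0.0018 is approximately 0.0424, which agrees with the reported RMSE.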

Two other important parameters for evaluating the applicability of the proposed algorithm are sensitivity and accuracy. Sensitivity is the proportion of true positive detections to the sum of true positives and false negatives (Equation (10)), and accuracy is the ratio of truly detected positives and negatives to all detections (Equation (11)).
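Both ratios can be computed directly from the confusion counts, as in the following minimal sketch; the function names and the sample counts in the usage lines are illustrative and are not results from the paper.

```python
def sensitivity(tp, fn):
    """True positives divided by the sum of true positives and false negatives."""
    return tp / (tp + fn)

def accuracy(tp, tn, fp, fn):
    """Correct detections (positive and negative) divided by all detections."""
    return (tp + tn) / (tp + tn + fp + fn)

# Illustrative counts only.
example_sensitivity = sensitivity(tp=8, fn=2)
example_accuracy = accuracy(tp=8, tn=5, fp=3, fn=2)
```

For a multiclass problem such as this one, the counts would typically be gathered per class (one gesture against all others) and then averaged, although the paper does not state which averaging scheme was used.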

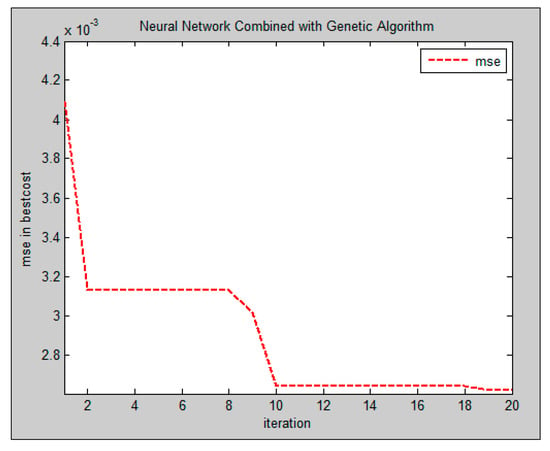

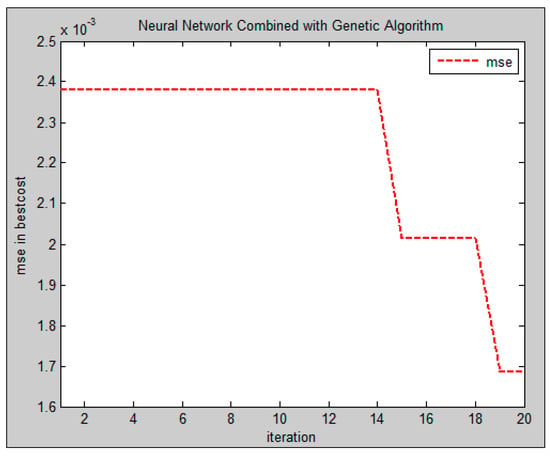

5.3. Error Analysis by Increasing Population and Iterations

The experiments were conducted with two population sizes, five and ten. The number of iterations was set to 20, and the mutation and crossover ratios were defined as 0.15 and 0.5, respectively. A chromosome is a two-layer MLP in which each layer has four neurons. The MSE prediction errors of both populations are reported in Figure 7 and Figure 8.

Figure 7.

Prediction error rate with 5 chromosomes in the proposed method.

Figure 8.

Prediction error rate with 10 chromosomes in the proposed method.

The reported values in Figure 7 and Figure 8 show that increasing the number of chromosomes reduces the MSE for hand pose detection. This happens for the following reasons:

- Increasing the number of chromosomes increases the number of neural networks for prediction and results in a more accurate classification;

- Increasing the population produces a larger and more diverse set of test ratios in GA, and accordingly increases the chance of finding a final and accurate answer;

- Increasing chromosomes in GA increases the problem search space, which normally results in reaching optimum answers and reducing error;

- Increasing the population increases the number of elite members and enhances the probability of mutation and crossover. This leads to increasing the chance of having a more accurate MLP for hand pose prediction.

Referring to Figure 8 and Figure 9, the MSE is inversely related to the number of iterations. Increasing the number of iterations gives the chromosomes (the candidate solutions in the genetic algorithm) more opportunities to choose optimal ratios in the MLP architecture, which results in lower MSE values. Therefore, tuning the number of iterations during the optimization process can significantly affect the performance and accuracy of the GAPTCHA system for hand gesture recognition.

Figure 9.

Comparing MSE of the proposed method and MLP implementation.

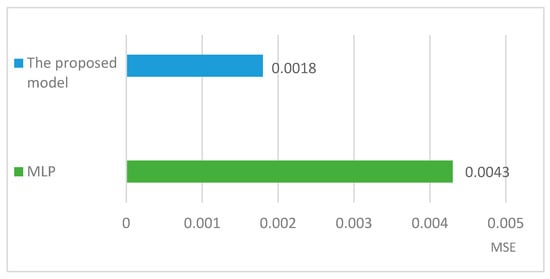

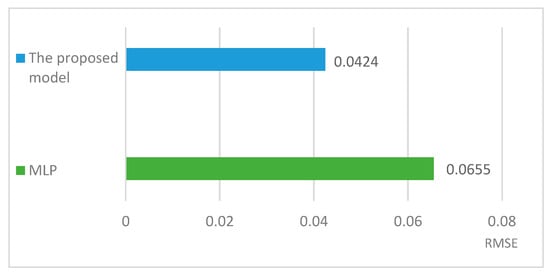

6. Evaluation

The proposed GA-based MLP method has been compared to a standard MLP implementation using several parameters and evaluation metrics. Both the proposed method and the standard MLP have four hidden neurons, a sigmoid activation function, a population size of 15, 30 iterations, a mutation rate of 0.15, and a crossover rate of 0.5. Additionally, 75% of the dataset was used for training, and the remaining 25% was used for testing. To evaluate the performance, two different metrics, namely Mean Squared Error (MSE) and Root Mean Squared Error (RMSE), were used. Figure 9 illustrates the MSE difference between the proposed GA-based MLP method and the standard MLP implementation, highlighting the impact of the genetic algorithm optimization on reducing the error. On the other hand, Figure 10 compares the two methods in terms of the RMSE benchmark, providing insights into their relative performance and accuracy.

Figure 10.

Comparing RMSE of the proposed method and MLP implementation.

The experiments and analysis of the MSE and RMSE results demonstrate that the proposed method has higher accuracy compared to the MLP implementation. For the proposed method, the MSE and RMSE index values are 0.0018 and 0.0424, while for MLP these increase to 0.0043 and 0.0655, respectively. In other words, applying GA in the proposed method reduces MSE by 58.13% and RMSE by 35.26%. These lower error rates indicate that using GA decreases the hand gesture detection errors and boosts the learning process.
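The reported reductions are relative to the MLP baseline and can be reproduced from the four error values given above. The helper name below is illustrative.

```python
def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline * 100

mse_reduction = relative_reduction(0.0043, 0.0018)   # MLP vs. proposed method
rmse_reduction = relative_reduction(0.0655, 0.0424)  # MLP vs. proposed method
```

Both results match the reported 58.13% and 35.26% to within 0.01 percentage points; the small difference depends on whether the last digit is rounded or truncated.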

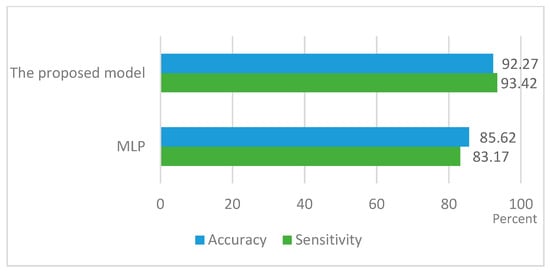

A comprehensive evaluation of sensitivity and accuracy was performed through 50 experiments, using the configurations specified earlier. Figure 11 illustrates the outcomes, showing that the proposed method achieved a sensitivity of 93.42%, significantly surpassing the 83.17% sensitivity of the standard MLP implementation. Regarding accuracy, the proposed method outperformed the MLP by 6.65%, achieving an impressive accuracy of 92.27% compared to 85.62%. These results provide compelling evidence that the combination of genetic algorithm (GA) and Multi-Layer Perceptron (MLP) in the proposed approach yields more reliable and robust performance in detecting human hand gestures. The proposed method showcases a substantial improvement in both sensitivity and accuracy, highlighting its potential for enhancing security and usability in mobile CAPTCHA authentication and other gesture recognition applications.

Figure 11.

Comparing sensitivity and accuracy of the proposed method and MLP implementation.
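For reference, sensitivity and accuracy are computed from confusion-matrix counts as sketched below. The counts are hypothetical and serve only to illustrate the formulas; the text does not report the underlying counts. The last two lines recompute the percentage-point gains from the reported percentages.

```python
def sensitivity(tp, fn):
    """True positive rate: share of actual positives that were detected."""
    return tp / (tp + fn)

def accuracy(tp, tn, fp, fn):
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)

# Hypothetical counts, not taken from the paper
tp, fn, tn, fp = 90, 10, 85, 15
sens = sensitivity(tp, fn)
acc = accuracy(tp, tn, fp, fn)
print(f"sensitivity = {sens:.3f}, accuracy = {acc:.3f}")

# Percentage-point gains implied by the reported figures
sensitivity_gain = round(93.42 - 83.17, 2)
accuracy_gain = round(92.27 - 85.62, 2)
print(sensitivity_gain, accuracy_gain)
```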

To the best of our knowledge, no other video CAPTCHA algorithm is based on hand gesture detection. Therefore, the hand gesture detection component of GAPTCHA is compared with the similar algorithms in [52,53,54,55]. According to the RMSE results in Table 2, GAPTCHA outperforms the work of Redmon et al. [53] by a wide margin (34.7%). Compared with Li et al. [52], our algorithm produces 1.79% fewer errors and is more efficient. The work by Shull et al. [54] reports an RMSE of 0.11, and the method of Manoj et al. [55] reports 0.1732. The RMSE of GAPTCHA (0.0424) is therefore lower than these by 0.0676 and 0.1308, i.e., by 6.76 and 13.08 percentage points, respectively.

Table 2.

RMSE comparison in hand gesture detection.
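The margins quoted for Shull et al. [54] and Manoj et al. [55] are absolute RMSE differences expressed in percentage points, as the following check on the values stated in the text shows. Only the two baselines whose RMSE is given in the text are included; the variable names are illustrative.

```python
gaptcha_rmse = 0.0424  # RMSE of the proposed method, from the text
reported = {"Shull et al. [54]": 0.11, "Manoj et al. [55]": 0.1732}

# Absolute RMSE gap to each baseline, in percentage points
gaps = {name: round((rmse - gaptcha_rmse) * 100, 2) for name, rmse in reported.items()}
for name, gap in gaps.items():
    print(f"{name}: {gap} percentage points")
```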

7. Conclusions

The expansion of the Internet has created a wider attack surface for malicious automated programs (i.e., bots) to run attacks such as Denial of Service (DoS) on web services. The Completely Automated Public Turing test to tell Computers and Humans Apart (CAPTCHA) is an authentication mechanism that is able to distinguish human users from malicious computer programs.

Various types of CAPTCHAs have been designed for different applications, for example, to help people with disabilities or for use on specific platforms. Common CAPTCHA types (e.g., text-based) may not be convenient on smartphones because the devices are used on the move and have small screens. In this situation, a hand gesture recognition CAPTCHA can make it easier to pass a human authentication challenge on the device.

This research proposes a novel hand gesture recognition CAPTCHA for smartphones that applies genetic algorithm principles to an MLP to develop a reliable method called Gestural CAPTCHA, i.e., GAPTCHA. The method shows a set of hand gestures to the user for imitation. GAPTCHA then recognizes the user’s hand gestures using a set of hand pose features based on the distances between the fingertips and the center of the palm. Genetic algorithm principles such as concentrating on elite populations, mutation, and crossover are used to reduce the detection error rates. The experiments show 58.13% lower MSE and 35.26% lower RMSE in hand gesture recognition compared to the standard MLP implementation. The method also outperforms the compared related algorithms by at least 1.79%. In terms of sensitivity and accuracy, the proposed method reports values of 93.42% and 92.27%, while for MLP these values are 83.17% and 85.62%, respectively.
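As an illustration of the kind of hand pose feature summarized here, the sketch below computes the distances between a palm center and five fingertips. The 2D coordinates, the function name, and the scaling by the largest distance are assumptions made for the example and are not taken from the paper.

```python
import math

def fingertip_features(palm_center, fingertips):
    """Return palm-to-fingertip distances scaled by the largest one."""
    distances = [math.dist(palm_center, tip) for tip in fingertips]
    longest = max(distances)
    if longest == 0:
        return distances  # degenerate case: all points coincide with the palm
    return [d / longest for d in distances]

# Hypothetical landmark coordinates, in arbitrary image units
palm = (0.0, 0.0)
tips = [(3.0, 4.0), (0.0, 2.5), (5.0, 0.0), (0.0, -5.0), (-2.5, 0.0)]
features = fingertip_features(palm, tips)
print(features)  # [1.0, 0.5, 1.0, 1.0, 0.5]
```

Scaling by the largest distance is one simple way to make such features independent of how far the hand is from the camera; other normalizations are equally plausible.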

The effectiveness of GAPTCHA relies on the user’s ability to imitate a specific set of hand gestures accurately. If the gesture set is limited or does not cover a broad range of possible gestures, it may be easier for attackers to learn and reproduce them, potentially leading to security vulnerabilities. Moreover, the utilization of genetic algorithm principles on a Multi-Layer Perceptron may require significant computational resources during training and testing phases. This could lead to higher processing times and resource usage, particularly on mobile devices with limited hardware capabilities.

In the future, we will further develop GAPTCHA to turn it into a model suitable for industry use. Our future research plans can be divided into two phases. In the first phase, we will (1) investigate the effects of various illumination conditions and (2) measure the accuracy of the model against different skin colors and tones. This phase aims to optimize the method to run reliably in all conditions. In the second phase, we will replace the shown gestures with augmented reality objects, and the user will be asked to perform certain actions on a defined object. This replaces the predefined set of gestures with unpredictable actions and will make the model more attractive to users. For example, a few moving animals will be shown, and the user will need to chase the rabbit by pointing a finger at it.

Author Contributions

Conceptualization, T.K.; validation, M.Z. and A.A.M.; formal analysis, S.Y.; investigation, S.S.C.; writing—original draft preparation, F.M.A.; writing—review and editing, A.A.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Publicly available datasets were analyzed in this study. This data can be found here: Benitez-Garcia, G., Olivares-Mercado, J., Sanchez-Perez, G. and Yanai, K., 2021, January. IPN hand: A video dataset and benchmark for real-time continuous hand gesture recognition. In 2020 25th International Conference on Pattern Recognition (ICPR) (pp. 4340–4347). IEEE.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Moradi, M.; Keyvanpour, M. CAPTCHA and its Alternatives: A Review. Secur. Commun. Netw. 2015, 8, 2135–2156.

- Chaeikar, S.S.; Alizadeh, M.; Tadayon, M.H.; Jolfaei, A. An intelligent cryptographic key management model for secure communications in distributed industrial intelligent systems. Int. J. Intell. Syst. 2021, 37, 10158–10171.

- Von Ahn, L.; Blum, M.; Langford, J. Telling humans and computers apart automatically. Commun. ACM 2004, 47, 56–60.

- Chellapilla, K.; Larson, K.; Simard, P.; Czerwinski, M. Designing human friendly human interaction proofs (HIPs). In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Portland, OR, USA, 2–7 April 2005; pp. 711–720.

- Chaeikar, S.S.; Jolfaei, A.; Mohammad, N.; Ostovari, P. Security principles and challenges in electronic voting. In Proceedings of the 2021 IEEE 25th International Enterprise Distributed Object Computing Workshop (EDOCW), Gold Coast, Australia, 25–29 October 2021; pp. 38–45.

- Arteaga, M.V.; Castiblanco, J.C.; Mondragon, I.F.; Colorado, J.D.; Alvarado-Rojas, C. EMG-driven hand model based on the classification of individual finger movements. Biomed. Signal Process. Control. 2020, 58, 101834.

- Lupinetti, K.; Ranieri, A.; Giannini, F.; Monti, M. 3d dynamic hand gestures recognition using the leap motion sensor and convolutional neural networks. In Proceedings of the International Conference on Augmented Reality, Virtual Reality and Computer Graphics, Lecce, Italy, 7–9 September 2020; pp. 420–439.

- Cobos-Guzman, S.; Verdú, E.; Herrera-Viedma, E.; Crespo, R.G. Fuzzy logic expert system for selecting robotic hands using kinematic parameters. J. Ambient. Intell. Humaniz. Comput. 2020, 11, 1553–1564.

- Martinelli, D.; Sousa, A.L.; Augusto, M.E.; Kalempa, V.C.; de Oliveira, A.S.; Rohrich, R.F.; Teixeira, M.A. Remote control for mobile robots using gestures captured by the rgb camera and recognized by deep learning techniques. In Proceedings of the 2019 Latin American Robotics Symposium (LARS), 2019 Brazilian Symposium on Robotics (SBR) and 2019 Workshop on Robotics in Education (WRE), Rio Grande, Brazil, 22–26 October 2019; pp. 98–103.

- Chaeikar, S.S.; Ahmadi, A.; Karamizadeh, S.; Chaeikar, N.S. SIKM–a smart cryptographic key management framework. Open Comput. Sci. 2022, 12, 17–26.

- Khodadadi, T.; Javadianasl, Y.; Rabiei, F.; Alizadeh, M.; Zamani, M.; Chaeikar, S.S. A novel graphical password authentication scheme with improved usability. In Proceedings of the 2021 4th International Symposium on Advanced Electrical and Communication Technologies (ISAECT), Alkhobar, Saudi Arabia, 6–8 December 2021; pp. 1–4.

- Chew, M.; Baird, H.S. Baffletext: A human interactive proof. In Document Recognition and Retrieval X; SPIE: Bellingham, WA, USA, 2003; Volume 5010, pp. 305–316.

- Baird, H.S.; Coates, A.L.; Fateman, R.J. Pessimalprint: A reverse turing test. Int. J. Doc. Anal. Recognit. 2003, 5, 158–163.

- Mori, G.; Malik, J. Recognizing objects in adversarial clutter: Breaking a visual CAPTCHA. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 18–20 June 2003; Volume 1, p. I.

- Baird, H.S.; Moll, M.A.; Wang, S.Y. ScatterType: A legible but hard-to-segment CAPTCHA. In Proceedings of the Eighth International Conference on Document Analysis and Recognition (ICDAR’05), Seoul, Republic of Korea, 31 August–1 September 2005; pp. 935–939.

- Wang, D.; Moh, M.; Moh, T.S. Using Deep Learning to Solve Google reCAPTCHA v2’s Image Challenges. In Proceedings of the 2020 14th International Conference on Ubiquitous Information Management and Communication (IMCOM), Seoul, Republic of Korea, 3–5 January 2020; pp. 1–5.

- Singh, V.P.; Pal, P. Survey of different types of CAPTCHA. Int. J. Comput. Sci. Inf. Technol. 2014, 5, 2242–2245.

- Bursztein, E.; Aigrain, J.; Moscicki, A.; Mitchell, J.C. The End is Nigh: Generic Solving of Text-based CAPTCHAs. In Proceedings of the 8th USENIX Workshop on Offensive Technologies (WOOT 14), San Diego, CA, USA, 19 August 2014.

- Obimbo, C.; Halligan, A.; De Freitas, P. CaptchAll: An improvement on the modern text-based CAPTCHA. Procedia Comput. Sci. 2013, 20, 496–501.

- Datta, R.; Li, J.; Wang, J.Z. Imagination: A robust image-based captcha generation system. In Proceedings of the 13th annual ACM international conference on Multimedia, Brisbane, Australia, 26–30 October 2005; pp. 331–334.

- Rui, Y.; Liu, Z. Artifacial: Automated reverse turing test using facial features. In Proceedings of the eleventh ACM International Conference on Multimedia, Berkeley, CA, USA, 2–8 November 2003; pp. 295–298.

- Elson, J.; Douceur, J.R.; Howell, J.; Saul, J. Asirra: A CAPTCHA that exploits interest-aligned manual image categorization. CCS 2007, 7, 366–374.

- Hoque, M.E.; Russomanno, D.J.; Yeasin, M. 2d captchas from 3d models. In Proceedings of the IEEE SoutheastCon, Memphis, TN, USA, 31 March 2006; pp. 165–170.

- Gao, H.; Yao, D.; Liu, H.; Liu, X.; Wang, L. A novel image based CAPTCHA using jigsaw puzzle. In Proceedings of the 2010 13th IEEE International Conference on Computational Science and Engineering, Hong Kong, China, 11–13 December 2010; pp. 351–356.

- Gao, S.; Mohamed, M.; Saxena, N.; Zhang, C. Emerging image game CAPTCHAs for resisting automated and human-solver relay attacks. In Proceedings of the 31st Annual Computer Security Applications Conference, Los Angeles, CA, USA, 7–11 December 2015; pp. 11–20.

- Cui, J.S.; Mei, J.T.; Wang, X.; Zhang, D.; Zhang, W.Z. A captcha implementation based on 3d animation. In Proceedings of the 2009 International Conference on Multimedia Information Networking and Security, Hubei, China, 18–20 November 2009; Volume 2, pp. 179–182.

- Winter-Hjelm, C.; Kleming, M.; Bakken, R. An interactive 3D CAPTCHA with semantic information. In Proceedings of the Norwegian Artificial Intelligence Symp, Trondheim, Norway, 26–28 November 2009; pp. 157–160.

- Shirali-Shahreza, S.; Ganjali, Y.; Balakrishnan, R. Verifying human users in speech-based interactions. In Proceedings of the Twelfth Annual Conference of the International Speech Communication Association, Florence, Italy, 27–31 August 2011.

- Chan, N. Sound oriented CAPTCHA. In Proceedings of the First Workshop on Human Interactive Proofs (HIP), Bethlehem, PA, USA, 19–20 May 2022; Available online: http://www.aladdin.cs.cmu.edu/hips/events/abs/nancy_abstract.pdf (accessed on 25 July 2023).

- Holman, J.; Lazar, J.; Feng, J.H.; D’Arcy, J. Developing usable CAPTCHAs for blind users. In Proceedings of the 9th international ACM SIGACCESS conference on Computers and Accessibility, Tempe, AZ, USA, 15–17 October 2007; pp. 245–246.

- Schlaikjer, A. A dual-use speech CAPTCHA: Aiding visually impaired web users while providing transcriptions of Audio Streams. LTI-CMU Tech. Rep. 2007, 7–14. Available online: http://lti.cs.cmu.edu/sites/default/files/CMU-LTI-07-014-T.pdf (accessed on 25 July 2023).

- Lupkowski, P.; Urbanski, M. SemCAPTCHA—User-friendly alternative for OCR-based CAPTCHA systems. In Proceedings of the 2008 international multiconference on computer science and information technology, Wisla, Poland, 20–22 October 2008; pp. 325–329.

- Yamamoto, T.; Tygar, J.D.; Nishigaki, M. Captcha using strangeness in machine translation. In Proceedings of the 2010 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, Australia, 20–23 April 2010; pp. 430–437.

- Gaggi, O. A study on Accessibility of Google ReCAPTCHA Systems. In Proceedings of the Open Challenges in Online Social Networks, Virtual Event, 30 August–2 September 2022; pp. 25–30.

- Yadava, P.; Sahu, C.; Shukla, S. Time-variant Captcha: Generating strong Captcha Security by reducing time to automated computer programs. J. Emerg. Trends Comput. Inf. Sci. 2011, 2, 701–704.

- Wang, L.; Chang, X.; Ren, Z.; Gao, H.; Liu, X.; Aickelin, U. Against spyware using CAPTCHA in graphical password scheme. In Proceedings of the 2010 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, Australia, 20–23 April 2010; pp. 760–767.

- Belk, M.; Fidas, C.; Germanakos, P.; Samaras, G. Do cognitive styles of users affect preference and performance related to CAPTCHA challenges? In Proceedings of the CHI’12 Extended Abstracts on Human Factors in Computing Systems, Stratford, ON, Canada, 5–10 May 2012; pp. 1487–1492.

- Wei, T.E.; Jeng, A.B.; Lee, H.M. GeoCAPTCHA—A novel personalized CAPTCHA using geographic concept to defend against 3rd Party Human Attack. In Proceedings of the 2012 IEEE 31st International Performance Computing and Communications Conference (IPCCC), Austin, TX, USA, 1–3 December 2012; pp. 392–399.

- Jiang, N.; Dogan, H. A gesture-based captcha design supporting mobile devices. In Proceedings of the 2015 British HCI Conference, Lincoln, UK, 13–17 July 2015; pp. 202–207.

- Pritom, A.I.; Chowdhury, M.Z.; Protim, J.; Roy, S.; Rahman, M.R.; Promi, S.M. Combining movement model with finger-stroke level model towards designing a security enhancing mobile friendly captcha. In Proceedings of the 2020 9th International Conference on Software and Computer Applications, Langkawi, Malaysia, 18–21 February 2020; pp. 351–356.

- Parvez, M.T.; Alsuhibany, S.A. Segmentation-validation based handwritten Arabic CAPTCHA generation. Comput. Secur. 2020, 95, 101829.

- Shah, A.R.; Banday, M.T.; Sheikh, S.A. Design of a drag and touch multilingual universal captcha challenge. In Advances in Computational Intelligence and Communication Technology; Springer: Singapore, 2021; pp. 381–393.

- Luzhnica, G.; Simon, J.; Lex, E.; Pammer, V. A sliding window approach to natural hand gesture recognition using a custom data glove. In Proceedings of the 2016 IEEE Symposium on 3D User Interfaces (3DUI), Greenville, SC, USA, 19–20 March 2016; pp. 81–90.

- Hung, C.H.; Bai, Y.W.; Wu, H.Y. Home outlet and LED array lamp controlled by a smartphone with a hand gesture recognition. In Proceedings of the 2016 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 7–11 January 2016; pp. 5–6.

- Chen, Y.; Ding, Z.; Chen, Y.L.; Wu, X. Rapid recognition of dynamic hand gestures using leap motion. In Proceedings of the 2015 IEEE International Conference on Information and Automation, Lijiang, China, 8–11 August 2015; pp. 1419–1424.

- Panwar, M.; Mehra, P.S. Hand gesture recognition for human computer interaction. In Proceedings of the 2011 International Conference on Image Information Processing, Brussels, Belgium, 11–14 November 2011; pp. 1–7.

- Chaudhary, A.; Raheja, J.L. Bent fingers’ angle calculation using supervised ANN to control electro-mechanical robotic hand. Comput. Electr. Eng. 2013, 39, 560–570.

- Marium, A.; Rao, D.; Crasta, D.R.; Acharya, K.; D’Souza, R. Hand gesture recognition using webcam. Am. J. Intell. Syst. 2017, 7, 90–94.

- Simion, G.; David, C.; Gui, V.; Caleanu, C.D. Fingertip-based real time tracking and gesture recognition for natural user interfaces. Acta Polytech. Hung. 2016, 13, 189–204.

- Chaudhary, A.; Raheja, J.L.; Raheja, S. A vision based geometrical method to find fingers positions in real time hand gesture recognition. J. Softw. 2012, 7, 861–869.

- Benitez-Garcia, G.; Olivares-Mercado, J.; Sanchez-Perez, G.; Yanai, K. IPN hand: A video dataset and benchmark for real-time continuous hand gesture recognition. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; pp. 4340–4347.

- Li, Y.M.; Lee, T.H.; Kim, J.S.; Lee, H.J. Cnn-based real-time hand and fingertip recognition for the design of a virtual keyboard. In Proceedings of the 2021 36th International Technical Conference on Circuits/Systems, Computers and Communications (ITC-CSCC), Jeju, Republic of Korea, 27–30 June 2021; pp. 1–3.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv preprint 2018, arXiv:1804.02767.

- Shull, P.B.; Jiang, S.; Zhu, Y.; Zhu, X. Hand gesture recognition and finger angle estimation via wrist-worn modified barometric pressure sensing. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 724–732.

- Manoj, H.M.; Pradeep, B.P.; Anil, C.; Rohith, S. An Novel Hand Gesture System for ASL using Kinet Sensor based Images. In Proceedings of the First International Conference on Computing, Communication and Control System, I3CAC 2021, Chennai, India, 7–8 June 2021; Bharath University: Chennai, India.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).