Abstract

Federated learning (FL) is an emerging machine learning method in which participants collaboratively train a model without sharing their raw data, thereby breaking down data silos and avoiding the privacy issues caused by centralized data storage. In practical applications, client data are not independent and identically distributed (non-IID), so FL requires many rounds of communication to converge, which entails high communication costs. Moreover, the centralized architecture of traditional FL remains susceptible to privacy breaches, network congestion, and single points of failure. To solve these problems, this paper proposes an FL framework based on blockchain technology and a cluster training algorithm, called BCFL. We first improve an FL algorithm based on odd–even round cluster training, which accelerates model convergence by dividing clients into clusters and adopting serialized training within each cluster. Model parameters are also compressed before transmission to reduce communication costs. We then design and develop a decentralized FL architecture based on blockchain and the InterPlanetary File System (IPFS), in which the blockchain records the FL process and IPFS mitigates the high storage costs associated with the blockchain. The experimental results demonstrate the superiority of the framework in terms of accuracy and communication efficiency.

1. Introduction

In March 2023, the Global System for Mobile Communications Association (GSMA) released its annual “Mobile Economy” report [1], which indicated that at the end of 2022, over 5.4 billion people worldwide had subscribed to mobile services, with 4.4 billion also using mobile internet. By 2027, it is expected that the number of smartphones connected to the internet will reach 8 billion, with 6 billion mobile terminal users. By 2030, 5G technology is projected to contribute nearly 1 trillion dollars to the global economy, benefiting all industries. Over the next 7 years, with the increasing number of terminal devices connecting to the internet, there will be explosive growth in network data. With the advent of the big data era, data privacy protection has become an increasingly important issue. However, in the field of machine learning, large amounts of data are often required to train high-performance predictive models, and these data often contain sensitive user information, which can have serious privacy implications if leaked. Traditional machine learning methods typically integrate datasets for training, which makes it difficult to protect data privacy. To tackle the widespread issue of data privacy, the European Union implemented the General Data Protection Regulation (GDPR) [2], the first data privacy protection act to establish comprehensive regulations for protecting private data. As a result, federated learning has emerged as a new decentralized machine learning method aimed at solving the problems of data silos and data privacy. In federated learning, data are stored separately on different local devices, and through techniques such as encryption and secure computing, data sharing and model training can be achieved without the need to store datasets on any centralized server. 
This method not only protects user data privacy but also leverages massive amounts of data to collaboratively train more accurate and robust models, providing better support and assistance for development and decision making in various industries.

Although federated learning has made significant progress in privacy protection [3], communication overhead cannot be ignored in practical scenarios: many terminals transmit large neural network models, and the data held by most terminals are non-Independent and Identically Distributed (non-IID), so multiple rounds of communication are required to achieve model convergence. In federated learning, the overall model training time is mainly determined by the training time of each terminal model and the communication transmission time. As device computing power continues to improve, client training is becoming faster, and communication efficiency has become the main bottleneck restricting training speed.

Blockchain is a distributed database technology that employs a chain-like data structure for storing and managing data. Data are packaged into individual blocks, which are then connected in chronological order, forming a continuously growing chain. Blockchain utilizes various technologies, including peer-to-peer network protocols, cryptography, consensus mechanisms, and smart contracts, to establish a secure and trustworthy environment for data storage and transmission. It is characterized by decentralization, security, transparency, immutability, and auditability [4]. Traditional federated learning uses a centralized architecture in which the central server is responsible for collecting and aggregating local models and broadcasting the new global model. This architecture suffers from problems such as privacy leakage, network congestion, and a single point of failure. To solve these problems, a decentralized federated learning architecture usually does not need a central server and relies primarily on blockchain to handle the related work. This approach can better protect user privacy, improve the efficiency and security of federated learning, and offer good scalability and fault tolerance.

The aim of the research described in this paper was to improve the performance of federated learning, particularly communication efficiency. In practical applications, the non-IID characteristics of client data in federated learning increase the communication rounds required to reach convergence. It is necessary to study an efficient federated learning algorithm that is suitable for actual federated learning scenarios. In addition, federated learning lacks a trust mechanism, which can lead to issues such as data leakage and model tampering. Therefore, blockchain technology is needed to ensure the trust and security of federated learning systems. To address these issues, this paper offers an innovative approach regarding both federated learning algorithms and the architecture, proposing a federated learning framework based on blockchain and cluster training (BCFL). The contributions of this paper are summarized as follows:

- In terms of algorithms, this paper improves upon the federated learning algorithm based on odd–even round cluster training by incorporating blockchain instead of a central server. This enhancement provides higher levels of data privacy, security, and traceability while reducing the risk of a single point of failure;

- In terms of data compression, the model parameters obtained from local training on the client side are subjected to sparse quantization operations and integrated into the Quantization and Value–Coordinate Separated Storage (QVCSS) format proposed in this paper before being transmitted, thereby reducing the communication cost;

- In terms of architecture, a decentralized federated learning architecture based on blockchain and IPFS is proposed. First, instead of relying on a central server, a smart contract is designed to implement the Smooth Weighted Round-Robin (SWRR) algorithm to select the client responsible for global gradient aggregation in each round, which effectively avoids the single-point failure of the central server in traditional federated learning. Second, a consortium chain is adopted, which is more suitable for scenarios such as federated learning that require efficient processing of large amounts of data, as the consortium chain can improve performance by limiting the number of participants and using more efficient consensus algorithms. In addition, the use of IPFS optimizes the high overhead problem of storing model parameter files in the consortium chain.

The remainder of the paper is organized as follows: Section 2 presents related work. Section 3 provides a detailed description of the proposed BCFL framework, including the design of the federated learning algorithm, model parameter compression, and storage. Section 4 describes the application of blockchain technology in the proposed framework, i.e., the design of smart contracts. Section 5 shows the experimental implementation process and the evaluation results. Section 6 concludes the paper and proposes improvements for future work.

2. Related Work

To improve communication efficiency, researchers have studied algorithm optimization, data compression, and federated learning in non-IID data scenarios in depth. In the initial federated learning mode, client devices participating in training run one round of a stochastic gradient descent algorithm and upload the locally updated model parameters to a central server. Such frequent communication can cause excessive data transmission to the central server and network overload. Therefore, starting from the optimization of the federated learning algorithm, McMahan et al. [5] proposed the FedAvg algorithm. This algorithm allows client devices participating in training to execute multiple rounds of the stochastic gradient descent algorithm locally before sending the model parameters to the central server for aggregation through weighted averaging. Compared with the FedSGD algorithm [6], the FedAvg algorithm places the computational burden on local client devices, while the central server is only responsible for aggregation, which reduces the number of communication rounds by more than 10 times. Based on the FedAvg algorithm, Briggs et al. [7] combined the idea of hierarchical clustering with federated learning, using the similarities between local updates and the global model to cluster and separate client devices, and achieved a reduction in the total number of communication rounds.

In terms of data compression, existing research shows that the size of the machine learning model has the greatest impact on communication costs. Effective reduction in data exchange on the uplink and downlink can be achieved through compression, which reduces the overall communication cost. However, data compression can also result in partial loss of information, so it is necessary to find the optimal balance between model accuracy and communication efficiency. Aji et al. [8] proposed a fixed sparsity rate, sorting the gradients obtained using local client devices during training and transmitting only the top P% of gradients to the central server while storing the rest in a residual vector. Jhunjhunwala et al. [9] proposed an adaptive quantization strategy called AdaQuantFL, which dynamically changes the number of quantization levels during the training process to achieve a balance between model accuracy and communication efficiency. Li et al. [10] introduced Compressed Sensing (CS) into federated learning and achieved good performance in both communication efficiency and model accuracy.

Data in federated learning come from various end users, and the data generated by these users are often non-IID: the data collected on one device cannot represent the data distribution of the other participants' datasets. Dealing with non-IID data can cause problems such as difficulty with convergence during training and excessive communication rounds. Considering the problems caused by non-IID data on client devices, Sattler et al. [11] proposed a Sparse Ternary Compression (STC) method, which extends the existing top-K methods and combines Golomb encoding to sparsify downstream communication. This method can help federated learning models converge faster. Haddadpour et al. [12] proposed a set of algorithms with periodic compressed communication and introduced local gradient tracking to adjust the gradient direction of client devices in non-IID scenarios. Seol et al. [13] alleviated class imbalance within client devices using local data sampling and between client devices by selecting clients and determining the amount of data used for training in non-IID data distribution scenarios. This ensures a balanced set of training data class distributions in each round, achieving high accuracy and low computation. To better address the performance degradation of federated learning when using non-IID image datasets, Zhao et al. [14] introduced FedDIS, which uses a privacy-preserving distributed information sharing strategy for clients to construct Independent and Identically Distributed (IID) datasets. They trained the final classification model using local and augmented datasets in a federated learning manner, significantly improving the model performance.

Machine learning and blockchain technology have been two highly notable technologies in recent years. There has been a substantial amount of research combining machine learning and blockchain technology, demonstrating the effectiveness of their collaboration [15,16]. Concurrently, federated learning, as a presently popular machine learning technique, has also triggered many studies on decentralized federated learning architectures that combine it with blockchain technology. Lo et al. [17] addressed the difficulty of implementing responsible artificial intelligence principles in federated learning systems and the unfairness problems that arise when dealing with different stakeholders and non-IID data. They proposed a blockchain-based accountability and fairness architecture for federated learning systems. The system designs a data model source registry smart contract, utilizes blockchain to store the data trained using local models and the hash values of local and global model versions, tracks and records data model sources, and realizes audit accountability. Jiang et al. proposed a threat detection model for sharing Cyber Threat Intelligence (CTI), called BFLS [18]. They used blockchain to overcome the risks of single server failure and malicious nodes during the shared model process. To improve the Practical Byzantine Fault Tolerance (PBFT) in the consortium chain, they verified and selected high-quality threat intelligence to participate in federated learning and then automatically aggregated and updated the model through smart contracts, filtering out low-quality and false threat intelligence while protecting organizational privacy. Zhang et al. [19] proposed a blockchain-based decentralized federated transfer learning method to solve the problem of collaborative mechanical fault diagnosis in industrial scenarios. They introduced blockchain to replace the central server in federated learning, greatly enhancing the security of data sharing. 
A committee consensus and a model aggregation scheme were proposed to optimize the model and motivate participants. They further designed a transfer learning algorithm without source data to achieve local customized models. On the Internet of Things (IoT), some data in Mobile Edge Computing (MEC) are highly sensitive, and direct sharing will infringe on privacy. Zhang et al. proposed a Vertical Federated Learning (VFL) architecture based on a consortium blockchain for data sharing in MEC [20]. The consortium chain replaces the third party in traditional VFL and is responsible for key distribution and training decryption tasks. They improved the Raft algorithm based on verifiable random functions and proposed a new consistency algorithm, V-Raft, which can quickly and stably select leaders to assist in the data sharing of VFL. After analyzing the key issues that need to be addressed in resource management in the future IoT, Fu et al. [21] used federated learning to perform the distributed intelligent management of IoT resources and proposed an IoT resource management framework combining blockchain and federated learning. They employed Support Vector Machine (SVM) to detect malicious nodes and optimize the selection of participants in federated learning. Furthermore, they ensured the security of model parameter exchange by uploading the local model parameters and the global model parameters to the blockchain.

3. Design of BCFL Method

3.1. System Architecture

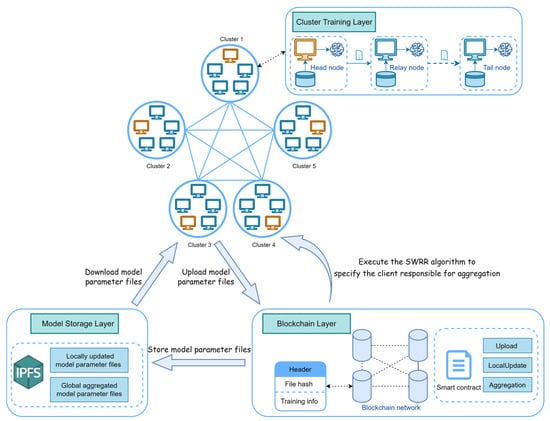

This paper applies blockchain and IPFS to the FL architecture based on the odd–even round cluster training algorithm and combines sparse quantization operations on model parameters with a mesh topology structure to further improve communication efficiency while ensuring the trustworthiness of FL. As shown in Figure 1, the decentralized BCFL architecture consists of three layers: the cluster training layer, the blockchain layer, and the model storage layer.

Figure 1. BCFL system architecture.

At the cluster training layer, client nodes follow the rules of the odd–even round cluster training algorithm to conduct model training and parameter transmission within the cluster. The participating clients are divided into several clusters, and the corresponding clients are selected to participate in training in odd and even rounds within each cluster. During each round of training, the model parameters are transmitted from the head node to the relay node, and finally to the tail node, while each node updates the model parameters based on its local dataset. In particular, before transmitting the model parameters, the client will integrate the model parameters into the QVCSS format and perform lossless compression on the array in the parameter file to further reduce the space occupied by the model parameter file, thereby improving communication efficiency.

At the blockchain layer, smart contracts are designed to schedule interactions between FL clients, the blockchain, and IPFS. The cluster training layer invokes smart contracts to achieve the uploading of model parameter files output by client nodes and the downloading of required model parameter files for local updates. When uploading model parameter files, their corresponding hash values and relevant training information are packaged and stored in blocks on the consortium blockchain. Furthermore, due to the absence of a central server in BCFL architecture for the aggregation of model parameters, a smart contract is designed to implement the SWRR algorithm to specify a client responsible for aggregation in each round.

At the model storage layer, IPFS is responsible for storing the real model parameter files generated by local updates and global aggregation. The FL client can find the corresponding complete file in IPFS based on the file hash stored in the blockchain and download it. This approach not only addresses the storage issues of the consortium blockchain but also achieves decentralized storage.

3.2. Federated Learning Algorithm Based on Odd–Even Round Cluster Training

Based on the federated learning algorithm with odd–even round and gradient sparsification (FedOES) [22], this paper proposes a federated learning algorithm based on odd–even round cluster training (FedOEC) by removing the central server. The core idea of the proposed FedOEC is to partition the participating clients into several clusters and select a subset of clients for serial training in each cluster according to odd–even rounds. Additionally, each time the model parameters are transferred, the algorithm performs compression operations on the gradient to reduce the amount of communication data. Then, the gradient information generated by each cluster is aggregated. This process effectively improves the communication efficiency of federated learning, and the cluster-based method shows strong robustness in handling non-IID scenarios.

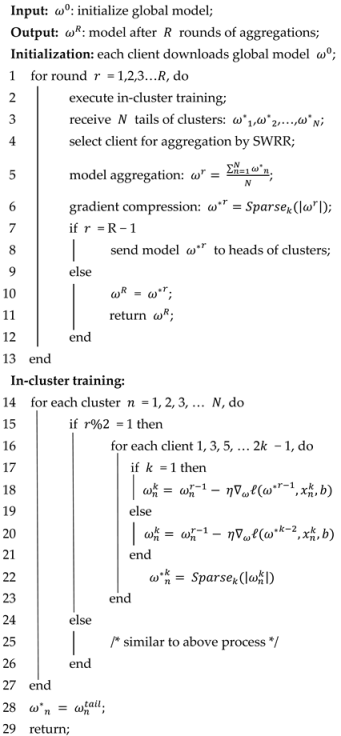

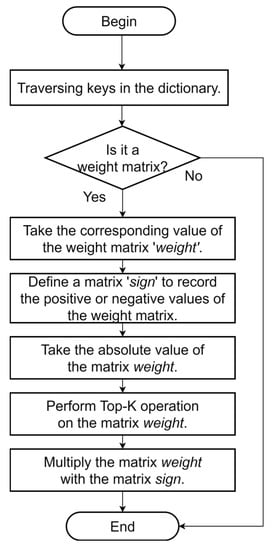

The FedOEC algorithm can be divided into three steps: initialization, cluster training, and model aggregation. The algorithm flow is analyzed in detail below, and the algorithm pseudocode for FedOEC is shown in Algorithm 1.

Algorithm 1: FedOEC

Initialization: The clients are randomly partitioned into clusters, with the same number of clients assumed in each cluster. There are three types of nodes within each cluster: head node, tail node, and relay node. Since the training process is divided into odd and even rounds, each cluster has two head nodes and two tail nodes (one head node and one tail node for odd rounds, and another pair for even rounds), and the remaining clients act as relay nodes. The global model is initialized and sent to the head nodes of the clusters.

Cluster training: Taking an odd round in one cluster as an example, the head node performs local training after receiving the global gradient obtained from the previous round of aggregation.

Starting from this global gradient, which was subjected to the compression operation after the previous round of aggregation, the head node performs a fixed number of local training rounds of gradient descent on its own training data, using the learning rate and the loss function. The result is the local model parameters updated by the head node in the odd round.
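The local training step described above can be written compactly as follows. The notation is assumed here for illustration and may differ from the paper's own symbols: $\tilde{g}^{\,t-1}$ is the compressed global gradient from the previous round, $\eta$ the learning rate, $L$ the loss function, $D_h$ the training data on the head node, $E$ the number of local training rounds, and $w_h^{t}$ the head node's updated local model parameters in round $t$.

```latex
\begin{aligned}
w^{(0)} &= \tilde{g}^{\,t-1}, \\
w^{(e)} &= w^{(e-1)} - \eta \, \nabla L\!\left(w^{(e-1)};\, D_h\right), \qquad e = 1, \dots, E, \\
w_h^{t} &= w^{(E)}.
\end{aligned}
```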

To reduce the communication cost of transmitting model parameters, each client performs gradient compression on its local model parameters. The head node, for example, applies the gradient compression function to its locally updated model parameters and transmits the compressed result.

Compression reduces the memory occupied by the model parameters, which makes them cheaper to transmit.

Within each cluster, clients perform serial training in a certain order, as shown in Figure 2.

Figure 2. Serial training process within cluster n.

Taking the odd round as an example, after the head node has trained its local model and performed gradient compression on the local model parameters, it transmits the compressed parameters to the first relay node, and so on, until the tail node completes its local update and performs gradient compression. The output of the tail node is the final model parameters of the cluster for this round. The relay nodes and the tail node perform the same local update and gradient compression as the head node, except that each of them starts from the compressed parameters received from its predecessor rather than from the global gradient.

Model aggregation: After all clusters have completed training, the tail node of each cluster transmits the model parameters that represent its cluster. A client node selected using the SWRR algorithm receives the model parameters from every cluster and aggregates them to obtain the new global model parameters.

The SWRR algorithm is described in detail in Section 4.3.1. After model aggregation is complete, the gradient compression function is applied to the new global model parameters, and the compressed result is transmitted to the head nodes of the clusters participating in the next round of training.
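The three steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the paper's Algorithm 1: the names `train_cluster` and `fedoec_round` are hypothetical, each cluster is assumed to carry one ordered client chain for odd rounds and one for even rounds, model parameters are flat lists of floats, and aggregation is assumed to be an unweighted mean because the aggregation formula is not reproduced here.

```python
from typing import Callable, Dict, List

Params = List[float]


def train_cluster(chain: List[str], start: Params,
                  local_train: Callable[[str, Params], Params],
                  compress: Callable[[Params], Params]) -> Params:
    """Serial training inside one cluster: head -> relay nodes -> tail."""
    params = list(start)
    for client in chain:
        # Each node trains on its own data, then compresses before passing on.
        params = compress(local_train(client, params))
    return params  # output of the tail node represents the cluster


def fedoec_round(clusters: List[Dict[str, List[str]]], round_idx: int,
                 global_params: Params,
                 local_train: Callable[[str, Params], Params],
                 compress: Callable[[Params], Params]) -> Params:
    """One communication round: cluster training, then aggregation."""
    parity = "odd" if round_idx % 2 == 1 else "even"
    cluster_models = [
        train_cluster(cluster[parity], global_params, local_train, compress)
        for cluster in clusters
    ]
    # Assumption: plain average over clusters (the paper's formula may differ).
    n = len(cluster_models)
    aggregated = [sum(column) / n for column in zip(*cluster_models)]
    # The aggregated model is compressed before being sent to the head nodes.
    return compress(aggregated)
```

In a full system, `local_train` would wrap the client's optimizer and `compress` would be the top-K and QVCSS step of Section 3.3; here both are passed in so the control flow stays visible.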

3.3. Model Parameter Compression

According to the workflow of the FedOEC algorithm, the gradient compression function must be applied to the updated model parameters after every local training step on the client side and after every model aggregation.

The gradient compression function consists of two steps: (1) Perform a top-K operation on the gradient information, i.e., select the top K gradients with the largest absolute values. (2) Convert the dense matrix resulting from the top-K operation into a sparse matrix stored in QVCSS format.

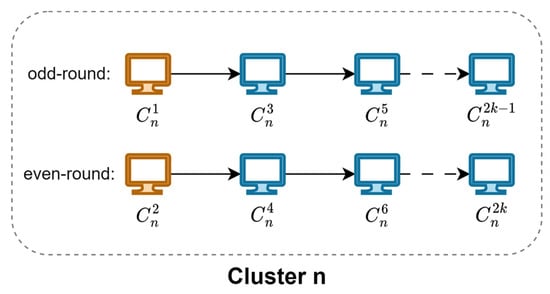

3.3.1. Top-K Operation

The model parameters are stored in a dictionary as key–value pairs, where the key represents the parameter name, such as Weight, Bias, etc., and the value represents the corresponding parameter value, usually a tensor. During the training process, a large number of parameters need to be updated, but not all of them have a significant impact on the model performance. Therefore, when updating the parameters, the top-K technique can be used to select a subset of gradients with larger absolute values for model updating, while the remaining gradients can be ignored, thus reducing the communication overhead and the amount of data uploaded. The process of performing the top-K operation on gradient information is shown in Figure 3.

Figure 3. Flow diagram of top-K operation.

In Figure 3, the elements of the matrix may be negative or positive. The top-K operation on a multidimensional tensor is defined on absolute values.

First, the absolute value of every element of the original tensor is taken, and the set of the K largest absolute values is determined. If the absolute value of an element is in this set, the element is kept; otherwise, the element is set to 0. The resulting tensor retains only the K elements of the original tensor with the largest absolute values.
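The top-K rule can be illustrated with a short Python sketch. The function names `top_k` and `top_k_state` are hypothetical, each tensor is represented as a flat list of floats for simplicity, and the per-tensor keep ratio is an assumed parameter rather than a value taken from the paper.

```python
from typing import Dict, List


def top_k(values: List[float], k: int) -> List[float]:
    """Keep the k entries with the largest absolute value; zero the rest."""
    if k <= 0:
        return [0.0] * len(values)
    # Indices ordered by decreasing absolute value (ties keep original order).
    order = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)
    keep = set(order[:k])
    return [v if i in keep else 0.0 for i, v in enumerate(values)]


def top_k_state(state: Dict[str, List[float]], ratio: float) -> Dict[str, List[float]]:
    """Apply top-K to every named parameter in a dictionary of tensors."""
    sparse = {}
    for name, values in state.items():
        k = max(1, int(len(values) * ratio))  # keep at least one entry
        sparse[name] = top_k(values, k)
    return sparse
```

For example, `top_k([0.5, -2.0, 0.1, 1.5], 2)` keeps `-2.0` and `1.5` and zeroes the other two entries; the sign of each kept element is preserved because only the ranking uses absolute values.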

3.3.2. Storage Format Based on Quantization and Value–Coordinate Separation

The top-K operation produces a dense tensor in which many parameters are 0; storing it as is occupies a large amount of memory and wastes memory resources. Unlike dense tensors, sparse tensors store only the non-zero elements and their position information, which can significantly reduce memory usage. The traditional way of storing sparse matrices is to store coordinates and values together as tuples, known as the Coordinate format (COO) [23]. However, COO is less suitable for high-dimensional sparse tensors because it requires storing multiple index values per element, which increases memory overhead.

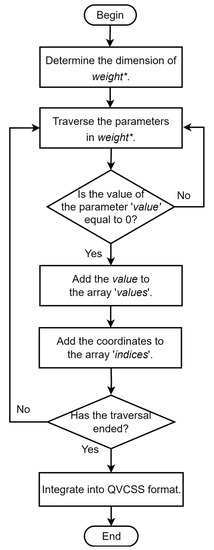

Therefore, a storage format that is based on quantization and value–coordinate separation, known as the QVCSS format, is proposed. Figure 4 shows the process of converting the dense tensor to the QVCSS format for storage.

Figure 4. Flowchart of QVCSS format conversion.

In the workflow of the FedOEC algorithm, each client needs to restore the weight in the received QVCSS format model parameters to obtain the complete weight parameters, which are then used as the initial gradient for local updates in the current round.
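The idea of separating values from coordinates, and of restoring the dense weights on the receiving side, can be sketched as follows. This is only an illustration of the general technique, not the QVCSS layout itself: the field names, the use of a single flat index per non-zero element, and the symmetric 8-bit uniform quantization are all assumptions made for this example.

```python
from typing import Dict, List


def encode(dense: List[float], levels: int = 255) -> Dict[str, object]:
    """Store non-zero values (quantized) and their coordinates in separate arrays."""
    coords = [i for i, v in enumerate(dense) if v != 0.0]
    values = [dense[i] for i in coords]
    scale = max((abs(v) for v in values), default=0.0)
    # Assumed scheme: uniform steps so that codes fall in [-(levels // 2), levels // 2].
    step = scale / (levels // 2) if scale > 0 else 1.0
    codes = [round(v / step) for v in values]
    return {"size": len(dense), "coords": coords, "codes": codes, "step": step}


def decode(packed: Dict[str, object]) -> List[float]:
    """Restore the dense parameter list from the separated arrays."""
    dense = [0.0] * packed["size"]
    for i, code in zip(packed["coords"], packed["codes"]):
        dense[i] = code * packed["step"]
    return dense
```

Quantization is lossy: under this scheme each restored value differs from the original by at most half a quantization step, which is the accuracy cost paid for the smaller file.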

3.4. Model Parameter Storage

Model parameter storage and updates are particularly critical, and this section describes a storage mode that combines the consortium chain with IPFS for storing federated learning model parameters. The process is divided into three steps: initialization, model parameter storage, and model parameter synchronization.

Initialization: set up a consortium chain, start an IPFS node at the same time, and join the IPFS network.

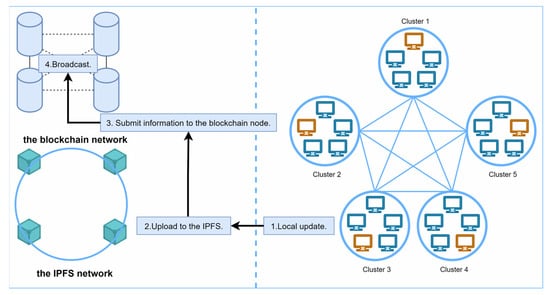

Model parameter storage: In each round of federated learning, participants update the model parameters based on their local data, and the updated model parameters are stored in the IPFS network. The specific steps are shown in Figure 5.

Figure 5. Schematic diagram of model parameter storage.

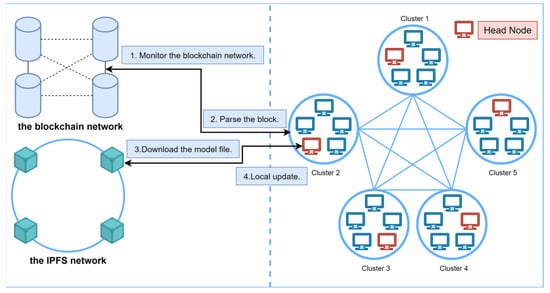

Model parameter synchronization: To ensure that the head node in each cluster can obtain the latest model parameters in a timely manner, a synchronization mechanism needs to be designed. Figure 6 shows the synchronization process.

Figure 6. Diagram of model parameter synchronization.

4. Smart Contract Design

In this framework, the smart contract is responsible for executing core functions such as model downloading, local updating, and global model aggregation. The smart contract layer comprises three key functions: Upload(), LocalUpdate(), and Aggregation().

4.1. Upload() Function

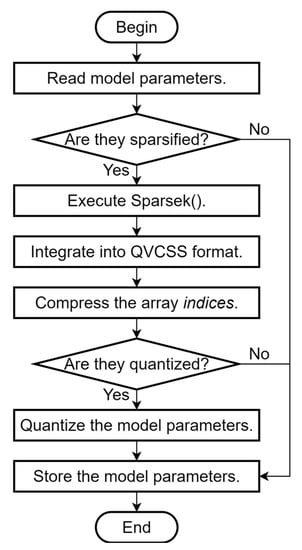

The Upload() function implements the uploading of model parameters and is executed by the participating client during training. The process is as follows (Figure 7):

Figure 7. Flowchart of the Upload() function.

- (1) Read model parameters: the function is executed after local updating or global model parameter aggregation and retrieves the model parameters from the local client;

- (2) Perform sparse and quantization processing: Determine whether sparsification or quantization is needed and, if so, execute the gradient compression function to convert the model parameters to QVCSS format. The deflate compression algorithm is then used to compress the arrays in the QVCSS data, which further reduces the storage space and transmission cost of the model parameters;

- (3) Store the model parameters: Store the processed model parameters in IPFS and then generate the hash value corresponding to the model parameter file. The hash value and the related training information are packaged and stored in a block of the consortium chain so that other participants can access them.
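The upload steps above can be sketched with the Python standard library. This is a simplified stand-in rather than the contract code: an in-memory dictionary plays the role of IPFS, a list plays the role of the consortium chain, a plain SHA-256 digest stands in for the IPFS content identifier, and the function name `upload` and the record fields are hypothetical.

```python
import hashlib
import json
import zlib
from typing import Dict, List


def upload(params: dict, round_idx: int, client_id: str,
           store: Dict[str, bytes], ledger: List[dict]) -> str:
    """Compress a parameter file, store it off-chain, record its hash on-chain."""
    raw = json.dumps(params, sort_keys=True).encode("utf-8")
    blob = zlib.compress(raw, level=9)          # deflate-based compression
    digest = hashlib.sha256(blob).hexdigest()   # stand-in for the IPFS hash
    store[digest] = blob                        # large file lives off-chain
    # Only the small hash and training metadata go into the block.
    ledger.append({"round": round_idx, "client": client_id, "hash": digest})
    return digest
```

Keeping only the digest and a few metadata fields in the ledger is what keeps the on-chain storage cost low regardless of the model size.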

4.2. LocalUpdate() Function

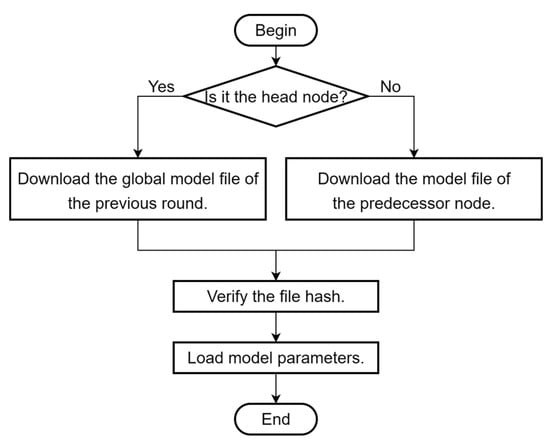

The LocalUpdate() function handles the updating of the local model and is executed by the participating clients during training. Figure 8 shows the function flow.

Figure 8. Flowchart of the LocalUpdate() function.

The process is as follows:

- (1) Download the model file: First, determine whether the client node is the head node in the cluster; if so, download the global model file of the previous round from IPFS according to the hash value. Otherwise, download the model file of the predecessor node in the current round;

- (2) Verify the file hash: Compute the hash value of the downloaded model file and compare it with the hash value recorded on the blockchain. If they match, the file is complete and correct;

- (3) Load model parameters: load the model parameters from the model file and apply them to local training.
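The download, verify, and load steps can be sketched as follows. As an assumption for this example, a dictionary stands in for IPFS, a list of records stands in for the blockchain, SHA-256 stands in for the IPFS hash, and the names `select_hash`, `fetch_and_verify`, and the record fields (`kind`, `round`, `client`, `hash`) are hypothetical.

```python
import hashlib
import json
import zlib
from typing import Dict, List, Optional


def select_hash(ledger: List[dict], round_idx: int, is_head: bool,
                predecessor: Optional[str] = None) -> str:
    """Step 1: pick which recorded model file this client should download."""
    for entry in reversed(ledger):  # newest records first
        if is_head:
            # Head nodes start from the previous round's global model.
            if entry["kind"] == "global" and entry["round"] == round_idx - 1:
                return entry["hash"]
        elif (entry["kind"] == "local" and entry["round"] == round_idx
              and entry["client"] == predecessor):
            # Other nodes continue from their predecessor in the current round.
            return entry["hash"]
    raise LookupError("no matching model file recorded")


def fetch_and_verify(expected_hash: str, store: Dict[str, bytes]) -> dict:
    """Steps 2 and 3: verify the downloaded file, then load its parameters."""
    blob = store[expected_hash]
    if hashlib.sha256(blob).hexdigest() != expected_hash:
        raise ValueError("model file failed hash verification")
    return json.loads(zlib.decompress(blob))
```

Verifying before loading means a corrupted or tampered file is rejected before it can influence local training.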

4.3. Aggregation() Function

4.3.1. FL Aggregation Node Selection Method Based on SWRR Algorithm

To achieve a truly decentralized architecture, BCFL removes the central server that is responsible for aggregating models in traditional FL. It is therefore necessary to select one of the participating client nodes to aggregate the model parameters in each round. We propose a node selection method based on the SWRR algorithm to determine the client node responsible for model aggregation in each round. The specific steps are as follows:

Step 1: Initialize weights. Each cluster participating in FL needs to elect a client node to participate in the aggregation of the global model. To select the appropriate client, a weight is assigned to each client by analyzing indicators such as its computing power, reliability, and availability. In particular, the client's computing power can be evaluated based on indicators such as processor type, memory capacity, and graphics card quality, and clients with stronger computing power are usually assigned higher weights. Each cluster elects one such node, and the elected nodes form the set of client nodes responsible for aggregation.

The initialized weights can be expressed as two arrays: the weight mapping array and the dynamic weight mapping array. The weight mapping array holds the fixed weights assigned to the clients in this set, while the dynamic weight mapping array holds the dynamic weight of each client at the current time. Every dynamic weight is initially 0 and is updated after model aggregation;

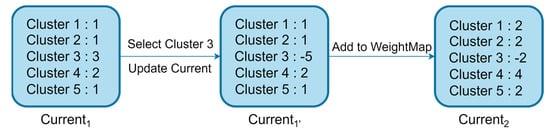

Step 2: Select the cluster responsible for aggregation. Assuming there are five clusters at this point, after the first round of local training is completed, each value in the dynamic weight mapping array is increased by the corresponding fixed weight. The SWRR algorithm then selects, as the cluster responsible for aggregation in this round, the cluster whose key corresponds to the largest value in the dynamic weight mapping array;

Step 3: Aggregate and update weights. To balance the load among the client nodes responsible for aggregation, a special update operation is performed on the dynamic weight mapping array after aggregation. As shown in Figure 9, there are five client nodes responsible for aggregation, with weights of 1, 1, 3, 2, and 1, for a total weight of 8. If the client with the highest weight were selected in every round of global model aggregation, the network load across the FL system would not be well balanced, and the client nodes with lower weights (here, the three nodes with weight 1) would hardly be used. Therefore, after a client is selected for model aggregation, the total weight of all participants is subtracted from its dynamic weight. In the first round, the client with weight 3 is selected, so its dynamic weight is updated to 3 − 8 = −5. Before the next selection, the fixed weights are again added to the corresponding dynamic weights. This method distributes the global aggregation requests more evenly.

Figure 9.

Diagram of dynamic weight update.

The update details of the SWRR algorithm for the first nine rounds are presented in Table 1, which lists, for each round of federated learning, the dynamic weight array before the aggregation node is selected and the updated weight array after the selection. The starting array for the next round is obtained by adding the fixed weights to the corresponding entries of the updated array.

Table 1.

Execution flow of SWRR algorithm.

The above steps are repeated until the convergence level of the FL model reaches the preset target. In the SWRR algorithm, the number of times each node is selected for aggregation is determined by the weights. Given the set of client nodes responsible for aggregation and their corresponding weights, the number of times the ith client node is responsible for aggregation is calculated using Formula (7):

Here, the formula depends on the communication round, the total weight of all nodes responsible for aggregation, and the floor function. The FL aggregation node selection method based on SWRR can balance the load among nodes more accurately and distribute the model aggregation tasks more smoothly.
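The selection procedure in Steps 1 to 3 can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in this paper: the function name `swrr_schedule` is hypothetical, the dynamic weights are assumed to start at 0 (as in the standard smooth weighted round-robin algorithm), and ties are assumed to be broken in favor of the lowest index, so the exact order may differ from Table 1.

```python
from collections import Counter


def swrr_schedule(weights, rounds):
    """Return the index of the aggregation node chosen in each round.

    weights: fixed weights of the aggregation nodes (never modified).
    Assumption: dynamic weights start at 0 and ties go to the lowest index.
    """
    total = sum(weights)
    dynamic = [0] * len(weights)
    picks = []
    for _ in range(rounds):
        # Add each node's fixed weight to its dynamic weight.
        dynamic = [d + w for d, w in zip(dynamic, weights)]
        # Select the node with the largest dynamic weight.
        chosen = max(range(len(weights)), key=lambda i: dynamic[i])
        picks.append(chosen)
        # Penalize the selected node by the total weight.
        dynamic[chosen] -= total
    return picks


weights = [1, 1, 3, 2, 1]           # the five weights from Figure 9
picks = swrr_schedule(weights, sum(weights))
counts = Counter(picks)
print(picks)
print([counts[i] for i in range(len(weights))])
```

Under these assumptions, one full cycle of 8 rounds (the total weight) selects each node exactly as many times as its fixed weight, while spreading the selections of the heaviest node across the cycle instead of clustering them at the start.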

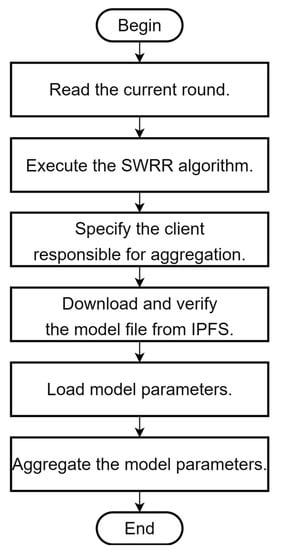

4.3.2. Design of Function

The function is responsible for processing global model aggregation. The process is shown in Figure 10, and the specific steps are as follows:

Figure 10.

Flowchart of function.

- (1) Read the current round: first, read the information of each cluster in the current round;

- (2) Execute the SWRR algorithm: use the information of the current round as the input of the SWRR algorithm and calculate the weight array corresponding to the nodes responsible for aggregation;

- (3) Specify the client responsible for aggregation: according to the result of the SWRR algorithm, specify the aggregation node for the current round;

- (4) Download and verify the model file from IPFS: the aggregation node downloads the model parameter files that were uploaded by the tail nodes of other clusters in the current round from IPFS and performs a hash verification;

- (5) Load model parameters: the aggregation node loads the model parameters from the model files;

- (6) Aggregate the model parameters: the aggregation node carries out weighted averaging of the model parameters of all clusters to obtain the global model parameter.
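Steps (4) to (6) can be illustrated with the following sketch. It is not the function described in this section: `aggregate_round`, the JSON file format, and the per-cluster averaging weights are all hypothetical choices made for the example, and the IPFS download and the blockchain lookup are replaced by plain dictionaries so that the code stays self-contained.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Hex digest used to compare a downloaded file with its recorded hash."""
    return hashlib.sha256(data).hexdigest()


def aggregate_round(files, recorded_hashes, cluster_weights):
    """Verify, load, and average the model files of all clusters.

    files:           cluster id -> raw bytes of the model file (stand-in for IPFS)
    recorded_hashes: cluster id -> SHA-256 hex digest (stand-in for the blockchain)
    cluster_weights: cluster id -> averaging weight (assumed, e.g. sample counts)
    """
    total = sum(cluster_weights[cid] for cid in files)
    global_params = None
    for cid, blob in files.items():
        # Step (4): reject any file whose hash differs from the recorded one.
        if sha256_hex(blob) != recorded_hashes[cid]:
            raise ValueError(f"hash mismatch for cluster {cid}")
        # Step (5): load the parameters (here a flat JSON list of floats).
        params = json.loads(blob)
        if global_params is None:
            global_params = [0.0] * len(params)
        # Step (6): accumulate the weighted average.
        share = cluster_weights[cid] / total
        for k, value in enumerate(params):
            global_params[k] += share * value
    return global_params


files = {"A": json.dumps([1.0, 2.0]).encode(), "B": json.dumps([3.0, 6.0]).encode()}
hashes = {cid: sha256_hex(blob) for cid, blob in files.items()}
print(aggregate_round(files, hashes, {"A": 1, "B": 3}))
```

Raising an error on a hash mismatch is one possible policy; a deployment could instead skip the affected cluster for that round.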

5. Experimental Implementation and Evaluation

5.1. Experimental Environment

A server equipped with an NVIDIA GeForce RTX 3090 GPU (24 GB video memory), an Intel(R) Xeon(R) Platinum 8358P CPU @ 2.60 GHz, and 80 GB of memory was used as the experimental environment. The operating system was Linux, with Anaconda 1.11.1 serving as the Python environment manager. Python 3.8 was used as the development language, and the PyTorch 1.11.0+cu113 deep learning framework was used to implement and train the neural network models. The CUDA build of PyTorch was used so that training could be accelerated on the GPU. The consortium chain platform FISCO BCOS 3.2.0 was selected to build and manage the blockchain network, and IPFS 0.10.0_linux-arm64 was configured to store model files.

5.2. Experimental Dataset

Two datasets were used in the experiment: MNIST and CIFAR-10.

- (1) MNIST dataset

The MNIST dataset contains 60,000 training images and 10,000 testing images of 28 × 28 pixels, each representing a handwritten digit labeled with a category between 0 and 9. Each label has around 6000 images in the training dataset, so the data distribution is not completely uniform.

- (2) CIFAR-10 dataset

CIFAR-10 is a commonly used image classification dataset consisting of 60,000 32 × 32 color images in 10 categories. The dataset is divided into a training set with 50,000 images and a testing set with 10,000 images, and each category in the training set contains 5000 images. One of the characteristics of the CIFAR-10 dataset is that the size and resolution of the images are relatively small, but the categories and sample sizes are diverse, which makes it representative and challenging.

5.3. Experimental Metrics

The experiment used the testing accuracy after each round of model aggregation and the communication cost per round as evaluation metrics. This section gives the definitions of accuracy and communication cost.

The testing accuracy is the most critical metric for measuring the performance of federated learning algorithms, as shown in Formula (8):

Here, the true label of each test sample is compared with the value that the model predicts for that sample, and the formula counts the number of samples for which the predicted result is the same as the true label.

Given the number of participants and the number of rounds in the FL training process, the communication cost can be calculated using the following formulas:

These formulas represent the communication cost of uploading and downloading data, expressed in terms of the amount of data uploaded and the amount of data downloaded by the ith participant in the jth round.

5.4. Experimental Setting and Implementation

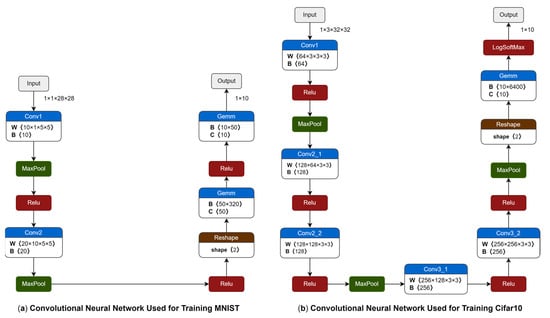

This experiment used the neural network models shown in Figure 11 to train on the MNIST and CIFAR-10 datasets. Because CIFAR-10 images have a higher resolution and more input channels than MNIST images, a deeper and more complex neural network is required to learn their features. The convolutional neural network used for CIFAR-10 therefore differed from the one used for MNIST: it had three convolutional layers, three pooling layers, and one fully connected layer. Using convolutional neural networks of two sizes makes it possible to compare performance under different parameter counts in the federated learning scenario, which demonstrates the communication advantage of the proposed algorithm more clearly.

Figure 11.

Comparison of convolutional neural network models in this experiment.

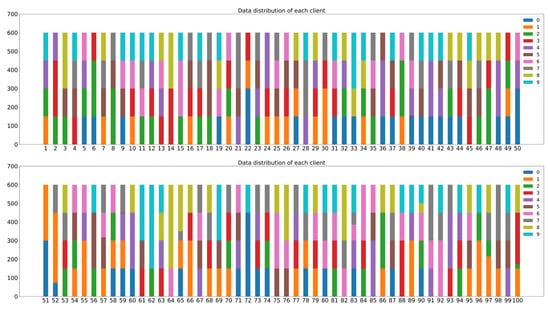

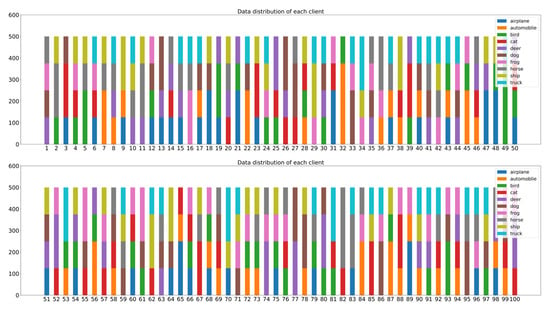

To simulate non-IID data scenarios, the training dataset was sorted by label and divided into 400 equal parts. During the FL training, each of the 100 participating clients randomly selects four parts. In Figure 12 and Figure 13, the bar charts show the label distribution of the MNIST and CIFAR-10 data held by the 100 clients. Different colors in the bar charts represent different label types in the datasets.

Figure 12.

Label distribution of MNIST dataset for each client.

Figure 13.

Label distribution of CIFAR-10 dataset for each client.
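The sort-and-shard partition described above can be sketched as follows. The function `shard_partition` is a hypothetical helper, and the sketch assumes that the 400 parts are dealt out without overlap, so that every part is used by exactly one client; the text only states that each client randomly selects four parts.

```python
import random


def shard_partition(labels, num_clients=100, shards_per_client=4, seed=0):
    """Return, for each client, the list of sample indices it holds."""
    num_shards = num_clients * shards_per_client
    # Sort sample indices by label so that each shard covers few labels.
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    shard_size = len(order) // num_shards
    shards = [order[s * shard_size:(s + 1) * shard_size] for s in range(num_shards)]
    # Shuffle shard ids and hand each client a disjoint group of shards.
    shard_ids = list(range(num_shards))
    random.Random(seed).shuffle(shard_ids)
    clients = []
    for c in range(num_clients):
        own = shard_ids[c * shards_per_client:(c + 1) * shards_per_client]
        clients.append([idx for s in own for idx in shards[s]])
    return clients


# Toy stand-in for a 10-class training set with 6000 samples per class.
labels = [i % 10 for i in range(60000)]
clients = shard_partition(labels)
print(len(clients), len(clients[0]), sorted({labels[i] for i in clients[0]}))
```

With perfectly balanced classes, as in this toy example, every shard contains a single label, so each client sees at most four labels. In MNIST the class sizes are not exactly equal, so a few shards straddle two labels.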

In the experimental setting, it is necessary to decide whether to sparsify the model parameters; in this experiment, a sparsity rate of 0.5 was selected. It is also necessary to decide whether to quantize the model parameter values from full-precision to half-precision floating-point numbers. Accordingly, three processing methods were used to set the model parameters in BCFL, which are listed in Table 2.

Table 2.

Model parameter processing settings for BCFL.
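To make the two operations concrete, the sketch below sparsifies a parameter vector at a rate of 0.5 and stores the surviving values as half-precision floats. It rests on assumptions that are not taken from this paper: sparsification keeps the entries with the largest magnitude, and each kept entry is serialized as a 32-bit index followed by a 16-bit float. The helper names are hypothetical.

```python
import struct


def sparsify(values, rate=0.5):
    """Keep the largest-magnitude entries; return sorted (index, value) pairs."""
    keep = len(values) - int(len(values) * rate)
    by_magnitude = sorted(range(len(values)), key=lambda i: abs(values[i]), reverse=True)
    return sorted((i, values[i]) for i in by_magnitude[:keep])


def quantize_fp16(pairs):
    """Serialize each pair as a uint32 index plus a half-precision value."""
    return b"".join(struct.pack("<Ie", i, v) for i, v in pairs)


def restore(blob, length):
    """Rebuild a dense vector; dropped entries are restored as 0.0."""
    dense = [0.0] * length
    for i, v in struct.iter_unpack("<Ie", blob):
        dense[i] = v
    return dense


params = [0.5, -2.0, 0.25, 1.0]
blob = quantize_fp16(sparsify(params))
print(len(blob), restore(blob, len(params)))
```

Storing an explicit index next to every value adds overhead, so the actual size reduction depends on the encoding chosen for the sparse file.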

5.5. Security Evaluation

The decentralized federated learning framework proposed in this paper starts from the design principles of the information flow security model and combines a variety of security mechanisms, including integrity protection, consensus mechanism protection, and decentralized storage, to ensure the integrity and security of model parameters while resisting various potential challenges and attacks.

- (1) Integrity protection

In accordance with the integrity protection principle in the information flow security model, all model parameters are first processed by the SHA256 hash algorithm, and the resulting hash values are stored in the blockchain. Before local updating, the model parameters downloaded from IPFS are hashed again and compared with the hash values stored in the blockchain. This method protects the integrity of the model parameters: if the model parameters are tampered with during transmission or storage, the hash values will not match, so the system can identify the tampering and help prevent data tampering attacks.

- (2) Consensus mechanism protection

PBFT is used as the consensus mechanism. It can tolerate failures or malicious behavior in fewer than 1/3 of the nodes [24], providing a high degree of security and fault tolerance. This helps prevent Byzantine attacks during the consensus process and ensures data consistency.

- (3) Decentralized storage

The real model parameters are stored on IPFS. Due to the characteristics of IPFS, the model parameters are stored in a distributed manner across multiple nodes, achieving decentralized storage. This storage method does not rely on a single entity and can enhance the system’s ability to resist single-point attacks.
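The fault tolerance of PBFT mentioned under consensus mechanism protection follows the standard bound that a network of n nodes tolerates f faulty nodes only if n ≥ 3f + 1. The short sketch below works this out for a few network sizes; the function names are illustrative and are not part of FISCO BCOS.

```python
def max_faulty_nodes(n):
    """Largest f such that n >= 3f + 1 (the standard PBFT bound)."""
    return (n - 1) // 3


def min_nodes_for(f):
    """Smallest network size that tolerates f faulty nodes."""
    return 3 * f + 1


for n in (3, 4, 7, 10):
    print(n, max_faulty_nodes(n))
```

For example, a four-node consortium chain tolerates one faulty node, while a three-node chain tolerates none.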

5.6. Experimental Results

The experimental results mainly include the accuracy rate and communication cost as well as the execution time for file transfer and reconstruction in federated learning models.

- (1) Accuracy and communication cost analysis

Experiments were conducted on two data distributions of the MNIST and CIFAR-10 datasets to verify the learning performance of the BCFL framework at the algorithmic level in FL. The most commonly used FL algorithm, FedAvg, was compared with BCFL under different parameter processing patterns. In the analysis of communication cost, only the transmission of model parameter files between clients was considered, where upstream communication refers to the transmission of model parameter files within the cluster, and downstream communication refers to the global model parameter file transmitted by the aggregator node to the head node of each cluster.

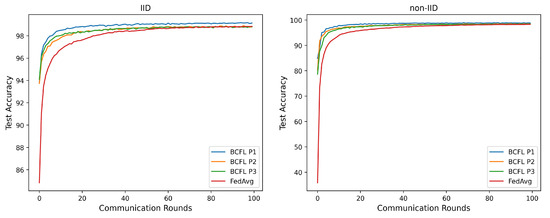

- 1. Experiment results of MNIST dataset

Figure 14.

Convergence of BCFL and FedAvg on MNIST dataset.

Table 3.

Performance of BCFL and FedAvg in different rounds on MNIST dataset.

- 2.

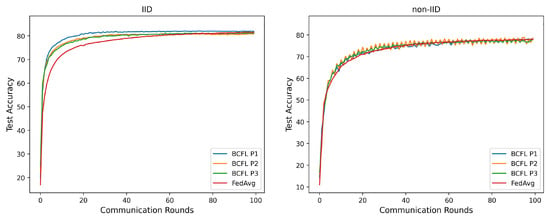

- Experiment results of CIFAR-10 dataset

Figure 15.

Convergence of BCFL and FedAvg on CIFAR-10 dataset.

Table 4.

Performance of BCFL and FedAvg in different rounds on CIFAR-10 dataset.

- 3. Experiment results analysis

In these two experiments, the accuracy and communication cost of different algorithms (BCFL(p1), BCFL(p2), BCFL(p3), and FedAvg) in different communication rounds (5th, 20th, and 100th rounds) on the MNIST and CIFAR-10 datasets were compared.

MNIST dataset:

Accuracy: As shown in Figure 14 and Table 3, in all rounds, BCFL(p1) had higher accuracy than the other algorithms in both IID and non-IID settings. BCFL(p2) and BCFL(p3) had slightly lower accuracy than BCFL(p1) in the same rounds. As the communication rounds increased, the accuracy of all algorithms improved. In the 100th round, the accuracy of BCFL(p1) reached 99.15% and 98.84% in the IID and non-IID settings, respectively, both higher than that of FedAvg.

Communication cost: As shown in Table 3, the BCFL algorithm demonstrated significant advantages in terms of communication cost. Compared with FedAvg, BCFL(p1), BCFL(p2), and BCFL(p3) all had significantly reduced communication costs, and BCFL(p3) had the lowest value. For the same number of communication rounds, the communication costs of FedAvg were 2, 2.76, and 4.17 times those of BCFL(p1), BCFL(p2), and BCFL(p3), respectively. In terms of downstream communication, the communication cost of the BCFL algorithm was reduced by factors of 9, 12.8, and 19.9 relative to FedAvg. In summary, the BCFL algorithm outperformed FedAvg in terms of communication cost.

CIFAR-10 dataset:

Accuracy: As shown in Figure 15 and Table 4, similar to the MNIST dataset, BCFL(p1) outperformed the other algorithms on different data distributions of the CIFAR-10 dataset. When there were fewer communication rounds, the BCFL algorithm had significantly higher accuracy than the FedAvg algorithm. For example, in the 5th round, the accuracy of the BCFL algorithm in the three patterns under IID was 73.06%, 71.15%, and 71.01%, all higher than that of FedAvg, while under non-IID, the accuracy of the BCFL algorithm was 58.77%, 58.98%, and 57.27%, respectively, also higher than that of FedAvg. As the communication rounds increased, the accuracy of FedAvg became roughly on par with that of BCFL. In the 100th round, the accuracy differences among BCFL(p1), BCFL(p2), BCFL(p3), and FedAvg under IID were small, with values of 81.98%, 80.82%, 81.20%, and 81.44%, respectively. Overall, under non-IID, BCFL performed slightly better than FedAvg, with BCFL(p1) achieving an accuracy of 78.41%, higher than that of FedAvg.

Communication cost: As shown in Table 4, in terms of communication cost, the BCFL algorithm had a certain advantage over the FedAvg algorithm on the CIFAR-10 dataset. Specifically, after 100 communication rounds, FedAvg produced a total upstream communication cost of up to 47.14 GB, which was 2, 2.29, and 3.29 times that of BCFL(p1), BCFL(p2), and BCFL(p3), respectively. In the downstream communication link, FedAvg also generated a communication cost of 47.14 GB, which was 10, 11.44, and 16.43 times that of BCFL(p1), BCFL(p2), and BCFL(p3), respectively. Overall, the total upstream and downstream communication cost of FedAvg was 3.33, 3.81, and 5.47 times that of the three BCFL patterns.
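The total ratios quoted for CIFAR-10 can be cross-checked from the upstream and downstream ratios alone, because FedAvg's upstream and downstream costs are equal (47.14 GB each). If FedAvg costs u times as much upstream and d times as much downstream, the combined ratio is 2 / (1/u + 1/d). The helper below is an illustrative check and is not part of the experiment code.

```python
def combined_ratio(upstream_ratio, downstream_ratio):
    """FedAvg-to-BCFL ratio of total cost when FedAvg's two costs are equal."""
    return 2 / (1 / upstream_ratio + 1 / downstream_ratio)


for name, u, d in [("p1", 2, 10), ("p2", 2.29, 11.44), ("p3", 3.29, 16.43)]:
    print(name, round(combined_ratio(u, d), 2))
```

The results agree with the reported 3.33, 3.81, and 5.47 to within about 0.01; the small differences come from the rounding of the per-direction ratios.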

- (2) Time consumption analysis

In addition to accuracy and communication cost, execution time is also a very important indicator for evaluating the performance of the BCFL algorithm. In the BCFL algorithm framework, the model parameter file is processed by sparsity and quantization to obtain a model file that takes up less memory, which is then passed on to other clients. After receiving the processed model parameter file, the client parses and restores it into usable model parameters for local updates. During this process, the time required for a client node to transfer the model parameters to another node is reduced, but the time required for processing the model parameter file is increased. This section analyzes the time required to transmit model parameter files between clients and to process the files in order to further demonstrate the superiority of the BCFL algorithm in communication.

In this experiment, the execution time was defined as shown in the following formula:

Here, the terms are, respectively, the time required by the client to quantize the model parameters, the time required by the client to sparsify the model parameter file, the time required to transmit the model parameter file between clients over the network, and the time required by the client to restore the model parameter file after receiving it. The following mainly analyzes the execution time of different model files under different parameter processing methods.

The execution times of the model parameter files generated using the BCFL algorithm under different patterns for the MNIST and CIFAR-10 datasets are presented in Table 5. Here, file type p1 indicates that the BCFL algorithm did not perform sparsity or quantization operations on the model file; file type p2 indicates that the algorithm performed sparsity and quantization operations on the model file; and file type p3 indicates that the algorithm additionally performed compression operations. Because convolutional neural networks of different sizes were used for the two datasets, the model parameter files produced for the MNIST dataset were smaller than those for the CIFAR-10 dataset. From Table 5, it can be observed that the quantization operation is very fast, accounting for only a small portion of the overall execution time. The time required for the sparsity operation increased with the number of model parameters, but the sparsity operation reduced the size of the model file, thereby decreasing the transmission time. Most of the execution time is spent on transmitting the model parameter file, and this time usually increases when the network bandwidth is congested. This experiment simulated a stable network transmission speed of 1 Mbps. For the MNIST model files, the sparsity and quantization operations reduced the overall execution time. For the CIFAR-10 model files, however, the large number of model parameters meant that the sparsity operation took a noticeable amount of time and did not significantly reduce the overall execution time under ideal network conditions. When combined with the quantization operation, the size of the model file was greatly reduced, which saved considerable transmission time. Compared with CIFAR-10 (p2), CIFAR-10 (p3) reduced the overall execution time by about 10 s.

Table 5.

Analysis of execution time.
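The trade-off between processing time and transmission time can be explored with a small calculation. The sketch assumes that the overall execution time is the sum of the four components defined above and that 1 Mbps means 10^6 bits per second; the function names and the sample numbers are illustrative and are not taken from Table 5.

```python
def transfer_seconds(file_bytes, bandwidth_bps=1_000_000):
    """Time to send a file over a link of the given speed (bits per second)."""
    return file_bytes * 8 / bandwidth_bps


def execution_time(t_quantize, t_sparsify, file_bytes, t_restore,
                   bandwidth_bps=1_000_000):
    """Assumed total: quantize + sparsify + transmit + restore (seconds)."""
    return (t_quantize + t_sparsify
            + transfer_seconds(file_bytes, bandwidth_bps) + t_restore)


# Illustrative numbers only: a 1 MB file sent as is, versus a processed
# 0.5 MB file that costs one extra second of processing in total.
print(execution_time(0.0, 0.0, 1_000_000, 0.0))
print(execution_time(0.25, 0.5, 500_000, 0.25))
```

At 1 Mbps every megabyte removed from the file saves 8 s of transmission, so processing pays off whenever it costs less than the transmission time it removes.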

6. Conclusions and Future Work

In response to the current challenges faced by federated learning, we propose an FL framework called BCFL based on blockchain and cluster training. This paper proposes a federated learning algorithm based on odd–even round cluster training. By dividing clients into clusters and adopting a partially serialized training method within clusters, we can accelerate the convergence of the model. Before transmitting the parameters, the model file is sparsified and quantized to reduce communication costs and improve the communication efficiency of FL. The BCFL architecture no longer relies on a central server and uses a load-balancing algorithm to schedule the client responsible for aggregation in each round. It introduces a consortium chain to record the FL process and optimizes the high overhead problem of consortium chain storage by combining with IPFS. Experiments on the MNIST and CIFAR-10 datasets demonstrate that the proposed framework excels in accuracy and communication efficiency, and time analysis shows that the proposed model file processing scheme is beneficial to improving the transmission efficiency of FL.

Although this paper makes progress in optimizing the performance of FL and in designing a decentralized architecture, there are still many directions for further research and improvement. In future research, the following aspects can be considered:

- Optimization of client clustering partitioning strategies: In the algorithm proposed in this paper, client cluster partitioning is based on a simple random grouping strategy. Future research can explore more optimal client cluster partitioning strategies by considering specific application requirements and data distributions. This can further enhance the speed and accuracy of model convergence;

- Strengthening of security mechanisms: While the BCFL architecture proposed in this paper exhibits a high level of trustworthiness and robustness, it still has certain potential security risks and vulnerabilities. The utilization of blockchain technology in federated learning may face issues related to the abuse of decentralized authority. Additionally, consensus mechanisms in blockchain technology can be susceptible to attacks, such as the 51% attack, and smart contracts may contain security vulnerabilities that could be exploited by malicious actors [25]. Future research should focus on implementing effective oversight and governance mechanisms, continually improving and updating consensus mechanisms, and conducting security audits and vulnerability fixes for smart contract development—all of which are aimed at strengthening the system’s security mechanisms;

- Adaptation to heterogeneous devices and resource constraints: In practical application scenarios, clients participating in federated learning training may possess varying computational capabilities and resource constraints, such as IoT devices and edge computing systems. Future research can focus on several aspects to enhance the adaptability and practicality of federated learning algorithms. These aspects include reducing communication costs arising from limited device bandwidth, optimizing resource allocation, and enhancing the ability to combat malicious participants [26];

- Cross-domain applications of FL: This paper primarily focuses on the fundamental issues and methods of federated learning without delving into specific application domains. FL technology holds extensive potential applications in fields such as healthcare, finance, and transportation, among others. In the future, it will be possible to design cross-domain FL methods and application frameworks tailored to the characteristics and requirements of various domains.

Author Contributions

Conceptualization, Y.Y. and Z.L.; Methodology, Y.L., Y.Y., Z.L. and Z.Z.; Software, Z.L.; Validation, Y.Y. and Z.L.; Formal Analysis, C.Y.; Investigation, J.Z.; Data Curation, Z.L.; Writing—Original Draft, Y.Y.; Writing—Review and Editing, Y.L., Y.Y. and C.Y.; Visualization, Z.L.; Supervision, Y.L.; Funding Acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Shanghai Science and Technology Innovation Action Plan Project (No. 22511100700).

Data Availability Statement

The data used in this study are the MNIST and CIFAR-10 datasets.

Conflicts of Interest

The authors declare no conflict of interest.

References

- The Mobile Economy 2023. Available online: https://data.gsmaintelligence.com/research/research/research-2023/the-mobile-economy-2023 (accessed on 10 May 2023).

- Complete Guide to GDPR Compliance. Available online: https://gdpr.eu/ (accessed on 12 May 2023).

- Yin, X.; Zhu, Y.; Hu, J. A comprehensive survey of privacy-preserving federated learning: A taxonomy, review, and future directions. ACM Comput. Surv. (CSUR) 2021, 54, 1–36.

- Kouhizadeh, M.; Sarkis, J. Blockchain practices, potentials, and perspectives in greening supply chains. Sustainability 2018, 10, 3652.

- McMahan, B.; Moore, E.; Ramage, D.; Hampson, S.; Arcas, B.A. Communication-efficient learning of deep networks from decentralized data. In Artificial Intelligence and Statistics; PMLR: New York, NY, USA, 2017; pp. 1273–1282.

- Konecny, J.; McMahan, H.B.; Yu, F.X.; Richtárik, P.; Suresh, A.T.; Bacon, D. Federated learning: Strategies for improving communication efficiency. arXiv 2016, arXiv:1610.05492.

- Briggs, C.; Fan, Z.; Andras, P. Federated learning with hierarchical clustering of local updates to improve training on non-IID data. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–9.

- Aji, A.F.; Heafield, K. Sparse communication for distributed gradient descent. arXiv 2017, arXiv:1704.05021.

- Jhunjhunwala, D.; Gadhikar, A.; Joshi, G.; Eldar, Y.C. Adaptive quantization of model updates for communication-efficient federated learning. In Proceedings of the ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Toronto, ON, Canada, 6–11 June 2021; pp. 3110–3114.

- Li, C.; Li, G.; Varshney, P.K. Communication-efficient federated learning based on compressed sensing. IEEE Internet Things J. 2021, 8, 15531–15541.

- Sattler, F.; Wiedemann, S.; Müller, K.R.; Samek, W. Robust and communication-efficient federated learning from non-iid data. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 3400–3413.

- Haddadpour, F.; Kamani, M.M.; Mokhtari, A.; Mahdavi, M. Federated learning with compression: Unified analysis and sharp guarantees. PMLR 2021, 130, 2350–2358.

- Seol, M.; Kim, T. Performance Enhancement in Federated Learning by Reducing Class Imbalance of Non-IID Data. Sensors 2023, 23, 1152.

- Zhao, L.; Huang, J. A distribution information sharing federated learning approach for medical image data. Complex Intell. Syst. 2023, 1–12.

- Chen, F.; Wan, H.; Cai, H.; Cheng, G. Machine learning in/for blockchain: Future and challenges. Can. J. Stat. 2021, 49, 1364–1382.

- Tsai, C.W.; Chen, Y.P.; Tang, T.C.; Luo, Y.C. An efficient parallel machine learning-based blockchain framework. ICT Express 2021, 7, 300–307.

- Lo, S.K.; Liu, Y.; Lu, Q.; Wang, C.; Xu, X.; Paik, H.Y.; Zhu, L. Toward trustworthy AI: Blockchain-based architecture design for accountability and fairness of federated learning systems. IEEE Internet Things J. 2022, 10, 3276–3284.

- Jiang, T.; Shen, G.; Guo, C.; Cui, Y.; Xie, B. BFLS: Blockchain and Federated Learning for sharing threat detection models as Cyber Threat Intelligence. Comput. Netw. 2023, 224, 109604.

- Zhang, W.; Wang, Z.; Li, X. Blockchain-based decentralized federated transfer learning methodology for collaborative machinery fault diagnosis. Reliab. Eng. Syst. Saf. 2023, 229, 108885.

- Zhang, Y.; Wu, Y.; Li, T.; Zhou, H.; Chen, Y. Vertical Federated Learning Based on Consortium Blockchain for Data Sharing in Mobile Edge Computing. CMES-Comput. Model. Eng. Sci. 2023, 137, 345–361.

- Fu, X.; Peng, R.; Yuan, W.; Ding, T.; Zhang, Z.; Yu, P.; Kadoch, M. Federated learning-based resource management with blockchain trust assurance in smart IoT. Electronics 2023, 12, 1034.

- Li, Y.; Liu, Z.; Huang, Y.; Xu, P. FedOES: An Efficient Federated Learning Approach. In Proceedings of the 2023 3rd International Conference on Neural Networks, Information and Communication Engineering (NNICE), Guangzhou, China, 24–26 February 2023; pp. 135–139.

- Davis, T.A.; Hu, Y. The University of Florida sparse matrix collection. ACM Trans. Math. Softw. 2011, 38, 1–25.

- Zheng, Z.; Xie, S.; Dai, H.; Chen, X.; Wang, H. An overview of blockchain technology: Architecture, consensus, and future trends. In Proceedings of the 2017 IEEE International Congress on Big Data (BigData Congress), Honolulu, HI, USA, 25–30 June 2017; pp. 557–564.

- Li, X.; Jiang, P.; Chen, T.; Luo, X.; Wen, Q. A survey on the security of blockchain systems. Future Gener. Comput. Syst. 2020, 107, 841–853.

- Lim, W.Y.B.; Luong, N.C.; Hoang, D.T.; Jiao, Y.; Liang, Y.C.; Yang, Q.; Niyato, D.; Miao, C. Federated learning in mobile edge networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2020, 22, 2031–2063.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).