1. Introduction

Modern hairstyling has evolved beyond its foundational role in public hygiene to become a symbolic expression of personal identity and self-expression [1]. Hairstyling techniques have therefore adapted to contemporary trends: while traditional elements such as cuts and perms remain relevant, the ability to create styles suited to an individual’s hair condition has become essential [2]. Achieving this requires skilled hairstylists trained with tools such as scissors and clippers. During the COVID-19 pandemic, however, establishing an effective remote hair design education system proved difficult. Most existing research has focused on the visual aspects of hairstyling [3,4,5,6], leaving practical, hands-on remote hair design training largely unexplored. This paper introduces a VR (virtual reality) hair design technology that removes the constraints of time and space and supports contactless beauty hair education.

The goal of this study is to provide remote hair design education that is not limited by physical presence, allowing hairstylists and learners to practice hairstyling techniques together in a virtual environment. Because the proposed model runs in VR, it requires no physical materials or tools, providing a safe and flexible learning platform that is unaffected by chemical treatments or specific hair conditions [7]. Traditional beauty hair education has relied either on passive online formats, such as prerecorded videos, or on offline sessions. The COVID-19 situation [8] has made the need for an interactive, collaborative platform for contact-free beauty hair education more urgent. Our approach addresses this need by using VR to create an immersive, interactive, and realistic learning environment for both educators and students.

Furthermore, the technology supports proactive hair design through personalized scalp and hair modeling, allowing hairdressers to create hairstyles suited to an individual’s head shape and hair characteristics. By delivering immersive, realistic experiences through VR, the approach also aligns with the Digital New Deal, a principal pillar of Korea’s economic recovery strategy [9]. The proposed solution has broad potential for practical applications [10]. The main contributions of this study are as follows.

First, we present a virtual hair design technology that avoids the limitations imposed by chemical substances and individual hair conditions, offering greater flexibility in creating a wide range of hairstyles.

Second, the technology enables cost-effective beauty hair education for salons by reducing dependence on physical materials and tools, making training more accessible and affordable.

Third, it provides a collaborative multiplayer framework that overcomes barriers of time and space and strengthens interaction between educators and learners.

Fourth, hairdressers can offer proactive, personalized hair design based on scalp and hair modeling, tailored to each client’s preferences and needs.

Finally, this work applies XR (extended reality) technology to contactless beauty hair education, offering a new mode of remote learning and hands-on training in the beauty sector.

2. Related Work

Prior research has examined hair cutting techniques that convey the fluidity, flexibility, and vitality of hair, and has defined haircuts and their style variations. However, modules capable of simulating these techniques are lacking, and combining the intricate, aesthetic domain of hair design with XR systems remains a significant challenge. This study uses an XR system in both VR and AR (augmented reality) configurations, and its multiplayer feature supports contactless beauty hair education with interaction among multiple instructors and learners. Traditional hair beauty education relies heavily on face-to-face instruction because hands-on materials and tools are required; in contrast, the proposed technique provides tailored education with real-time feedback. Standard hairdressing also requires physical salon visits, which often limits clients to the expertise and creativity of locally available hairstylists. With our method, a client’s scalp and hair data are sent to the hairstylist, who can design an individualized hairstyle remotely while incorporating real-time feedback from the client.

The rapid progress of immersive technologies, combined with the global response to the COVID-19 pandemic, has accelerated the adoption of extended reality (XR) [11]. XR technology has been applied and studied in many domains, including tourism [12], education [13], gaming [14], and healthcare [15,16]. Although the terms virtual reality, augmented reality, and mixed reality remain in use, XR is the more comprehensive and inclusive term. XR has been adopted in social sectors such as civil defense, aviation, and emergency preparedness, as well as in education [17]. VR in particular is driving advances in sectors including automotive, commerce, medicine, cultural education, healthcare, and industry. For example, one study [18] used AR displays to deliver structured information to drivers, and another [19] used VR to evaluate packaging products. In education, VR has supported gamified learning experiences [20] and has had a significant impact on cultural learning [21]. The potential of VR in healthcare has been explored through experimental VR scenarios [22,23]. Other studies have investigated VR as a design instrument [24] and developed VR-based product configuration methods [25].

Considerable research has addressed hairstyle recommendation, hairdressing, and smart mirror systems that suggest hairstyles [26,27,28]. However, contactless beauty hair training in a multiplayer setting has not yet been addressed.

Much of the existing literature focuses on hair simulation, emphasizing aspects such as hair motion and modeling rather than compatibility with VR environments [29,30,31]. These works tend to prioritize visual animation over hands-on experience. For instance, efficient sketch-based hairstyle modeling methods have been developed, but they do not let users interact with the hair directly [32]. Bringing hands-on VR interaction into practical training is therefore a pressing need. Android applications offer virtual hairstyle previews tailored to a user’s facial features, but they use AR only for visual display and do not let users train or style hair themselves [33]. Similarly, a Raspberry Pi-based smart mirror system presents only a fixed set of hairstyles, preventing users from creating new styles or replicating the hairdressing training experience [34].

3. Materials and Methods

3.1. System Overview

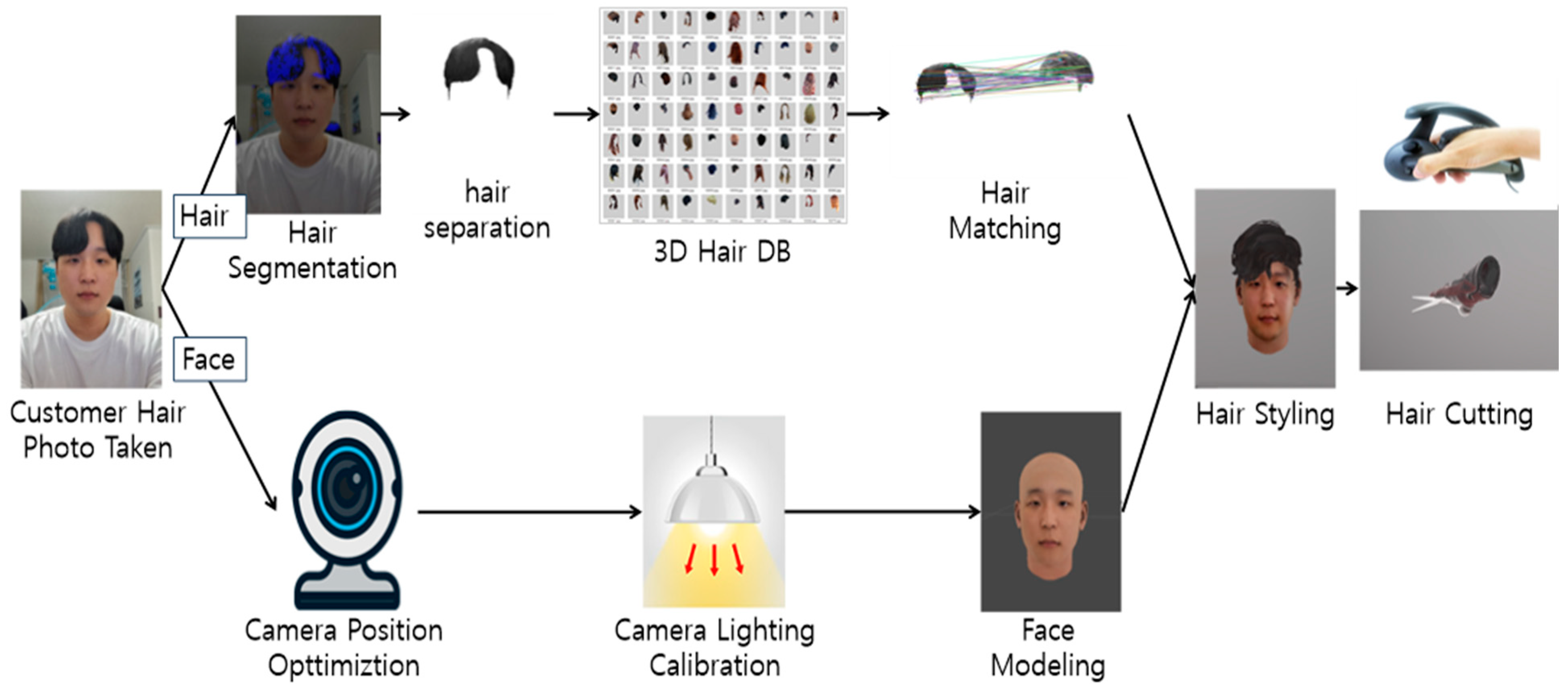

To support remote hairstyling, our system captures a photograph of the user with a camera. The hair region is extracted from the input image using a Fully Convolutional Network (FCN) built on the Figaro 1K dataset [35]. An FCN replaces the fully connected layers of a conventional CNN with convolutional layers and upsamples the resulting feature maps, preserving the spatial information needed for pixel-wise segmentation [36]. In addition, our system uses Avatar Maker [37] to generate a 3D facial model of the user from the photograph.

In our study, hairstyles common among Korean men and women were grouped into 14 categories, and a corresponding 3D hair model was built for each category according to preset guidelines. To find the best match, the SURF (Speeded-Up Robust Features) algorithm compares the user’s uploaded photo with a curated database of 140 hairstyle images. The 3D model of the best-matching category among the 14 is then loaded from our 3D hair model database for design. Interactions with the VR head-mounted display (HMD) are defined so that multiple users can collaborate in the VR environment, and the system supports both hair cutting and perm styling.

Figure 1 offers a schematic representation of the preliminary stage, encompassing processes such as photo capture, hair segmentation, 3D hair matching, face modeling, and interactive hairstyling.

3.2. Hair Style Segmentation and Hair Matching

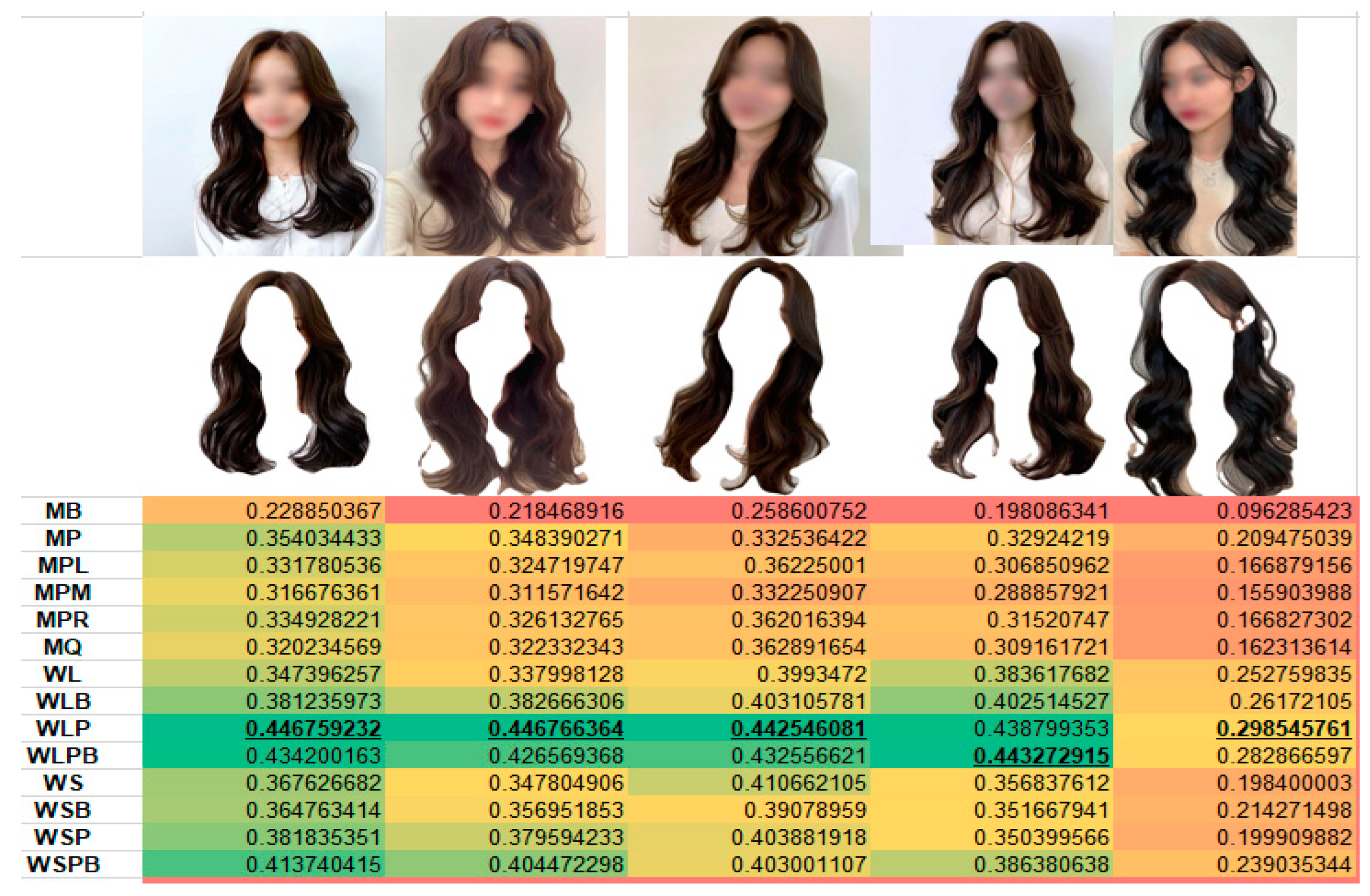

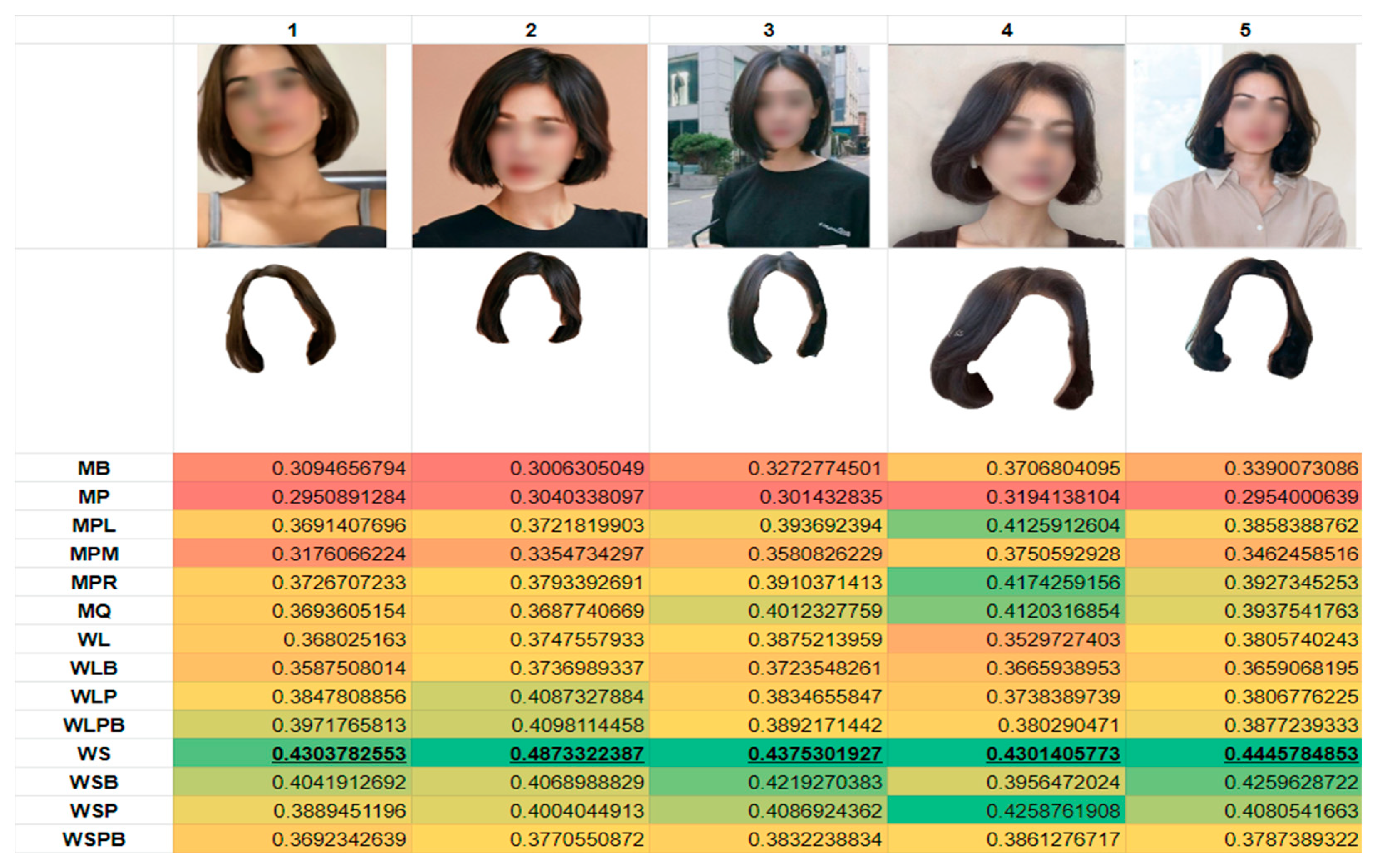

We established 14 categories to classify Korean hairstyles systematically, based on four criteria: gender, presence of bangs, hair length, and hair condition. To reflect the distinct attributes of each gender, we divided the categories into six for men and eight for women. The men’s categories are basic two-block (MB), updo (MQ), perm bangs with a left, right, or center part (MPL, MPR, and MPM), and general perm bangs (MP). The women’s categories are distinguished by length (long or short) and further subdivided by the presence of a perm and of bangs. These categories served as our framework for analyzing prevalent hairstyles in Korea.

We first isolated the hair region from each input photograph. Using SURF, we then extracted keypoints from the hair-only image and compared them with the keypoints of the database images, computing an average score for each category from the matched keypoints. This assigned each input to one of the predefined categories described above and in our earlier research, as illustrated in Figure 2 [38].
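The category decision described above (average the per-image scores within each category, then take the best average) can be sketched in a few lines. This is a minimal illustration, not the authors’ code; the function name and the `(category, score)` input format are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def classify_by_category_average(image_scores):
    """Average per-image matching scores by category and return the best one.

    image_scores: iterable of (category, score) pairs, one per database image
    (hypothetical format; e.g. 10 images per category in the paper's database).
    """
    by_category = defaultdict(list)
    for category, score in image_scores:
        by_category[category].append(score)
    averages = {cat: mean(scores) for cat, scores in by_category.items()}
    best = max(averages, key=averages.get)  # category with the highest mean score
    return best, averages
```

Averaging over several reference images per category, rather than taking the single best image, makes the decision less sensitive to one unusually similar or dissimilar reference photo.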

For each input photo, we extracted keypoints from the segmented hair region and computed a matching score from the ratio between the matched keypoints of the input photo and those of the database images; this score drives the hairstyle recommendation. SURF detects keypoints with the determinant of an approximated Hessian matrix: the second-order Gaussian derivatives underlying the LoG (Laplacian of Gaussian) are approximated with box filters, which can be evaluated in constant time using integral images. A point is accepted as a keypoint when the determinant exceeds a set threshold and is the largest among its neighbors in space and scale. The resulting category scores range from 0 to 1, where 1.0 denotes the best-matched category and 0 the worst. We used PyTorch 1.11.0 [39] for hair segmentation and OpenCV 3.4.2.16 [40] for matching. The evaluation set contained 3 hair images per category, 42 images in total, which we matched using Accuracy Tests 1 and 2, as detailed in Section 4.2.
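To make the box-filter and integral-image idea concrete, the following pure-Python sketch computes the approximate Hessian determinant at a single 9×9 filter scale and keeps thresholded local maxima. It is a simplified illustration under stated assumptions: real SURF searches several scales and suppresses non-maxima in a 3×3×3 space–scale neighborhood, and its response normalization differs, so the threshold values here are not comparable to OpenCV’s. In practice the paper’s setup would use OpenCV’s contrib detector (`cv2.xfeatures2d.SURF_create`, whose `hessianThreshold` parameter is presumably the threshold of 500 discussed in Section 4.2).

```python
def integral_image(img):
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def box_sum(ii, x0, y0, x1, y1):
    """Sum over x0 <= x < x1, y0 <= y < y1: four lookups, any box size."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]


def hessian_det(ii, cx, cy):
    """Approximate det(H) at (cx, cy) with SURF-style 9x9 box filters."""
    # Dyy: three 5-wide x 3-tall lobes stacked vertically, weights +1, -2, +1.
    dyy = (box_sum(ii, cx - 2, cy - 4, cx + 3, cy - 1)
           - 2 * box_sum(ii, cx - 2, cy - 1, cx + 3, cy + 2)
           + box_sum(ii, cx - 2, cy + 2, cx + 3, cy + 5))
    # Dxx: the same filter rotated by 90 degrees.
    dxx = (box_sum(ii, cx - 4, cy - 2, cx - 1, cy + 3)
           - 2 * box_sum(ii, cx - 1, cy - 2, cx + 2, cy + 3)
           + box_sum(ii, cx + 2, cy - 2, cx + 5, cy + 3))
    # Dxy: four 3x3 lobes in the diagonal quadrants, weights +1 / -1.
    dxy = (box_sum(ii, cx - 3, cy - 3, cx, cy)
           + box_sum(ii, cx + 1, cy + 1, cx + 4, cy + 4)
           - box_sum(ii, cx + 1, cy - 3, cx + 4, cy)
           - box_sum(ii, cx - 3, cy + 1, cx, cy + 4))
    area = 81.0  # normalize by filter area
    dxx, dyy, dxy = dxx / area, dyy / area, dxy / area
    return dxx * dyy - (0.9 * dxy) ** 2  # 0.9 offsets the box approximation


def detect(img, threshold):
    """Single-scale keypoints: det(H) above threshold and above all 8 neighbors."""
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    m = 5  # margin so every filter lobe stays inside the image
    resp = {(x, y): hessian_det(ii, x, y)
            for y in range(m, h - m) for x in range(m, w - m)}
    keypoints = []
    for (x, y), r in resp.items():
        if r <= threshold:
            continue
        neighbors = [resp.get((x + dx, y + dy), float("-inf"))
                     for dx in (-1, 0, 1) for dy in (-1, 0, 1) if (dx, dy) != (0, 0)]
        if r > max(neighbors):
            keypoints.append((x, y))
    return keypoints
```

A small bright blob yields a strong positive determinant at its center, while a uniform region yields zero, which is why hair regions with many curls and edges produce more keypoints than smooth ones.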

3.3. Hair Style Modeling and Shading

To model a hairstyle, we first separated the top and back regions of the head at the points where the scalp’s hair parameters change most noticeably, which typically lie in the dominant region at the back. The back region was further divided into upper and lower sections, and, viewed from behind, the sides were split into left and right about the center line. This yielded five guide sections that keep the shape and density of the hair strands consistent: the lower back of the head, the left side, the right side, the upper back of the head, and the top.

Hair shading determines the final hair color from the brightness and reflective properties of individual strands. Here, brightness describes the intensity of the color, while reflection describes how hair scatters and reflects light. Unlike typical objects, hair has an intrinsic color and both reflects and scatters light, so it requires a shading technique different from conventional color application. As shown in the first column of Figure 3, a standard shader darkened the areas facing away from direct light. The second column shows Unity’s standard shader with an adjusted “flatness” parameter, which produced a more uniform, 2D-like appearance with less intense shading. This adjustment gave a more realistic portrayal of hair and emphasized the layering of individual strands, which is difficult to achieve with conventional shaders.
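The effect of a “flatness” control can be illustrated by blending Lambert diffuse lighting toward a constant, unlit intensity. This is a hypothetical sketch of the concept only; we do not claim it reproduces how the shader used in this study computes its flatness parameter, and the function names are illustrative.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def flat_shade(base_rgb, normal, light_dir, flatness):
    """Blend Lambert diffuse toward an unlit (flat) color.

    normal, light_dir: unit vectors; light_dir points from the surface to the light.
    flatness: 0.0 = pure Lambert, 1.0 = fully flat (no darkening away from light).
    """
    lambert = max(0.0, dot(normal, light_dir))        # standard diffuse term
    intensity = (1.0 - flatness) * lambert + flatness  # linear blend toward 1.0
    return tuple(c * intensity for c in base_rgb)
```

With `flatness = 0`, strands facing away from the light go black, matching the darkening seen with a standard shader; raising `flatness` lifts those regions toward the base hair color while keeping some lighting variation.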

Users can also select a color with a simple mouse click and, once the color has been extracted, exit the color-selection mode by pressing the spacebar.

Figure 3 shows the various hair dyeing models.

3.4. Hair Styling Interaction

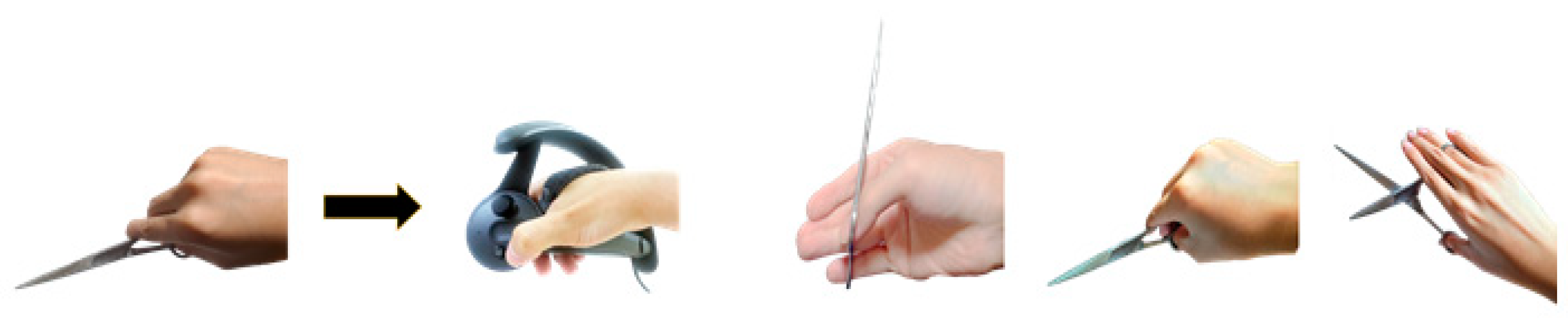

To emulate real beauty procedures in VR, the controller’s finger tracking must correspond to the movements required for hair cutting, as shown in Figure 4. With the ring finger placed in the holder attached to the hair scissors and the thumb secured in the bottom holder, users can open and close the scissors through approximately 90°.
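A minimal sketch of how a finger-curl reading could drive the roughly 90° scissor motion and register individual cuts. It assumes the controller reports a ring-finger curl in [0, 1] (0 = open hand); the thresholds and names are hypothetical, and hysteresis is used so that one squeeze produces exactly one cut.

```python
MAX_OPEN_DEG = 90.0  # approximate opening range described in the text


def blade_angle(curl):
    """Map finger curl in [0, 1] to blade opening in degrees (0 curl = fully open)."""
    curl = min(max(curl, 0.0), 1.0)
    return (1.0 - curl) * MAX_OPEN_DEG


class ScissorCutDetector:
    """Emits one cut per close; the blades must reopen past reopen_deg to re-arm."""

    def __init__(self, close_deg=10.0, reopen_deg=45.0):
        self.close_deg = close_deg
        self.reopen_deg = reopen_deg
        self.armed = True  # blades open enough to register the next cut

    def update(self, curl):
        """Call once per frame; returns True on the frame a cut happens."""
        angle = blade_angle(curl)
        if self.armed and angle <= self.close_deg:
            self.armed = False
            return True
        if not self.armed and angle >= self.reopen_deg:
            self.armed = True
        return False
```

Usage: feeding the per-frame curl values `[0, 0.5, 0.95, 0.95, 0.2, 0.95]` yields two cuts, because the blades stay closed for two frames and only re-arm after opening again.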

We provide a binding definition file for the VR interactions defined in this study. The binding action mappings first declare variables for Unity 3D [41] and SteamVR [42] inputs and then apply their values. For hair cutting, actions were distinguished by rotation axis and by how the motion is regulated. The actions describe button press and release events as well as continuous presses, and they specify the control range of each axis for precise movement.
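The press, release, and continuous-press patterns above can be sketched as edge detection on a per-frame boolean, plus a dead zone that limits the usable range of an analog axis. This mirrors the idea behind SteamVR’s boolean actions (state-down, state-up, and held queries) but is a standalone, hypothetical illustration, not the SteamVR API; the dead-zone value is an assumption.

```python
class DigitalAction:
    """Tracks a boolean input and exposes press/release edges and the held state."""

    def __init__(self):
        self._prev = False
        self._curr = False

    def update(self, pressed):
        """Call once per frame with the raw button state."""
        self._prev, self._curr = self._curr, bool(pressed)

    @property
    def down(self):  # pressed this frame
        return self._curr and not self._prev

    @property
    def up(self):  # released this frame
        return self._prev and not self._curr

    @property
    def held(self):  # continuous press
        return self._curr


def apply_dead_zone(value, dead_zone=0.15):
    """Rescale a raw axis value in [-1, 1] so small deflections are ignored."""
    if abs(value) < dead_zone:
        return 0.0
    sign = 1.0 if value > 0 else -1.0
    return sign * (abs(value) - dead_zone) / (1.0 - dead_zone)
```

Separating edges from the held state lets a single trigger both start an action (on `down`) and sustain it (while `held`), which is how a continuous operation such as clipper pressure can coexist with one-shot selections.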

Users pick up and handle beauty tools with the controller trigger (Figure 5a). UI interaction connects laser pointer events to UI elements through a Box Collider and overridden event methods, so interactions are triggered when the pointer collides with an element, when it stops colliding, and when the trigger is pressed. Pressing the button shown in Figure 5b performs cutting on the hair model, pressing button B (Figure 5c) opens the tool selection UI, and the joystick (Figure 5d) rotates the tool. The pressure applied to the touchpad (Figure 5e), which matters for tools such as clippers, is reported as a value between 0.0 and 1.0.

This research introduced a user interface for selecting beauty tools, including thinning scissors, blunt scissors, perm rods, clippers, and blow dryers, through ray casting. We also added a ring-finger function to detect the cutting motion and implemented distinct cutting actions for thinning and blunt scissors. The hair model was given a layered structure so that the four national beauty test cuts (Spaniel, Isadora, graduation, and layered) can be reproduced accurately with shape model algorithms. These controller interactions are discussed in detail in our previous publication [43].
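Ray-casting tool selection against box colliders reduces to a ray/axis-aligned-box intersection test. The sketch below uses the standard slab method and picks the nearest hit; the button layout and names are hypothetical, and in Unity this work is done by the physics raycast rather than by hand.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: distance t >= 0 along the ray to an axis-aligned box, or None."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:  # ray parallel to this slab
            if o < lo or o > hi:
                return None
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return None
    return t_near


def pick_button(origin, direction, buttons):
    """buttons: {name: (box_min, box_max)}; return the nearest button hit, or None."""
    best_name, best_t = None, float("inf")
    for name, (lo, hi) in buttons.items():
        t = ray_hits_box(origin, direction, lo, hi)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name
```

Choosing the nearest hit matters when UI panels overlap along the ray: the laser pointer should select the element the user actually sees in front.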

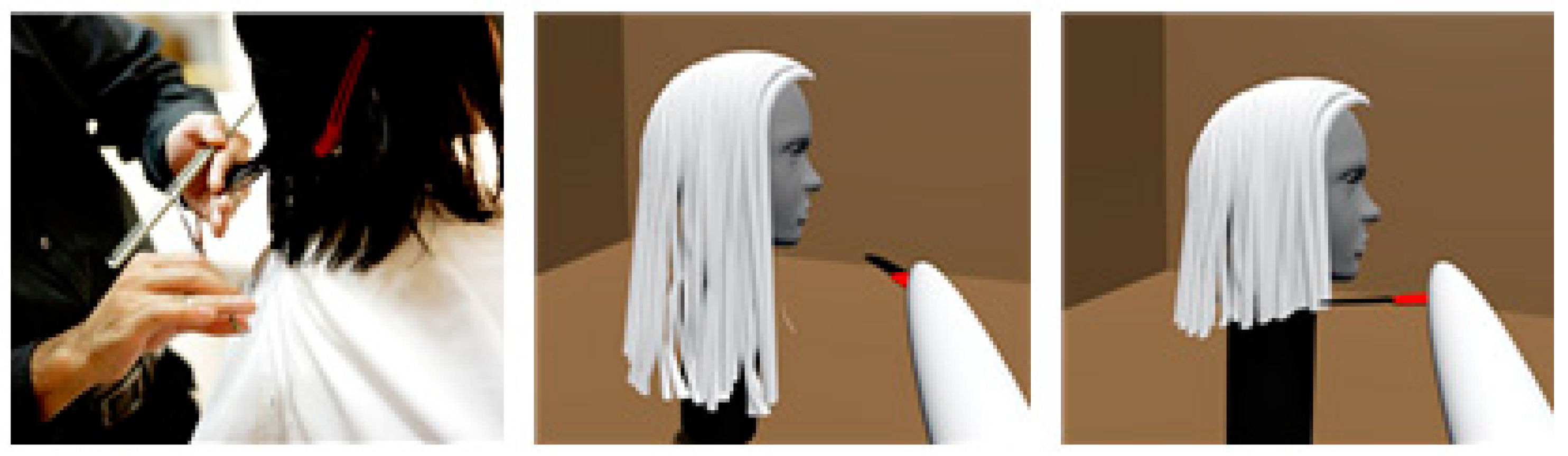

This study used BzSoft’s Mesh Slicer [44] to cut hair with a mesh-slicing approach. Mesh Slicer splits an object’s mesh where it is contacted by a collider; the split parts are re-triangulated and optimized, and the cut faces are filled with a specified material to form new, validated meshes [45]. We implemented this with an object named “knife”: by adjusting its distance and rotation, the mesh is sliced at the observed contact position. The haircut function is illustrated in Figure 6.
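We do not know Mesh Slicer’s internals, but the core of plane-based mesh slicing can be sketched generically: classify each triangle’s vertices by their signed distance to the cutting plane and insert intersection points on edges that cross it. The sketch below splits one triangle into the polygons on either side (a Sutherland–Hodgman-style clip) and fan-triangulates the result; cap filling and mesh optimization are omitted.

```python
def signed_distance(p, plane_point, plane_normal):
    return sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, plane_normal))


def lerp(a, b, t):
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))


def split_triangle(tri, plane_point, plane_normal):
    """Split one triangle by a plane; return (positive_side, negative_side) polygons."""
    pos, neg = [], []
    for i in range(3):
        a, b = tri[i], tri[(i + 1) % 3]
        da = signed_distance(a, plane_point, plane_normal)
        db = signed_distance(b, plane_point, plane_normal)
        (pos if da >= 0 else neg).append(a)
        if (da >= 0) != (db >= 0):  # edge crosses the plane
            hit = lerp(a, b, da / (da - db))  # da - db is never 0 here
            pos.append(hit)
            neg.append(hit)
    return pos, neg


def fan_triangulate(poly):
    """Turn a convex polygon (vertex list) into triangles sharing poly[0]."""
    return [(poly[0], poly[i], poly[i + 1]) for i in range(1, len(poly) - 1)]
```

A triangle cut by the plane becomes a triangle on one side and a quadrilateral on the other, which is why the sliced pieces must be re-triangulated before they can be rendered as a new mesh.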

The left-hand controller simulates grabbing the hair, while the right-hand controller mimics the movement of hairdressing scissors, as shown in Figure 7. A cut is triggered when the collider of the hairdressing scissors collides with the hair’s collider.

To simulate hair perming in VR, we focused on reproducing rod winding, the central step of a traditional perm. Rod winding usually starts at the hair ends, which are wound around a rod and then fastened with rubber bands; in practice, a hair section is wrapped around the rod by hand to form curls. For the VR version, we modeled hair sections representing the states before winding, wound on the rod, and after the final perm, and grouped them into a set for rod winding. The controller interaction drives the progress of rod winding, as shown in Figure 8.

The perm category provides 11 hair sets fitted to the 3D model, representing long permed hair. Touching a hair set with the hand controller starts the rod-winding preparation phase. In this state, pressing the button gradually advances the rod winding, mirroring the real styling sequence. When the interaction ends, the rod stays fixed to the hair. Touching the wound hair again with the controller moves it to the rod-unwinding stage, and when this interaction ends, the hair set takes on a wavy form, as shown in Figure 9.
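The perm sequence just described is naturally a small state machine. The sketch below encodes it with hypothetical state and method names (`grab`, `press`, `release`); the winding step size is an assumed parameter standing in for the gradual, press-driven winding.

```python
from enum import Enum, auto


class PermState(Enum):
    STRAIGHT = auto()   # untouched hair set
    WINDING = auto()    # rod-winding preparation / in progress
    ROD_FIXED = auto()  # rod stays secured after the interaction ends
    UNWINDING = auto()  # re-grabbed to remove the rod
    WAVY = auto()       # final permed form


class PermHairSet:
    def __init__(self, wind_step=0.25):
        self.state = PermState.STRAIGHT
        self.progress = 0.0          # 0 = not wound, 1 = fully wound
        self.wind_step = wind_step   # assumed progress per button press

    def grab(self):
        """Controller touches the hair set."""
        if self.state is PermState.STRAIGHT:
            self.state = PermState.WINDING
        elif self.state is PermState.ROD_FIXED:
            self.state = PermState.UNWINDING

    def press(self):
        """Button press while winding advances the rod gradually."""
        if self.state is PermState.WINDING:
            self.progress = min(1.0, self.progress + self.wind_step)

    def release(self):
        """Interaction ends: the rod stays in place, or the hair becomes wavy."""
        if self.state is PermState.WINDING:
            self.state = PermState.ROD_FIXED
        elif self.state is PermState.UNWINDING:
            self.state = PermState.WAVY
```

Keeping each hair set’s state explicit also makes it easy to synchronize over the network, since only state transitions need to be sent.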

Figure 10 presents the frontal, lateral, and posterior perspectives of the hair models.

4. Results

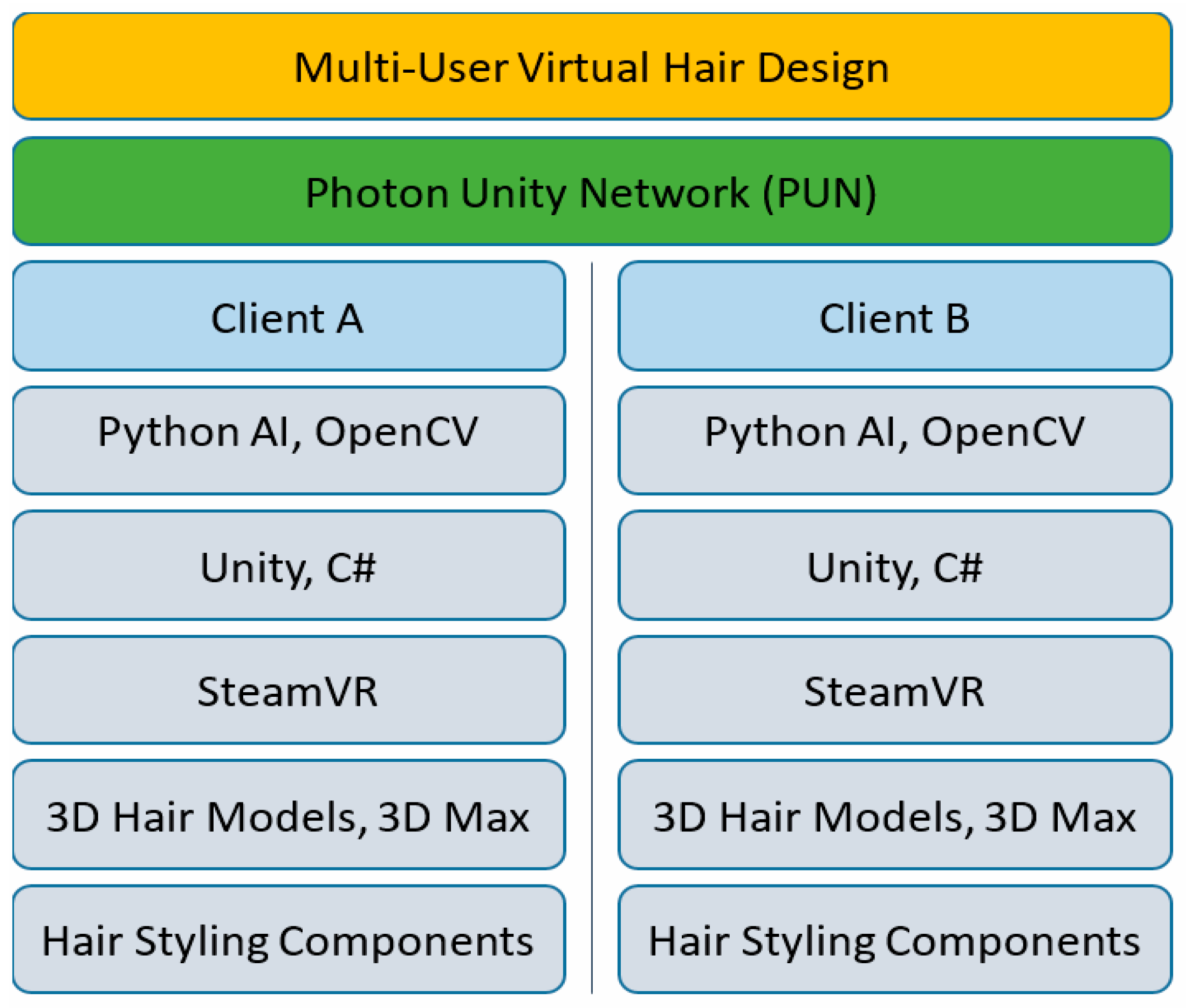

To clarify the system design, Figure 11 presents a simplified diagram of the proposed software architecture. After Clients A and B enter a room through the Photon Unity Network server, each uses Python AI algorithms and OpenCV to classify the user’s hair type. The classification is saved as a text file in Unity’s Resources directory, where C# code in the Unity game engine reads it, instantiates the matching 3D hair model prefab, and activates that model in the Unity scene. The system runs on the SteamVR platform, and a set of hairstyling tools was built on the mesh-cutting function of the Mesh Slicer component.
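The Python-to-Unity handoff through a text file can be sketched as follows. The file name is hypothetical, and the C# reader is represented by a Python function for illustration. Writing to a temporary file and renaming it is a design choice we suggest so that the engine never reads a partially written result.

```python
import tempfile
from pathlib import Path

RESULT_FILE = "hair_category.txt"  # hypothetical file name


def write_hair_category(resources_dir, category):
    """Python side: write the matched hairstyle category atomically."""
    path = Path(resources_dir) / RESULT_FILE
    tmp = path.with_suffix(".tmp")
    tmp.write_text(category + "\n", encoding="utf-8")
    tmp.replace(path)  # atomic rename on the same filesystem
    return path


def read_hair_category(resources_dir):
    """Stand-in for the Unity side, which reads the category before instantiating the prefab."""
    return (Path(resources_dir) / RESULT_FILE).read_text(encoding="utf-8").strip()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        write_hair_category(d, "WLPB")
        print(read_hair_category(d))  # WLPB
```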

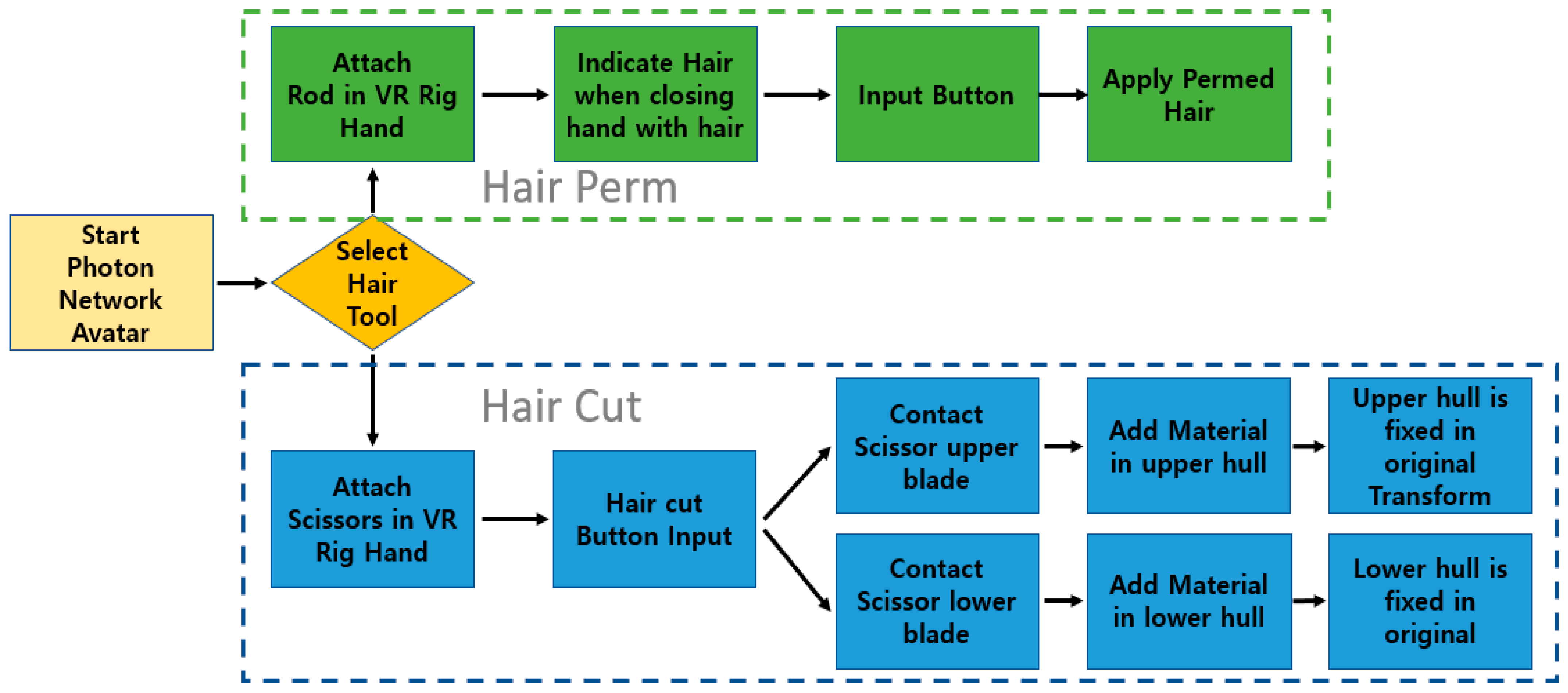

4.1. Multiplayer Network Flow

After a user enters a room through the Photon network, a player rig is initialized and interaction begins. Selecting “Hair Cut” attaches scissors to the controller, and selecting “Hair Perm” attaches rods, enabling the corresponding procedure. The SteamVR-based VR system supports realistic hair cutting and perming, providing a hands-on hairstyling training environment. The collaborative multiuser setting is built on Photon Unity Networking: when users join a server-provided room, it connects the local and remote clients and synchronizes users’ positions in real time, and joint operations such as cutting and perming are synchronized through remote procedure calls (RPCs). Photon Unity Networking also handles room creation and joining. Our current tests confirm support for two concurrent users. Although a Photon room can host up to 16 users, keeping the message rate below 500 messages per second is advisable for reliable data handling. Because this system transmits many messages, such as VR avatar motion and hair mesh creation, we recommend limiting sessions to 2–3 participants.
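A back-of-the-envelope check shows why the participant count matters. As a simplifying assumption (not Photon’s exact accounting), suppose each of N clients sends r updates per second and every update counts once on its way to the server and once for each of the N − 1 receivers, giving r·N² messages per second for the room.

```python
def room_messages_per_second(players, sends_per_second):
    """Assumed model: 1 inbound + (players - 1) outbound messages per send."""
    return sends_per_second * players * players


def max_players(budget, sends_per_second, room_cap=16):
    """Largest player count whose estimated room traffic stays within budget."""
    n = 0
    while n < room_cap and room_messages_per_second(n + 1, sends_per_second) <= budget:
        n += 1
    return n
```

Under this model and a 500 msg/s budget, clients sending 20 updates per second allow up to 5 players, but 60 updates per second (for example, avatar motion synchronized every frame) already limits the room to 2. The quadratic growth is why message-heavy sessions should stay small.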

Figure 12 shows the components and processes of the multiuser service. A Photon Unity Network deployment typically consists of a dedicated server, lobbies, and rooms. When the application starts, it calls the connect-to-server function, which uses ConnectUsingSettings to establish the server connection. The lobby callback is then skipped, and the room function is called directly with a room code as its argument; the room can be configured with its visibility and a maximum number of participants.
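The join-by-room-code flow can be modeled with a tiny in-memory stand-in for the lobby/room layer. This is not the Photon API: in PUN 2 the corresponding call is roughly `PhotonNetwork.JoinOrCreateRoom` with `RoomOptions` such as `MaxPlayers` and `IsVisible`, but the classes below are hypothetical and only illustrate how a room code and a player cap behave.

```python
from dataclasses import dataclass, field


@dataclass
class Room:
    code: str
    max_players: int
    is_visible: bool
    players: list = field(default_factory=list)


class FakeMasterServer:
    """In-memory stand-in for the lobby/room layer (not the Photon API)."""

    def __init__(self):
        self.rooms = {}

    def join_or_create(self, user, code, max_players=2, is_visible=False):
        """Join the room with this code, creating it on first use."""
        room = self.rooms.get(code)
        if room is None:
            room = self.rooms[code] = Room(code, max_players, is_visible)
        if len(room.players) >= room.max_players:
            return None  # room full: the client must try another code
        room.players.append(user)
        return room
```

A hidden room (`is_visible=False`) joined by an explicit code suits a private lesson between one instructor and one learner, and the player cap enforces the small session sizes recommended above.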

Figure 13 outlines the overall system flow for hairstyling, including cuts and perms. When a user joins the room, a networked character object is instantiated from the player prefab provided by SteamVR to serve as the rig, and it continuously synchronizes its hand and head positions with the other users in the room. When the user presses the controller button to choose a hair tool, the user interface shown in Figure 14 appears. The hair tool selection button turns red when the controller’s ray intersects it, and pressing the controller then opens the hair tool selection panel. If a haircut is selected rather than a perm, the scissors are attached to the character’s hand through SteamVR_skeleton_poser, and a haircut model matching the user’s hair, obtained through hair matching, is rendered.

A haircut performed with the scissors attached to Client B’s controller is synchronized to Client A through an RPC, so both screens display the haircut in progress.

Likewise, when a user selects “Hair Perm” in the hair tool selection menu, the perm visualization is synchronized to both Client A and Client B via RPC.

Whichever procedure is selected through the hair tool selection menu, RPC synchronization ensures that the cutting or perming process appears on the Client A and Client B screens at the same time.
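The synchronization pattern can be sketched with a toy relay in which every RPC runs on all clients, including the sender, and buffered calls are replayed for clients that join later (similar in spirit to PUN’s `RpcTarget.All` and `RpcTarget.AllBuffered`). The classes and the cut representation (a plane point and normal) are hypothetical.

```python
class Client:
    def __init__(self, name):
        self.name = name
        self.applied_cuts = []  # stand-in for this client's local hair-mesh state

    def apply_cut(self, plane_point, plane_normal):
        self.applied_cuts.append((plane_point, plane_normal))


class RpcRoom:
    """Toy relay: every RPC runs on all clients; buffered calls replay for late joiners."""

    def __init__(self):
        self.clients = []
        self.buffer = []

    def join(self, client):
        self.clients.append(client)
        for method, args in self.buffer:  # catch the newcomer up
            getattr(client, method)(*args)

    def rpc_all(self, method, *args, buffered=True):
        if buffered:
            self.buffer.append((method, args))
        for client in self.clients:  # includes the sender
            getattr(client, method)(*args)
```

Sending the small cut parameters and letting each client apply the same deterministic slice keeps network traffic far lower than transmitting the resulting hair meshes.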

4.2. Accuracy Test of Hair Matching

As noted above, we used SURF to compare a user-uploaded photo with our 140 hairstyle images to find the closest match. For each comparison, the keypoints of the user’s photo were matched against those of the 10 images of each hairstyle (140 images in total), and the ratio of matched keypoints served as the similarity measure. The average score was computed for each hairstyle, and the input was assigned to the hairstyle with the highest average. We used PyTorch 1.11.0 for hair segmentation and OpenCV 3.4.2.16 for matching.

We chose a keypoint detection threshold of 500 because it gave the best hair matching performance. Each SURF keypoint has a location, orientation, and scale, and its descriptor is compared between the two images. As the threshold increased, the F1-score, the harmonic mean of precision and recall, rose until the threshold reached 500 and declined thereafter. The best F1-score in the hair image comparison, 0.517, was therefore obtained at a threshold of 500.
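Threshold selection by F1-score amounts to computing F1 from true-positive, false-positive, and false-negative counts at each candidate threshold and keeping the maximum. How the study counted TP/FP/FN for matching is not specified here, so the counts used in the checks below are toy values, not results.

```python
def f1_score(tp, fp, fn):
    """Harmonic mean of precision and recall; 0.0 when there are no true positives."""
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_threshold(counts_by_threshold):
    """counts_by_threshold: {threshold: (tp, fp, fn)} -> (best threshold, its F1)."""
    scored = {t: f1_score(*c) for t, c in counts_by_threshold.items()}
    best = max(scored, key=scored.get)
    return best, scored[best]
```

Using F1 rather than precision alone guards against a threshold that keeps only a few very reliable keypoints but misses many true matches.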

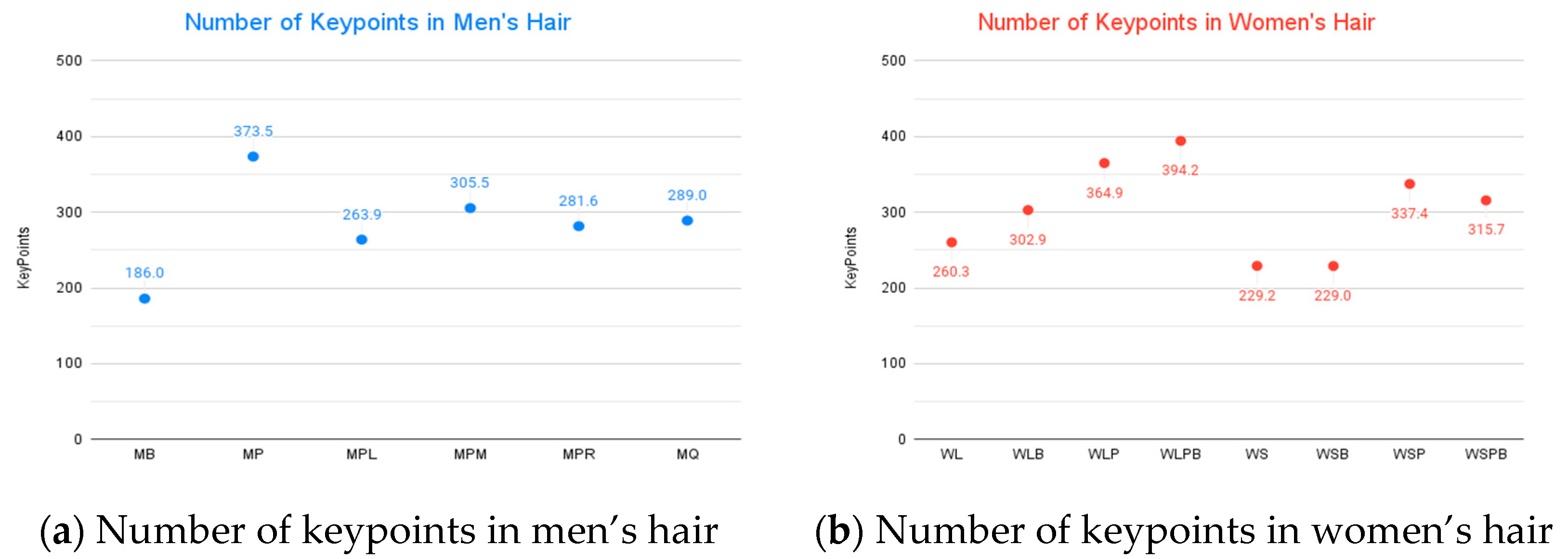

As shown in Figure 15, the number of keypoints varies with hair length and curl. Among women’s hairstyles, Women’s Long Perm Bangs (WLPB) had the highest mean number of keypoints (394.2), whereas among men’s hairstyles, Men’s Blocked Hair with Forehead Coverage (MB) had the lowest average (186 keypoints). In general, longer hairstyles produced more keypoints than shorter ones, and curly styles such as perms produced more keypoints than straight hair.

We examined how the number of keypoints varies with the contour of each hairstyle. For keypoint matching we used a brute-force matcher, which compares each keypoint descriptor of the input hair image with every descriptor of a database hair image and selects the most similar one.
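Brute-force matching can be written directly: for each descriptor in one image, scan all descriptors in the other and keep the nearest by Euclidean distance. The optional cross-check keeps a pair only if each descriptor is the other’s nearest neighbor, analogous to the `crossCheck` option of OpenCV’s `BFMatcher`. Descriptors here are plain tuples rather than 64-dimensional SURF vectors, purely for illustration.

```python
import math


def l2(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def nearest(query, train):
    """Index of the descriptor in train closest to query (exhaustive scan)."""
    return min(range(len(train)), key=lambda j: l2(query, train[j]))


def brute_force_match(desc_a, desc_b, cross_check=True):
    """Return (index_a, index_b, distance) for each accepted match."""
    matches = []
    for i, d in enumerate(desc_a):
        j = nearest(d, desc_b)
        if not cross_check or nearest(desc_b[j], desc_a) == i:
            matches.append((i, j, l2(d, desc_b[j])))
    return matches
```

The exhaustive scan costs O(|A|·|B|) distance computations per image pair, which is acceptable for a few hundred keypoints per hair image but grows quickly for larger images.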

To verify accuracy, we ran two tests using 3 images for each of the 14 hairstyles, 42 input photos in total. Comparing the matching accuracy of the two tests lets us assess the effectiveness of our hairstyle recommendation system.

4.2.1. Accuracy Test 1

Our first matching approach selected, from the database, the candidate images with the most keypoint matches to the input hair photo and then chose the highest score among them. The score was obtained by dividing the number of keypoints in the input photo by the number in each database image. For each hairstyle in the database, scores were computed for its 10 images, and the highest score was taken as the matching result. However, when the input image has more keypoints than the database images, this score is biased. For example, an input photo of the Women’s Long Perm (WLP) style typically has more keypoints than the male or short female hairstyles in our database, which inflates their scores and lowers matching accuracy.

To correct this, we normalized by the number of keypoints of the hairstyle: when matching, the image with the larger number of keypoints (here, the input image) was used as the denominator. Accuracy was computed using Equation (1).

where Score_max and Score_min are the maximum and minimum matching scores among the 14 hairstyles, so the denominator Score_max − Score_min is the range of matching scores; Score_correct is the matching score of the input’s true hairstyle; NMP_input (number of matched keypoints of the input photo) is the count of keypoints successfully matched between the input photo and the database photos; and NMP_database is the number of matched keypoints in the database photos.
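Read together, the definitions above suggest that the accuracy in Equation (1) is a min–max normalization of the true hairstyle’s score, (Score_correct − Score_min) / (Score_max − Score_min), which equals 1.0 when the true hairstyle receives the top score and 0 when it receives the lowest, consistent with the 0-to-1 scale in Section 3.2. The sketch below assumes that reading and also assumes the corrected ratio places the larger keypoint count in the denominator; both are our interpretation, not a verbatim reproduction of Equation (1).

```python
def corrected_ratio(n_input, n_database):
    """Assumed correction: larger count in the denominator keeps the score in [0, 1]."""
    hi = max(n_input, n_database)
    return min(n_input, n_database) / hi if hi else 0.0


def normalized_accuracy(scores, correct):
    """Min-max normalize the true hairstyle's score among all hairstyle scores.

    scores: {hairstyle: matching score}; correct: the input's true hairstyle.
    """
    s_max, s_min = max(scores.values()), min(scores.values())
    if s_max == s_min:  # all hairstyles tied: no discrimination
        return 0.0
    return (scores[correct] - s_min) / (s_max - s_min)
```

Because the normalization is relative to the spread of all 14 scores, an accuracy of 1.0 means the correct hairstyle ranked first, not that its raw similarity was perfect.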

4.2.2. Accuracy Test 2

Keypoints in hair images are matched by similarity: when dissimilar hairstyles are compared, few keypoints match, whereas similar hairstyles share many matched keypoints.

The matching score can therefore be computed from the proportion of matched keypoints. To avoid a skewed result when one image has a high matching ratio and the other a low one, we used Equation (2).

where NMP_input and NMP_database have the same meaning as in Equation (1), Total_input is the total number of keypoints extracted from the input photo, and Total_database is the total number of keypoints extracted from the database, that is, the number of keypoints of each database image. Score_max and Score_min are the same as in Equation (1).

The matching score combines two matching passes. The first pass counts the keypoints of the input hair image that are matched in a database image, and the second counts the keypoints of the database image that are matched in the input hair image.

The matching score is the sum of the matched keypoints from both passes divided by the sum of the total keypoints of the input and database images, which gives the proportion of matched keypoints for each pair of hair images. The score ranges from 0.0 to 1.0, with higher values indicating greater similarity. If two images are identical, every keypoint is matched, so the numerator equals the denominator and the score reaches its maximum of 1.0. Scores are computed for every input–database image pair and averaged for each hairstyle type, giving the final score for that hairstyle. Accuracy is then computed with the formula from Accuracy Test 1.

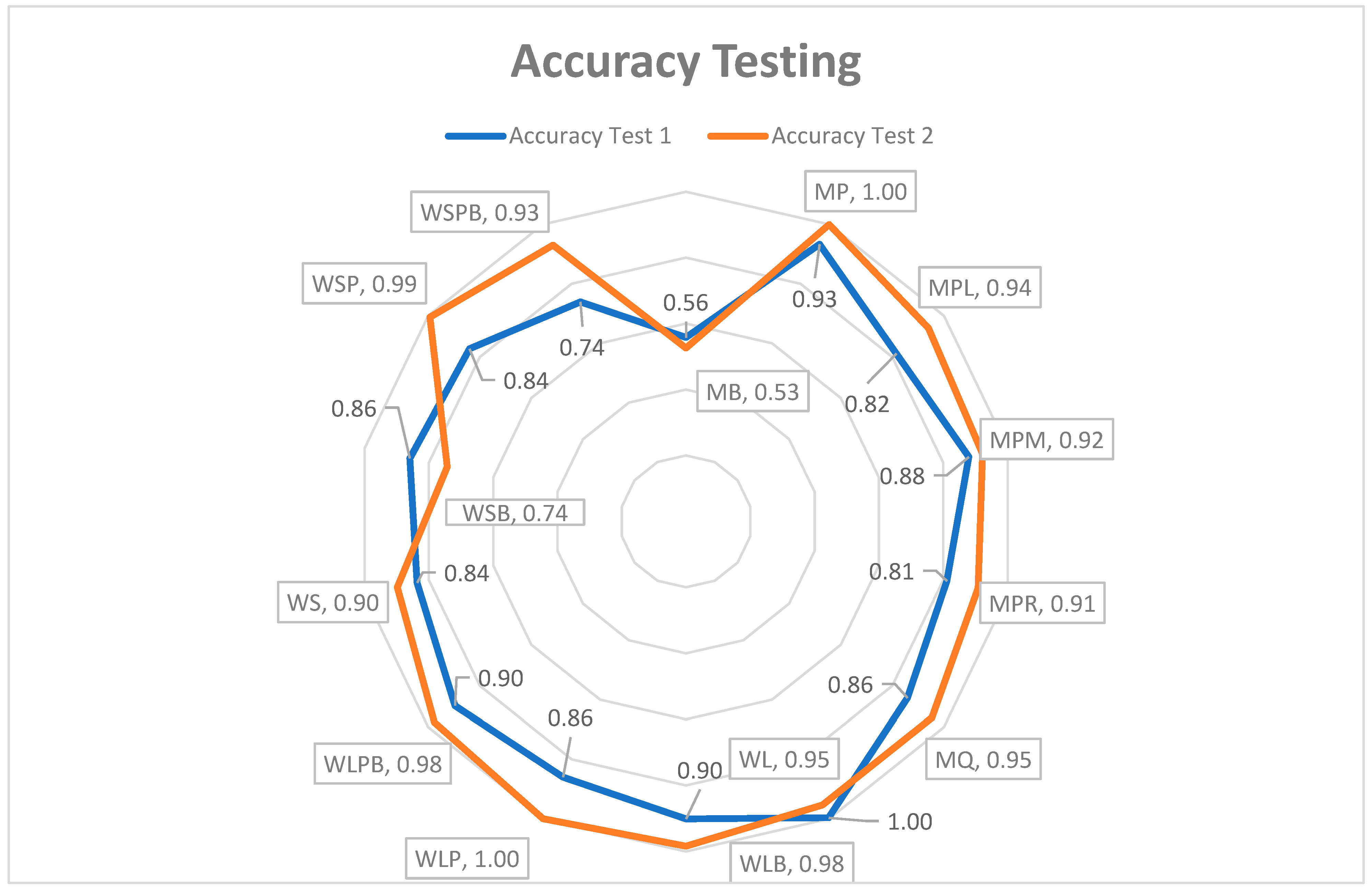

4.2.3. Comparison Results

The summary of the accuracy verification test results is as follows:

Accuracy Test 2 outperformed Accuracy Test 1 in 12 of the 14 hairstyle categories. The exceptions were the MB hairstyle, for which Test 1 achieved a slightly higher average accuracy (0.56) than Test 2 (0.53), and the WSB hairstyle, for which Test 1 was also slightly more accurate.

Figure 16 compares Accuracy Tests 1 and 2. Test 1 achieved an average accuracy of 0.8418, whereas Test 2, with its refined matching method, achieved 0.9092. Test 2 therefore matched hairstyles more accurately overall, confirming the benefit of its improved matching strategy.

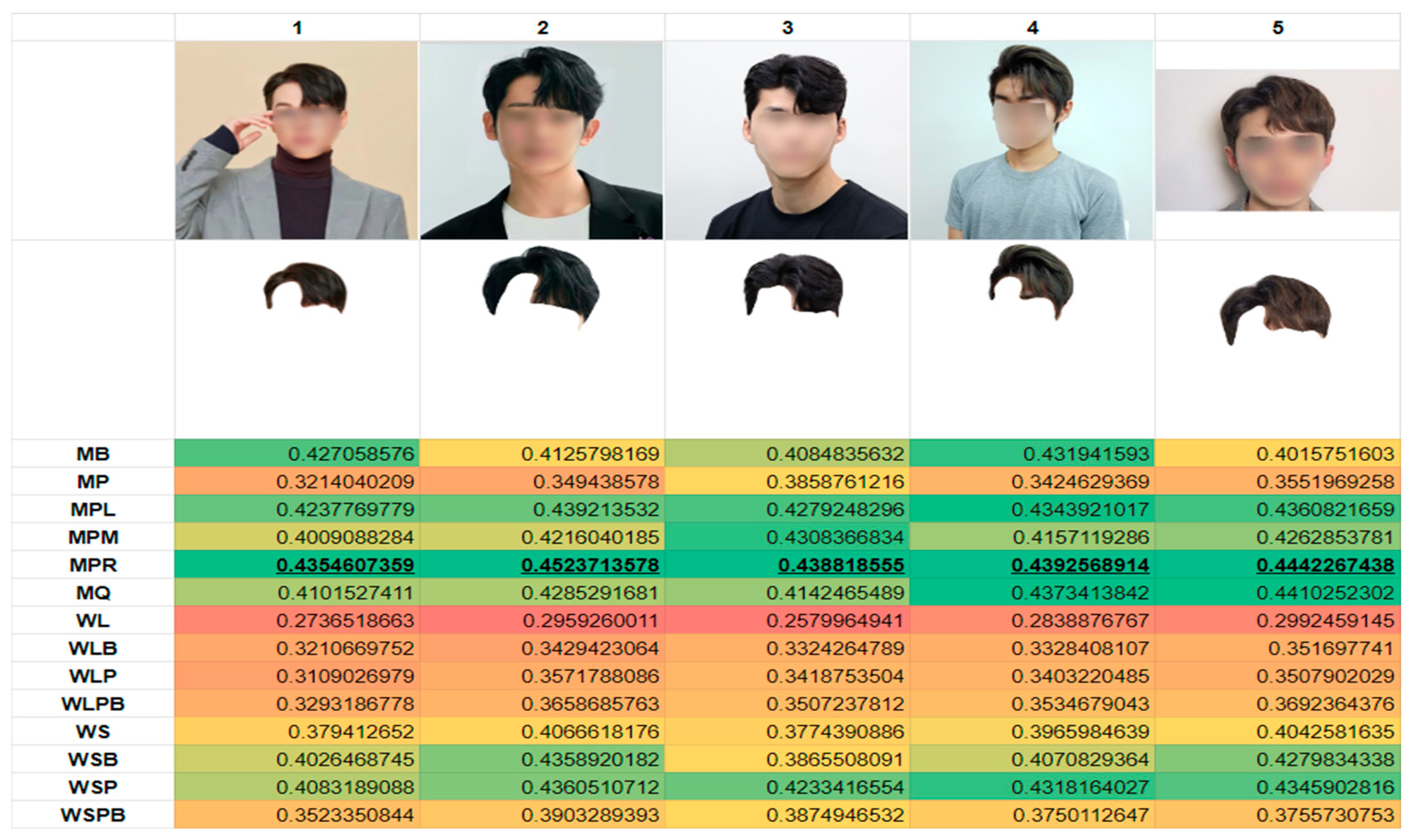

Figure 17 presents the matching results for the men’s blocked hair with forehead coverage (MB) style. The highest score was obtained for the same MB hairstyle, indicating a correct match. Similar men’s hairstyles also received high scores, whereas clearly different styles, such as long women’s hair or perm hairstyles, received low scores. As shown in Figure 18, for the parted hairstyles, all three categories, Left Part (MPL), Right Part (MPR), and Middle Part (MPM), which leave the forehead slightly exposed, received similarly high scores. Because the direction and shape of a part vary with the viewing angle and the specific style, an input labeled Right Part (MPR) sometimes scored highest in a different part category, such as Left Part (MPL) or Middle Part (MPM).

Figure 19 shows that, for the Women’s Short Hair (WS) category, short hairstyles consistently received higher average scores. Small score variations can arise from hair texture or minor differences in style. Overall, short hairstyles without notable outliers were categorized accurately across different viewpoints.

For the Women’s Long Perm (WLP) category, the men’s hairstyles, whose features differ markedly, received much lower scores, whereas the women’s hairstyles, especially perm styles, received higher scores (Figure 20).

5. Conclusions and Discussion

In this study, we introduced a VR-based remote hair design training system that addresses the challenges of physical attendance in hairstyling education. By using virtual environments and the immersive potential of VR, the platform lets users practice and combine hairstyling techniques collaboratively and remotely, removing the need for physical tools and overcoming geographical barriers.

Our system applies image processing techniques to segment the hair region from user-provided photographs. A keypoint-based matching approach then assigns each segmented hair region to one of the hairstyle categories defined by our expert team. The matched hairstyle is loaded into the system as a detailed 3D model and applied to the user’s virtual avatar, allowing users to visualize and interact with their chosen hairstyle in an immersive setting.
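As a rough illustration of keypoint-based matching, the sketch below scores a query against each reference hairstyle by counting descriptor matches that pass Lowe’s ratio test and then assigns the category of the best-scoring reference. It assumes that keypoint descriptors (for example, SURF descriptors) have already been extracted from the segmented hair region. The toy 2-D descriptors, the 0.75 ratio, and the function names are hypothetical, and this scoring rule is not necessarily the one used in our system.

```python
import math

# A descriptor is a fixed-length float vector (real SURF descriptors are 64-D).
Descriptor = tuple[float, ...]


def ratio_test_matches(query: list[Descriptor], reference: list[Descriptor],
                       ratio: float = 0.75) -> int:
    """Count query descriptors whose nearest reference descriptor is clearly
    closer than the second nearest (Lowe's ratio test)."""
    if len(reference) < 2:
        return 0  # the ratio test needs at least two candidates
    good = 0
    for q in query:
        # Euclidean distances from q to every reference descriptor, ascending.
        dists = sorted(math.dist(q, r) for r in reference)
        if dists[0] < ratio * dists[1]:
            good += 1
    return good


def classify(query: list[Descriptor],
             references: dict[str, list[Descriptor]]) -> tuple[str, dict[str, int]]:
    """Score the query against every reference category and return the
    best-scoring category together with all scores."""
    scores = {name: ratio_test_matches(query, descs)
              for name, descs in references.items()}
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    references = {
        "MB": [(0.0, 0.0), (5.0, 5.0), (10.0, 0.0)],
        "WLP": [(20.0, 20.0), (25.0, 20.0), (30.0, 25.0)],
    }
    query = [(0.1, 0.0), (5.0, 5.2), (9.8, 0.1)]
    print(classify(query, references))  # ('MB', {'MB': 3, 'WLP': 0})
```

The ratio test discards ambiguous correspondences, which matters for hair because many keypoints along repetitive strand textures look alike; counting only distinctive matches keeps the score from being inflated by texture alone.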

A core component of our system is the mapping of VR HMD and controller interactions to hairstyling actions, which enables virtual hair cutting and styling. Because these actions are driven by natural hand gestures and movements, users can closely emulate the nuances of hands-on hairstyling.
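The interaction mapping can be thought of as a lookup from controller inputs to hairstyling actions. The sketch below assumes a hypothetical binding table in the spirit of the Valve Index mapping shown in Figures 4 and 5. The input names, action names, and the 0.8 trigger threshold are illustrative; they are not taken from SteamVR or from our implementation.

```python
from enum import Enum, auto


class HairAction(Enum):
    """Hypothetical hairstyling actions that a controller input can trigger."""
    NONE = auto()
    GRAB_STRAND = auto()
    CUT = auto()
    WIND_ROD = auto()


# Hypothetical bindings: (hand, input name) -> action.
BINDINGS = {
    ("left", "grip"): HairAction.GRAB_STRAND,
    ("right", "trigger"): HairAction.CUT,
    ("right", "thumbstick_rotate"): HairAction.WIND_ROD,
}

TRIGGER_THRESHOLD = 0.8  # assumed analog value at which an input "fires"


def map_input(hand: str, input_name: str, value: float) -> HairAction:
    """Translate one analog controller reading into a hairstyling action."""
    if value < TRIGGER_THRESHOLD:
        return HairAction.NONE
    return BINDINGS.get((hand, input_name), HairAction.NONE)


class ScissorsState:
    """Report a CUT once per squeeze (rising edge), not on every frame."""

    def __init__(self) -> None:
        self.closed = False

    def update(self, trigger_value: float) -> bool:
        """Feed one frame's trigger value; return True when a cut starts."""
        is_closed = map_input("right", "trigger", trigger_value) is HairAction.CUT
        fired = is_closed and not self.closed
        self.closed = is_closed
        return fired
```

Detecting the rising edge means that holding the trigger closed performs one snip, as with real scissors, rather than cutting again on every rendered frame.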

In addition, we implemented a multiplayer framework in which hairstylists and learners can practice together and share insights. This multiplayer capability increases interaction, supports peer learning, and fosters a sense of connectedness across distances.
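One way a multiplayer session could keep every participant’s view of the shared hair model consistent is to broadcast styling actions as small, ordered events. The sketch below assumes a hypothetical JSON event format with a per-sender sequence number so that duplicated or stale messages are ignored. It does not reproduce the network flow of our implementation (Figures 12 and 13); the class and field names are illustrative.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class StyleEvent:
    """Hypothetical network message describing one styling action."""
    sender: str                  # id of the participant who performed it
    seq: int                     # per-sender sequence number, strictly increasing
    action: str                  # e.g. "cut" or "wind_rod"
    strand_ids: tuple[int, ...]  # hair strands affected by the action

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text: str) -> "StyleEvent":
        data = json.loads(text)
        data["strand_ids"] = tuple(data["strand_ids"])  # JSON lists -> tuple
        return StyleEvent(**data)


class SharedHairState:
    """Replica of the shared hair model kept by each participant."""

    def __init__(self) -> None:
        self.cut_strands: set[int] = set()
        self.last_seq: dict[str, int] = {}  # highest seq applied per sender

    def apply(self, event: StyleEvent) -> bool:
        """Apply an event once; ignore duplicates and stale messages."""
        if event.seq <= self.last_seq.get(event.sender, -1):
            return False
        self.last_seq[event.sender] = event.seq
        if event.action == "cut":
            self.cut_strands.update(event.strand_ids)
        return True
```

Because stale messages are simply dropped, this sketch assumes a transport that delivers each sender’s events in order, such as a reliable ordered channel; with that guarantee, every replica that receives the same events ends up with the same set of cut strands.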

As a cost-effective alternative to conventional in-person hair beauty education, our system responds to the growing demand for contactless learning. Its immersive, secure, and accessible virtual setting is designed to help learners develop and refine their hairstyling skills.

The results of our accuracy tests support the effectiveness and reliability of the system in identifying and reproducing diverse hairstyles. The improvements observed in the matching procedure demonstrate the robustness and precision of our methodology and strengthen the credibility of the remote hair design training built upon it.
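For readers reproducing an accuracy test of this kind, a minimal sketch of the bookkeeping follows. It assumes that accuracy is measured as the fraction of test images whose top-ranked category equals the ground-truth label, reported both overall and per category; the sample pairs are hypothetical and are not our test data.

```python
from collections import Counter


def per_category_accuracy(pairs: list[tuple[str, str]]) -> dict[str, float]:
    """Top-1 accuracy per ground-truth category from (true, predicted) pairs."""
    totals = Counter(true for true, _ in pairs)
    correct = Counter(true for true, pred in pairs if true == pred)
    return {cat: correct[cat] / totals[cat] for cat in sorted(totals)}


def overall_accuracy(pairs: list[tuple[str, str]]) -> float:
    """Fraction of test images whose top-ranked category is correct."""
    if not pairs:
        return 0.0
    return sum(true == pred for true, pred in pairs) / len(pairs)


if __name__ == "__main__":
    # Hypothetical (ground truth, prediction) pairs.
    pairs = [("MB", "MB"), ("MB", "MB"), ("MPR", "MPL"), ("MPR", "MPR"), ("WS", "WS")]
    print(per_category_accuracy(pairs))  # {'MB': 1.0, 'MPR': 0.5, 'WS': 1.0}
    print(overall_accuracy(pairs))       # 0.8
```

Reporting per-category figures alongside the overall value makes it visible when a single hard category, such as the parted styles, is responsible for most errors.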

In summary, this research represents a notable advance in remote hair design training, combining VR technology with a comprehensive suite of tools. By changing how hairstyle education is delivered, it offers hairstylists and learners new ways to cultivate their creativity, broaden their expertise, and learn hair design.

Finally, as a potential enhancement, incorporating haptic feedback into user–hair tool interactions could address the current limitation in immersion; combined with hair styling, such feedback would heighten the sense of realism. Future research could also examine user interactions and collect user evaluation feedback to further improve the overall user experience. We also believe that the principles of this application can be applied to other disciplines that require hand–eye coordination training in multi-user environments, such as training surgeons and nurses in operating theatres.

Author Contributions

Conceptualization, S.C.; methodology, S.C.; software, C.S.; validation, S.C.; formal analysis, S.C.; investigation, C.S.; resources, C.S.; writing—original draft preparation, S.C.; writing—review and editing, S.C.; visualization, S.C.; supervision, S.C.; project administration, C.S.; funding acquisition, S.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Basic Science Research Program through the National Research Foundation of Korea (NRF), funded by the Ministry of Science and ICT (No. 2021R1F1A104540111).

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to express their gratitude to S. W. Yoo and S. H. Kim for their initial assistance with the setup, and to Y. H. Lee, K. S. Park, and C. R. Lee for their assistance in the initial stages of coding and modeling.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fink, B.; Hufschmidt, C.; Hirn, T.; Will, S.; McKelvey, G.; Lankhof, J. Age, Health and Attractiveness Perception of Virtual (Rendered) Human Hair. Front. Psychol. 2016, 7, 1893.

- Holmes, H. Hair, the Hairdresser and the Everyday Practices of Women’s Hair Care; University of Sheffield: Sheffield, UK, 2010.

- Jung, S.; Lee, S.-H. Hair Modeling and Simulation by Style. Comput. Graph. Forum 2018, 37, 355–363.

- Iben, H.; Brooks, J.; Bolwyn, C. Holding the Shape in Hair Simulation. In Proceedings of the ACM SIGGRAPH 2019 Talks, Los Angeles, CA, USA, 28 July–1 August 2019; ACM: New York, NY, USA, 2019.

- Yang, L.; Shi, Z.; Zheng, Y.; Zhou, K. Dynamic Hair Modeling from Monocular Videos Using Deep Neural Networks. ACM Trans. Graph. 2019, 38, 1–12.

- Fei, Y.; Maia, H.T.; Batty, C.; Zheng, C.; Grinspun, E. A Multi-Scale Model for Simulating Liquid-Hair Interactions. ACM Trans. Graph. 2017, 36, 1–17.

- Ronda, E.; Hollund, B.E.; Moen, B.E. Airborne Exposure to Chemical Substances in Hairdresser Salons. Environ. Monit. Assess. 2009, 153, 83–93.

- Bae, Y.; Shin, H. COVID 19, Accelerates the Contactless Society. Issue Anal. 2020, 416, 1–26.

- Cho, H. A Study on the Use of Immersive Media Contents Design Based on Extended Reality (XR) Technology in Digital Transformation Era. J. Korean Soc. Des. Cult. 2020, 26, 497–507.

- Noh, K. A Study on Digital New Deal Strategy for Inclusive Innovative Growth and Job Creation. J. Digit. Convergence 2020, 18, 23–33.

- Kwok, A.O.J.; Koh, S.G.M. COVID-19 and Extended Reality (XR). Curr. Issues Tourism 2021, 24, 1935–1940.

- Jung, T.; Chung, N.; Leue, M.C. The Determinants of Recommendations to Use Augmented Reality Technologies: The Case of a Korean Theme Park. Tour. Manag. 2015, 49, 75–86.

- Kerawalla, L.; Luckin, R.; Seljeflot, S.; Woolard, A. “Making It Real”: Exploring the Potential of Augmented Reality for Teaching Primary School Science. Virtual Real. 2006, 10, 163–174.

- Rauschnabel, P.A.; Rossmann, A.; tom Dieck, M.C. An Adoption Framework for Mobile Augmented Reality Games: The Case of Pokémon Go. Comput. Human Behav. 2017, 76, 276–286.

- Glegg, S. Virtual Reality for Brain Injury Rehabilitation: An Evaluation of Clinical Practice, Therapists’ Adoption and Knowledge Translation; University of British Columbia: Vancouver, BC, Canada, 2012.

- Chuah, S.H.-W. Why and Who Will Adopt Extended Reality Technology? Literature Review, Synthesis, and Future Research Agenda. SSRN Electron. J. 2018.

- Çöltekin, A.; Lochhead, I.; Madden, M.; Christophe, S.; Devaux, A.; Pettit, C.; Lock, O.; Shukla, S.; Herman, L.; Stachoň, Z.; et al. Extended Reality in Spatial Sciences: A Review of Research Challenges and Future Directions. ISPRS Int. J. Geoinf. 2020, 9, 439.

- Charissis, V.; Falah, J.; Lagoo, R.; Alfalah, S.F.M.; Khan, S.; Wang, S.; Altarteer, S.; Larbi, K.B.; Drikakis, D. Employing Emerging Technologies to Develop and Evaluate In-Vehicle Intelligent Systems for Driver Support: Infotainment AR HUD Case Study. Appl. Sci. 2021, 11, 1397.

- Branca, G.; Resciniti, R.; Loureiro, S.M.C. Virtual Is so Real! Consumers’ Evaluation of Product Packaging in Virtual Reality. Psychol. Mark. 2022, 40, 596–609.

- Abuhammad, A.; Falah, J.; Alfalah, S. “MedChemVR”: A Virtual Reality Game to Enhance Medicinal Chemistry Education. Multimodal Technol. Interact. 2021, 5, 10.

- Gao, L.; Wan, B.; Liu, G.; Xie, G.; Huang, J.; Meng, G. Investigating the Effectiveness of Virtual Reality for Culture Learning. Int. J. Hum. Comput. Interact. 2021, 37, 1771–1781.

- Colombo, G.; Facoetti, G.; Rizzi, C.; Vitali, A. Mixed Reality to Design Lower Limb Prosthesis. Comput.-Aided Des. Appl. 2016, 13, 799–807.

- Bonfanti, S.; Gargantini, A.; Vitali, A. A Mobile Application for the Stereoacuity Test. In Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2015; pp. 315–326. ISBN 9783319210698.

- Lorusso, M.; Rossoni, M.; Colombo, G. Conceptual Modeling in Product Design within Virtual Reality Environments. Comput.-Aided Des. Appl. 2020, 18, 383–398.

- Rossoni, M.; Bergonzi, L.; Colombo, G. Integration of Virtual Reality in a Knowledge-Based Engineering System for Preliminary Configuration and Quotation of Assembly Lines. Comput.-Aided Des. Appl. 2019, 16, 329–344.

- Pasupa, K.; Sunhem, W.; Loo, C.K. A Hybrid Approach to Building Face Shape Classifier for Hairstyle Recommender System. Expert Syst. Appl. 2019, 120, 14–32.

- Liu, S.; Liu, L.; Yan, S. Fashion Analysis: Current Techniques and Future Directions. IEEE Multimed. 2014, 21, 72–79.

- Kim, S.; Song, M.; Joo, H.; Park, H.; Han, Y. Development of Smart Mirror System for Hair Styling. J. Korea Inst. Electron. Commun. Sci. 2020, 15, 93–100.

- Scheuermann, T. Practical Real-Time Hair Rendering and Shading. In Proceedings of the ACM SIGGRAPH 2004 Sketches (SIGGRAPH ’04), Los Angeles, CA, USA, 8–12 August 2004; ACM Press: New York, NY, USA, 2004.

- Derouet-Jourdan, A.; Bertails-Descoubes, F.; Daviet, G.; Thollot, J. Inverse Dynamic Hair Modeling with Frictional Contact. ACM Trans. Graph. 2013, 32, 1–10.

- Petrovic, L.; John, R.A. Hair Rendering Method and Apparatus. U.S. Patent 7,327,360, 5 February 2008.

- Ishii, M.; Itoh, T. Viewpoint Selection for Sketch-Based Hairstyle Modeling. In Proceedings of the 2020 Nicograph International (NicoInt), Tokyo, Japan, 5–6 June 2020; IEEE: New York, NY, USA, 2020.

- Fadhila, P.A.; Novamizanti, L. Aplikasi Try-On Hairstyle Berbasis Augmented Reality. Techné J. Ilm. Elektroteknika 2020, 19, 55–70.

- Sun, Y.; Geng, L.; Dan, K. Design of Smart Mirror Based on Raspberry Pi. In Proceedings of the 2018 International Conference on Intelligent Transportation, Big Data & Smart City (ICITBS), Xiamen, China, 25–26 January 2018; IEEE: New York, NY, USA, 2018.

- Figaro 1K. Available online: http://projects.i-ctm.eu/it/progetto/figaro-1k (accessed on 23 August 2023).

- Kim, H.; Chung, Y. Effect of Input Data Video Interval and Input Data Image Similarity on Learning Accuracy in 3D-CNN. Int. J. Internet Broadcast. Commun. 2021, 13, 208–217.

- Avatar Maker Pro: 3D Avatar from a Single Selfie. Unity Asset Store. Available online: https://assetstore.unity.com/packages/tools/modeling/avatar-maker-pro-3d-avatar-from-a-single-selfie-134800 (accessed on 23 August 2023).

- Sung, C.; Park, K.; Chin, S. SURF Based Hair Matching and VR Hair Cutting. Int. J. Adv. Smart Converg. 2022, 11, 49–55.

- PyTorch. Available online: https://pytorch.org (accessed on 23 August 2023).

- SteamVR, Valve Corporation. Available online: https://www.steamvr.com/en/ (accessed on 23 August 2023).

- OpenCV: Open Computer Vision Library. Available online: https://opencv.org (accessed on 23 August 2023).

- Unity Real-Time Development Platform. Available online: https://unity.com/en (accessed on 23 August 2023).

- Park, S.; Yoo, S.; Chin, S. A Study of VR Interaction for Non-Contact Hair Styling. J. Converg. Cult. Technol. 2022, 8, 367–372.

- Ji, Z.; Liu, L.; Chen, Z.; Wang, G. Easy Mesh Cutting. Comput. Graph. Forum 2006, 25, 283–291.

- Mesh Slicer. Available online: https://www.bzsoft.net/post/mesh-slicer-the-process-of-slicing (accessed on 23 August 2023).

Figure 1.

Prior stage system flow.

Figure 2.

Hair matching with 12,000 samples.

Figure 3.

Shading effects of hair models.

Figure 4.

Mapping hair cutting actions using Valve Index controllers and mapped actions for hair cutting.

Figure 5.

Custom actions for Valve Index controllers.

Figure 6.

Haircut operation.

Figure 7.

Before haircut, in progress, and after haircut.

Figure 8.

Rod winding operation (left 3 images) and controller interaction (right 3 images).

Figure 9.

11 hair sets (left) and hair perm styling.

Figure 10.

Various viewpoints.

Figure 11.

Software architecture.

Figure 12.

Multi-user service components and procedures.

Figure 13.

Multiplayer network flow.

Figure 14.

VR User interface for multi-user environments.

Figure 15.

Number of keypoints by hair styles of Men’s hair and Women’s hair.

Figure 16.

Accuracy testing results.

Figure 17.

Matching results for MB hair style.

Figure 18.

Matching results for MPR hair style.

Figure 19.

Matching results for WLP hair style.

Figure 20.

Matching results for WS hair style.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).