Adaptive Absolute Attitude Determination Algorithm for a Fine Guidance Sensor

Abstract

1. Introduction

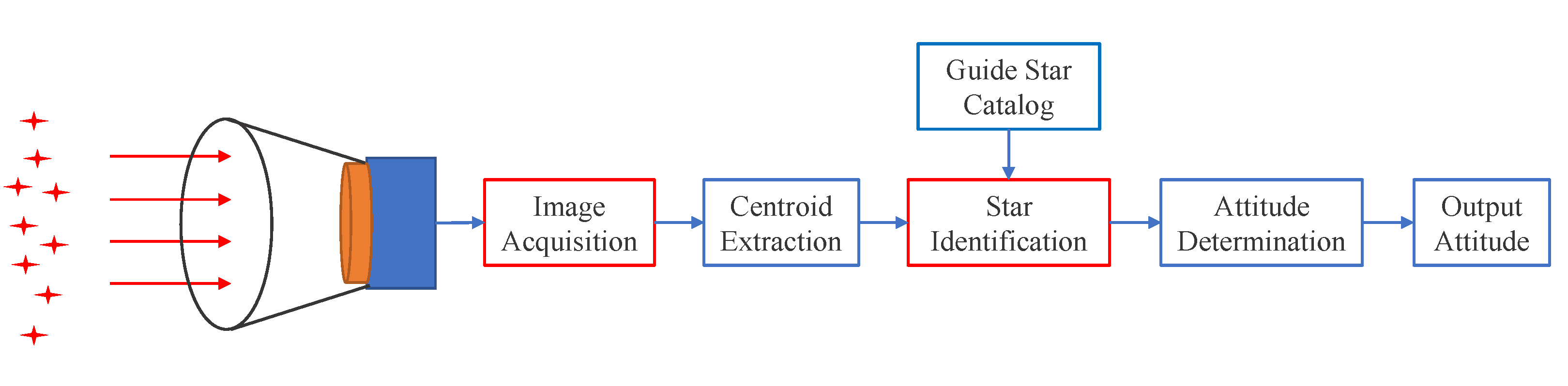

2. FGS Image Acquisition

2.1. The Relationship between the NOS and the Attitude Accuracy of an FGS

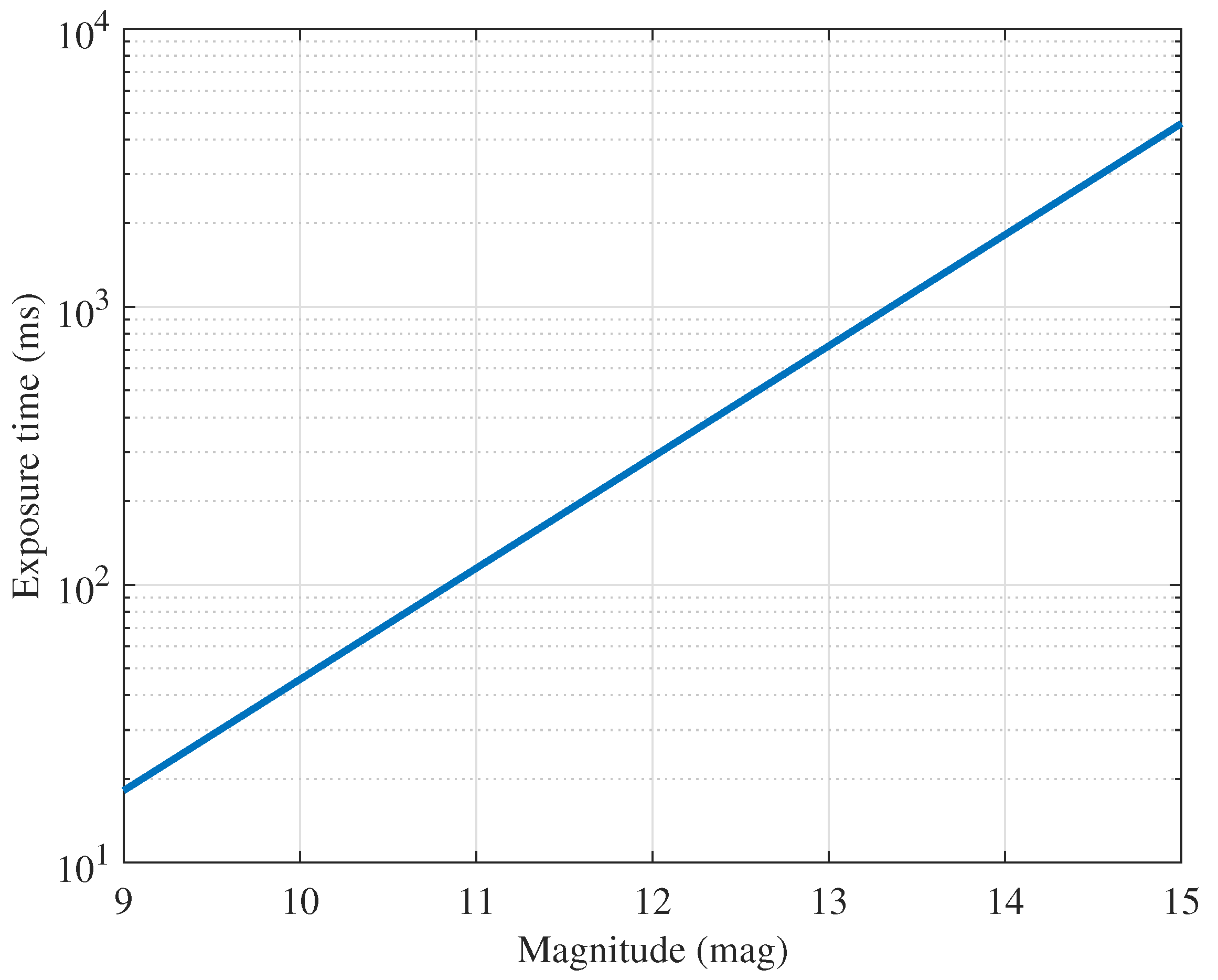

2.2. The Relationship between the Exposure Time of an FGS and the NOS Detected

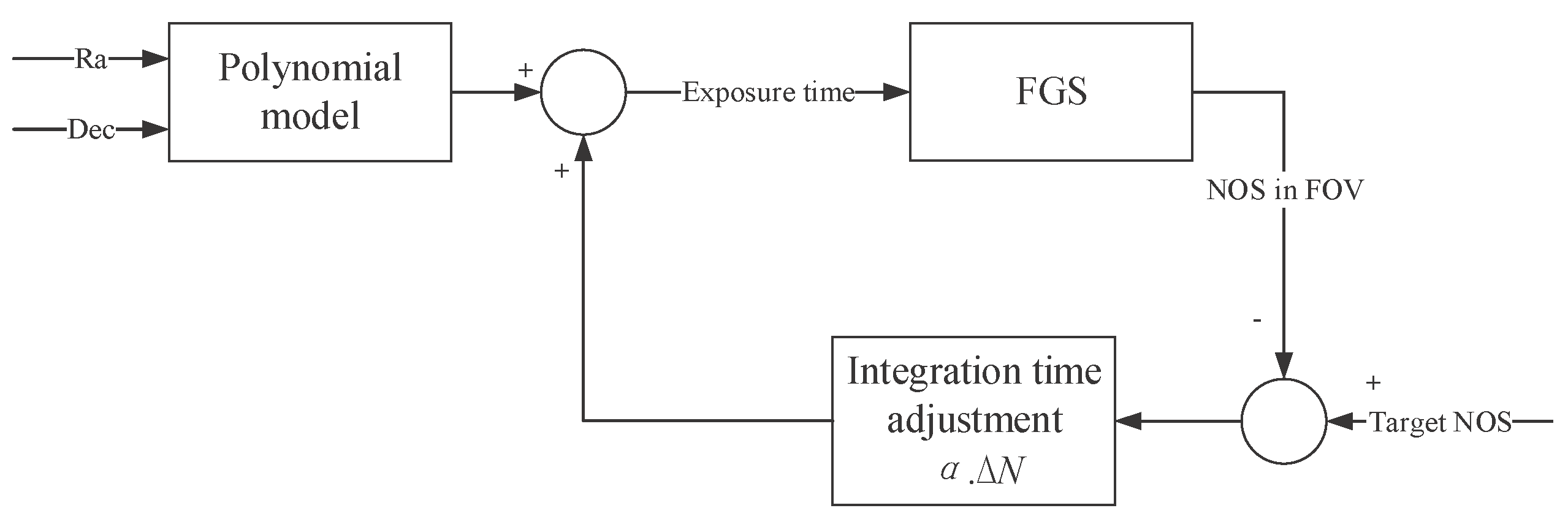

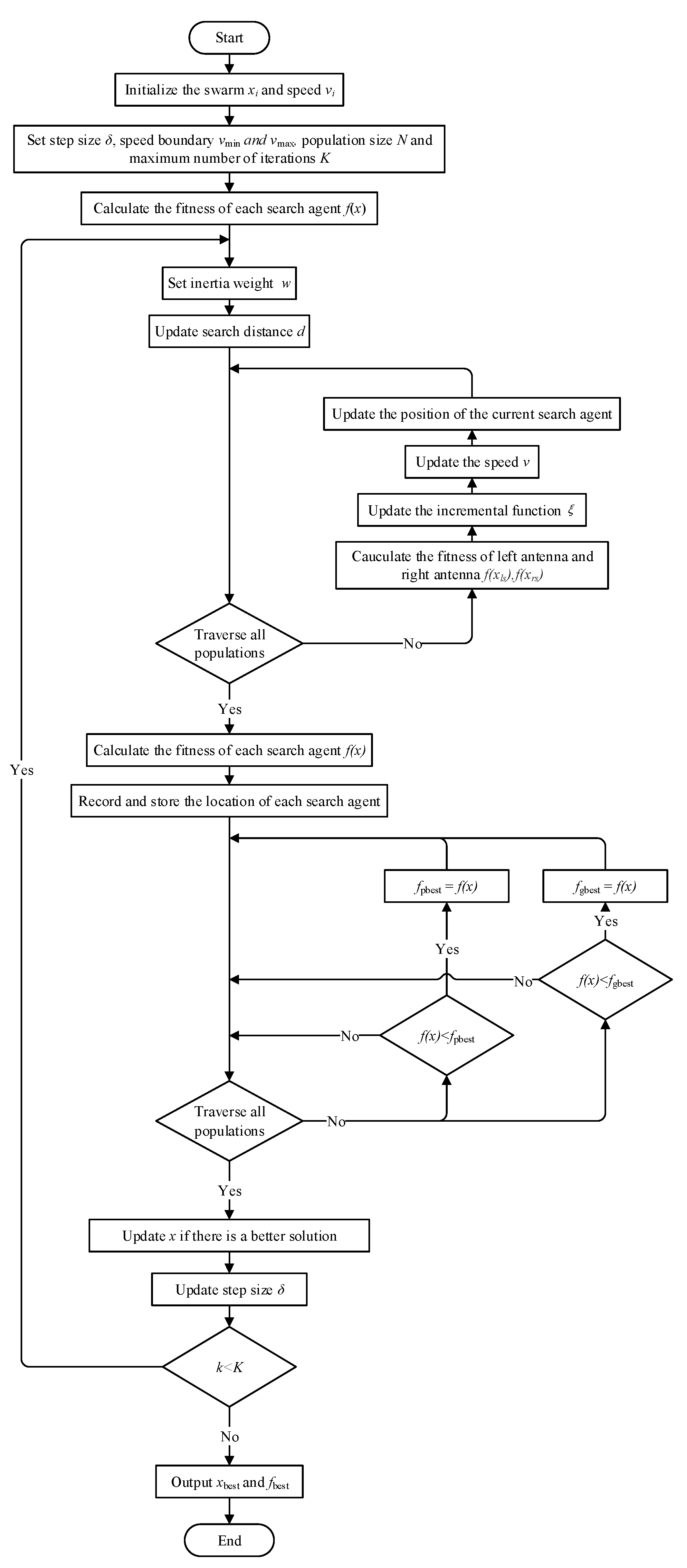

2.3. An Adaptive Adjustment Algorithm for the NOS in the FOV of the FGS

3. Absolute Attitude Determination of an FGS

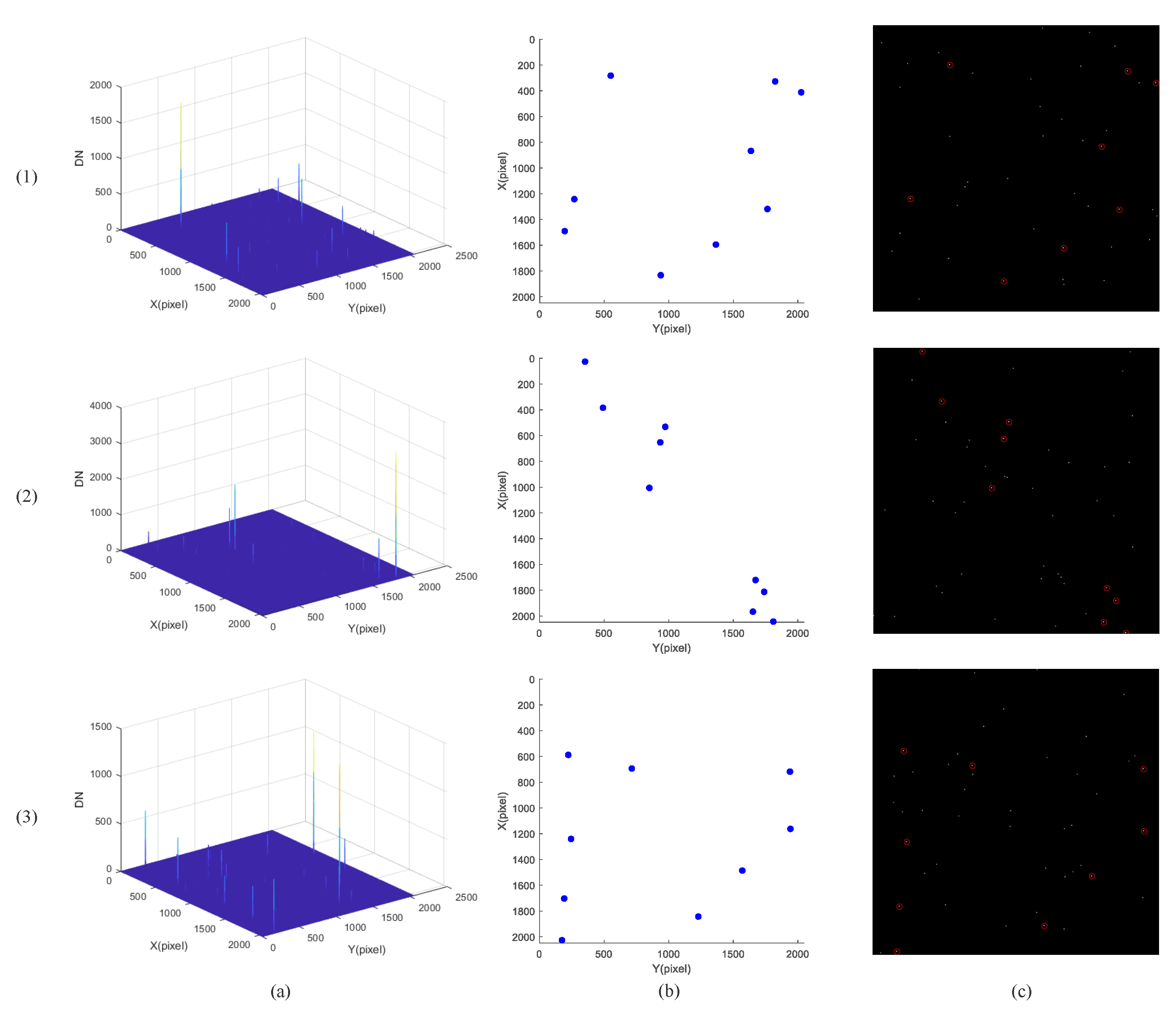

3.1. Multi-Star Centroid Extraction

3.1.1. Star Map Preprocessing

3.1.2. Multi-Connected Domain Segmentation

3.1.3. Centroid Positioning

3.2. Star Identification

3.2.1. Coordinate Transformation

3.2.2. Feature Construction

3.2.3. Matching and Recognition

- The feature vector of the guide star within the FOV is subtracted from the feature vector of the observing star, and a numerical matrix × is obtained.

- Each value in the × numerical matrix is tested: it is marked as 1 if it is greater than or equal to 0 and as 0 otherwise, which yields an × label matrix.

- The AND operation is performed on the elements of each row of the label matrix to obtain an × 1 column vector.

- The guide star feature vector corresponding to element 1 in the × 1 column vector is the matching result. When is large enough, the match is unique.
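
The four matching steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function name `match_guide_stars`, the list-of-lists layout (one guide star feature vector per row) and the toy binary feature values are all assumptions made for the example.

```python
def match_guide_stars(observed, guide_features):
    """Return (matching row indices, column vector) for one observed star.

    observed       -- feature vector of the observed star (hypothetical layout)
    guide_features -- one feature vector per guide star in the FOV (one per row)
    """
    column = []
    for guide in guide_features:
        # Step 1: observed feature vector minus guide star feature vector.
        diff = [o - g for o, g in zip(observed, guide)]
        # Step 2: label each difference 1 if it is >= 0, otherwise 0.
        labels = [1 if d >= 0 else 0 for d in diff]
        # Step 3: AND over the elements of the row.
        column.append(int(all(labels)))
    # Step 4: rows whose AND result is 1 are the matching guide stars.
    matches = [i for i, flag in enumerate(column) if flag == 1]
    return matches, column


# Toy data (assumed values, for illustration only).
guide = [
    [1, 0, 1, 0],
    [0, 1, 1, 0],
    [1, 1, 0, 1],
]
observed_star = [0, 1, 1, 0]

matches, column = match_guide_stars(observed_star, guide)
print(matches, column)
```

In this toy case only the second guide star survives the row-wise AND. With short feature vectors several rows can survive, which is why the uniqueness of the match depends on the feature vector being long enough.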

3.3. QUEST Algorithm

4. Experimental Results

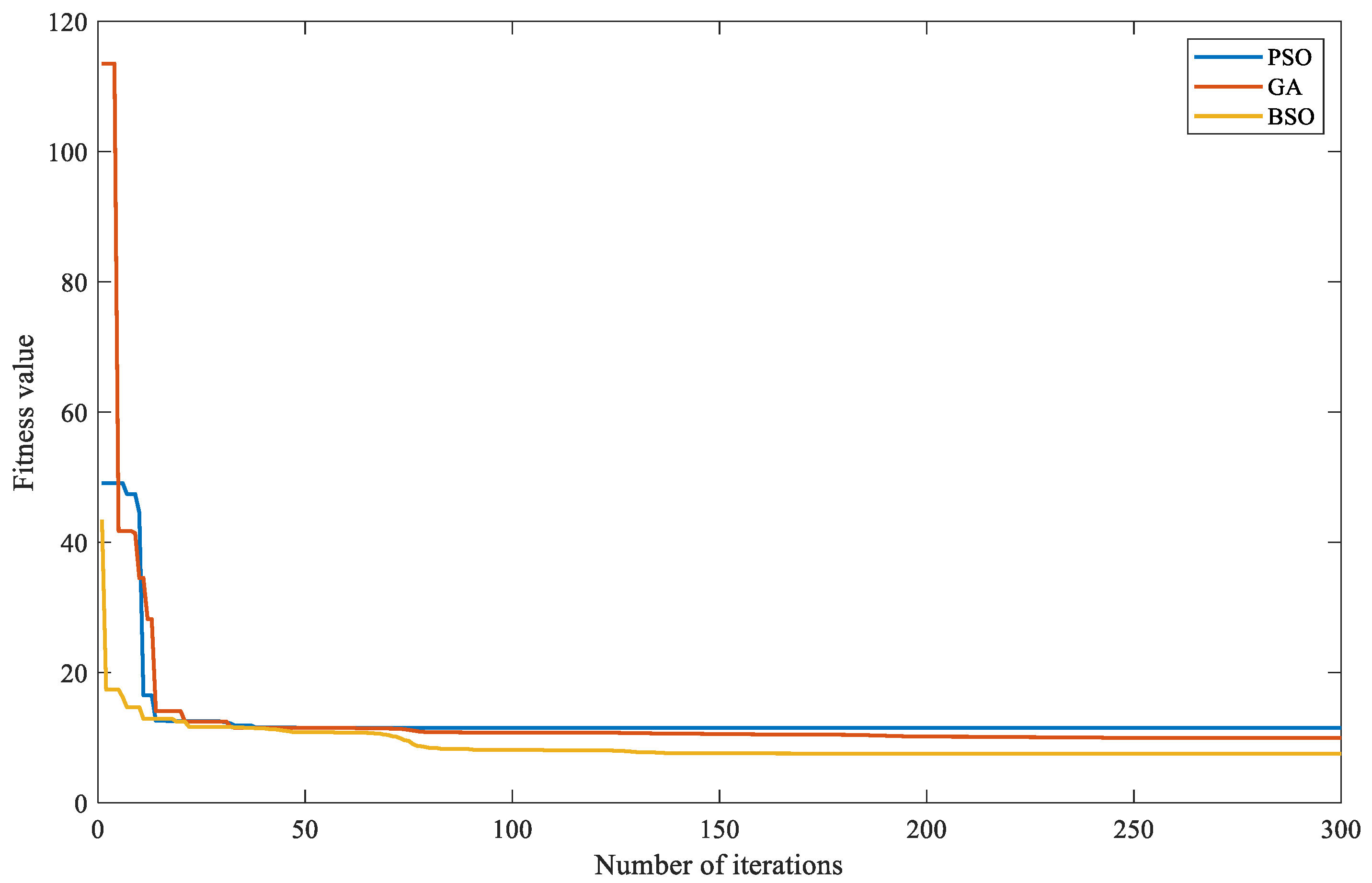

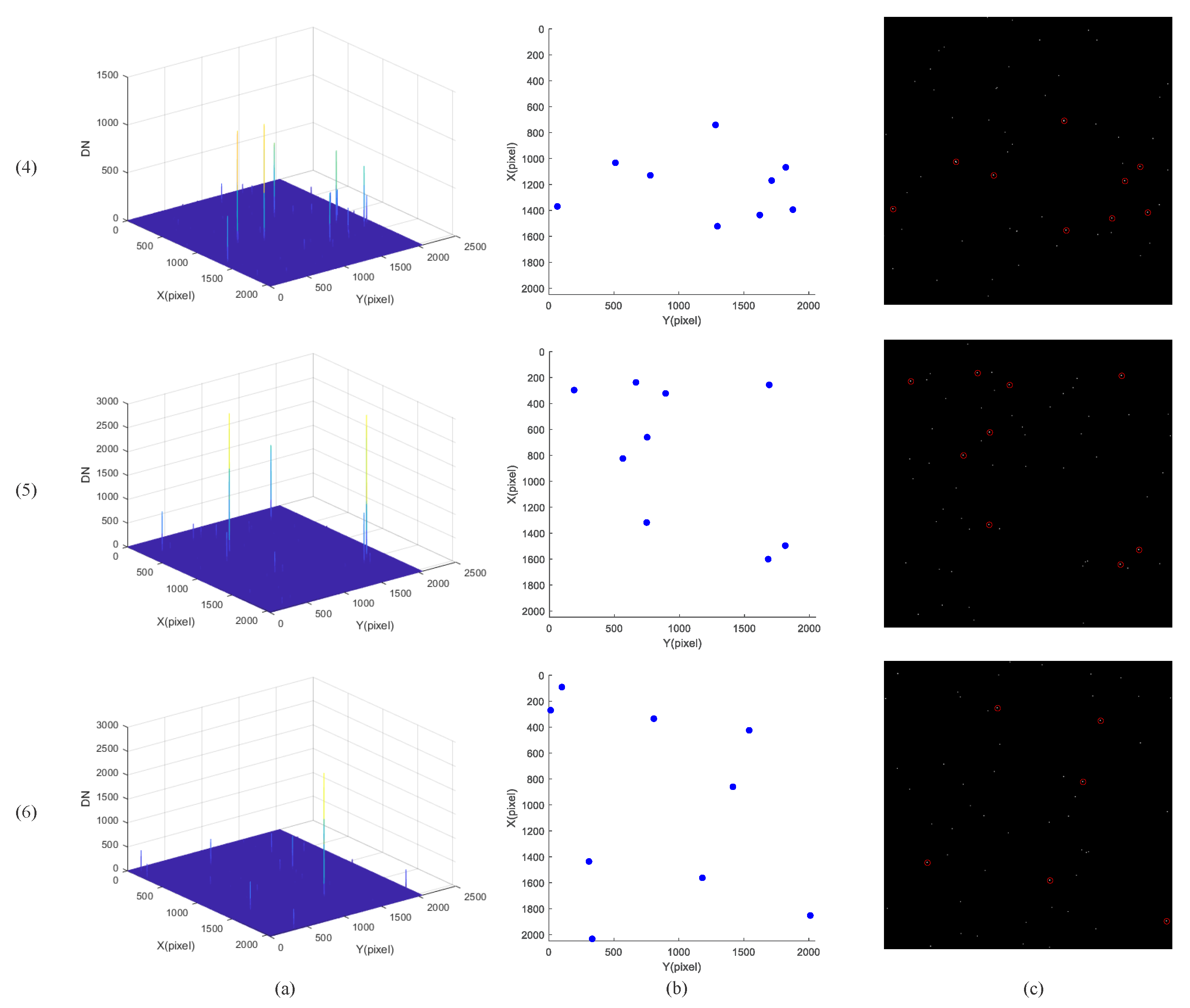

4.1. Results of Adaptive Adjustment Algorithm for the NOS in the FOV

4.2. Absolute Attitude Determination Results

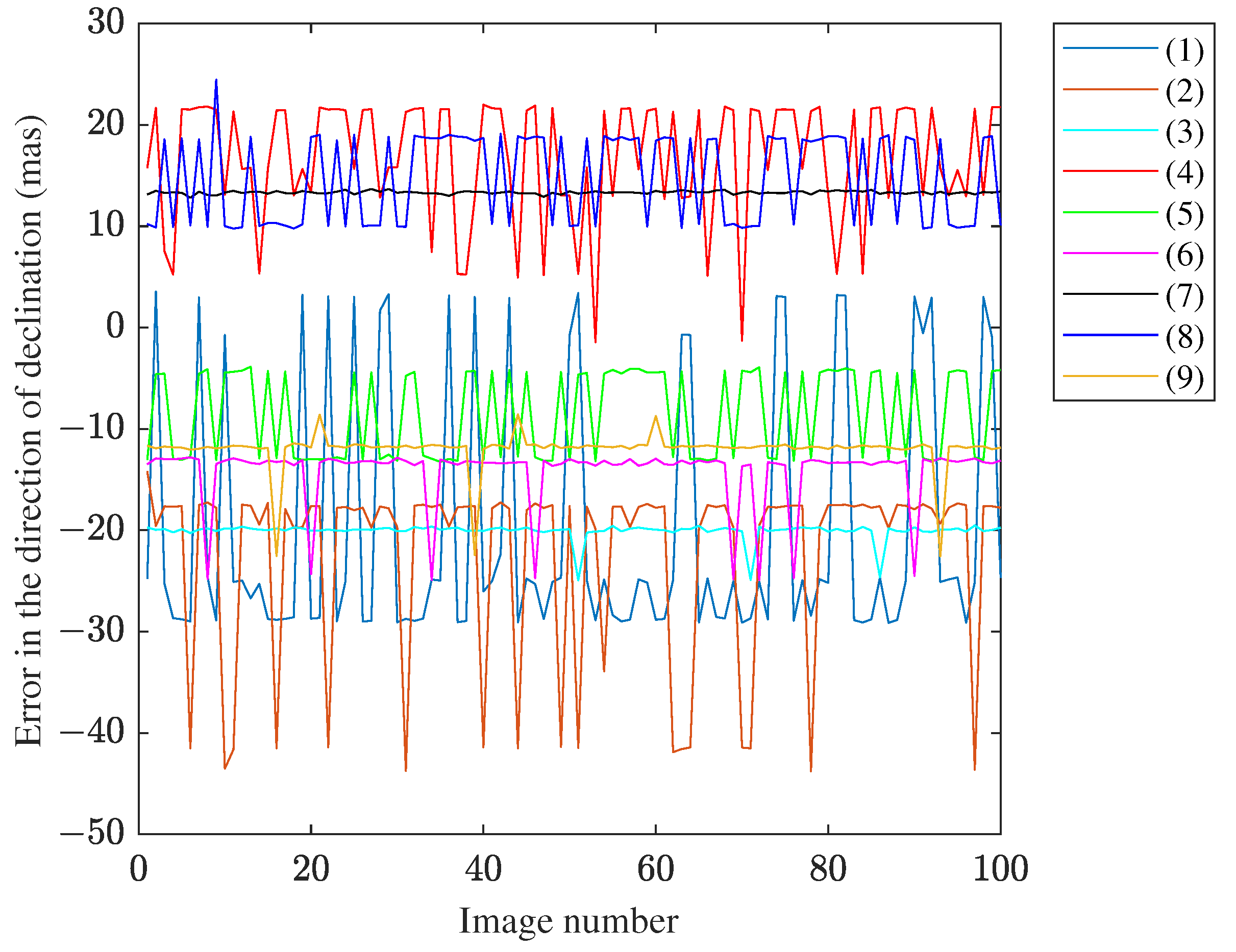

4.3. Absolute Attitude Error Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Parameter | Value |
|---|---|
| Horizontal FOV | |
| Vertical FOV | |
| Focal length | 1290 mm |
| Aperture | 161.25 mm |
| Number of horizontal pixels | 2048 |
| Number of vertical pixels | 2048 |
| Horizontal pixel size | 5.5 µm |
| Vertical pixel size | 5.5 µm |
| Bit depth | 12 bit |
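
As a rough cross-check of the optical parameters in this table, the sketch below derives the per-axis field of view, the pixel scale and the focal ratio from the listed focal length, aperture, pixel count and pixel size. It assumes an ideal pinhole (distortion-free) camera model and a pixel size of 5.5 µm; the function names are hypothetical, and the results are illustrative estimates rather than values stated in the paper.

```python
import math

# Values taken from the parameter table; pixel size assumed to be 5.5 µm.
FOCAL_LENGTH_MM = 1290.0
APERTURE_MM = 161.25
PIXELS_PER_AXIS = 2048
PIXEL_SIZE_MM = 5.5e-3


def field_of_view_deg(n_pixels, pixel_size_mm, focal_length_mm):
    """Full field of view along one detector axis (pinhole model)."""
    half_width_mm = n_pixels * pixel_size_mm / 2.0
    return math.degrees(2.0 * math.atan(half_width_mm / focal_length_mm))


def pixel_scale_arcsec(pixel_size_mm, focal_length_mm):
    """Angle subtended by one pixel at the optical axis, in arcseconds."""
    return math.degrees(math.atan(pixel_size_mm / focal_length_mm)) * 3600.0


fov_deg = field_of_view_deg(PIXELS_PER_AXIS, PIXEL_SIZE_MM, FOCAL_LENGTH_MM)
scale_arcsec = pixel_scale_arcsec(PIXEL_SIZE_MM, FOCAL_LENGTH_MM)
f_number = FOCAL_LENGTH_MM / APERTURE_MM

print(f"FOV per axis: {fov_deg:.4f} deg")
print(f"Pixel scale:  {scale_arcsec:.3f} arcsec/pixel")
print(f"Focal ratio:  f/{f_number:.1f}")
```

Under these assumptions the detector spans roughly 0.5° per axis at a little under 0.9 arcsec per pixel, so milliarcsecond-level attitude errors correspond to a small fraction of a pixel.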

| Right Ascension | |||||
|---|---|---|---|---|---|
| Exposure Time (ms) | |||||
| Declination | |||||
| 54 | 26 | 48 | |||
| 42 | 25 | 50 | |||
| 31 | 25 | 57 | |||

| Coefficient | Value |
|---|---|
| | 987.00 |
| | 987.00 |
| | −63.81 |
| | 0.84 |
| | 987.00 |
| | 218.55 |
| | −3.59 |
| | −989.00 |
| | 9.61 |
| | 29.20 |

| Index | LOS Right Ascension (°) | LOS Declination (°) | Calculated Right Ascension, Without Noise (°) | Calculated Declination, Without Noise (°) | Calculated Right Ascension, With Noise (°) | Calculated Declination, With Noise (°) |
|---|---|---|---|---|---|---|
| 1 | 43.5 | 6.0 | 43.49999556 | 5.99999317 | 43.49999268 | 5.99999304 |
| 2 | 43.5 | 6.5 | 43.49999672 | 6.49999114 | 43.49999502 | 6.49999512 |
| 3 | 43.5 | 7.0 | 43.49999227 | 6.99999580 | 43.49999139 | 6.99999443 |
| 4 | 44.0 | 6.0 | 44.00000170 | 6.00000418 | 44.00000450 | 6.00000441 |
| 5 | 44.0 | 6.5 | 44.00000364 | 6.49999940 | 43.99999806 | 6.50000196 |
| 6 | 44.0 | 7.0 | 44.00000757 | 6.99999761 | 44.00000881 | 6.99999627 |
| 7 | 44.5 | 6.0 | 44.50000703 | 6.00000327 | 44.50001021 | 6.00000366 |
| 8 | 44.5 | 6.5 | 44.50000449 | 6.49999810 | 44.50000029 | 6.50000677 |
| 9 | 44.5 | 7.0 | 44.50000695 | 6.99999882 | 44.50000808 | 6.99999670 |

| Index | Right Ascension Error, Without Noise (mas) | Declination Error, Without Noise (mas) | Right Ascension Error, With Noise (mas) | Declination Error, With Noise (mas) |
|---|---|---|---|---|
| 1 | −16.0 | −24.6 | −26.4 | −25.1 |
| 2 | −11.8 | −31.9 | −17.9 | −17.6 |
| 3 | −27.8 | −15.1 | −31.0 | −20.1 |
| 4 | 6.1 | 15.0 | 16.2 | 15.9 |
| 5 | 13.1 | −2.2 | −7.0 | 7.1 |
| 6 | 27.2 | −8.6 | 31.7 | −13.4 |
| 7 | 25.3 | 11.8 | 36.8 | 13.2 |
| 8 | 16.2 | −6.8 | 1.0 | 24.4 |
| 9 | 25.0 | −4.3 | 29.1 | −11.9 |
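
The tabulated errors appear consistent with taking the calculated LOS direction minus the set LOS direction and converting the difference from degrees to milliarcseconds (1° = 3,600,000 mas). The sketch below reproduces the entries for index 1 from the LOS values listed for that index; the helper name `error_mas` and the dictionary layout are assumptions made for illustration.

```python
MAS_PER_DEGREE = 3600.0 * 1000.0  # 3600 arcsec per degree, 1000 mas per arcsec


def error_mas(calculated_deg, true_deg):
    """Signed error (calculated minus true) converted from degrees to mas."""
    return (calculated_deg - true_deg) * MAS_PER_DEGREE


# Index 1: set LOS direction and the two calculated LOS directions (degrees).
true_ra, true_dec = 43.5, 6.0
calculated = {
    "without noise": (43.49999556, 5.99999317),
    "with noise": (43.49999268, 5.99999304),
}

errors = {
    label: (round(error_mas(ra, true_ra), 1), round(error_mas(dec, true_dec), 1))
    for label, (ra, dec) in calculated.items()
}
print(errors)
```

The rounded results agree with the first row of the error table, which supports this reading of the sign convention and units.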

| Index | Right Ascension RMS (mas) | Declination RMS (mas) |
|---|---|---|
| 1 | 23.5 | 23.7 |
| 2 | 18.9 | 24.0 |
| 3 | 30.6 | 20.1 |
| 4 | 9.0 | 17.8 |
| 5 | 8.3 | 9.6 |
| 6 | 30.6 | 14.5 |
| 7 | 36.7 | 13.3 |
| 8 | 10.0 | 15.4 |
| 9 | 30.0 | 12.1 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, Y.; Fang, C.; Zhang, Q.; Yin, D. Adaptive Absolute Attitude Determination Algorithm for a Fine Guidance Sensor. Electronics 2023, 12, 3437. https://doi.org/10.3390/electronics12163437
