A Practical Non-Profiled Deep-Learning-Based Power Analysis with Hybrid-Supervised Neural Networks

Abstract

1. Introduction

1.1. Our Contributions

1.1.1. Presenting a Novel Architecture with a Hybrid-Supervised Neural Network

1.1.2. Applying the Proposed Architecture for Feature Enhancement

1.1.3. Altering the Proposed Architecture for Data Resynchronization

1.2. Organization

2. Preliminaries

2.1. Deep Learning

2.2. Multi-Layer Perceptron and Convolutional Neural Network

2.3. AES Encryption Algorithm

2.4. Differential Deep Learning Analysis

Algorithm 1 Differential Deep Learning Analysis

Input: N power traces of length L with corresponding plaintexts
Output:
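Algorithm 1 follows the DDLA approach of Timon [16]: for every key hypothesis, the traces are labeled with a bit of a hypothetical intermediate value and a network is trained on those labels. The sketch below covers only the labeling step. It assumes details this section does not state: the target is the first-round AES SubBytes output Sbox(p ⊕ k) of a single key byte, and the label is its LSB, as in Section 3.2. The S-box is computed from its definition (inversion in GF(2^8) followed by the affine map) rather than hard-coded. All function names are illustrative.

```python
from __future__ import annotations

# Sketch of DDLA-style labeling. ASSUMPTIONS: first-round SubBytes target,
# one key byte, LSB label. Function names are illustrative only.


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo x^8 + x^4 + x^3 + x + 1 (0x11B)."""
    product = 0
    while b:
        if b & 1:
            product ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B  # reduce back to 8 bits
        b >>= 1
    return product


def gf_inv(a: int) -> int:
    """Multiplicative inverse computed as a^254; AES maps 0 to 0 by convention."""
    if a == 0:
        return 0
    result, base, exponent = 1, a, 254
    while exponent:
        if exponent & 1:
            result = gf_mul(result, base)
        base = gf_mul(base, base)
        exponent >>= 1
    return result


def rotl8(x: int, shift: int) -> int:
    """Rotate a byte left by `shift` bits."""
    return ((x << shift) | (x >> (8 - shift))) & 0xFF


def build_sbox() -> list[int]:
    """AES S-box: field inversion followed by the affine transformation."""
    table = []
    for a in range(256):
        b = gf_inv(a)
        table.append(b ^ rotl8(b, 1) ^ rotl8(b, 2) ^ rotl8(b, 3) ^ rotl8(b, 4) ^ 0x63)
    return table


SBOX = build_sbox()


def ddla_labels(plaintext_bytes: list[int], key_guess: int) -> list[int]:
    """Binary label per trace: LSB of Sbox(p XOR k) under one key hypothesis."""
    return [SBOX[p ^ key_guess] & 1 for p in plaintext_bytes]


def all_hypothesis_labels(plaintext_bytes: list[int]) -> dict[int, list[int]]:
    """DDLA trains one network per key guess, so build one label set per guess."""
    return {k: ddla_labels(plaintext_bytes, k) for k in range(256)}
```

For example, `ddla_labels([0x00, 0x01, 0x53], 0x00)` returns `[1, 0, 1]`, because Sbox(0x00) = 0x63, Sbox(0x01) = 0x7C and Sbox(0x53) = 0xED.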

2.5. Autoencoder and Supervision Methods in Deep Learning

3. Proposed Hybrid-Supervised Side-Channel Analysis

3.1. Design Principle of Hybrid-Supervised Side-Channel Analysis

Algorithm 2 The Procedure of Training the Self-Supervised Autoencoder

Input: N power traces of length L with corresponding plaintexts
Output: Trained autoencoder for feature enhancement

Algorithm 3 Hybrid-Supervised Side-Channel Analysis (HSSCA)

Input: N power traces of length L with corresponding plaintexts
Output:

3.2. Architecture of MLP-HSSCA

- The MLP self-supervised autoencoder consists primarily of two fully connected (FC) layers. The first layer takes an input of L data points and produces 50 output neurons. The second layer takes these 50 values as input and outputs L neurons, one for each sample point of a data trace. A hard sigmoid activation function is applied after the first layer, and the output of the second layer is passed through a sigmoid activation function. In the formulas describing the operation of the MLP self-supervised autoencoder, the encoder and decoder are realized with FC layers, and the labeling procedure of the autoencoder computes the ideal target data. After training, the output of the autoencoder approximates the ideal data calculated by the labeling procedure. Figure 3 illustrates the architecture of an MLP self-supervised autoencoder.

- The non-profiled deep learning side-channel network is an MLP model with two hidden layers of 70 and 20 neurons, respectively. The first layer takes L input data points, a Rectified Linear Unit (ReLU) activation function is applied between consecutive layers, and at the output of the second hidden layer the probabilities of the two classes are produced by a SoftMax activation function. For the labeling scheme, the Least Significant Bit (LSB) is used as the label of the data. The overall architecture of MLP-HSSCA is shown in Figure 4.
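The MLP-HSSCA data flow described above can be sketched in dependency-free Python to make the shapes, activations and parameter counts concrete. Layer sizes come from the text and the MLP parameter table (L = 700 for ASCAD), assuming a 2-neuron output layer after the 20-neuron layer. The hard sigmoid formula (PyTorch-style clamp(x/6 + 1/2, 0, 1)), the uniform initialization bound 1/sqrt(n_in) and the random weights are assumptions, and no training is performed.

```python
import math
import random

# Sizes from Section 3.2 and the MLP parameter table (L = 700 for ASCAD).
TRACE_LEN = 700
AE_SIZES = [TRACE_LEN, 50, TRACE_LEN]   # autoencoder: L -> 50 -> L
CLF_SIZES = [TRACE_LEN, 70, 20, 2]      # classifier: L -> 70 -> 20 -> 2


def hard_sigmoid(x: float) -> float:
    # ASSUMPTION: PyTorch-style hard sigmoid, clamp(x / 6 + 1/2, 0, 1).
    return min(1.0, max(0.0, x / 6.0 + 0.5))


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x: float) -> float:
    return max(0.0, x)


def identity(x: float) -> float:
    return x


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def init_dense(n_in, n_out, rng):
    # ASSUMPTION: uniform initialization in [-1/sqrt(n_in), 1/sqrt(n_in)].
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-bound, bound) for _ in range(n_out)]
    return weights, biases


def dense(x, layer, activation):
    """Fully connected layer followed by an element-wise activation."""
    weights, biases = layer
    return [activation(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]


def count_params(sizes):
    """Weights plus biases of a stack of FC layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))


rng = random.Random(0)
autoencoder = [init_dense(a, b, rng) for a, b in zip(AE_SIZES, AE_SIZES[1:])]
classifier = [init_dense(a, b, rng) for a, b in zip(CLF_SIZES, CLF_SIZES[1:])]


def enhance(trace):
    """Self-supervised autoencoder: FC + hard sigmoid, then FC + sigmoid."""
    code = dense(trace, autoencoder[0], hard_sigmoid)
    return dense(code, autoencoder[1], sigmoid)


def classify(trace):
    """Classifier: two ReLU hidden layers, then SoftMax over 2 classes."""
    h = dense(trace, classifier[0], relu)
    h = dense(h, classifier[1], relu)
    return softmax(dense(h, classifier[2], identity))


# The classifier consumes the autoencoder output, not the raw trace.
probs = classify(enhance([0.5] * TRACE_LEN))
```

With L = 700 the autoencoder has 70,750 trainable parameters and the classifier 50,532. In practice these layers would come from a deep learning framework and be trained with Adam at a learning rate of 0.001, as listed in the parameter table.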

3.3. Architecture of CNN-HSSCA

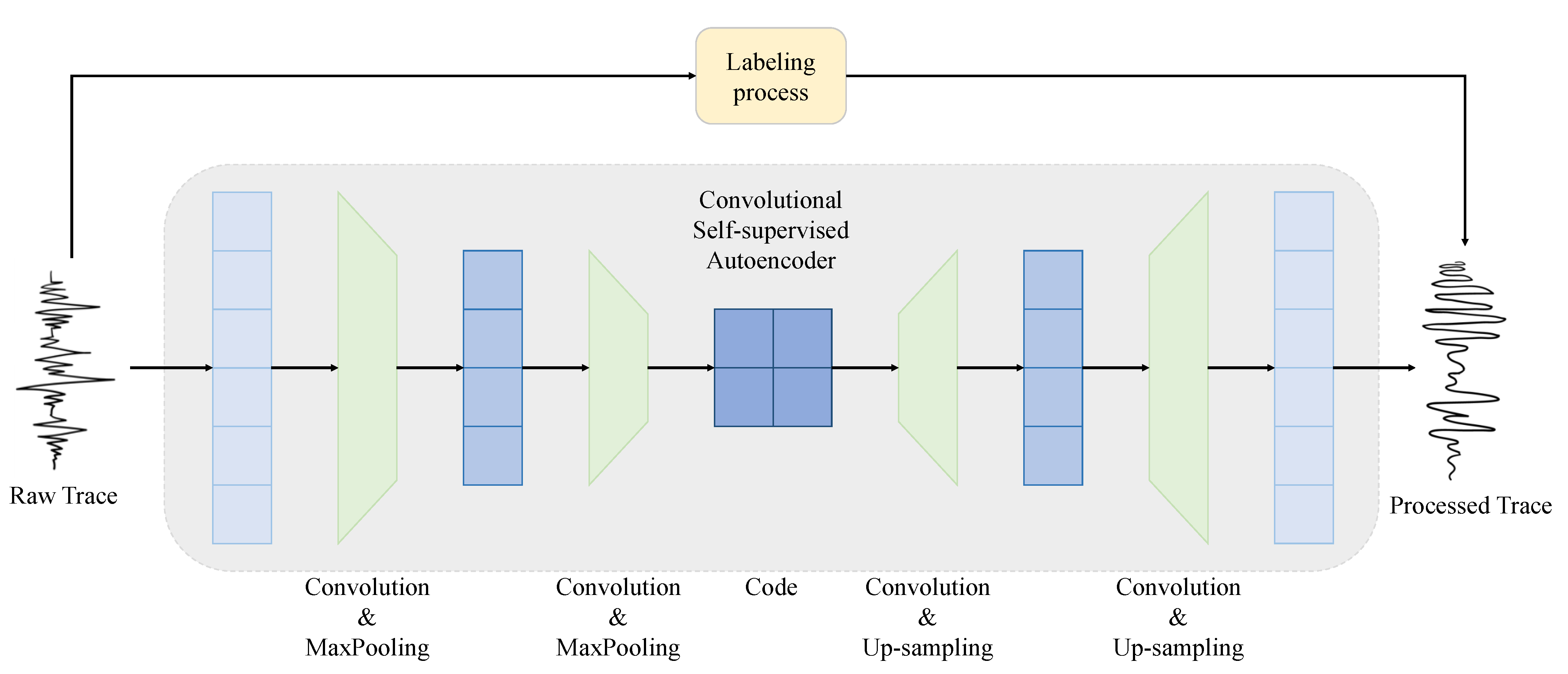

- The encoder of the CNN self-supervised autoencoder is built from convolutional and pooling layers, and the decoder is built from convolutional and upsampling layers. The first layer of the encoder is a 1D convolutional layer with 4 output channels, a kernel size of 17 and a padding of 8, which keeps the length of the 1D input unchanged. It is followed by a MaxPooling layer with a kernel size of 2, which halves the data length. Next comes a 1D convolutional layer with 8 output channels and the same kernel size and padding, followed by another identical MaxPooling layer. The input of the encoder is a 1D power trace of size (L, 1), and the output of the encoder is a code of size (L/4, 8). The decoder mirrors the encoder, except that the pooling layers are replaced by linear upsampling layers. Each convolutional layer in the autoencoder is followed by a Randomized leaky Rectified Linear Unit (RReLU) activation function. Finally, the decoder restores the output to size (L, 1). In the formulas describing the operation of the CNN self-supervised autoencoder, the encoding and decoding layers are composed of convolutional layers, pooling layers, upsampling layers and activation functions, and these layers are stacked to form the CNN encoder and decoder. The architecture of a CNN self-supervised autoencoder is shown in Figure 5.

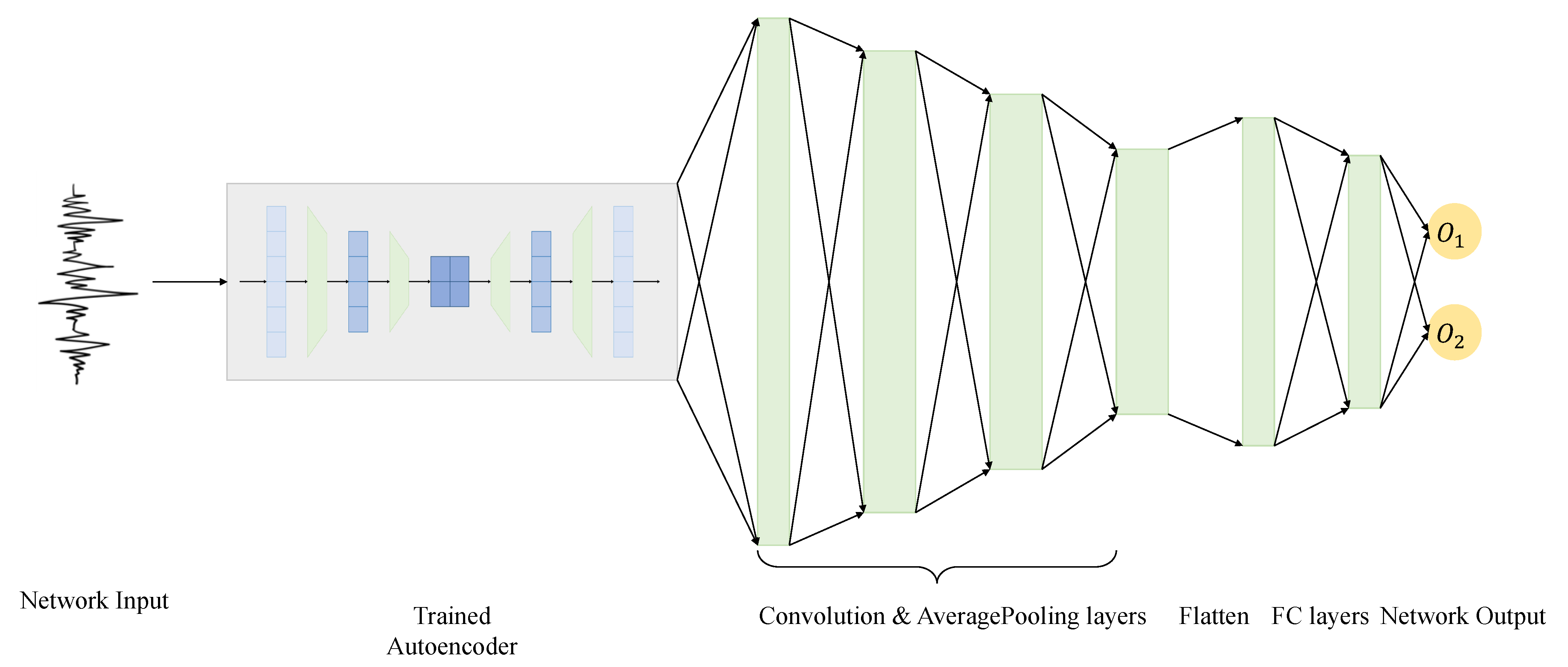
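The length bookkeeping of the CNN autoencoder can be checked with a few lines of Python. The encoder follows the text (kernel 17, padding 8, MaxPooling with kernel 2). The decoder's layer order (×2 upsampling, then convolution, ending in a single channel) is an assumption based on the statement that it mirrors the encoder and on the 4/8/4 filter counts in the CNN parameter table. Output lengths use the standard PyTorch `Conv1d`/`MaxPool1d` formulas with dilation 1.

```python
def conv1d_out(length, kernel=17, padding=8, stride=1):
    """Output length of a 1D convolution (dilation 1)."""
    return (length + 2 * padding - kernel) // stride + 1


def maxpool_out(length, kernel=2):
    """Output length of 1D max pooling with stride equal to kernel, no padding."""
    return (length - kernel) // kernel + 1


def cnn_autoencoder_shapes(trace_len):
    """List of (layer name, (length, channels)) after each layer."""
    length, channels = trace_len, 1
    shapes = [("input", (length, channels))]
    # Encoder, as described: two stages of conv(k=17, p=8) + MaxPool(2).
    for out_channels in (4, 8):
        length, channels = conv1d_out(length), out_channels
        shapes.append((f"conv{out_channels}", (length, channels)))
        length = maxpool_out(length)
        shapes.append(("maxpool", (length, channels)))
    # Decoder: ASSUMED mirror ordering, x2 linear upsampling then conv;
    # the final conv returns to a single channel.
    for out_channels in (4, 1):
        length = length * 2
        shapes.append(("upsample", (length, channels)))
        length, channels = conv1d_out(length), out_channels
        shapes.append((f"conv{out_channels}", (length, channels)))
    return shapes
```

For L = 700 the code has shape (175, 8) and the decoder returns (700, 1). When L is not a multiple of 4 (for example 350), floor division in the pooling layers means plain ×2 upsampling yields 348 samples instead of 350, so an implementation would need to upsample to an explicit target size or crop or pad the input.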

- The CNN non-profiled deep learning side-channel network consists of 3 hidden convolutional layers with kernel sizes of 32, 16 and 8, respectively, each with 4 output channels. The first layer takes L input data points, and each convolutional layer is followed by a ReLU activation function. The convolutional layers are interleaved with 3 AveragePooling layers. After the last AveragePooling layer, the data are flattened and passed through 2 hidden FC layers, and at the output of the second hidden FC layer the probabilities of the two classes are produced by a SoftMax activation function. The labeling scheme is the same as for the MLP. Figure 6 illustrates the structure of CNN-HSSCA. As Figure 6 shows, the overall structure of CNN-HSSCA matches that of MLP-HSSCA: an autoencoder followed by a classifier network. Each power trace is preprocessed by the convolutional self-supervised autoencoder, and the result is used as training data for the classifier network.
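The overall HSSCA flow described above (enhance every trace with the trained autoencoder, then train a classifier on the enhanced traces under each key hypothesis) can be sketched as a small driver. `enhance`, `label_fn` and `train_and_score` are hypothetical callables standing in for the trained autoencoder, the labeling scheme (for example the LSB of the S-box output) and the training of a fresh classifier that returns a metric where higher means better. As in DDLA, the key guess whose network trains best is ranked first.

```python
from __future__ import annotations

from typing import Callable, Sequence

Trace = Sequence[float]


def hssca_rank_keys(
    traces: Sequence[Trace],
    plaintext_bytes: Sequence[int],
    enhance: Callable[[Trace], Trace],
    label_fn: Callable[[int, int], int],
    train_and_score: Callable[[list[list[float]], list[int]], float],
) -> list[tuple[int, float]]:
    """Rank the 256 hypotheses for one key byte, best first.

    enhance(trace)        -> trained self-supervised autoencoder (hypothetical)
    label_fn(p, k)        -> binary label for plaintext byte p under key guess k
    train_and_score(X, y) -> trains a fresh classifier and returns a metric where
                             higher means the labels were easier to learn
    """
    # Feature enhancement: each trace passes through the autoencoder once.
    enhanced = [list(enhance(t)) for t in traces]

    scores = []
    for key_guess in range(256):
        labels = [label_fn(p, key_guess) for p in plaintext_bytes]
        scores.append((key_guess, train_and_score(enhanced, labels)))

    # Stable sort: ties keep ascending key-guess order.
    return sorted(scores, key=lambda item: item[1], reverse=True)
```

In this sketch the enhancement runs once per trace, outside the key loop, reflecting the description that each trace is preprocessed by the autoencoder before classifier training; only the classifier is retrained for each of the 256 hypotheses.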

3.4. HSSCA for Small-Scale Datasets

3.5. HSSCA for Datasets with Desynchronized Traces

Algorithm 4 Labeling Procedure of Desynchronized Traces

Input: N power traces of length L with corresponding plaintexts
Output: Reference traces as labels of the autoencoder
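This section does not spell out how the reference traces of Algorithm 4 are produced. Purely as an illustration of what a realigned target trace could look like, the sketch below uses a different, generic technique: each trace is shifted by the single lag that maximizes its unnormalized cross-correlation with a reference trace. It is not presented as the authors' labeling procedure; the function names and the `max_shift` search window are hypothetical.

```python
# ILLUSTRATION ONLY: generic cross-correlation realignment, not the authors'
# Algorithm 4. Function names and the max_shift window are hypothetical.


def best_shift(trace, reference, max_shift):
    """Lag s in [-max_shift, max_shift] maximizing sum_t trace[t + s] * reference[t]."""
    best_s, best_score = 0, float("-inf")
    for s in range(-max_shift, max_shift + 1):
        score = 0.0
        for t, ref_value in enumerate(reference):
            if 0 <= t + s < len(trace):  # only overlapping samples contribute
                score += trace[t + s] * ref_value
        if score > best_score:  # strict '>' keeps the first maximum on ties
            best_s, best_score = s, score
    return best_s


def realign(trace, shift):
    """Undo a global delay: out[t] = trace[t + shift], zero-filled at the edges."""
    n = len(trace)
    return [trace[t + shift] if 0 <= t + shift < n else 0.0 for t in range(n)]


def realigned_targets(traces, reference, max_shift):
    """One realigned copy of each trace, usable as an autoencoder target."""
    return [realign(tr, best_shift(tr, reference, max_shift)) for tr in traces]
```

A single global shift only compensates a global offset. Random delays inserted at several points during execution, as in the countermeasure of Coron and Kizhvatov [38], displace different parts of a trace by different amounts, which one global shift cannot fully correct.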

4. Experiment Results

4.1. Experiment Environment and Datasets

4.1.1. Experiment Environment

4.1.2. ASCAD Dataset

4.1.3. Desynchronized ASCAD Dataset

4.1.4. AES_RD Dataset

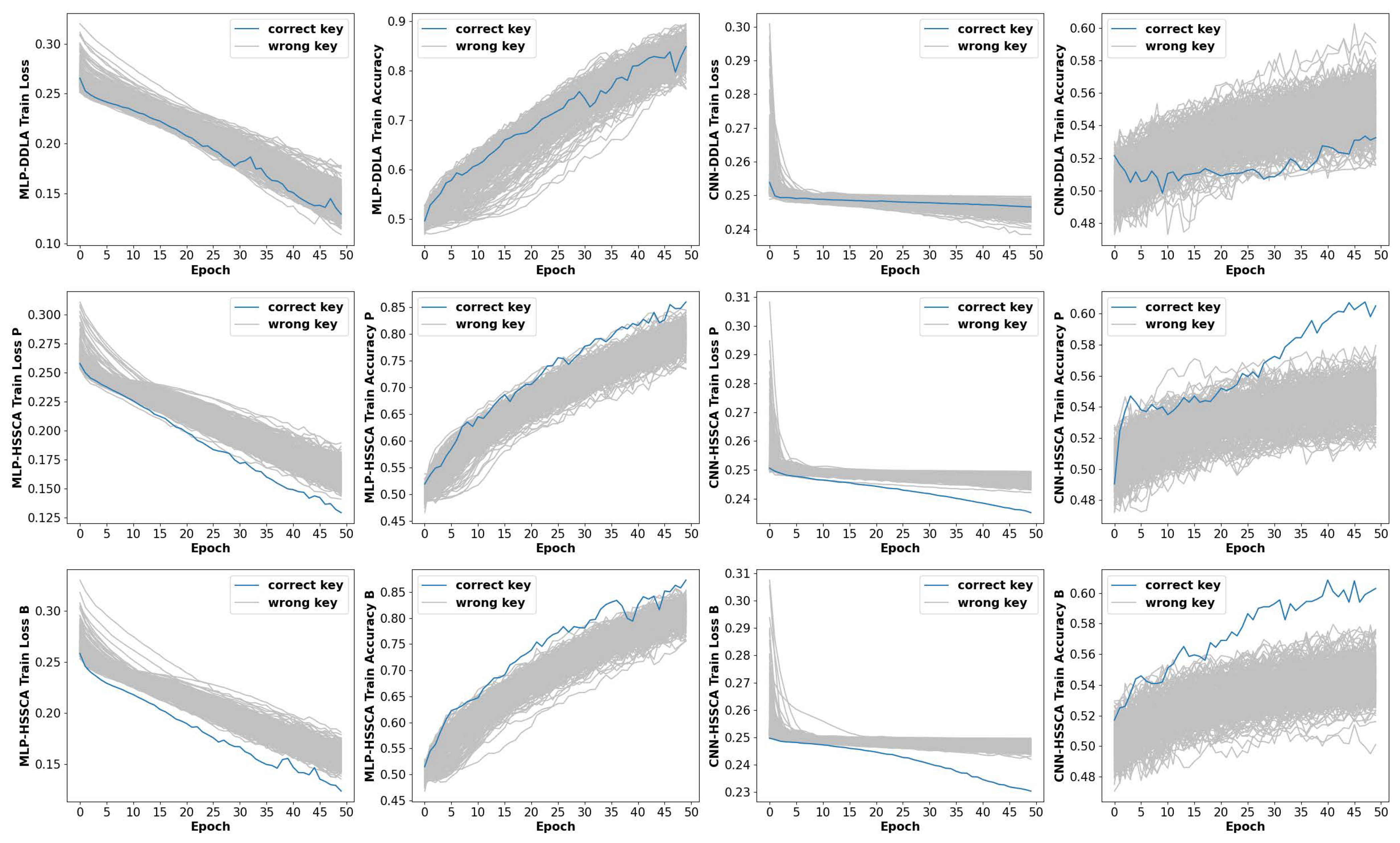

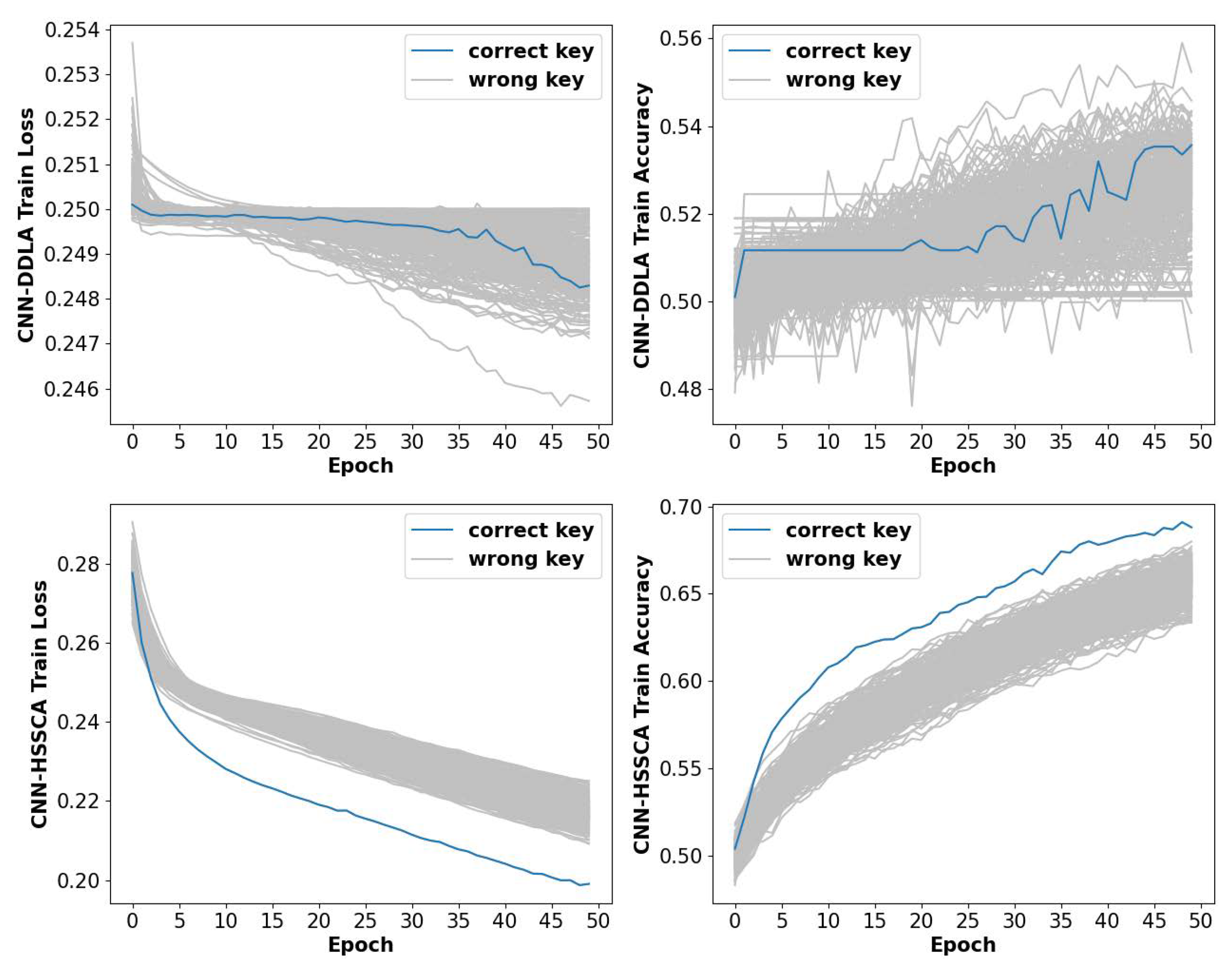

4.2. Implementation Results of HSSCA on Feature Enhancement

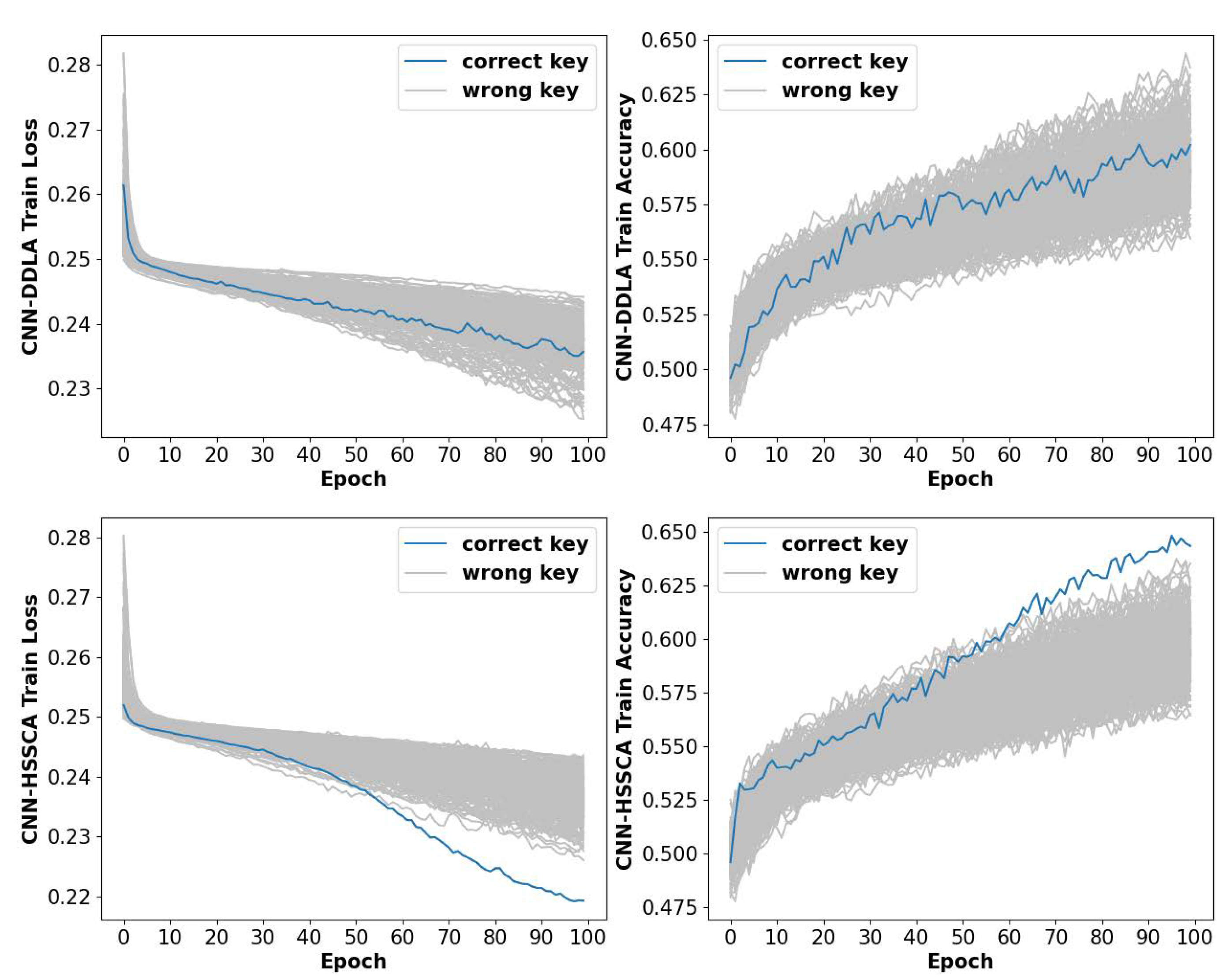

4.3. Implementation Results of HSSCA on Desynchronized Datasets

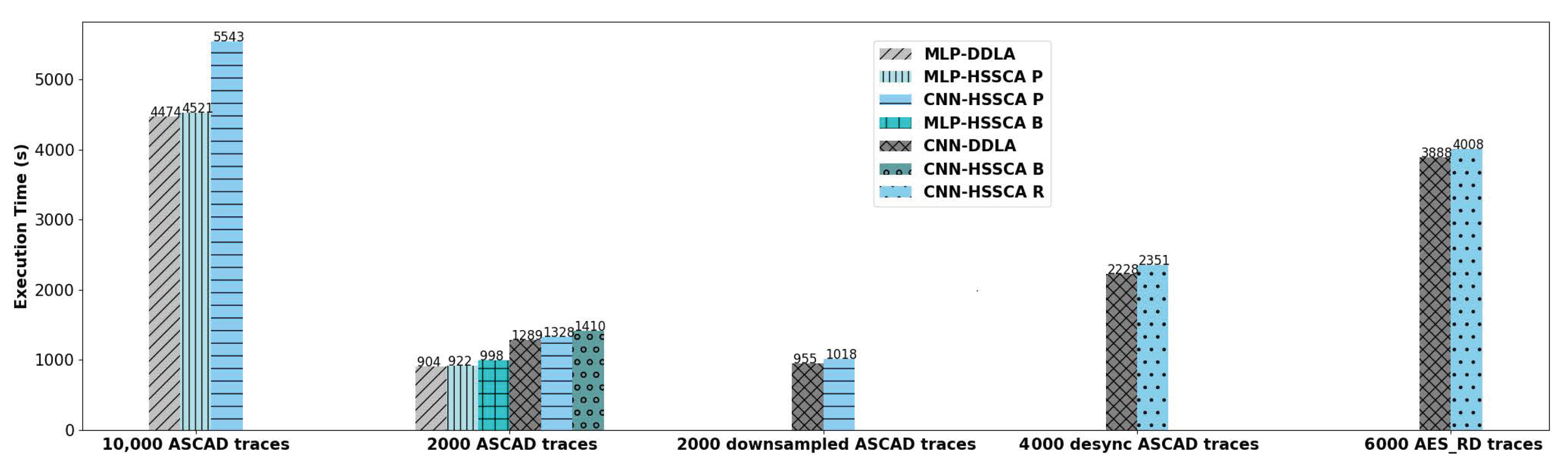

4.4. Reliability and Computational Efficiency Analysis of HSSCA

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CPA | Correlation Power Analysis |
| DPA | Differential Power Analysis |
| DDLA | Differential Deep Learning Analysis |
| AES | Advanced Encryption Standard |
| SNR | Signal-to-Noise Ratio |
| HSSCA | Hybrid-Supervised Side-Channel Analysis |
| MLP | Multilayer Perceptron |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| ML | Machine Learning |
| AI | Artificial Intelligence |
| FC | Fully Connected |
| ReLU | Rectified Linear Unit |
| LSB | Least Significant Bit |
| RReLU | Randomized leaky Rectified Linear Unit |
| MSB | Most Significant Bit |
| MSE | Mean Square Error |
| PoI | Points of Interest |
| PCA | Principal Component Analysis |

References

1. Kocher, P.C. Timing attacks on implementations of Diffie-Hellman, RSA, DSS, and other systems. In Proceedings of the Advances in Cryptology–CRYPTO’96: 16th Annual International Cryptology Conference, Santa Barbara, CA, USA, 18–22 August 1996; Springer: Berlin/Heidelberg, Germany, 1996; pp. 104–113.

2. Le, T.H.; Canovas, C.; Clédière, J. An overview of side channel analysis attacks. In Proceedings of the 2008 ACM Symposium on Information, Computer and Communications Security, Tokyo, Japan, 18–20 March 2008; pp. 33–43.

3. Standaert, F.X.; Koeune, F.; Schindler, W. How to compare profiled side-channel attacks? In Proceedings of the Applied Cryptography and Network Security: 7th International Conference, ACNS 2009, Paris-Rocquencourt, Paris, France, 2–5 June 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 485–498.

4. Chari, S.; Rao, J.R.; Rohatgi, P. Template attacks. In Proceedings of the Cryptographic Hardware and Embedded Systems–CHES 2002: 4th International Workshop, Redwood Shores, CA, USA, 13–15 August 2002; Revised Papers. Springer: Berlin/Heidelberg, Germany, 2003; pp. 13–28.

5. Rechberger, C.; Oswald, E. Practical template attacks. In International Workshop on Information Security Applications; Springer: Berlin/Heidelberg, Germany, 2004; pp. 440–456.

6. Hettwer, B.; Gehrer, S.; Güneysu, T. Applications of machine learning techniques in side-channel attacks: A survey. J. Cryptogr. Eng. 2020, 10, 135–162.

7. Schindler, W.; Lemke, K.; Paar, C. A stochastic model for differential side channel cryptanalysis. In Proceedings of the Cryptographic Hardware and Embedded Systems–CHES 2005: 7th International Workshop, Edinburgh, UK, 29 August–1 September 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 30–46.

8. Lemke-Rust, K.; Paar, C. Analyzing side channel leakage of masked implementations with stochastic methods. In Proceedings of the Computer Security–ESORICS 2007: 12th European Symposium on Research in Computer Security, Dresden, Germany, 24–26 September 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 454–468.

9. Brier, E.; Clavier, C.; Olivier, F. Correlation power analysis with a leakage model. In Proceedings of the Cryptographic Hardware and Embedded Systems–CHES 2004: 6th International Workshop, Cambridge, MA, USA, 11–13 August 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 16–29.

10. Sakamoto, J.; Tachibana, K.; Matsumoto, T. Application of Profiled Analysis to ADC-Based Remote Side-Channel Attacks. In Proceedings of the 2023 IEEE 9th Intl Conference on Big Data Security on Cloud (BigDataSecurity), IEEE Intl Conference on High Performance and Smart Computing (HPSC) and IEEE Intl Conference on Intelligent Data and Security (IDS), New York, NY, USA, 6–8 May 2023; pp. 115–121.

11. Kocher, P.; Jaffe, J.; Jun, B. Differential power analysis. In Proceedings of the Advances in Cryptology–CRYPTO’99: 19th Annual International Cryptology Conference, Santa Barbara, CA, USA, 15–19 August 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 388–397.

12. Maghrebi, H.; Portigliatti, T.; Prouff, E. Breaking cryptographic implementations using deep learning techniques. In Proceedings of the Security, Privacy, and Applied Cryptography Engineering: 6th International Conference, SPACE 2016, Hyderabad, India, 14–18 December 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 3–26.

13. Wouters, L.; Arribas, V.; Gierlichs, B.; Preneel, B. Revisiting a methodology for efficient CNN architectures in profiling attacks. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 2020, 147–168.

14. Lu, X.; Zhang, C.; Cao, P.; Gu, D.; Lu, H. Pay attention to raw traces: A deep learning architecture for end-to-end profiling attacks. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2021, 2021, 235–274.

15. Pfeifer, C.; Haddad, P. Spread: A new layer for profiled deep-learning side-channel attacks. Cryptol. Eprint Arch. 2018, 2018.

16. Timon, B. Non-profiled deep learning-based side-channel attacks with sensitivity analysis. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2019, 2019, 107–131.

17. Le, T.H.; Clédière, J.; Serviere, C.; Lacoume, J.L. Noise reduction in side channel attack using fourth-order cumulant. IEEE Trans. Inf. Forensics Secur. 2007, 2, 710–720.

18. Das, D.; Maity, S.; Nasir, S.B.; Ghosh, S.; Raychowdhury, A.; Sen, S. High efficiency power side-channel attack immunity using noise injection in attenuated signature domain. In Proceedings of the 2017 IEEE International Symposium on Hardware Oriented Security and Trust (HOST), McLean, VA, USA, 1–5 May 2017; pp. 62–67.

19. Do, N.T.; Hoang, V.P.; Doan, V.S.; Pham, C.K. On the performance of non-profiled side channel attacks based on deep learning techniques. IET Inf. Secur. 2023, 17, 377–393.

20. Cheng, K.; Song, Z.; Cui, X.; Zhang, L. Hybrid denoising based correlation power analysis for AES. In Proceedings of the 2021 IEEE 4th Advanced Information Management, Communicates, Electronic and Automation Control Conference (IMCEC), Chongqing, China, 18–20 June 2021; Volume 4, pp. 1192–1195.

21. Tseng, C.C.; Lee, S.L. Minimax design of graph filter using Chebyshev polynomial approximation. IEEE Trans. Circuits Syst. II Express Briefs 2021, 68, 1630–1634.

22. Maghrebi, H.; Prouff, E. On the use of independent component analysis to denoise side-channel measurements. In Proceedings of the Constructive Side-Channel Analysis and Secure Design: 9th International Workshop, COSADE 2018, Singapore, 23–24 April 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 61–81.

23. Kwon, D.; Kim, H.; Hong, S. Non-profiled deep learning-based side-channel preprocessing with autoencoders. IEEE Access 2021, 9, 57692–57703.

24. Paguada, S.; Batina, L.; Armendariz, I. Toward practical autoencoder-based side-channel analysis evaluations. Comput. Netw. 2021, 196, 108230.

25. Wu, L.; Picek, S. Remove some noise: On pre-processing of side-channel measurements with autoencoders. IACR Trans. Cryptogr. Hardw. Embed. Syst. 2020, 2020, 389–415.

26. Kuroda, K.; Fukuda, Y.; Yoshida, K.; Fujino, T. Practical aspects on non-profiled deep-learning side-channel attacks against AES software implementation with two types of masking countermeasures including RSM. In Proceedings of the 5th Workshop on Attacks and Solutions in Hardware Security, Virtual Event, Republic of Korea, 19 November 2021; pp. 29–40.

27. Kwon, D.; Hong, S.; Kim, H. Optimizing implementations of non-profiled deep learning-based side-channel attacks. IEEE Access 2022, 10, 5957–5967.

28. Won, Y.S.; Han, D.G.; Jap, D.; Bhasin, S.; Park, J.Y. Non-profiled side-channel attack based on deep learning using picture trace. IEEE Access 2021, 9, 22480–22492.

29. Hu, F.; Wang, H.; Wang, J. Cross subkey side channel analysis based on small samples. Sci. Rep. 2022, 12, 6254.

30. Taud, H.; Mas, J. Multilayer perceptron (MLP). In Geomatic Approaches for Modeling Land Change Scenarios; Springer International: Cham, Switzerland, 2018; pp. 451–455.

31. O’Shea, K.; Nash, R. An introduction to convolutional neural networks. arXiv 2015, arXiv:1511.08458.

32. Eren, L.; Ince, T.; Kiranyaz, S. A generic intelligent bearing fault diagnosis system using compact adaptive 1D CNN classifier. J. Signal Process. Syst. 2019, 91, 179–189.

33. Daemen, J.; Rijmen, V. Rijndael: The advanced encryption standard. Dr. Dobb’s J. Softw. Tools Prof. Program. 2001, 26, 137–139.

34. Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

35. Vincent, P.; Larochelle, H.; Bengio, Y.; Manzagol, P.A. Extracting and composing robust features with denoising autoencoders. In Proceedings of the 25th International Conference on Machine Learning, Helsinki, Finland, 5–9 July 2008; pp. 1096–1103.

36. Liu, X.; Zhang, F.; Hou, Z.; Mian, L.; Wang, Z.; Zhang, J.; Tang, J. Self-supervised learning: Generative or contrastive. IEEE Trans. Knowl. Data Eng. 2021, 35, 857–876.

37. Benadjila, R.; Prouff, E.; Strullu, R.; Cagli, E.; Dumas, C. Deep learning for side-channel analysis and introduction to ASCAD database. J. Cryptogr. Eng. 2020, 10, 163–188.

38. Coron, J.S.; Kizhvatov, I. An efficient method for random delay generation in embedded software. In Proceedings of the Cryptographic Hardware and Embedded Systems–CHES 2009: 11th International Workshop, Lausanne, Switzerland, 6–9 September 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 156–170.

39. Do, N.T.; Hoang, V.P.; Doan, V.S. A novel non-profiled side channel attack based on multi-output regression neural network. J. Cryptogr. Eng. 2023, 1–13.

40. Nomikos, K.; Papadimitriou, A.; Psarakis, M.; Pikrakis, A.; Beroulle, V. Evaluation of Hiding-based Countermeasures against Deep Learning Side Channel Attacks with Pre-trained Networks. In Proceedings of the 2022 IEEE International Symposium on Defect and Fault Tolerance in VLSI and Nanotechnology Systems (DFT), Austin, TX, USA, 19–21 October 2022; pp. 1–6.

| Parameter | MLP Autoencoder | MLP Classifier |
|---|---|---|
| Hidden layers | 1 (50 FC) | 2 (70 FC, 20 FC) |
| Output size | 700 | 2 (SoftMax) |
| Label | Plaintext/Binary | Binary |
| Initialization | Uniform | Uniform |
| Optimization | Adam | Adam |
| Learning rate | 0.001 | 0.001 |
| Batch size | 1000 | 1000 |

| Parameter | CNN Autoencoder | CNN Classifier |
|---|---|---|
| Hidden layers | 3 | 4 |
| | 4 × 17 × 1 filters | 4 × 32 × 1 filters |
| | 8 × 17 × 1 filters | 4 × 16 × 1 filters |
| | 4 × 17 × 1 filters | 4 × 8 × 1 filters |
| | | 18 FC |
| Output size | 700/350/2000 | 2 (SoftMax) |
| Label | Plaintext/Binary/Realign | Binary |
| Initialization | Xavier Normal | Xavier Normal |
| Optimization | Adam | Adam |
| Learning rate | 0.001 | 0.001 |
| Batch size | 1000 | 1000 |

| Dataset | Traces | Samples | Protection |
|---|---|---|---|
| ASCAD | 60,000 | 700 | First-order Boolean Mask |
| ASCAD_desync | 60,000 | 700 | First-order Boolean Mask & Random Delay |
| AES_RD | 50,000 | 3500 | Random Delay |

| Reference | Network Architecture | Number of Traces | Epochs |
|---|---|---|---|
| [26] | MLP | 30,000 | 100 |
| [16] | MLP | 20,000 | 50 |
| [19] | MLP/CNN | 20,000 | 50 |
| [39] | CNN | 20,000 | 50 |
| [24] | profiled CNN | 60,000 | - |
| [40] | profiled MLP/CNN | 10,000 | - |
| [28] | MLP/BNN | 10,000 | 100 |
| This work | MLP-HSSCA | 2000 | 50 |
| This work | CNN-HSSCA | 2000 | 50 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kong, F.; Wang, X.; Pu, K.; Zhang, J.; Dang, H. A Practical Non-Profiled Deep-Learning-Based Power Analysis with Hybrid-Supervised Neural Networks. Electronics 2023, 12, 3361. https://doi.org/10.3390/electronics12153361

Kong F, Wang X, Pu K, Zhang J, Dang H. A Practical Non-Profiled Deep-Learning-Based Power Analysis with Hybrid-Supervised Neural Networks. Electronics. 2023; 12(15):3361. https://doi.org/10.3390/electronics12153361

Chicago/Turabian Style: Kong, Fancong, Xiaohua Wang, Kangran Pu, Jingqi Zhang, and Hua Dang. 2023. "A Practical Non-Profiled Deep-Learning-Based Power Analysis with Hybrid-Supervised Neural Networks" Electronics 12, no. 15: 3361. https://doi.org/10.3390/electronics12153361

APA Style: Kong, F., Wang, X., Pu, K., Zhang, J., & Dang, H. (2023). A Practical Non-Profiled Deep-Learning-Based Power Analysis with Hybrid-Supervised Neural Networks. Electronics, 12(15), 3361. https://doi.org/10.3390/electronics12153361