Abstract

Fog computing has attracted considerable attention in recent years because it brings cloud computing resources to the network edge in a distributed manner and can respond quickly to intensive tasks from different user equipment (UE) applications. However, fog resources are also limited. Given the number of Internet of Things (IoT) applications and their traffic demand, designing an effective offloading strategy and resource allocation scheme that reduces the offloading cost of UE systems remains an important challenge. To this end, this paper investigates the problem of partial offloading and resource allocation under a cloud-fog coordination network architecture, which is formulated as a mixed-integer nonlinear programming (MINLP) problem. A new weighting metric, the cloud resource rental cost, is introduced. The optimization function of offloading cost is defined as a weighted sum of latency, energy consumption, and cloud rental cost. Under a fixed offloading decision, two sub-problems, user transmission power allocation and fog computing resource allocation, are formulated and solved using convex optimization techniques and Karush-Kuhn-Tucker (KKT) conditions, respectively. The sampling process of the inner loop of the simulated annealing (SA) algorithm is improved, and a memory function is added to obtain the novel simulated annealing (N-SA) algorithm, which is used to solve the offloading decision problem corresponding to the optimal resource allocation. Extensive simulation experiments show that the N-SA algorithm obtains the optimal solution quickly and saves 17% of the system cost compared to the greedy offloading and joint resource allocation (GO-JRA) algorithm.

1. Introduction

The rapid advancement of communication technology and the mobile internet has significantly changed people's quality of life, and society has moved into the era of the Internet of Things (IoT), which is built on internet and communication network technology. A wide range of electronic devices, including those used in smart industries, smart homes, smart transportation, smart healthcare, and smart education [1], are connected to the IoT. The number of these devices has grown dramatically, and official data projections indicate that there will be 50 billion electronic devices worldwide by 2025. Processing the data produced by these devices is difficult [2,3,4]. Although mobile devices continue to gain computing power, their portability limits their storage and computational resources, and some intensive applications are difficult to execute on them.

Thus, the trade-off between latency and energy consumption causes overall computational performance degradation and creates problems such as data privacy security and additional latency. As a solution, the concept of fog computing was introduced [5]. Fog servers (FS) are deployed at the edge of the network and can communicate directly with UE to provide computational and storage resources. Thus, tasks can be offloaded directly to the FS instead of the remote cloud, which can save bandwidth resources and reduce losses. Compared to cloud computing, fog computing supports low loss, high reliability and can handle more requests in a smarter way [6,7].

FS can respond quickly to task offloading, but fog resources are limited, and relying entirely on fog computing is clearly not a wise choice. I. Sarkar et al. [8] began to discuss the cloud-fog cooperative network architecture, and new issues emerged, such as how the cloud and fog should cooperate and whether tasks should be offloaded to the cloud or the fog. M. Aazam et al. [9] examined the offloading policy problem and proposed a cloud-fog cooperative network architecture. W.B. Sun et al. [10] considered opportunistic access to the cloud-fog cooperative network architecture and proposed a solution for computational offloading. However, they focused only on the resources in the cloud and fog and ignored local computational resources, resulting in lower resource utilization and higher system costs.

In multiuser ultra-dense networking scenarios, the offloading decision and resource allocation are key to achieving efficient offloading. It is very challenging to allocate fog computing resources efficiently and to let each FS determine its optimal workload state [11]. Z. Chang et al. [12] jointly optimize offloading and resource allocation and propose an online algorithm based on Lyapunov optimization. Z. Fei et al. [13] jointly optimize task scheduling and power allocation and propose a binary offloading scheme. However, they did not consider the heterogeneity of fog computing resources, which is inconsistent with the real IoT environment. Therefore, to reduce the offloading cost of UEs under the cloud-fog collaborative network architecture, this paper presents a joint partial task offloading and resource allocation optimization scheme. The main contributions are as follows:

- The UE offload utility is modeled as a weighted sum of latency, energy consumption, and cloud rental cost. It is formulated as a mixed-integer nonlinear programming (MINLP) problem that jointly optimizes user task offload selection, FS computing resources, and user uplink transmit power allocation to maximize system benefits.

- The resource allocation problem is divided into two independent subproblems: the allocation of uplink transmit power and the allocation of fog computing resources. The two subproblems are solved using convex optimization techniques and KKT conditions, respectively.

- The inner loop sampling mechanism of the traditional SA algorithm is improved and a memory function is added, yielding the N-SA algorithm for solving the offloading decision problem. It is also demonstrated that by solving the polynomial, the ideal solution may be attained.

- It has been experimentally verified that the scheme significantly improves the offloading utility for users. Compared to the extreme strategy (full cloud), the proposed solution reduces the system cost by 61% at 25 users.

The remaining parts are structured as follows: Section 2 reviews previous studies. Section 3 presents the system model and computational models. Section 4 formulates the problem. Section 5 discusses the N-SA offloading strategy and the computational resource allocation method. Section 6 shows the simulation findings. Finally, Section 7 summarizes the research.

2. Related Work

With the growing demand for smart devices and the massive generation of computational data in the IoT, computational offloading and resource allocation have received great attention. Several studies have been conducted on computational offloading methods and resource allocation.

S. Yu-Jie et al. [14] proposed a distributed computation offloading technique that regards the competition of UE for fog resources as a game, with the goal of balancing fog node delay and energy consumption. Yadav et al. [15] view the offloading of fog computing as a multi-objective optimization problem over two parameters, energy consumption and computation time, and propose the enhanced multi-objective gray wolf algorithm (E-MOGW) to find the optimal offloading objective. However, because this approach considers only the computational offloading of the fog layer and not resource allocation, users compete for computing resources, fog nodes become overloaded, and costs increase. M.T. Khan et al. [16] consider the fog environment as dynamic and address the problem of choosing the optimal fog node for computational offloading. They use an improved sine-cosine optimization technique to build a task offloading technique that enhances quality of service (QoS), but cloud-fog collaboration is not considered. W. Bai et al. [17] proposed a new fog computing architecture that introduces deep reinforcement learning to solve the problems of computational offloading and resource allocation, but only latency is considered, while energy consumption and cloud resource usage are not. Pursuing low latency alone may result in excessive energy consumption and thus an excessive total offloading cost.

On the edge network architecture, a computational offloading scheme has been proposed to minimize energy consumption within the delay range without the allocation of computing resources [18]. Y. Kyung et al. [19] considered the possibility that a fog node becomes overloaded by accepting a large number of tasks, and developed an analytical model of the opportunity offloading probability that covers both direct and indirect offloading. G. Belcredi et al. [20] modeled a stochastic network system in a fog-cloud cooperative architecture, using fluid limits to approximate the dynamics of IoT networks. This approach finds the equilibrium point for system offloading, but it assumes that the transmit power is constant. P.K. Deb et al. [21] proposed a two-step distributed horizontal design to support computational offloading in fog environments with the goal of minimizing total latency and energy consumption; UE offload tasks to nearby FS nodes according to a greedy selection criterion. S. Vakilian et al. [22] address a convex optimization problem that takes response time and energy cost into account and propose an artificial bee colony algorithm. However, remote cloud computing latency and leasing costs are overlooked. To address collaboration between fog nodes, Tabarsi, B.T. et al. [23] built a cooperative fog computing system, showed that the problem is convex, and designed a fair cooperation algorithm (FCA) to solve the joint optimization problem. However, fog-cloud cooperation is not considered, which may lead to fog-node overload and increased offloading costs.

Since resource allocation allows better utilization of available resources to minimize cost, previous research on resource allocation is summarized as follows. N. Khumalo et al. [24] argue that the resource allocation of fog nodes is not static and use deep reinforcement learning to optimize the cost function of the F-RAN system. S. Khan et al. [25] propose an efficient resource allocation and load balancing approach based on offloading to achieve a balance between fog nodes, and multiple metrics are used to judge the performance of the algorithm. However, it uses only the resources of the fog nodes and ignores local computational resources. Q. Fan et al. [26] investigated the resource allocation problem on the wireless channel and fog nodes to determine the minimum computational delay for the task, and solved for the optimal offloading decisions using reinforcement learning. C. Yi et al. [27] proposed an optimal solution algorithm based on the branch-and-price idea for solving a multidimensional joint resource optimization problem with the objective of minimizing network losses. Tabarsi, B.T. et al. [28] propose a scheme based on partial computational offloading and resource allocation in fog networks in order to reduce the system cost. However, the algorithm does not consider the allocation of transmission power. S. Sardellitti et al. [29] proposed a delayed acceptance algorithm (DAA), which reduces the cost incurred by mobile devices and increases the success rate of FS performing offloaded tasks.

In conclusion, the vast majority of previous research did not jointly consider the partial task offloading strategy, the cloud resource rental cost, and computing resource allocation in a multi-layer architecture, which is the approach taken in this paper.

3. System Model

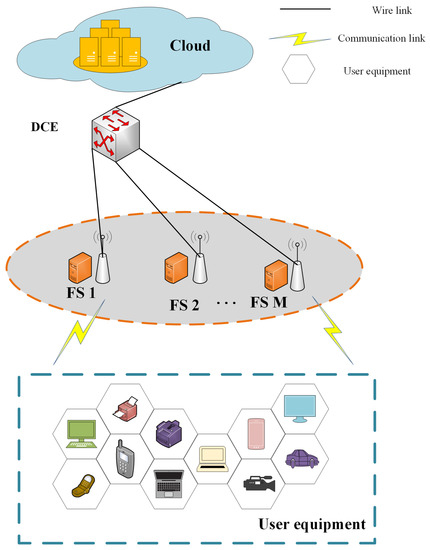

As shown in Figure 1, a three-tier fog network architecture is considered, which consists of a set of mobile users N, a set of fog server nodes M, and a remote cloud C. The set of UE is denoted as . The users are randomly distributed around the FS, and there is no interference between users. The FS nodes are located at the second layer, and the set of FS nodes is denoted as . The top layer is the cloud computing center. The task assigned to each user is separable and may be executed at any layer; it is defined as . Here, is defined as the data size of the offloading task, represents the workload, i.e., the amount of computation required to complete the task, and denotes the maximum tolerated latency. For easy reference, the parameters are shown in Table 1.

Figure 1.

Fog computing three-layer system architecture diagram.

Table 1.

Definition of symbols.

3.1. Local Computing Model

The UE generates a computational task . Here, the partial offload ratio is expressed as , which ranges from . The case means that the task is executed completely locally and no offloading is performed, i.e., binary offload . Otherwise, part of the task is offloaded to the FS or the remote cloud, i.e., . The local computation frequency is , which is always greater than 0, and is a constant that regulates the computing power of the UE. The computational delay and energy consumption of the UE are as follows:

where k is the effective switched capacitance coefficient, which depends on the chip architecture of the UE.
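The delay and energy expressions of this subsection did not survive in the text above. As a minimal sketch, assuming the form commonly used in the offloading literature (delay equals the locally executed cycles divided by the CPU frequency, and energy equals k times the squared frequency times the executed cycles), the local model could be written as follows. The function names, argument names, and the default value of `k` are illustrative assumptions, not taken from the paper.

```python
def local_delay(workload_cycles, offload_ratio, cpu_hz):
    """Delay of the locally executed share of a task (assumed model).

    workload_cycles: CPU cycles needed for the whole task.
    offload_ratio:   share of the task that is offloaded, in [0, 1].
    cpu_hz:          local CPU frequency in cycles per second (> 0).
    """
    if not 0.0 <= offload_ratio <= 1.0:
        raise ValueError("offload_ratio must lie in [0, 1]")
    if cpu_hz <= 0:
        raise ValueError("cpu_hz must be positive")
    return (1.0 - offload_ratio) * workload_cycles / cpu_hz


def local_energy(workload_cycles, offload_ratio, cpu_hz, k=1e-27):
    """Energy of the locally executed share (assumed model: k * f^2 per cycle).

    k is the chip-dependent coefficient; 1e-27 is only a placeholder value.
    """
    if not 0.0 <= offload_ratio <= 1.0:
        raise ValueError("offload_ratio must lie in [0, 1]")
    return k * cpu_hz ** 2 * (1.0 - offload_ratio) * workload_cycles
```

Under this assumed model, raising the CPU frequency shortens the delay linearly but increases the energy quadratically, which is the trade-off that the frequency scheduling in Section 5.1 balances.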

3.2. Communication Transmission Model

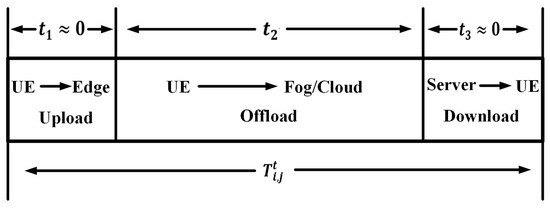

The transmission time model for offloading is shown in Figure 2. At a given point in time, the state of the user and the fog access network remains constant, and UE i selects FS j for offloading. The channel gain follows the free-space path loss model, and the wireless channel gain at moment t is denoted by .

where, denotes the distance from UE i to FS j at moment t, represents the carrier frequency, is the path loss, and denotes the antenna gain.

Figure 2.

An example of transfer time allocation for offloading tasks from UE i to the FS j.

The computation task to be offloaded by the UE requires three steps to complete the transfer, namely the upload time , the transfer time and the return time . In general, the upload task data size and return data are much smaller than the offload task, and the return transmission rate is larger than the uplink transmission rate [13]. Therefore, the communication model considers only the uplink transmission time of the offloaded task of user i. Following Shannon's formula, the transmission rate is expressed as:

where, B is the uplink channel bandwidth, is the transmit power of the UE, is the channel gain, is the noise power.

The transmission delay and energy consumption of the UE offloading task to the FS are expressed as:
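The rate, delay, and energy expressions are missing from the text above. The sketch below assumes the standard Shannon capacity form for the uplink rate, an upload delay equal to the offloaded bits divided by the rate, and a transmit energy equal to power times delay. All function and parameter names are hypothetical, and the paper's exact expressions may contain additional terms.

```python
import math


def uplink_rate(bandwidth_hz, tx_power_w, channel_gain, noise_power_w):
    """Shannon rate in bit/s: B * log2(1 + p * h / sigma^2)."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def upload_delay_and_energy(data_bits, offload_ratio, rate_bps, tx_power_w):
    """Assumed uplink cost of sending the offloaded share of a task.

    Returns (delay in seconds, energy in joules), where the energy is the
    transmit power multiplied by the time spent transmitting.
    """
    delay = offload_ratio * data_bits / rate_bps
    return delay, tx_power_w * delay
```

For instance, with a 1 MHz channel and a signal-to-noise ratio of 3, the rate is 2 Mbit/s, so uploading half of a 4 Mbit task at 0.2 W takes 1 s and costs 0.2 J under these assumptions.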

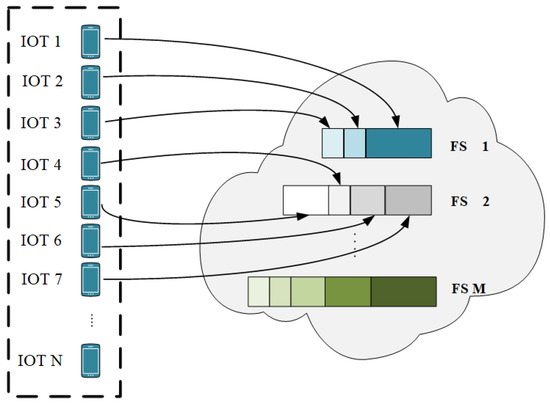

3.3. Fog Computing Model

The architecture of task offloading from UE to FS is shown in Figure 3 [30]. The model takes into account that an FS node can accept computing tasks offloaded from multiple UE. From the point of view of the FS, the size of the task offloaded by UE i must be known in order to decide whether to execute it at FS j. If the offloading requirements are met, the FS allocates the corresponding computing resources to the UE; the resource allocation is described in detail in Section 5. The execution delay at FS j is as follows:

Figure 3.

UE offload to FS architecture diagram.

3.4. Cloud Computing Model

If processing the task at the FS does not meet the user's requirements, the task is further offloaded to the remote cloud. Offloading a task to the cloud requires transferring it to the FS first, which takes time , and then from the FS to the cloud, which takes time . The transfer time to the cloud is expressed as:

The processing latency equation in the remote cloud is expressed as:

where, is the computing frequency of the remote cloud.

A new metric, the cloud resource rental price , is introduced. It is related to the size of the task to be offloaded, and the rental cost is expressed as:

where is the unit cloud rental cost [31].

After deriving the offloading task ratio and determining the offloading decision and resource allocation scheme, the total delay of the user offloading task is expressed as:

The total energy consumption of the user equipment is expressed as:

The cost of cloud resources can be expressed as:

4. Problem Formulation

The aim is to find the optimal offloading strategy and resource allocation scheme for each user, which minimizes the cost function of the UE, and the optimization function of the multi-objective optimization problem is expressed as:

The constraints in the above equation are interpreted as follows: (15) bounds the offload ratio, (16) guarantees that each user offloads only to the cloud or the fog, (17) ensures that the sum of allocated computing resources does not exceed the computing capacity of the FS, (18) bounds the user transmit power, and (19) ensures that the upper layer resources are greater than the lower layer resources.

Using the weighted-sum method, a single objective function is obtained by normalizing the computational delay, the energy consumption, and the leasing cost:

Here, the three weights correspond to latency, energy consumption, and cloud rental cost, respectively, and they sum to 1. In practice, UE can adjust the three weights according to different scenarios. For example, when the UE is low on power, the weight of energy consumption can be increased and the weights of the other two metrics decreased, which saves more energy at the cost of longer task completion times or increased cloud rental costs. The formula is expressed as follows:
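To make the weighted-sum idea concrete, the following minimal sketch combines the three metrics into one scalar cost. The normalization by reference values (`scales`) is an assumption made for illustration, since the text does not show which normalization is used, and all names are hypothetical.

```python
def weighted_cost(delay, energy, rent, weights, scales=(1.0, 1.0, 1.0)):
    """Weighted sum of normalized delay, energy, and cloud rental cost.

    weights: (w_delay, w_energy, w_rent), non-negative and summing to 1.
    scales:  reference values used to make the three metrics comparable
             (an assumption; the paper's normalization is not shown).
    """
    if min(weights) < 0 or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must be non-negative and sum to 1")
    w_t, w_e, w_c = weights
    s_t, s_e, s_c = scales
    return w_t * delay / s_t + w_e * energy / s_e + w_c * rent / s_c


# A battery-constrained UE would shift weight toward the energy term.
balanced = weighted_cost(2.0, 4.0, 10.0, (0.5, 0.3, 0.2))
low_battery = weighted_cost(2.0, 4.0, 10.0, (0.2, 0.7, 0.1))
```

Because the weights sum to 1, changing them only re-ranks candidate offloading decisions; it does not change the underlying delay, energy, or rental values.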

In the next section, the solution to the offloading decision and resource allocation is given. Thus, the solution of the original problem is obtained.

5. Problem Solution

The problem of joint computational offloading and resource allocation is presented, and the solution to the problem is elaborated.

5.1. Offload Proportion and Local Computing Resource Scheduling

Since the computational frequency affects both computational latency and energy consumption, CPU frequency modulation technology is used to find a balance point that minimizes the total local cost. It is influenced by the state of the UE: the smaller the value, the higher the energy consumption; conversely, the larger the value, the lower the latency. To find the optimal offload ratio while meeting the maximum tolerable delay of the task, the second term of (22) is optimized:

where is the maximum local computation frequency, (25) ensures that the computation frequency lies between 0 and its maximum, and (26) ensures that the local computation latency does not exceed the maximum latency of the task of user i. The user's computational resource scheduling is shown in Equation (24), which is convex in its domain of definition, and the objective function increases as it increases.

The local optimal offloading part is obtained by setting , which is:

Rearranging Equation (27) gives:

The optimal offloading ratio for each UE is expressed as follows:

The local CPU frequency scheduling algorithm is shown in Algorithm 1.

| Algorithm 1: UE offload ratio and CPU scheduling algorithm |

| Input: , , , , , each of UE |

| Output: Optimal computing resources. i.e., , offload ratio. i.e., |

| . |

| For |

| According to Equation (27), calculate . |

| If then |

| Else then |

| End if |

| End for |

| Return |

| For |

| If |

| An optimal computation frequency is obtained according to Equation (24). |

| End if |

| End for |

| Return |

5.2. Computing Resource Allocation

Here, the fourth term of (22) is optimized to find a suitable allocation scheme:

The optimization problem is shown to be convex by the following procedure:

Proof.

Differentiating with respect to yields:

Calculating the second-order derivative of gives:

Equation (34) indicates that the second-order derivatives are non-negative and the associated Hessian matrix is positive semidefinite, which demonstrates that the objective function is convex [32]. Thus, the KKT conditions are used to solve the problem. Using Lagrange multipliers, problem Y2 is stated as follows:

where is the Lagrange multiplier. Differentiating the above equation gives:

According to the KKT conditions, the first-order partial derivative of the Lagrangian with respect to each primal variable equals 0 at the optimal solution. Therefore, the following is obtained:

Also, since always holds, the following is obtained:

Substituting this into the above equation yields:
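The closed-form result of this derivation is not preserved in the text. As an illustration of how the KKT conditions lead to a closed form, the sketch below assumes a simplified version of the problem for a single FS: minimize the sum of execution delays c_i / f_i subject to the sum of allocated frequencies not exceeding the capacity F. Setting the derivative of the Lagrangian to zero gives f_i proportional to the square root of c_i, and the capacity constraint is tight at the optimum. The paper's actual objective may include weights and other terms, so this is only a sketch under that stated assumption, with hypothetical names.

```python
import math


def allocate_fog_cpu(workloads, capacity):
    """KKT solution of: min sum(c_i / f_i)  s.t.  sum(f_i) <= capacity.

    Stationarity gives -c_i / f_i**2 + mu = 0, so f_i = sqrt(c_i / mu);
    the tight capacity constraint then fixes mu, yielding
    f_i = capacity * sqrt(c_i) / sum_j sqrt(c_j).
    """
    roots = [math.sqrt(c) for c in workloads]
    total = sum(roots)
    return [capacity * r / total for r in roots]


def total_delay(workloads, freqs):
    """Sum of execution delays for a given allocation."""
    return sum(c / f for c, f in zip(workloads, freqs))


# Two offloaded tasks with workloads 1 and 4 sharing a capacity of 3:
alloc = allocate_fog_cpu([1.0, 4.0], 3.0)
```

In this toy instance the heavier task receives twice the frequency of the lighter one, and any other split of the same capacity gives a larger total delay.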

5.3. Uplink Transmission Power Allocation

The first term of (24) is optimized as the objective function, which is expressed as:

The second-order derivative of in the domain of definition can be proven to be always greater than 0, so the problem is convex in the feasible domain.

Proof.

The first-order derivative of the objective function is calculated as follows:

Here, is given as:

Since the denominator of the first-order derivative of the objective function is always greater than 0 in the domain of definition, proving that the second-order derivative of the objective function is greater than 0 only requires proving that the derivative of is greater than 0. Differentiating with respect to gives:

For any in the feasible domain there is , so it can be shown that is a monotonically increasing function in the feasible domain. Because , the optimal solution of the objective function in the domain of definition is either the zero of the first-order derivative or a boundary point of the feasible domain: , or satisfies . It can be shown that when .

□

Accordingly, a low-complexity Golden Section (GS) transmit power allocation algorithm is designed. It is shown in Algorithm 2.

| Algorithm 2: GS based transmission power allocation algorithm |

| Input: offloading decision, maximum transmission power , transmission power allocation step r |

| Output: Transmission power allocation scheme |

| Set the lower limit of the transmitted power to 0 and the upper limit to , and calculate . |

| If then |

| Else |

| While ,do |

| Initialize so that , |

| Let |

| Let |

| If |

| Then , |

| Else |

| , |

| End if |

| Until |

| , |

| End while |

| End if |
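Several symbols of Algorithm 2 are missing from the listing above. The following minimal sketch shows a generic golden-section search for the minimum of a unimodal function on an interval, which is the technique the algorithm's name refers to. The toy objective `toy_power_cost` and its constants are assumptions made purely for illustration: it divides a weighted delay-plus-energy term by a logarithmic rate term and is not the paper's exact objective.

```python
import math

INV_PHI = (math.sqrt(5.0) - 1.0) / 2.0  # 1 / golden ratio, about 0.618


def golden_section_min(f, lo, hi, tol=1e-6):
    """Return an approximate minimizer of a unimodal f on [lo, hi]."""
    x1 = hi - INV_PHI * (hi - lo)
    x2 = lo + INV_PHI * (hi - lo)
    f1, f2 = f(x1), f(x2)
    while hi - lo > tol:
        if f1 < f2:
            # The minimum lies in [lo, x2]; the old x1 becomes the new x2.
            hi, x2, f2 = x2, x1, f1
            x1 = hi - INV_PHI * (hi - lo)
            f1 = f(x1)
        else:
            # The minimum lies in [x1, hi]; the old x2 becomes the new x1.
            lo, x1, f1 = x1, x2, f2
            x2 = lo + INV_PHI * (hi - lo)
            f2 = f(x2)
    return (lo + hi) / 2.0


def toy_power_cost(p, w_delay=1.0, w_energy=1.0, gain=10.0):
    """Illustrative cost: (w_delay + w_energy * p) / log2(1 + gain * p)."""
    return (w_delay + w_energy * p) / math.log2(1.0 + gain * p)


p_star = golden_section_min(toy_power_cost, 1e-6, 1.0)
```

Each iteration reuses one of the two interior evaluations, so the interval shrinks by a constant factor of about 0.618 per function evaluation, which is why the method is considered low-complexity.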

5.4. Joint Computing Offload and Resource Allocation

In the previous sections, the solutions for the allocation of computational resources and transmit power were obtained under the assumption that the offloading decision was fixed. Here, the aim is to obtain the optimal offloading decision under known optimal resource allocation conditions. Substituting Equations (32), (38), (50) into Equation (26) yields:

The traditional SA algorithm avoids becoming trapped in a local optimum by using probabilistic acceptance and random assignment to approach the global optimal solution of the optimization function. This makes it well suited to the MINLP problem, so the N-SA algorithm is chosen as the optimization algorithm in this paper.

In the problem of minimizing the loss function, some UE do not offload to the FS, either because the equipment can complete its own task or because the task is offloaded directly to the remote cloud, so the FS does not need to allocate computational resources for these UE. The offloading decision and resource allocation are processed separately, and the resource allocation optimization is performed after each offloading decision. Relative to the traditional SA algorithm, the following improvements are made:

- Improvements are made to the inner loop of the simulated annealing algorithm. The traditional annealing algorithm sets up an inner loop, i.e., the Metropolis criterion is executed several times at the same temperature to ensure global search capability. However, practice shows that this method not only increases the solution time but can also cause the optimal solution to be lost. The change made here is to improve the sampling process and set a double threshold, so that the number of samples adapts to the current solution, which preserves the quality of the solution while reducing the solution time.

- The memory function has been added. During the execution of the algorithm, due to the nature of probability, an inferior solution to the current one may be accepted, resulting in the loss of the optimal solution. The improvement is the addition of the memory function, which means that the current optimal solution is remembered during the search process. The specific steps for N-SA algorithm are shown in Algorithm 3.

Initialize X, , , H. Let X be the optimal offloading decision and record the optimal fitness value. Set the parameters of the algorithm, e.g., , , K, then the temperature changes as follows.

After the offloading choice has been made, Algorithm 2 is used to allocate resources to the offloaded UE and compute the fitness value. The new solution is accepted if its fitness value is higher than the prior one. It is then compared with the stored solution: if the new solution is superior, the stored solution is updated; otherwise, the stored solution remains unchanged. If the new solution is worse, it is accepted with a probability that varies with temperature, and the acceptance probability is given by the following equation:

The number of consecutive iterations without an updated solution is recorded; if it is higher than , the search may be caught in a local optimum. The number of cooling cycles is then counted to see whether it has reached the desired number. If not, the temperature is raised to its maximum, and the offloading decision is chosen randomly once.

| Algorithm 3: N-SA based task assignment algorithm |

| Input: N, M, , , , ,. |

| Output: Offloading decision X, the optimal value H, set the initial temperature , minimum temperature , K, q for each temperature reduction. |

| Initialize X. Calculate the initial system cost according to the formula , . |

| While |

| For each k |

| Generate a new offload decision and call Algorithm 2 for resource allocation, calculate the system cost . |

| Update the offloading decision and system cost according to the Metropolis criterion. |

| If |

| Update and remember , , . |

| Else |

| Calculate the acceptance probability P |

| If |

| accept |

| Else |

| are not accepted. |

| End if |

| End if |

| End for |

| End While |

After completing K cycles at a single temperature, the temperature is lowered according to Equation (59) and compared with the minimum temperature. If the temperature is lower than the minimum, the algorithm ends and the current optimal solution is output; otherwise, the next cycle continues at the current temperature.
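The steps above can be sketched as a simulated annealing loop with a memory of the best solution found. The sketch rests on several assumptions that are not taken from the paper: each decision is encoded as one integer per user (for example 0 = local, 1 = fog, 2 = cloud), the cooling is geometric with factor `q`, the adaptive inner loop is approximated by a single early-exit threshold `stall_limit` rather than the double threshold, and the re-heating step is omitted. The `cost` callable stands in for the system cost after resource allocation, and all names are hypothetical.

```python
import math
import random


def n_sa(cost, n_users, n_choices=3, t0=100.0, t_min=1e-3, q=0.9,
         k_max=50, stall_limit=10, seed=0):
    """Simulated annealing with best-solution memory (illustrative sketch)."""
    rng = random.Random(seed)
    x = [rng.randrange(n_choices) for _ in range(n_users)]
    cur = cost(x)
    best_x, best = x[:], cur          # memory of the best solution so far
    t = t0
    while t > t_min:
        stall = 0
        for _ in range(k_max):        # inner loop at a fixed temperature
            y = x[:]
            y[rng.randrange(n_users)] = rng.randrange(n_choices)
            c = cost(y)
            # Metropolis criterion: always accept improvements, accept
            # worse moves with probability exp(-(c - cur) / t).
            if c < cur or rng.random() < math.exp(-(c - cur) / t):
                x, cur = y, c
            if cur < best:
                best_x, best = x[:], cur
                stall = 0
            else:
                stall += 1
                if stall >= stall_limit:
                    break             # adaptive early exit from sampling
        t *= q                        # geometric cooling (assumed schedule)
    return best_x, best


# Toy cost: every user is cheapest when choosing option 1.
decision, value = n_sa(lambda d: sum((v - 1) ** 2 for v in d), n_users=6)
```

Keeping `best_x` separate from the current state `x` is what the memory function provides: a worse move accepted late in the search can no longer overwrite the best decision that was seen earlier.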

6. Performance Evaluation

The advantages and feasibility of the system model and the improved heuristic algorithm are verified through extensive simulations. We conducted experiments on a desktop computer with an Intel(R) Core(TM) i5 @ 3.30 GHz, 8 GB of memory and Win10. The simulation software is MATLAB 2017b. A cloud-fog cooperation network system with 20 UE and 5 FS is used as the simulation environment for the tests. Users are dispersed randomly around the FS, and the FS do not interfere with one another. Each fog node is connected to the cloud computing center by a WAN, and the users' tasks all have different maximum tolerable latencies. The channel state at offload time follows small-scale Rayleigh fading. The following strategies are compared with our improved offloading algorithm.

- Full local computing: The computing tasks of UE are not offloaded for processing, i.e., the computing tasks are completely executed locally.

- Full fog computing processing: The computing tasks generated by the UE are completely offloaded to the FS, and the task size is fully allocated to the FS for processing according to the computing allocation algorithm.

- Full cloud computing processing: The computing tasks are completely transferred to the remote cloud for processing through the FS.

- GO-JRA algorithm [29]: Each user is randomly assigned a sub-band from its primary band base station (BS), and the user makes offloading decisions independently and implements joint resource allocation.

- Delay-protected task offloading and resource allocation (DP-TORA) algorithm [33]: Task offloading and resource allocation with guaranteed minimum latency.

Here, the range of user transmission power is , the UE computing resources , the task data size kb, and the task computing period Megacycles. The values of the parameters for the execution of the algorithm are shown in Table 2:

Table 2.

The parameter values.

Analysis of Simulation Results

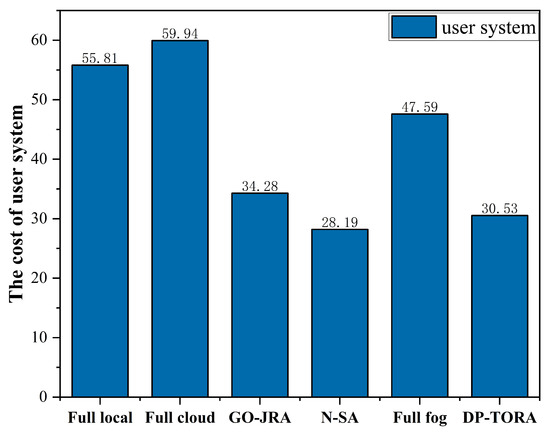

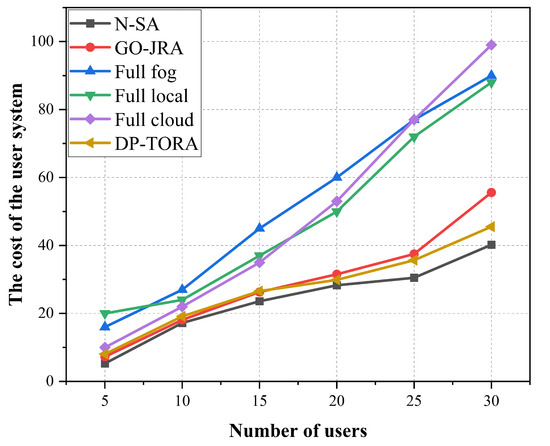

The cost comparison of several offloading mechanisms under the same circumstances is shown in Figure 4. The worst approach is full-cloud: its offloading cost is the largest because the remote cloud is far from the user, causing excessive transmission delays and energy consumption. Because the FS is closer to users and incurs less transmission delay and energy loss than full-cloud computing, full-fog computing performs better. However, because of its constrained computational capacity and the need for the FS to finish numerous jobs at once, latency rises and fog nodes become overloaded. The system cost is reduced to a level that is close to optimal thanks to the N-SA algorithm's efficient use of each layer's resources. Compared with the extreme strategy (full-cloud), the proposed solution reduces the system cost by 53% at 20 users.

Figure 4.

The system cost comparison of several offloading strategies.

Figure 5 depicts the relationship between the system cost and the change in the number of users. The offload costs for several strategies are trending upward. On the one hand, the computational resources allocated to users are decreasing, leading to higher processing latency. On the other hand, newly added users incur additional overhead, leading to an increase in the cost function. At 20 users, the increase of cost function is large, which may be due to the lack of fog resources and more users choosing to offload to remote clouds to complete their tasks. The offloading cost of the DP-TORA algorithm increases significantly for , which is due to the fact that multiple users all require more computational resources to reduce that delay, causing task overload and a cost increase. The difference between several strategies is most evident at , with a 33% reduction in N-SA algorithm cost compared to the GO-JRA algorithm.

Figure 5.

The impact of different number of users on the system cost.

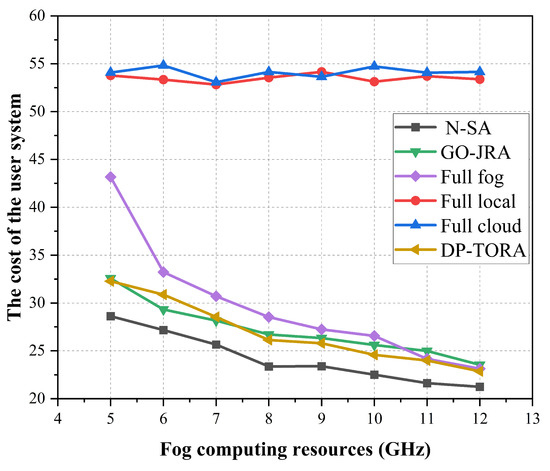

Figure 6 analyzes the relationship between system cost and fog computing resources. The system cost remains essentially constant for full-local and full-cloud, because all tasks are executed locally or in the remote cloud and do not depend on fog computing resources. In the (4, 12) interval, the total computational offload cost of the other four strategies (full fog, GO-JRA, DP-TORA, and N-SA) decreases with increasing fog resources, which is in accordance with expectations. The GO-JRA algorithm offloads greedily to a particular FS, so its resource utilization is not high and its performance gain is not obvious. The DP-TORA algorithm is limited by the delay requirements: when the fog resources increase, it prefers to offload to reduce the delay, but the energy consumption increases, making the total cost slightly higher. The N-SA algorithm performs well because it jointly considers computational offloading and fog resource allocation and makes good use of local, fog, and cloud resources.

Figure 6.

The impact of different fog computing resources on the cost of offloading systems.

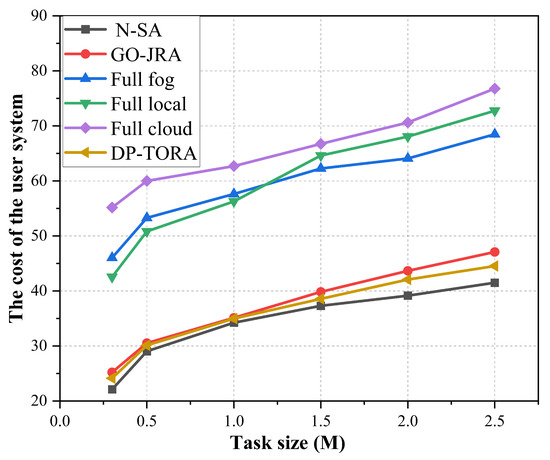

The effect of task data size on system cost is shown in Figure 7. The costs of all offloading strategies trend upward. Full-cloud offloading is the worst, for the following reason: the increase in leads to greater delay and energy consumption for transferring to the cloud, and more cloud resources must be leased. Therefore, the cost function of full-cloud offloading is the largest. The increase at is the most significant: due to the increase in task data, several other strategies cannot fully utilize the resources, resulting in a significant cost. The comparison is most pronounced at , where the cost is reduced by 46%, 42%, 38%, 9% and 11%, respectively, compared to the other strategies.

Figure 7.

The impact of task data size on system offload costs.

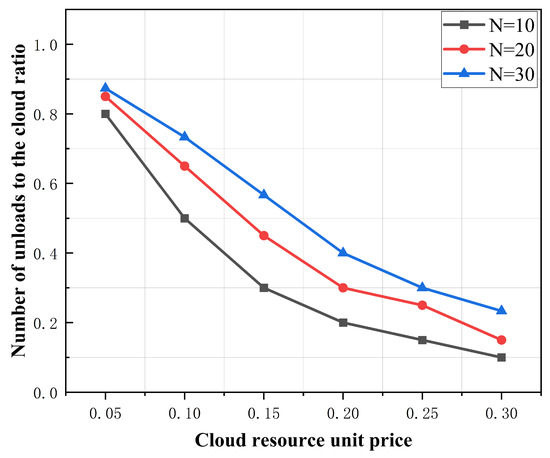

Figure 8 depicts how the percentage of offloaded users varies with the cloud resource rental price. Under the same cost condition, the more users there are, the greater the percentage offloaded to the remote cloud: the resources of the FS are not enough to meet the needs of many users, so more users offload to the remote cloud, and the offloading percentage is greater for the larger number of users than for the smaller one. As the cloud resource rental price increases, most users execute their tasks on the FS to reduce system cost, so the offloading ratio decreases.

Figure 8.

User offloading ratio versus cloud resource rental price.

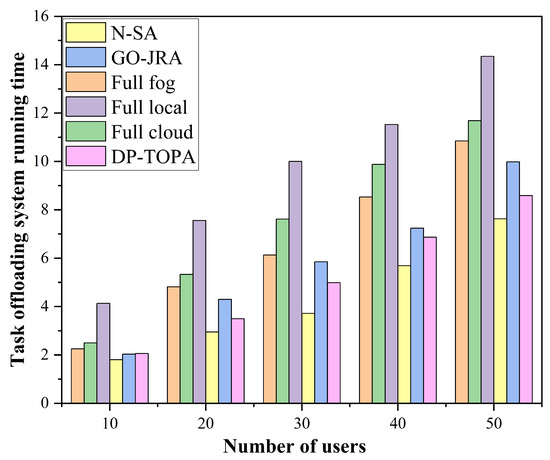
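The price sensitivity in Figure 8 follows from the weighted-sum cost. The sketch below is a toy illustration only: the weights, the latency and energy figures, and the function names are hypothetical placeholders, and the only property taken from the paper is that the cost is a weighted sum of latency, energy consumption, and cloud rental cost.

```python
def offloading_cost(latency, energy, rental_fee,
                    w_latency=0.4, w_energy=0.4, w_rental=0.2):
    """Weighted sum of latency, energy consumption, and cloud rental cost.
    The weights are illustrative assumptions, not the paper's settings."""
    return w_latency * latency + w_energy * energy + w_rental * rental_fee


def cheaper_site(rental_price):
    """Compare a congested fog server with the remote cloud for one task.
    All numeric values are hypothetical."""
    # Fog: no rental fee, but higher latency because FS resources are shared.
    fog = offloading_cost(latency=0.8, energy=0.5, rental_fee=0.0)
    # Cloud: lower latency here, but the user pays the rental price.
    cloud = offloading_cost(latency=0.5, energy=0.6, rental_fee=rental_price)
    return "cloud" if cloud < fog else "fog"


for price in (0.1, 0.3, 0.5, 1.0):
    print(price, cheaper_site(price))
```

With these placeholder values the cloud is preferred only while the rental price stays below a break-even point; past it, the fog server becomes the cheaper choice, which mirrors the falling offloading ratio described above.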

Figure 9 shows the effect of the number of end devices on operational efficiency. The task completion time of every strategy increases: as the number of end devices grows, the number of user tasks also grows, which increases the computation time. The comparison shows that the N-SA algorithm has the highest execution efficiency.

Figure 9.

Comparison of the runtime of several policies with different numbers of end devices.

7. Conclusions

In the context of a cloud-fog collaborative network architecture, this paper proposes a joint optimal partial computation offloading and resource allocation scheme. Considering multiple optimization objectives (latency, energy consumption, and cloud rental cost), the objective function is constructed as a weighted sum, and the problem is formulated as an MINLP. A CPU frequency adjustment technique is used to schedule the CPU cycle frequency of the UE in order to reduce UE power consumption. Due to the combinatorial nature of the problem, the remaining problem is divided into the resource allocation problem under a fixed offloading decision and the optimal value offloading problem corresponding to the optimal resource allocation. Resource allocation is divided into two sub-problems, fog computing resource allocation and transmission power allocation, which are solved by convex optimization techniques and KKT conditions, respectively. The inner-loop sampling process of the simulated annealing algorithm is improved, and a memory function is added to obtain the N-SA algorithm, which solves the optimal value offloading problem corresponding to the optimal resource allocation. The simulation results demonstrate that the proposed algorithm finds the best solution quickly in the simulated scenarios and lowers the total offloading cost for users.
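The idea of adding a memory function to simulated annealing can be sketched as follows. This is a generic textbook-style simulated annealing loop that remembers the best solution ever visited; it is not the authors' N-SA implementation, and the toy cost function, the site costs, the fog capacity, and all parameter values are hypothetical.

```python
import math
import random

# Hypothetical per-task cost at each site: 0 = local, 1 = fog, 2 = cloud.
SITE_COST = {0: 1.0, 1: 0.4, 2: 0.7}
FOG_CAPACITY = 2  # tasks the fog server handles before a penalty applies


def toy_cost(decision):
    """Toy system cost: per-site cost plus a penalty for fog overload."""
    base = sum(SITE_COST[site] for site in decision)
    overload = max(0, decision.count(1) - FOG_CAPACITY)
    return base + 1.0 * overload


def anneal_with_memory(cost, n_users, n_sites=3, t_start=10.0, t_end=1e-3,
                       cooling=0.9, inner_steps=50, seed=0):
    """Simulated annealing over offloading decisions with a memory
    that stores the best decision seen so far."""
    rng = random.Random(seed)
    current = [rng.randrange(n_sites) for _ in range(n_users)]
    current_cost = cost(current)
    best, best_cost = list(current), current_cost  # the memory
    temperature = t_start
    while temperature > t_end:
        for _ in range(inner_steps):  # inner loop at a fixed temperature
            candidate = list(current)
            candidate[rng.randrange(n_users)] = rng.randrange(n_sites)
            candidate_cost = cost(candidate)
            delta = candidate_cost - current_cost
            # Metropolis rule: always accept improvements, sometimes accept
            # worse moves so the search can escape local minima.
            if delta <= 0 or rng.random() < math.exp(-delta / temperature):
                current, current_cost = candidate, candidate_cost
                if current_cost < best_cost:
                    best, best_cost = list(current), current_cost
        temperature *= cooling
    return best, best_cost


best_decision, best_value = anneal_with_memory(toy_cost, n_users=5)
print(best_decision, round(best_value, 3))
```

Because the memory is updated only when a strictly better decision is accepted, the returned solution is never worse than any state the search visited, even if the final state of the chain is worse than an earlier one.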

In future work, the proposed scheme will be applied in IoT environments (intelligent medical care, the Internet of Vehicles, and intelligent education). The allocation of uplink transmission power must take cross-transmission interference into account. Future work will also consider D2D communication and fog collaboration, so that each user is not constrained to a certain FS range for offloading. It is also anticipated that other significant IoT network concerns will be addressed.

Author Contributions

All authors contributed jointly to this article. Ideas for the article, W.B. and Y.W.; methods and data, Y.W.; data collection and article writing, Y.W.; review, W.B.; revision, Y.W. W.B. provided supervision and guidance throughout the process. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported by Beijing Natural Science Foundation-Haidian Original Innovation Joint Fund Project (No. L182039).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Gu, L.; Zeng, D.; Guo, S.; Barnawi, A.; Xiang, Y. Cost efficient resource management in fog computing supported medical cyber physical system. IEEE Trans. 2017, 5, 108–119.

- Mondal, S.; Das, G.; Wong, E. Cost-optimal cloudlet placement frameworks over fiber-wireless access networks for low-latency applications. J. Netw. Comput. Appl. 2019, 138, 27–38.

- Alippi, C.; Fantacci, R.; Marabissi, D.; Roveri, M. A cloud to the ground: The new frontier of intelligent and autonomous networks of things. IEEE Commun. 2016, 54, 14–20.

- Shao, Y.; Li, C.; Fu, Z.; Jia, L.; Luo, Y. Cost-effective replication management and scheduling in edge computing. J. Netw. Comput. Appl. 2019, 129, 46–61.

- Mouradian, C.; Naboulsi, D.; Yangui, S.; Glitho, R.H.; Morrow, M.J.; Polakos, P.A. A comprehensive survey on fog computing: State-of-the-art and research challenges. IEEE Commun. 2018, 20, 416–464.

- Shukla, S.; Hassan, M.F.; Tran, D.C.; Akbar, R.; Paputungan, I.V.; Khan, M.K. Improving latency in Internet-of-Things and cloud computing for real-time data transmission: A systematic literature review (SLR). Cluster Comput. 2021.

- Sarkar, I.; Adhikari, M.; Kumar, N.; Kumar, S. A Collaborative Computational Offloading Strategy for Latency-Sensitive Applications in Fog Networks. IEEE Internet Things J. 2022, 9, 4565–4572.

- Aazam, M.; Islam, S.u.; Lone, S.T.; Abbas, A. Cloud of Things (CoT): Cloud-Fog-IoT Task Offloading for Sustainable Internet of Things. IEEE Trans. Sustain. Comput. 2022, 7, 87–98.

- Sun, W.-B.; Xie, J.; Yang, X.; Wang, L.; Meng, W.-X. Efficient Computation Offloading and Resource Allocation Scheme for Opportunistic Access Fog-Cloud Computing Networks. IEEE Trans. Cogn. Commun. Netw. 2023, 9, 521–533.

- Kansal, P.; Kumar, M.; Verma, O.P. Classification of resource management approaches in fog/edge paradigm and future research prospects: A systematic review. J. Supercomput. 2022, 78, 13145–13204.

- Chang, Z.; Liu, L.; Guo, X.; Sheng, Q. Dynamic Resource Allocation and Computation Offloading for IoT Fog Computing System. IEEE Trans. Ind. Inform. 2021, 17, 3348–3357.

- Fei, Z.; Wang, Y.; Zhao, J.; Wang, X.; Jiao, L. Joint Computational and Wireless Resource Allocation in Multicell Collaborative Fog Computing Networks. IEEE Trans. Wirel. Commun. 2022, 21, 9155–9169.

- Sun, Y.-J.; Wang, H.; Zhang, C.-X. Balanced Computing Offloading for Selfish IoT Devices in Fog Computing. IEEE Access 2022, 10, 30890–30898.

- Yadav, J.; Suman. E-MOGWO Algorithm for Computation Offloading in Fog Computing. Intell. Autom. Soft Comput. 2023, 36, 1063–1078.

- Khan, M.T.; Barik, L.; Adholiya, A.; Patra, S.S.; Brahma, A.N.; Barik, R.K. Task Offloading Scheme for Latency Sensitive Tasks In 5G IOHT on Fog Assisted Cloud Computing Environment. In Proceedings of the International Conference for Emerging Technology (INCET), Belgaum, India, 27–29 May 2022; pp. 1–5.

- Bai, W.; Qian, C. Deep Reinforcement Learning for Joint Offloading and Resource Allocation in Fog Computing. In Proceedings of the 2021 IEEE 12th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 20–22 August 2021; pp. 131–134.

- Xu, D.; Li, Q.; Zhu, H. Energy-saving computation offloading by joint data compression and resource allocation for mobile-edge computing. IEEE Commun. 2019, 23, 704–707.

- Kyung, Y. Performance Analysis of Task Offloading with Opportunistic Fog Nodes. IEEE Access 2022, 10, 4506–4512.

- Belcredi, G.; Aspirot, L.; Monzón, P.; Belzarena, P. Large-Scale IoT Network Offloading to Cloud and Fog Computing: A Fluid Limit Model. In Proceedings of the 2021 IEEE URUCON, Montevideo, Uruguay, 24–26 November 2021; pp. 377–381.

- Deb, P.K.; Misra, S.; Mukherjee, A. Latency-Aware Horizontal Computation Offloading for Parallel Processing in Fog-Enabled IoT. IEEE Syst. J. 2022, 16, 2537–2544.

- Vakilian, S.; Moravvej, S.V.; Fanian, A. Using the Artificial Bee Colony (ABC) Algorithm in Collaboration with the Fog Nodes in the Internet of Things Three-layer Architecture. In Proceedings of the 2021 29th Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 18–20 May 2021; pp. 509–513.

- Dong, Y.; Guo, S.; Liu, J.; Yang, Y. Energy-Efficient Fair Cooperation Fog Computing in Mobile Edge Networks for Smart City. IEEE Internet Things J. 2019, 6, 7543–7554.

- Khumalo, N.; Oyerinde, O.; Mfupe, L. Reinforcement Learning-based Computation Resource Allocation Scheme for 5G Fog-Radio Access Network. In Proceedings of the 2020 Fifth International Conference on Fog and Mobile Edge Computing (FMEC), Paris, France, 20–23 April 2020; pp. 353–355.

- Khan, S.; Shah, I.A.; Tairan, N.; Shah, H.; Nadeem, M.F. Optimal resource allocation in fog computing for healthcare applications. Comput. Mater. Contin. 2022, 71, 6147–6163.

- Fan, Q.; Bai, J.; Zhang, H.; Yi, Y.; Liu, L. Delay-Aware Resource Allocation in Fog-Assisted IoT Networks Through Reinforcement Learning. IEEE Internet Things J. 2022, 9, 5189–5199.

- Yi, C.; Huang, S.; Cai, J. Joint Resource Allocation for Device-to-Device Communication Assisted Fog Computing. IEEE Trans. Mob. Comput. 2021, 20, 1076–1091.

- Tabarsi, B.T.; Rezaee, A.; Movaghar, A. ROGI: Partial Computation Offloading and Resource Allocation in the Fog-Based IoT Network Towards Optimizing Latency and Power Consumption. Cluster Comput. 2023, 26, 1767–1784.

- Sardellitti, S.; Scutari, G.; Barbarossa, S. Joint optimization of radio and computational resources for multicell mobile-edge computing. IEEE Trans. Signal Inf. 2015, 1, 89–103.

- Bi, S.; Zhang, Y.J. Computation rate maximization for wireless powered mobile-edge computing with binary computation offloading. IEEE Trans. Wirel. Commun. 2018, 17, 4177–4190.

- Vakilian, S.; Fanian, A.; Falsafain, H.; Gulliver, T.A. Node cooperation for workload offloading in a fog computing network via multi-objective optimization. J. Netw. Comput. Appl. 2022, 205, 1084–8045.

- Tran, T.X.; Pompili, D. Joint Task Offloading and Resource Allocation for Multi-Server Mobile-Edge Computing Networks. IEEE Trans. Veh. Technol. 2019, 68, 856–868.

- Mukherjee, M.; Kumar, S.; Zhang, Q.; Matam, R.; Mavromoustakis, C.X.; Lv, Y.; Mastorakis, G. Task Data Offloading and Resource Allocation in Fog Computing with Multi-Task Delay Guarantee. IEEE Access 2019, 7, 152911–152918.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).