Edge-Computing-Enabled Low-Latency Communication for a Wireless Networked Control System

Abstract

1. Introduction

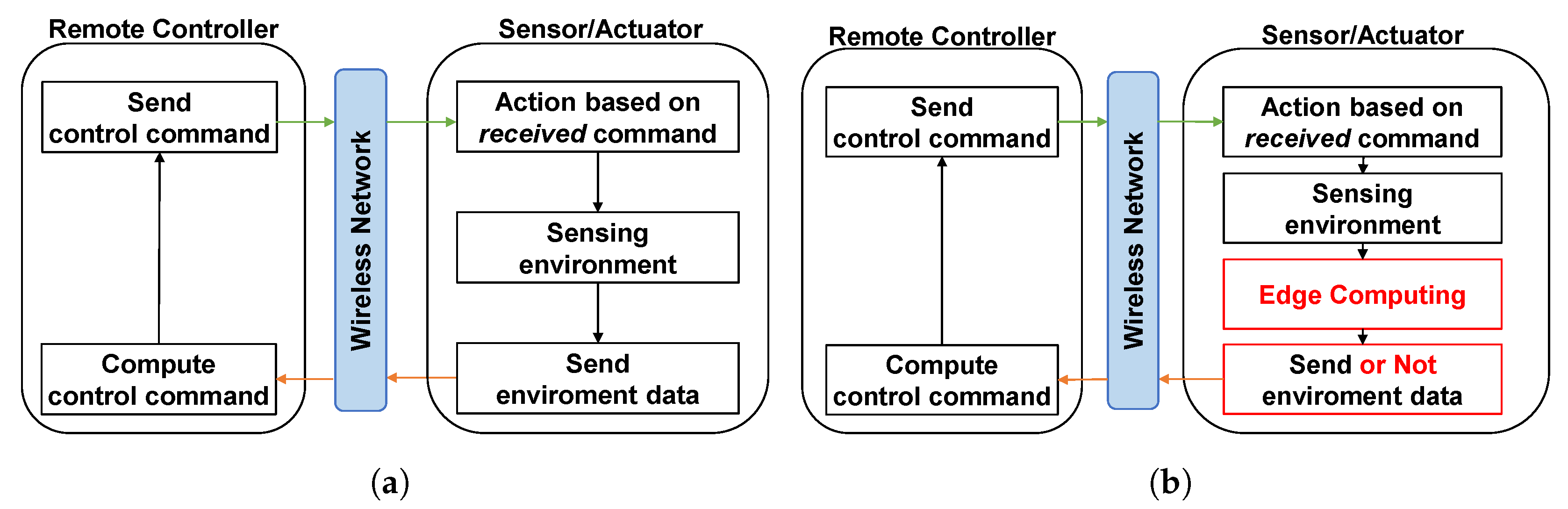

1.1. Background

1.2. Problem Statement

1.3. Motivation to Research

1.4. Proposed Approach

1.5. Contributions

- We propose an adaptive scheme and corroborate its effectiveness through a real-world implementation. The scheme reduces the number of communication cycles between the actuator and the remote server without compromising system performance.

- We devise an algorithm that computes the threshold value for the proposed adaptive scheme. The threshold is chosen to balance the tradeoff between fewer communication cycles and system performance.

- We carry out extensive experiments on a purpose-built testbed to evaluate the performance of the proposed system. The experimental results confirm that the proposed system requires fewer communication cycles between the actuator and the remote server than a conventional system.
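The tradeoff named in the second contribution can be illustrated with a toy send-on-change rule. The sketch below is not the paper's algorithm: the function `simulate`, the synthetic sine trace, and the threshold values are all hypothetical and chosen only to show how a larger threshold lowers the number of transmissions while raising the worst-case gap between the true value and the value the receiver last heard.

```python
import math


def simulate(threshold, samples):
    """Send a sample only when it differs from the last sent value by more than threshold.

    Returns (number_of_transmissions, worst_case_error), where the error is the
    gap between the true sample and the value the receiver last heard.
    """
    last_sent = samples[0]
    sends = 1  # the first sample is always transmitted
    worst_error = 0.0
    for value in samples[1:]:
        if abs(value - last_sent) > threshold:
            last_sent = value
            sends += 1
        worst_error = max(worst_error, abs(value - last_sent))
    return sends, worst_error


# Synthetic trace (hypothetical data): a slowly varying signal.
samples = [100.0 * math.sin(0.05 * k) for k in range(200)]

for threshold in (0.0, 2.0, 5.0, 10.0):
    sends, worst_error = simulate(threshold, samples)
    print(f"threshold={threshold:5.1f}  sends={sends:3d}  worst_error={worst_error:6.2f}")
```

By construction, the worst-case error of this rule never exceeds the threshold, so the threshold acts as a direct bound on how stale the receiver's value can become.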

1.6. Structure of the Paper

2. Related Works

2.1. Improving the Performance of WNCS

2.2. Edge Computing for IoT

2.3. Computation Offloading

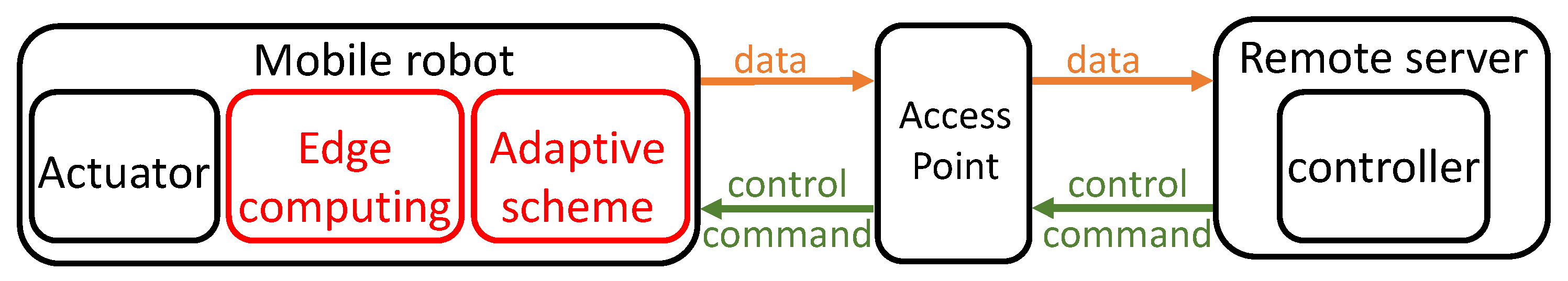

3. System Description

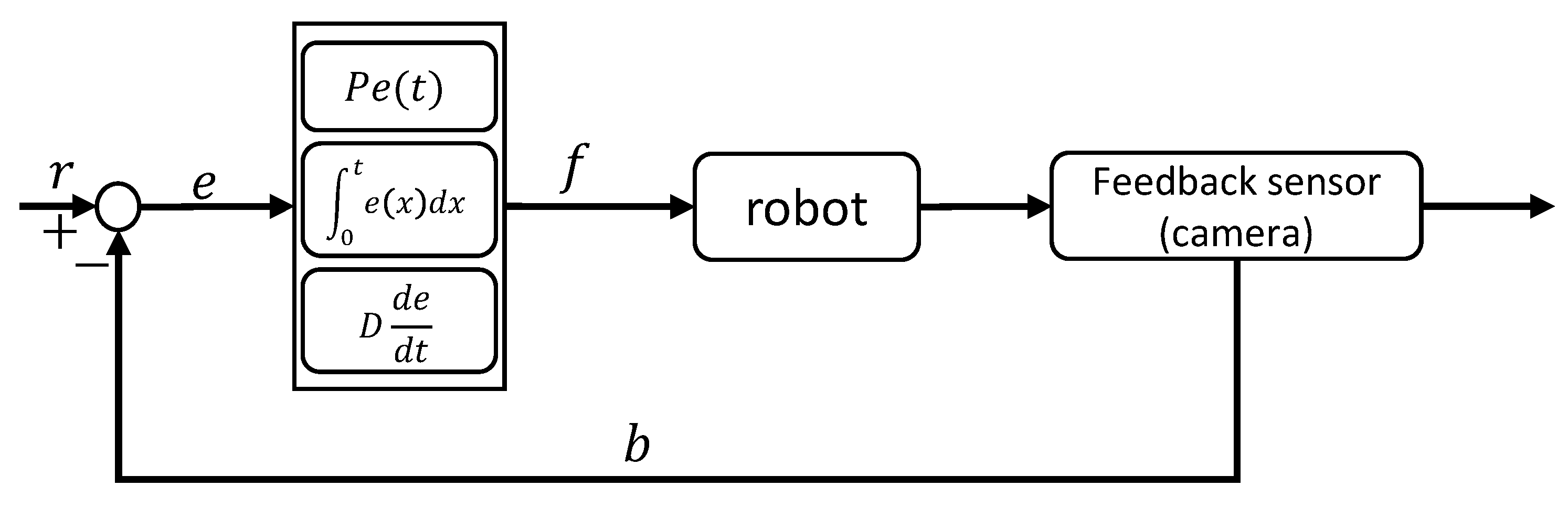

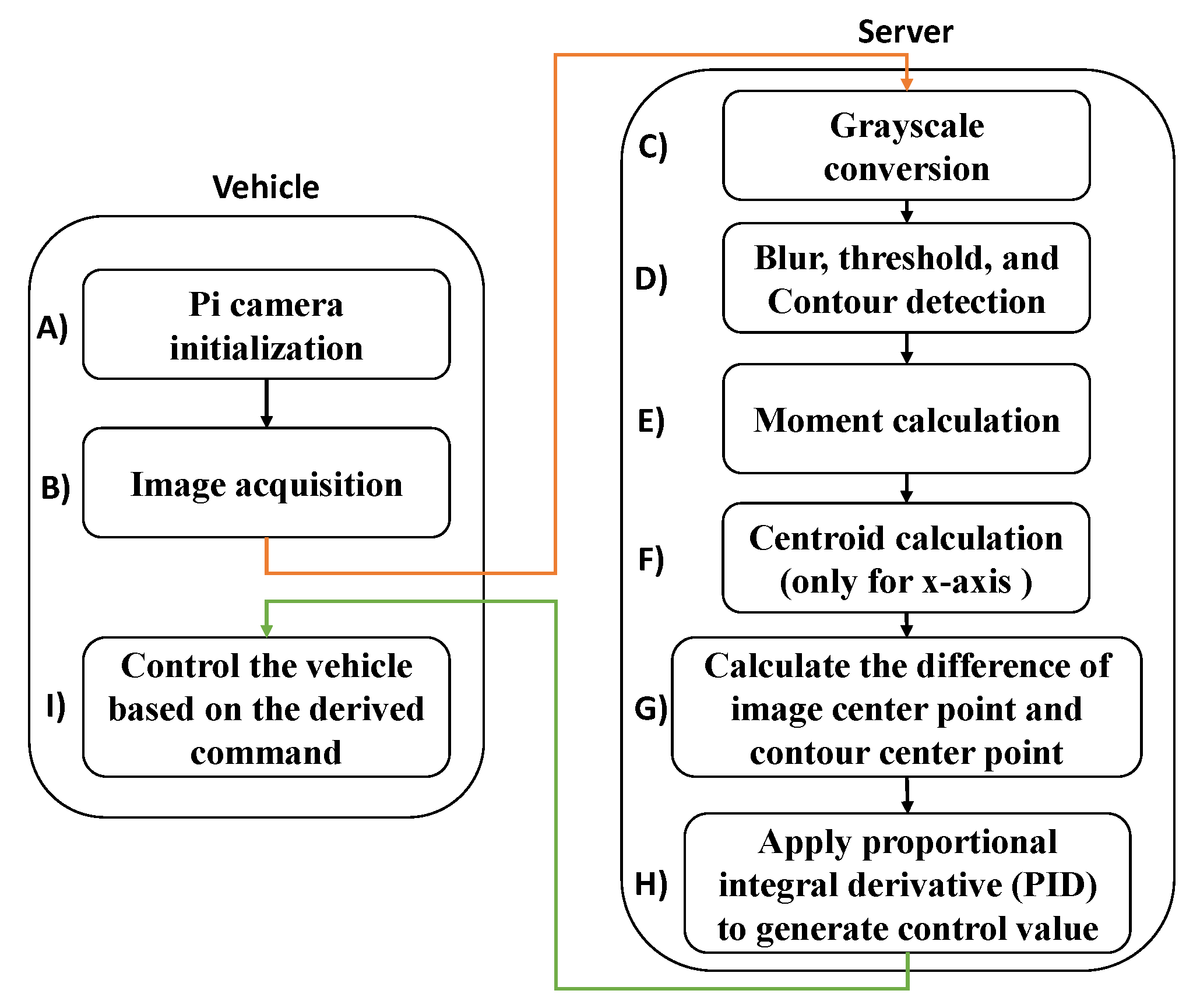

3.1. Puppet Mode

Mathematical Modeling of Puppet Mode

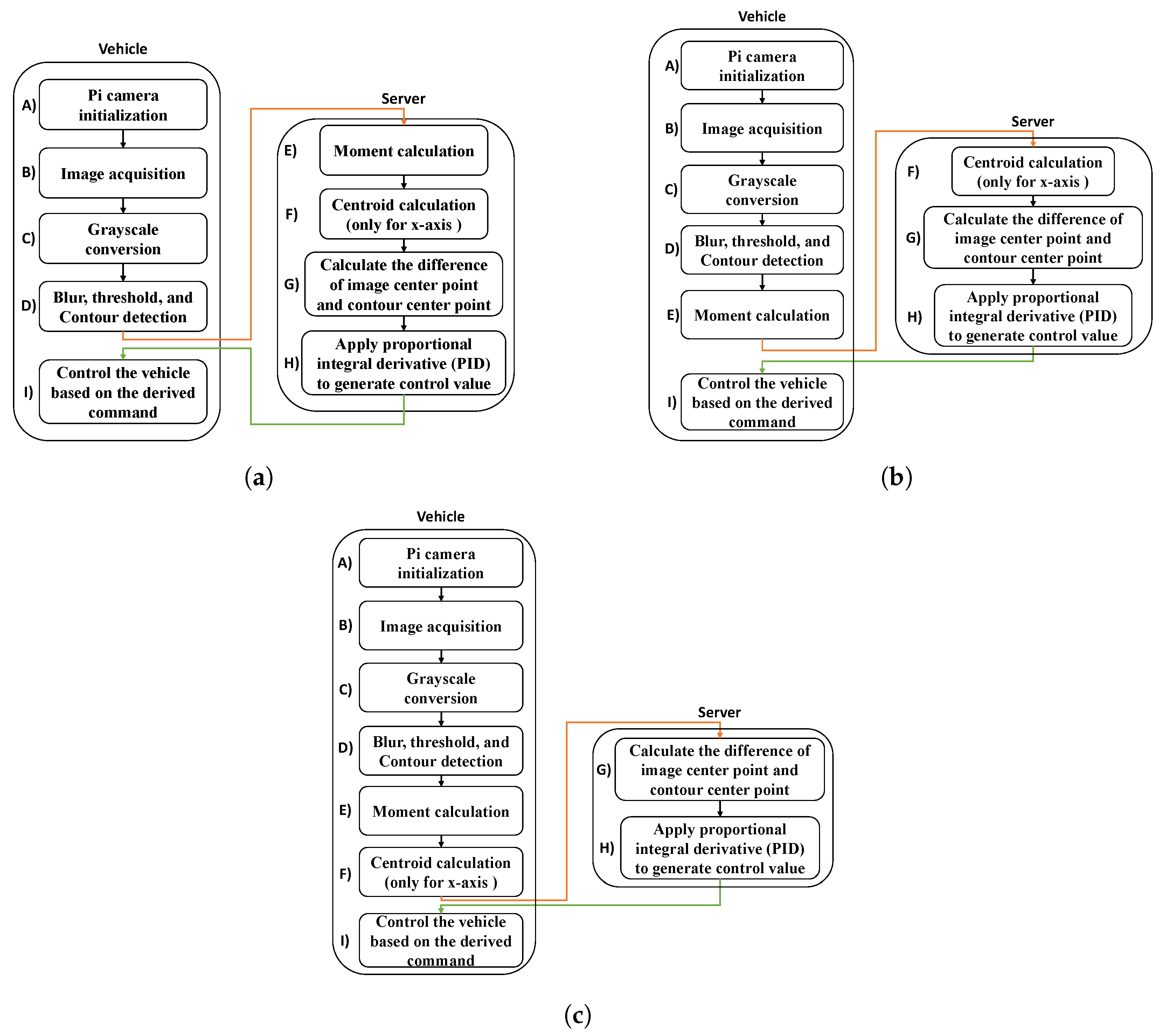

3.2. Edge Computing Mode

3.2.1. Mathematical Modeling of the Edge Computing Mode

3.3. Local Mode

Mathematical Modeling of Local Mode

3.4. Determining the Operation Mode

3.4.1. Determining the Operation Mode Considering Latency

3.4.2. Determining the Operation Mode Considering Vehicle Energy Consumption

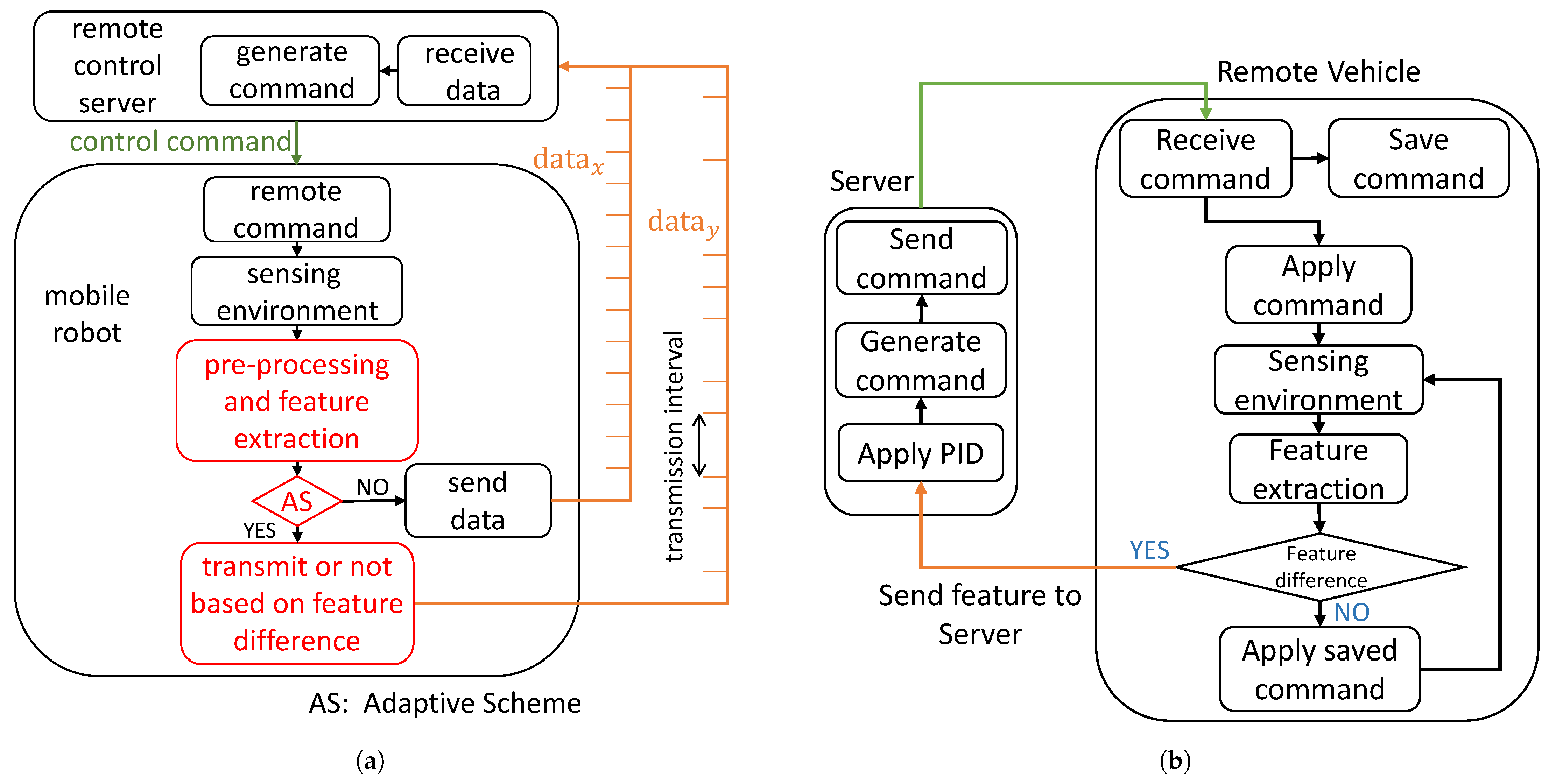

3.5. Adaptive Scheme

4. Experiments and Discussion

4.1. Testbed Setup

4.2. Assumptions Made

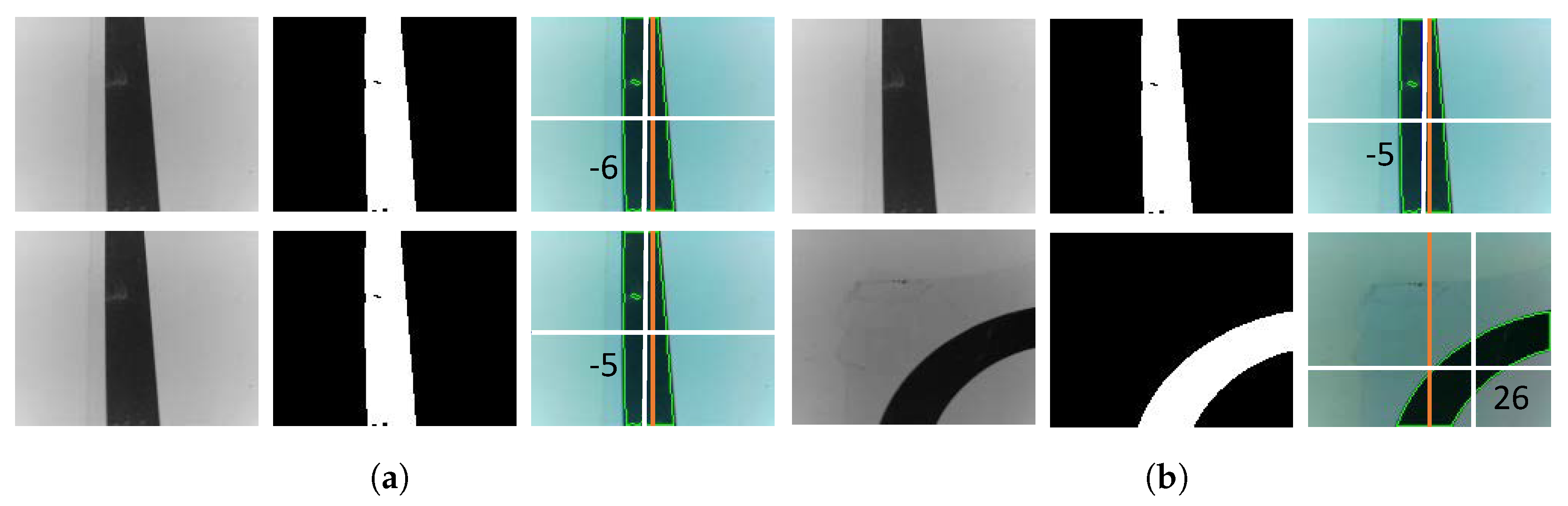

4.3. Local Mode Implementation

4.4. Puppet Mode Implementation

4.5. Edge Computing Mode Implementation

4.6. Adaptive Mode Implementation

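Algorithm 1. Update the threshold for the adaptive mode.

Previous center point: [symbol missing in source]

Current center point: [symbol missing in source]

- if [condition missing in source] then
  - do not send
  - count number of consecutive events
  - if consecutive events are exceeding t then
    - [action missing in source]
  - end if
- else
  - send
  - count number of consecutive events
  - if consecutive events are exceeding t then
    - [action missing in source]
  - end if
- end if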
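The control flow of Algorithm 1 can be sketched in Python as follows. The extracted listing has lost its condition and its update actions, so several parts here are assumptions rather than the authors' method: the center point is treated as a scalar, the test is taken to be "absolute change no larger than the threshold", and the threshold is tightened after more than `t` consecutive skips and relaxed after more than `t` consecutive sends. The class name `AdaptiveSender` and the fields `step` and `min_threshold` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveSender:
    """Hypothetical state for the send/skip decision sketched from Algorithm 1."""

    threshold: float            # center-point change above which a send is triggered
    t: int                      # limit on consecutive identical decisions
    step: float = 1.0           # assumed size of each threshold adjustment
    min_threshold: float = 0.0  # assumed lower bound for the threshold
    consecutive_skips: int = 0
    consecutive_sends: int = 0

    def decide(self, previous_center: float, current_center: float) -> bool:
        """Return True if the current center point should be transmitted."""
        if abs(current_center - previous_center) <= self.threshold:
            # Small change: do not send, and count consecutive skipped events.
            self.consecutive_skips += 1
            self.consecutive_sends = 0
            if self.consecutive_skips > self.t:
                # Assumed action: too many skips in a row, so tighten the threshold.
                self.threshold = max(self.min_threshold, self.threshold - self.step)
                self.consecutive_skips = 0
            return False
        # Large change: send, and count consecutive sent events.
        self.consecutive_sends += 1
        self.consecutive_skips = 0
        if self.consecutive_sends > self.t:
            # Assumed action: too many sends in a row, so relax the threshold.
            self.threshold += self.step
            self.consecutive_sends = 0
        return True


# Usage with made-up center points.
sender = AdaptiveSender(threshold=2.0, t=2)
centers = [100, 101, 101, 102, 110, 120, 130]
for previous, current in zip(centers, centers[1:]):
    sent = sender.decide(previous, current)
    print(f"{previous:>4} -> {current:<4} sent={sent} threshold={sender.threshold}")
```

Resetting the opposite counter on every decision keeps the two counts strictly "consecutive"; whether the original algorithm does the same cannot be recovered from the extracted text.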

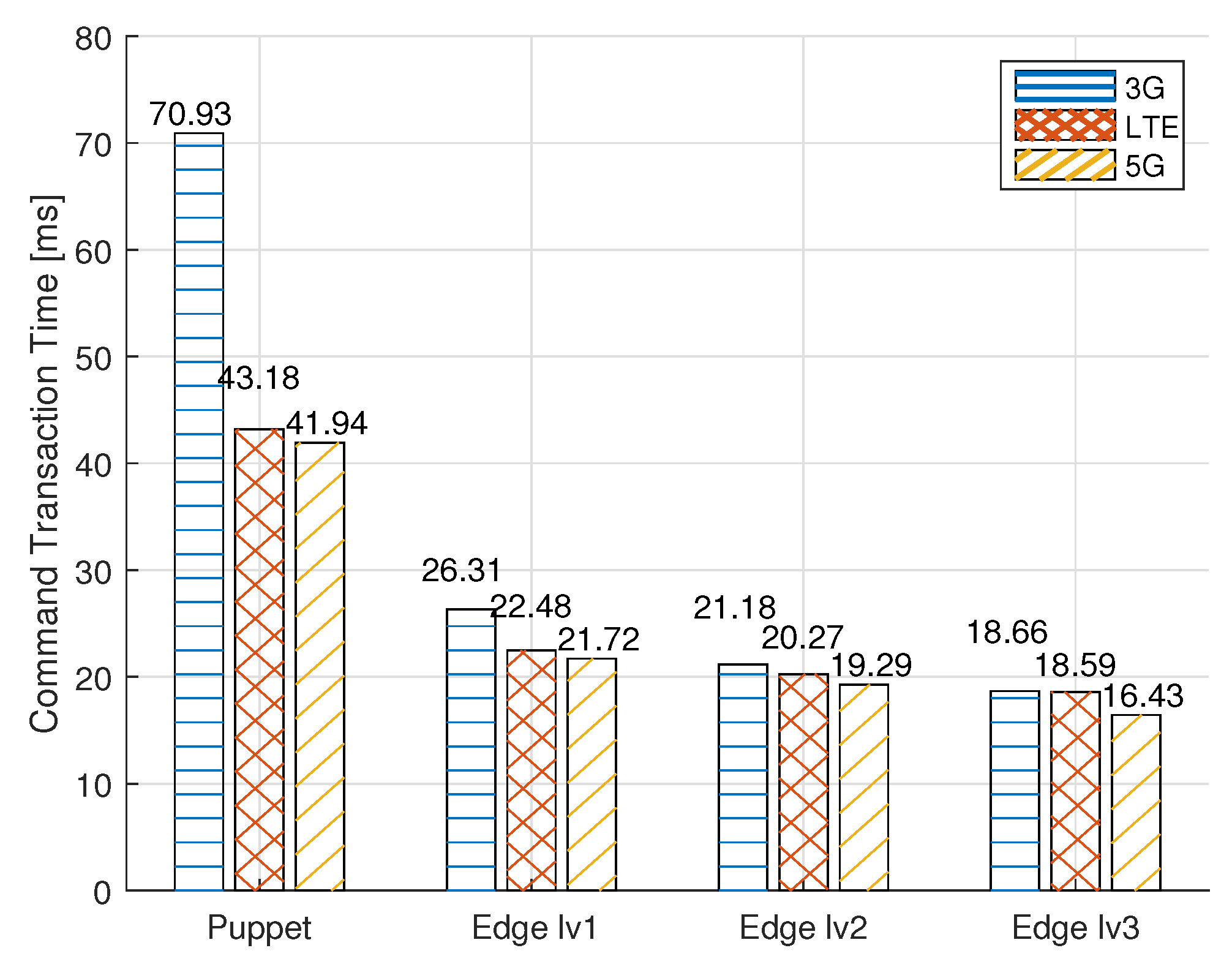

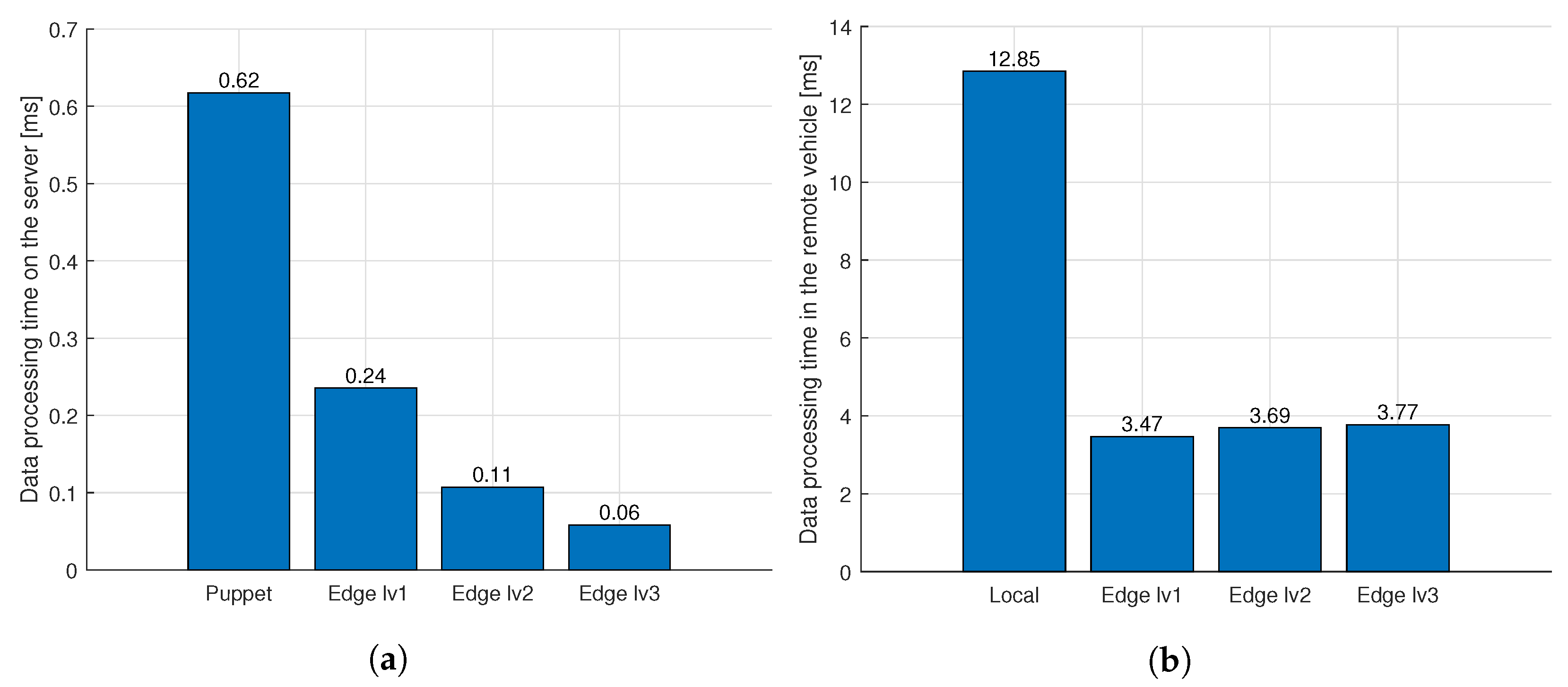

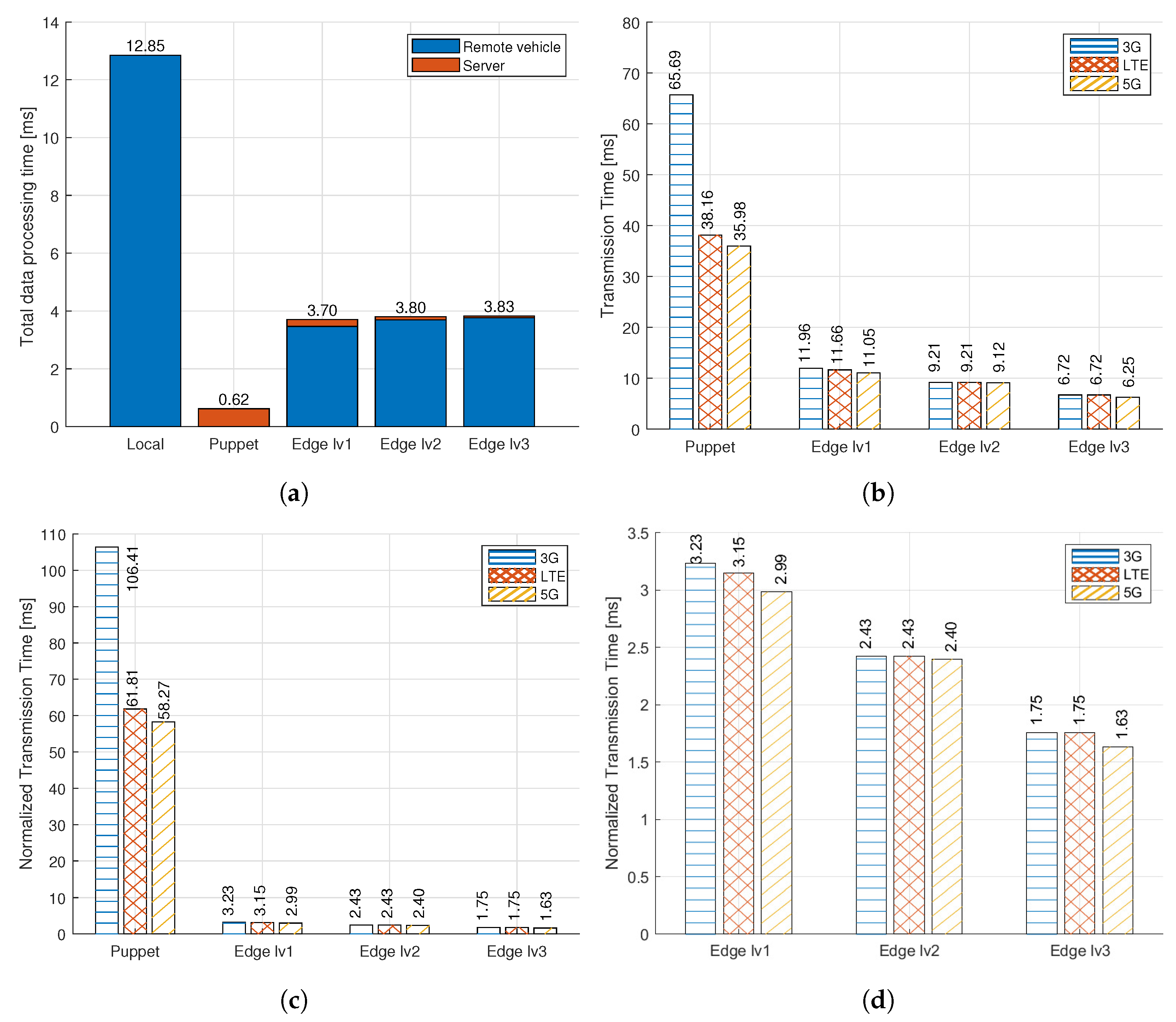

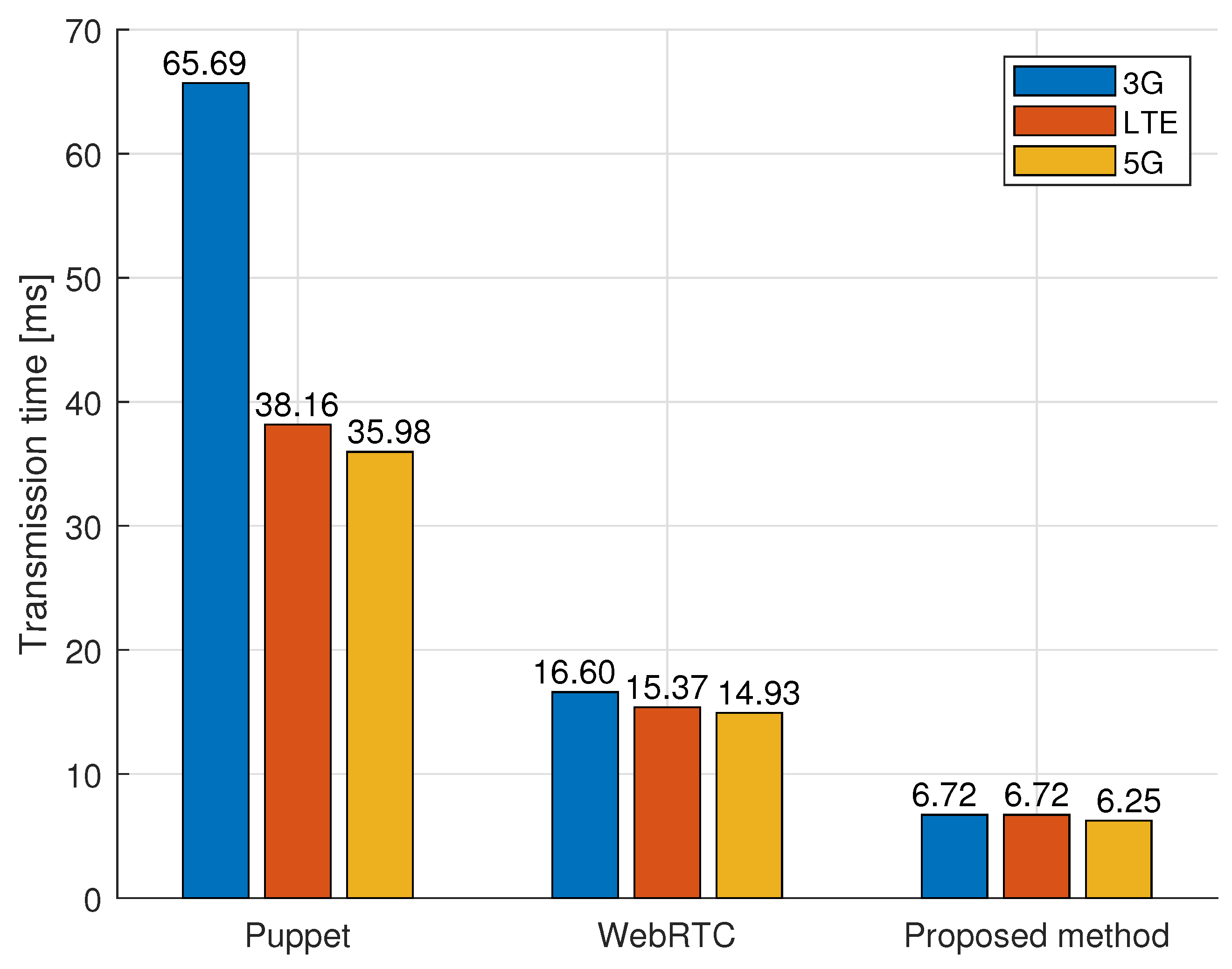

4.7. Results and Discussion

5. Limitations of the Proposed Approach

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Klügel, M.; Mamduhi, M.; Ayan, O.; Vilgelm, M.; Johansson, K.H.; Hirche, S.; Kellerer, W. Joint cross-layer optimization in real-time networked control systems. IEEE Trans. Control Netw. Syst. 2020, 7, 1903–1915.

2. Pillajo, C.; Hincapié, R. Stochastic control for a wireless network control system (WNCS). In Proceedings of the 2020 IEEE ANDESCON, Quito, Ecuador, 13–16 October 2020; IEEE: Piscataway, NJ, USA, 2020.

3. Huang, K.; Liu, W.; Li, Y.; Vucetic, B. To sense or to control: Wireless networked control using a half-duplex controller for IIoT. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; IEEE: Piscataway, NJ, USA, 2019.

4. Wiens, R.C.; Maurice, S.; Robinson, S.H.; Nelson, A.E.; Cais, P.; Bernardi, P.; Newell, R.T.; Clegg, S.; Sharma, S.K.; Storms, S.; et al. The SuperCam instrument suite on the NASA Mars 2020 rover: Body unit and combined system tests. Space Sci. Rev. 2021, 217, 4.

5. Khanh, Q.V.; Hoai, N.V.; Manh, L.D.; Le, A.N.; Jeon, G. Wireless communication technologies for IoT in 5G: Vision, applications, and challenges. Wirel. Commun. Mob. Comput. 2022, 2022, 3229294.

6. Sun, J.; Yang, J.; Zeng, Z. Predictor-based periodic event-triggered control for nonlinear uncertain systems with input delay. Automatica 2022, 136, 110055.

7. Akasaka, H.; Hakamada, K.; Morohashi, H.; Kanno, T.; Kawashima, K.; Ebihara, Y.; Oki, E.; Hirano, S.; Mori, M. Impact of the suboptimal communication network environment on telerobotic surgery performance and surgeon fatigue. PLoS ONE 2022, 17, e0270039.

8. Zhang, B.L.; Han, Q.L.; Zhang, X.M.; Yu, X. Sliding mode control with mixed current and delayed states for offshore steel jacket platforms. IEEE Trans. Control Syst. Technol. 2014, 22, 1769–1783.

9. Saleem, U.; Liu, Y.; Jangsher, S.; Tao, X.; Li, Y. Latency minimization for D2D-enabled partial computation offloading in mobile edge computing. IEEE Trans. Veh. Technol. 2020, 69, 4472–4486.

10. Almutairi, J.; Aldossary, M. Modeling and analyzing offloading strategies of IoT applications over edge computing and joint clouds. Symmetry 2021, 13, 402.

11. Ma, X. Optimal control of whole network control system using improved genetic algorithm and information integrity scale. Comput. Intell. Neurosci. 2022, 2022, 9897894.

12. Huang, K.; Liu, W.; Shirvanimoghaddam, M.; Li, Y.; Vucetic, B. Real-time remote estimation with hybrid ARQ in wireless networked control. IEEE Trans. Wirel. Commun. 2020, 19, 3490–3504.

13. Cho, B.M.; Jang, M.S.; Park, K.J. Channel-aware congestion control in vehicular cyber-physical systems. IEEE Access 2020, 8, 73193–73203.

14. Gatsis, K.; Pajic, M.; Ribeiro, A.; Pappas, G.J. Opportunistic control over shared wireless channels. IEEE Trans. Autom. Control 2015, 60, 3140–3155.

15. Huang, K.; Liu, W.; Li, Y.; Vucetic, B.; Savkin, A. Optimal downlink–uplink scheduling of wireless networked control for Industrial IoT. IEEE Internet Things J. 2019, 7, 1756–1772.

16. Demirel, B.; Gupta, V.; Quevedo, D.E.; Johansson, M. On the trade-off between communication and control cost in event-triggered dead-beat control. IEEE Trans. Autom. Control 2016, 62, 2973–2980.

17. Zeng, T.; Mozaffari, M.; Semiari, O.; Saad, W.; Bennis, M.; Debbah, M. Wireless communications and control for swarms of cellular-connected UAVs. In Proceedings of the 2018 52nd Asilomar Conference on Signals, Systems, and Computers, Pacific Grove, CA, USA, 28–31 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 719–723.

18. Tiberkak, A.; Hentout, A.; Belkhir, A. WebRTC-based MOSR remote control of mobile manipulators. Int. J. Intell. Robot. Appl. 2023, 7, 304–320.

19. Gong, C.; Lin, F.; Gong, X.; Lu, Y. Intelligent cooperative edge computing in internet of things. IEEE Internet Things J. 2020, 7, 9372–9382.

20. Premsankar, G.; Di Francesco, M.; Taleb, T. Edge Computing for the Internet of Things: A Case Study. IEEE Internet Things J. 2018, 5, 1275–1284.

21. Li, H.; Ota, K.; Dong, M. Learning IoT in Edge: Deep Learning for the Internet of Things with Edge Computing. IEEE Netw. 2018, 32, 96–101.

22. Anzanpour, A.; Amiri, D.; Azimi, I.; Levorato, M.; Dutt, N.; Liljeberg, P.; Rahmani, A.M. Edge-Assisted Control for Healthcare Internet of Things: A Case Study on PPG-Based Early Warning Score. ACM Trans. Internet Things 2021, 2, 1–21.

23. Damigos, G.; Lindgren, T.; Nikolakopoulos, G. Toward 5G Edge Computing for Enabling Autonomous Aerial Vehicles. IEEE Access 2023, 11, 3926–3941.

24. Koyasako, Y.; Suzuki, T.; Kim, S.Y.; Kani, J.I.; Terada, J. Motion control system with time-varying delay compensation for access edge computing. IEEE Access 2021, 9, 90669–90676.

25. Shahhosseini, S.; Anzanpour, A.; Azimi, I.; Labbaf, S.; Seo, D.; Lim, S.S.; Liljeberg, P.; Dutt, N.; Rahmani, A.M. Exploring computation offloading in IoT systems. Inf. Syst. 2022, 107, 101860.

26. Zhang, K.; Mao, Y.; Leng, S.; Zhao, Q.; Li, L.; Peng, X.; Pan, L.; Maharjan, S.; Zhang, Y. Energy-efficient offloading for mobile edge computing in 5G heterogeneous networks. IEEE Access 2016, 4, 5896–5907.

27. Mao, Y.; Zhang, J.; Letaief, K.B. Dynamic Computation Offloading for Mobile-Edge Computing with Energy Harvesting Devices. IEEE J. Sel. Areas Commun. 2016, 34, 3590–3605.

28. Hossain, M.S.; Nwakanma, C.I.; Lee, J.M.; Kim, D.S. Edge computational task offloading scheme using reinforcement learning for IIoT scenario. ICT Express 2020, 6, 291–299.

29. Wang, K.; Xiong, Z.; Chen, L.; Zhou, P.; Shin, H. Joint time delay and energy optimization with intelligent overclocking in edge computing. Sci. China Inf. Sci. 2020, 63, 140313.

30. Shao, J.; Zhang, J. Communication-Computation Trade-off in Resource-Constrained Edge Inference. IEEE Commun. Mag. 2020, 58, 20–26.

31. Zhang, W.; Wen, Y.; Guan, K.; Kilper, D.; Luo, H.; Wu, D.O. Energy-optimal mobile cloud computing under stochastic wireless channel. IEEE Trans. Wirel. Commun. 2013, 12, 4569–4581.

32. Shakarami, A.; Shahidinejad, A.; Ghobaei-Arani, M. An autonomous computation offloading strategy in Mobile Edge Computing: A deep learning-based hybrid approach. J. Netw. Comput. Appl. 2021, 178, 102974.

33. Carvalho, G.; Cabral, B.; Pereira, V.; Bernardino, J. Computation offloading in Edge Computing environments using Artificial Intelligence techniques. Eng. Appl. Artif. Intell. 2020, 95, 103840.

34. Chen, M.; Wang, T.; Zhang, S.; Liu, A. Deep reinforcement learning for computation offloading in mobile edge computing environment. Comput. Commun. 2021, 175, 1–12.

35. Ju, Y.; Chen, Y.; Cao, Z.; Liu, L.; Pei, Q.; Xiao, M.; Ota, K.; Dong, M.; Leung, V.C.M. Joint Secure Offloading and Resource Allocation for Vehicular Edge Computing Network: A Multi-Agent Deep Reinforcement Learning Approach. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5555–5569.

36. Gao, H.; Huang, W.; Liu, T.; Yin, Y.; Li, Y. PPO2: Location Privacy-Oriented Task Offloading to Edge Computing Using Reinforcement Learning for Intelligent Autonomous Transport Systems. IEEE Trans. Intell. Transp. Syst. 2022, 24, 7599–7612.

37. Ma, X.; Xu, H.; Gao, H.; Bian, M. Real-time multiple-workflow scheduling in cloud environments. IEEE Trans. Netw. Serv. Manag. 2021, 18, 4002–4018.

| Features Considered | | Related Works | Our Work |
|---|---|---|---|
| Reducing transmission | Dynamic sampling cycle | - | considered |
| | Elongated sampling cycle | [14,15,16,18] | - |
| Edge computing | Remedy for reducing the data size to be transmitted | - | considered |
| | Remedy for limited computing power | [21,22,23,24,27,28,29,30,31,32,33,35,36] | - |
| Computation offloading | | [27,28,29,30,31,32,33,35,36] | considered |
| Only considered communication protocol | | [12,13,14,35,36] | - |
| Proposed solution | Implemented on a real-world testbed | [18,22,23,24] | considered |
| | Performed by simulation | [12,13,14,15,16,21,27,28,29,30,31,32,33,35,36] | - |

| Product Details | Raspberry Pi 3B+ |
|---|---|
| SoC | Broadcom BCM2837B0 |
| Core Type | Cortex-A53 64-bit |
| No. of Cores | 4 |
| GPU | VideoCore IV |
| CPU Clock | 1.4 GHz |
| RAM | 1 GB LPDDR2 |
| Power Rating | 1.13 A @ 5 V |

| Puppet Mode | Edge Level 1 | Edge Level 2 | Edge Level 3 |
|---|---|---|---|
| 61,440 bytes | 2100 bytes | 495 bytes | 19 bytes |
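To put the byte counts above in proportion, the short sketch below computes how much smaller each edge level is relative to Puppet Mode. It assumes the values are the data sizes transmitted per communication cycle in each mode, which the extracted table does not state explicitly; the names `payload_bytes`, `reduction_factor`, and `percent_saved` are illustrative only.

```python
# Sizes in bytes, copied from the table above.
payload_bytes = {
    "Puppet Mode": 61_440,
    "Edge Level 1": 2_100,
    "Edge Level 2": 495,
    "Edge Level 3": 19,
}

baseline = payload_bytes["Puppet Mode"]


def reduction_factor(mode: str) -> float:
    """How many times smaller the mode's size is than the Puppet Mode size."""
    return baseline / payload_bytes[mode]


def percent_saved(mode: str) -> float:
    """Share of the Puppet Mode size that the mode avoids, in percent."""
    return 100.0 * (1.0 - payload_bytes[mode] / baseline)


for mode, size in payload_bytes.items():
    print(f"{mode:<13} {size:>6} B  x{reduction_factor(mode):8.1f}  saved {percent_saved(mode):6.2f}%")
```

Under that assumption, Edge Level 1 is roughly 29 times smaller than Puppet Mode, and Edge Level 3 roughly 3200 times smaller.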

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mtowe, D.P.; Kim, D.M. Edge-Computing-Enabled Low-Latency Communication for a Wireless Networked Control System. Electronics 2023, 12, 3181. https://doi.org/10.3390/electronics12143181
