FEFD-YOLOV5: A Helmet Detection Algorithm Combined with Feature Enhancement and Feature Denoising

Abstract

1. Introduction

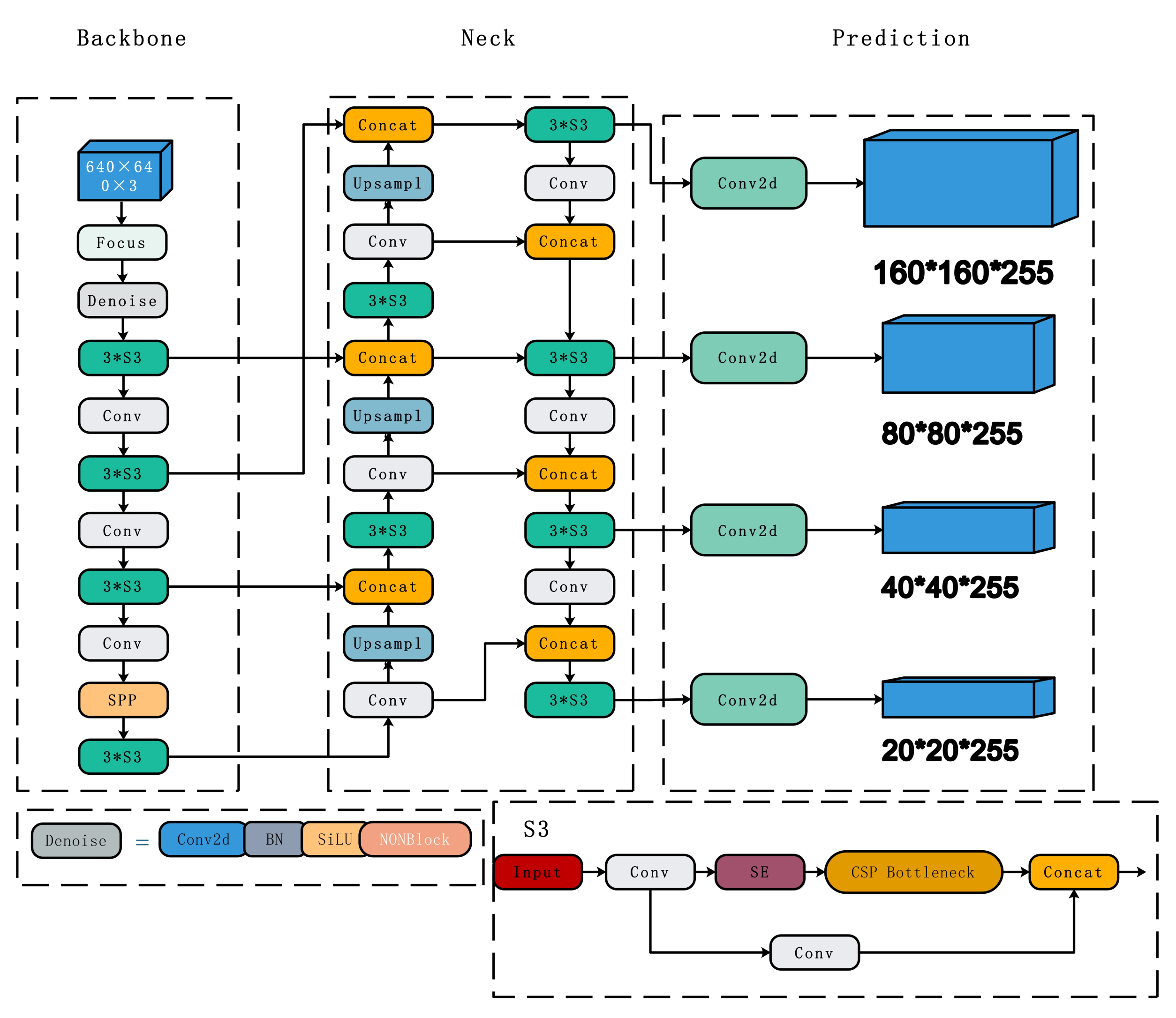

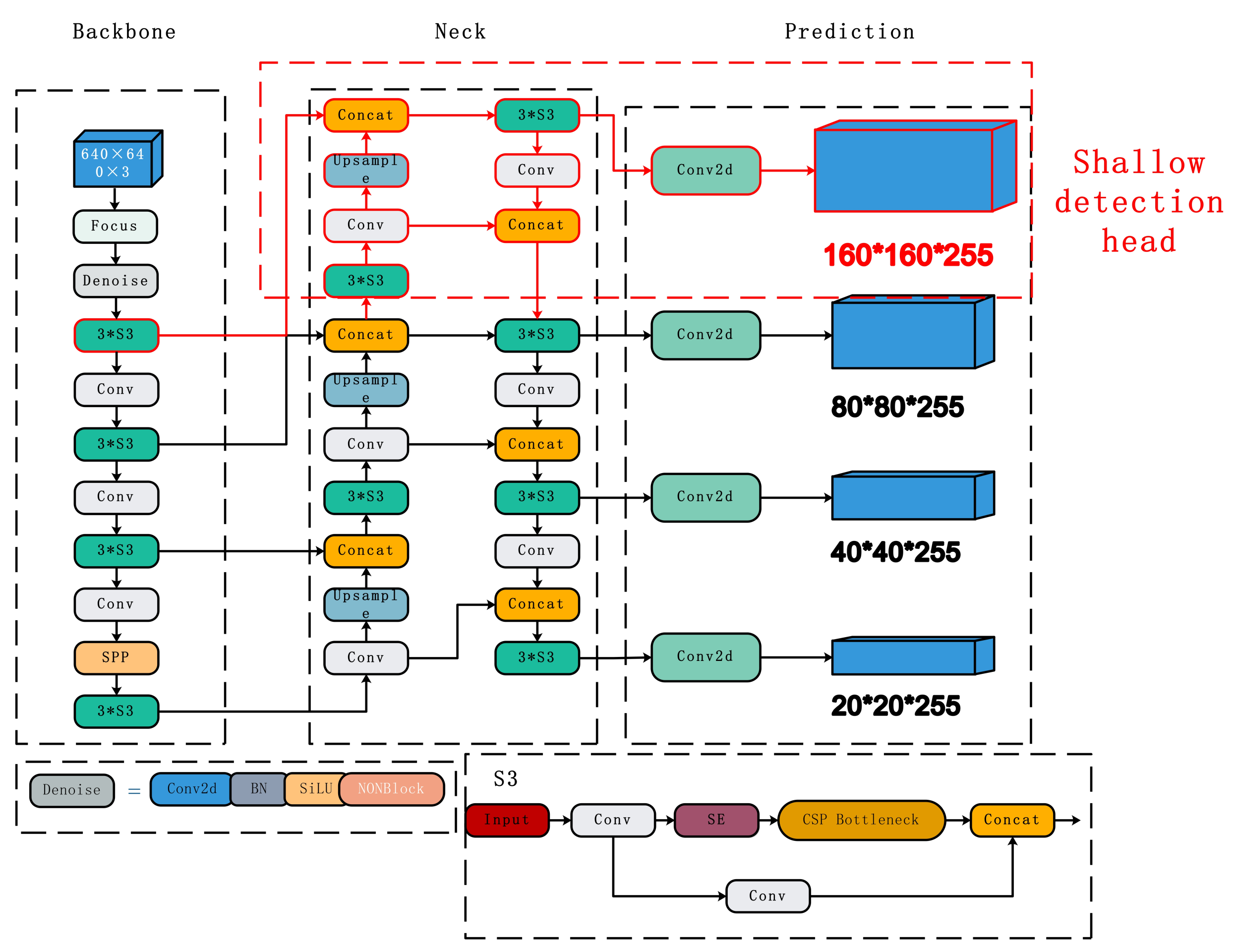

- A shallow object detection head is added to the prediction stage so that shallow features are fully integrated. Because shallow features retain the fine detail of small objects, this head enhances the representation of small objects and improves the detection performance of the safety helmet detection model.

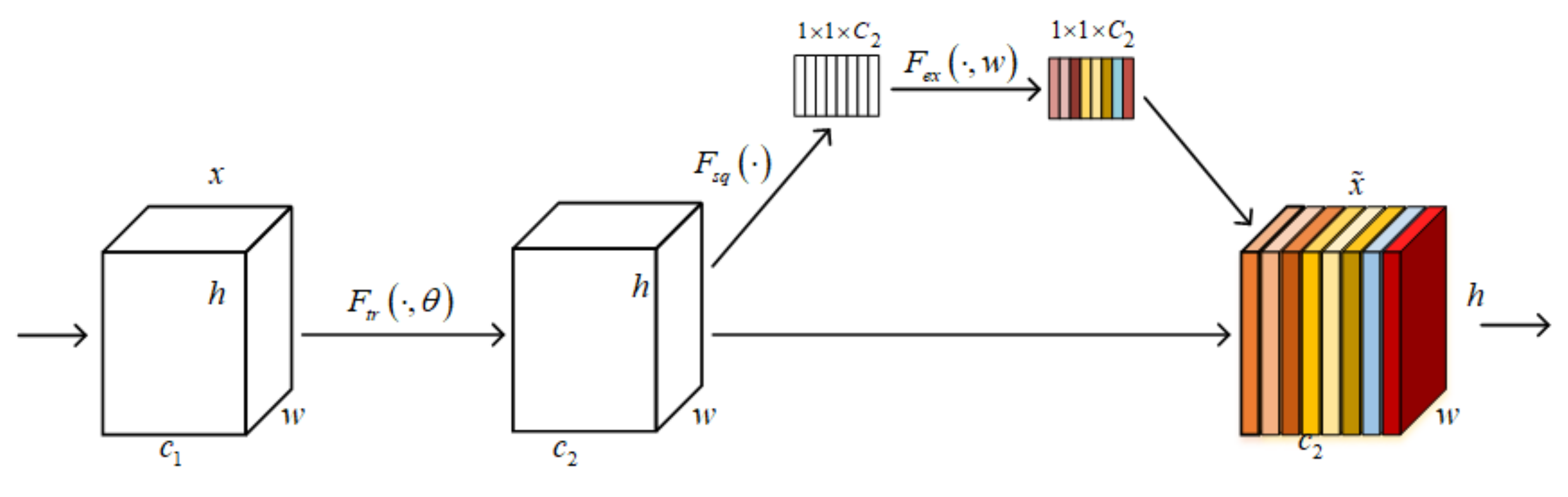

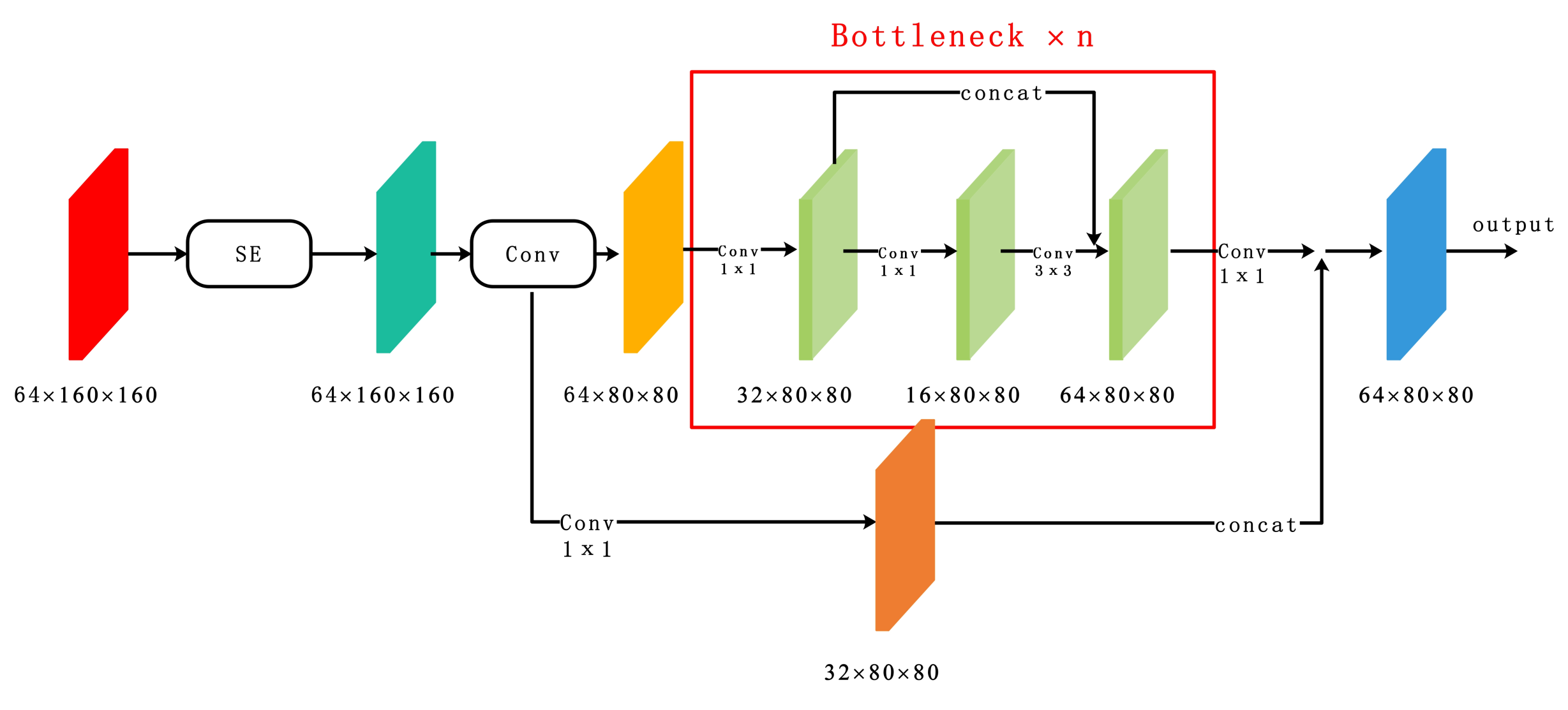

- An attention module is introduced so that the algorithm learns the interdependencies among the channels of the feature map and reweights the channels accordingly. This highlights the information relevant to small objects and strengthens their representation in the feature space, which in turn improves the detection accuracy of small objects in complex scenes.

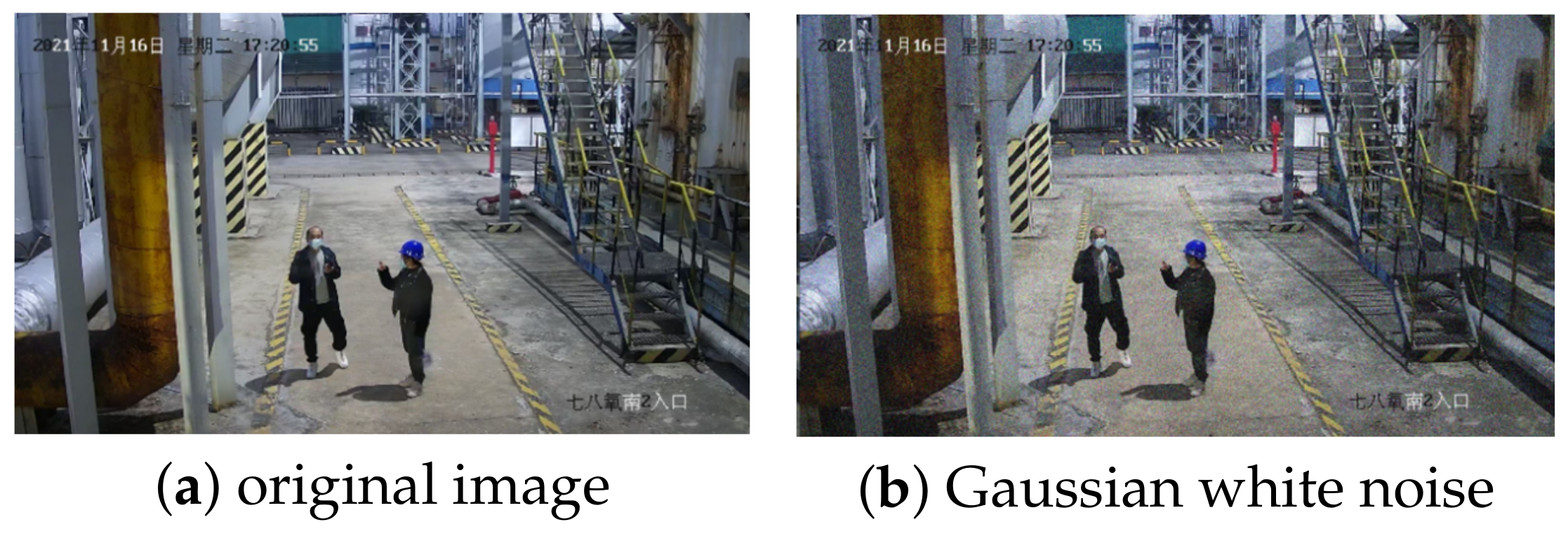

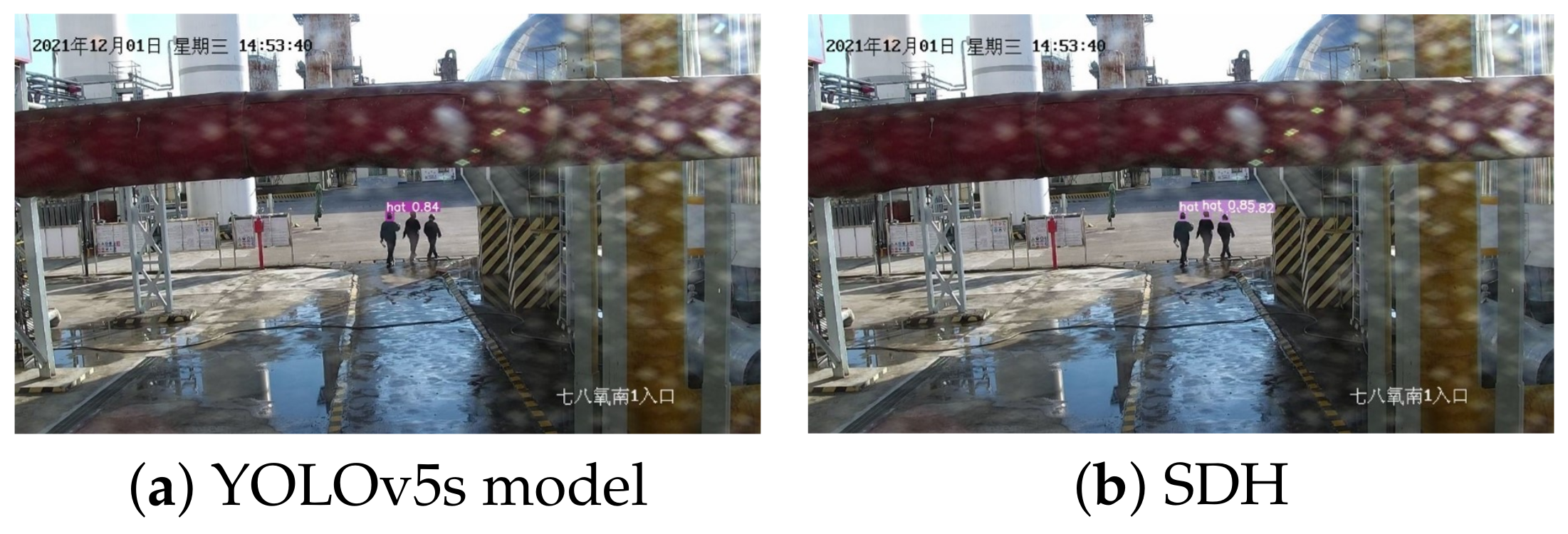

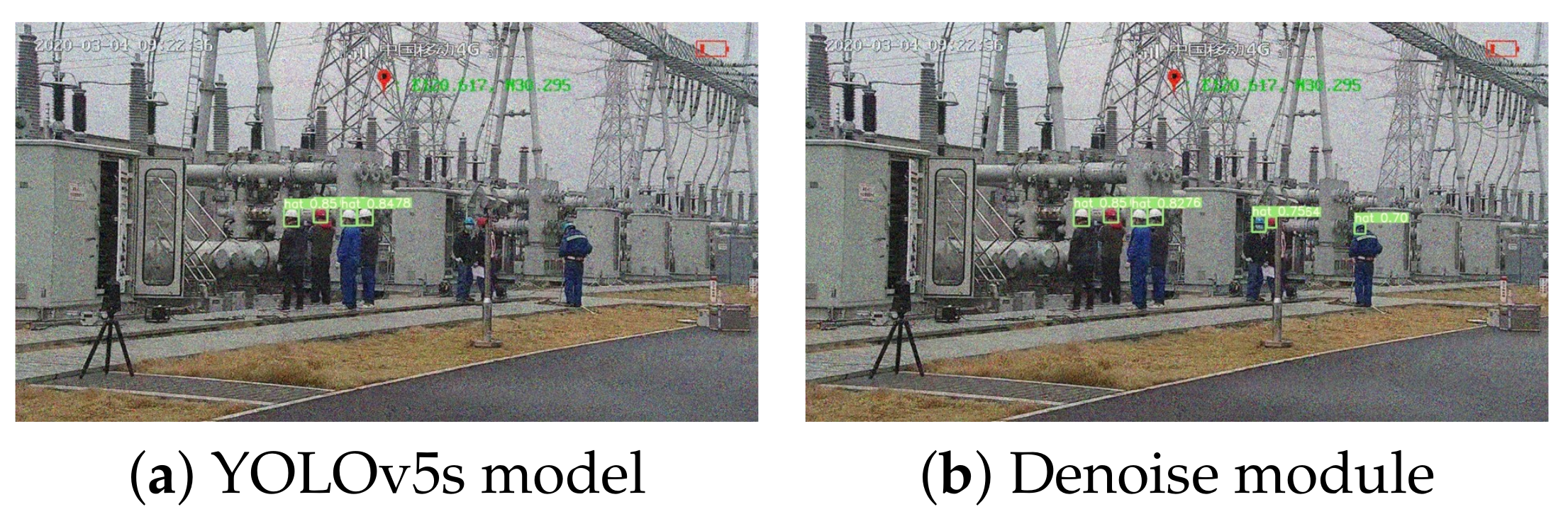

- A novel noise reduction block based on the Non-Local Means algorithm is proposed to suppress the noise in features extracted by the convolutional layers, thereby improving detection results.

2. Related Works

3. FEFD-YOLOv5

3.1. Network Structure

- Addition of a small object detection head on the shallow features: this head is designed specifically for small objects and produces a prediction map of size 160 × 160 × 255. Its purpose is to capture small-object information early in feature extraction, before that information is lost or blurred in the deeper layers of the network.

- Incorporation of the SENet attention module, forming an S3 structure: introducing an attention mechanism lets the network adjust the importance of each feature channel dynamically. The SENet module learns to emphasize the channels that are most useful for object recognition and to suppress less useful ones, which focuses the model and reduces interference from irrelevant information.

- Addition of a noise reduction module to the Backbone: the Backbone is the main part of the network and extracts the basic features of the input image. Placing a noise reduction module there suppresses noise at an early stage, which makes the model more robust to noise and keeps the extracted features clear and accurate.

3.2. Small Object Detection Layer

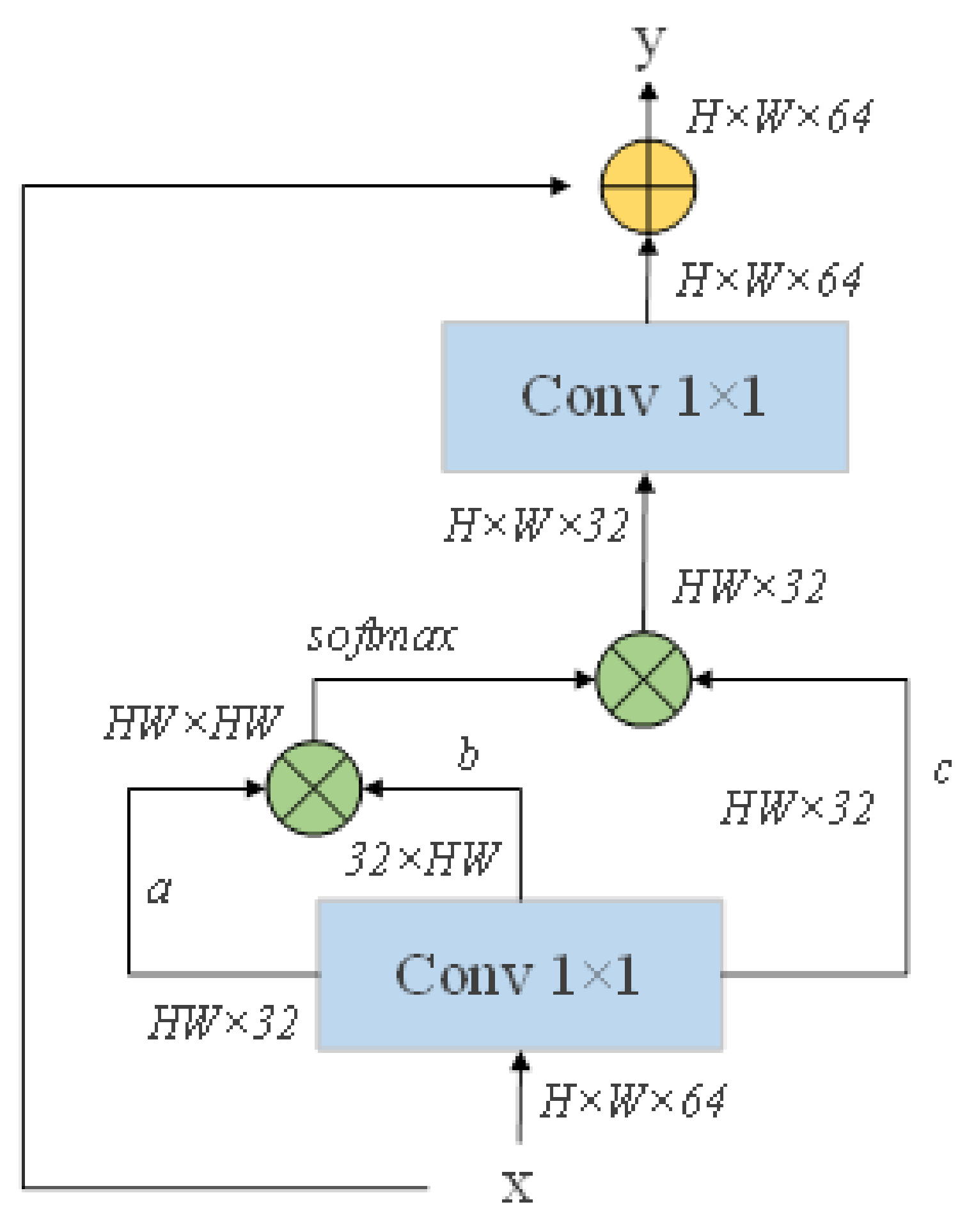
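The 160 × 160 × 255 size given for the new head in Section 3.1 follows from simple arithmetic under two assumptions not stated in this section: a 640 × 640 input (the YOLOv5 default) and three anchors per grid cell. A stride-4 grid gives 640 / 4 = 160 cells per side, and each anchor predicts 4 box offsets, 1 objectness score, and one score per class, so 255 = 3 × (5 + 80) corresponds to the 80-class default configuration. The helper `head_shape` is hypothetical and only illustrates the calculation.

```python
# Hypothetical helper: output shape of one YOLOv5-style detection head.
# Assumptions: 640 x 640 input (YOLOv5 default) and 3 anchors per grid cell.

def head_shape(img_size: int, stride: int, num_classes: int, num_anchors: int = 3):
    """Return (grid_h, grid_w, channels) for a head operating at `stride`."""
    grid = img_size // stride
    # Per anchor: 4 box offsets + 1 objectness score + one score per class.
    channels = num_anchors * (5 + num_classes)
    return grid, grid, channels

# Strides 8/16/32 are the standard YOLOv5 heads; stride 4 is the extra shallow head.
for stride in (4, 8, 16, 32):
    print(stride, head_shape(640, stride, num_classes=80))
```

The same formula gives 3 × (5 + 2) = 21 channels for a two-class setting such as the hat/person classes evaluated later, so the channel count depends on the class configuration while the 160 × 160 grid depends only on the input size and stride.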

3.3. Channel Attention Module
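Section 3.1 attributes the channel reweighting to the SENet module. The following is a minimal NumPy sketch of the squeeze-and-excitation operation of Hu et al. [19]: global average pooling squeezes each channel to a single value, a two-layer bottleneck (FC, ReLU, FC, sigmoid) turns these values into per-channel weights in (0, 1), and the input is rescaled channel by channel. The random weights stand in for learned parameters, the reduction ratio r = 4 is an arbitrary choice for the toy example, and the function names are hypothetical rather than taken from the paper.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation recalibration of a feature map.

    x:  feature map of shape (C, H, W)
    w1: (C // r, C), b1: (C // r,)  -> bottleneck fully connected layer
    w2: (C, C // r), b2: (C,)       -> expansion fully connected layer
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    z = x.mean(axis=(1, 2))                   # shape (C,)
    # Excitation: FC -> ReLU -> FC -> sigmoid yields channel weights in (0, 1).
    s = np.maximum(w1 @ z + b1, 0.0)          # shape (C // r,)
    s = sigmoid(w2 @ s + b2)                  # shape (C,)
    # Scale: reweight every channel of the input by its learned importance.
    return x * s[:, None, None], s

rng = np.random.default_rng(0)
C, H, W, r = 8, 4, 4, 4                       # r is the reduction ratio
x = rng.standard_normal((C, H, W))
w1, b1 = rng.standard_normal((C // r, C)), np.zeros(C // r)
w2, b2 = rng.standard_normal((C, C // r)), np.zeros(C)
y, weights = se_block(x, w1, b1, w2, b2)
print(y.shape, weights.round(3))
```

Because the weights are computed from global statistics of the whole feature map, the block adds only two small matrix products per feature map, which is why SE modules are usually cheap relative to the convolutions around them.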

3.4. Denoise Module
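The noise reduction block is described as being based on the Non-Local Means algorithm. As an illustration only, the sketch below applies classical non-local means to a feature map by treating the channel vector at each spatial position as that position's descriptor: every position is replaced by an average over all positions, weighted by exp(−‖x_i − x_j‖² / h²) and normalized to sum to one. Following the non-local block of Wang et al. [17], the averaged result passes through a 1 × 1 projection and is added back to the input as a residual. The Gaussian kernel, the bandwidth h, the single-position "patches", and the names `nl_means` and `denoise_block` are assumptions, not the authors' design.

```python
import numpy as np

def nl_means(x, h=1.0):
    """Non-local means over the spatial positions of a feature map x of shape (C, H, W)."""
    C, H, W = x.shape
    feats = x.reshape(C, H * W).T                        # (N, C): one descriptor per position
    # Pairwise squared Euclidean distances between position descriptors.
    sq = (feats ** 2).sum(axis=1)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * feats @ feats.T, 0.0)
    # Gaussian similarity weights, normalized so each row sums to 1
    # (subtracting the row max keeps the exponentials numerically stable).
    logits = -dist2 / h ** 2
    logits -= logits.max(axis=1, keepdims=True)
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    return (weights @ feats).T.reshape(C, H, W)

def denoise_block(x, w_proj, h=1.0):
    """Residual denoising block: x + 1x1 projection of the non-local means output.

    w_proj: (C, C) weights of a hypothetical 1x1 convolution.
    """
    y = nl_means(x, h)
    return x + np.einsum("oc,chw->ohw", w_proj, y)

# Toy check: a two-region feature map corrupted by Gaussian noise.
rng = np.random.default_rng(0)
clean = np.ones((4, 8, 8))
clean[:, :, 4:] = -1.0                                   # left half +1, right half -1
noisy = clean + 0.3 * rng.standard_normal(clean.shape)
smoothed = nl_means(noisy, h=1.0)
print(np.mean((noisy - clean) ** 2), np.mean((smoothed - clean) ** 2))
```

In the toy example the weights between the two regions are negligible, so each position is averaged mostly with positions from its own region, and the second printed error should be smaller than the first. The design choice that distinguishes non-local means from a plain blur is exactly this similarity weighting: averaging is restricted to positions whose features resemble each other, which preserves boundaries.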

4. Experimental Results and Analysis

4.1. Experimental Datasets

4.2. Experimental Environments

4.3. Experimental and Evaluation Criteria
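The results tables report AP@0.5 per class and mAP@0.5. Under the usual definition, detections of one class are sorted by confidence, a detection counts as a true positive if its IoU with a not-yet-matched ground-truth box is at least 0.5, AP is the area under the resulting precision-recall curve, and mAP is the mean of AP over classes. The sketch below uses all-point interpolation (precision made monotonically non-increasing, as in PASCAL VOC); evaluation toolkits differ in interpolation details, and the function names are hypothetical. The mAP column of the comparison table is consistent with this definition: for YOLOv5s, (90.26 + 85.76) / 2 = 88.01.

```python
def iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, ground_truth, iou_thr=0.5):
    """AP for one class.

    detections:   list of (image_id, score, box)
    ground_truth: dict mapping image_id -> list of boxes
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    tp = []
    # Process detections from most to least confident.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, gt_box in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt_box)
            if overlap > best:
                best, best_j = overlap, j
        if best_j >= 0 and best >= iou_thr and not matched[img][best_j]:
            matched[img][best_j] = True        # each ground-truth box is matched once
            tp.append(1)
        else:
            tp.append(0)                       # false positive (or duplicate)
    precisions, recalls, cum_tp = [], [], 0
    for k, t in enumerate(tp, start=1):
        cum_tp += t
        precisions.append(cum_tp / k)
        recalls.append(cum_tp / n_gt if n_gt else 0.0)
    # All-point interpolation: precision at recall r is the max precision at recall >= r.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_r = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_r)                 # area added where recall increases
        prev_r = r
    return ap

def mean_ap(per_class_ap):
    """mAP: unweighted mean of per-class AP values."""
    return sum(per_class_ap.values()) / len(per_class_ap)

print(round(mean_ap({"hat": 90.26, "person": 85.76}), 2))  # 88.01
```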

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

2. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

3. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

4. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

5. Jeong, J.; Park, H.; Kwak, N. Enhancement of SSD by concatenating feature maps for object detection. arXiv 2017, arXiv:1705.09587.

6. Li, Z.; Zhou, F. FSSD: Feature fusion single shot multibox detector. arXiv 2017, arXiv:1712.00960.

7. Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

8. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

9. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

10. Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9.

12. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

13. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

14. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

15. Chen, P.Y.; Chang, M.C.; Hsieh, J.W.; Chen, Y.S. Parallel residual bi-fusion feature pyramid network for accurate single-shot object detection. IEEE Trans. Image Process. 2021, 30, 9099–9111.

16. Liu, S.; Huang, D. Receptive field block net for accurate and fast object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 385–400.

17. Wang, X.; Girshick, R.; Gupta, A.; He, K. Non-local neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7794–7803.

18. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

19. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

20. Han, K.; Zeng, X. Deep learning-based workers safety helmet wearing detection on construction sites using multi-scale features. IEEE Access 2021, 10, 718–729.

21. Li, N.; Lyu, X.; Xu, S.; Wang, Y.; Wang, Y.; Gu, Y. Incorporate online hard example mining and multi-part combination into automatic safety helmet wearing detection. IEEE Access 2020, 9, 139536–139543.

22. Wu, F.; Jin, G.; Gao, M.; Zhiwei, H.; Yang, Y. Helmet detection based on improved YOLO V3 deep model. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, AB, Canada, 9–11 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 363–368.

23. Han, G.; Zhu, M.; Zhao, X.; Gao, H. Method based on the cross-layer attention mechanism and multiscale perception for safety helmet-wearing detection. Comput. Electr. Eng. 2021, 95, 107458.

24. Tai, W.; Wang, Z.; Li, W.; Cheng, J.; Hong, X. DAAM-YOLOV5: A Helmet Detection Algorithm Combined with Dynamic Anchor Box and Attention Mechanism. Electronics 2023, 12, 2094.

25. Murtagh, F. Multilayer perceptrons for classification and regression. Neurocomputing 1991, 2, 183–197.

26. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

27. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

| Category | Item | Configuration |
|---|---|---|
| Hardware | Graphics card | 3090 |
| Hardware | Graphics memory | 24 GB |
| Hardware | RAM | 32 GB |
| Hardware | Hard drive | 20 TB |
| Software | Operating system | Ubuntu 18.04.6 |
| Software | Development environment | CUDA 11.4 |
| Software | Programming language | Python 3.6 |
| Software | Framework | PyTorch |

| SENet | CBAM | SDH | mAP@0.5/% | t/ms |
|---|---|---|---|---|
| | | | 88.01% | 24 |

| ✓ | 92.43% | 27 | ||

| ✓ | 89.63% | 28 | ||

| ✓ | 90.47% | 26 | ||

| ✓ | ✓ | 92.60% | 32 | |

| ✓ | ✓ | 94.78% | 30 |

| Model | No Noise | σ = 15 | σ = 25 | σ = 35 | σ = 50 |
|---|---|---|---|---|---|
| YOLOv5s | 90.26% | 88.50% | 85.65% | 80.92% | 70.17% |
| YOLOv5s-N | 90.50% | 91.02% | 91.43% | 90.78% | 89.40% |
| FEFDNet | 94.89% | 94.33% | 94.57% | 93.82% | 91.55% |
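The noise-robustness table compares models at noise levels 15, 25, 35, and 50. The section does not describe how the noise was generated; a common convention in the denoising literature is additive zero-mean Gaussian noise whose standard deviation σ is given on the 0–255 pixel scale. The sketch below assumes that convention, and `add_gaussian_noise` is a hypothetical helper, not the authors' code.

```python
import numpy as np

def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma` (0-255 scale)
    to a uint8 image, then clip and round back to valid uint8 pixel values.
    Assumption: this noise model is illustrative, not taken from the paper."""
    noisy = image.astype(np.float64) + rng.normal(0.0, sigma, size=image.shape)
    return np.clip(noisy, 0, 255).round().astype(np.uint8)

rng = np.random.default_rng(0)
image = np.full((64, 64, 3), 128, dtype=np.uint8)   # flat gray placeholder image
for sigma in (15, 25, 35, 50):
    noisy = add_gaussian_noise(image, sigma, rng)
    # On a flat image the measured std is close to sigma (clipping reduces it slightly at high sigma).
    print(sigma, round(float(noisy.astype(np.float64).std()), 1))
```

Clipping to the valid pixel range means the effective noise is slightly weaker than σ at the highest levels, which is worth keeping in mind when comparing results across noise settings.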

| Algorithm | AP@0.5/% (Hat) | AP@0.5/% (Person) | mAP@0.5/% |
|---|---|---|---|
| Faster R-CNN | 82.67% | 84.55% | 83.61% |
| SSD300 | 77.62% | 74.84% | 76.23% |
| YOLOv3 | 81.78% | 79.68% | 80.73% |
| YOLOv4 | 87.87% | 85.93% | 86.90% |
| YOLOv5s | 90.26% | 85.76% | 88.01% |
| YOLOv6 [26] | 90.46% | 87.51% | 88.98% |
| YOLOv7 [27] | 90.67% | 88.76% | 89.71% |
| FEFDNet | 94.89% | 94.67% | 94.78% |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Qiu, Y.; Bai, H. FEFD-YOLOV5: A Helmet Detection Algorithm Combined with Feature Enhancement and Feature Denoising. Electronics 2023, 12, 2902. https://doi.org/10.3390/electronics12132902


Chicago/Turabian style: Zhang, Yiduo, Yi Qiu, and Huihui Bai. 2023. "FEFD-YOLOV5: A Helmet Detection Algorithm Combined with Feature Enhancement and Feature Denoising." Electronics 12, no. 13: 2902. https://doi.org/10.3390/electronics12132902
