MFDANet: Multi-Scale Feature Dual-Stream Aggregation Network for Salient Object Detection

Abstract

1. Introduction

- (1)

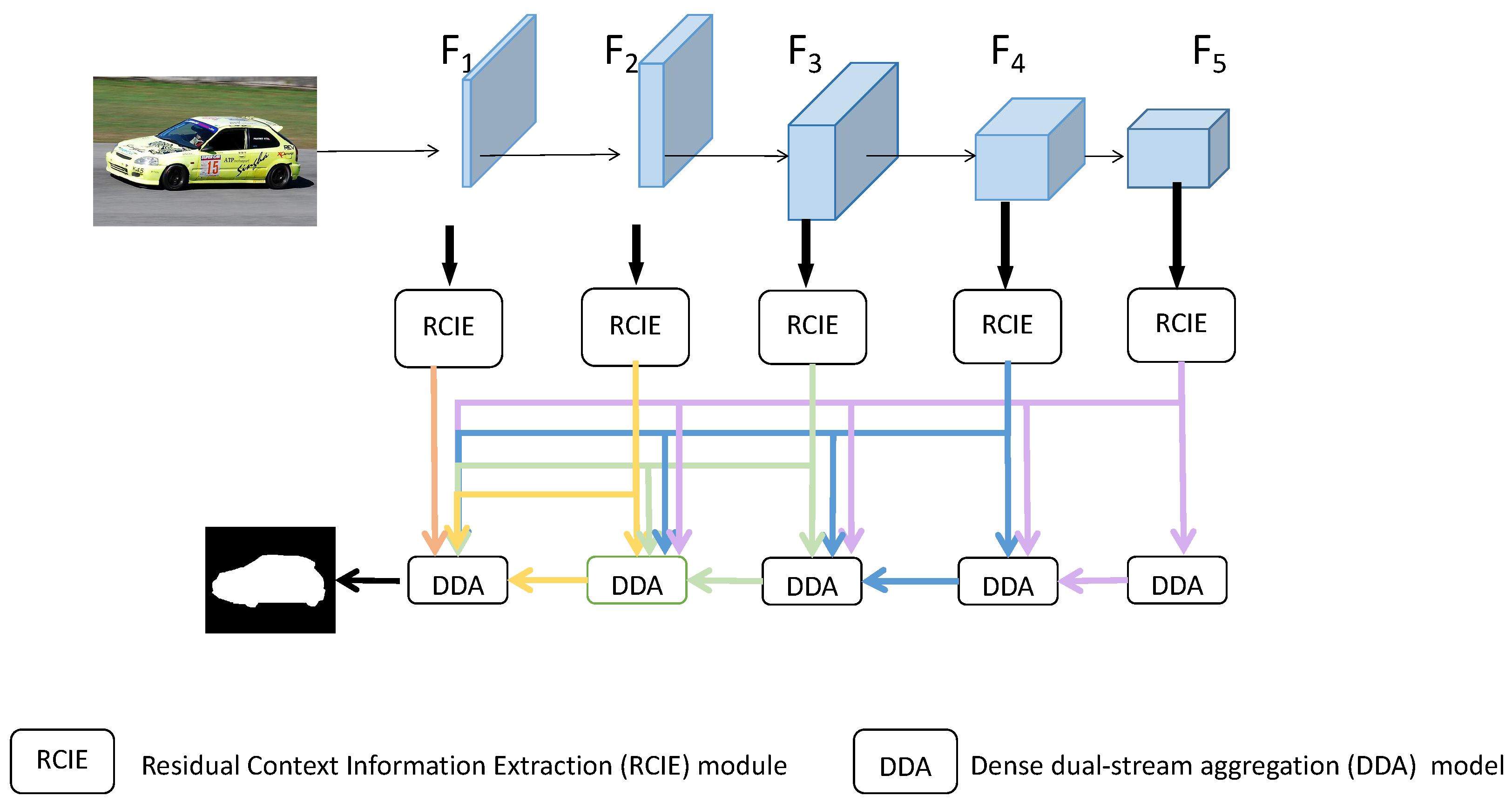

- The proposed MFDANet network effectively addresses the issues in SOD by incorporating the RCIE and DDA modules to capture multi-scale context information and aggregate different level features.

- (2)

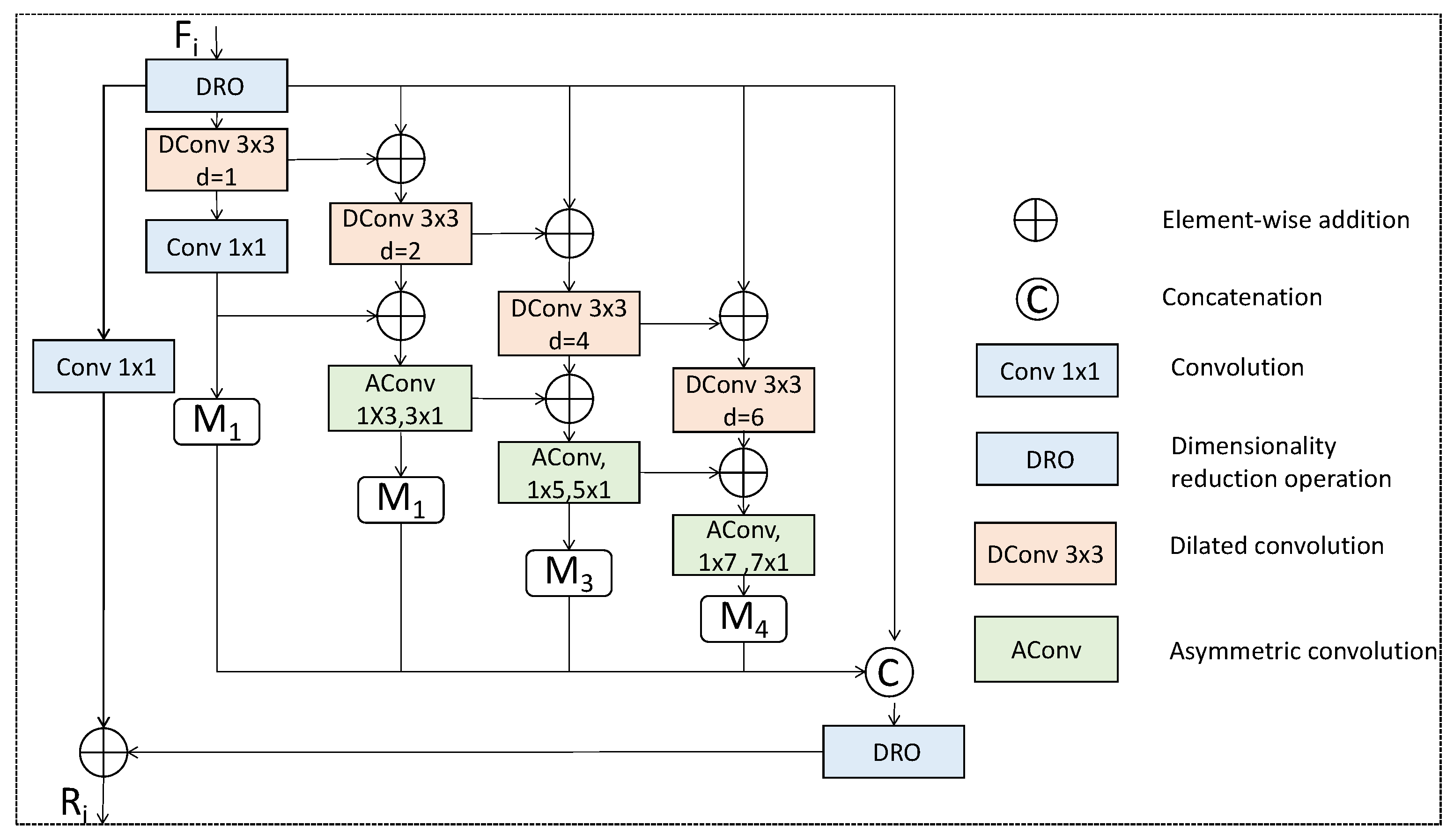

- The RCIE module introduces a novel approach to extracting context information using dilated and asymmetric convolutions, which improves the discriminative power of features.

- (3)

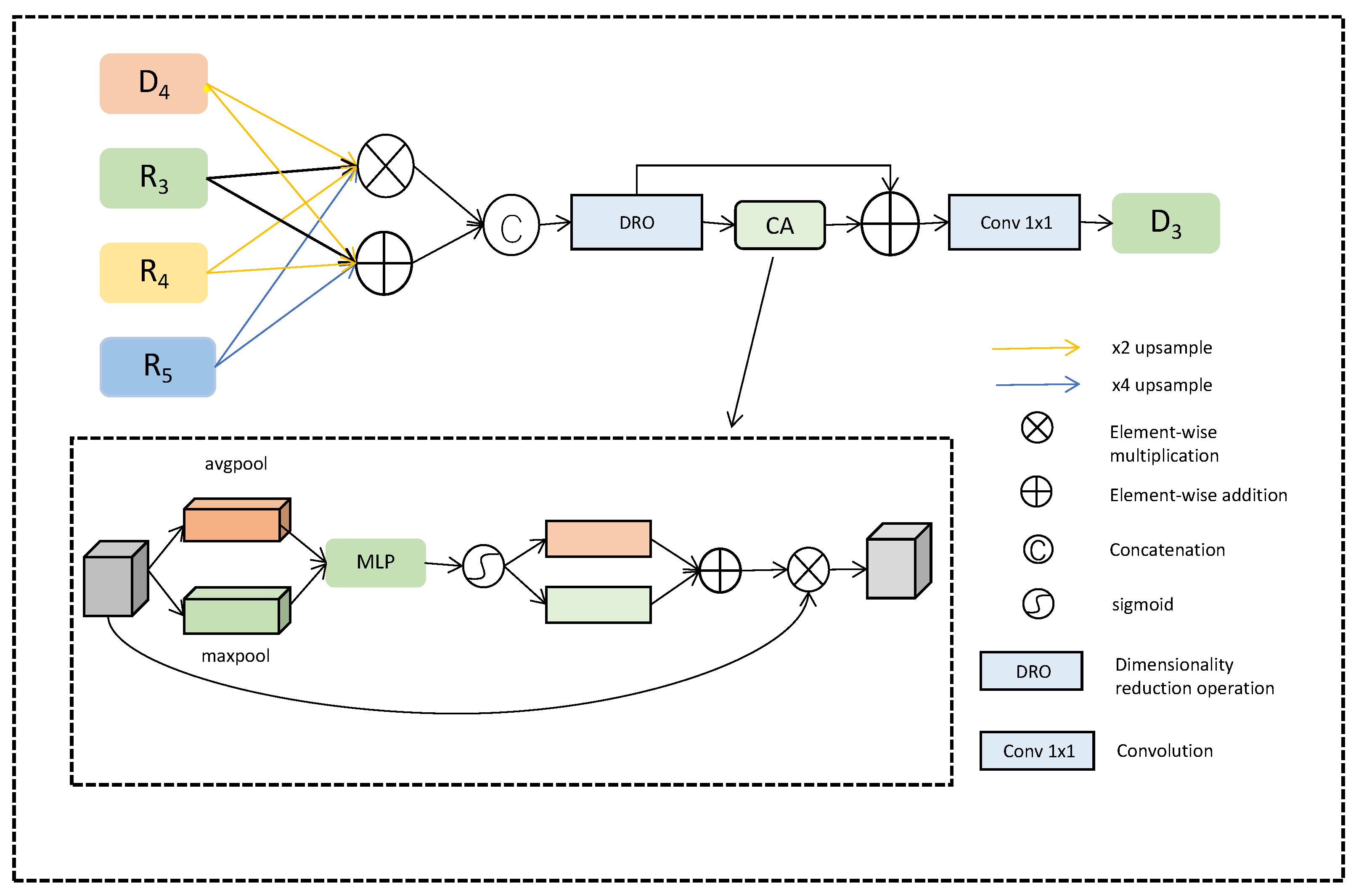

- The DDA module introduces two interaction modes for feature aggregation, allowing for information interactions between different level features and resulting in high-quality feature representations.

- (4)

- Extensive experiments on 5 benchmark datasets demonstrate the superiority of the MFDANet method against 15 state-of-the-art SOD methods across diverse evaluation metrics.
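The dilated and asymmetric convolutions named in contribution (2) can be illustrated with some simple arithmetic. The sketch below is not the RCIE module itself; it only shows, under assumed values (a 3-tap kernel, 64 channels, dilation rates 1, 2, and 4, none of which come from this text), how dilation widens the area a kernel covers and how a 1×k plus k×1 pair uses fewer weights than a single k×k kernel. The function names are hypothetical.

```python
def effective_kernel(k: int, dilation: int) -> int:
    """Spatial extent covered by a k-tap kernel with the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Number of weights in one convolution layer, ignoring the bias."""
    return kh * kw * c_in * c_out


# Assumed values for illustration only.
k = 3
channels = 64

# A dilated kernel covers a larger area with the same number of weights.
for rate in (1, 2, 4):
    print(f"dilation {rate}: covers {effective_kernel(k, rate)} pixels per side")

# An asymmetric pair (1 x k followed by k x 1) versus one square k x k kernel.
square = conv_weights(k, k, channels, channels)
asymmetric = (conv_weights(1, k, channels, channels)
              + conv_weights(k, 1, channels, channels))
print(f"square {k}x{k}: {square} weights, asymmetric pair: {asymmetric} weights")
```

With these assumed values the asymmetric pair needs two thirds of the weights of the square kernel, and the saving grows with k.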

2. Related Work

2.1. Extract Multi-Scale Context Information

2.2. Aggregate Multi-Level Features

3. Proposed MFDANet Method

3.1. Overall Architecture

3.2. Residual Context Information Extraction (RCIE) Module

3.3. Dense Dual-Stream Aggregation (DDA) Module

3.4. Loss Function

4. Experiment Analysis

4.1. Datasets

4.2. Experimental Details

4.3. Evaluation Metrics

4.4. Comparison with State-of-the-Art Experiments

4.4.1. Quantitative Comparison

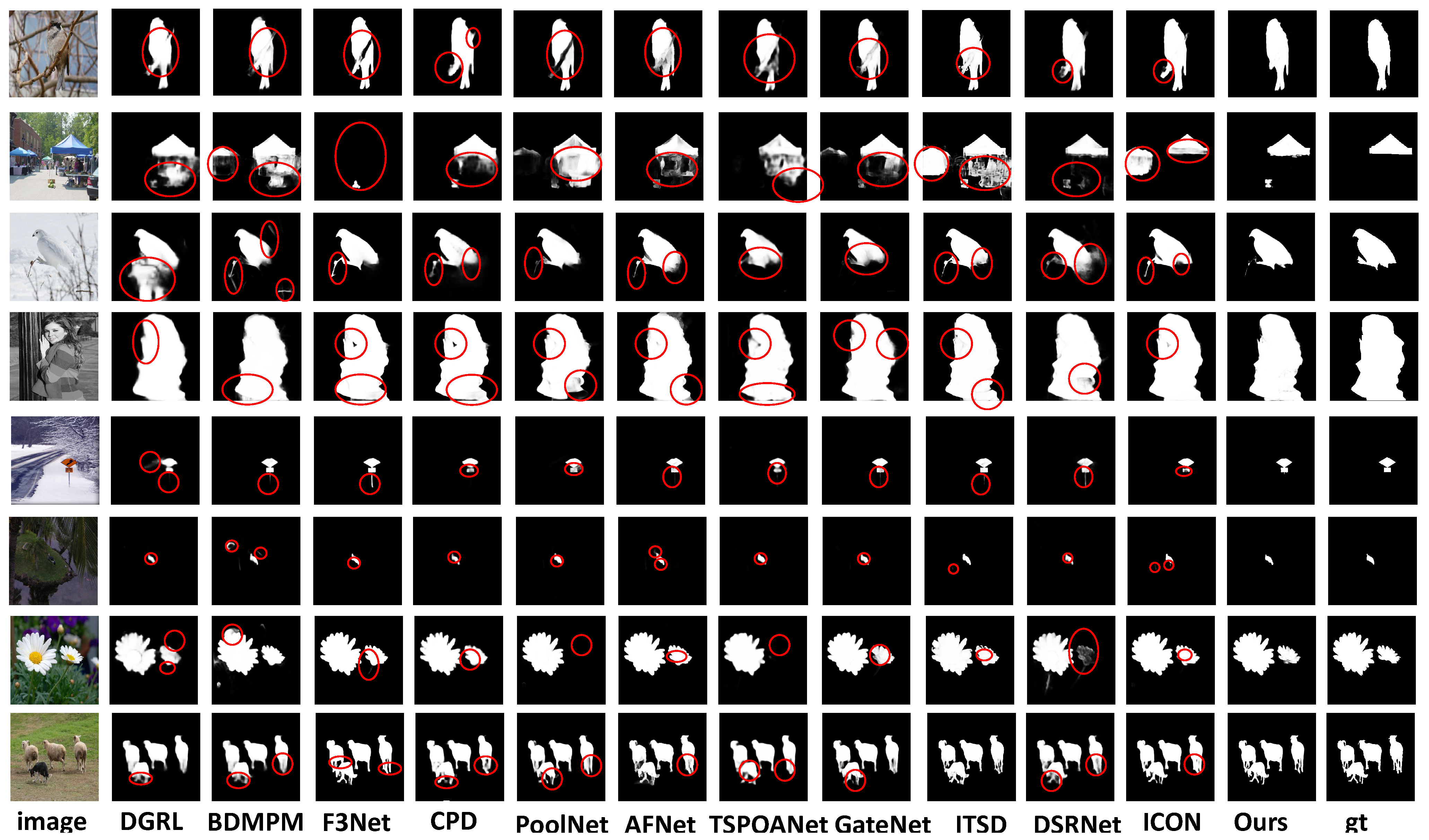

4.4.2. Qualitative Analysis

4.5. Ablation Experiment

4.5.1. Effectiveness of RCIE Module

4.5.2. Effectiveness of DDA Module

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Rutishauser, U.; Walther, D.; Koch, C.; Perona, P. Is Bottom-Up Attention Useful for Object Recognition? In Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2004), with CD-ROM, Washington, DC, USA, 27 June–2 July 2004; IEEE Computer Society: Los Alamitos, CA, USA, 2004; pp. 37–44.

- [2] Wang, W.; Shen, J.; Sun, H.; Shao, L. Video Co-Saliency Guided Co-Segmentation. IEEE Trans. Circuits Syst. Video Technol. 2018, 28, 1727–1736.

- [3] Craye, C.; Filliat, D.; Goudou, J. Environment exploration for object-based visual saliency learning. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation, ICRA 2016, Stockholm, Sweden, 16–21 May 2016; Kragic, D., Bicchi, A., Luca, A.D., Eds.; IEEE: Toulouse, France, 2016; pp. 2303–2309.

- [4] He, J.; Feng, J.; Liu, X.; Cheng, T.; Lin, T.; Chung, H.; Chang, S. Mobile product search with Bag of Hash Bits and boundary reranking. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE Computer Society: Los Alamitos, CA, USA, 2012; pp. 3005–3012.

- [5] Cheng, M.; Mitra, N.J.; Huang, X.; Torr, P.H.S.; Hu, S. Global Contrast Based Salient Region Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 569–582.

- [6] Yang, C.; Zhang, L.; Lu, H.; Ruan, X.; Yang, M. Saliency Detection via Graph-Based Manifold Ranking. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE Computer Society: Los Alamitos, CA, USA, 2013; pp. 3166–3173.

- [7] Jiang, H.; Wang, J.; Yuan, Z.; Wu, Y.; Zheng, N.; Li, S. Salient Object Detection: A Discriminative Regional Feature Integration Approach. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE Computer Society: Los Alamitos, CA, USA, 2013; pp. 2083–2090.

- [8] Han, J.; Zhang, D.; Hu, X.; Guo, L.; Ren, J.; Wu, F. Background Prior-Based Salient Object Detection via Deep Reconstruction Residual. IEEE Trans. Circuits Syst. Video Technol. 2015, 25, 1309–1321.

- [9] Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Los Alamitos, CA, USA, 2015; pp. 3431–3440.

- [10] Wang, T.; Zhang, L.; Wang, S.; Lu, H.; Yang, G.; Ruan, X.; Borji, A. Detect Globally, Refine Locally: A Novel Approach to Saliency Detection. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation/IEEE Computer Society: Washington, DC, USA, 2018; pp. 3127–3135.

- [11] Liu, S.; Huang, D.; Wang, Y. Receptive Field Block Net for Accurate and Fast Object Detection. In Lecture Notes in Computer Science, Proceedings of the Computer Vision—ECCV 2018—15th European Conference, Munich, Germany, 8–14 September 2018; Proceedings, Part XI; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Springer: New York, NY, USA, 2018; Volume 11215, pp. 404–419.

- [12] Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaïane, O.R.; Jägersand, M. U2-Net: Going deeper with nested U-structure for salient object detection. Pattern Recognit. 2020, 106, 107404.

- [13] Zhao, X.; Pang, Y.; Zhang, L.; Lu, H.; Zhang, L. Suppress and Balance: A Simple Gated Network for Salient Object Detection. In Lecture Notes in Computer Science, Proceedings of the Computer Vision—ECCV 2020—16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part II; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Springer: New York, NY, USA, 2020; Volume 12347, pp. 35–51.

- [14] Wu, Z.; Su, L.; Huang, Q. Cascaded Partial Decoder for Fast and Accurate Salient Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; Computer Vision Foundation/IEEE: Washington, DC, USA, 2019; pp. 3907–3916.

- [15] Liu, N.; Han, J.; Yang, M. PiCANet: Learning Pixel-Wise Contextual Attention for Saliency Detection. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation/IEEE Computer Society: Washington, DC, USA, 2018; pp. 3089–3098.

- [16] Wei, J.; Wang, S.; Huang, Q. F3Net: Fusion, Feedback and Focus for Salient Object Detection. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 12321–12328.

- [17] Zhao, J.; Liu, J.; Fan, D.; Cao, Y.; Yang, J.; Cheng, M. EGNet: Edge Guidance Network for Salient Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Toulouse, France, 2019; pp. 8778–8787.

- [18] Wang, L.; Lu, H.; Ruan, X.; Yang, M. Deep networks for saliency detection via local estimation and global search. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Los Alamitos, CA, USA, 2015; pp. 3183–3192.

- [19] Li, J.; Pan, Z.; Liu, Q.; Wang, Z. Stacked U-Shape Network with Channel-Wise Attention for Salient Object Detection. IEEE Trans. Multim. 2021, 23, 1397–1409.

- [20] Xu, B.; Liang, H.; Liang, R.; Chen, P. Locate Globally, Segment Locally: A Progressive Architecture with Knowledge Review Network for Salient Object Detection. In Proceedings of the Thirty-Fifth AAAI Conference on Artificial Intelligence, AAAI 2021, Thirty-Third Conference on Innovative Applications of Artificial Intelligence, IAAI 2021, The Eleventh Symposium on Educational Advances in Artificial Intelligence, EAAI 2021, Virtual Event, 2–9 February 2021; AAAI Press: Menlo Park, CA, USA, 2021; pp. 3004–3012.

- [21] Liu, Y.; Zhang, Q.; Zhang, D.; Han, J. Employing Deep Part-Object Relationships for Salient Object Detection. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, ICCV 2019, Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Toulouse, France, 2019; pp. 1232–1241.

- [22] Liu, J.; Hou, Q.; Cheng, M.; Feng, J.; Jiang, J. A Simple Pooling-Based Design for Real-Time Salient Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; Computer Vision Foundation/IEEE: Washington, DC, USA, 2019; pp. 3917–3926.

- [23] Zhang, L.; Dai, J.; Lu, H.; He, Y.; Wang, G. A Bi-Directional Message Passing Model for Salient Object Detection. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2018, Salt Lake City, UT, USA, 18–22 June 2018; Computer Vision Foundation/IEEE Computer Society: Los Alamitos, CA, USA, 2018; pp. 1741–1750.

- [24] de Boer, P.; Kroese, D.P.; Mannor, S.; Rubinstein, R.Y. A Tutorial on the Cross-Entropy Method. Ann. Oper. Res. 2005, 134, 19–67.

- [25] Máttyus, G.; Luo, W.; Urtasun, R. DeepRoadMapper: Extracting Road Topology from Aerial Images. In Proceedings of the IEEE International Conference on Computer Vision, ICCV 2017, Venice, Italy, 22–29 October 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 3458–3466.

- [26] Yan, Q.; Xu, L.; Shi, J.; Jia, J. Hierarchical Saliency Detection. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 1155–1162.

- [27] Li, Y.; Hou, X.; Koch, C.; Rehg, J.M.; Yuille, A.L. The Secrets of Salient Object Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2014, Columbus, OH, USA, 23–28 June 2014; IEEE Computer Society: Washington, DC, USA, 2014; pp. 280–287.

- [28] Li, G.; Yu, Y. Visual saliency based on multiscale deep features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2015, Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Washington, DC, USA, 2015; pp. 5455–5463.

- [29] Wang, L.; Lu, H.; Wang, Y.; Feng, M.; Wang, D.; Yin, B.; Ruan, X. Learning to Detect Salient Objects with Image-Level Supervision. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 3796–3805.

- [30] Feng, M.; Lu, H.; Ding, E. Attentive Feedback Network for Boundary-Aware Salient Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2019, Long Beach, CA, USA, 16–20 June 2019; Computer Vision Foundation/IEEE: Washington, DC, USA, 2019; pp. 1623–1632.

- [31] Mohammadi, S.; Noori, M.; Bahri, A.; Majelan, S.G.; Havaei, M. CAGNet: Content-Aware Guidance for Salient Object Detection. Pattern Recognit. 2020, 103, 107303.

- [32] Zhou, H.; Xie, X.; Lai, J.; Chen, Z.; Yang, L. Interactive Two-Stream Decoder for Accurate and Fast Saliency Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, CVPR 2020, Seattle, WA, USA, 13–19 June 2020; Computer Vision Foundation/IEEE: Washington, DC, USA, 2020; pp. 9138–9147.

- [33] Chen, Z.; Xu, Q.; Cong, R.; Huang, Q. Global Context-Aware Progressive Aggregation Network for Salient Object Detection. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence, AAAI 2020, The Thirty-Second Innovative Applications of Artificial Intelligence Conference, IAAI 2020, The Tenth AAAI Symposium on Educational Advances in Artificial Intelligence, EAAI 2020, New York, NY, USA, 7–12 February 2020; AAAI Press: Menlo Park, CA, USA, 2020; pp. 10599–10606.

- [34] Wang, L.; Chen, R.; Zhu, L.; Xie, H.; Li, X. Deep Sub-Region Network for Salient Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2021, 31, 728–741.

- [35] Zhuge, M.; Fan, D.; Liu, N.; Zhang, D.; Xu, D.; Shao, L. Salient Object Detection via Integrity Learning. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 3738–3752.

| Dataset | Metric | DGRL [10] | BDMPM [23] | EGNet [17] | F3Net [16] | CPD [14] | PoolNet [22] | AFNet [30] | TSPOANet [21] | GateNet [13] | CAGNet [31] | ITSD [32] | GCPANet [33] | SUCA [19] | DSRNet [34] | ICON [35] | Ours |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Publish | | CVPR 2018 | CVPR 2018 | ICCV 2019 | AAAI 2020 | CVPR 2019 | CVPR 2019 | CVPR 2019 | ICCV 2019 | ECCV 2020 | PR 2020 | CVPR 2020 | AAAI 2020 | TMM 2021 | TCSVT 2021 | TPAMI 2023 | |
| ECSSD (1000) | MAE ↓ | 0.046 | 0.045 | 0.037 | 0.033 | 0.037 | 0.039 | 0.042 | 0.046 | 0.040 | 0.037 | 0.035 | 0.035 | 0.040 | 0.039 | 0.032 | 0.032 |
| | | 0.893 | 0.869 | 0.920 | 0.925 | 0.917 | 0.915 | 0.908 | 0.900 | 0.916 | 0.921 | 0.895 | 0.919 | 0.916 | 0.910 | 0.928 | 0.934 |
| | | 0.871 | 0.871 | 0.903 | 0.912 | 0.898 | 0.896 | 0.886 | 0.876 | 0.894 | 0.902 | 0.911 | 0.903 | 0.894 | 0.891 | 0.918 | 0.921 |
| | | 0.935 | 0.916 | 0.947 | 0.946 | 0.950 | 0.945 | 0.941 | 0.935 | 0.943 | 0.944 | 0.932 | 0.952 | 0.943 | 0.942 | 0.954 | 0.953 |
| PASCAL-S (850) | MAE ↓ | 0.077 | 0.073 | 0.074 | 0.061 | 0.071 | 0.075 | 0.070 | 0.077 | 0.067 | 0.066 | 0.066 | 0.062 | 0.067 | 0.067 | 0.064 | 0.064 |
| | | 0.794 | 0.758 | 0.817 | 0.835 | 0.820 | 0.815 | 0.815 | 0.804 | 0.819 | 0.833 | 0.785 | 0.827 | 0.819 | 0.819 | 0.833 | 0.838 |
| | | 0.772 | 0.774 | 0.795 | 0.816 | 0.794 | 0.793 | 0.792 | 0.775 | 0.797 | 0.808 | 0.812 | 0.808 | 0.797 | 0.800 | 0.818 | 0.820 |
| | | 0.863 | 0.840 | 0.877 | 0.895 | 0.887 | 0.876 | 0.885 | 0.871 | 0.884 | 0.896 | 0.863 | 0.897 | 0.884 | 0.883 | 0.893 | 0.896 |
| HKU-IS (4447) | MAE ↓ | 0.041 | 0.039 | 0.031 | 0.028 | 0.033 | 0.032 | 0.036 | 0.038 | 0.033 | 0.030 | 0.031 | 0.031 | 0.033 | 0.035 | 0.029 | 0.027 |
| | | 0.875 | 0.871 | 0.902 | 0.910 | 0.895 | 0.900 | 0.888 | 0.882 | 0.899 | 0.909 | 0.899 | 0.898 | 0.899 | 0.893 | 0.910 | 0.923 |
| | | 0.851 | 0.859 | 0.886 | 0.900 | 0.879 | 0.883 | 0.869 | 0.862 | 0.880 | 0.893 | 0.894 | 0.889 | 0.880 | 0.873 | 0.902 | 0.909 |
| | | 0.943 | 0.938 | 0.955 | 0.958 | 0.952 | 0.955 | 0.948 | 0.946 | 0.953 | 0.950 | 0.953 | 0.956 | 0.953 | 0.951 | 0.959 | 0.963 |
| DUT-OMRON (5168) | MAE ↓ | 0.066 | 0.064 | 0.053 | 0.053 | 0.056 | 0.056 | 0.057 | 0.061 | 0.055 | 0.054 | 0.061 | 0.056 | - | 0.061 | 0.057 | 0.052 |
| | | 0.711 | 0.692 | 0.756 | 0.766 | 0.747 | 0.739 | 0.739 | 0.716 | 0.746 | 0.752 | 0.756 | 0.748 | - | 0.727 | 0.772 | 0.783 |
| | | 0.688 | 0.681 | 0.738 | 0.747 | 0.719 | 0.721 | 0.717 | 0.697 | 0.729 | 0.728 | 0.750 | 0.734 | - | 0.711 | 0.761 | 0.765 |
| | | 0.847 | 0.839 | 0.874 | 0.876 | 0.873 | 0.864 | 0.860 | 0.850 | 0.868 | 0.862 | 0.867 | 0.869 | - | 0.855 | 0.879 | 0.886 |
| DUTS-TE (5019) | MAE ↓ | 0.054 | 0.049 | 0.039 | 0.035 | 0.043 | 0.040 | 0.046 | 0.049 | 0.040 | 0.040 | 0.041 | 0.038 | 0.044 | 0.043 | 0.037 | 0.036 |
| | | 0.755 | 0.746 | 0.815 | 0.840 | 0.805 | 0.809 | 0.793 | 0.776 | 0.807 | 0.837 | 0.804 | 0.817 | 0.802 | 0.791 | 0.838 | 0.859 |
| | | 0.748 | 0.761 | 0.816 | 0.835 | 0.795 | 0.807 | 0.785 | 0.767 | 0.809 | 0.817 | 0.824 | 0.821 | 0.803 | 0.794 | 0.837 | 0.846 |
| | | 0.873 | 0.863 | 0.907 | 0.918 | 0.904 | 0.904 | 0.895 | 0.885 | 0.903 | 0.913 | 0.898 | 0.913 | 0.903 | 0.892 | 0.919 | 0.927 |
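Mean absolute error (MAE), one of the metrics reported in the result tables and marked ↓ because lower is better, is commonly computed as the average per-pixel absolute difference between a predicted saliency map and its ground-truth mask. The following is a minimal sketch under that usual definition; the function name and the tiny 2 × 2 maps are illustrative assumptions, not data from the experiments.

```python
def mean_absolute_error(pred, gt):
    """Average absolute difference between two equally sized 2D maps.

    pred and gt are lists of rows holding values in [0, 1].
    """
    if len(pred) != len(gt) or any(len(p) != len(g) for p, g in zip(pred, gt)):
        raise ValueError("prediction and ground truth must have the same shape")
    total = sum(abs(p - g)
                for pred_row, gt_row in zip(pred, gt)
                for p, g in zip(pred_row, gt_row))
    count = sum(len(row) for row in pred)
    return total / count


# Hypothetical 2 x 2 saliency map and binary ground-truth mask.
prediction = [[0.9, 0.1],
              [0.8, 0.2]]
ground_truth = [[1.0, 0.0],
                [1.0, 0.0]]

print(round(mean_absolute_error(prediction, ground_truth), 3))
```

A dataset-level score would then be the mean of this value over all test images, which is how such table entries are typically aggregated.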

| Datasets | Metric | Res + FPN | Res + RFB + FPN | Res + DDA | Res + RCIE + FPN | Res + RCIE + DDA (add) | Res + RCIE + DDA (mul) | Res + RCIE + DDA (cat) | Res + RCIE + DDA |
|---|---|---|---|---|---|---|---|---|---|
| ECSSD (1000) | MAE ↓ | 0.055 | 0.050 | 0.040 | 0.046 | 0.033 | 0.036 | 0.031 | 0.032 |
| | | 0.873 | 0.879 | 0.903 | 0.887 | 0.929 | 0.922 | 0.931 | 0.934 |
| | | 0.856 | 0.870 | 0.893 | 0.883 | 0.916 | 0.906 | 0.919 | 0.921 |
| | | 0.917 | 0.920 | 0.944 | 0.925 | 0.955 | 0.948 | 0.956 | 0.953 |
| PASCAL-S (850) | MAE ↓ | 0.078 | 0.075 | 0.067 | 0.074 | 0.064 | 0.064 | 0.065 | 0.064 |
| | | 0.772 | 0.769 | 0.816 | 0.771 | 0.836 | 0.834 | 0.837 | 0.838 |
| | | 0.764 | 0.777 | 0.797 | 0.785 | 0.817 | 0.813 | 0.818 | 0.820 |
| | | 0.861 | 0.858 | 0.894 | 0.086 | 0.902 | 0.897 | 0.900 | 0.895 |
| HKU-IS (4447) | MAE ↓ | 0.047 | 0.043 | 0.032 | 0.039 | 0.027 | 0.030 | 0.027 | 0.027 |
| | | 0.878 | 0.886 | 0.889 | 0.899 | 0.914 | 0.911 | 0.917 | 0.923 |
| | | 0.832 | 0.848 | 0.884 | 0.868 | 0.905 | 0.899 | 0.908 | 0.909 |
| | | 0.943 | 0.946 | 0.954 | 0.954 | 0.961 | 0.958 | 0.962 | 0.963 |
| DUT-OMRON (5168) | MAE ↓ | 0.065 | 0.062 | 0.056 | 0.057 | 0.052 | 0.054 | 0.051 | 0.052 |
| | | 0.711 | 0.724 | 0.739 | 0.744 | 0.771 | 0.757 | 0.777 | 0.783 |
| | | 0.669 | 0.689 | 0.724 | 0.717 | 0.754 | 0.737 | 0.762 | 0.765 |
| | | 0.852 | 0.859 | 0.869 | 0.867 | 0.882 | 0.873 | 0.885 | 0.886 |
| DUTS-TE (5019) | MAE ↓ | 0.051 | 0.047 | 0.040 | 0.044 | 0.036 | 0.037 | 0.036 | 0.036 |
| | | 0.769 | 0.781 | 0.811 | 0.793 | 0.845 | 0.843 | 0.850 | 0.859 |
| | | 0.748 | 0.775 | 0.811 | 0.794 | 0.837 | 0.834 | 0.824 | 0.846 |
| | | 0.889 | 0.895 | 0.913 | 0.897 | 0.928 | 0.926 | 0.928 | 0.927 |
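The ablation columns labelled (add), (mul), and (cat) presumably correspond to the three standard ways of fusing two feature maps: element-wise addition, element-wise multiplication, and channel concatenation. That reading is an assumption based on the column labels alone. The sketch below shows the three operations on the channel vectors of a single spatial position; the function names and values are hypothetical and do not reproduce the DDA module.

```python
def fuse_add(a, b):
    """Element-wise addition: output keeps the input channel count."""
    return [x + y for x, y in zip(a, b)]


def fuse_mul(a, b):
    """Element-wise multiplication: output keeps the input channel count."""
    return [x * y for x, y in zip(a, b)]


def fuse_cat(a, b):
    """Channel concatenation: output channel count is the sum of both inputs."""
    return list(a) + list(b)


# Hypothetical channel vectors of a low-level and a high-level feature
# at the same spatial position (both already resized to a common resolution).
low_level = [1.0, 2.0, 3.0]
high_level = [0.5, 0.0, 2.0]

print(fuse_add(low_level, high_level))
print(fuse_mul(low_level, high_level))
print(fuse_cat(low_level, high_level))
```

Addition and multiplication keep the channel count fixed, while concatenation doubles it here and usually needs a following convolution to reduce the channels again.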

| Method | Input Size | Param (M) | Inference Speed (FPS) | Model Memory (M) |
|---|---|---|---|---|
| EGNet | 384 × 384 | 108.07 | 9 | 437 |
| PoolNet | 384 × 384 | 68.26 | 17 | 410 |
| AFNet | 224 × 224 | 37.11 | 26 | 128 |
| GateNet | 384 × 384 | 128.63 | 30 | 503 |
| DSRNet | 400 × 400 | 75.29 | 15 | 290 |
| Ours | 320 × 320 | 32.51 | 25 | 123 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ge, B.; Pei, J.; Xia, C.; Wu, T. MFDANet: Multi-Scale Feature Dual-Stream Aggregation Network for Salient Object Detection. Electronics 2023, 12, 2880. https://doi.org/10.3390/electronics12132880
