3.1. Situation Awareness Structure

Endsley [23] classifies situation awareness into three levels: element extraction, evaluation, and prediction. In the element extraction step, relevant environmental factors are perceived to understand the driver’s current state; this paper focuses on head features, as part of facial features [24,25], that influence the driving state. The evaluation step assesses the current state, including the driver’s fatigue level and present situation. Throughout the driver’s journey, different data types and information are combined to determine the degree of goal attainment and to assess the current outcome. The prediction step projects the potential future state from the current state [26], estimating the driver’s future driving risk. In summary, this paper studies the past and present situation levels to identify potential future trends and to issue warnings for high-risk fatigue states. Situation awareness technology is also commonly applied to network security warning, where the network threat level is determined from network environment information [27]. The process of fatigue state situation awareness is depicted in Figure 6.

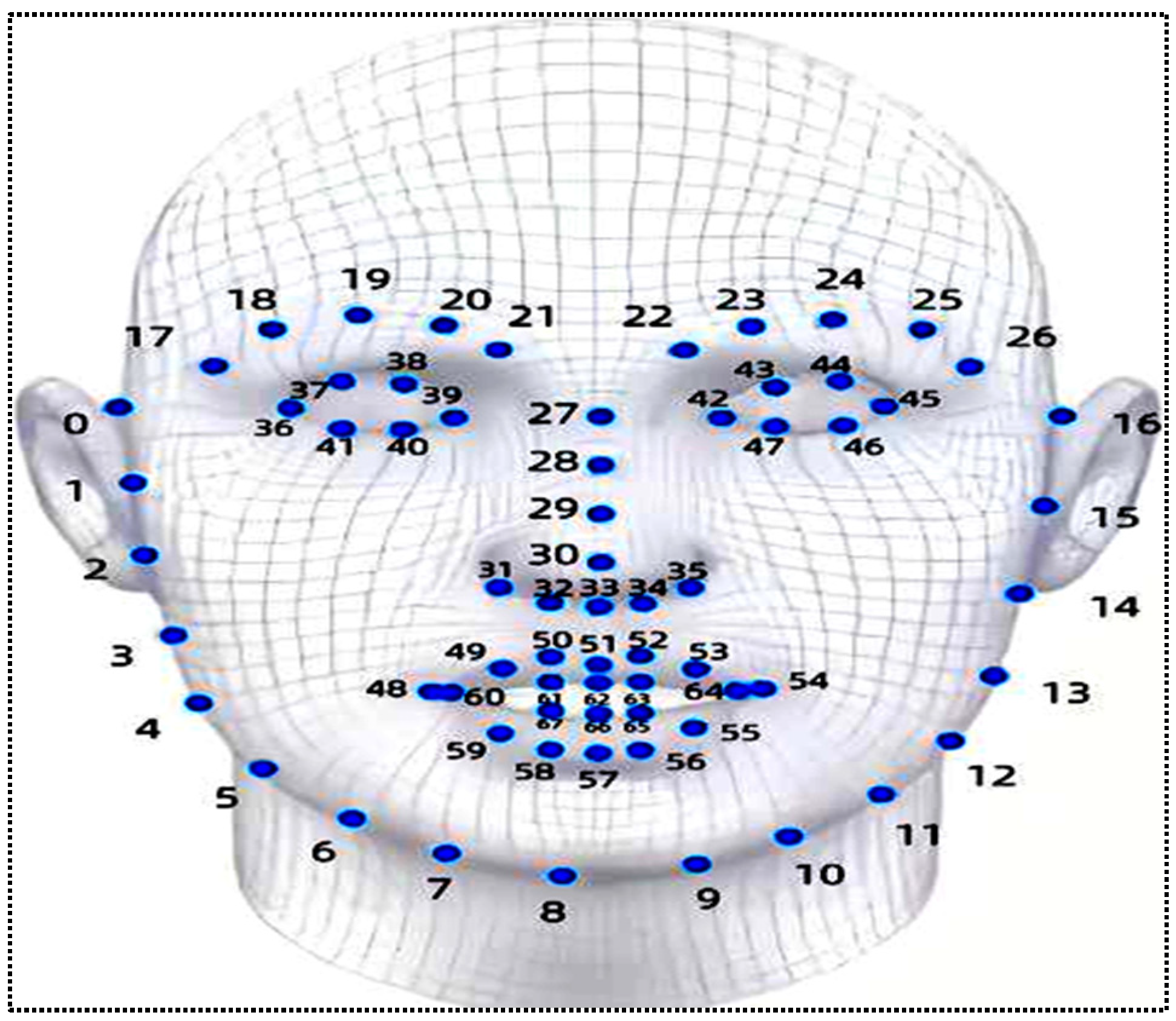

The fatigue state of a driver is determined by calculating parameters related to eye fatigue, mouth fatigue, and head posture. First, the fatigue levels and the evaluation indicators for the fatigue parameters are defined, and each indicator is modeled mathematically and quantified. An AHP-fuzzy comprehensive evaluation algorithm is then proposed to assess the driver’s fatigue state. Subsequently, future situation levels are predicted from past and present states, with warnings issued for higher-level states. To accomplish this, a situation prediction method based on a GRU (gated recurrent unit) optimized by the improved marine predator algorithm (WMPA) is proposed.

3.3. Situation Evaluation

The combination of hierarchical analysis and fuzzy evaluation is referred to as the fuzzy hierarchical analysis method. The model establishment is depicted in Figure 8. The hierarchical analysis method is used to calculate the weights of the evaluation indicators that influence the driver’s fatigue status level. The fuzzy comprehensive evaluation method is then used to construct a fuzzy evaluation matrix and conduct a comprehensive evaluation. Finally, the driver’s fatigue status level is determined based on the principles of fuzzy theory.

3.3.1. Weight Calculation by the Hierarchical Analysis Method

The judgment matrix serves as the criterion for determining the element values of the next layer. The values assigned to the matrix elements reflect a subjective evaluation, grounded in objective reality, of the relative importance of different factors. Typically, the values 1, 3, 5, 7, and 9 and their reciprocals are used as benchmark values, with 2, 4, 6, and 8 as intermediate values between adjacent judgments. The labeling follows the 1–9 scale method proposed by Saaty [28], as shown in Table 4.

- 2. Calculate the maximum eigenvalue

The maximum eigenvalue and eigenvector of each judgment matrix are calculated using the sum-product method. The eigenvectors are then normalized to obtain the weight ranking, and the overall weight ranking is determined from the weight rankings of each level. The specific calculation steps are as follows:

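Step 1: Normalize each column of the judgment matrix P to obtain the normalized matrix $\bar{P}$:

$$\bar{P}_{ij} = \frac{P_{ij}}{\sum_{k=1}^{n} P_{kj}}$$

In the formula, $\bar{P}_{ij}$ is the normalized value of the element in row i, column j; $P_{ij}$ is the element in row i, column j; and $\sum_{k=1}^{n} P_{kj}$ is the sum of the elements in column j.

Step 2: Sum the elements of the normalized judgment matrix $\bar{P}$ row by row:

$$\bar{W}_i = \sum_{j=1}^{n} \bar{P}_{ij}$$

In the formula, $\bar{W}_i$ is the sum of the ith row of the normalized judgment matrix.

Step 3: Normalize the vector $\bar{W}$:

$$W_i = \frac{\bar{W}_i}{\sum_{k=1}^{n} \bar{W}_k}$$

The obtained $W = [W_1, W_2, \ldots, W_n]^T$ is the eigenvector.

Step 4: Calculate the maximum eigenvalue of the matrix, $\lambda_{max}$:

$$\lambda_{max} = \sum_{i=1}^{n} \frac{(PW)_i}{n W_i}$$

In the formula, $(PW)_i$ is the ith component of the product of the matrix P and the vector W.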
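Steps 1 to 4 can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors’ implementation: the function name `ahp_weights` and the 3×3 matrix `P_demo` are hypothetical, and the demo matrix is chosen to be perfectly consistent so that the result can be checked by hand.

```python
import numpy as np

def ahp_weights(P):
    """Sum-product method: return (weights W, lambda_max) for a judgment matrix P."""
    P = np.asarray(P, dtype=float)
    n = P.shape[0]
    P_bar = P / P.sum(axis=0)                 # Step 1: normalize each column
    W_bar = P_bar.sum(axis=1)                 # Step 2: sum each row
    W = W_bar / W_bar.sum()                   # Step 3: normalize the row sums
    lambda_max = np.sum((P @ W) / (n * W))    # Step 4: maximum eigenvalue estimate
    return W, lambda_max

# Hypothetical, perfectly consistent judgment matrix (not the paper's data).
P_demo = [[1.0, 2.0, 4.0],
          [1 / 2, 1.0, 2.0],
          [1 / 4, 1 / 2, 1.0]]
W_demo, lam_demo = ahp_weights(P_demo)
print(W_demo, lam_demo)
```

For a perfectly consistent matrix such as `P_demo`, the estimated maximum eigenvalue equals the matrix order, which is the baseline the consistency check compares against.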

- 3. Consistency check

Consistency check of the weight vectors: because objective phenomena are complex and the understanding of them may be biased, the consistency and randomness of the judgment matrix must be assessed using the maximum eigenvalue associated with the eigenvector derived from it. The checking steps are as follows:

Step 1: Calculate the consistency index (CI) of the judgment matrix, which was obtained from a survey of experts in 30 fields: CI = (λmax − n)/(n − 1), where n is the order of the judgment matrix.

Step 2: Calculate the average random consistency index (RI): The RI is obtained by averaging the maximum eigenvalues of multiple randomly generated judgment matrices. Table 5 presents the values of RI for dimensions ranging from 1 to 10.

Step 3: Calculate the consistency ratio (CR): CR = CI/RI. When CR < 0.1, the judgment matrix is considered to have acceptable consistency.
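The consistency check reduces to two divisions, as the sketch below shows. The function name `consistency_ratio` and the inputs are illustrative assumptions: the RI value must be looked up by the caller (for example from Table 5), and the `lambda_max` used here is a made-up number, not a result from this paper.

```python
def consistency_ratio(lambda_max, n, ri):
    """Return (CI, CR) for a judgment matrix of order n with random index ri."""
    ci = (lambda_max - n) / (n - 1)
    # RI is 0 for orders 1 and 2, where a reciprocal matrix is always consistent.
    cr = 0.0 if ri == 0 else ci / ri
    return ci, cr

# Illustrative inputs only (hypothetical lambda_max).
ci_demo, cr_demo = consistency_ratio(lambda_max=4.12, n=4, ri=0.89)
is_consistent = cr_demo < 0.1
print(ci_demo, cr_demo, is_consistent)
```

If the check fails (CR ≥ 0.1), the usual remedy is to revise the pairwise judgments rather than to adjust the weights.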

- 4. Calculation results

This paper formed an expert group consisting of 20 industry experts with senior titles or above in the field of object detection, as well as associate professors from universities. The selection process involved careful consideration of their expertise and industry experience. To ensure a comprehensive evaluation, multiple rounds of questionnaires were distributed to collect the experts’ opinions on the evaluation indices of driver fatigue status. The questionnaire was designed to assess the rationality of the preliminary selection of indicators based on the experts’ professional knowledge and industry experience. Moreover, by comparing the indicators pairwise, the relative importance between two indicators was determined using the 1–9 scale method. This approach helped capture the difference in significance between various indicators. Subsequently, the collected questionnaires underwent rigorous screening and elimination to exclude any that did not meet the requirements. The remaining data were analyzed and processed to obtain the judgment matrix, represented by matrix (12). This matrix reflects the comprehensive opinions and evaluations of the expert group.

The calculation steps are as follows:

Step 1: Normalize each column vector of the pairwise comparison judgment matrix P to obtain $\bar{P}$, as represented by matrix (13).

Step 2: Sum the rows of the normalized matrix to obtain $\bar{W}$ = [1.4450 2.1261 0.4241 0.3051]T. This vector is then normalized to give the eigenvector.

Step 3: Calculate the eigenvector (weights), as shown in Equation (14).

Step 4: Calculate the maximum eigenvalue of the judgment matrix, as shown in Equation (15).

Step 5: To check the consistency of the judgment matrix, calculate the consistency index, CI = (λmax − n)/(n − 1).

For a 4th-order matrix, the average random consistency index (RI) is 0.89. The random consistency ratio is then calculated as CR = CI/RI.

Given that the consistency is acceptable, the weights are summarized in Table 6.

3.3.2. Fuzzy Comprehensive Evaluation

Establish a set of evaluation factors, U = {u1, u2, u3, u4}.

- 2. Define the evaluation grades

The evaluation grades are represented by V = {V1, V2, V3, …, Vm}. This paper uses five grades to evaluate the driver’s fatigue status level: low risk (level Ⅰ), lower risk (level Ⅱ), general risk (level Ⅲ), higher risk (level Ⅳ), and high risk (level Ⅴ).

- 3. Construct the fuzzy comprehensive evaluation matrix

By determining the membership degree of each lower-level evaluation indicator at each hierarchy level with respect to the evaluation grades, the fuzzy matrix R is obtained, as represented by matrix (16):

- 4. Calculate the evaluation factor weight vector

Let W = {a1, a2, a3, …, am}, where ai (the indicator weight) represents the membership degree of ui (an element of the evaluation factor set) in the fuzzy subset of the evaluated object. The evaluation factor weight vector is the weight vector obtained by hierarchical analysis in the previous section. According to the different membership values, this paper obtains the summary table of the membership matrix.

- 5. Calculate the comprehensive evaluation results

Combine the evaluation factor weight vector W of the corresponding level with the fuzzy comprehensive evaluation matrix R to calculate the fuzzy comprehensive evaluation result vector B, as shown in Equation (17):

$$B = W \circ R = (b_1, b_2, \ldots, b_m)$$

Here ∘ denotes the fuzzy composition operator. Based on the maximum membership principle, the overall effect can be evaluated and analyzed.
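The evaluation step can be illustrated with a short NumPy sketch. Several things here are assumptions rather than the paper’s choices: the composition operator is taken to be the weighted-average (matrix product) operator, and the weight vector `W_demo` and membership matrix `R_demo` are invented values for four factors and five grades.

```python
import numpy as np

def fuzzy_evaluate(W, R):
    """Return (result vector B, 1-based grade index) by maximum membership."""
    W = np.asarray(W, dtype=float)
    R = np.asarray(R, dtype=float)
    B = W @ R                      # weighted-average composition (assumed operator)
    B = B / B.sum()                # normalize so the memberships sum to 1
    grade = int(np.argmax(B)) + 1  # maximum membership principle
    return B, grade

# Hypothetical weights (4 factors) and membership matrix (4 factors x 5 grades).
W_demo = [0.4, 0.3, 0.2, 0.1]
R_demo = [[0.1, 0.2, 0.4, 0.2, 0.1],
          [0.0, 0.1, 0.3, 0.4, 0.2],
          [0.2, 0.3, 0.3, 0.1, 0.1],
          [0.5, 0.3, 0.1, 0.1, 0.0]]
B_demo, grade_demo = fuzzy_evaluate(W_demo, R_demo)
print(B_demo, grade_demo)
```

With these invented inputs the largest membership falls on the third grade, so the sample would be rated general risk (level Ⅲ). Other operators, such as max-min composition, can give a different ranking for the same inputs.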

3.4. Situation Prediction

After obtaining the situation evaluation result, which represents the “state” in the situation, it is crucial to predict its future evolution, referred to as the “trend” in the situation. Time plays a significant role in situation awareness because the situation evolves over time, so predicting future situations relies on capturing the long-term dependence of the situation on time. To address this issue, this section proposes a situation prediction method based on a GRU optimized by the improved marine predator algorithm [29,30] to predict drivers’ fatigue levels. The GRU (gated recurrent unit) [31,32] is a variant of the LSTM (long short-term memory) network [33,34,35] with a simpler structure and comparable performance.

It is currently a popular choice among recurrent neural networks. Neural networks are widely used for situation prediction in related fields because they can incorporate past and present data and handle temporal continuity. Recurrent neural networks, such as GRU and LSTM, are well suited to such problems because they can store historical information. However, a plain RNN with saturating activation functions such as the sigmoid suffers from the vanishing gradient problem: gradients diminish as they are propagated back through time, making it difficult to learn long-term dependencies. In response to this challenge, the long short-term memory (LSTM) network, a model capable of retaining information over long periods, was introduced.

The LSTM unit is more complex, consisting of a cell state, a forget gate, an input gate, and an output gate. This architecture enables LSTM to handle long-term dependencies effectively. Its unit structure is shown in Figure 9. The calculation steps of the LSTM unit, on which the GRU used in the proposed prediction method builds, are as follows:

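Step 1: The forget gate decides which information is discarded. It applies the sigmoid function to the previous hidden state and the current input and outputs $f_t$:

$$f_t = \sigma(w_f \cdot [h_{t-1}, x_t] + b_f)$$

where $w_f$ is the weight, $h_{t-1}$ is the information of the previous moment, $x_t$ is the current input, and $b_f$ is the offset.

Step 2: The previous hidden state $h_{t-1}$ and the current input $x_t$ are passed through the sigmoid function to obtain the input gate output $i_t$. The tanh activation function is then used to obtain the candidate update information $\tilde{c}_t$:

$$i_t = \sigma(w_i \cdot [h_{t-1}, x_t] + b_i)$$

$$\tilde{c}_t = \tanh(w_c \cdot [h_{t-1}, x_t] + b_c)$$

where $w_i$ is the weight of the input gate, $b_i$ is the input gate offset, $w_c$ is the weight of the new cell information, and $b_c$ is its offset.

Step 3: Update the old cell state. The Hadamard product of $c_{t-1}$ and $f_t$ determines which old cell information is discarded by the forget gate, and the candidate information selected by the input gate is added to obtain the new cell state $c_t$:

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t$$

Step 4: The hidden state of the previous moment $h_{t-1}$ and the current input $x_t$ are combined and processed by the sigmoid function to obtain the output gate value $o_t$, which scales the new cell state to give the hidden state $h_t$:

$$o_t = \sigma(w_o \cdot [h_{t-1}, x_t] + b_o)$$

$$h_t = o_t \odot \tanh(c_t)$$

where $w_o$ and $b_o$ are the weight and offset of the output gate.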
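The four steps above correspond to one forward step of a standard LSTM cell, sketched here in NumPy. The layout is an assumption for illustration: each weight matrix acts on the concatenation of the previous hidden state and the current input, the parameter dictionary keys (`w_f`, `b_f`, and so on) are hypothetical names, and the random weights stand in for trained values.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM step; each weight acts on the concatenation [h_prev, x_t]."""
    z = np.concatenate([h_prev, x_t])
    f_t = sigmoid(params["w_f"] @ z + params["b_f"])      # Step 1: forget gate
    i_t = sigmoid(params["w_i"] @ z + params["b_i"])      # Step 2: input gate
    c_tilde = np.tanh(params["w_c"] @ z + params["b_c"])  # Step 2: candidate cell
    c_t = f_t * c_prev + i_t * c_tilde                    # Step 3: new cell state
    o_t = sigmoid(params["w_o"] @ z + params["b_o"])      # Step 4: output gate
    h_t = o_t * np.tanh(c_t)                              # new hidden state
    return h_t, c_t

# Hypothetical sizes and random (untrained) parameters, for illustration only.
rng = np.random.default_rng(0)
hidden, inputs = 4, 3
params = {f"w_{g}": rng.normal(scale=0.1, size=(hidden, hidden + inputs)) for g in "fico"}
params.update({f"b_{g}": np.zeros(hidden) for g in "fico"})
h_t, c_t = lstm_step(rng.normal(size=inputs), np.zeros(hidden), np.zeros(hidden), params)
print(h_t, c_t)
```

The cell needs four weight matrices and four offsets per step, which is the computational cost that motivates the lighter GRU.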

Because the LSTM module is complex, its computation is cumbersome and takes longer. To address this issue, Cho et al. proposed the gated recurrent unit (GRU).

Figure 10 illustrates the GRU architecture, which follows the same computational principles as LSTM but uses fewer gates, reducing the calculation time. GRU achieves performance comparable to LSTM with fewer parameters. It combines the forget and input gates of LSTM into a single update gate. The update gate output is obtained by applying the sigmoid function to a linear transformation of the current input and the hidden state of the previous moment, as shown in the following equation.

“wz” corresponds to the weight parameter of the update gate, while “uz” represents the current weight parameter of the update gate.

The reset gate decides how much past information is passed on. As with the update gate, its linear transformation is passed through the sigmoid function to give the activation value.

“wr” stands for the recurrent weight matrix of the reset gate, while “wh” represents the weight matrix for the candidate hidden state.

h̃t is the candidate information retained through the reset gate. After the update gate is applied, the updated state ht is obtained.

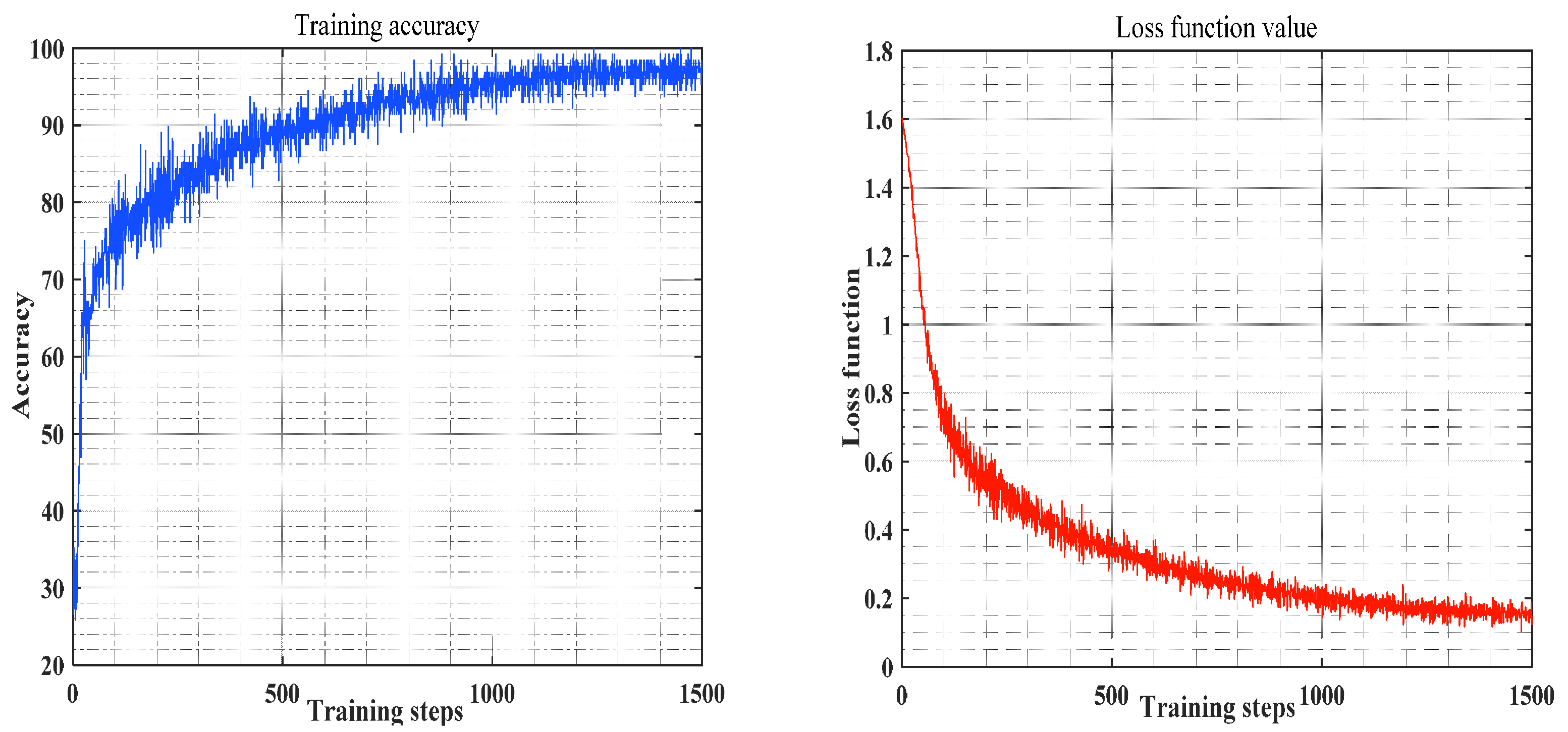
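A single GRU step can be sketched as follows. Because the gate equations are not reproduced in this text, the sketch assumes the commonly used formulation without bias terms, in which `w_*` matrices act on the current input and `u_*` matrices act on the previous hidden state; the names `u_r` and `u_h`, the sizes, and the random weights are hypothetical, and the paper’s own notation may assign the roles of w and u differently.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x_t, h_prev, p):
    """One GRU step (assumed standard form, no bias terms)."""
    z_t = sigmoid(p["w_z"] @ x_t + p["u_z"] @ h_prev)              # update gate
    r_t = sigmoid(p["w_r"] @ x_t + p["u_r"] @ h_prev)              # reset gate
    h_tilde = np.tanh(p["w_h"] @ x_t + p["u_h"] @ (r_t * h_prev))  # candidate state
    return (1.0 - z_t) * h_prev + z_t * h_tilde                    # updated state

# Hypothetical sizes and random (untrained) parameters, for illustration only.
rng = np.random.default_rng(0)
hidden, inputs = 4, 3
p = {}
for g in "zrh":
    p[f"w_{g}"] = rng.normal(scale=0.1, size=(hidden, inputs))
    p[f"u_{g}"] = rng.normal(scale=0.1, size=(hidden, hidden))

h = np.zeros(hidden)
for x_t in rng.normal(size=(5, inputs)):  # a made-up 5-step feature sequence
    h = gru_step(x_t, h, p)
print(h)
```

Compared with the LSTM cell, this step keeps no separate cell state and uses three weight pairs instead of four, which is where the reduction in parameters comes from.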

GRU networks are therefore better suited to problems that require prediction from long data sequences. However, adjusting the parameter values of a GRU network is tedious and complex, and manual tuning may not reach the optimal state. An algorithm that optimizes the weight values to improve learning efficiency is therefore necessary. Figure 11 illustrates the process of optimizing the GRU neural network prediction algorithm using the improved marine predator algorithm.

Improved Marine Predator Algorithm (WMPA)

Marine animals behave similarly to many other animals in nature: they adopt a random walk strategy, in which the next position or state of predators and prey depends on their current position or state. The relative speed of predators and prey has been observed to determine their respective predation strategies. Switching between the Lévy flight strategy and the Brownian motion strategy allows the best search strategy to be selected for optimization purposes.

In the process of updating the GRU network, a dual adaptive switching probability and adaptive inertia weights are introduced into the MPA algorithm to speed up convergence and improve solution accuracy, prevent the marine predator algorithm from falling into local optima, and improve global optimization performance. The specific improvements are as follows:

In this paper, adaptive learning factors are used to solve this problem: an adaptive switching probability replaces the fixed probability P, as shown in Formula (25). The resulting diversity of random walks improves the local and global search capabilities of the algorithm.

“Max_iter” represents the maximum number of iterations, and “Iter” represents the current iteration. The value of the adaptive probability P changes over the course of the iterations, as illustrated in Figure 12.

From Figure 12, it can be observed that the adaptive probability varies with the number of iterations. In the early iterations, the algorithm focuses more on global search, which enhances its performance. As the number of iterations increases and the algorithm approaches the global optimal solution, it shifts toward more local exploration. This adjustment aims to improve the accuracy of the solution and the overall search efficiency of the algorithm.

Inspired by various adaptive weight particle swarm algorithms [36,37], this paper enhances the local and global search capabilities of marine predators by using dual adaptive inertia weights. Specifically, weight W1 is designed to enhance the global search capability, while weight W2 is intended to improve the local search capability. These weights are given by Formula (26):

The variable “rand” represents a random number within the range [0, 1]. The dual inertial weights W1 and W2 have specific ranges: W1 ranges from 0 to 0.5, and W2 ranges from 0.5 to 1.

- 2. Structure of the WMPA algorithm

First, initialize the population:

$$X_0 = X_{min} + rand \otimes (X_{max} - X_{min})$$

Xmax and Xmin in the formula are the maximum and minimum values of the variables, and rand is a uniform random vector defined in [0, 1]. Create the “Elite” matrix (28) and the “Prey” matrix (29):

In the equation above, X represents the top predator vector, which is replicated n times to construct the Elite matrix. “n” denotes the population size, “d” represents the dimension of the search space, and “Xi,j” indicates the jth dimension of the ith prey.
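The initialization and the construction of the Elite matrix can be sketched as below. This follows the standard marine predator algorithm and assumes a minimization problem; the function names, the bounds, and the sphere fitness function are placeholders, since in the paper the fitness would come from the GRU prediction error.

```python
import numpy as np

def init_prey(n, d, x_min, x_max, rng):
    """Uniformly initialize n prey positions in d dimensions within the bounds."""
    return x_min + rng.random((n, d)) * (x_max - x_min)

def build_elite(prey, fitness):
    """Replicate the fittest prey (the top predator) n times to form the Elite matrix."""
    scores = np.array([fitness(x) for x in prey])
    top_predator = prey[np.argmin(scores)]      # assumption: lower fitness is better
    return np.tile(top_predator, (prey.shape[0], 1))

# Hypothetical problem: 6 agents, 2 dimensions, sphere function as fitness.
rng = np.random.default_rng(0)
x_min, x_max = np.array([-5.0, -5.0]), np.array([5.0, 5.0])
sphere = lambda x: float(np.sum(x ** 2))
prey = init_prey(6, 2, x_min, x_max, rng)
elite = build_elite(prey, sphere)
print(prey.shape, elite[0])
```

Both matrices have shape n × d, so the position updates in the later stages can be written as element-wise operations on whole matrices.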

The first stage of the improved marine predator algorithm (WMPA) applies when the predator is faster than the prey, and it employs an exploration strategy. This stage performs a global search within the solution space and covers the first third of the iterations, as represented by Equation (30).

In this equation, RB is a vector of random numbers drawn from a normal distribution, representing Brownian motion. P = 0.5 is a constant, and R is a vector of uniform random numbers in the range [0, 1].
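For orientation, the first-stage update of the standard (unmodified) marine predator algorithm can be sketched as follows. This is explicitly not the paper’s Equation (30), which is not reproduced here and may include the adaptive probability and inertia weights described earlier; the function name and the demo data are hypothetical.

```python
import numpy as np

def phase1_update(prey, elite, rng, P=0.5):
    """Standard MPA exploration step with Brownian motion (not the modified Eq. (30))."""
    R_B = rng.standard_normal(prey.shape)   # Brownian motion: normal random numbers
    R = rng.random(prey.shape)              # uniform random numbers in [0, 1)
    step = R_B * (elite - R_B * prey)       # step size of each prey
    return prey + P * R * step

# Hypothetical population of 6 agents in 2 dimensions.
rng = np.random.default_rng(0)
prey = rng.uniform(-5.0, 5.0, size=(6, 2))
elite = np.tile(prey[0], (6, 1))            # pretend the first agent is the top predator
new_prey = phase1_update(prey, elite, rng)
print(new_prey.shape)
```

In practice the updated positions are usually clipped back to the variable bounds before the fitness is re-evaluated.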

The second stage of improved marine predator algorithm (WMPA) occurs between 1/3 and 2/3 of the total number of iterations. This stage is divided into two parts, where half of the population is responsible for exploitation, and the other half is responsible for exploration. When Iter/Max_iter < 0.5, they are represented by Formulas (31) and (32), respectively. When Iter/Max_iter > 0.5, they are represented by Formulas (33) and (34), respectively.

When Iter/Max_iter < 0.5,

When Iter/Max_iter > 0.5,

In this stage, RL is a vector of random numbers drawn from the Lévy distribution, representing Lévy motion, and CF is the adaptive parameter that controls the step size of the predators’ movement.

The third stage of the improved marine predator algorithm (WMPA) focuses on exploitation. It aims to find the optimal solution within the solution space by utilizing the Lévy flight strategy of the predators. The specific process is described by Formula (35):

Vortex formation and the fish aggregating devices (FADs) effect play a crucial role in helping the algorithm escape from local optima. They are represented by Formula (36):

In the formula, U is a binary vector with elements of 0 and 1, r is a uniformly distributed random number in the range [0, 1], and the subscripts r1 and r2 denote random indices of the prey matrix.

- 3. Implementation steps of the WMPA algorithm

The steps of the WMPA algorithm are presented as pseudocode in Algorithm 1.

| Algorithm 1: Pseudocode of the WMPA algorithm |
| --- |
| 1. Initialize the search agent (prey) population, i = 1, …, n |
| 2. While the termination condition is not met: calculate the fitness, construct the Elite matrix, and perform memory saving |
| 3. if Iter < Max_iter/3 |
| 4. Update the position of the current search agent using Equation (30) |
| 5. else if Iter > Max_iter/3 && Iter < 2*Max_iter/3 |
| 6. For the first half of the group (i = 1, …, n/2) |
| 7. if (Iter/Max_iter > 0.5) |
| 8. Update the position of the current search agent using Equation (33) |
| 9. else Update the position of the current search agent using Equation (31) |
| 10. end |
| 11. For the other half of the group (i = n/2, …, n) |
| 12. if (Iter/Max_iter > 0.5) |
| 13. Update the position of the current search agent using Equation (34) |
| 14. else Update the position of the current search agent using Equation (32) |
| 15. end |
| 16. else |
| 17. Update the position of the current search agent using Equation (35) |
| 18. end(if) |
| 19. Use the FADs effect to complete memory saving and Elite updates, and update according to Formula (36) |
| 20. end(while) |
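The branching in Algorithm 1 can be made explicit with a small helper that returns which update equation applies to a given agent at a given iteration. The function name and the 0-based agent index are illustrative choices, and the sketch assumes that the boundary iterations (Iter equal to Max_iter/3 or to half of Max_iter) belong to the second stage and its earlier branch, which the pseudocode leaves open.

```python
def select_update_equation(it, max_iter, i, n):
    """Return the equation number applied to agent i (0-based) at iteration it."""
    if it < max_iter / 3:
        return 30                          # stage 1: exploration
    if it < 2 * max_iter / 3:              # stage 2: split population
        first_half = i < n // 2
        late = it / max_iter > 0.5
        if first_half:
            return 33 if late else 31
        return 34 if late else 32
    return 35                              # stage 3: exploitation

# Which update each of 10 agents would use at iteration 50 of 90.
schedule = [select_update_equation(50, 90, i, 10) for i in range(10)]
print(schedule)
```

The FADs update of Formula (36) is applied after this selection in every iteration, so it is not part of the branching.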