Dynamic Selection Slicing-Based Offloading Algorithm for In-Vehicle Tasks in Mobile Edge Computing

Abstract

1. Introduction

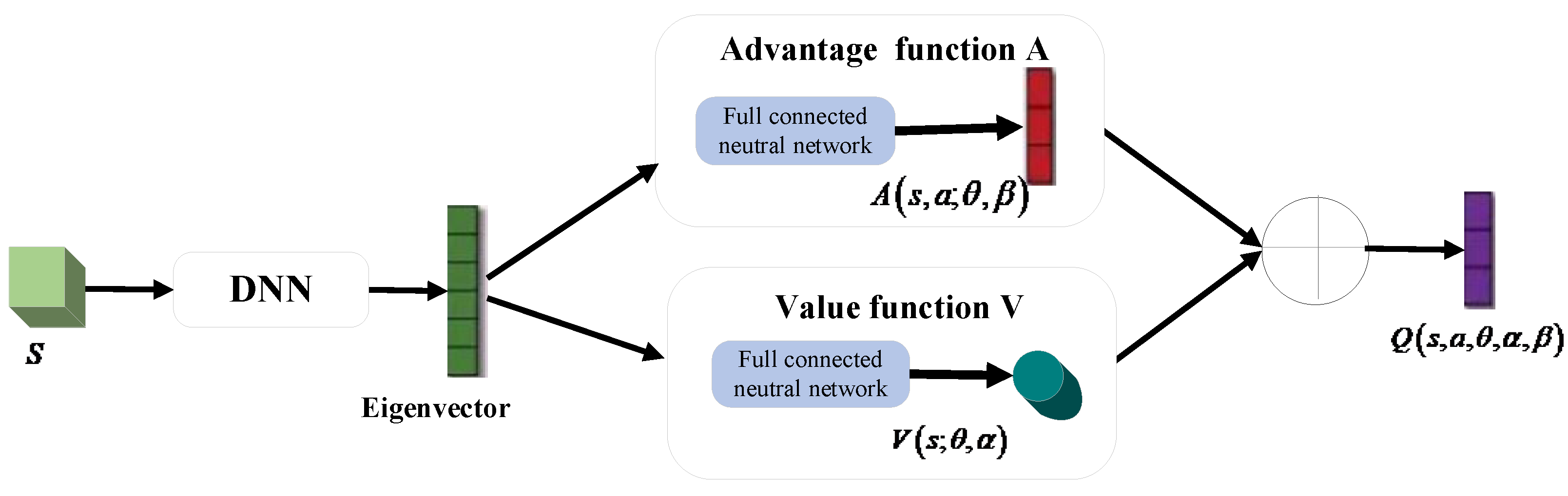

- The dynamic selection slicing-based offloading (DSSO) algorithm for vehicular tasks: To reduce the total cost of task offloading, this paper proposes a dynamic selection slicing-based offloading algorithm for in-vehicle tasks in MEC. The algorithm combines a Dueling Network with a Deep Q-Network (DQN), a deep reinforcement learning approach, to dynamically select the optimal slicing result and then update the optimal offloading policy within the effective interval.

- Performance evaluation: The results show that, compared with LOCAL, MINCO, and DJROM, the proposed DSSO algorithm improves the efficiency of multi-dimensional resource utilization and the task completion rate, and reduces the total cost of task offloading.

2. Related Work

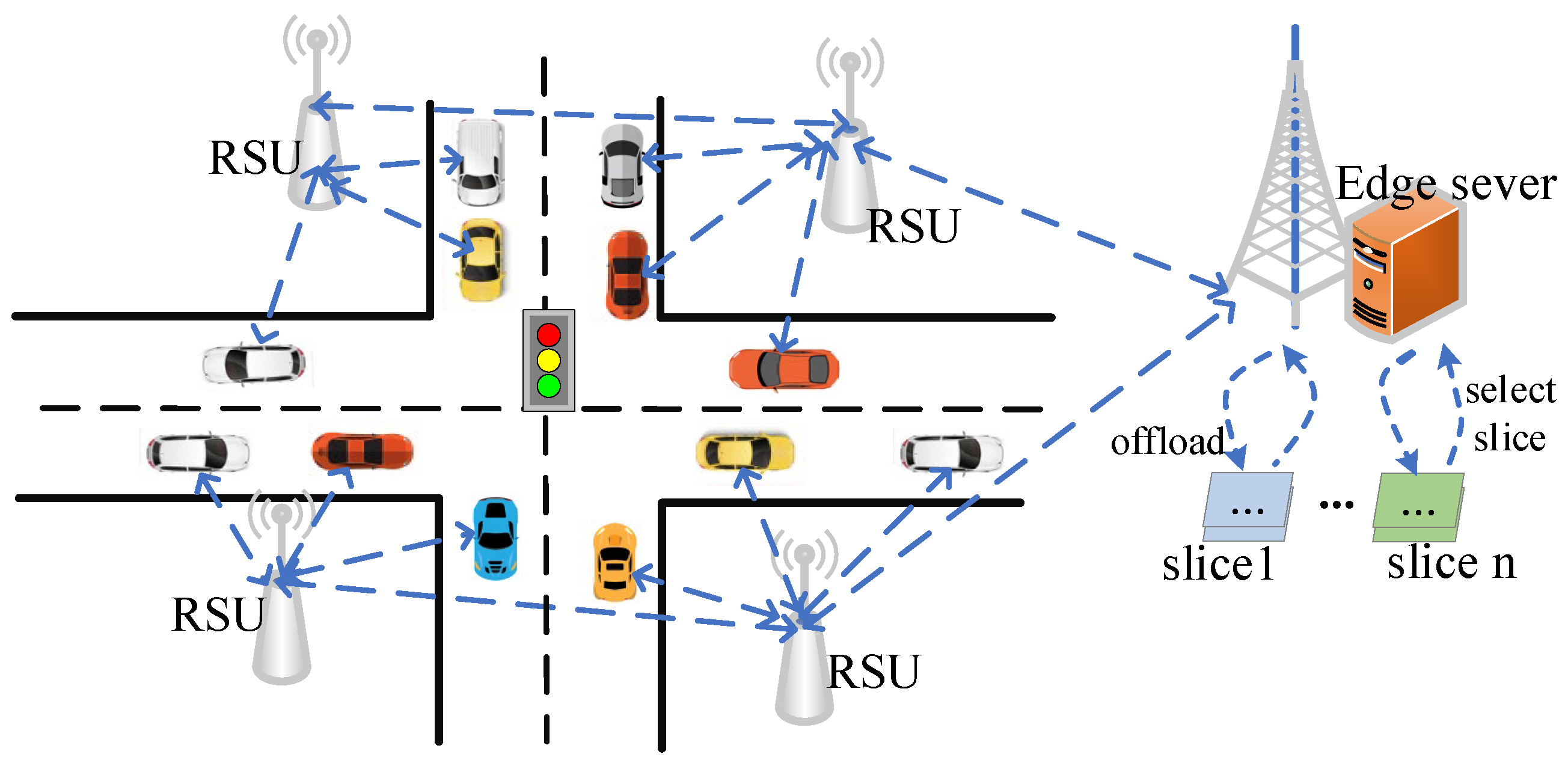

3. System Model and Optimization Goal

3.1. Communication Model

3.2. Offloading Model

- Local offload

- Offloading to the slice

- Optimization objectives

4. The Dynamic Selection Slice Offload Algorithm for In-Vehicle Task

4.1. Resource Slicing Based on Markovian Dynamic Adjustment

- State: The state space is the collection of all possible states the agent can be in. In this study, it includes the current status of network resources, such as the wireless channel, the input size, the various required resources, the deadline time, and the available CPU, as well as the current status of each slice and the vehicular location.

- Action: The action space is the set of actions available to the agent when it processes a vehicular task in a given time slot and state of the MEC-oriented vehicular network. The offloading decision is taken by the vehicle. To offload the vehicular task to an appropriate slice for execution, the action space is mapped to the set of slices within the edge server, so that each action corresponds to one slice; for example, the first action represents offloading the in-vehicle task to the first slice for execution.

- Reward: The reward gives the agent feedback on the action taken in a given state; its sign reflects whether the offloading decision made from the available state information is advantageous in MEC. A negative reward indicates that dynamic adjustment is needed: the agent must interact with the current in-vehicle network in time to obtain a larger reward. The reward value also serves as an indicator for evaluating the quality of the offloading strategy.
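The state, action, and reward described above can be sketched as a small Python data model. This is an illustrative sketch only: the class names `SliceState` and `OffloadState`, all field names, and the reward definition (cost of local execution minus cost of offloading, so that a positive value means offloading is advantageous) are assumptions and not the paper's definitions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SliceState:
    """Hypothetical snapshot of one edge-server slice."""
    free_cpu: float      # computing resources still available in the slice
    queued_tasks: int    # tasks currently waiting in the slice


@dataclass
class OffloadState:
    """Hypothetical agent state mirroring the quantities listed in the text."""
    channel_gain: float        # wireless channel condition
    input_size_mb: float       # task input size
    required_cpu: float        # computing resources the task needs
    deadline_s: float          # task deadline
    vehicle_cpu_free: float    # CPU available on the vehicle
    vehicle_position: float    # vehicular location (1-D for simplicity)
    slices: List[SliceState]   # current status of each slice


def action_space(state: OffloadState) -> List[int]:
    """One action per slice: action k means 'offload the task to slice k'."""
    return list(range(len(state.slices)))


def reward(local_cost: float, offload_cost: float) -> float:
    """Assumed reward: positive when offloading is cheaper than local execution."""
    return local_cost - offload_cost


# Illustrative values only.
example_state = OffloadState(
    channel_gain=0.8, input_size_mb=4.0, required_cpu=2.0, deadline_s=1.5,
    vehicle_cpu_free=1.0, vehicle_position=120.0,
    slices=[SliceState(4.0, 0), SliceState(2.0, 3), SliceState(8.0, 1)],
)
actions = action_space(example_state)
```

With this sign convention, a negative reward flags a decision that is worse than local execution, which matches the text's reading of a negative reward as a trigger for dynamic adjustment.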

4.2. Dueling DQN-Based Dynamic Selection Slice Offload Algorithm
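For orientation, a dueling network estimates a state value V(s) and a per-action advantage A(s, a) separately and recombines them as Q(s, a) = V(s) + A(s, a) - mean of A(s, ·). The sketch below shows only that standard recombination step and a greedy slice choice in plain Python; the function names and the example numbers are illustrative assumptions, and the neural network that would produce V and A is omitted.

```python
from typing import List


def dueling_q_values(state_value: float, advantages: List[float]) -> List[float]:
    """Combine V(s) and A(s, a) into Q(s, a) using the mean-advantage baseline."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]


def select_slice(q_values: List[float]) -> int:
    """Greedy choice: index of the slice with the largest Q-value."""
    return max(range(len(q_values)), key=lambda k: q_values[k])


# Illustrative numbers only: one state, three candidate slices.
q = dueling_q_values(state_value=2.0, advantages=[1.0, 4.0, 1.0])
best = select_slice(q)
```

Subtracting the mean advantage keeps the two streams identifiable: adding a constant to every advantage leaves the resulting Q-values unchanged.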

4.3. Implementation of Offload Algorithm Based on the Dynamic Selection Slices

4.3.1. The Offload Algorithm Description

Algorithm 1: The Dynamic Selection Slice Offloading Algorithm for In-Vehicle Task

Input: … and the maximum number of iterations

Output: Task offload to slice strategy

1. Begin
2. … and set the training interval
3. … do
4. … then
5. …
6. … then
7. … ;
8. Use Adam Optimizer to get the best training parameters … ;
9. end if
10. Get the computational resource solution … ;
11. … by dichotomous method;
12. …
13. … then
14. Wait and calculate the return to step 4
15. …
16. Update Slice Offloading Policy
17. End
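Steps 10 and 11 of Algorithm 1 obtain the computing-resource solution by the dichotomous (bisection) method. A minimal sketch of such a search follows, assuming the goal is the smallest allocation that still meets the task deadline and that execution delay decreases monotonically as the allocation grows. The delay model `workload / allocation`, the function name, and the example numbers are hypothetical and not taken from the paper.

```python
def min_allocation_for_deadline(workload: float, deadline_s: float,
                                capacity: float, tol: float = 1e-6) -> float:
    """Bisection search for the smallest allocation f in (0, capacity]
    such that workload / f <= deadline_s.

    Returns -1.0 when even the full capacity cannot meet the deadline.
    Assumes workload, deadline_s, and capacity are all positive.
    """
    if workload / capacity > deadline_s:
        return -1.0
    lo, hi = 0.0, capacity          # hi is always feasible, lo never is
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if workload / mid <= deadline_s:
            hi = mid                # feasible: try a smaller allocation
        else:
            lo = mid                # infeasible: more resources are needed
    return hi


# Illustrative numbers only: 100 units of work, 2 s deadline, 200 units of capacity.
allocation = min_allocation_for_deadline(100.0, 2.0, 200.0)
```

The search halves the interval on every iteration, so the number of iterations grows only logarithmically with `capacity / tol`.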

4.3.2. Algorithm Complexity Analysis

5. Experimental Results and Analysis

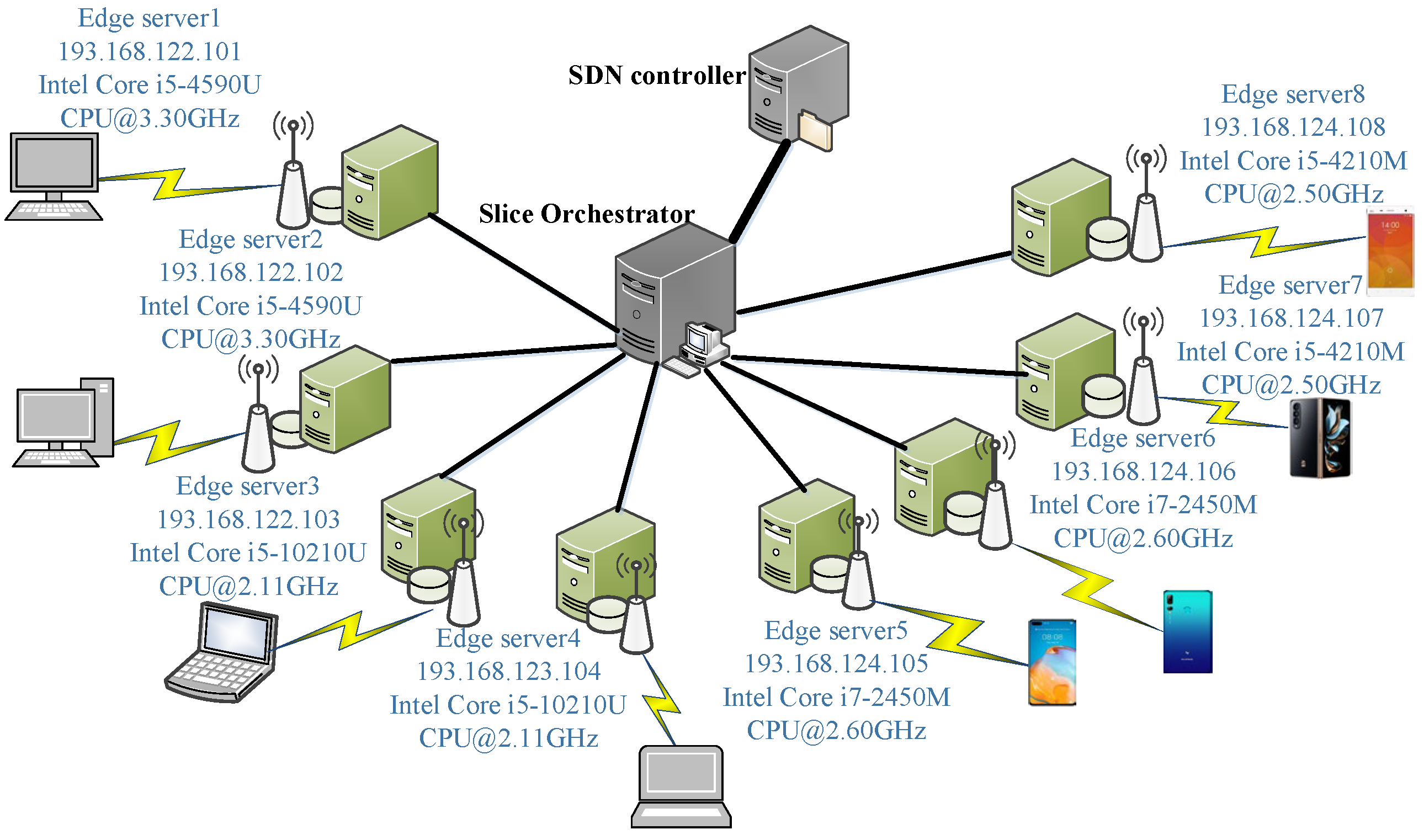

5.1. Experimental Environment and Configuration

5.2. Experimental Parameter Setting

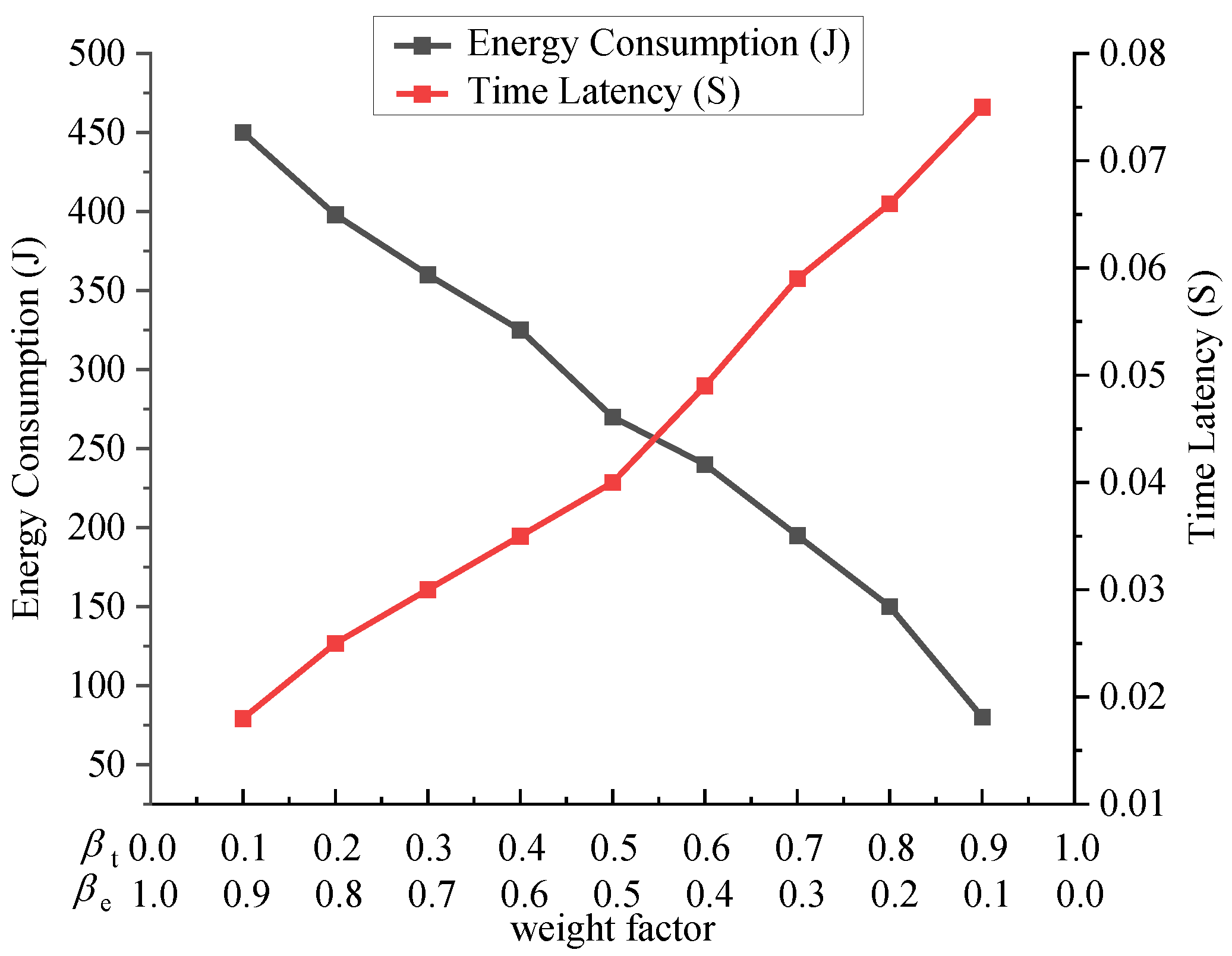

5.2.1. Reward Factor

5.2.2. Weight Factors

5.2.3. Evaluation Metrics

- Energy consumption: the sum of the energy consumed by tasks on the vehicle terminals and in the edge-server slices to which tasks are offloaded.

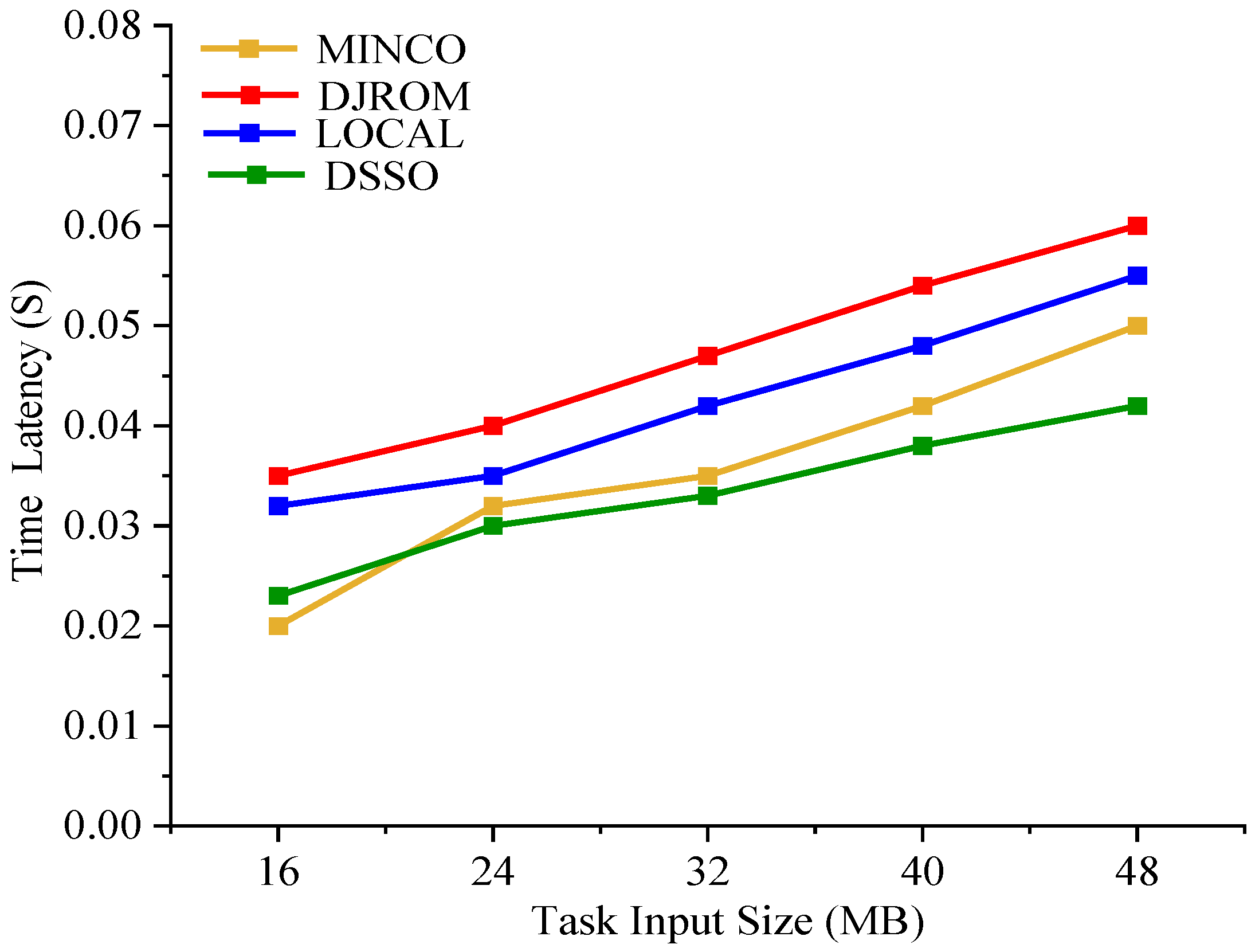

- Time latency: the sum of the task execution delay, the transmission delay, and the waiting delay.

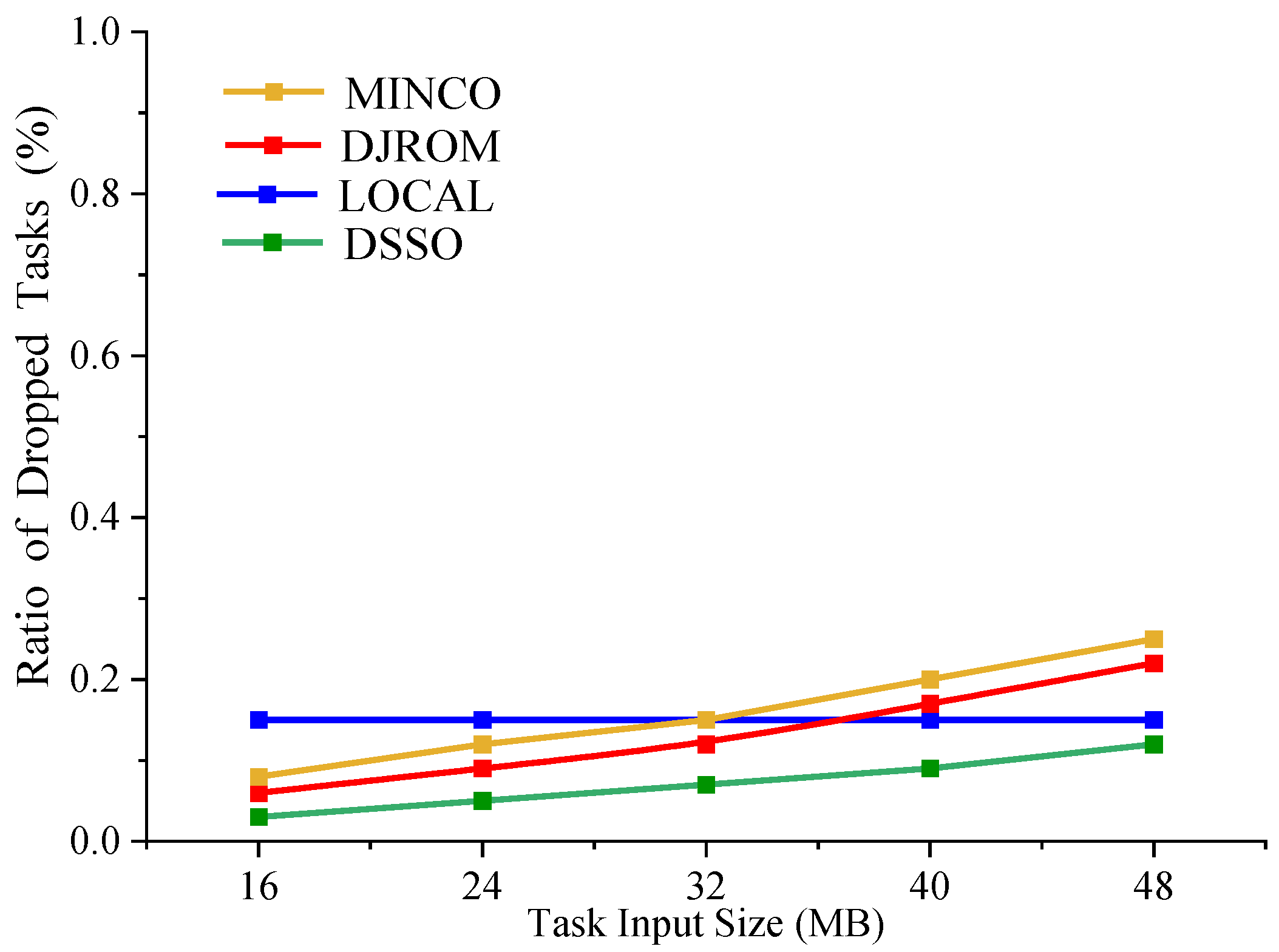

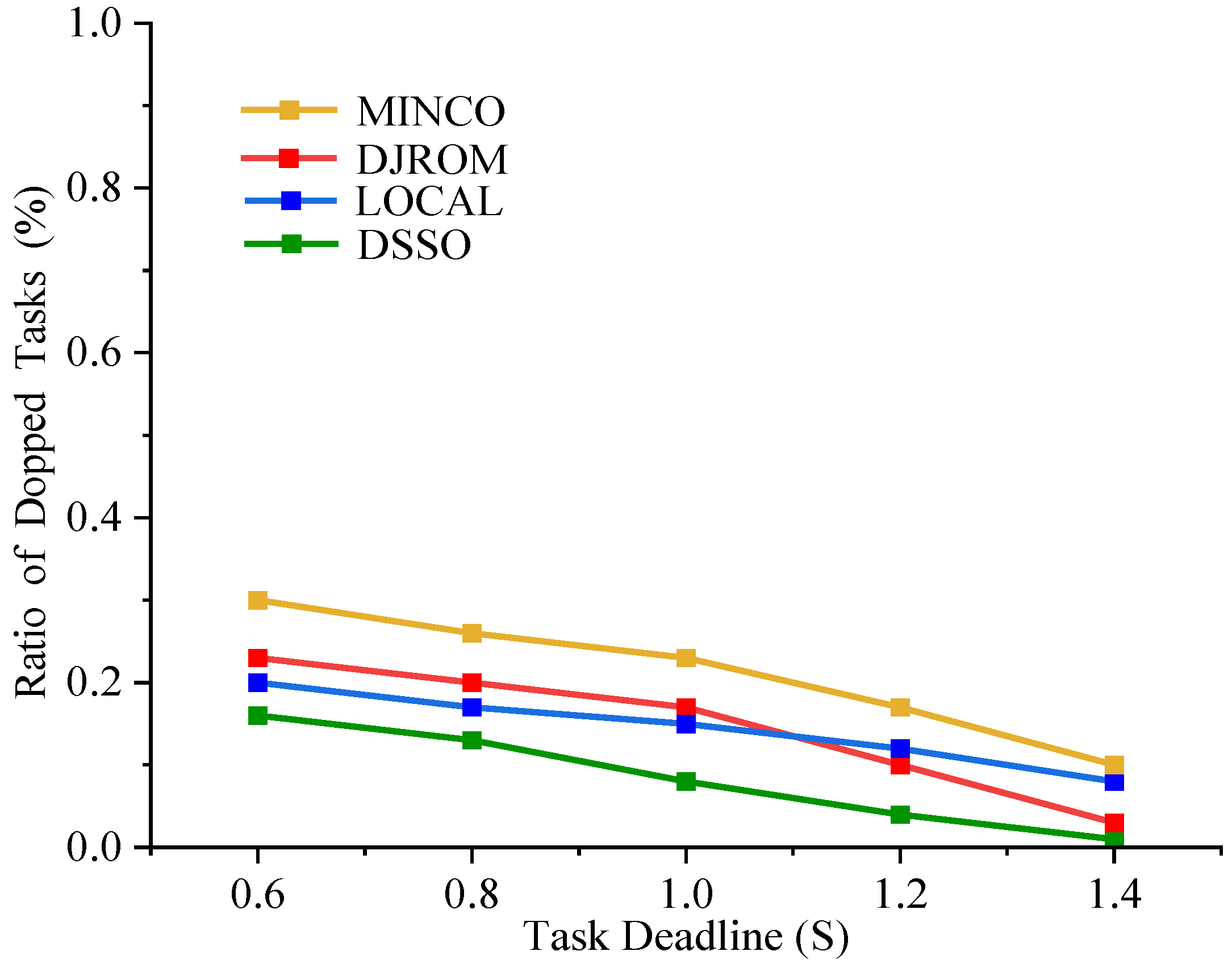

- Ratio of dropped tasks: the fraction of in-vehicle offloading tasks that are dropped; this metric evaluates the completion efficiency of task offloading.
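The three metrics can be computed from per-task records as sketched below. The `TaskRecord` fields are hypothetical names for the quantities the text describes, and treating a task as dropped when its total latency exceeds its deadline is an assumption.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskRecord:
    exec_delay_s: float       # task execution delay
    trans_delay_s: float      # transmission delay
    wait_delay_s: float       # waiting delay
    vehicle_energy_j: float   # energy spent on the vehicle terminal
    slice_energy_j: float     # energy spent in the edge-server slice
    deadline_s: float         # task deadline

    @property
    def latency_s(self) -> float:
        """Total latency: execution + transmission + waiting delay."""
        return self.exec_delay_s + self.trans_delay_s + self.wait_delay_s


def total_energy(tasks: List[TaskRecord]) -> float:
    """Sum of vehicle-side and slice-side energy over all tasks."""
    return sum(t.vehicle_energy_j + t.slice_energy_j for t in tasks)


def total_latency(tasks: List[TaskRecord]) -> float:
    """Sum of per-task total latency."""
    return sum(t.latency_s for t in tasks)


def drop_ratio(tasks: List[TaskRecord]) -> float:
    """Fraction of tasks whose latency exceeds the deadline (assumed drop rule)."""
    if not tasks:
        return 0.0
    dropped = sum(1 for t in tasks if t.latency_s > t.deadline_s)
    return dropped / len(tasks)


# Illustrative records only.
records = [
    TaskRecord(1.0, 0.5, 0.5, 2.0, 1.0, 3.0),   # finishes in 2.0 s, within deadline
    TaskRecord(2.0, 1.0, 1.0, 3.0, 2.0, 3.0),   # finishes in 4.0 s, misses deadline
]
```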

5.3. Experimental Results and Analysis

- Impact of input data size on algorithm performance

- Impact of task deadline on algorithm performance

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Parameters | Description |
|---|---|
| Vehicle | |
| In-vehicle task | |
| In-vehicle task input size (MB) | |
| Slice | |
| Access point | |
| An indicator variable representing whether the vehicle selects slice offloading | |
| The execution delay in the slice for the vehicle (s) | |
| The task execution time delay (s) | |
| The communication delay (s) | |
| The total time latency (s) | |
| The execution energy in the slice for the vehicle (J) | |
| The energy consumption for the tasks to the slice (J) | |
| The energy consumption for the vehicle to transfer the task to the slice (J) | |
| The total energy consumption (J) | |
| The CPU cycle frequency of the vehicle (MHz) | |
| The vehicle transmission power (W) | |
| Task deadline (s) | |
| The noise in the channel transmission (dB) | |
| The total amount of computing resources in the slice | |
| The number of computing resources allocated to the vehicle in the slice | |
| The transmission rate (bps) | |
| The network bandwidth (bit/s) | |
| The channel gain between the vehicle and the access point | |
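To illustrate how the communication quantities in this notation table could relate to one another, the sketch below assumes that the transmission rate follows the Shannon capacity formula, that the communication delay is the input size divided by that rate, and that the transfer energy is transmission power times delay. None of these formulas is confirmed by the surviving text; the function names, the use of bandwidth in Hz and noise as linear power in watts (the table lists bit/s and dB), and the example numbers are all assumptions.

```python
import math


def transmission_rate_bps(bandwidth_hz: float, tx_power_w: float,
                          channel_gain: float, noise_power_w: float) -> float:
    """Shannon capacity: B * log2(1 + p * g / noise)."""
    snr = tx_power_w * channel_gain / noise_power_w
    return bandwidth_hz * math.log2(1.0 + snr)


def communication_delay_s(input_size_bits: float, rate_bps: float) -> float:
    """Assumed delay model: time to push the task input over the link."""
    return input_size_bits / rate_bps


def transmission_energy_j(tx_power_w: float, delay_s: float) -> float:
    """Assumed energy model: transmission power times time on air."""
    return tx_power_w * delay_s


# Illustrative numbers only: 1 MHz bandwidth, SNR of 3, 4 Mbit of input.
rate = transmission_rate_bps(1e6, 1.0, 3.0, 1.0)
delay = communication_delay_s(4e6, rate)
energy = transmission_energy_j(0.5, delay)
```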

| Device Type | Memory | CPU |
|---|---|---|
| ES 1~2 | 4 GB | Intel(R) Core(TM) i5-10210U CPU @ 2.11 GHz |
| ES 3~4 | 4 GB | Intel(R) Core(TM) i7-2450M CPU @ 2.60 GHz |
| ES 5~6 | 4 GB | Intel(R) Core(TM) i5-4210M CPU @ 2.50 GHz |
| ES 7~8 | 4 GB | Intel(R) Core(TM) i5-4590U CPU @ 3.30 GHz |
| Dell Inspiron 5490 | 8 GB | Intel(R) Core(TM) i5-10210U CPU @ 1.60 GHz |
| Lenovo Xiaoxin Pro 14 | 8 GB | Intel(R) Core(TM) i5-12500H CPU @ 1.80 GHz |
| Lenovo ThinkPad | 8 GB | Intel(R) Core(TM) i7-5600U CPU @ 1.80 GHz |
| HP 840G3 | 16 GB | Intel(R) Core(TM) i5-8350U CPU @ 1.80 GHz |
| Xiaomi 11 | 8 GB | Snapdragon 778G |
| Honor 70 Pro | 12 GB | Dimensity 8000 |
| Galaxy Z Flip3 | 16 GB | Snapdragon 888 |
| Samsung W23 | 16 GB | Snapdragon 8+ Gen 1 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Han, L.; Bin, Y.; Zhu, S.; Liu, Y. Dynamic Selection Slicing-Based Offloading Algorithm for In-Vehicle Tasks in Mobile Edge Computing. Electronics 2023, 12, 2708. https://doi.org/10.3390/electronics12122708
