Policy-Based Spam Detection of Tweets Dataset

Abstract

1. Introduction

1.1. Motivation

1.2. Challenges

1.3. Research Contribution

- Compiled more than 1,100,000 unique real-time Urdu tweets from multiple users; the dataset is publicly available on Kaggle [16];

- Proposed a model that processes real-time tweet couplets and extracts timestamp- and policy-based features from the Urdu couplets;

- Evaluated the model by training and testing machine learning and deep learning classifiers on the Urdu dataset, with the aim of improving accuracy.

2. Literature Review

3. Machine Learning and Deep Learning Algorithms

3.1. Multinomial Naïve Bayes

3.2. Logistic Regression

3.3. Support Vector Machine

3.4. Long Short-Term Memory (LSTM) and Gated Recurrent Neural Network (RNN)

4. Methodology

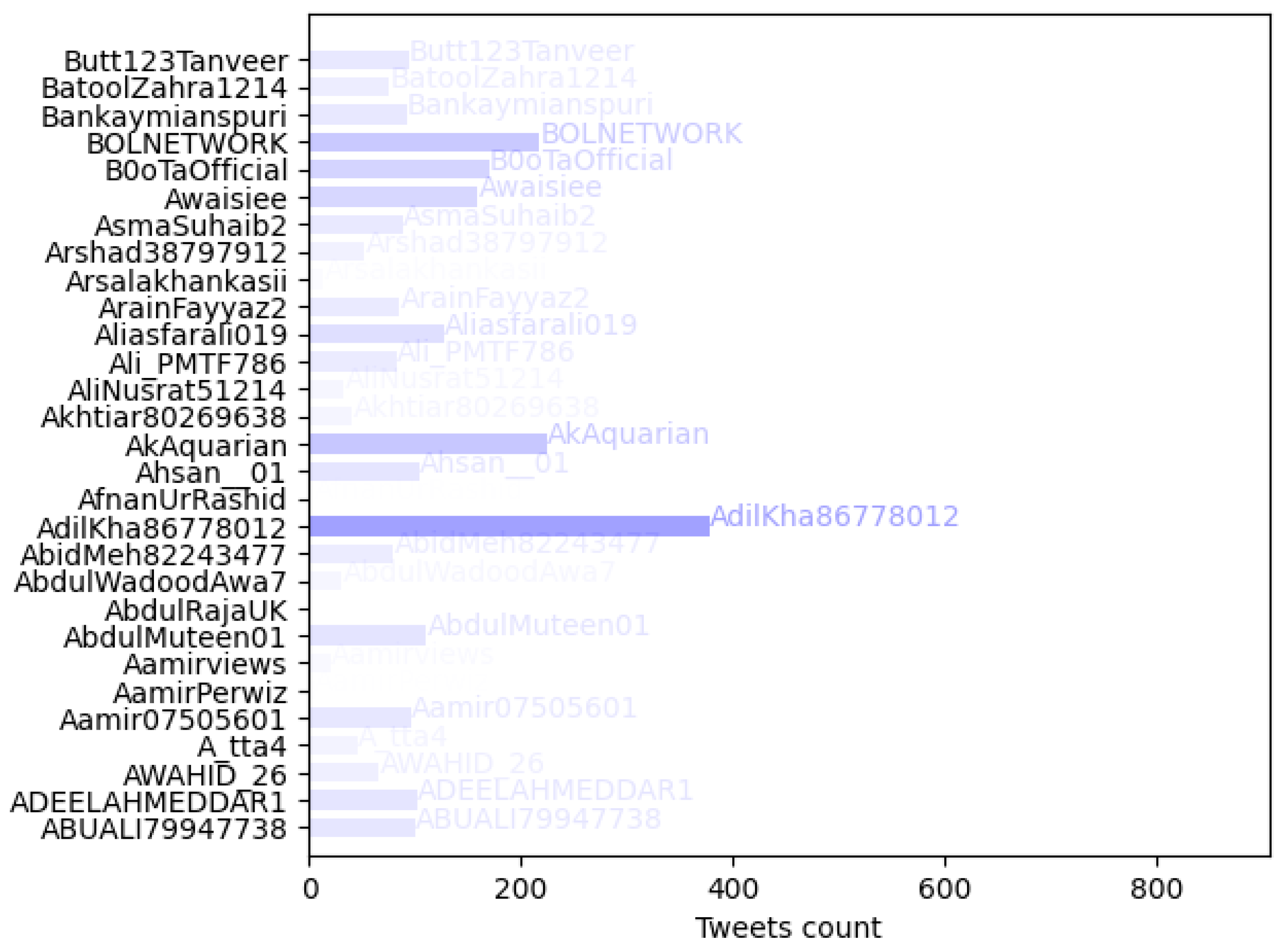

4.1. Data Collection

4.2. Preprocessing

4.3. Features Extraction

4.4. Implementation

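Algorithm 1: Labeling Ham/Spam Tweets in the Urdu Language

```python
# Label a tweet "Spam" when its author's tweet count exceeds 200,
# otherwise "Ham" (policy-based threshold).
def labeler_function(row, username_count):
    if row[username_count] > 200:
        label = "Spam"
    else:
        label = "Ham"
    return label

# username_count is the name of the column holding per-user tweet counts;
# axis=1 passes each row (not each column) to the labeling function.
dataset_label = dataset.apply(lambda row: labeler_function(row, username_count), axis=1)
```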
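A small usage example of the labeling rule, as a sketch under assumptions: the column names `username`, `tweet` and `username_count`, the toy data, and the choice to compute `username_count` as each user's number of tweets in the dataset are illustrative, not the schema of the published Kaggle dataset.

```python
import pandas as pd

# Hypothetical toy data: user_a posts 205 tweets, user_b posts 3.
tweets = pd.DataFrame({
    "username": ["user_a"] * 205 + ["user_b"] * 3,
    "tweet": [f"tweet {i}" for i in range(208)],
})

# Assumption: username_count = number of tweets each user has in the dataset.
tweets["username_count"] = tweets.groupby("username")["tweet"].transform("count")


def labeler_function(row, username_count):
    # Policy threshold from Algorithm 1: more than 200 tweets means Spam.
    return "Spam" if row[username_count] > 200 else "Ham"


# axis=1 applies the labeling function row by row.
tweets["label"] = tweets.apply(lambda row: labeler_function(row, "username_count"), axis=1)

print(tweets.groupby("username")["label"].first().to_dict())
# {'user_a': 'Spam', 'user_b': 'Ham'}
```

Because the rule uses a strict `>`, a user with exactly 200 tweets would still be labeled Ham.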

Algorithm 2: Spam Tweets in Urdu Language

```text
Input:  Tweet
Output: Spam or Non-Spam
for each tweet in Tweets do
    for each feature in Policy/Timestamp features do
        assign label 0 to spam and 1 to non-spam
    end for
end for
for each estimator in estimators (Naïve Bayes, Logistic Regression, SVM, BERT) do
    uniqueEstimatorArray = estimator.fit(dataset labeled using Policy/Timestamp features)
end for
if uniqueEstimatorArray.predict(tweet) == 1 then
    label = Non-Spam
else if uniqueEstimatorArray.predict(tweet) == 0 then
    label = Spam
end if
```
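The estimator loop of Algorithm 2 can be sketched with scikit-learn. This is a minimal sketch, not the authors' code: the toy English texts, the dictionary of fitted models, and the CountVectorizer plus TF-IDF pipeline (suggested by the "CV, TF-IDF" entries in the results table) are assumptions, and BERT is omitted. Labels follow Algorithm 2: 0 for spam, 1 for non-spam.

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline
from sklearn.svm import SVC

# Label encoding from Algorithm 2.
SPAM, NON_SPAM = 0, 1

# Hypothetical toy training data standing in for policy/timestamp-labeled tweets.
texts = ["buy now free offer", "free offer click link", "win free prize now",
         "meeting at noon today", "see you at lunch", "good morning friends"]
labels = [SPAM, SPAM, SPAM, NON_SPAM, NON_SPAM, NON_SPAM]

# One CountVectorizer + TF-IDF pipeline per estimator (BERT omitted here).
estimators = {
    "naive_bayes": MultinomialNB(),
    "logistic_regression": LogisticRegression(),
    "svm_rbf": SVC(kernel="rbf"),
}
fitted = {
    name: make_pipeline(CountVectorizer(), TfidfTransformer(), est).fit(texts, labels)
    for name, est in estimators.items()
}


def to_label(prediction):
    # Map the numeric prediction back to the text label used in Algorithm 2.
    return "Non-Spam" if prediction == NON_SPAM else "Spam"


for name, model in fitted.items():
    print(name, to_label(model.predict(["free prize offer now"])[0]))
```

Storing each fitted pipeline under its own key keeps the models separate, so each classifier's predictions can be compared, which is what the results table reports.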

4.5. Dataset Imbalance

4.6. Architecture

5. Experimental Results

6. Future Research Directions

- Examination of online bullying and harassment: There is a need to examine the frequency, features, and impacts of online bullying and harassment on Twitter in greater detail. This entails studying patterns and trends, comprehending the motivations behind such activities, and investigating the impact on victims and the greater online community;

- Methods for detection and prevention: Methods for detecting and preventing cyberbullying and cyber-harassment must be developed. These strategies should take Twitter’s standards for identifying and responding to harassment and abusive behavior into account. It is essential to develop automated methods that can detect and flag incidents of harassment in real time, allowing for prompt interventions;

- Machine learning and natural language processing: To identify and classify instances of online harassment and cyberbullying on Twitter, it is necessary to construct sophisticated machine learning models and natural language processing techniques. These models should be consistent with Twitter’s guidelines regarding abusive behavior and harassment, allowing for the proper identification and classification of harmful content [43,44];

- Dataset expansion and feature extraction: To improve the robustness and generalizability of the models, it is essential to expand the existing dataset utilized for spam identification. The emphasis of research should be on gathering more diverse and representative samples of spam and non-spam tweets. Additionally, studying various feature extraction techniques can result in a more accurate representation of the distinctive qualities of spam content;

- Comparison with advanced baseline approaches: While the current study employed simple baseline approaches, future research could investigate more complicated and advanced strategies for spam identification. Comparing the performance of advanced classifiers, such as deep learning models or ensemble approaches, can shed light on their efficacy and potential for improvement over conventional classifiers;

- Classifier optimization: Efforts should be made consistently to optimize the performance of spam identification classifiers. This involves fine-tuning the models, refining the feature selection procedure, and exploring novel techniques to improve the accuracy, precision, and recall of spam detection.

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alorini, D.; Rawat, D.B. Automatic spam detection on gulf dialectical. In Proceedings of the Conference on Computing, Networking and Communication, Honolulu, HI, USA, 18–21 February 2019; pp. 2325–2626.

- Liu, S.; Wang, Y.; Zhang, J.; Chen, C.; Xiang, Y. Addressing the class imbalance problem in Twitter spam detection using ensemble learning. Comput. Secur. 2017, 69, 35–49.

- Wu, T.; Liu, S.; Zhang, J.; Xiang, Y. Twitter spam detection based on deep learning. In Proceedings of the Australasian Computer Science Week Multiconference, Geelong, Australia, 31 January 2017; pp. 1–8.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

- Larabi-marie-sainte, S.; Ghouzali, S.; Saba, T.; Aburahmah, L.; Almohaini, R. Improving spam email detection using deep recurrent neural network. Inst. Adv. Eng. Sci. 2022, 25, 1625–1633.

- Pang, B.; Lee, L. Opinion mining and sentiment analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

- Lahoti, P.; Morales, G.D.F.; Gionis, A. Finding topical experts in Twitter via query-dependent personalized PageRank. In Proceedings of the 2017 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining 2017 (ASONAM’ 17), Association for Computing Machinery, New York, NY, USA, 31 July–3 August 2017; pp. 155–162.

- Rosenthal, M.; Kulkarni, V.; Preoţiuc-Pietro, D.V. Semeval-2015 task 10: Sentiment analysis in twitter. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015; pp. 451–463.

- Kolchyna, A.; Hopfgartner, F.; Pasi, G.; Albayrak, S. Exploring crowdsourcing for opinion spam annotation. In Proceedings of the 9th International Conference on Web and Social Media (ICWSM), Shanghai, China, 6–8 November 2015; pp. 437–440.

- Maas, A.L.; Daly, R.E.; Pham, P.T.; Huang, D.; Ng, A.Y.; Potts, C. Learning word vectors for sentiment analysis. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, Portland, OR, USA, 19–24 June 2011; pp. 142–150.

- Afzal, N.; Afzal, S.; Shafait, S.; Majeed, F. Leveraging machine learning to investigate public opinion of Pakistan. In Proceedings of the 26th ACM International Conference on Information and Knowledge Management (CIKM), Singapore, 6–10 November 2017; pp. 1883–1886.

- Javed, M.N.; Khan, A.; Majeed, F.; Shafait, S. Urdconv: A large-scale urdu conversation corpus. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics (EACL), Online, 19–23 April 2021; pp. 677–687.

- Ahmed, A.; Shafait, S. SMS spam filtering for Urdu text messages. In Proceedings of the International Conference on Computational Linguistics (COLING), Beijing, China, 23–27 August 2010; pp. 8–15.

- Javed, M.N.; Khan, A.; Majeed, F.; Shafait, S. Towards effective spam detection in social media: The case of Urdu language. In Proceedings of the 20th International Conference on Asian Language Processing (IALP), Singapore, 5–7 December 2017; pp. 92–95.

- Mehmood, A.; Farooq, M.S.; Naseem, A.; Rustam, F.; Villar, M.G.; Rodríguez, C.L.; Ashraf, I. Threatening URDU Language Detection from Tweets Using Machine Learning. Appl. Sci. 2022, 12, 10342.

- Dar, M.; Iqbal, F. Urdu Tweets Dataset for Spam Detection. Kaggle. Available online: https://www.kaggle.com/datasets/momnadar23/urdu-tweets-dataset-for-spam-detection (accessed on 1 May 2023).

- Ge, Z.; Sun, Y.; Smith, M. Authorship attribution using a neural network language model. Proc. AAAI Conf. Artif. Intell. 2016, 30.

- Anwar, W.; Bajwa, I.S.; Choudhary, M.A.; Ramzan, S. An empirical study on forensic analysis of Urdu text using LDA-based authorship attribution. IEEE Access 2018, 7, 3224–3234.

- Mashooq, M.; Riaz, S.; Farooq, M.S. Urdu Sentiment Analysis: Future Extraction, Taxonomy, and Challenges. VFAST Trans. Softw. Eng. 2022, 10.

- Hussain, N.; Mirza, H.T.; Iqbal, F.; Hussain, I. Detecting Spam Product Reviews in Roman Urdu Scripts. Oxf. Comput. J. 2020, 64, 432–450.

- Hussain, N.; Turab Mirza, H.; Ali, A.; Iqbal, F.; Hussain, I.; Kaleem, M. Spammer Group Detection and Diversification of Customer Reviews. PeerJ Comput. Sci. 2021, 7, e472.

- Hussain, N.; Turab Mirza, H.; Hussain, I.; Iqbal, F.; Memon, I. Spam Review Detection Using the Linguistic and Spammer Behavioral Methods. IEEE Access 2020, 8, 53801–53816.

- Duma, R.A.; Niu, Z.; Nyamawe, A.S.; Tchaye-Kondi, J.; Yusuf, A.A. A Deep Hybrid Model for fake review detection by jointly leveraging review text, overall ratings, and aspect ratings. Soft Comput. 2023, 27, 6281–6296.

- Vijayakumar, B.; Fuad, M.M.M. A new method to identify short-text authors using combinations of machine learning and natural language processing techniques. Procedia Comput. Sci. 2019, 159, 428–436.

- Mekala, S.; Tippireddy, R.R.; Bulusu, V.V. A novel document representation approach for authorship attribution. Int. J. Intell. Eng. Syst. 2018, 11, 261–270.

- Saha, N.; Das, P.; Saha, H.N. Authorship attribution of short texts using multi-layer perceptron. Int. J. Appl. Pattern Recognit. 2018, 5, 251–259.

- Benzebouchi, N.E.; Azizi, N.; Hammami, N.E.; Schwab, D.; Khelaifia, M.C.E.; Aldwairi, M. Authors’ writing styles based authorship identification system using the text representation vector. In Proceedings of the 2019 16th International Multi-Conference on Systems, Signals & Devices (SSD), Istanbul, Turkey, 21–24 March 2019; pp. 371–376.

- Sun, N.; Lin, G.; Qiu, J.; Rimba, P. Near real-time twitter spam detection with machine learning techniques. Int. J. Comput. Appl. 2022, 44, 338–348.

- Khanday, A.M.D.; Wani, M.A.; Rabani, S.T.; Khan, Q.R. Hybrid Approach for Detecting Propagandistic Community and Core Node on Social Networks. Sustainability 2023, 15, 1249.

- Jain, G.; Sharma, M.; Agarwal, B. Optimizing semantic LSTM for spam detection. Int. J. Inf. Technol. 2019, 11, 239–250.

- Li, D.; Ahmed, K.; Zheng, Z.; Mohsan, S.; Alsharif, M.; Myriam, H.; Jamjoom, M.; Mostafa, S. Roman Urdu sentiment analysis using transfer learning. Appl. Sci. 2022, 12, 10344.

- Muhammad, K.B.; Burney, S.A. Innovations in Urdu Sentiment Analysis Using Machine and Deep Learning Techniques for Two-Class Classification of Symmetric Datasets. Symmetry 2023, 15, 1027.

- Rozaq, A.; Yunitasari, Y.; Sussolaikah, K.; Sari, E.R. Sentiment Analysis of Kampus Mengajar 2 Toward the Implementation of Merdeka Belajar Kampus Merdeka Using Naïve Bayes and Euclidean Distance Methods. Int. J. Adv. Data Inf. Syst. 2022, 3, 30–37.

- Hussain, N. Spam Review Detection through Behavioral and Linguistic Approaches. Computational Intelligence, Machine Learning, and Data Analytics. Ph.D. Dissertation, Department of Computer Science COMSATS University Lahore, Lahore, Pakistan, 2022.

- Akhter, M.P.; Zheng, J.; Afzal, F.; Lin, H.; Riaz, S.; Mehmood, A. Supervised ensemble learning methods towards automatically filtering Urdu fake news within social media. PeerJ Comput. Sci. 2021, 7, e425.

- Akhter, M.P.; Jiangbin, Z.; Naqvi, I.R.; Abdelmajeed, M.; Fayyaz, M. Exploring deep learning approaches for Urdu text classification in product manufacturing. Enterp. Inf. Syst. 2022, 16, 223–248.

- Ali, R.; Farooq, U.; Arshad, U.; Shahzad, W.; Beg, M.O. Hate speech detection on Twitter using transfer learning. Comput. Speech Lang. 2022, 74, 101365.

- Uzan, M.; HaCohen-Kerner, Y. Detecting Hate Speech Spreaders on Twitter using LSTM and BERT in English and Spanish. In Proceedings of the Conference and Labs of the Evaluation Forum, CLEF (Working Notes), Bucharest, Romania, 21–24 September 2021; pp. 2178–2185.

- Akhter, M.P.; Jiangbin, Z.; Naqvi, I.R.; Abdelmajeed, M.; Mehmood, A.; Sadiq, M.T. Document-level text classification using single-layer multisize filters convolutional neural network. IEEE Access 2020, 8, 42689–42707.

- Qutab, I.; Malik, K.I.; Arooj, H. Sentiment Classification Using Multinomial Logistic Regression on Roman Urdu Text. Int. J. Innov. Sci. Technol. 2022, 4, 223–335.

- Rasheed, I.; Banka, H.; Khan, H.M. A hybrid feature selection approach based on LSI for classification of Urdu text. In Machine Learning Algorithms for Industrial Applications; Springer: Berlin/Heidelberg, Germany, 2021; pp. 3–18.

- Twitter, Understanding Twitter Limits (Twitter Help). Available online: https://help.twitter.com/en/rules-and-policies/twitter-limits (accessed on 17 May 2023).

- Daud, S.; Ullah, M.; Rehman, A.; Saba, T.; Damaševičius, R.; Sattar, A. Topic Classification of Online News Articles Using Optimized Machine Learning Models. Computers 2023, 12, 16.

- Ozdemir, B.; AlGhamdi, H.M. Investigating the Distractors to Explain DIF Effects Across Gender in Large-Scale Tests With Non-Linear Logistic Regression Models. Front. Educ. 2022, 6, 552.

| Dataset Name | Language | Number of Tweets/Messages | Size of Dataset | Quality |
|---|---|---|---|---|
| Twitter Sentiment Analysis Dataset [6] | English | 1.6 million | Large | High |
| Twitter Spam Classification Dataset [7] | English | 25,000 | Medium | High |
| SemEval-2015 Task 10 [8] | English | N/A | N/A | High |
| CrowdFlower Twitter Spam Corpus [9] | English | 10,000 | Small | Medium |
| Stanford Large Movie Review Dataset [10] | English | 50,000 | Large | High |
| Urdu Sentiment Corpus [11] | Urdu | 5000 | Small | Medium |
| UrdConv Corpus [12] | Urdu | 22,000 | Medium | Medium |
| COMSATS Urdu SMS Spam Corpus [13] | Urdu | 10,000 | Small | Medium |
| Social Media Urdu Corpus [14] | Urdu | 6500 | Small | Low |

| Papers | Urdu Dataset | Techniques | Feature | Policy Feature | Timestamp Feature |
|---|---|---|---|---|---|
| [30] | × | LSTM | Features on frequent or important corpus | × | × |
| [31] | × | Transfer Learning | Feature mapping using CNN | × | × |
| [32] | ✓ | Naïve Bayes, SVM | Content feature | × | × |
| [33] | × | Naïve Bayes | TF-IDF feature extraction | × | × |
| [34] | × | Linguistic Approaches | Linguistic and behavioral features | × | × |
| [35] | × | Machine Learning | TF-IDF feature extraction | × | × |
| [36] | ✓ | Deep Learning | CNN-LSTM n-gram feature | × | × |
| [37] | ✓ | BERT | Linguistic feature | × | × |
| [38] | × | LSTM, BERT | Word n-gram features | × | × |
| [39] | × | CNN | Feature mapping using CNN | × | × |
| [40] | ✓ | Logistic Regression | TF-IDF feature extraction | × | × |
| [41] | ✓ | Machine Learning | Latent semantic indexing (LSI) extracted features | × | × |
| Proposed Research | ✓ | SVM, Logistic Regression, Naïve Bayes, BERT | Policy and timestamp features | ✓ | ✓ |

| Statistic | Spam | Ham |
|---|---|---|
| Count | 7319 | 1,213,068 |
| Mean | 0.6% | 99.4% |
| Standard Deviation | 7.72% | 7.72% |
| Maximum | 900 | 198 |
| Minimum | 208 | 1 |
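The Mean and Standard Deviation rows follow directly from the two class counts if, as assumed here, they describe a 0/1 spam indicator over all 7319 + 1,213,068 = 1,220,387 labeled tweets. A short check of that reading:

```python
import math

# Tweet counts per class, taken from the table above.
spam_count, ham_count = 7319, 1_213_068
total = spam_count + ham_count

# For a 0/1 indicator (1 = spam), the mean is the spam share p and the
# population standard deviation is sqrt(p * (1 - p)); it is the same for both classes.
p_spam = spam_count / total
std = math.sqrt(p_spam * (1 - p_spam))

print(f"spam share: {p_spam:.1%}, ham share: {1 - p_spam:.1%}, std: {std:.2%}")
# spam share: 0.6%, ham share: 99.4%, std: 7.72%
```

This also explains why both columns report the same standard deviation: a binary indicator and its complement have identical spread. The Maximum and Minimum rows, by contrast, appear to refer to per-user tweet counts, consistent with the 200-tweet threshold in Algorithm 1.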

| Actual \ Predicted | Spam | Ham |
|---|---|---|
| Spam | 91 | 2114 |
| Ham | 36 | 363,876 |

| Actual \ Predicted | Spam | Ham |
|---|---|---|
| Spam | 0 | 2205 |
| Ham | 0 | 363,912 |

| Actual \ Predicted | Spam | Ham |
|---|---|---|
| Spam | 590 | 1615 |
| Ham | 83 | 363,829 |

| Actual \ Predicted | Spam | Ham |
|---|---|---|
| Spam | 0 | 1098 |
| Ham | 0 | 85,883 |

| Model/Ensemble | Library | Accuracy | F1-Measure | Recall | Precision |
|---|---|---|---|---|---|
| Multinomial Naïve Bayes, CV, TF-IDF | SK-Learn | 99.40% | 0.54 | 52.0% | 86.0% |
| RBF Kernel SVM, CV, TF-IDF | SK-Learn | 99.38% | 0.5 | 50.0% | 50.0% |
| Logistic Regression, CV, TF-IDF | SK-Learn | 99.55% | 0.7 | 63.0% | 94.0% |
| BERT | Transformers | 99.00% | 0.5 | 50.0% | 49.0% |
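The precision, recall and F1 values in this table behave like macro averages over the Spam and Ham classes. The sketch below recomputes them from the confusion matrices above, assuming rows are actual classes and columns are predicted classes (index 0 = Spam, 1 = Ham); the function name `macro_metrics` is hypothetical. When a class is never predicted, its precision is taken as 0, the same convention scikit-learn applies by default (with a warning), which is why a classifier that labels every tweet as Ham still scores about 50% macro precision.

```python
from statistics import mean


def macro_metrics(cm):
    """Accuracy and macro-averaged precision, recall and F1 for a square confusion matrix.

    cm[i][j] = number of tweets whose actual class is i and predicted class is j.
    A class that is never predicted (or never occurs) contributes 0 for that metric.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(n)) / total
    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])  # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    return accuracy, mean(precisions), mean(recalls), mean(f1s)


# The confusion matrix whose figures match the Logistic Regression row.
acc, prec, rec, f1 = macro_metrics([[590, 1615], [83, 363_829]])
print(f"accuracy={acc:.2%} precision={prec:.2f} recall={rec:.2f} f1={f1:.2f}")
# accuracy=99.54% precision=0.94 recall=0.63 f1=0.70
```

Macro averaging weights the 2205 spam tweets as heavily as the 363,912 ham tweets, so it exposes the weak spam recall that the near-99% accuracy figures hide.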

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dar, M.; Iqbal, F.; Latif, R.; Altaf, A.; Jamail, N.S.M. Policy-Based Spam Detection of Tweets Dataset. Electronics 2023, 12, 2662. https://doi.org/10.3390/electronics12122662

Dar M, Iqbal F, Latif R, Altaf A, Jamail NSM. Policy-Based Spam Detection of Tweets Dataset. Electronics. 2023; 12(12):2662. https://doi.org/10.3390/electronics12122662

Chicago/Turabian Style: Dar, Momna, Faiza Iqbal, Rabia Latif, Ayesha Altaf, and Nor Shahida Mohd Jamail. 2023. "Policy-Based Spam Detection of Tweets Dataset" Electronics 12, no. 12: 2662. https://doi.org/10.3390/electronics12122662

APA Style: Dar, M., Iqbal, F., Latif, R., Altaf, A., & Jamail, N. S. M. (2023). Policy-Based Spam Detection of Tweets Dataset. Electronics, 12(12), 2662. https://doi.org/10.3390/electronics12122662