Spatial-Temporal Self-Attention Transformer Networks for Battery State of Charge Estimation

Abstract

1. Introduction

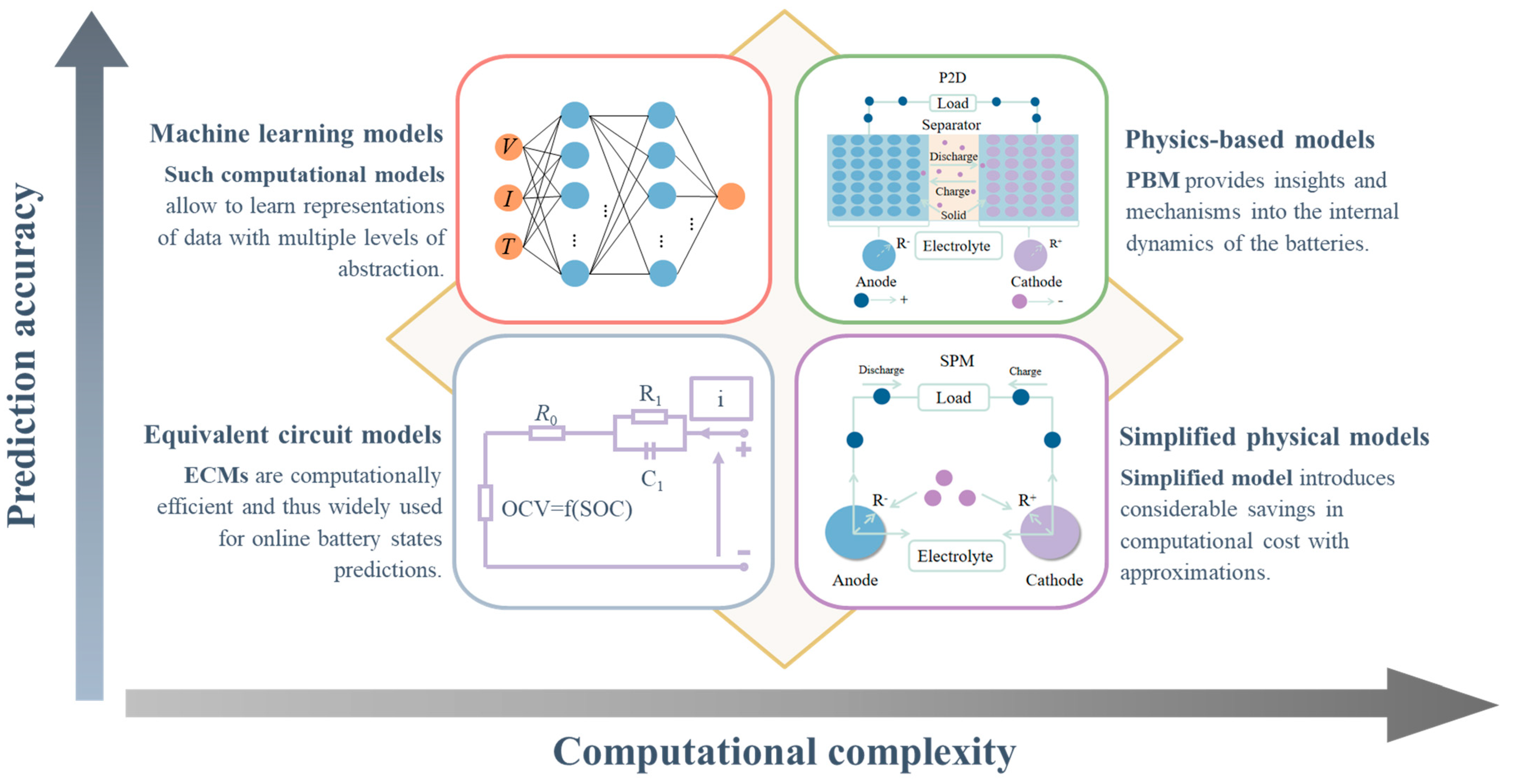

1.1. Current Methods for SOC Estimation

1.2. Contributions and Structure of the Work

- (1) The specialized Transformer model, termed Bidirectional Encoder Representations from Transformers for Batteries (BERTtery), offers an effective tool for learning the non-linear relationship between SOC and input time-series data (e.g., current, voltage, and temperature) and for uncovering intricate structures in these data.

- (2) For an efficient implementation of the Transformer, it is beneficial to design models and algorithms that account for different operating conditions, such as charging and discharging. The encoder network therefore converts observational data into a token-level representation, in which each feature in the sequence is replaced with a fixed-length positional and operational encoding.

- (3) A variable-length sliding window was designed so that predictions adhere to the underlying physico-chemical (thermodynamic and kinetic) principles. The sliding window provides the network with temporal memory, enabling BERTtery to generalize well beyond the training samples and to better exploit temporal structure in long-term time-series data.

- (4) For real-world applications, model accuracy is essential. We therefore collected data covering a diverse range of operating conditions and aging states from field applications to test the generalization capability of the machine learning model.

- (5) We devised a dual-encoder architecture to preserve the symplectic structure of the underlying multiphysics battery system. The channel-wise and temporal-wise encoders pave the way for broader exploration and capture epistemic uncertainty across multiple timescales, facilitating the assimilation of long-term time-series data while accounting for the influence of past states or forcing variables.
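The sliding window described in contribution (3) can be illustrated with a minimal sketch of how windows of several lengths might turn a measured time series into training pairs. The per-step `(current, voltage, temperature)` layout, the window lengths, the stride, and the choice to predict the SOC at the last step of each window are all assumptions for illustration, not the configuration used for BERTtery.

```python
from typing import Iterator, List, Sequence, Tuple

# Hypothetical per-step record: (current in A, voltage in V, temperature in °C).
Sample = Tuple[float, float, float]


def sliding_windows(
    series: Sequence[Sample],
    soc: Sequence[float],
    window_lengths: Sequence[int],
    stride: int = 1,
) -> Iterator[Tuple[List[Sample], float]]:
    """Yield (window, target) pairs for each requested window length.

    Every window ends at step `end - 1` and its target is the SOC at that
    step, so only past and present measurements are used (no look-ahead).
    """
    if len(series) != len(soc):
        raise ValueError("series and soc must have the same length")
    if stride < 1:
        raise ValueError("stride must be positive")
    for length in window_lengths:
        if length < 1:
            raise ValueError("window lengths must be positive")
        for end in range(length, len(series) + 1, stride):
            yield list(series[end - length:end]), soc[end - 1]


# Usage: ten steps of a constant 10 A discharge, windows of 4 and 8 steps.
steps = [(10.0, 3.7, 25.0)] * 10
targets = [1.0 - 0.01 * k for k in range(10)]
pairs = list(sliding_windows(steps, targets, window_lengths=[4, 8], stride=2))
print(len(pairs))  # 6
```

Mixing several window lengths in one training set is one simple way to expose a model to both short and long temporal contexts; how the lengths are chosen in practice is a design decision the sketch does not try to answer.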

2. Materials and Methods

2.1. Data Generation

2.2. Transformer-Based Neural Network

2.2.1. Normalization

2.2.2. Embedding

- (i) Positional Encoding

- (ii) Operational Encoding
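A minimal sketch of how positional and operational encodings could be added to token embeddings is shown below. The positional part is the standard sinusoidal encoding from "Attention Is All You Need"; the operational part is a hypothetical per-mode lookup table (charge, discharge, rest) selected from the sign of the current. The mode labels, the 0.5 A rest threshold, the sign convention (discharge positive), and the random table values are assumptions, not BERTtery's actual encoding.

```python
import math
import random
from typing import List

D_MODEL = 64  # model dimension from the hyperparameter list

CHARGE, DISCHARGE, REST = 0, 1, 2  # hypothetical operating-mode ids


def positional_encoding(num_steps: int, d_model: int = D_MODEL) -> List[List[float]]:
    """Sinusoidal encoding: sin on even dimensions, cos on odd dimensions."""
    table = []
    for pos in range(num_steps):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def operating_mode(current_a: float, rest_threshold_a: float = 0.5) -> int:
    """Label a time step from the current sign (discharge assumed positive)."""
    if abs(current_a) <= rest_threshold_a:
        return REST
    return DISCHARGE if current_a > 0 else CHARGE


# Hypothetical operational-embedding table, one row per mode. In a trained
# model these rows would be learned parameters rather than random values.
_rng = random.Random(0)
OPERATIONAL_TABLE = [[_rng.gauss(0.0, 0.02) for _ in range(D_MODEL)] for _ in range(3)]


def encode_tokens(projected: List[List[float]], currents: List[float]) -> List[List[float]]:
    """Add positional and operational encodings to D_MODEL-wide feature vectors."""
    if any(len(vec) != D_MODEL for vec in projected):
        raise ValueError("each projected vector must have D_MODEL entries")
    pe = positional_encoding(len(projected))
    tokens = []
    for t, (vec, current) in enumerate(zip(projected, currents)):
        op = OPERATIONAL_TABLE[operating_mode(current)]
        tokens.append([v + p + o for v, p, o in zip(vec, pe[t], op)])
    return tokens
```

Adding the encodings, rather than concatenating them, keeps the token width at `D_MODEL`, which is the convention used by the original Transformer.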

2.2.3. Two-Tower Structure

- (i) Temporal-Wise Encoder

- (ii) Channel-Wise Encoder
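In a typical two-tower design, the temporal-wise and channel-wise encoders differ mainly in what they treat as a token. The sketch below assumes single-head scaled dot-product self-attention with Q = K = V = input (no learned projections, multiple heads, or feed-forward sublayers): the temporal-wise tower attends across time steps, while the channel-wise tower transposes the input so that each channel's whole sequence becomes one token. It illustrates the general technique and is not the BERTtery implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention with Q = K = V = tokens."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)
        # Each output is a convex combination of the input tokens.
        out.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return out


def temporal_tower(x: Matrix) -> Matrix:
    """x is [time_steps][channels]; each time step is one token."""
    return self_attention(x)


def channel_tower(x: Matrix) -> Matrix:
    """Each channel's full time series is one token, so attention mixes channels."""
    return self_attention(transpose(x))
```

With an input of T time steps and C channels, the temporal tower returns a T × C result and the channel tower a C × T result, which is why the two outputs are usually flattened before being combined.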

2.2.4. Gating Mechanism
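One common way to combine the outputs of a temporal-wise and a channel-wise encoder is a learned softmax gate, as used in Gated Transformer Networks for multivariate time-series classification. The sketch below assumes that design: both tower outputs are flattened, a linear layer scores the concatenated features, and a two-way softmax weights each tower before concatenation. The parameters `w` and `b` are hypothetical placeholders for learned weights, and this is not confirmed to be the gate used in BERTtery.

```python
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def flatten(m: Matrix) -> List[float]:
    """Row-major flattening of a tower output."""
    return [v for row in m for v in row]


def gate_fuse(
    temporal_out: Matrix,
    channel_out: Matrix,
    w: Sequence[Sequence[float]],
    b: Sequence[float],
) -> Tuple[List[float], Tuple[float, float]]:
    """Fuse two tower outputs with a two-way softmax gate (hypothetical parameters).

    w has shape [2][n_t + n_c] and b has length 2, where n_t and n_c are the
    flattened sizes of the temporal and channel tower outputs.
    """
    h_t, h_c = flatten(temporal_out), flatten(channel_out)
    h = h_t + h_c  # concatenated features used to score the two towers
    if len(w) != 2 or len(b) != 2 or any(len(row) != len(h) for row in w):
        raise ValueError("w must be [2][len(h)] and b must have length 2")
    scores = [sum(wi * hi for wi, hi in zip(row, h)) + bi for row, bi in zip(w, b)]
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    g_t, g_c = exps[0] / total, exps[1] / total
    fused = [g_t * v for v in h_t] + [g_c * v for v in h_c]
    return fused, (g_t, g_c)
```

Because the two gate values sum to one, the model can learn to lean on whichever tower is more informative for a given input window.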

2.2.5. Hyperparameter Determination

- (i) The model dimension of both the channel-wise and temporal-wise encoders was set to 64, allowing them to capture rich feature information.

- (ii) We used four layers in each of the channel-wise and temporal-wise encoders and a batch size of 384, balancing learning capability against computational cost.

- (iii) The multi-head attention in each layer used eight heads, allowing the model to attend to multiple input features simultaneously.

- (iv) We trained for 1300 epochs to ensure thorough learning.

- (v) A dropout rate of 0.1 was applied as regularization to prevent the model from overfitting.

- (vi) We employed the Adam optimizer for loss minimization, with the initial learning rate set at 2 for faster convergence.

- (vii) Gradient clipping with a value of 1 was used to keep gradient values from becoming too large (the exploding-gradient problem).

- (viii) A weight decay rate of 0.0001 was chosen to provide additional regularization.

- (ix) Batch normalization was used to accelerate learning and stabilize training.
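The hyperparameters listed above can be collected in a small configuration object, sketched here with the Python standard library. The class name `BERTteryConfig` is hypothetical, the learning rate is left out (it would be passed to the optimizer in the training loop), and "gradient clipping with a value of 1" is interpreted as clipping by global L2 norm, the behavior of PyTorch's `clip_grad_norm_`; the text does not say whether norm or value clipping was used.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class BERTteryConfig:
    """Hyperparameters from the list above (class name is hypothetical)."""
    d_model: int = 64          # model dimension of both encoders
    num_layers: int = 4        # layers per encoder
    num_heads: int = 8         # attention heads per layer
    batch_size: int = 384
    epochs: int = 1300
    dropout: float = 0.1
    grad_clip: float = 1.0     # interpreted here as a global-norm threshold
    weight_decay: float = 1e-4

    def __post_init__(self) -> None:
        # Multi-head attention splits d_model evenly across the heads.
        if self.d_model % self.num_heads != 0:
            raise ValueError("d_model must be divisible by num_heads")

    @property
    def head_dim(self) -> int:
        return self.d_model // self.num_heads


def clip_by_global_norm(grads: Sequence[float], max_norm: float) -> List[float]:
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]
```

With `d_model = 64` and eight heads, each head works on an 8-dimensional subspace; the `__post_init__` check catches head counts that would not divide the model dimension evenly.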

3. Results

3.1. Model Performance

3.1.1. Cell Level SOC Estimation at Dynamic Temperatures

3.1.2. Cell Level SOC Estimation at Different Aging Conditions

3.1.3. SOC Estimation at Pack Level

3.2. Model Training and Evaluation

3.2.1. Loss Function

3.2.2. Evaluation Metrics

3.3. Model Development and Applications

4. Discussion and Outlook

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AKF | Adaptive Kalman filter |
| APE | Average percentage error |
| BERTtery | Bidirectional encoder representations from transformers for batteries |
| CNN | Convolutional neural network |
| DFFNN | Deep feed-forward neural network |
| ECM | Equivalent circuit model |
| EVs | Electric vehicles |
| GRU | Gated recurrent unit |
| LAM | Loss of active material |
| LLI | Loss of lithium inventory |
| LSTM | Long short-term memory |
| MAE | Maximum absolute error |
| MCU | Microcontroller unit |
| MSE | Mean squared error |
| OCV | Open circuit voltage |
| OTA | Over-the-air |
| P2D | Pseudo-two-dimensional |
| PBM | Physics-based model |
| PINNs | Physics-informed neural networks |
| RMSE | Root mean square error |
| RNNs | Recurrent neural networks |
| SAAS | Software as a service |
| SOC | State of charge |
| SOH | State of health |
| SOS | State of safety |
| SPM | Single particle model |


| Methods | Advantages | Disadvantages |
|---|---|---|
| Ampere-hour Counting | Low computational complexity, straightforward method | Susceptible to errors, depends heavily on initial SOC |
| Open Circuit Voltage | Simple, easy to implement | Not suitable for real-time SOC, requires resting state |
| Model-Based Estimation | Can be used for online applications, low computational demand | Limited accuracy, requires careful parameterization |
| Physics-Informed Methods | Provides insights into the internal battery dynamics | Complex equations, high computational cost |
| Filter-based Methods | Capable of handling noise and estimation uncertainty | Requires accurate system model, might be computationally heavy |
| Machine Learning | Can handle complex relationships, potential for high accuracy | Needs a large amount of data, requires training phase |
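The dependence of Ampere-hour counting on the initial SOC, listed in the table above, follows directly from its update rule SOC(t) = SOC(0) − (1/(3600·Q)) ∫ I dt, where Q is the capacity in Ah. The sketch below approximates the integral with a rectangle sum at a fixed sample period; SOC is a fraction in [0, 1], and the sign convention (discharge current positive) is an assumption.

```python
from typing import Sequence


def coulomb_count(soc0: float, currents_a: Sequence[float], dt_s: float, capacity_ah: float) -> float:
    """Ampere-hour (Coulomb) counting with a fixed sample period.

    Discharge current is taken as positive (assumed sign convention).
    Any error in soc0 is carried forward unchanged, because the update
    only adds the integrated current to the starting value.
    """
    if capacity_ah <= 0:
        raise ValueError("capacity must be positive")
    charge_as = sum(i * dt_s for i in currents_a)  # ampere-seconds removed
    return soc0 - charge_as / (3600.0 * capacity_ah)


# A 105 Ah cell (one of the Group A capacities) discharged at 105 A for
# 30 minutes, sampled once per second.
currents = [105.0] * 1800
print(coulomb_count(1.0, currents, 1.0, 105.0))  # correct starting SOC
print(coulomb_count(0.9, currents, 1.0, 105.0))  # starting SOC 0.1 too low
```

Both runs subtract the same 0.5 of capacity, so the 0.1 error in the starting value appears unchanged in the result; current-sensor bias would add a further error that grows with time.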

| Datasets | Entity | Cell Specification | SOH | Operating Temperature Window |
|---|---|---|---|---|
| Group A (Cell level) | 5 large-scale NMC cells | 105 Ah, 115 Ah and 135 Ah | 100%, 90% and 80% | −5 °C to 40 °C |
| Group B (Pack level) | 1 battery pack | 92 NMC cells in series | 8 consecutive months of service time in an EV | 10 °C to 35 °C |

| Datasets | RMSE | APE | MAE | Operating Conditions |
|---|---|---|---|---|
| Cell_1 | 0.4857 | 0.59% | 1.6507% | dynamic temperatures −4 °C to 4 °C |
| Cell_2 | 0.4356 | 0.71% | 1.3208% | dynamic temperatures 0 °C to 35 °C |
| Cell_3 | 0.4047 | 0.67% | 1.1275% | aging conditions, 100% SOH |
| Cell_4 | 0.4046 | 0.60% | 0.9461% | aging conditions, 90% SOH |
| Cell_5 | 0.4218 | 0.41% | 1.0836% | aging conditions, 80% SOH |
| Battery pack, Cell_V_max | 0.4033 | 0.95% | 1.4876% | Pack level, 20 °C to 25 °C, ~97.5% SOH |
| Battery pack, Cell_V_min | 0.4497 | 0.88% | 1.7525% | Pack level, 20 °C to 25 °C, ~97.5% SOH |
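The three error metrics in the results table can be computed as sketched below. MAE follows this document's abbreviation list (maximum absolute error). The exact APE formula is not stated in this section, so the sketch assumes a mean relative error in percent; SOC is assumed to be expressed in percent, and samples whose true SOC is zero are skipped in the APE term to avoid division by zero.

```python
import math
from typing import Sequence, Tuple


def soc_metrics(true_soc: Sequence[float], pred_soc: Sequence[float]) -> Tuple[float, float, float]:
    """Return (RMSE, APE in %, MAE) for SOC values given in percent.

    RMSE: root mean square error.
    APE:  assumed mean of |error| / |true| * 100 (zero-SOC samples skipped).
    MAE:  maximum absolute error, as defined in the abbreviation list.
    """
    if len(true_soc) != len(pred_soc) or not true_soc:
        raise ValueError("inputs must be non-empty and of equal length")
    errors = [p - t for t, p in zip(true_soc, pred_soc)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    rel = [abs(e) / abs(t) * 100.0 for e, t in zip(errors, true_soc) if t != 0]
    ape = sum(rel) / len(rel) if rel else float("nan")
    mae = max(abs(e) for e in errors)
    return rmse, ape, mae


rmse, ape, mae = soc_metrics([50.0, 80.0, 20.0], [51.0, 79.0, 20.5])
print(f"RMSE={rmse:.4f}  APE={ape:.2f}%  MAE={mae:.4f}")
```

Because MAE here is a maximum rather than a mean, it is always at least as large as the RMSE, which is consistent with the ordering of the two columns in the table.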

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shi, D.; Zhao, J.; Wang, Z.; Zhao, H.; Wang, J.; Lian, Y.; Burke, A.F. Spatial-Temporal Self-Attention Transformer Networks for Battery State of Charge Estimation. Electronics 2023, 12, 2598. https://doi.org/10.3390/electronics12122598
