Double Quantification of Template and Network for Palmprint Recognition

Abstract

1. Introduction

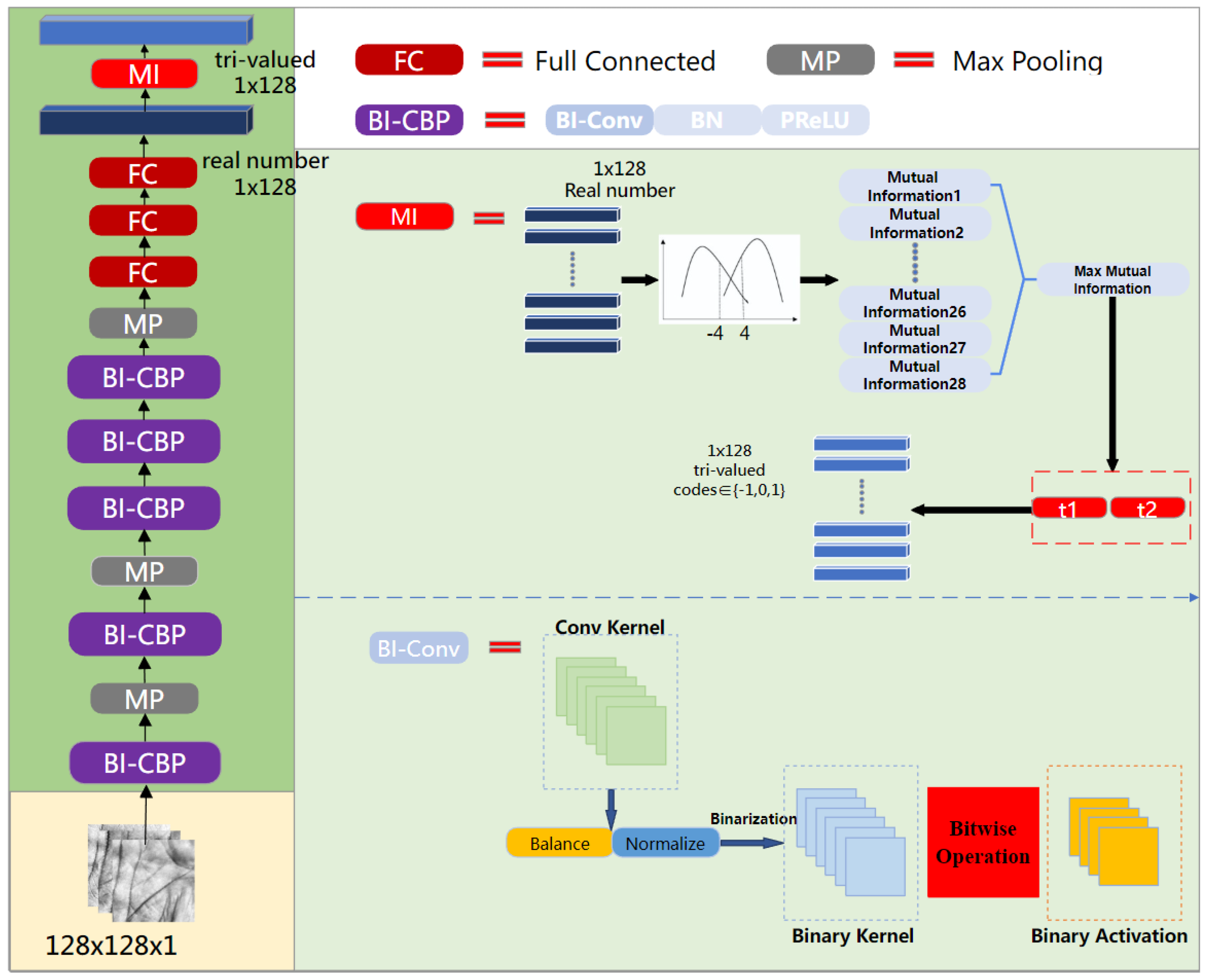

- The proposed B-DHN has two advantages. First, it runs much faster than a traditional full-precision DHN. Second, binarizing the network compresses it and reduces model storage. B-DHN is therefore advantageous in both speed and storage;

- To improve the recognition accuracy of B-DHN, the weights are balanced and standardized before binarization, which maximizes information entropy and minimizes quantization error. This reduces the information lost when the parameters are binarized in forward propagation. Binarization is non-differentiable (its derivative is zero almost everywhere), so the gradient of B-DHN cannot be computed directly. An approximation function therefore replaces the gradient in backward propagation to minimize information loss. It ensures sufficient updates at the beginning of training and accurate gradients at the end;

- To reduce the accuracy degradation caused by compressing the DHN, the outputs of the DHN are quantized into tri-valued hash codes, which serve as the palmprint templates. Mutual information is used to alleviate the ambiguity of binarizing the outputs in Hamming space. A tri-valued Hamming distance based on Kleene logic measures the dissimilarity between palmprint templates. Values in ambiguous intervals therefore receive a small weight, which improves recognition accuracy.
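The speed advantage claimed in the first contribution comes from a standard property of binary networks. When both weights and activations are restricted to {−1, +1}, each multiply-accumulate reduces to bitwise operations. The sketch below is a generic illustration rather than the paper's implementation. It assumes an encoding in which bit 1 stands for +1 and bit 0 for −1, so that a length-n dot product equals 2·popcount(XNOR(a, b)) − n. The function names are hypothetical.

```python
def pack(signs):
    """Pack a sequence of +1/-1 values into an int: bit i is 1 when signs[i] == +1."""
    bits = 0
    for i, s in enumerate(signs):
        if s == 1:
            bits |= 1 << i
    return bits


def binary_dot(a_bits, b_bits, n):
    """Dot product of two packed +/-1 vectors of length n using XNOR and popcount."""
    mask = (1 << n) - 1
    xnor = ~(a_bits ^ b_bits) & mask  # bit is 1 where the two signs agree
    agree = bin(xnor).count("1")      # popcount
    return 2 * agree - n              # (#agreements) - (#disagreements)


def binary_conv1d(x, w):
    """'Valid' 1-D binary cross-correlation of +/-1 sequences, one XNOR-popcount per window."""
    n = len(w)
    w_bits = pack(w)
    return [binary_dot(pack(x[i:i + n]), w_bits, n) for i in range(len(x) - n + 1)]
```

For example, `binary_conv1d([1, -1, 1, 1, -1], [1, -1, 1])` returns `[3, -1, -1]`, the same result as ordinary multiply-and-sum. A 2-D convolution applies the same dot product to each flattened receptive field.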

2. Related Works

2.1. Palmprint Recognition

2.1.1. Local Texture Coding Methods

2.1.2. Deep Learning-Based Methods

2.2. Deep Hash Network

3. Methodology

3.1. Binary Deep Hash Networks

3.1.1. Binary Convolution and Approximation Function

1. Binary convolution

2. Approximation function
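The introduction describes the forward and backward passes behind these two items. The minimal sketch below illustrates them in the spirit of IR-Net [2] and is not the authors' exact formulation. In the forward pass, weights are balanced (mean subtracted) and standardized (divided by the standard deviation) before taking the sign. In the backward pass, the derivative of k·tanh(t·x) stands in for the sign's gradient, with a sharpness t that grows during training. The schedule constants `t_min` and `t_max`, the scale `k = max(1/t, 1)`, and all function names are assumptions.

```python
import math
from statistics import fmean, pstdev


def balance_and_standardize(weights):
    """Subtract the mean (balancing) and divide by the standard deviation (standardization)."""
    mu = fmean(weights)
    sigma = pstdev(weights) or 1.0  # avoid dividing by zero for constant weights
    return [(w - mu) / sigma for w in weights]


def binarize_weights(weights):
    """Forward pass: sign of the balanced, standardized weights (zero maps to +1)."""
    return [1 if w >= 0 else -1 for w in balance_and_standardize(weights)]


def sharpness(step, total_steps, t_min=0.1, t_max=10.0):
    """Hypothetical schedule: t grows geometrically from t_min to t_max over training."""
    return t_min * (t_max / t_min) ** (step / total_steps)


def approx_sign_grad(x, t):
    """Backward pass: derivative of k * tanh(t * x) with k = max(1 / t, 1), used instead of sign'(x)."""
    k = max(1.0 / t, 1.0)
    return k * t * (1.0 - math.tanh(t * x) ** 2)
```

Take the weights `[0.5, 0.7, 0.9, 1.1]` as an example. Their raw signs are all +1, so the binarized weights carry no information. After balancing, the signs become `[-1, -1, 1, 1]`, an even split. Dividing by the standard deviation does not change the signs; it only normalizes the weight scale, and any subsequent scaling factor is omitted here.

Early in training, t is small and the surrogate gradient is broad, so most weights receive updates. As t grows, the gradient concentrates near zero and approaches the shape of the sign function.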

3.1.2. Loss Function of B-DHN

1. Distance loss function

2. Quantization loss function
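The exact formulas of these two losses are not reproduced in this section. As a hedged illustration only, deep hashing networks commonly pair two terms. A contrastive distance loss pulls genuine pairs together and pushes impostor pairs beyond a margin. A quantization loss drives each real-valued output toward ±1 so that binarization loses little information. The squared Euclidean distance, the margin `margin`, the weight `lam` and the function names are assumptions.

```python
def distance_loss(h1, h2, same, margin=2.0):
    """Contrastive loss on the squared Euclidean distance between two real-valued codes."""
    d = sum((a - b) ** 2 for a, b in zip(h1, h2))
    if same:
        return 0.5 * d                    # genuine pair: penalize any distance
    return 0.5 * max(margin - d, 0.0)     # impostor pair: penalize only if closer than margin


def quantization_loss(h):
    """Penalize the gap between |h_i| and 1 so that sign(h) is a faithful code."""
    return sum((abs(v) - 1.0) ** 2 for v in h)


def total_loss(h1, h2, same, margin=2.0, lam=0.1):
    """Hypothetical weighted sum of the two terms for one pair of network outputs."""
    return distance_loss(h1, h2, same, margin) + lam * (quantization_loss(h1) + quantization_loss(h2))
```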

3.2. Tri-Valued Hash Codes
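As outlined in the introduction, tri-valued hash codes quantize each network output into {−1, 0, +1}. Outputs close to zero, whose sign is ambiguous, map to 0. The minimal sketch below uses a symmetric threshold `t`, which is a hypothetical parameter. It does not reproduce how the paper determines the ambiguous interval (for example, via mutual information).

```python
def tri_quantize(outputs, t=0.5):
    """Map real-valued outputs to {-1, 0, +1}; values inside (-t, t) are treated as ambiguous."""
    if t < 0:
        raise ValueError("threshold must be non-negative")
    code = []
    for v in outputs:
        if v >= t:
            code.append(1)
        elif v <= -t:
            code.append(-1)
        else:
            code.append(0)  # too close to zero to trust the sign
    return code
```

With `t = 0`, the function reduces to ordinary sign binarization (zero mapped to +1).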

4. Experimental Results and Discussions

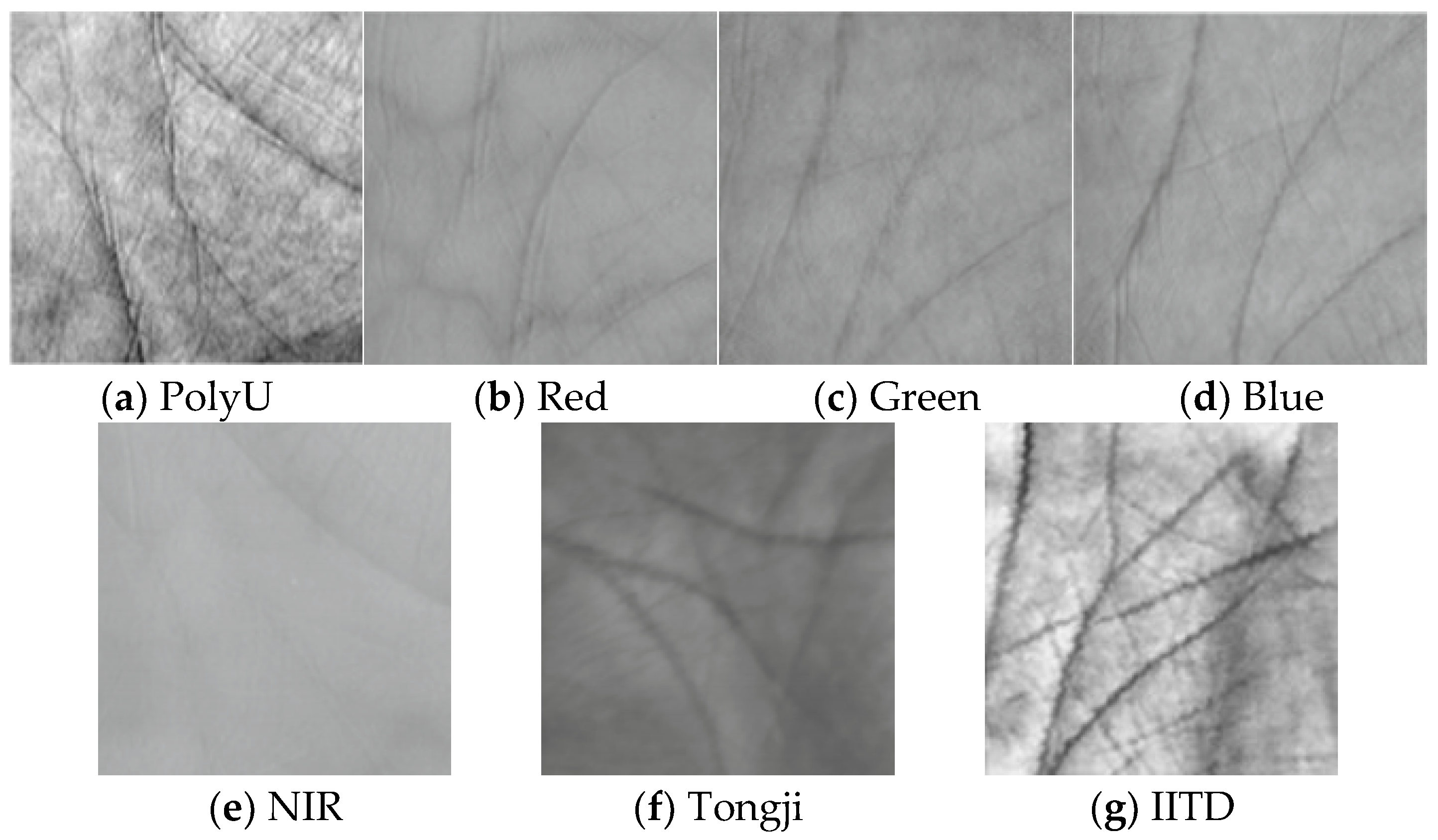

4.1. Dataset and Experimental Environment Setup

- PolyU database [33]. The database contains 7752 images of 386 palms, with around 20 images per palm, all acquired with a contact device. There are 30,042,876 matchings in total, including 74,068 genuine matchings and 29,968,808 impostor matchings;

- Multispectral database [34]. The images are acquired with contact devices under different spectral bands. Each spectral database contains 6000 palm images. For each spectral database, there are 17,997,000 matchings in total, including 33,000 genuine matchings and 17,964,000 impostor matchings;

- IITD database [35]. It contains 2601 hand images captured with a contactless device from 230 individuals (460 palms), with around five images per palm. Images acquired contactlessly usually contain stronger noise;

- Tongji-print database [36]. It consists of 12,000 images of 300 individuals (600 palms) acquired with a contactless device in two sessions, with 10 images of each palm acquired per session. There are 17,997,000 matchings in total, including 27,000 genuine matchings and 17,970,000 impostor matchings.
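Each matching count follows from comparing every image with every other image once. A set of n images gives n(n − 1)/2 matchings, of which the genuine ones are the same-palm pairs. The helper below (a hypothetical name) reproduces these counts from the number of images per palm.

```python
def matching_counts(images_per_class):
    """Return (total, genuine, impostor) matchings when every image pair is compared once."""
    total_images = sum(images_per_class)
    total = total_images * (total_images - 1) // 2
    genuine = sum(k * (k - 1) // 2 for k in images_per_class)  # same-palm pairs
    return total, genuine, total - genuine
```

For example, 7752 images give 30,042,876 matchings in total. Likewise, `matching_counts([12] * 500)` (500 palms with 12 images each, i.e., one spectral band of the Multispectral database) returns `(17997000, 33000, 17964000)`.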

4.2. Ablation Experiments

1. Balanced and normalized network parameters

2. Balanced network output

3. Tri-valued quantization

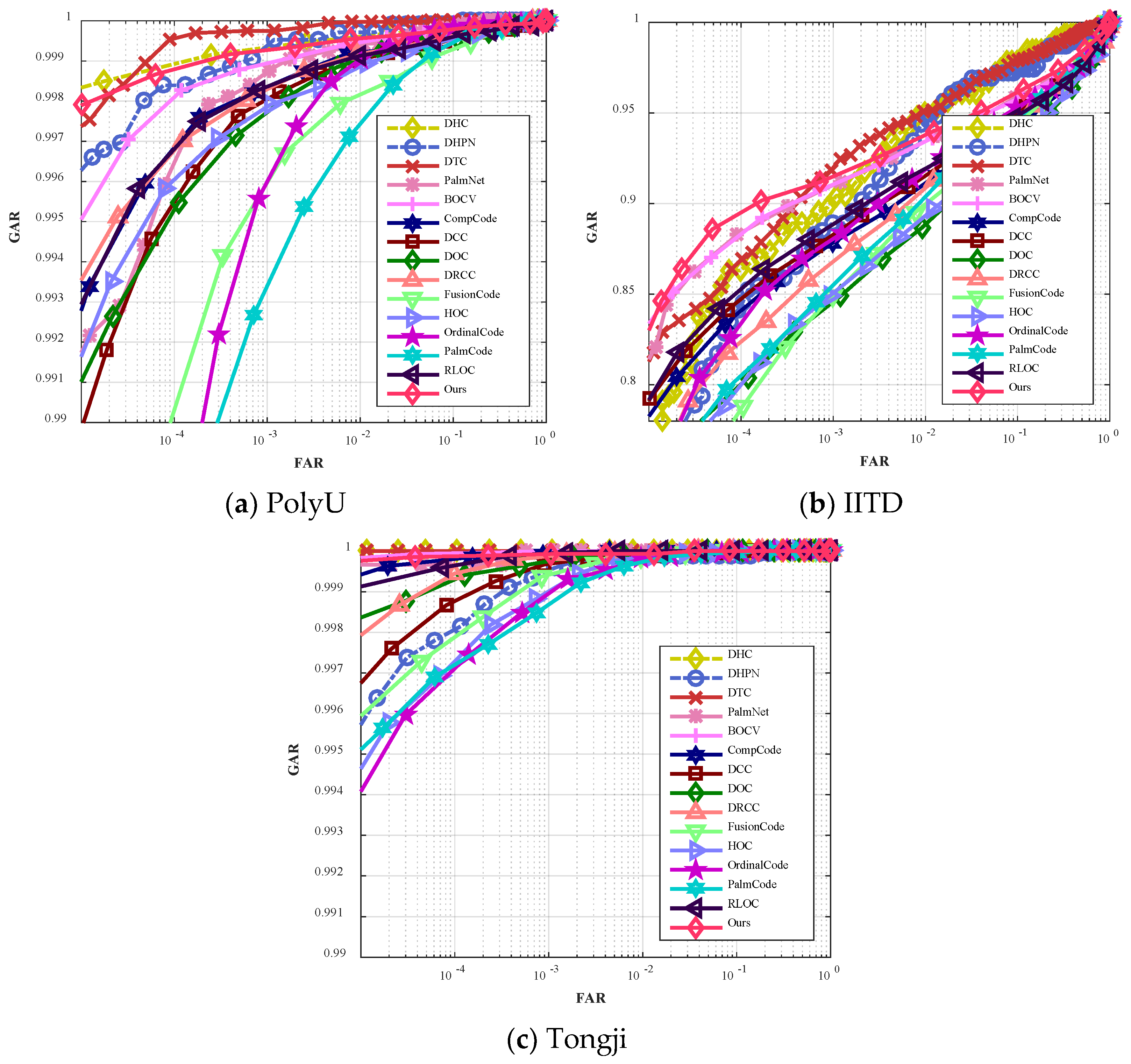

4.3. Comparison Experiments

5. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Jia, W.; Huang, S.; Wang, B.; Fei, L.; Zhao, Y.; Min, H. Deep Multi-loss Hashing Network for Palmprint Retrieval and Recognition. In Proceedings of the 2021 IEEE International Joint Conference on Biometrics (IJCB), Shenzhen, China, 4–7 August 2021.

2. Qin, H.; Gong, R.; Liu, X.; Shen, M.; Wei, Z.; Yu, F.; Song, J. Forward and Backward Information Retention for Accurate Binary Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

3. Lin, Q.; Leng, L.; Khan, M.K. Deep Ternary Hashing Code for Palmprint Retrieval and Recognition. In Proceedings of the 2022 The 6th International Conference on Advances in Artificial Intelligence, Birmingham, UK, 21–23 October 2022.

4. Zhang, D.; Kong, W.K.; You, J.; Wong, M. Online Palmprint Identification. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 25, 1041–1050.

5. Kong, A.W.K.; Zhang, D. Competitive Coding Scheme for Palmprint Verification. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 26 August 2004.

6. Jia, W.; Huang, D.-S.; Zhang, D. Palmprint verification based on robust line orientation code. Pattern Recognit. 2008, 41, 1504–1513.

7. Leng, L.; Zhang, J. Palmhash Code vs. Palmphasor Code. Neurocomputing 2013, 108, 1–12.

8. Leng, L.; Li, M.; Kim, C.; Bi, X. Dual-source discrimination power analysis for multi-instance contactless palmprint recognition. Multimedia Tools Appl. 2015, 76, 333–354.

9. Fei, L.; Xu, Y.; Tang, W.; Zhang, D. Double-orientation code and nonlinear matching scheme for palmprint recognition. Pattern Recognit. 2016, 49, 89–101.

10. Guo, Z.; Zhang, D.; Zhang, L.; Zuo, W. Palmprint verification using binary orientation co-occurrence vector. Pattern Recognit. Lett. 2009, 30, 1219–1227.

11. Xu, Y.; Fei, L.; Wen, J.; Zhang, D. Discriminative and Robust Competitive Code for Palmprint Recognition. IEEE Trans. Syst. Man, Cybern. Syst. 2016, 48, 232–241.

12. Leng, L.; Yang, Z.; Min, W. Democratic voting downsampling for coding-based palmprint recognition. IET Biom. 2020, 9, 290–296.

13. Yang, Z.; Leng, L.; Min, W. Extreme Downsampling and Joint Feature for Coding-Based Palmprint Recognition. IEEE Trans. Instrum. Meas. 2020, 70, 1–12.

14. Yang, Z.; Leng, L.; Min, W. Downsampling in uniformly-spaced windows for coding-based Palmprint recognition. Multimedia Tools Appl. 2023, 1–16.

15. Svoboda, J.; Masci, J.; Bronstein, M.M. Palmprint Recognition Via Discriminative Index Learning. In Proceedings of the 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016.

16. Wen, Y.; Zhang, K.; Li, Z.; Qiao, A. Discriminative Feature Learning Approach for Deep Face Recognition. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016.

17. Zhong, D.; Zhu, J. Centralized Large Margin Cosine Loss for Open-Set Deep Palmprint Recognition. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 1559–1568.

18. Matkowski, W.M.; Chai, T.; Kong, A.W.K. Palmprint Recognition in Uncontrolled and Uncooperative Environment. IEEE Trans. Inf. Forensics Secur. 2019, 15, 1601–1615.

19. Chai, T.; Prasad, S.; Wang, S. Boosting palmprint identification with gender information using DeepNet. Futur. Gener. Comput. Syst. 2019, 99, 41–53.

20. Xu, H.; Leng, L.; Yang, Z.; Teoh, A.B.J.; Jin, Z. Multi-task Pre-training with Soft Biometrics for Transfer-learning Palmprint Recognition. Neural Process. Lett. 2022, 1–18.

21. Du, X.; Zhong, D.; Shao, H. Cross-Domain Palmprint Recognition via Regularized Adversarial Domain Adaptive Hashing. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 2372–2385.

22. Liu, Y.; Kumar, A. Contactless Palmprint Identification Using Deeply Learned Residual Features. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 172–181.

23. Chen, Y.C.; Lim, M.H.; Yuen, P.C.; Lai, J. Discriminant Spectral Hashing for Compact Palmprint Representation. In Proceedings of the Biometric Recognition: 8th Chinese Conference, Jinan, China, 16–17 November 2013.

24. Cheng, J.; Sun, Q.; Zhang, J.; Zhang, Q. Supervised Hashing with Deep Convolutional Features for Palmprint Recognition. In Proceedings of the Biometric Recognition: 12th Chinese Conference, Shenzhen, China, 28–29 October 2017.

25. Zhong, D.; Shao, H.; Du, X. A Hand-Based Multi-Biometrics via Deep Hashing Network and Biometric Graph Matching. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3140–3150.

26. Zhong, D.; Liu, S.; Wang, W.; Du, X. Palm Vein Recognition with Deep Hashing Network. In Proceedings of the Pattern Recognition and Computer Vision: First Chinese Conference, Guangzhou, China, 23–26 November 2018.

27. Li, D.; Gong, Y.; Cheng, D.; Shi, W.; Tao, X.; Chang, X. Consistency-Preserving deep hashing for fast person re-identification. Pattern Recognit. 2019, 94, 207–217.

28. Liu, Y.; Song, J.; Zhou, K.; Yan, L.; Liu, L.; Zou, F.; Shao, L. Deep Self-Taught Hashing for Image Retrieval. IEEE Trans. Cybern. 2018, 49, 2229–2241.

29. Wu, T.; Leng, L.; Khan, M.K.; Khan, F.A. Palmprint-Palmvein Fusion Recognition Based on Deep Hashing Network. IEEE Access 2021, 9, 135816–135827.

30. Liu, C.; Fan, L.; Ng, K.W.; Jin, Y.; Ju, C.; Zhang, T.; Chan, C.S.; Yang, Q. Ternary Hashing. arXiv 2021, arXiv:2103.09173.

31. Fitting, M. Kleene’s Logic, Generalized. J. Log. Comput. 1991, 1, 797–810.

32. Leng, L.; Teoh, A.B.J. Alignment-free row-co-occurrence cancelable palmprint Fuzzy Vault. Pattern Recognit. 2015, 48, 2290–2303.

33. PolyU Palmprint Database. Available online: https://www.comp.polyu.edu.hk/~biometrics (accessed on 11 July 2021).

34. Zhang, L.; Cheng, Z.; Shen, Y.; Wang, D. Palmprint and Palmvein Recognition Based on DCNN and A New Large-Scale Contactless Palmvein Dataset. Symmetry 2018, 10, 78.

35. IITD Touchless Palmprint Database. Available online: http://www4.comp.polyu.edu.hk/~csajaykr/IITD/Database_Palm.htm (accessed on 11 July 2021).

36. Tongji Palmprint Image Database. Available online: https://cslinzhang.github.io/ContactlessPalm/ (accessed on 11 July 2021).

37. Shao, H.; Zhong, D.; Du, X. Deep Distillation Hashing for Unconstrained Palmprint Recognition. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

38. Sun, Z.; Tan, T.; Wang, Y.; Li, S.Z. Ordinal Palmprint Represention for Personal Identification. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005.

39. Kong, A.; Zhang, D.; Kamel, M. Palmprint identification using feature-level fusion. Pattern Recognit. 2006, 39, 478–487.

40. Fei, L.; Xu, Y.; Zhang, D. Half-orientation extraction of palmprint features. Pattern Recognit. Lett. 2016, 69, 35–41.

41. Genovese, A.; Piuri, V.; Plataniotis, K.N.; Scotti, F. PalmNet: Gabor-PCA Convolutional Networks for Touchless Palmprint Recognition. IEEE Trans. Inf. Forensics Secur. 2019, 14, 3160–3174.

| Layer | Configuration |
|---|---|
| Conv1 | Filter 16 × 3 × 3, st.4, pad 0, BN, PReLU |
| Max_pool | Filter 2 × 2, st.1, pad 0 |
| Conv2 | Filter 32 × 5 × 5, st.2, pad 2, BN, PReLU |
| Max_pool | Filter 2 × 2, st.1, pad 0 |
| Conv3 | Filter 64 × 3 × 3, st.1, pad 1, PReLU |
| Conv4 | Filter 64 × 3 × 3, st.1, pad 1, PReLU |
| Conv5 | Filter 128 × 3 × 3, st.1, pad 1, PReLU |
| Max_pool | Filter 2 × 2, st.1, pad 0 |
| Full6 | Length 2048 |
| Full7 | Length 2048 |
| Full8 | Length 128 |
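The configuration table lists kernel sizes, strides and padding, but not the input resolution. The sketch below traces the spatial size through the convolution and pooling layers with the standard formula ⌊(n + 2p − k)/s⌋ + 1. It assumes a hypothetical 128 × 128 input, so the flattened length it reports for Full6 holds only under that assumption. The layer list is a transcription of the table; the function names are hypothetical.

```python
# (name, output channels or None for pooling, kernel, stride, padding), transcribed from the table
LAYERS = [
    ("Conv1", 16, 3, 4, 0),
    ("Max_pool", None, 2, 1, 0),
    ("Conv2", 32, 5, 2, 2),
    ("Max_pool", None, 2, 1, 0),
    ("Conv3", 64, 3, 1, 1),
    ("Conv4", 64, 3, 1, 1),
    ("Conv5", 128, 3, 1, 1),
    ("Max_pool", None, 2, 1, 0),
]


def out_size(n, kernel, stride, padding):
    """Spatial output size of a convolution or pooling window on an n x n input."""
    return (n + 2 * padding - kernel) // stride + 1


def trace(size, channels=1):
    """Return per-layer (name, C, H, W) shapes and the flattened length fed to Full6."""
    shapes = []
    for name, out_ch, k, s, p in LAYERS:
        size = out_size(size, k, s, p)
        if out_ch is not None:  # pooling keeps the channel count
            channels = out_ch
        shapes.append((name, channels, size, size))
    return shapes, channels * size * size
```

With the assumed 128 × 128 input, Conv1 produces a 16 × 32 × 32 map and the last pooling layer a 128 × 14 × 14 map, giving a 25,088-dimensional input to Full6.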

| A | A ↔ B (B = −1) | A ↔ B (B = 0) | A ↔ B (B = 1) | ¬A |
|---|---|---|---|---|
| −1 | 1 | 0 | −1 | 1 |
| 0 | 0 | 0 | 0 | 0 |
| 1 | −1 | 0 | 1 | −1 |
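Read −1, 0 and 1 as false, unknown and true. Kleene's strong three-valued logic then gives ¬A = −A, A → B = max(¬A, B), and A ↔ B = min(A → B, B → A), which equals the product A·B for every entry of the table above.

A tri-valued Hamming distance can be built on this by scoring each position as (1 − (A ↔ B))/2. This scoring is an assumption rather than the paper's stated formula. Agreeing bits cost 0, disagreeing bits cost 1, and any position involving an ambiguous 0 costs 0.5, so ambiguous intervals weigh less than definite mismatches. The function names are hypothetical.

```python
def k_not(a):
    """Kleene negation on {-1, 0, 1}."""
    return -a


def k_implies(a, b):
    """Kleene implication: (not a) or b, with 'or' as max."""
    return max(k_not(a), b)


def k_iff(a, b):
    """Kleene equivalence: (a -> b) and (b -> a), with 'and' as min."""
    return min(k_implies(a, b), k_implies(b, a))


def tri_hamming(x, y):
    """Hypothetical tri-valued Hamming distance: sum of (1 - (x_i <-> y_i)) / 2."""
    if len(x) != len(y):
        raise ValueError("codes must have equal length")
    return sum((1 - k_iff(a, b)) / 2 for a, b in zip(x, y))
```

For binary codes (no zeros), `tri_hamming` reduces to the ordinary Hamming distance; for example, `tri_hamming([1, -1, 0, 1], [1, 1, 0, -1])` is 2.5.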

| Databases | PolyU | IITD | Multispectral | Tongji-Print |
|---|---|---|---|---|
| Collection | Touch | Touchless | Touchless | Touchless |
| Number of classes | 378 | 460 | 500 | 600 |
| Number of samples per class | 20 | 5 | 12 | 20 |
| Total number of samples | 7560 | 2300 | 24,000 | 12,000 |

| Balanced and Normalized Network Parameters | Balanced Network Output | Tri-Valued Quantization | EER (%) |
|---|---|---|---|
| - | - | - | 3.0431 |
| √ | - | - | 0.0913 |
| - | √ | √ | 1.8691 |
| √ | - | √ | 0.0763 |
| √ | √ | - | 0.0850 |
| √ | √ | √ | 0.0673 |

| Method | PolyU | IITD | Tongji |
|---|---|---|---|
| PalmCode | 0.3500 | 5.4500 | 0.1100 |
| OrdinalCode | 0.2300 | 5.5000 | 0.1600 |
| FusionCode | 0.2400 | 6.2000 | 0.0731 |
| CompCode | 0.1200 | 5.5000 | 0.1100 |
| RLOC | 0.1300 | 5.0000 | 0.0253 |
| HOC | 0.1600 | 6.5500 | 0.0954 |
| DOC | 0.1800 | 6.2000 | 0.0431 |
| DCC | 0.1500 | 5.4900 | 0.0506 |
| DRCC | 0.1800 | 5.4200 | 0.0308 |
| BOCV | 0.0856 | 4.5600 | 0.0056 |
| DHPN | 0.0456 | 3.7310 | 0.0694 |
| PalmNet | 0.1110 | 4.2040 | 0.0332 |
| DHC | 0.0513 | 3.1180 | 0.0001 |
| DTC | 0.0302 | 2.9270 | 0.0000 |
| Ours | 0.0673 | 3.7960 | 0.0075 |

| Method | Blue | Green | Red | NIR |
|---|---|---|---|---|
| PalmCode | 0.2800 | 0.2500 | 0.2300 | 0.2000 |
| OrdinalCode | 0.1600 | 0.1500 | 0.0720 | 0.1100 |
| FusionCode | 0.3100 | 0.1900 | 0.1200 | 0.1700 |
| CompCode | 0.0911 | 0.1100 | 0.0357 | 0.0579 |
| RLOC | 0.0799 | 0.0855 | 0.0443 | 0.0629 |
| HOC | 0.1800 | 0.1600 | 0.1000 | 0.0839 |
| DOC | 0.1300 | 0.1200 | 0.0584 | 0.0501 |
| DCC | 0.1100 | 0.0979 | 0.0450 | 0.0575 |
| DRCC | 0.1100 | 0.0927 | 0.0659 | 0.0563 |
| BOCV | 0.0358 | 0.0593 | 0.0241 | 0.0261 |
| DHPN | 0.0213 | 0.0352 | 0.0369 | 0.0020 |
| PalmNet | 0.0178 | 0.0087 | 0.0366 | 0.0871 |
| DHC | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| DTC | 0.0000 | 0.0000 | 0.0000 | 0.0000 |
| Ours | 0.0018 | 0.0003 | 0.0087 | 0.0159 |

| Method | Storage | Method | Storage |
|---|---|---|---|
| PalmCode | 2048 | HOC | 2048 |
| OrdinalCode | 3072 | DOC | 2048 |
| FusionCode | 2048 | DRCC | 2048 |
| CompCode | 3072 | BOCV | 6144 |
| RLOC | 6144 | Ours | 128 |

| Method | Bit-Width (W/A) | Operation Type | Params (MB) | FLOPs (M) * | MACC (M) |
|---|---|---|---|---|---|
| DHPN (Feature Extraction + PCA) | 32/32 | Float | 527.76 | 30,816.89 | 13,621.10 |
| DHN | 32/32 | Float | 213.49 | 176.58 | 88.29 |
| DTC (Network + Quantification) | 32/32 | Float | 213.49 | 176.59 | 88.29 |
| Ours | 1/1 | Bitwise | 6.67 | - | 88.29 |
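Two quick arithmetic checks support reading the table this way. First, the parameter-storage column is consistent with replacing 32-bit floating-point weights by 1-bit weights: dividing the DHN size by 32 gives the reported figure for the binarized network. Second, for DHN and DTC, the FLOPs column is about twice the MACC column. This matches the usual convention that one multiply-accumulate counts as two floating-point operations. The helper names are hypothetical.

```python
def binarized_size(full_precision_mb, full_bits=32, binary_bits=1):
    """Storage after replacing full_bits-wide weights with binary_bits-wide weights."""
    return full_precision_mb * binary_bits / full_bits


def flops_per_macc(flops_m, macc_m):
    """Ratio of reported FLOPs to multiply-accumulate operations."""
    return flops_m / macc_m


print(f"{binarized_size(213.49):.2f} MB")      # prints 6.67 MB, the 'Ours' entry
print(f"{flops_per_macc(176.58, 88.29):.2f}")  # prints 2.00 for DHN
```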

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lin, Q.; Leng, L.; Kim, C. Double Quantification of Template and Network for Palmprint Recognition. Electronics 2023, 12, 2455. https://doi.org/10.3390/electronics12112455

Lin Q, Leng L, Kim C. Double Quantification of Template and Network for Palmprint Recognition. Electronics. 2023; 12(11):2455. https://doi.org/10.3390/electronics12112455

Chicago/Turabian Style: Lin, Qizhou, Lu Leng, and Cheonshik Kim. 2023. "Double Quantification of Template and Network for Palmprint Recognition" Electronics 12, no. 11: 2455. https://doi.org/10.3390/electronics12112455

APA Style: Lin, Q., Leng, L., & Kim, C. (2023). Double Quantification of Template and Network for Palmprint Recognition. Electronics, 12(11), 2455. https://doi.org/10.3390/electronics12112455