Fine-Grained Image Retrieval via Object Localization

Abstract

1. Introduction

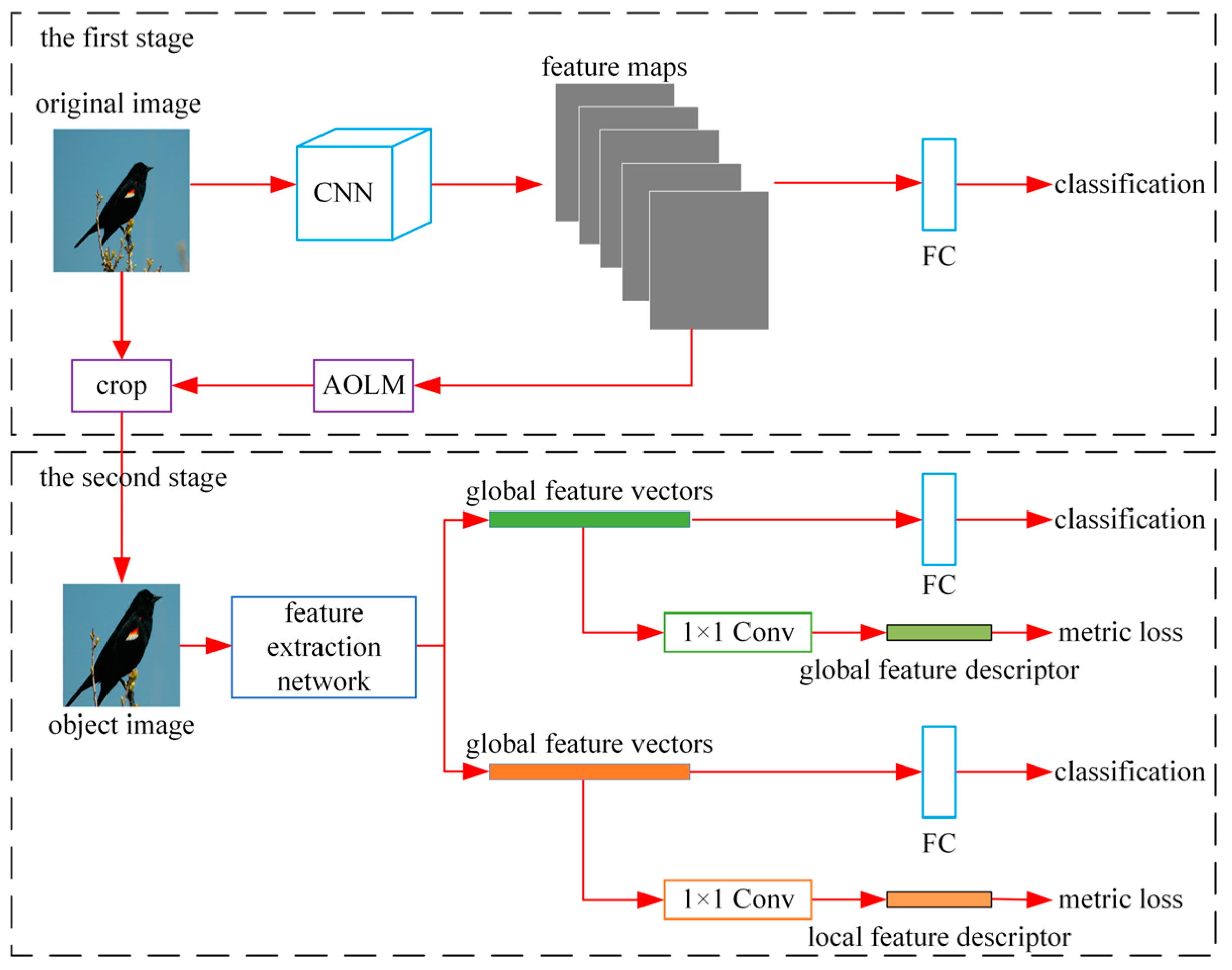

2. Method

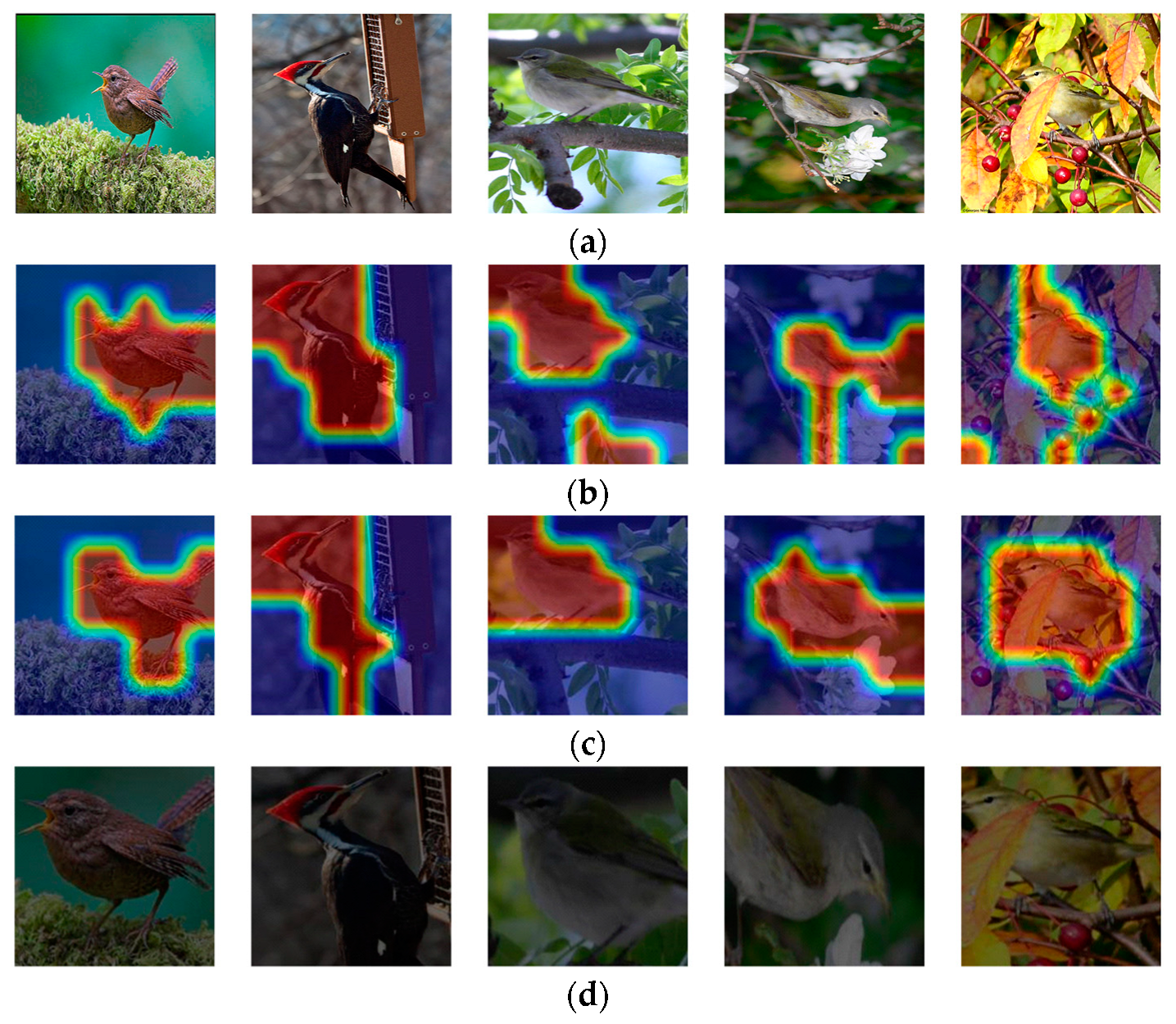

2.1. Design of the Object Localization Module

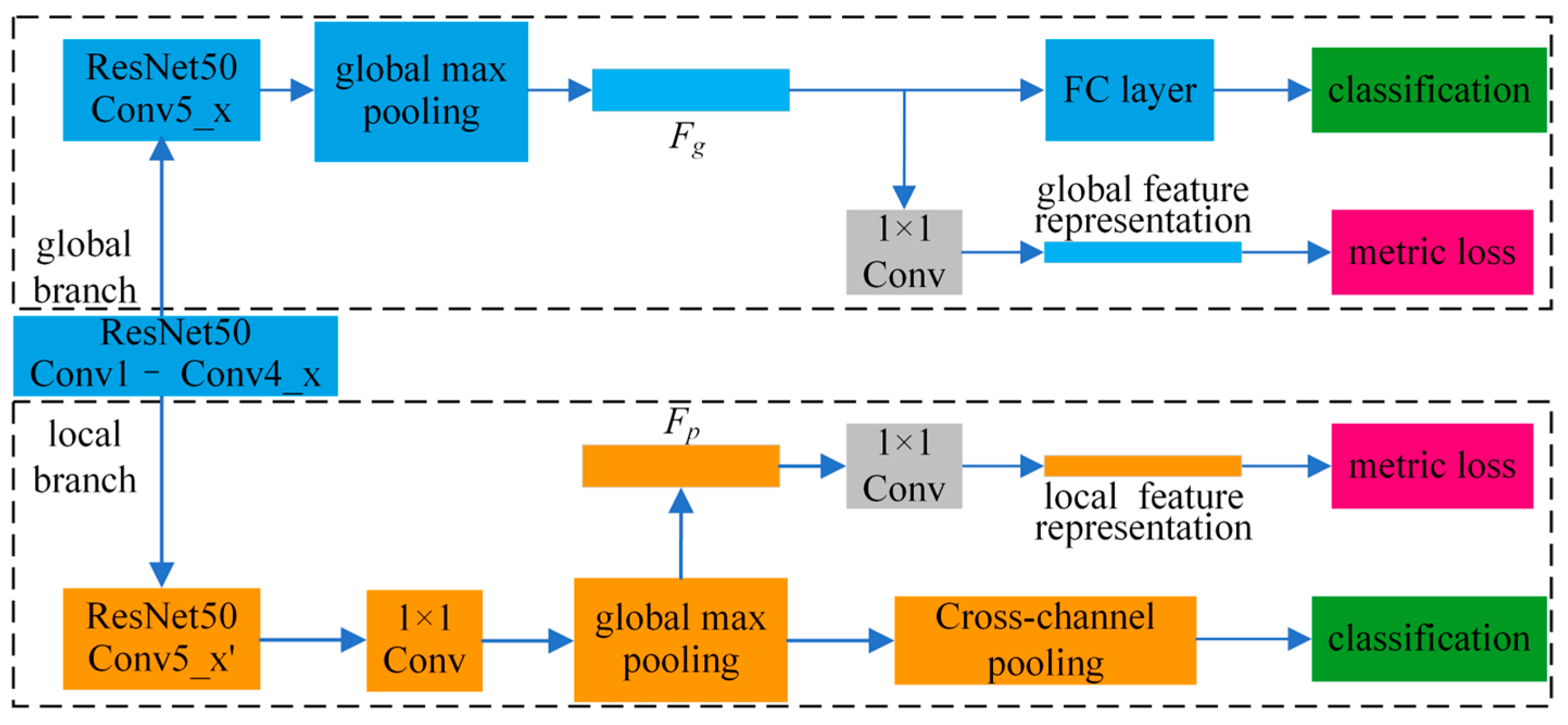

2.2. Design of the Networks for Extracting the Global and Local Features

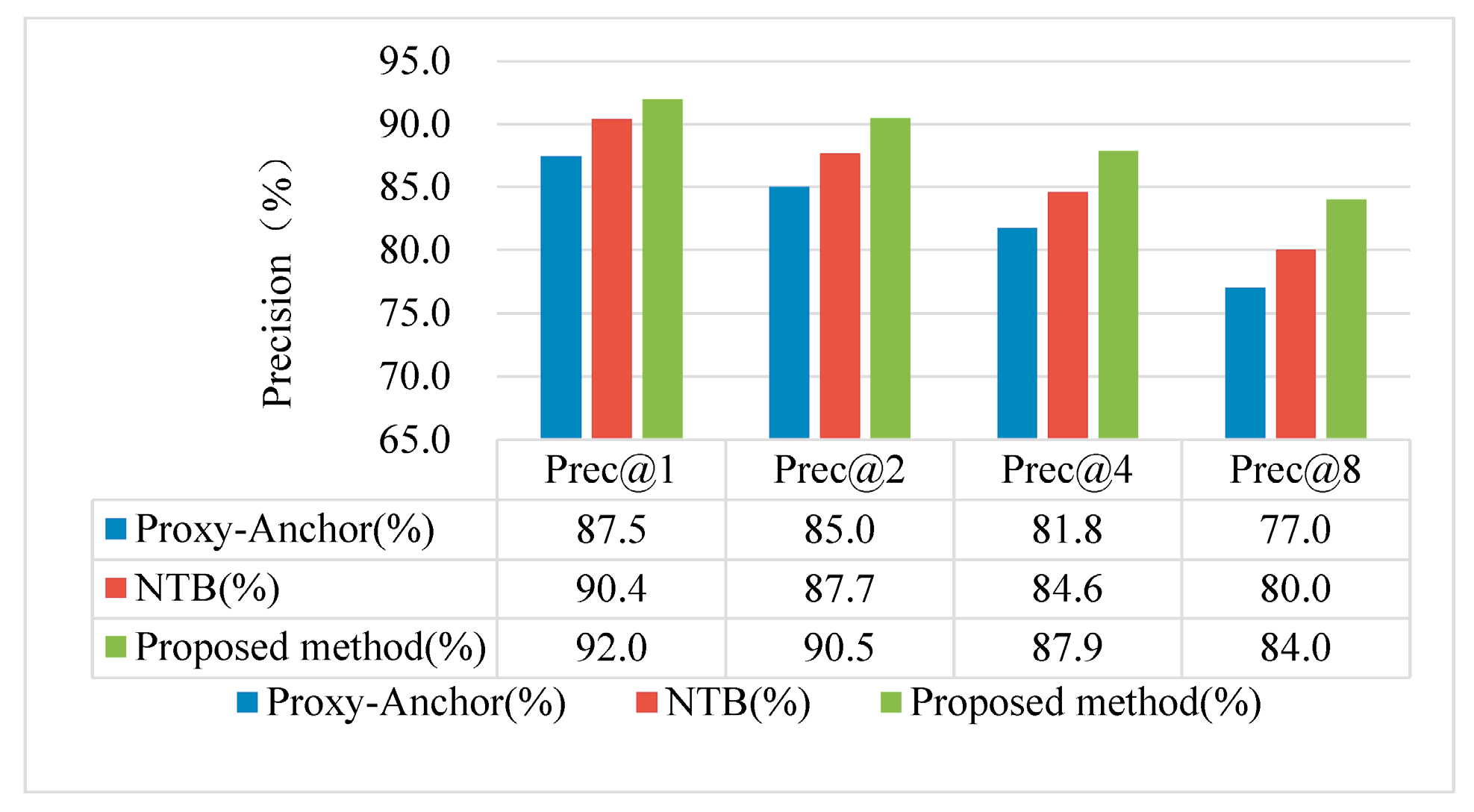

3. Results and Discussion

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Wei, X.; Song, Y.; Aodha, O.M.; Wu, J.; Peng, Y.; Tang, J.; Yang, J.; Belongie, S. Fine-grained image analysis with deep learning: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8927–8948.
- [2] Wei, X.; Xie, C.; Wu, J.; Shen, C. Mask-CNN: Localizing parts and selecting descriptors for fine-grained bird species categorization. Pattern Recognit. 2018, 76, 704–714.
- [3] Movshovitz-Attias, Y.; Toshev, A.; Leung, T.K.; Ioffe, S.; Singh, S. No Fuss Distance Metric Learning Using Proxies. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 360–368.
- [4] Opitz, M.; Waltner, G.; Possegger, H.; Bischof, H. Bier-Boosting Independent Embeddings Robustly. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5189–5198.
- [5] Xuan, H.; Souvenir, R.; Pless, R. Deep Randomized Ensembles for Metric Learning. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 723–734.
- [6] Akhmetzianov, A.V.; Kushner, A.G.; Lychagin, V.V. Multiphase Filtration in Anisotropic Porous Media. In Proceedings of the 2018 14th International Conference ‘Stability and Oscillations of Nonlinear Control Systems’ (STAB), Moscow, Russia, 30 May–1 June 2018; pp. 1–2.
- [7] Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–14.
- [8] Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going Deeper with Convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.
- [9] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
- [10] Babenko, A.; Slesarev, A.; Chigorin, A.; Lempitsky, V. Neural Codes for Image Retrieval. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 584–599.
- [11] Schroff, F.; Kalenichenko, D.; Philbin, J. FaceNet: A Unified Embedding for Face Recognition and Clustering. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 815–823.
- [12] Sohn, K. Improved Deep Metric Learning with Multi-Class N-Pair Loss Objective. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 1857–1865.
- [13] Zheng, X.; Ji, R.; Sun, X.; Wu, Y.; Huang, F.; Yang, Y. Centralized Ranking Loss with Weakly Supervised Localization for Fine-Grained Object Retrieval. In Proceedings of the 27th International Joint Conferences on Artificial Intelligence, Stockholm, Sweden, 13–19 July 2018; pp. 1226–1233.
- [14] Wei, X.-S.; Luo, J.-H.; Wu, J.; Zhou, Z.-H. Selective convolutional descriptor aggregation for fine-grained image retrieval. IEEE Trans. Image Process. 2017, 26, 2868–2881.
- [15] Zheng, X.; Ji, R.; Sun, X.; Zhang, B.; Wu, Y.; Huang, F. Towards Optimal Fine Grained Retrieval via Decorrelated Centralized Loss with Normalize-Scale Layer. In Proceedings of the 33rd AAAI Conference on Artificial Intelligence (AAAI), Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 9291–9298.
- [16] Zeng, X.; Zhang, Y.; Wang, X.; Chen, K.; Li, D.; Yang, W. Fine-Grained image retrieval via piecewise cross entropy loss. Image Vis. Comput. 2020, 93, 103820.1–103820.6.
- [17] Kim, S.; Kim, D.; Cho, M.; Kwak, S. Proxy Anchor Loss for Deep Metric Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3235–3244.
- [18] Cao, G.; Zhu, Y.; Lu, X. Fine-Grained Image Retrieval via Multiple Part-Level Feature Ensemble. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6.
- [19] Wang, R.; Zou, W.; Lin, X.; Wang, J. Learning Discriminative Features for Fine-Grained Image Retrieval. In Proceedings of the IEEE 6th International Conference on Computer and Communications (ICCC), Chengdu, China, 11–14 December 2020; pp. 1915–1919.

| Model | Recall@1 | Recall@2 | Recall@4 | Recall@8 |
|---|---|---|---|---|
| P | 0.7370 | 0.8293 | 0.8928 | 0.9343 |
| P + G | 0.7591 | 0.8385 | 0.8942 | 0.9367 |
| AOLM + P + G | 0.7762 | 0.8600 | 0.9104 | 0.9441 |
| AOLM + P | 0.7608 | 0.8457 | 0.9026 | 0.9392 |
| AOLM + G | 0.7227 | 0.8125 | 0.8137 | 0.9178 |

| Method | Recall@1 | Recall@2 | Recall@4 | Recall@8 |
|---|---|---|---|---|
| SCDA [14] | 62.6 | 74.2 | 83.2 | 90.1 |
| CRL-WSL [13] | 65.9 | 76.5 | 85.3 | 90.3 |
| DGCRL [15] | 67.9 | 79.1 | 86.2 | 91.8 |
| PCE [16] | 70.1 | 79.8 | 86.9 | 92.0 |
| Proxy-Anchor [17] | 69.9 | 79.6 | 86.6 | 91.4 |
| MPFE [18] | 69.3 | 79.9 | 87.3 | 92.1 |
| NTB [19] | 72.2 | 81.1 | 87.5 | 92.3 |
| Proposed method | 77.6 | 86.0 | 91.0 | 94.4 |

| Method | Recall@1 | Recall@2 | Recall@4 | Recall@8 |
|---|---|---|---|---|
| SCDA | 58.5 | 69.8 | 79.1 | 86.2 |
| CRL-WSL | 63.9 | 73.7 | 82.1 | 89.2 |
| DGCRL | 75.9 | 83.9 | 89.7 | 94.0 |
| PCE | 86.7 | 91.7 | 95.2 | 97.0 |
| Proxy-Anchor | 87.7 | 92.7 | 95.5 | 97.3 |
| MPFE | 86.3 | 91.7 | 95.0 | 97.1 |
| NTB | 90.4 | 94.5 | 96.6 | 98.0 |
| Proposed method | 92.0 | 95.3 | 97.1 | 98.4 |
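
The tables above report Recall@K for K = 1, 2, 4, 8. As a reading aid, here is a minimal sketch of how such a score is commonly computed in the retrieval literature: a query counts as a hit if at least one of its K nearest neighbours shares its class label. The function name `recall_at_k`, the use of cosine similarity, and the toy data are assumptions made for illustration; the source does not state which distance or evaluation code the authors used.

```python
import math


def recall_at_k(embeddings, labels, ks=(1, 2, 4, 8)):
    """Recall@K: share of queries with at least one same-label item among
    their K nearest neighbours (the query itself is excluded)."""
    # L2-normalise so that a dot product equals cosine similarity.
    # Cosine similarity is an assumption; the source does not state the metric.
    normed = []
    for vec in embeddings:
        norm = math.sqrt(sum(x * x for x in vec))
        normed.append([x / norm for x in vec])

    hits = {k: 0 for k in ks}
    n = len(normed)
    for q in range(n):
        # Rank every other item by decreasing similarity to the query.
        others = [i for i in range(n) if i != q]
        others.sort(
            key=lambda i: sum(a * b for a, b in zip(normed[q], normed[i])),
            reverse=True,
        )
        for k in ks:
            if any(labels[i] == labels[q] for i in others[:k]):
                hits[k] += 1
    return {k: hits[k] / n for k in ks}


# Toy gallery: two classes, four 2-D embeddings (made-up values).
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.8, 0.6]]
toy_labels = [0, 0, 1, 1]
scores = recall_at_k(toy_embeddings, toy_labels, ks=(1, 2, 4))
print(scores)  # {1: 0.75, 2: 0.75, 4: 1.0}
```

The sketch returns fractions in [0, 1], which is the scale of the first table (for example 0.7762 for AOLM + P + G); the two comparison tables list the same kind of score as percentages (77.6 for the proposed method).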

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, R.; Zou, W.; Wang, J. Fine-Grained Image Retrieval via Object Localization. Electronics 2023, 12, 2193. https://doi.org/10.3390/electronics12102193
