Contrastive Learning via Local Activity

Abstract

:1. Introduction

- We propose a new autoencoder training algorithm, named the local activity contrast (LAC). The LAC algorithm can train the AE/CAE without using gradient backpropagation (BP); each layer of the network has its locally defined loss function.

- We demonstrate that the LAC algorithm is a useful unsupervised pretraining method, and does not require using negative pairs, momentum encoders, or complex augmentation treatment. Our experiments demonstrate that deep convolutional networks pretrained with the LAC show improved classification/detection performance on standard datasets (e.g., MNIST, CIFAR-10, CIFAR-100, Tiny-ImageNet, ImageNet, and COCO) after fine-tuning.

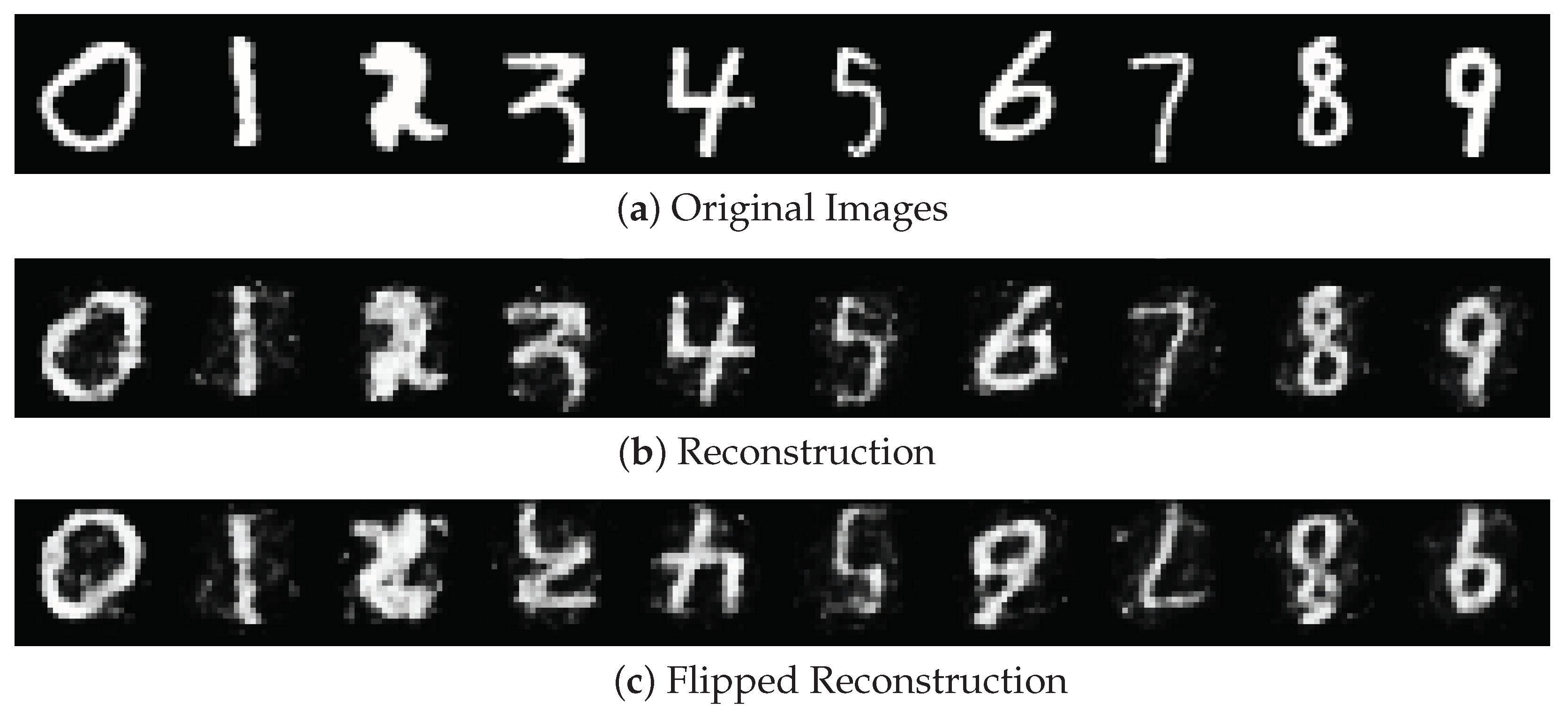

- We show that the LAC algorithm can effectively learn representations based on the image reconstruction pretext task. In contrast, gradient back-propagation could lead to over-fitting the pretext task and limiting the quality of learned representation.

2. Related Work

3. Method

| Algorithm 1 Pseudocode of LAC in a Pytorch-like style. |

| # model: the networks output is : # h_i,h_j: concatenation of all the hidden outputs # o_i,o_j: outputs of the output layer # h_opt: the optimizer of the hidden layers # o_opt: the optimizer of the output layer # detach() : stop the gradient flow into the other layers for x in dataloader: #load a minibatch-size data x h_i, o_i = model(x) # target activation h_j, o_j = model(o_i) # prediction activation # Compute hidden layers losses h_loss = torch.sum((h_i.detach()-h_j)**2) # Compute output layer loss o_loss = torch.sum((o_i-x)**2) # update: hidden layers h_opt.zero_grad() h_loss.backward(retain_graph=True) h_opt.step() # update: output layer o_opt.zero_grad() o_loss.backward() o_opt.step() |

4. Experiments

4.1. Implementation Details

4.2. LAC for Training an Autoencoder

LAC for Convolutional AE (CAE)

4.3. LAC for Unsupervised Pretraining

4.3.1. MNIST Classification

4.3.2. CIFAR-10/CIFAR-100 Classification

4.3.3. Tiny ImageNet Classification

4.3.4. COCO Detection and Segmentation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R. Momentum Contrast for Unsupervised Visual Representation Learning. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020. [Google Scholar]

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G.E. A Simple Framework for Contrastive Learning of Visual Representations. In Proceedings of the 37th International Conference on Machine Learning, ICML 2020, Virtual Event, 13–18 July 2020; Volume 119, pp. 1597–1607. [Google Scholar]

- Chen, X.; Fan, H.; Girshick, R.B.; He, K. Improved Baselines with Momentum Contrastive Learning. arXiv 2020, arXiv:2003.04297. [Google Scholar]

- Zhu, J.; Liu, S.; Yu, S.; Song, Y. An Extra-Contrast Affinity Network for Facial Expression Recognition in the Wild. Electronics 2022, 11, 2288. [Google Scholar] [CrossRef]

- Zhao, D.; Yang, J.; Liu, H.; Huang, K. Specific Emitter Identification Model Based on Improved BYOL Self-Supervised Learning. Electronics 2022, 11, 3485. [Google Scholar] [CrossRef]

- Liu, B.; Yu, H.; Du, J.; Wu, Y.; Li, Y.; Zhu, Z.; Wang, Z. Specific Emitter Identification Based on Self-Supervised Contrast Learning. Electronics 2022, 11, 2907. [Google Scholar] [CrossRef]

- Wu, Z.; Xiong, Y.; Yu, S.X.; Lin, D. Unsupervised feature learning via non-parametric instance discrimination. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Hjelm, R.D.; Fedorov, A.; Lavoie-Marchildon, S.; Grewal, K.; Bachman, P.; Trischler, A.; Bengio, Y. Learning deep representations by mutual information estimation and maximization. arXiv 2018, arXiv:1808.06670. [Google Scholar]

- Ye, M.; Zhang, X.; Yuen, P.C.; Chang, S.F. Unsupervised embedding learning via invariant and spreading instance feature. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Hadsell, R.; Chopra, S.; Lecun, Y. Dimensionality Reduction by Learning an Invariant Mapping. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; 2, pp. 1735–1742. [Google Scholar]

- Chen, T.; Kornblith, S.; Swersky, K.; Norouzi, M.; Hinton, G.E. Big Self-Supervised Models are Strong Semi-Supervised Learners. In Proceedings of the NeurIPS 2020, Virtual, 6–12 December 2020. [Google Scholar]

- Chen, X.; Xie, S.; He, K. An Empirical Study of Training Self-Supervised Vision Transformers. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, ICCV 2021, Montreal, QC, Canada, 10–17 October 2021; pp. 9620–9629. [Google Scholar]

- Chen, X.; He, K. Exploring Simple Siamese Representation Learning. In Proceedings of the CVPR 2021, Virtual, 19–25 June 2021; pp. 15750–15758. [Google Scholar]

- Caron, M.; Touvron, H.; Misra, I.; Jégou, H.; Mairal, J.; Bojanowski, P.; Joulin, A. Emerging properties in self-supervised vision transformers. In Proceedings of the ICCV, Montreal, QC, Canada, 10–17 October 2021; pp. 9650–9660. [Google Scholar]

- Zbontar, J.; Jing, L.; Misra, I.; LeCun, Y.; Deny, S. Barlow Twins: Self-Supervised Learning via Redundancy Reduction. In Proceedings of the ICML 2021, Virtual, 18–24 July 2021; Meila, M., Zhang, T., Eds.; Volume 139, pp. 12310–12320. [Google Scholar]

- Lee, D.; Zhang, S.; Fischer, A.; Bengio, Y. Difference Target Propagation. In Proceedings of the Machine Learning and Knowledge Discovery in Databases, Porto, Portugal, 7–11 September 2015. [Google Scholar]

- Bengio, Y.; Lee, D.; Bornschein, J.; Lin, Z. Towards Biologically Plausible Deep Learning. arXiv 2015, arXiv:1502.04156v3. [Google Scholar]

- Choromanska, A.; Cowen, B.; Kumaravel, S.; Luss, R.; Rigotti, M.; Rish, I.; Kingsbury, B.; DiAchille, P.; Gurev, V.; Tejwani, R.; et al. Beyond Backprop: Online Alternating Minimization with Auxiliary Variables. arXiv 2018, arXiv:1806.09077. [Google Scholar]

- Widrow, B.; Greenblatt, A.; Kim, Y.; Park, D. The No-Prop algorithm: A new learning algorithm for multilayer neural networks. Neural Netw. 2013, 37, 182–188. [Google Scholar] [CrossRef] [PubMed]

- Nøkland, A.; Eidnes, L.H. Training neural networks with local error signals. arXiv 2019, arXiv:1901.06656. [Google Scholar]

- Ma, W.K.; Lewis, J.P.; Kleijn, W.B. The HSIC Bottleneck: Deep Learning without Back-Propagation. arXiv 2019, arXiv:1908.01580. [Google Scholar] [CrossRef]

- Tishby, N.; Pereira, F.; Bialek, W. The information bottleneck method. arXiv 2000, arXiv:physics/0004057. [Google Scholar]

- Shwartzziv, R.; Tishby, N. Opening the Black Box of Deep Neural Networks via Information. arXiv 2017, arXiv:1703.00810. [Google Scholar]

- Hinton, G.E.; Osindero, S.; Teh, Y.W. A fast learning algorithm for deep belief nets. Neural Comput. 2006, 18, 1527–1554. [Google Scholar] [CrossRef] [PubMed]

- Sacramento, J.; Costa, R.P.; Bengio, Y.; Senn, W. Dendritic cortical microcircuits approximate the backpropagation algorithm. Adv. Neural Inf. Process. Syst. 2018, 31, 8721–8732. [Google Scholar]

- Hinton, G.E.; Mcclelland, J.L. Learning Representations by Recirculation. In Neural Information Processing Systems 0 (NIPS 1987); American Institute of Physics: College Park, MD, USA, 1988; pp. 358–366. [Google Scholar]

- Hinton, G.E. Training products of experts by minimizing contrastive divergence. Neural Comput. 2002, 14, 1771–1800. [Google Scholar] [CrossRef] [PubMed]

- Lillicrap, T.P.; Cownden, D.; Tweed, D.B.; Akerman, C.J. Random feedback weights support learning in deep neural networks. arXiv 2014, arXiv:1411.0247. [Google Scholar]

- Hinton, G. The Forward-Forward Algorithm: Some Preliminary Investigations. Available online: https://www.cs.toronto.edu/~hinton/FFA13.pdf (accessed on 20 December 2022).

- He, K.; Chen, X.; Xie, S.; Li, Y.; Dollár, P.; Girshick, R. Masked autoencoders are scalable vision learners. arXiv 2021, arXiv:2111.06377. [Google Scholar]

- Xie, Z.; Zhang, Z.; Cao, Y.; Lin, Y.; Bao, J.; Yao, Z.; Dai, Q.; Hu, H. Simmim: A simple framework for masked image modeling. arXiv 2021, arXiv:2111.09886. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016. [Google Scholar]

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969. [Google Scholar]

- Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014. [Google Scholar]

- Kalantidis, Y.; Sariyildiz, M.B.; Pion, N.; Weinzaepfel, P.; Larlus, D. Hard Negative Mixing for Contrastive Learning. Adv. Neural Inf. Process. Syst. 2020, 33, 21798–21809. [Google Scholar]

| Pretraining Method | Linear Evaluation | Fine-Tuning |

|---|---|---|

| No pretraining | 46.75 ± 0.05 | 96.08 ± 0.06 |

| BP pretraining | 93.75 ± 0.06 | 94.10 ± 0.06 |

| LAC pretraining | 88.25 ± 0.08 | 96.18 ± 0.06 |

| Pretraining Method | CIFAR-10 | CIFAR-100 | Tiny ImageNet |

|---|---|---|---|

| No pretraining | 95.38 ± 0.2 | 75.61 ± 0.2 | 62.86 ± 0.3 |

| MOCO [1] | 95.40 ± 0.1 | 76.02± 0.1 | 63.38± 0.2 |

| SimCLR [2] | 94.00 ± 0.1 | 76.50 ± 0.1 | 63.50± 0.2 |

| LAC | 95.72 ± 0.1 | 76.59 ± 0.1 | 63.78 ± 0.2 |

| Method | ||||||

|---|---|---|---|---|---|---|

| MoCo v2 * [3] | 34.2 | 55.4 | 36.2 | 39.0 | 58.6 | 41.9 |

| MoCHi * [35] | 34.4 | 55.6 | 36.7 | 39.2 | 58.8 | 42.4 |

| LAC | 34.6 | 56.0 | 36.9 | 39.6 | 59.1 | 42.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, H.; Chen, Y.; Hu, G.; Yu, S. Contrastive Learning via Local Activity. Electronics 2023, 12, 147. https://doi.org/10.3390/electronics12010147

Zhu H, Chen Y, Hu G, Yu S. Contrastive Learning via Local Activity. Electronics. 2023; 12(1):147. https://doi.org/10.3390/electronics12010147

Chicago/Turabian StyleZhu, He, Yang Chen, Guyue Hu, and Shan Yu. 2023. "Contrastive Learning via Local Activity" Electronics 12, no. 1: 147. https://doi.org/10.3390/electronics12010147

APA StyleZhu, H., Chen, Y., Hu, G., & Yu, S. (2023). Contrastive Learning via Local Activity. Electronics, 12(1), 147. https://doi.org/10.3390/electronics12010147