1. Introduction

In the era of big data, large scale global optimization problems with high dimensionality are widespread in diverse practical areas, such as finance and economics [1], parameter estimation [2], machine learning [3] and image processing [4]. Such problems cannot be effectively addressed by traditional optimization algorithms [5,6,7,8,9] because they are high-dimensional, nonlinear, non-differentiable and may even lack an explicit analytical expression. In this context, meta-heuristic algorithms, as intelligent computing methods, have attracted strong attention from scholars and have been well received by engineers.

Unlike traditional optimization algorithms based on gradient information, meta-heuristic algorithms adopt a “trial and error” mechanism [10] to search iteratively for the best possible solution. This mechanism is easy to implement and can often find a high-quality solution at low computational cost. Therefore, meta-heuristic algorithms are regarded as a promising approach to large scale global optimization problems. To date, driven by numerous practical optimization problems, scholars have investigated many meta-heuristic algorithms, such as particle swarm optimization (PSO) [11], the crisscross optimization algorithm (CSO) [12], the honey badger algorithm (HBA) [13], ant colony optimization (ACO) [14], the artificial bee colony (ABC) [15], fish swarm optimization (FSO) [16], the grey wolf optimizer (GWO) [17] and the tunicate swarm algorithm (TSA) [18].

The complexity of large scale optimization problems increases exponentially with the number of dimensions, leading to the “curse of dimensionality” [19]. Although meta-heuristic algorithms perform well on some practical problems, they still suffer from slow convergence and low precision, especially on large scale optimization problems. Therefore, how to keep meta-heuristic algorithms converging quickly while avoiding premature convergence remains a significant research topic. To overcome these drawbacks, much research has been devoted to improving the standard algorithms by modifying their parameters or introducing various mechanisms, with remarkable results.

The whale optimization algorithm (WOA) [20] has demonstrated good optimization capability in engineering and industrial fields [21], but it still has disadvantages such as an imbalance between exploration and exploitation, premature convergence and low accuracy. To date, many improved versions of WOA have been presented. In [22,23,24], nonlinear parameters were introduced into WOA to improve its performance by better tuning its exploration and exploitation capabilities. To enhance the ability of WOA to solve large scale optimization problems, Sun et al. [25] employed a nonlinear convergence factor based on the cosine function to balance the global and local search processes, and improved its ability to escape local minima through a Lévy flight strategy. Similarly, Abdel-Basset et al. [26] adopted Lévy flight and logistic chaotic mapping to construct an improved WOA. Jin et al. [27] constructed a modified whale optimization algorithm with three components: firstly, different evaluation and random operators were utilized to strengthen exploration; secondly, a Lévy flight-based step factor was used to enhance exploitation; and thirdly, an adaptive weight was constructed to tune the progress of exploration and exploitation. Saafan et al. [28] presented a hybrid algorithm integrating an improved whale optimization algorithm with the salp swarm algorithm. An improved WOA based on an exponential convergence factor was first designed, and then, according to a predefined condition, the hybrid algorithm selected either the improved WOA or the salp swarm algorithm to perform the optimization task. El-Aziz et al. [29] constructed a hyper-heuristic DEWOA combining WOA and DE, in which the DE algorithm automatically selects one chaotic mapping and one opposition-based learning strategy to obtain a high-quality initial population and to enhance the abilities of exploration and escaping from local solutions. Chakraborty et al. [30] constructed an improved whale optimization algorithm by modifying its parameters to solve high dimensional problems. Nadimi-Shahraki et al. [31] combined the whale optimization algorithm and the moth flame optimization algorithm into an effective hyper-heuristic algorithm, WMFO, to solve optimal power flow problems with complex structures. Nadimi-Shahraki et al. [32] adopted Lévy motion and Brownian motion to achieve a better balance between the exploration and exploitation of the whale optimization algorithm. Liu et al. [33] proposed an enhanced global exploration whale optimization algorithm (EGE-WOA) for complex multi-dimensional problems. EGE-WOA employs Lévy flights to improve its global exploration and adopts new convergent dual adaptive weights to refine the convergence process. On the whole, these improved variants of WOA have shown better performance on optimization problems. However, consistent with the no free lunch theorem for optimization [34], they still suffer to some extent from entrapment in local optima and premature convergence when dealing with large scale optimization problems of higher dimension.

To enhance the ability of WOA to solve large scale optimization problems, this paper presents a new modified WOA, namely MWOA-CS, which combines a modified WOA with the crisscross optimization algorithm. The main contributions are as follows: (1) a new nonlinear convergence factor is designed to improve the balance between the exploration and exploitation of WOA; (2) a cosine-based inertia weight is introduced into WOA to further refine the search process; (3) in each iteration, each dimension randomly performs either the modified whale optimization algorithm or the crisscross optimization algorithm. This update mechanism randomly divides the population dimensions into two parts, utilizing the exploration capability of WOA and the exploitation ability of CSO to reduce search blind spots and avoid premature convergence in some dimensions; (4) a large number of numerical experiments are carried out to verify that the proposed MWOA-CS performs better on large scale global optimization problems.

The rest of this paper is organized as follows. Section 2 briefly introduces WOA. Section 3 details the improvements and contributions of the proposed MWOA-CS. Section 4 presents and analyzes the numerical experiments. Finally, Section 5 presents the conclusions of this paper.

3. Some Improvements of WOA

Although WOA outperforms some meta-heuristic algorithms, such as PSO, ABC and DE [21], it still suffers from low precision and premature convergence, especially when solving large scale optimization problems. To overcome these shortcomings, this section proposes a new improved WOA by constructing nonlinear parameters and introducing other search methods. The improvements in MWOA-CS are detailed below.

3.1. Nonlinear Convergence Factor

In the basic WOA, the convergence factor $a$ tunes the global and local search phases by controlling the value of the coefficient $A$. According to Equation (2), the larger the value of $a$ is, the stronger the global search capability is; conversely, the smaller the value of $a$ is, the stronger the local search ability is. When dealing with optimization problems, the ideal optimization process combines strong global search ability and fast convergence with high final accuracy. The linear decrement strategy of $a$ used in the basic WOA does not meet this expectation. Based on the characteristics of the power function, a nonlinear convergence factor $a$ is proposed, and its update formula is as follows:

where a nonlinear regulation coefficient determines the smoothness of the curve of $a$.

Figure 2 illustrates the curves of $a$ under different values of the regulation coefficient. Extensive numerical results on test functions show that, compared with the linearly decreasing strategy, the nonlinear strategy is more beneficial for enhancing the optimization ability of the algorithm.
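To make the contrast concrete, the sketch below compares the standard linear schedule of $a$ with one possible power-function schedule. The form $a(t) = 2\,(1 - (t/T)^k)$, the name `k` for the regulation coefficient and the sample values are assumptions for illustration; the paper's own formula may differ. `coefficient_A` is the standard WOA relation $A = 2a \cdot r - a$ with $r$ uniform in $[0,1]$, so a larger $a$ allows a larger $|A|$.

```python
def linear_a(t, t_max):
    """Standard WOA schedule: a decreases linearly from 2 to 0."""
    return 2.0 - 2.0 * t / t_max


def power_a(t, t_max, k):
    """Hypothetical power-function schedule a(t) = 2 * (1 - (t / t_max) ** k).

    k stands in for the nonlinear regulation coefficient (assumed form).
    k = 1 reproduces the linear schedule; k > 1 keeps a large for longer
    (more exploration early), k < 1 lowers it quickly (earlier exploitation).
    """
    return 2.0 * (1.0 - (t / t_max) ** k)


def coefficient_A(a, r):
    """Standard WOA coefficient A = 2*a*r - a, so that |A| <= a."""
    return 2.0 * a * r - a


if __name__ == "__main__":
    t_max = 100
    for k in (0.5, 1.0, 2.0):
        # value of a after one quarter of the run for each k
        print(k, power_a(25, t_max, k))
```

At the same iteration, a larger `k` yields a larger $a$, which in standard WOA makes the $|A| \ge 1$ (random-whale, exploratory) branch more likely.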

3.2. Nonlinear Inertia Weight

Inertia weight is a significant parameter in PSO; here it represents the influence of the current optimal solution on the position update of the population. A larger inertia weight yields a larger update step and enhances the ability to escape from local solutions, which is conducive to global exploration, whereas a smaller inertia weight allows a more fine-grained local search that improves convergence precision. Hence, one would intuitively expect an inertia weight that decreases over the iterations to better balance exploration and exploitation. However, in the standard WOA, the inertia weight is fixed at 1 throughout the optimization procedure. Although this setting yields acceptable performance, it may not be optimal. Constructing an appropriate dynamic inertia weight to improve the performance of WOA is therefore of great significance for solving optimization problems effectively. In this paper, a nonlinear inertia weight function is proposed:

The proposed inertia weight is a cosine function of the iteration number, and a dedicated parameter controls its period of change.

Figure 3 illustrates the variation curves of the inertia weight under different values of the period parameter during the whole optimization procedure. Based on a large number of numerical experiments, this parameter is set to different values for unimodal and multimodal functions. As shown in Figure 3, the inertia weight first decreases and then increases over the optimization process. This behavior runs counter to the intuitive expectation of a monotonically decreasing weight, and it offers guidance for the construction of inertia weights.
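The qualitative shape described for Figure 3 (first decreasing, then increasing) can be reproduced with a shifted cosine. In the sketch below, the formula, the period `lam * t_max` and the floor `w_min` are all hypothetical choices made for illustration; they are not the paper's tuned function or parameter values.

```python
import math


def cosine_weight(t, t_max, lam=1.0, w_min=0.2):
    """Hypothetical cosine inertia weight with period lam * t_max iterations.

    With lam = 1 the weight starts at 1, falls to w_min at mid-run and rises
    back to 1, i.e. it first decreases and then increases.
    """
    period = lam * t_max
    return w_min + (1.0 - w_min) * (1.0 + math.cos(2.0 * math.pi * t / period)) / 2.0


if __name__ == "__main__":
    t_max = 100
    curve = [cosine_weight(t, t_max) for t in range(t_max + 1)]
    print(min(curve), curve.index(min(curve)))
```

The floor `w_min` keeps the best position from being ignored entirely at mid-run; a smaller `lam` would shorten the period so the weight oscillates more than once per run.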

The new update mechanism of the modified WOA can be expressed as follows:

The presented cosine-based inertia weight strategy enables WOA to adjust the inertia weight dynamically with the iteration number (see Figure 3). It lets the optimal whale position exert a varying influence on how the other individuals update their locations during the iterations, and thereby further tunes the exploration and exploitation abilities of the standard WOA.
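Because the update equation itself is not reproduced above, the following is only a sketch of one way an inertia weight is applied in several WOA variants: the best position $X^*$ is scaled by `w` in the encircling and spiral moves, while the random-whale move is left as in standard WOA. The scalar `A` and `C` per whale, spiral constant `b = 1`, and restricting the move to the indices in `dims` (the WOA dimensions of the split in Section 3.3) are assumptions.

```python
import math
import random


def modified_woa_move(x, best, population, a, w, dims, rng, b=1.0):
    """Return a new position for whale x; only indices in dims are changed.

    Assumed form: X* is scaled by the inertia weight w in the encircling and
    spiral moves; the random-whale (exploration) move is left as in WOA.
    """
    A = 2.0 * a * rng.random() - a      # standard WOA coefficient, |A| <= a
    C = 2.0 * rng.random()              # standard WOA coefficient
    p = rng.random()                    # encircle/search (p < 0.5) or spiral
    l = rng.uniform(-1.0, 1.0)          # spiral shape parameter
    ref = rng.choice(population)        # random whale, used only when |A| >= 1
    new_x = list(x)
    for j in dims:
        if p < 0.5 and abs(A) < 1.0:    # shrinking encircling around X*
            new_x[j] = w * best[j] - A * abs(C * best[j] - x[j])
        elif p < 0.5:                   # search around a random whale
            new_x[j] = ref[j] - A * abs(C * ref[j] - x[j])
        else:                           # logarithmic spiral toward X*
            d = abs(best[j] - x[j])
            new_x[j] = d * math.exp(b * l) * math.cos(2.0 * math.pi * l) + w * best[j]
    return new_x


if __name__ == "__main__":
    rng = random.Random(1)
    pop = [[rng.uniform(-5, 5) for _ in range(6)] for _ in range(4)]
    best = min(pop, key=lambda v: sum(c * c for c in v))
    print(modified_woa_move(pop[0], best, pop, a=1.2, w=0.8, dims=[0, 2, 4], rng=rng))
```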

3.3. Proposed MWOA-CS

WOA exhibits poor convergence rate and accuracy when dealing with large scale global optimization problems. This may be because, owing to the complexity of such problems, the update mechanism of WOA cannot reach certain search blind spots and some dimensions become trapped in local optima. Meanwhile, we observed that WOA has good exploration ability. The crisscross optimization algorithm (CSO) [12] combines a horizontal crossover operator with a vertical crossover operator and achieves good accuracy on low dimensional problems. Inspired by this, CSO is embedded into the improved WOA to construct a hybrid algorithm, MWOA-CS, that enhances the optimization ability of WOA. In MWOA-CS, each dimension randomly performs either MWOA or CSO within the feasible region of the optimization problem, which can enhance the exploration ability of the algorithm. Specifically, according to the diversity of the current whale population, the problem dimensions are randomly divided into two parts: one part executes MWOA, and the other performs CSO.

The variation of the population during the optimization process can be defined as [35]:

where each component of the average solution of the population is computed as follows:

and then the variation is normalized using the logistic function $1/(1+e^{-x})$.
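The diversity measure and the dimension split described next can be sketched as follows, under stated assumptions: the variation of dimension $j$ is taken as the population variance around the average solution (the exact definition from [35] is not reproduced here), and the hypothetical rule `split_dimensions` sends dimension $j$ to WOA with probability equal to its normalized variation and to CSO otherwise.

```python
import math
import random


def average_solution(population):
    """Component-wise mean of all individuals."""
    n = len(population)
    return [sum(ind[j] for ind in population) / n for j in range(len(population[0]))]


def dimension_variation(population):
    """Assumed diversity measure: per-dimension variance around the mean."""
    n = len(population)
    mean = average_solution(population)
    return [sum((ind[j] - mean[j]) ** 2 for ind in population) / n for j in range(len(mean))]


def logistic(v):
    """Logistic normalization 1 / (1 + exp(-v)); v >= 0 maps to values between 0.5 and 1."""
    return 1.0 / (1.0 + math.exp(-v))


def split_dimensions(scores, rng):
    """Hypothetical rule: dimension j goes to WOA with probability scores[j]."""
    woa, cso = [], []
    for j, s in enumerate(scores):
        (woa if rng.random() < s else cso).append(j)
    return woa, cso


if __name__ == "__main__":
    rng = random.Random(0)
    pop = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(10)]
    scores = [logistic(v) for v in dimension_variation(pop)]
    print(split_dimensions(scores, rng))
```

Because a variance is non-negative, the normalized scores lie between 0.5 and 1, so under this assumed rule WOA receives at least half of the dimensions on average, and the CSO share grows as a dimension loses diversity.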

According to the normalized variation, the current whale population is randomly divided by dimension into two subpopulations. The subpopulation formed by one group of dimensions is updated by the modified WOA, and the subpopulation formed by the remaining dimensions is updated by CSO. The specific update formulas of CSO are as follows:

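Horizontal crossover:

$$MS_{hc}(i,d) = r_1 \cdot X(i,d) + (1 - r_1) \cdot X(j,d) + c_1 \cdot \big(X(i,d) - X(j,d)\big)$$

$$MS_{hc}(j,d) = r_2 \cdot X(j,d) + (1 - r_2) \cdot X(i,d) + c_2 \cdot \big(X(j,d) - X(i,d)\big)$$

where $r_1$ and $r_2$ are random values in $[0,1]$, and $c_1, c_2 \in [-1,1]$. $MS_{hc}(i,d)$ and $MS_{hc}(j,d)$ are candidate solutions that are the offspring of $X(i,d)$ and $X(j,d)$, the $d$th dimensions of the parent individuals $X(i)$ and $X(j)$, respectively.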
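The horizontal crossover can be sketched as follows, assuming (as in the usual CSO description) that individuals are paired at random without replacement and that $r_1, r_2, c_1, c_2$ are redrawn for every dimension; `dims` holds the dimensions assigned to CSO. The offspring still have to pass the parent-versus-offspring comparison described below.

```python
import random


def random_pairs(n, rng):
    """Shuffle indices 0..n-1 and pair them up (an odd one out is skipped)."""
    order = rng.sample(range(n), n)
    return list(zip(order[0::2], order[1::2]))


def horizontal_crossover(xi, xj, dims, rng):
    """Return offspring MS_hc(i) and MS_hc(j) of parents xi and xj."""
    ci, cj = list(xi), list(xj)
    for d in dims:
        r1, r2 = rng.random(), rng.random()                        # in [0, 1)
        c1, c2 = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)    # in [-1, 1]
        ci[d] = r1 * xi[d] + (1 - r1) * xj[d] + c1 * (xi[d] - xj[d])
        cj[d] = r2 * xj[d] + (1 - r2) * xi[d] + c2 * (xj[d] - xi[d])
    return ci, cj


if __name__ == "__main__":
    rng = random.Random(2)
    pop = [[rng.uniform(-3, 3) for _ in range(5)] for _ in range(6)]
    for i, j in random_pairs(len(pop), rng):
        print(horizontal_crossover(pop[i], pop[j], dims=[1, 3], rng=rng))
```

The term $c \cdot (X(i,d) - X(j,d))$ lets an offspring land outside the segment between its parents, which is what gives the operator some exploratory reach.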

Vertical crossover:

$$MS_{vc}(i,d_1) = r \cdot X(i,d_1) + (1 - r) \cdot X(i,d_2)$$

where $r \in [0,1]$ represents a random number. That is, the $d_1$th and $d_2$th dimensions of the individual $X(i)$ are chosen to conduct the vertical crossover operation and yield an offspring $MS_{vc}(i,d_1)$. Note that the offspring of both crossover operators are compared with their parents, and the individual with better fitness is retained for the next iteration.
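A minimal sketch of the vertical crossover together with the greedy parent-versus-offspring selection, assuming minimization; `sphere` is only a stand-in objective for the usage example.

```python
import random


def vertical_crossover(x, d1, d2, rng):
    """Offspring MS_vc(i, d1): dimension d1 of x blended with its dimension d2."""
    child = list(x)
    r = rng.random()
    child[d1] = r * x[d1] + (1 - r) * x[d2]
    return child


def greedy_select(parent, child, f):
    """Keep whichever of parent and child has the better (lower) fitness."""
    return child if f(child) < f(parent) else parent


def sphere(x):
    return sum(v * v for v in x)


if __name__ == "__main__":
    rng = random.Random(3)
    x = [rng.uniform(-2, 2) for _ in range(4)]
    d1, d2 = rng.sample(range(4), 2)   # two distinct dimensions
    child = vertical_crossover(x, d1, d2, rng)
    print(sphere(x), sphere(greedy_select(x, child, sphere)))
```

Because of the greedy selection, neither crossover operator can make an individual's fitness worse, which matches the exploitation role attributed to CSO in the hybrid.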

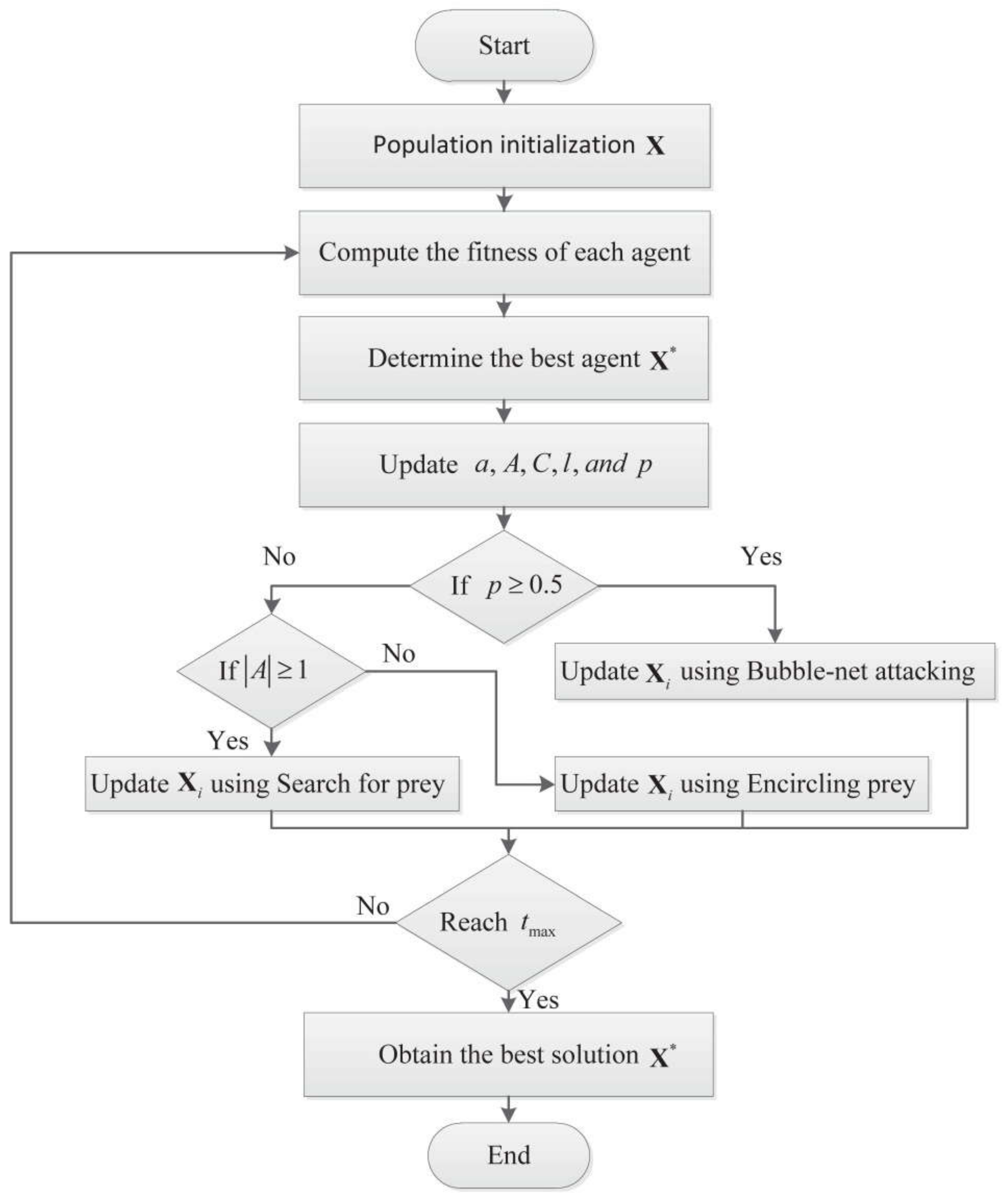

3.4. The Pseudocode of MWOA-CS

The preceding subsections detail the strategies used to improve the basic WOA. The corresponding pseudocode of the modified WOA algorithm (MWOA-CS) is given in Algorithm 1.

| Algorithm 1: The description of MWOA-CS |
| --- |
| Input: population size, maximum number of iterations, problem dimension |
| 1: Generate the whale population |
| 2: Evaluate the fitness of each individual and obtain the best individual |
| 3: While the maximum number of iterations is not reached do |
| 4: Calculate the convergence factor $a$ and the inertia weight by Equations (10) and (11), respectively |
| 5: For each whale do |
| 6: Compute the variation of the current population based on Equation (14) |
| 7: Normalize the variation, update the parameters and generate a random number $p$ |
| 8: For each dimension assigned to the WOA subpopulation do |
| 9: If $p < 0.5$ then |
| 10: If $\lvert A \rvert < 1$ then |
| 11: Update the position of the current whale by Equation (12) |
| 12: Else |
| 13: Select one random individual |
| 14: Update the position of the current whale by Equation (13) |
| 15: EndIf |
| 16: Else |
| 17: Update the position of the current whale by Equation (12) |
| 18: EndIf |
| 19: EndFor |
| 20: EndFor |
| 21: If the CSO subpopulation is not empty then |
| 22: Update the positions of the CSO subpopulation by Equations (16)–(18) |
| 23: EndIf |
| 24: Evaluate the fitness of each individual and update the best individual |
| 25: $t = t + 1$ |
| 26: End While |
| Output: The optimal solution |
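For readers who want to experiment, the control flow of Algorithm 1 can be turned into a compact runnable loop. Everything the text leaves unspecified is an assumption here: the power-function schedule for $a$, the cosine inertia weight with floor `w_min`, the variance-based diversity measure, the rule assigning a dimension to WOA with probability equal to its normalized variation, clipping to the bounds and the test function `sphere`. It is a sketch of the structure, not the authors' Matlab implementation.

```python
import math
import random


def sphere(x):
    return sum(v * v for v in x)


def mwoa_cs(f, dim, lb, ub, n=20, t_max=100, k=2.0, w_min=0.2, seed=0):
    """Illustrative MWOA-CS loop (minimization); schedules and split rule are assumed."""
    rng = random.Random(seed)

    def clip(v):
        return min(max(v, lb), ub)

    pop = [[rng.uniform(lb, ub) for _ in range(dim)] for _ in range(n)]
    fit = [f(x) for x in pop]
    i_best = min(range(n), key=fit.__getitem__)
    best, best_f = list(pop[i_best]), fit[i_best]

    for t in range(t_max):
        a = 2.0 * (1.0 - (t / t_max) ** k)                                     # assumed schedule
        w = w_min + (1 - w_min) * (1 + math.cos(2 * math.pi * t / t_max)) / 2  # assumed weight
        # Per-dimension variance -> logistic score -> random WOA/CSO split (assumed rule).
        mean = [sum(x[j] for x in pop) / n for j in range(dim)]
        var = [sum((x[j] - mean[j]) ** 2 for x in pop) / n for j in range(dim)]
        woa_dims = [j for j in range(dim) if rng.random() < 1 / (1 + math.exp(-var[j]))]
        cso_dims = sorted(set(range(dim)) - set(woa_dims))

        # Modified WOA on the WOA dimensions (Algorithm 1, steps 5-20).
        for i in range(n):
            x = pop[i]
            A, C = 2 * a * rng.random() - a, 2 * rng.random()
            p, l = rng.random(), rng.uniform(-1, 1)
            ref = pop[rng.randrange(n)]
            new = list(x)
            for j in woa_dims:
                if p < 0.5 and abs(A) < 1:
                    new[j] = w * best[j] - A * abs(C * best[j] - x[j])
                elif p < 0.5:
                    new[j] = ref[j] - A * abs(C * ref[j] - x[j])
                else:
                    new[j] = abs(best[j] - x[j]) * math.exp(l) * math.cos(2 * math.pi * l) + w * best[j]
            pop[i] = [clip(v) for v in new]
            fit[i] = f(pop[i])

        # CSO on the remaining dimensions (steps 21-23), with greedy selection.
        if cso_dims:
            order = rng.sample(range(n), n)
            for i, j in zip(order[0::2], order[1::2]):
                xi, xj = pop[i], pop[j]
                ci, cj = list(xi), list(xj)
                for d in cso_dims:
                    r1, r2 = rng.random(), rng.random()
                    c1, c2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
                    ci[d] = clip(r1 * xi[d] + (1 - r1) * xj[d] + c1 * (xi[d] - xj[d]))
                    cj[d] = clip(r2 * xj[d] + (1 - r2) * xi[d] + c2 * (xj[d] - xi[d]))
                for idx, child in ((i, ci), (j, cj)):
                    fc = f(child)
                    if fc < fit[idx]:
                        pop[idx], fit[idx] = child, fc
            for i in range(n):
                d1, d2 = rng.choice(cso_dims), rng.randrange(dim)  # d2 == d1 is a no-op
                r = rng.random()
                child = list(pop[i])
                child[d1] = clip(r * pop[i][d1] + (1 - r) * pop[i][d2])
                fc = f(child)
                if fc < fit[i]:
                    pop[i], fit[i] = child, fc

        i_best = min(range(n), key=fit.__getitem__)
        if fit[i_best] < best_f:
            best, best_f = list(pop[i_best]), fit[i_best]
    return best, best_f


if __name__ == "__main__":
    best, best_f = mwoa_cs(sphere, dim=30, lb=-100.0, ub=100.0)
    print(best_f)
```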

4. Experimental Results and Analysis

This section conducts numerical experiments to verify and discuss the optimization ability of the proposed MWOA-CS on 30 large scale global optimization problems, which are listed in Table 1. MWOA-CS is compared with five other meta-heuristic algorithms in terms of convergence speed, precision and stability. The comparison algorithms selected in this paper are ESPSO [11], HBA [13], CSO [12], GWO [36] and WOA [20]. All experiments are programmed in Matlab and executed on a computer with an Intel Core processor.

4.1. Benchmark Functions and Experimental Settings

According to the number of local optima, the benchmark functions used to verify the performance of MWOA-CS are divided into two categories: unimodal functions (UM) and multimodal functions (MM). The unimodal functions, which have only one global minimum, are employed to test the exploitation ability of the studied meta-heuristic algorithms. The multimodal functions, which have multiple local optima, are utilized to measure their exploration ability. All test functions are evaluated with dimensions of 300, 500 and 1000.
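Two classic functions, not necessarily among those in Table 1, illustrate the distinction: the sphere function is unimodal, while the Rastrigin function places a regular grid of local minima around its global minimum at the origin. Both accept any dimension, such as the 300, 500 and 1000 used here.

```python
import math


def sphere(x):
    """Unimodal: a single global minimum f(0) = 0 and no other local minima."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: global minimum f(0) = 0 surrounded by many local minima."""
    return 10.0 * len(x) + sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) for v in x)


if __name__ == "__main__":
    for dim in (300, 500, 1000):
        print(dim, sphere([1.0] * dim), rastrigin([1.0] * dim))
```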

For convenience, the parameter settings of the selected comparison algorithms are shown in Table 2. Because all algorithms contain random factors, each algorithm is run independently 30 times on each test function to ensure the reliability of the experimental results.
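The evaluation protocol (30 independent runs per function, summarized by mean and standard deviation) can be sketched as below. `random_search` is a hypothetical stand-in for any of the compared algorithms, each run uses its own seed, and the use of the sample standard deviation (`statistics.stdev`) is an assumption, since the text does not state which variant is reported.

```python
import random
import statistics


def random_search(f, dim, lb, ub, evals, seed):
    """Hypothetical stand-in optimizer: best of `evals` uniform random samples."""
    rng = random.Random(seed)
    return min(f([rng.uniform(lb, ub) for _ in range(dim)]) for _ in range(evals))


def sphere(x):
    return sum(v * v for v in x)


def summarize(optimizer, runs=30, **kwargs):
    """Run the optimizer `runs` times with seeds 0..runs-1; return mean and std."""
    finals = [optimizer(seed=s, **kwargs) for s in range(runs)]
    return statistics.mean(finals), statistics.stdev(finals)


if __name__ == "__main__":
    mean, std = summarize(random_search, f=sphere, dim=300, lb=-100.0, ub=100.0, evals=200)
    print(f"mean = {mean:.4e}, std = {std:.4e}")
```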

4.2. Comparison and Analysis of Statistical Results

In the numerical experiments, the dimension of the benchmark functions is set to 300, 500 and 1000. The experimental results for these dimensions are presented in Table 3, Table 4 and Table 5, where the best results are highlighted in bold. The mean and standard deviation (std) are important indicators of the performance of a meta-heuristic algorithm. Based on Table 3, Table 4 and Table 5, the following conclusions are drawn:

(1) For the unimodal functions, the proposed MWOA-CS exhibits significantly better exploitation performance than the five other algorithms. Moreover, as the dimension of the benchmark functions increases, the performance of MWOA-CS is not affected, whereas that of the other comparison algorithms deteriorates in some cases. For two of these functions, although HBA obtains the same best std as MWOA-CS, its solution accuracy is still inferior to that of MWOA-CS.

(2) For the multimodal functions, MWOA-CS displays good exploration ability on all but two functions. On two functions, MWOA-CS is the most efficient method. On two other functions, both MWOA-CS and HBA achieve the global solution, and WOA is the third most effective algorithm. On a further two functions, MWOA-CS, WOA and HBA show the best optimization performance, followed by CSO. On one function, MWOA-CS achieves the best accuracy, but its standard deviation is worse than those of ESPSO, HBA and WOA and better than those of CSO and GWO. On two functions, CSO is the most competitive method, MWOA-CS is the second best, followed by WOA.

Moreover, the Wilcoxon rank sum test [37] was adopted to evaluate the performance of MWOA-CS statistically. The results on all test functions with dimensions from 300 to 1000 are shown in Table 5. The $p$-value obtained from the Wilcoxon rank sum test indicates whether MWOA-CS and the comparison method differ significantly, at a significance level of 0.05. “+” indicates that MWOA-CS has a significant statistical advantage over the comparison algorithm; “=” indicates that there is no significant difference between the solutions of the two algorithms; “−” indicates that MWOA-CS is statistically weaker than the comparison algorithm. The results show that the $p$-value is less than 0.05 for most test functions, indicating that, on most test problems, the differences between MWOA-CS and the comparison algorithms are statistically significant. There are still some cases where the $p$-value is larger than 0.05: MWOA-CS versus HBA on one function with a dimension of 300, and MWOA-CS versus CSO on two functions with dimensions of 300, 500 and 1000.
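The win/tie/loss labels of such a comparison can be derived from the final values of the 30 runs of two algorithms. The sketch below implements the two-sided Wilcoxon rank-sum test in plain Python with the normal approximation and average ranks for ties (no tie correction of the variance); in practice a library routine such as `scipy.stats.ranksums` would normally be used. Treating a negative statistic as an advantage assumes minimization, and the sample values are made up for illustration, not results from the paper.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test: normal approximation, average ranks for ties."""
    n1, n2 = len(x), len(y)
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1          # average of the 1-based ranks i+1 .. j+1
        i = j + 1
    s = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)   # rank sum of x
    expected = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (s - expected) / sd
    p = math.erfc(abs(z) / math.sqrt(2))        # equals 2 * (1 - Phi(|z|))
    return z, p


def wilcoxon_label(ours, theirs, alpha=0.05):
    """'+' if ours is significantly lower (better), '-' if significantly higher, else '='."""
    z, p = rank_sum_test(ours, theirs)
    if p >= alpha:
        return "="
    return "+" if z < 0 else "-"


if __name__ == "__main__":
    ours = [1e-8 * (1 + 0.1 * k) for k in range(30)]     # made-up final values
    theirs = [1e-3 * (1 + 0.1 * k) for k in range(30)]
    print(wilcoxon_label(ours, theirs))
```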

In brief, the results illustrate that MWOA-CS shows excellent exploitation ability and good exploration ability, enabling it to jump out of local solutions and to search the whole feasible region. Hence, MWOA-CS can effectively solve large scale global optimization problems.

4.3. Convergence Analysis of Comparison Algorithms

To further examine the differences among the optimization processes of the comparison algorithms, Figure 4 shows the average convergence curves over 30 runs, where the x-axis and y-axis are the iteration number and the best fitness value obtained so far, respectively. The dimension of the test functions is set to 1000. MWOA-CS shows clear advantages in terms of convergence speed and accuracy. Although MWOA-CS performs worse than CSO on two functions, it is still superior to the other comparison algorithms on them. These results indicate that MWOA-CS can obtain a satisfactory solution to large scale optimization problems in fewer iterations.
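Each averaged curve in such a plot is obtained by turning every run's per-iteration fitness into a running minimum ("best so far") and then averaging across runs iteration by iteration. A short sketch, with toy numbers in place of real run histories:

```python
from itertools import accumulate


def best_so_far(history):
    """Running minimum of the fitness values recorded at each iteration."""
    return list(accumulate(history, min))


def average_curve(runs):
    """Average the best-so-far curves of several runs, iteration by iteration."""
    curves = [best_so_far(h) for h in runs]
    return [sum(values) / len(values) for values in zip(*curves)]


if __name__ == "__main__":
    runs = [[5.0, 3.0, 4.0, 1.0], [2.0, 6.0, 1.0, 1.0]]
    print(average_curve(runs))  # [3.5, 2.5, 2.0, 1.0]
```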

4.4. Boxplot Analysis of Comparison Algorithms

The boxplots of the comparison algorithms on the benchmark functions with 1000 dimensions are shown in Figure 5, which further illustrates the stability of the meta-heuristic algorithms. Based on Table 5, Table 6 and Figure 5, MWOA-CS clearly outperforms the five other algorithms on most test problems with 1000 dimensions. On two functions, although MWOA-CS is able to jump out of local solutions, it still converges prematurely, and this escaping ability remains weaker than that of CSO. Consequently, MWOA-CS exhibits better efficiency and robustness than the other comparison algorithms on classical benchmark functions with large scale dimensions.