1. Introduction

Tunnels are important components of traffic infrastructure. Tunnel traffic places high demands on safe operation and faces strong pressure to keep traffic flowing, because tunnels are linear, semi-enclosed, structurally concealed works that easily become bottlenecks. Digital and intelligent means [1] can be used to provide insight into changes in tunnel traffic elements, to master the rules of traffic operation, and to predict the development trend of traffic, which can produce great social value and economic benefits when applied to all links of tunnel traffic operations management and service.

The rapid development of information technologies such as the Internet of Things, cloud computing, and high-definition video monitoring has presented new opportunities for the digital upgrading of tunnel operations management. The United States, Canada, Germany, and other developed countries have previously studied and applied tunnel monitoring systems. Vehicle detectors, photocell detectors, and other means have usually been used to realize more accurate monitoring and management of traffic and environmental information in tunnels. In 2004, the European Union (EU) issued the Directive on Minimum Safety Requirements for Tunnels on the Trans-European Road Network (2004/54/EC) [2]. The EU countries all assess the safety risk of tunnel operations in the region based on this directive. In 2008, the World Road Association also proposed a monitoring and safety management method for highway tunnel operations [3]. In the “code for design of urban road traffic facilities”, the “code for design of highway tunnels”, and the “code for design of highway tunnel traffic engineering” documents, China has clearly put forward the requirements for the construction of tunnel mechanical and electrical systems, including tunnel monitoring systems, tunnel ventilation and lighting systems, tunnel power supply and distribution systems, tunnel fire alarm systems, etc. The documents also classify field control networks, traffic monitoring systems, closed-circuit television systems, emergency telephone systems, wired broadcasting systems, and environmental monitoring systems as tunnel monitoring systems.

Researchers and engineers at home and abroad have carried out extensive exploration of the digital management and innovation of tunnels, and have improved operations management capability in different respects. However, some problems remain to be solved: (1) The video surveillance used for tunnel holographic perception is fragmented. Tunnel surveillance videos are highly similar to one another and are presented as a large number of separate pictures; as a result, managers experience significant cognitive pressure when viewing real-time videos in daily work. (2) Video data and service data are separated from each other in tunnel operations management. The video monitoring system is separated from the asset, emergency, electromechanical, and other subsystems of tunnel operations, which makes it difficult for the systems to communicate with each other and leads to a high cost of cross-system integration. (3) There is a lack of means for automatic association among two-dimensional maps, surveillance videos, and three-dimensional scenes in digital management. A traditional business subsystem is usually presented in 2D mode and lacks a means of 3D scene monitoring that conforms to the spatial cognition of managers, as well as emergency response methods and tools that can quickly link 2D signal monitoring, the 3D scene, and local video.

A digital twin provides new ideas for the digital transformation and high-quality development of tunnel operations. It creates a virtual model of a physical entity in a digital way [4], simulates the behavior of the physical entity in the real environment with the help of data, and adds new capabilities to the physical entity by means of virtual-real interactive feedback, data fusion analysis, and iterative decision-making optimization. To address the current problems of video fragmentation, separation of video and business data, and lack of 2D and 3D linkage response methods in tunnel digital operations, in this paper, we propose a novel method for tunnel digital twin construction and virtual-real integrated operations application. Based on an analysis of the characteristics and needs of tunnel operations business, and starting from the four elements of “person, vehicle, road (facilities), and environment”, BIM technology is used to build a basic model of the tunnel facilities, and real-time tunnel surveillance video is fused into the 3D virtual scene. Multi-source Internet of Things (IoT) sensing data are gathered and fused to form a digital twin scene that is basically the same as the real tunnel traffic operations scene. The constructed digital twin scenarios are applied to tunnel traffic flow, accident rescue, facility management, emergency response, and other aspects to achieve tunnel digital twin perception and virtual-real integrated intelligent operations and maintenance management.

The main contributions of this paper are as follows: Firstly, a video fusion method based on 3D registration and projection calculation is proposed to establish the spatio-temporal relationship between discrete surveillance video and 3D scene, and to solve the problem of video fragmentation in holographic perception of tunnel scene. Secondly, a tunnel digital twin construction and application method based on three-dimensional panoramic video fusion is proposed to reduce the pressure of all element modeling and to realize two-dimensional and three-dimensional linkage tunnel digital management.

The remainder of this paper is organized as follows: The related work and a general overview of the proposed method are presented in Section 2 and Section 3. In Section 4, we describe the demand analysis of tunnel operations management based on video surveillance. Tunnel main structure modeling based on BIM, video fusion, IoT data aggregation, and traffic operations simulation are presented in Section 5, Section 6 and Section 7, respectively. In Section 8, we analyze the management application based on digital twins. Demonstration application and effect analysis is discussed in Section 9, and the conclusions are drawn in Section 10.

2. Related Works

This section analyzes the key technologies and application status of tunnel video surveillance, digital twin, and tunnel digital twin involved in this paper.

With respect to tunnel video surveillance, LonWorks distributed monitoring technology launched in the United States, German INTERBUS fieldbus technology, and Japanese Controller Link ring network control technology [5,6,7] are widely used in distributed traffic safety monitoring of highway tunnels. The extra-long Mont Blanc Tunnel in France adopted a pre-monitoring system outside the tunnel and a radar vehicle detection system inside the tunnel to prevent overloaded or dangerous vehicles from entering the tunnel and to manage the traffic inside the tunnel. Video monitoring and detection sensors are installed in the highway tunnel passing under the St. Gotthard Pass in the Swiss Alps to monitor air quality, illumination, and fire in the tunnel, which is managed and controlled by control equipment. Helmut Schwabach et al. [8] from the Technical University of Graz, Austria, carried out special research on tunnel video surveillance named “VitUS-1” to realize automatic identification of alarm locations, notification of tunnel managers and road users, and automatic storage of traffic accident video sequences. Reyes Rios Cabrera [9] of Belgium proposed a comprehensive solution for tunnel vehicle detection, tracking, and recognition, in which vehicles are identified and tracked through a non-overlapping camera network in a tunnel; the solution takes into consideration practical limitations such as real-time performance, poor imaging conditions, and decentralized architecture. Wang Xuyao [10] integrated a measuring robot, static leveling, joint gauges, and other equipment to form a remote automatic monitoring system for tunnel structure deformation and ballast bed settlement, which has been applied in the Qingdao Rail Transit Line 13. Li Feng [11] combined image stitching with distorted image correction and selected a fisheye camera to monitor tunnel road conditions, achieving tunnel scene coverage with small distortion and a large picture. Liu Zhihui et al. [12] developed a smart tunnel operations management and control system based on the IoT, which integrated four subsystems (comprehensive monitoring, emergency rescue, maintenance management, and data analysis and auxiliary decision making) to monitor the traffic and environmental data in a tunnel, to anticipate dangerous situations and accidents, and to ensure the safety of traffic operations in the area affected by the tunnel. Chen Hao et al. [13] proposed an intelligent monitoring platform for an expressway tunnel based on BIM (building information modeling) + GIS (geographic information system), with 2D and 3D integration and dynamic and static data fusion, to realize digital, 3D, and accurate monitoring and management of a tunnel. Li Jianli et al. [14] designed an “Internet plus intelligent high-speed” BIM tunnel intelligent monitoring system to address the complex and diverse monitoring data of tunnels, dynamic data demands, and low modeling efficiency. With the BIM model as the center of electromechanical information flow, a “six layers and two wings” structure was adopted to realize information coordination among systems and intelligent monitoring over the tunnel life cycle. Liu Pengfei et al. [15] used high-definition video to transform and upgrade the analog video monitoring system of the Qiujiayakou Tunnel, which solved the problems of low video definition, high failure rate, and inability to support secondary video applications in the original monitoring system.

Video virtual reality fusion methods [16,17] use virtual reality technology to fuse discrete surveillance videos taken from different angles with a 3D model of the surveillance scene in real time, and thereby establish the spatial correlation between different video pictures in a scene. At present, the mainstream methods of video fusion are divided into four categories: video label map, video image mosaic, video overlay transition in 3D scene, and the fusion of video and 3D scene. The video label map method [18,19,20] places the video on a two-dimensional base map in the form of a label. This is a 2D method with simple implementation. However, it is difficult for it to truly reflect the perceptual effect of the fusion of video and virtual reality in three-dimensional space, because this method always needs to display the video separately from the base map. The video image mosaic method [21,22,23,24] forms a panoramic image with a wide or 360 degree viewing angle after image transformation, resampling, and image fusion. This is a 2D+ method, but the fixed spherical virtual model restricts the viewpoint to the vicinity of the shooting viewpoint, and the degree of freedom of interaction is limited. The method of video overlay transition in 3D scene [25,26,27] is a pseudo 3D method. It realizes the overlay and fusion display of multiple videos based on 2D and 3D feature registration, but the viewing angle is limited to the camera path. The fusion method of video and 3D scene [28,29,30,31] captures the video image of a real object by camera and registers the video image in the virtual environment in real time in the form of texture. This method allows users to observe the fusion results from any virtual viewpoint, and is better than the other three methods in display effect and interaction. The method proposed in this paper belongs to the fusion method of video and 3D scene.

A digital twin [32,33] is a technical means to create a virtual entity of a physical entity in a digital way, and to simulate, verify, predict, and control the whole life cycle process of the physical entity with the help of historical data, real-time data, and an algorithm model. Digital twins were first proposed by Professor Michael Grieves when he taught at the University of Michigan in 2003, and since then have been widely adopted and practiced by some industries and enterprises. A digital twin virtual world describes the real physical world, and realizes the continuous iteration of diagnosis, prediction, and decision making based on data, model, and software, which helps to optimize the production or enterprise process and to reduce the trial-and-error cost. Professor Tao Fei’s team at Beihang University put forward the concept of a “digital twin workshop” for the first time in the world, and published a review article entitled “Make More Digital Twins” online in Nature magazine [34]. Lim et al. [35] reviewed 123 papers on digital twinning, analyzed the tools and models involved in digital twinning, and proposed an architecture to maximize the interoperability between subsystems. The author’s team [4] also studied a traffic scene digital twin, put forward five key technologies that needed to be further developed, and explored the application prospects of digital twin technology in vehicle development, intelligent operations, and maintenance of important traffic infrastructures; unmanned virtual test, analysis, and experiments of traffic problems; traffic science popularization; and traffic civilization education. Weifei Hu et al. [36] provided a state-of-the-art review of DT history, different definitions and models, and six different key enabling technologies. Ozturk, G. B. et al. [37] discussed the current patterns, gaps, and trends in digital twin research in the architectural, engineering, construction, operations, and facility management (AECO-FM) industry and proposed future directions for industry stakeholders. Gao, Y. et al. [38] reviewed recent applications of four types of transportation infrastructure (railways, highways, bridges, and tunnels) and identified the existing research gaps. Jiang, F. et al. [39] reviewed 468 articles related to DTs (digital twins), BIM, and cyber physical systems (CPS); proposed a DT definition and its constituents in civil engineering; and compared DT with BIM and CPS. Bao, L. et al. [40] proposed a new digital twin (DT) concept of traffic based on the characteristics of DT and the connotation of traffic, together with a three-layer technical architecture comprising a data access layer, a calculation and simulation layer, and a management and application layer.

Regarding a tunnel digital twin, Kasper Tijs [41], from the Delft University of Technology in the Netherlands, analyzed the requirements and architecture of a tunnel digital twin, and found, based on the results of a demand analysis, that a digital twin should be considered in tunnel operations and maintenance and would thus enhance the operations and maintenance of tunnels in the Netherlands. Chen Yifei [42] designed and implemented a 3D panoramic tunnel monitoring system by using the 3ds Max modeling software, the Unity3D virtual reality engine, and the MySQL database to integrate the design, construction, survey, and monitoring information of tunnel engineering with 3D panoramic images. However, the program applied only to panoramic monitoring and data integration at fixed points. Zhu Hehua et al. [43] from Tongji University proposed a design for automatic identification of a tunnel’s surrounding rock grade and digital dynamic support based on a digital twin, which was demonstrated and applied in the Grand Canyon Tunnel of bid sections 2, 3, 4, 5, and 6 of the Sichuan Ehan High-Speed Project, and solved the problems of serious disconnection, insufficient timeliness, and insufficient refinement of support design and construction in the practice of rock tunnel engineering. For the Wuxi Qingqi Tunnel [44], a tunnel electromechanical “digital twin” system was built using the Internet of Things (IoT) and BIM technology. With the system, through interactive operation, the 3D perspective view and real-time monitoring images of a given area can be viewed, real-time operations data of each piece of equipment in the area can be obtained, and remote “simulation inspection” of all parts and components can be carried out by combining the virtual and the real, which can effectively overcome the deficiencies of the human supervision mode and ensure that a tunnel is not flooded. Tomar, R. et al. [45] proposed a digital twin method of tunnel construction for safety and efficiency, which could both monitor the construction process in real time and virtually visualize the performance of the tunnel boring machine (TBM) in advance. Nohut, B. K. [46], in his Master’s thesis, focused on a road tunnel use case for a digital twin and created a tunnel twin framework for a given tunnel. Van Hegelsom, J. et al. [47] developed a digital twin of the Swalmen Tunnel, which was used to aid the supervisory controller design process in its renovation projects. Yu, G. et al. [48] proposed a highway tunnel pavement performance prediction approach based on a digital twin and multiple time series stacking (MTSS), which has been applied in the life cycle management of the highway-crossing Dalian Tunnel in Shanghai.

3. Method Overview

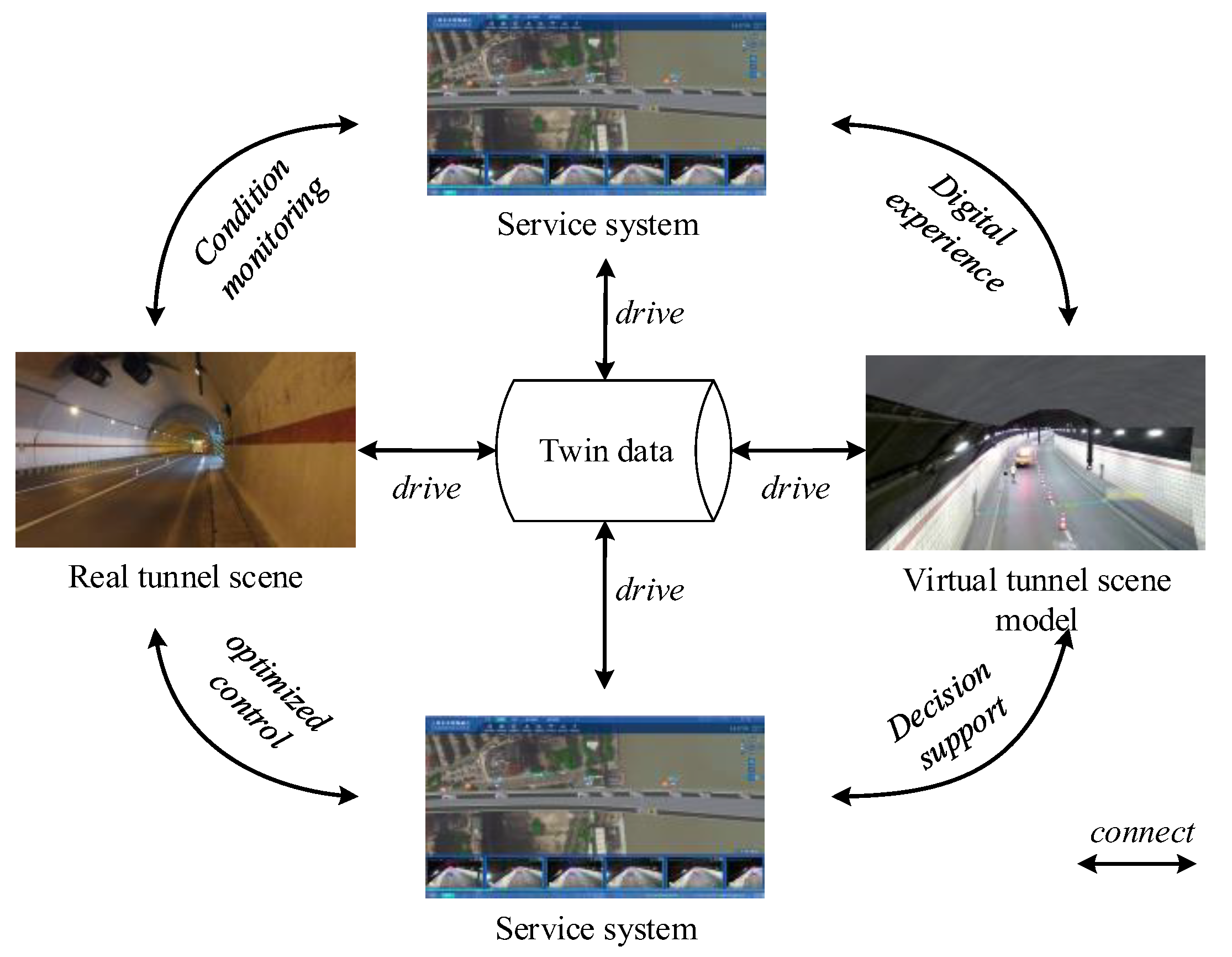

Referring to the digital twin five-dimensional structure model [49] proposed by Tao Fei, a digital twin structure reference model suitable for tunnel scenes was designed, as shown in Figure 1, including a real tunnel scene, a virtual tunnel scene model, twin data, service systems, and the interconnection among the above four. Twin data are the basis of digital twin construction. The virtual digital twin scene model is the core of digital twin construction, and the service system is the carrier of digital twin implementation. It supports the loop iteration of condition monitoring, digital experience, auxiliary decision making, and optimal control between real scene and virtual model.

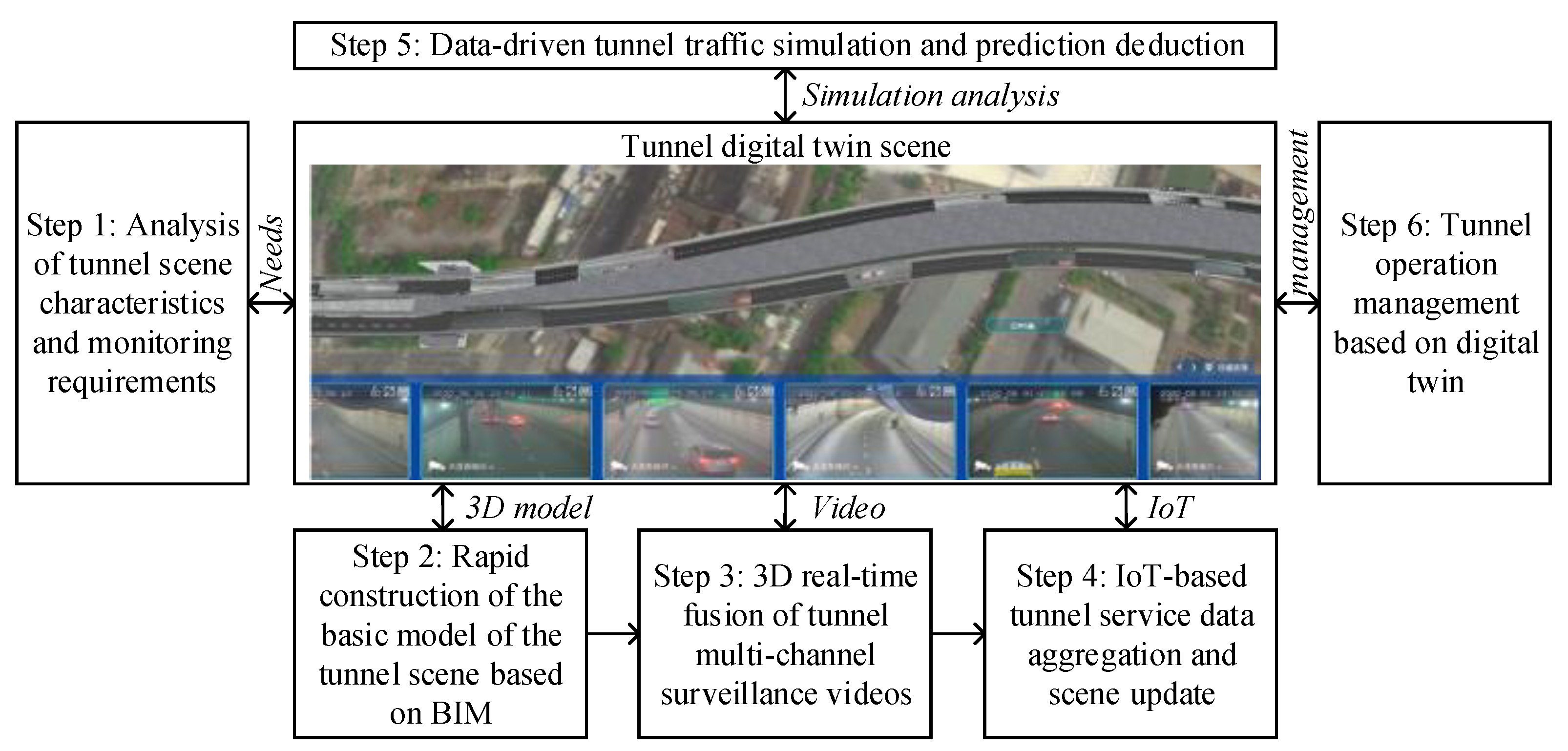

Combining the tunnel digital twin structure reference model with the technical advantages of 3D video fusion, a tunnel digital twin construction and application method based on 3D video fusion is proposed, as shown in Figure 2. The main steps include: (1) Analysis of the tunnel scene characteristics and the business requirements of video monitoring, and clarification of the objectives of digital twin construction and application. (2) Three-dimensional reconstruction of the tunnel scene based on BIM and point cloud scanning to quickly generate the tunnel digital twin basic model. (3) Projection calculation and 3D real-time fusion of multiple videos in the tunnel to establish the spatial correlation between discrete videos and 3D scenes. (4) Collection of the IoT monitoring data in tunnel scenes and enhancement in 3D scenes. (5) Tunnel traffic simulation and prediction analysis based on management demand and historical data. (6) Application demonstration of virtual-real integrated tunnel operations management based on the constructed digital twin scene. The details of each step are discussed in Section 4, Section 5, Section 6, Section 7, Section 8 and Section 9.

4. Analysis of Tunnel Scenarios and Monitoring Requirements

The main work objective of a tunnel operations unit is to “ensure smooth traffic”, which involves various business requirements including: operations monitoring, facility maintenance, civil maintenance, emergency disposal, etc. Operations monitoring involves the timely identification and warning of traffic congestion, accidents, car breakdowns, illegal intrusions, facility and equipment failures, emergencies, and other events, and then timely dealing with problems affecting the smooth driving of the tunnel, thus, maintaining safe and smooth traffic operations in the tunnel. Facility maintenance involves maintaining the facilities in the tunnel by introducing the maintenance design concept, carrying out active and preventive maintenance, effectively reducing maintenance costs, prolonging the overhaul cycle, improving the service quality of facilities, significantly reducing the impact on urban operation, improving urban operations efficiency, and enhancing the image of the city. Civil maintenance refers to routine cleaning and maintenance of tunnel civil construction. Emergency disposal refers to timely and early warning, response, disposal, aftermath, and recovery of emergencies related to “fire, water and electricity” in the tunnel, and the establishment of a perfect emergency disposal plan system and team. In addition, business requirements related to tunnel operations also include early warning patrol, anchoring traction, alarm reception and handling, electromechanical fault disposal, etc.

The demand for video monitoring in tunnel operations management mainly includes four aspects: tunnel traffic flow, accident rescue, facility management, and emergency response. Briefly, these four aspects involve: (1) tunnel traffic flow, i.e., patrolling the entire tunnel regularly through the monitoring video, finding and responding in time to problems that affect smooth operation, and maintaining the tunnel traffic flow by combining analysis of the traffic detection facilities in the tunnel with early warning of congestion events, traffic signal control, broadcasting, on-site disposal, and other measures; (2) accident rescue, i.e., for car breakdowns, traffic accidents, emergencies, facility failures, and other problems transferred to traffic police by phone, using monitoring cameras to quickly confirm the location of the situation and quickly dispatching a trailer for accident rescue and emergency treatment; (3) facility management, i.e., using monitoring videos to patrol the important facilities and equipment in a tunnel, combined with the data and analysis of relevant IoT sensors, to judge the status of tunnel electromechanical facilities and equipment and to find and deal with facility faults in time; (4) emergency response, i.e., responding in time to emergencies, severe weather, and other events occurring during the operation of a tunnel, safeguarding the emergency process by using video monitoring and related IoT facilities, and supporting emergency early warning, disposal, response, aftermath, and recovery.

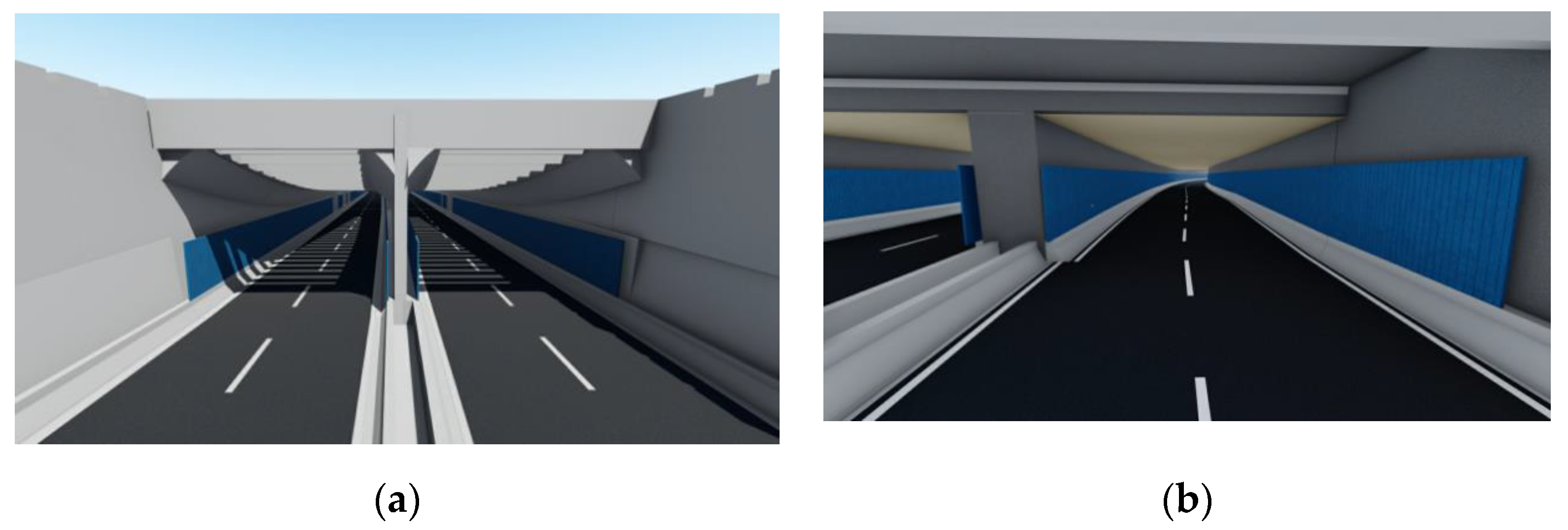

5. Rapid Construction of the Basic Model of the Tunnel Digital Twin Based on BIM

BIM technology is used to construct the parametric model of the tunnel body, lining, tunnel portal, road, concealed works, auxiliary facilities, electromechanical facilities, and other objects, thereby forming the initial model of the digital twin scene, which is basically consistent with the actual tunnel scene. Using BIM tools such as Revit and Civil 3D, the basic wall model, road model, anti-collision wall, drainage ditch, and decorative board of tunnel sections such as the circular tunnel section, rectangular tunnel section, and approach section are modeled in three dimensions. At the same time, the substation, ventilation shaft, crossing duty booth, and other facilities are modeled and rendered to form a realistic overall tunnel engineering model, as shown in Figure 3.

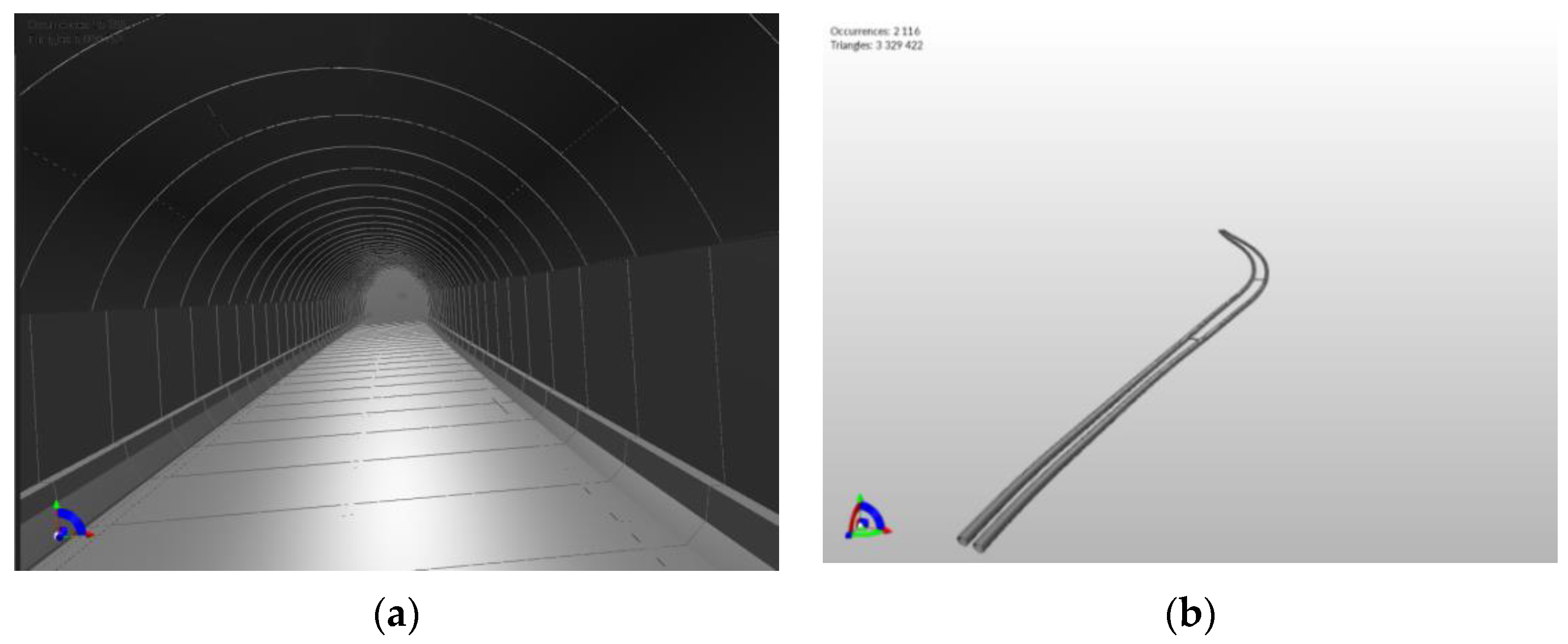

The BIM model built from the design drawings has an accuracy of LOD (level of detail) 400 and integrates multi-stage information on construction, management, maintenance, and transportation. The model is large in volume, high in accuracy, and carries excessive attribute information. For 3D real scene video fusion, the geometric appearance model of the tunnel matters most, and high-precision model details seriously affect the efficiency of multi-channel video fusion and real-time rendering. Therefore, the original BIM model needs to be lightweighted to meet the needs of real-time fusion of 3D virtual and real scenes. Professional lightweight processing software is used to process the original BIM model, and the number of triangular patches and the file size are reduced by merging, extracting, reorganizing, reducing, and repairing the data hierarchy. The original BIM model of a tunnel had 8.35 million triangular patches (Figure 4a), and the model after lightweight processing had 3.33 million (Figure 4b), a reduction of 60%. The model file size was 28.5 MB before and 12.5 MB after lightweight processing, a reduction of 56%. This lightweight processing method and its results can meet the needs of real-time 3D scene fusion and joint rendering while maintaining the integrity of the model.
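The two reduction percentages follow directly from the before and after values quoted above. As a quick check, the arithmetic can be written out as follows; the helper name `reduction_ratio` is introduced here only for illustration.

```python
def reduction_ratio(before: float, after: float) -> float:
    """Fraction by which a quantity shrank, e.g. 0.6 for a 60% reduction."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


# Triangular patch counts in millions, taken from the text above.
patch_reduction = reduction_ratio(8.35, 3.33)
# Model file sizes before and after lightweight processing, from the text above.
size_reduction = reduction_ratio(28.5, 12.5)

print(f"patches reduced by {patch_reduction:.0%}")
print(f"file size reduced by {size_reduction:.0%}")
```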

6. 3D Real-Time Fusion of Tunnel Multi-Channel Surveillance Video

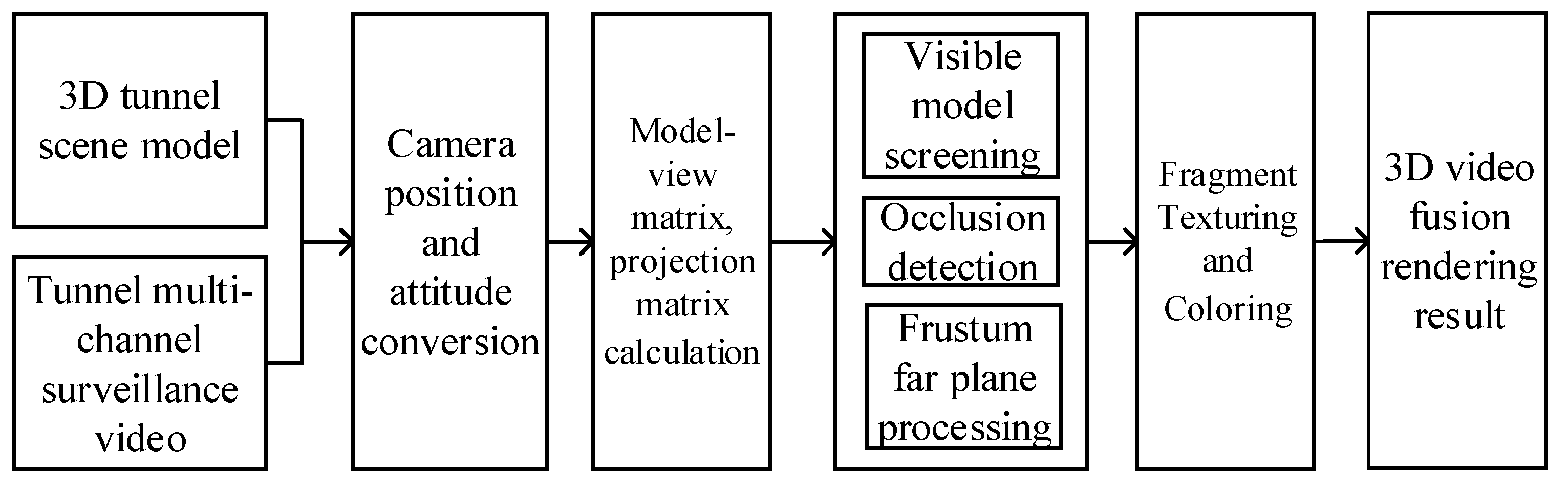

Based on the monitoring video acquisition and point calibration, the multi-channel monitoring video in the actual tunnel scene and the tunnel geometric model are fused in real time according to the topology through 3D registration and projection calculation. A 3D real-time fusion method of tunnel multi-channel video is proposed, as shown in Figure 5, including six steps.

Step 1: The point position, orientation, height, focal length, camera type, and other information of the camera were calibrated. The corresponding relationship between the real longitude and latitude coordinates and 3D space coordinates was established by combining the topological structure and important landmarks of the scene. The position and attitude information of the camera in the real environment was converted to the position and attitude value in 3D space.
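A minimal sketch of the conversion in Step 1 is given below. It is an illustration under stated assumptions, not the calibration procedure used in this work: it assumes a local east/north/up scene frame anchored at a known landmark, uses the equirectangular approximation (reasonable over the few kilometres a tunnel spans), and takes the camera attitude as a compass heading and a pitch angle. The function names and the mean Earth radius constant are introduced here for illustration only.

```python
import math

EARTH_RADIUS_M = 6_371_008.8  # mean Earth radius; an assumption of this sketch


def geo_to_scene(lon_deg, lat_deg, origin_lon_deg, origin_lat_deg):
    """Map longitude/latitude to local east/north metres around a scene origin.

    Uses the equirectangular approximation, which is adequate over short
    distances but is not a full map projection.
    """
    east = (math.radians(lon_deg - origin_lon_deg)
            * math.cos(math.radians(origin_lat_deg)) * EARTH_RADIUS_M)
    north = math.radians(lat_deg - origin_lat_deg) * EARTH_RADIUS_M
    return east, north


def attitude_to_direction(heading_deg, pitch_deg):
    """Unit view direction (east, north, up) from compass heading and pitch.

    Heading is measured clockwise from north; negative pitch looks downward.
    """
    h, p = math.radians(heading_deg), math.radians(pitch_deg)
    return (math.sin(h) * math.cos(p), math.cos(h) * math.cos(p), math.sin(p))


def camera_pose(lon_deg, lat_deg, height_m, heading_deg, pitch_deg, origin):
    """Return (position, target) in scene coordinates for a calibrated camera."""
    east, north = geo_to_scene(lon_deg, lat_deg, *origin)
    position = (east, north, height_m)
    direction = attitude_to_direction(heading_deg, pitch_deg)
    target = tuple(p + d for p, d in zip(position, direction))
    return position, target
```

The returned position and target can then serve as the viewpoint and observation target when the camera matrices are built in the next step.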

Step 2: Use the converted position and attitude to calculate the model view matrix, Mmv, and the projection matrix, Mp, of the camera in three-dimensional space. The calculation method of Mmv is shown in Formulas (1) and (2), which involve the following quantities: the orientation vector of the camera viewing cone, the side vector, and the positive up vector; the viewpoint position l, the observation target position t, and the position u directly above the camera during observation; and the scaling matrix, the rotation matrix, and the displacement matrix.
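One common construction that is consistent with these definitions is the look-at matrix used in OpenGL-style pipelines. The sketch below assumes that convention (right-handed, camera looking along its negative z axis) and a unit scaling matrix; it is an illustration and may differ in detail from Formulas (1) and (2). The helper names are hypothetical.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _dot(a, b):
    return sum(a[i] * b[i] for i in range(3))


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _normalize(v):
    length = math.sqrt(_dot(v, v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return [x / length for x in v]


def look_at(l, t, up):
    """Model view matrix (row-major 4x4) for a camera at l looking at t.

    `up` is an up direction; if only a point u above the camera is known,
    pass the difference u - l.
    """
    f = _normalize(_sub(t, l))      # orientation (forward) vector
    s = _normalize(_cross(f, up))   # side vector
    u = _cross(s, f)                # corrected up vector
    # Rotation rows (s, u, -f) combined with a displacement by -l.
    return [
        [s[0], s[1], s[2], -_dot(s, l)],
        [u[0], u[1], u[2], -_dot(u, l)],
        [-f[0], -f[1], -f[2], _dot(f, l)],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform(m, p):
    """Apply a 4x4 matrix to a 3D point and return homogeneous coordinates."""
    v = [p[0], p[1], p[2], 1.0]
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]
```

With this convention, the observation target always lands on the negative z axis of the camera frame, at a distance equal to its distance from the viewpoint.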

The calculation method of the camera projection matrix Mp is shown in Formulas (3) and (4), where (r, t) is the upper right position of the camera projection frustum, a is the field angle of view of the camera, f is the far clipping plane coefficient, and n is the near clipping plane coefficient; the formulas also use the aspect ratio of the captured picture and the focal length.
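As a hedged illustration of how these quantities fit together, the sketch below builds a symmetric perspective projection matrix in the OpenGL convention and derives the field angle from the focal length with the pinhole camera relation. The convention, the function names, and the `sensor_height_mm` parameter are assumptions of this sketch and may differ from Formulas (3) and (4).

```python
import math


def perspective(fov_y_deg, aspect, near, far):
    """Symmetric perspective projection matrix (row-major 4x4).

    (r, t) is the upper right corner of the frustum on the near plane,
    derived from the vertical field angle and the picture aspect ratio.
    """
    if not 0.0 < near < far:
        raise ValueError("require 0 < near < far")
    t = near * math.tan(math.radians(fov_y_deg) / 2.0)
    r = t * aspect
    return [
        [near / r, 0.0, 0.0, 0.0],
        [0.0, near / t, 0.0, 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2.0 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def fov_from_focal_length(focal_length_mm, sensor_height_mm):
    """Vertical field angle in degrees for a pinhole camera."""
    return math.degrees(2.0 * math.atan(sensor_height_mm / (2.0 * focal_length_mm)))


def project(m, p):
    """Project an eye-space point to normalized device coordinates."""
    v = [p[0], p[1], p[2], 1.0]
    x, y, z, w = (sum(m[r][c] * v[c] for c in range(4)) for r in range(4))
    return [x / w, y / w, z / w]
```

In this convention, points on the near clipping plane map to a depth of -1 and points on the far clipping plane map to +1, which is what the depth comparison in the later occlusion step relies on.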

Step 3: Use the model view matrix and projection matrix to calculate the view cone structure of the camera in 3D space, take the far clipping plane of the view cone as the supplementary scene structure of the far plane, and project the video image without corresponding scene model onto the far plane.

According to the width w and height h of the video picture, the viewing cone of the camera is constructed in the device space. Then, the far clipping plane depth coefficient and the near clipping plane depth coefficient of the camera viewing cone are set. The near clipping plane and the far clipping plane of the viewing cone are each a rectangle defined by four vertices, whose coordinates are determined by the picture width and height and the corresponding depth coefficient. For each vertex on the far clipping plane and near clipping plane of the viewing cone, the camera model view matrix (Mmv) and projection matrix (Mp) are used to project it back into the 3D scene to obtain the coordinates of the 8 vertices of the camera viewing cone in 3D space, as shown in Formula (5), which takes each vertex of the far and near clipping planes of the camera viewing cone in the device space and applies the window matrix corresponding to the camera video picture.
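The eight vertices can also be obtained geometrically, without inverting any matrix. The sketch below takes that route: for a symmetric perspective frustum it yields the same points as back-projecting the clipping plane corners, but it is a swapped-in equivalent construction rather than the matrix form of Formula (5). The function names and the corner ordering are assumptions of this sketch.

```python
import math


def _sub(a, b):
    return [a[i] - b[i] for i in range(3)]


def _cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def _normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    if length == 0.0:
        raise ValueError("zero-length vector")
    return [x / length for x in v]


def frustum_corners(l, t, up, fov_y_deg, aspect, near, far):
    """Return the 8 world-space vertices of the viewing cone: 4 near, then 4 far.

    On each plane the order is lower left, lower right, upper right, upper left.
    """
    f = _normalize(_sub(t, l))      # orientation vector
    s = _normalize(_cross(f, up))   # side vector
    u = _cross(s, f)                # up vector
    tan_half = math.tan(math.radians(fov_y_deg) / 2.0)
    corners = []
    for depth in (near, far):
        half_h = depth * tan_half
        half_w = half_h * aspect
        center = [l[i] + f[i] * depth for i in range(3)]
        for sx, sy in ((-1, -1), (1, -1), (1, 1), (-1, 1)):
            corners.append([center[i] + sx * half_w * s[i] + sy * half_h * u[i]
                            for i in range(3)])
    return corners


def bounding_box(points):
    """Axis-aligned bounding box (min_xyz, max_xyz) of a list of 3D points."""
    mins = [min(p[i] for p in points) for i in range(3)]
    maxs = [max(p[i] for p in points) for i in range(3)]
    return mins, maxs
```

The four far-plane vertices also define the supplementary far plane onto which video content without a corresponding scene model is projected.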

Step 4: Filter the model set visible to the camera according to the visual cone, reduce the amount of calculation, and accelerate the fusion process. Calculate the view cone bounding box of the camera, intersect the bounding box with the bounding box of each model in the scene, and the fusion calculation is only carried out on the model intersecting with the view cone bounding box of the camera, so as to avoid traversing all vertices of each model in the scene every time the video of each camera is fused with the scene.
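The filtering in Step 4 amounts to an axis-aligned bounding box overlap test. A minimal sketch follows; the box representation (a pair of minimum and maximum corners) and the model names in the usage example are hypothetical.

```python
def boxes_intersect(a, b):
    """True if two axis-aligned boxes (min_xyz, max_xyz) overlap or touch."""
    (a_min, a_max), (b_min, b_max) = a, b
    return all(a_min[i] <= b_max[i] and b_min[i] <= a_max[i] for i in range(3))


def visible_models(frustum_box, model_boxes):
    """Names of models whose bounding box intersects the view cone's box."""
    return [name for name, box in model_boxes.items()
            if boxes_intersect(frustum_box, box)]


# Usage with made-up boxes: only the first model overlaps the view cone box.
frustum_box = ([-20.0, -10.0, -10.0], [20.0, 10.0, -1.0])
models = {
    "lining_segment_12": ([-5.0, -3.0, -8.0], [5.0, 3.0, -2.0]),
    "ventilation_shaft": ([100.0, 0.0, -8.0], [110.0, 30.0, -2.0]),
}
candidates = visible_models(frustum_box, models)
```

Because the test uses the bounding box of the view cone rather than the cone itself, it is conservative: it may keep a model that lies just outside the cone, but it never discards one that is inside.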

Step 5: Use the model view matrix and projection matrix to render the scene depth information under the camera viewpoint, and detect the occlusion of the vertices of the model. The occluded part adopts the original texture of the model, and the non-occluded part is fused with the video image.
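The occlusion test in Step 5 resembles the depth comparison used in shadow mapping. The sketch below assumes the scene depth from the camera viewpoint is available as a 2D array and that each surface point has already been projected to image coordinates in [0, 1]; the small `bias` term, which guards against self-occlusion caused by numerical error, is a common practice and an assumption of this sketch.

```python
def is_occluded(depth_map, u, v, depth, bias=1e-3):
    """Depth-map test for one surface point seen from the camera.

    depth_map[row][col] holds the nearest scene depth rendered from the
    camera viewpoint; (u, v) are the point's projected image coordinates
    and `depth` is its own depth in the same units. Points that project
    outside the picture are treated as occluded, so they keep the
    original model texture.
    """
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return True
    rows, cols = len(depth_map), len(depth_map[0])
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return depth > depth_map[row][col] + bias


def choose_texture(depth_map, u, v, depth):
    """Pick the texture source for a point: model texture or video image."""
    return "model" if is_occluded(depth_map, u, v, depth) else "video"
```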

Step 6: Perform fragment texturing and coloring on the graphics card. After raster operations, each fragment is finally converted to the pixels seen on the screen, as shown in Formulas (6) and (7), where t is the fragment texture coordinate corresponding to the surface vertex p, c is the texture color of the fragment, T is the video image of the camera, and f is the texture sampling function. After the texture color of the fragment is calculated, the video image is drawn on the scene model to realize the fusion of video and 3D scene. 3D fusion examples for a bullet camera and a fisheye camera in a tunnel scene are shown in Figure 6.
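The texturing step can be illustrated on the CPU as projective texture mapping: a surface point p is projected with the camera matrices to obtain a texture coordinate, and the video frame T is sampled at that coordinate. The sketch below assumes OpenGL-style normalized device coordinates mapped to [0, 1], nearest-neighbour sampling, and an image stored with row 0 at the top; the toy matrices and function names are for illustration only.

```python
def mat_vec(m, v):
    """Multiply a row-major 4x4 matrix by a 4-vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def texture_coordinate(m_p, m_mv, p):
    """Project world point p through Mmv then Mp into [0, 1]^2 texture space.

    Returns None when the point is behind the camera or outside the picture.
    """
    eye = mat_vec(m_mv, [p[0], p[1], p[2], 1.0])
    x, y, _, w = mat_vec(m_p, eye)
    if w <= 0.0:
        return None
    s, t = (x / w + 1.0) / 2.0, (y / w + 1.0) / 2.0
    if not (0.0 <= s <= 1.0 and 0.0 <= t <= 1.0):
        return None
    return s, t


def sample_nearest(image, tex):
    """Nearest-neighbour texture sampling; image[row][col], row 0 at the top."""
    rows, cols = len(image), len(image[0])
    col = min(int(tex[0] * cols), cols - 1)
    row = min(int((1.0 - tex[1]) * rows), rows - 1)
    return image[row][col]


# Toy setup: camera at the origin looking down -z with a 90 degree field angle.
M_MV = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
M_P = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
       [0.0, 0.0, -1.0, -2.0], [0.0, 0.0, -1.0, 0.0]]
FRAME = [["a", "b"], ["c", "d"]]  # stand-in for one video frame

tex = texture_coordinate(M_P, M_MV, [-2.5, 2.5, -5.0])
color = sample_nearest(FRAME, tex)  # a point up and to the left of the axis
```

In a real-time system this computation runs per fragment in a shader; the sketch only mirrors the arithmetic.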

7. Tunnel Monitoring Data Aggregation and Scene Update Based on the IoT

Tunnel scenes include IoT facilities and control systems for temperature, humidity, wind speed, height limit detection, variable information boards, ventilation, lighting, drainage, firefighting, fire alarm, CO/VI (carbon monoxide/visibility) detectors, broadcast systems, speed limit boards, vehicle detection systems, emergency phones, signal lamps, illuminance meters, and others. The digital twin scene can label and manage the digital assets of facilities and equipment in the 3D space of real scene fusion, access the real-time data of IoT sensors in message format, and carry out alarm linkage based on business requirements. The IoT sensing data include static data and dynamic data. Static data are mainly used for asset accounts and equipment attributes, and dynamic data mainly refer to real-time monitored traffic, facility status, the environment, and so on. Figure 7 shows an example of tunnel data aggregation and fusion.

As shown in Figure 7, the scene after real-time fusion of multi-channel video and the BIM model is further labeled in real time with information such as camera point locations, concealed works, and auxiliary labels, which helps managers to understand the scene. In the digital twin scene, the point marking, status monitoring, and enhanced display of broadcasting, fans, and other facilities in the tunnel can be realized. In addition, the traffic detection, structural deformation monitoring, environmental monitoring, lighting, electric power, fire prevention, ventilation, water supply and drainage, traffic signal, variable information, broadcasting and telephone, and other IoT sensing facilities are associated with virtual devices in the digital twin scene and updated to the 3D scene at a certain frequency. Users can directly view device attributes and status information in 3D space. The scene also supports dynamic annotation of abnormal data and emergency response feedback control in 3D. In order not to affect the normal use of the professional subsystems, when uplink data are reported from a third-party application to the digital twin service system, access is provided through a standard Hypertext Transfer Protocol (HTTP) gateway, and the third party sends messages in the standard BV JavaScript Object Notation (JSON) format to the standard access gateway service. The service provides data parsing, authentication, parameter identification, and other operations. Users can also define and create various 3D labels in the 3D space and directly associate them with important information related to service management to help scene cognition and service management.
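To make the gateway's role concrete, the sketch below shows one way an uplink message could be authenticated, parsed, and checked before it is forwarded to the digital twin scene. The message fields (`device_id`, `metric`, `value`, `timestamp`), the token table, and the function name are all hypothetical; the actual message schema is not specified here.

```python
import json

# Hypothetical message schema: field name -> accepted Python type(s).
REQUIRED_FIELDS = {
    "device_id": str,
    "metric": str,
    "value": (int, float),
    "timestamp": str,
}
# Hypothetical token table; a real gateway would use its own credential store.
VALID_TOKENS = {"demo-token": "ventilation-subsystem"}


class GatewayError(ValueError):
    """Raised when an uplink message is rejected by the gateway."""


def handle_uplink(body: str, token: str) -> dict:
    """Authenticate, parse, and validate one uplink JSON message."""
    source = VALID_TOKENS.get(token)
    if source is None:
        raise GatewayError("authentication failed")
    try:
        message = json.loads(body)
    except json.JSONDecodeError as exc:
        raise GatewayError(f"malformed JSON: {exc}") from exc
    if not isinstance(message, dict):
        raise GatewayError("message must be a JSON object")
    for field, expected in REQUIRED_FIELDS.items():
        if field not in message:
            raise GatewayError(f"missing field: {field}")
        value = message[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            raise GatewayError(f"bad type for field: {field}")
    return {"source": source, **{k: message[k] for k in REQUIRED_FIELDS}}
```

Keeping validation in the gateway means a malformed or unauthenticated report is rejected before it can touch the 3D scene, while the professional subsystems continue to run unchanged.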

8. Data-Driven Tunnel Traffic Operations Simulation and Prediction

Based on the historical traffic volume monitoring data and management requirements, the data channel is opened between the digital twin scene and the professional traffic simulation software, and can carry out 3D replay, predictive deduction, and auxiliary decision making of the traffic operations scene in the digital twin scene. Statistics and analysis of the actual tunnel monitoring video structured data and traffic monitoring data update the hourly origin destination (OD), vehicle type ratio, and other parameters of the traffic simulation model; fit the change law of the OD and vehicle type ratio in the same time period every week, month, and year; and update the recommended parameter values of the OD and vehicle type ratio in different periods on different days such as working days, weekends, holidays, and major activities, to support 3D replay and law analysis of tunnel traffic scenes. The tunnel simulation example is shown in

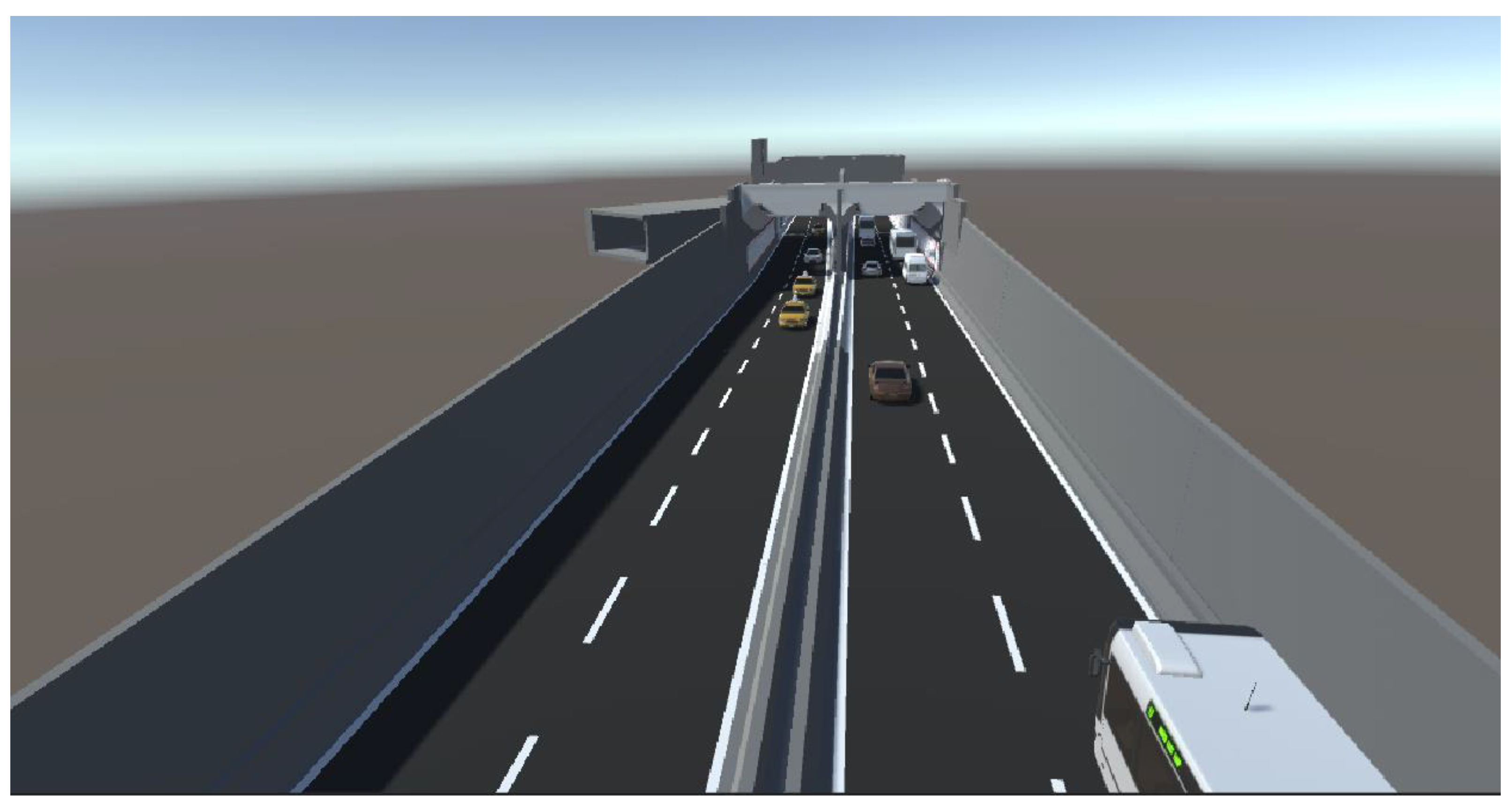

Figure 8.
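The parameter update described above can be sketched for the simplest case: recommended hourly volume and vehicle type ratio per day type. This is a simplified illustration, not the fitting procedure used in this paper: OD estimation is omitted, the record layout `(date, hour, vehicle_type, count)` is assumed, and the function names are hypothetical.

```python
from collections import defaultdict
from datetime import date


def day_type(d: date, holidays=frozenset()) -> str:
    """Classify a date as 'holiday', 'weekend', or 'workday'."""
    if d in holidays:
        return "holiday"
    return "weekend" if d.weekday() >= 5 else "workday"


def recommend_parameters(records, holidays=frozenset()):
    """Aggregate detector counts into per-(day type, hour) simulation inputs.

    records: iterable of (date, hour, vehicle_type, count) tuples.
    Returns {(day_type, hour): {"mean_volume": float, "type_ratio": {type: share}}}.
    """
    totals = defaultdict(lambda: defaultdict(int))
    days_seen = defaultdict(set)
    for d, hour, vehicle_type, count in records:
        key = (day_type(d, holidays), hour)
        totals[key][vehicle_type] += count
        days_seen[key].add(d)

    result = {}
    for key, by_type in totals.items():
        volume = sum(by_type.values())
        result[key] = {
            # Average hourly volume over the days observed for this key.
            "mean_volume": volume / len(days_seen[key]),
            # Share of each vehicle type within the hour.
            "type_ratio": (
                {t: c / volume for t, c in by_type.items()} if volume else {}
            ),
        }
    return result
```

Re-running the aggregation as new monitoring data arrive keeps the recommended values for working days, weekends, and holidays in step with observed traffic.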

As shown in Figure 8, starting from a user’s need or problem in tunnel traffic management, it is possible to identify the elements of the tunnel digital twin scene that are involved; to describe the need or problem in the tunnel traffic digital twin scene; to adjust the parameters that affect it and analyze how the scene evolves under different parameter values; to compare the results for different parameter values through repeatable simulation analysis; and to form auxiliary decision-making suggestions. Tunnel traffic simulation can be used to predict and deduce changes in vehicle flow, to regulate traffic signals, to respond to traffic incidents, and to test vehicle restrictions with respect to truck limits, traffic congestion, and other vehicle-related factors, and therefore to find problems and give early warnings.
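The adjust-and-compare loop can be sketched with a deliberately crude stand-in for the professional traffic simulation software: a volume-to-capacity ratio. The capacity of 5248 vehicles/h for two-way four lanes is the value reported for the demonstration tunnel in Section 10.1; the linear scaling of capacity with open lanes and the warning threshold of 0.9 are assumptions made for illustration.

```python
CAPACITY_VEH_PER_H = 5248   # two-way four lanes, demonstration tunnel (Section 10.1)
LANES = 4
WARNING_RATIO = 0.9         # hypothetical early-warning threshold


def evaluate(demand_veh_per_h, closed_lanes=0):
    """Evaluate one scenario; capacity is assumed to scale linearly with open lanes."""
    open_lanes = LANES - closed_lanes
    if open_lanes <= 0:
        raise ValueError("at least one lane must remain open")
    capacity = CAPACITY_VEH_PER_H * open_lanes / LANES
    ratio = demand_veh_per_h / capacity
    return {"capacity": capacity, "ratio": ratio, "warning": ratio >= WARNING_RATIO}


def compare(scenarios):
    """Run every scenario and rank them from most to least loaded."""
    results = {name: evaluate(**params) for name, params in scenarios.items()}
    return sorted(results.items(), key=lambda item: item[1]["ratio"], reverse=True)


if __name__ == "__main__":
    ranked = compare({
        "normal": {"demand_veh_per_h": 3000},
        "one lane closed": {"demand_veh_per_h": 3000, "closed_lanes": 1},
        "peak, one lane closed": {"demand_veh_per_h": 3800, "closed_lanes": 1},
    })
    for name, result in ranked:
        print(f"{name}: v/c = {result['ratio']:.2f}, warning = {result['warning']}")
```

In practice, `evaluate` would be replaced by a call into the simulation model, while the surrounding loop that varies parameters and ranks outcomes stays the same.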

9. Tunnel Digital Twin Operations Management Application Based on Virtual-Real Fusion

Using the constructed tunnel digital twin scene, and considering the actual business requirements of tunnel traffic flow, accident rescue, facility management, and emergency response in tunnel operations management, we explored a tunnel operations management method based on the digital twin scene. Compared with the traditional video wall monitoring method, the digital twin scene adds 3D spatial information, which allows the tunnel scene to be monitored and controlled in a more intuitive way. The reference application functions are shown in Figure 9. The main application innovations are described below.

(1) Virtual top view global monitoring: By fusing the discrete monitoring videos distributed across the tunnel area into the virtual reality scene model, the spatial association among the videos is established. This provides continuous and intuitive monitoring of multiple areas and makes it easy for monitoring managers to keep an overview of the overall situation. Combined with real-time association with 2D pictures, global top-view monitoring of the tunnel across cameras is realized.

(2) Tunnel automatic video patrol: Key patrol points and patrol lines are set in the digital twin scene to realize automatic 3D video patrol along the driving direction, in line with the cognitive habits of the monitoring personnel. This reduces the frequency of manual on-site patrols and the burden of manually operating ball cameras for inspection, makes daily tunnel monitoring more targeted and patrols more efficient, and improves the ability to keep tunnel traffic unimpeded.

(3) Two- and three-dimensional linkage emergency response: When an abnormality such as congestion, an accident, or a vehicle breakdown occurs in a tunnel, the related videos can be quickly called up and confirmed in 3D space according to the location of the alarm or abnormal condition. The status of vehicles upstream and downstream of a congestion point can be viewed to help find and analyze the causes of congestion, which makes the discovery, response, and disposal of abnormal events more intuitive and convenient.

(4) Three-dimensional integration and linkage supervision of tunnel facilities: By effectively fusing fragmented video and multi-source IoT sensing information, various types of dynamic IoT sensing information can be accessed in real time and bound to 3D dynamic tags. The monitoring video can be used to patrol the important facilities in the tunnel and, combined with the relevant IoT sensor data and analysis, to determine the status of tunnel facilities and equipment and to discover and handle facility failures in a timely manner.
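The linkage in item (3), from an alarm location to the videos worth viewing, can be sketched as a lookup along the tunnel axis. The sketch assumes that every camera is registered with a chainage (distance along the line) and that traffic travels in the direction of increasing chainage; the `Camera` class and `cameras_for_alarm` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    camera_id: str
    line: str           # "east" or "west"
    chainage_m: float   # assumed: distance from the entrance portal of the line


def cameras_for_alarm(cameras, line, alarm_chainage_m, neighbours=1):
    """Return (upstream, nearest, downstream) cameras for an alarm location.

    Traffic is assumed to travel towards increasing chainage, so cameras with
    a smaller chainage than the nearest one are upstream of the event.
    """
    on_line = sorted(
        (c for c in cameras if c.line == line), key=lambda c: c.chainage_m
    )
    if not on_line:
        raise LookupError(f"no cameras registered on line: {line}")
    idx = min(
        range(len(on_line)),
        key=lambda i: abs(on_line[i].chainage_m - alarm_chainage_m),
    )
    upstream = on_line[max(0, idx - neighbours):idx]
    downstream = on_line[idx + 1:idx + 1 + neighbours]
    return upstream, on_line[idx], downstream
```

The nearest camera confirms the event itself, while the upstream and downstream neighbours show the queue building behind it and the state of traffic ahead.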

The application of new technologies will inevitably lead to changes in corresponding management modes. Combined with the characteristics of digital twin technology, business requirements of tunnel operations monitoring, and usage habits of information platforms, it is necessary to constantly innovate the management mode and improve the management efficiency.

10. Demonstration Application and Effect Analysis

Considering a tunnel scene in China as an example, in this paper, we launch a demonstration application of tunnel digital twin construction and operations management based on 3D video fusion, and analyze the application effect of the demonstration.

10.1. Selection and Implementation of the Demonstration

The demonstration tunnel consists of an east line and a west line. The east tunnel is 2.56 km long and the west tunnel is 2.55 km long, and two cross passages connect the two tunnels in the middle. The design speed of the tunnel is 40 km/h, the tunnel cross section is two-way four lanes, the lane width is 3.75 m, the height limit is 4.2 m, and the maximum capacity of the two-way four lanes is 5248 vehicles/h. There are 48 surveillance cameras in the east and west lines of the tunnel, including gun (bullet) and ball (dome) cameras, installed at intervals of about 100 m and at heights of 4.5–5 m. The video is stored and managed by using a digital video recorder (DVR). The tunnel operations center has an integrated broadcasting system, variable information board, speed limiting board, vehicle detection system, emergency telephone, signal lamp, illuminance meter, intelligent control terminal, and other professional subsystems, which provide good conditions for scene perception and control. The tunnel applies new information technologies such as BIM, 5G, and the Internet of Things, and is intended to serve as a demonstration of full life cycle management and a model of new technology application, which provides a good data and network foundation.

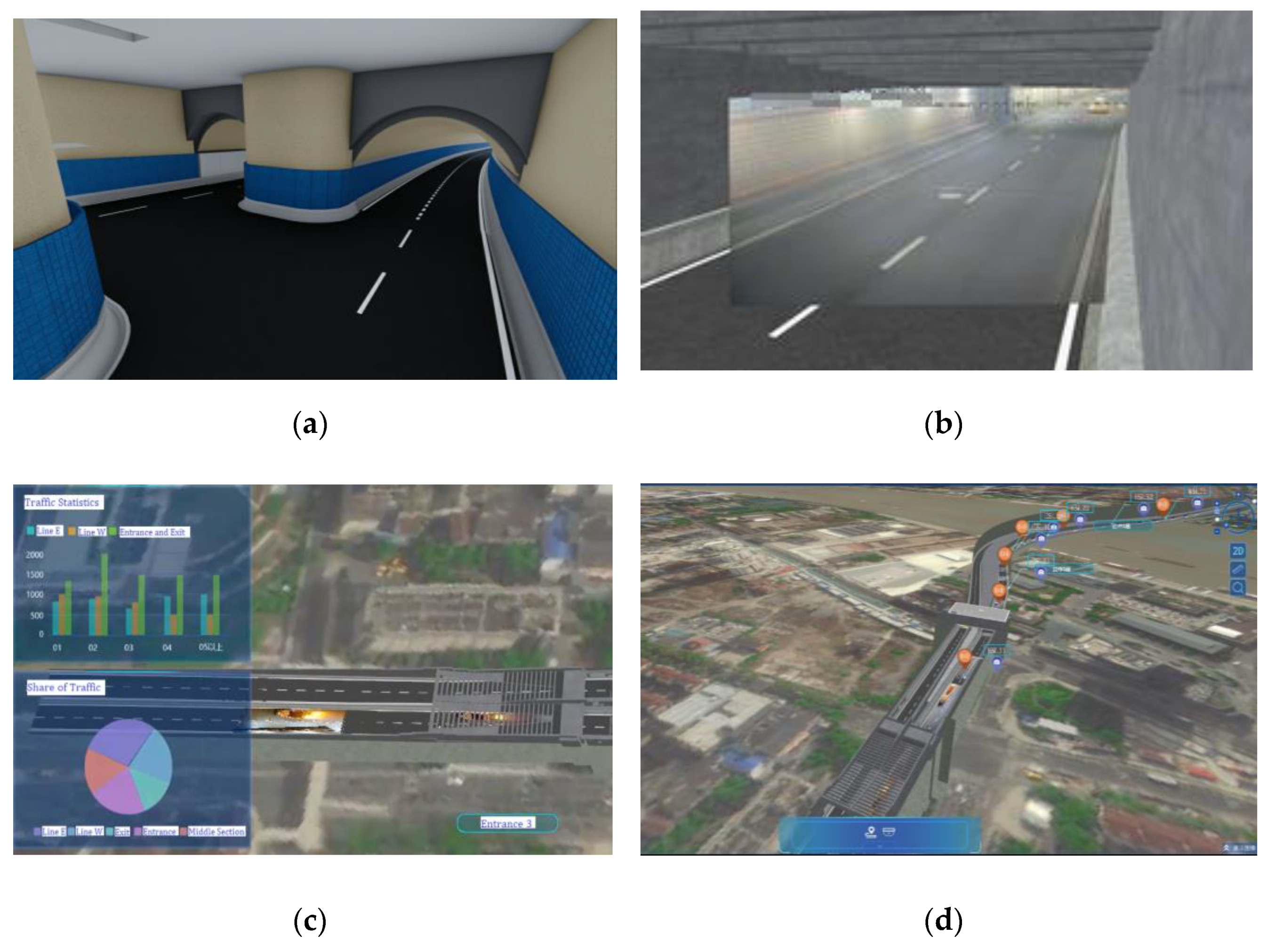

Through data acquisition, scene modeling, camera calibration, scene fusion, system design, function development, and other processes, the above key technologies are integrated to carry out the digital twin construction and operations management application of 3D video fusion for the demonstration tunnel. The demonstration process is shown in Figure 10.

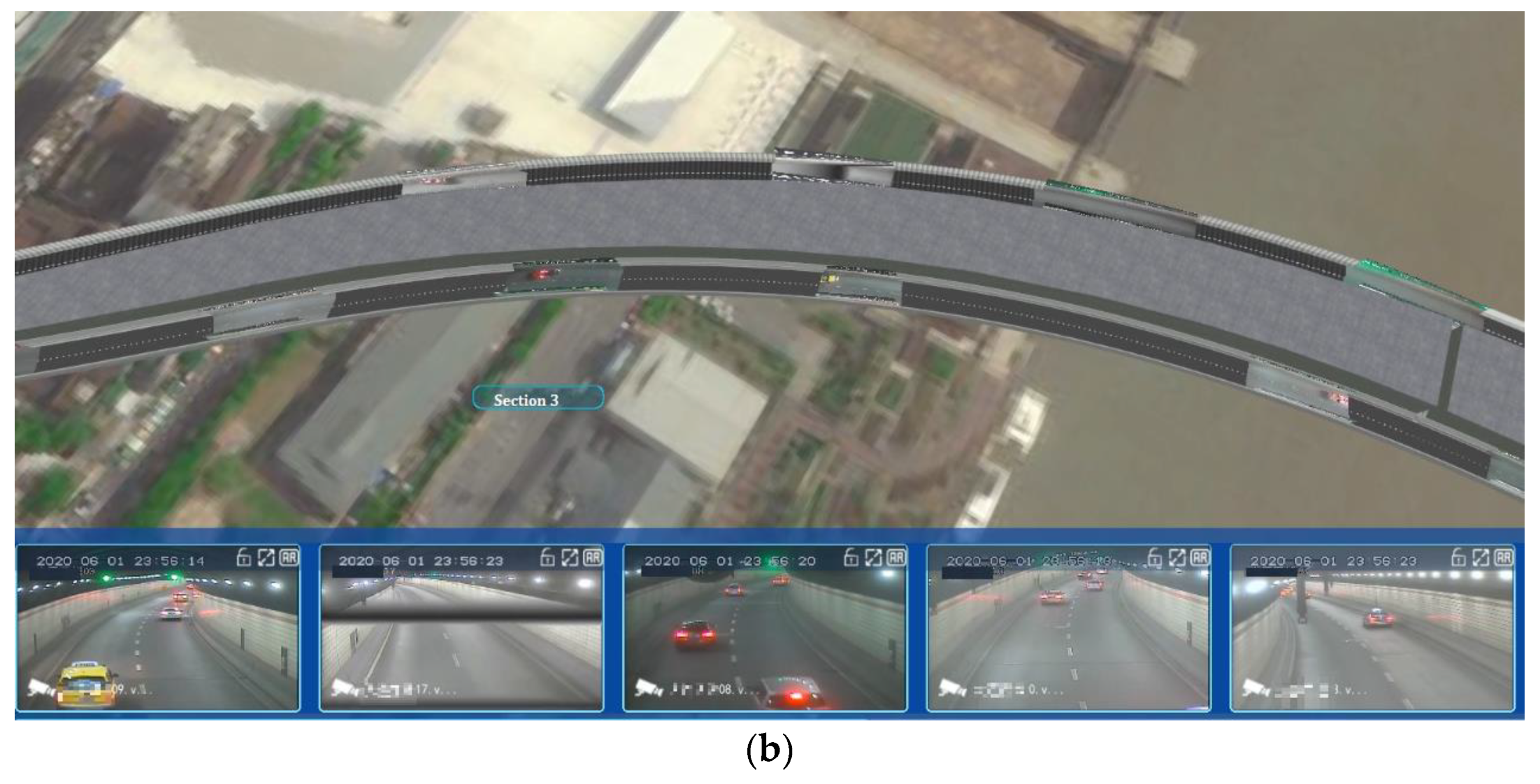

Figure 10a shows an example of the BIM modeling result of the demonstration application tunnel. It can be seen that the internal structure of the model maintains geometric integrity after the lightweight treatment.

Figure 10b shows an example of the effect of monitoring video fusion within the tunnel. The scene and video picture have a high matching degree by calibrating the position, orientation, and projection calculation of camera points.

Figure 10c shows an example of fusion of the IoT sensor data for tunnel services. In addition to the direct annotation of dynamic and static labels in 3D scenes, it is also possible to display real-time and historical data such as temperature and humidity, wind speed, and traffic volume in the form of charts.

Figure 10d shows the demonstration tunnel digital twin scene constructed by integrating the above data and key technologies. It can be seen that the tunnel scenes and data associations are more in line with managers’ visual awareness.
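The projection calculation mentioned for Figure 10b can be illustrated with the standard pinhole camera model, which is used here as a stand-in because the projection formulas of the system are not given in this section. The function names, the world-to-camera rotation convention, and the numerical values in the usage note are assumptions.

```python
def project_point(point_w, cam_pos, rot_w2c, focal_px, cx, cy):
    """Project a 3D world point to pixel coordinates with a pinhole model.

    point_w, cam_pos: (x, y, z) in world coordinates.
    rot_w2c: 3x3 world-to-camera rotation as a list of rows; the camera looks
             along its own +z axis.
    focal_px: focal length in pixels; (cx, cy): principal point in pixels.
    Returns (u, v), or None when the point lies behind the camera.
    """
    # Translate into a camera-centred frame, then rotate into camera axes.
    delta = [p - c for p, c in zip(point_w, cam_pos)]
    x, y, z = (sum(r * d for r, d in zip(row, delta)) for row in rot_w2c)
    if z <= 0:
        return None
    # Perspective division followed by the shift to the principal point.
    return (focal_px * x / z + cx, focal_px * y / z + cy)


def in_frame(pixel, width, height):
    """Check whether a projected pixel falls inside the video frame."""
    if pixel is None:
        return False
    u, v = pixel
    return 0 <= u < width and 0 <= v < height
```

For example, with an identity rotation, a camera at the origin, a focal length of 1000 pixels, and a principal point at (960, 540), the world point (1, 0.5, 10) projects to pixel (1060, 590). Calibrating the position and orientation of each camera amounts to choosing `cam_pos` and `rot_w2c` so that such projections line up with the model geometry.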

10.2. Demonstration Application Effect Analysis

Combined with the construction and operations status of the demonstration tunnel, we carry out the tunnel digital twin demonstration application verification based on 3D video fusion, develop a tunnel digital twin intelligent operations system, and carry out management applications such as tunnel traffic flow, accident rescue, facility management, and emergency response based on digital twin.

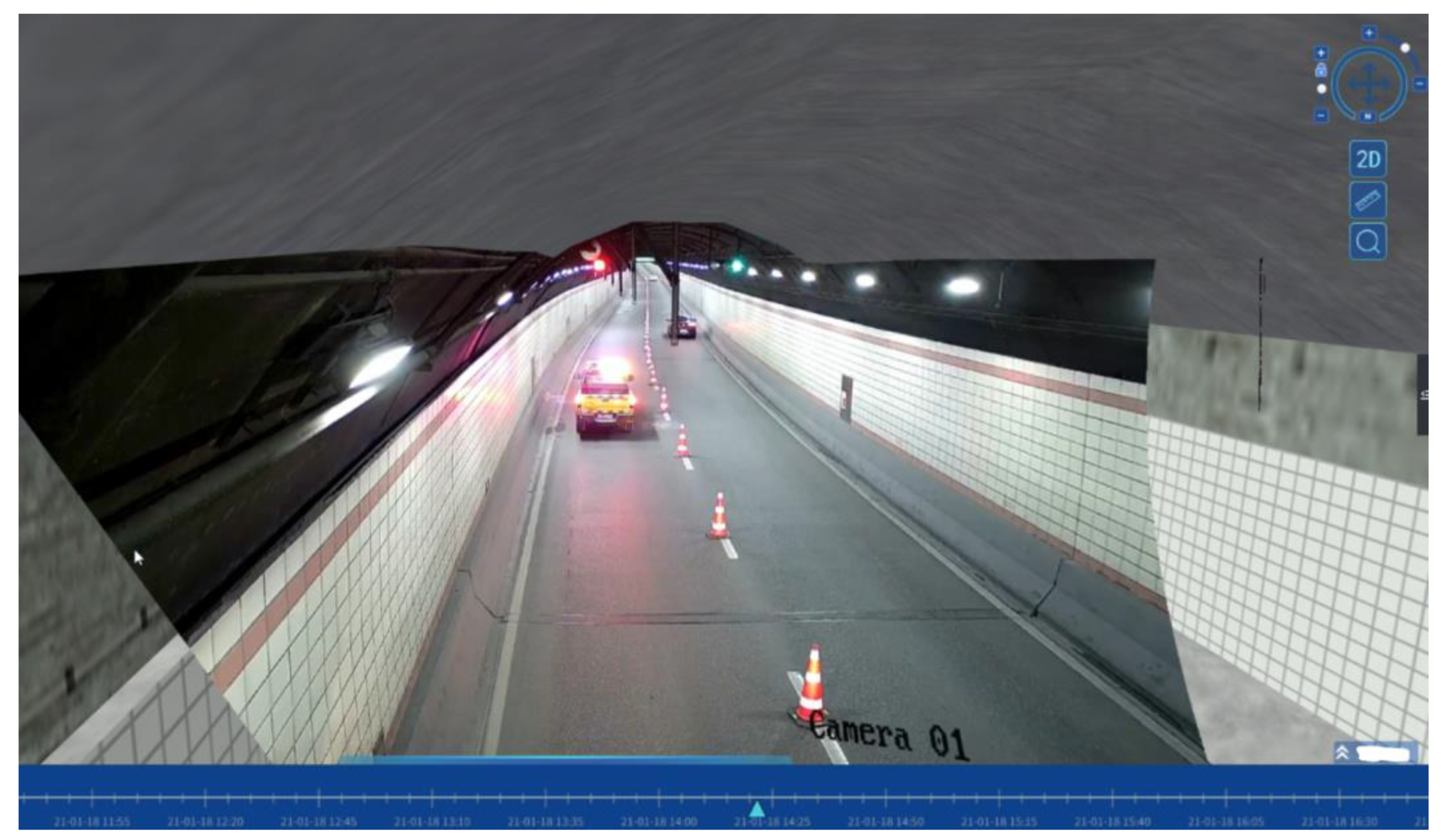

(1) Global monitoring of tunnel traffic flow reduces the cognitive pressure on monitoring personnel. The tunnel digital twin intelligent operations system establishes a spatial relationship between the 2D video and the 3D scene. Global traffic status monitoring of the tunnel is provided through a virtual top view, and the user can visually check the running status of the tunnel, as shown in Figure 11. The system also supports free roaming, viewing, and analysis in 3D space, which reduces the cognitive pressure on monitoring personnel.

(2) Full-line intelligent video patrol reduces manual patrol costs. With the constructed tunnel digital twin scene and system, users can customize patrol lines, patrol points, patrol frequencies, and patrol times in the scene. Following the configured patrol lines, the system can carry out full-line automatic video patrols in line with driving cognition, as displayed in Figure 12. This reduces the number of manual field patrols and manual ball camera inspections, and thus the cost of manual patrol.

(3) Panoramic replay of historical video improves incident traceability. With the constructed tunnel digital twin scene and system, all the surveillance videos in the scene are placed under unified clock control. The clock can be rolled back uniformly to a given time in the past, according to the user’s requirements, to trace or collect evidence on the whole process of the occurrence, development, and disposal of an abnormal event, as displayed in Figure 13, which improves the efficiency of tracing and tracking abnormal events.

(4) Converged fusion of tunnel IoT facilities realizes 3D digital asset management. The constructed tunnel digital twin scene and system support real-time access to the state and data of multi-source IoT sensing facilities, as displayed in Figure 14, and therefore realize 3D digital asset management of the tunnel civil structure, electromechanical facilities, and special equipment.

(5) Two- and three-dimensional linkage response to tunnel alarms improves accident rescue and emergency response capabilities. The tunnel digital twin system includes a 2D map and a 3D scene. When receiving an alarm or discovering an abnormality, users can quickly jump between 2D and 3D to confirm, roam, and view the scene, as displayed in Figure 15, which improves the operating unit’s capabilities in alarm confirmation, anomaly location, accident rescue, and emergency response.

In addition, the tunnel digital twin intelligent monitoring system with 3D live video fusion also supports multi-level control, space tracking, camera relay, magnifying glass, and other operations, which revolutionizes the 2D matrix monitoring scheme and realizes management services in 3D space.
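The customizable patrol in item (2) can be sketched as a timeline built from patrol points ordered along the driving direction. The function name, the virtual camera speed, and the dwell time are hypothetical parameters introduced for illustration; the scheduling logic of the system itself is not described in this paper.

```python
def build_patrol_timeline(points, speed_mps, dwell_s):
    """Order patrol points along the driving direction and time the patrol.

    points: list of (name, chainage_m) tuples, in any order.
    speed_mps: assumed speed of the virtual viewpoint between points.
    dwell_s: assumed time spent looking at each point.
    Returns a list of (name, arrive_s, leave_s) tuples.
    """
    if speed_mps <= 0:
        raise ValueError("speed_mps must be positive")
    if dwell_s < 0:
        raise ValueError("dwell_s must not be negative")

    ordered = sorted(points, key=lambda p: p[1])
    timeline = []
    clock = 0.0
    previous_chainage = None
    for name, chainage in ordered:
        if previous_chainage is not None:
            # Travel time from the previous patrol point.
            clock += (chainage - previous_chainage) / speed_mps
        timeline.append((name, clock, clock + dwell_s))
        clock += dwell_s
        previous_chainage = chainage
    return timeline
```

For instance, three points at 0 m, 1280 m, and 2560 m, patrolled at 20 m/s with a 10 s dwell, give one pass of 158 s; the patrol frequency then follows from how often such a pass is scheduled.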

10.3. Comparison of Effect before and after Demonstration

Through the construction of a digital twin scene, discrete 2D surveillance video and 3D tunnel scene are fused to realize the fusion of virtual and real monitoring, and the monitoring application effects before and after the tunnel pilot are compared.

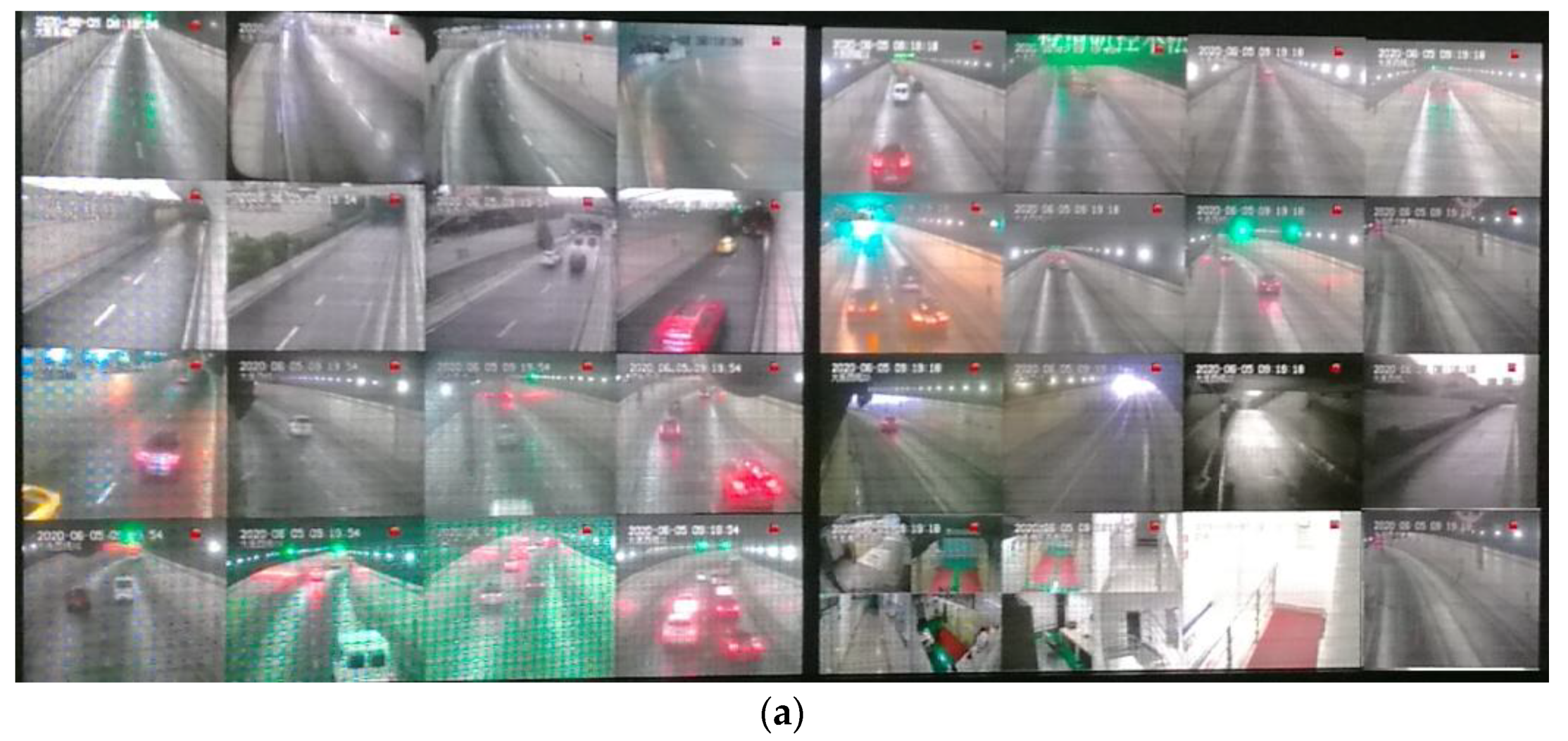

Figure 16a shows the current matrix monitoring scheme of the tunnel. It can be seen that the feeds from multiple gun cameras are tiled on the large screen and that the video from each channel looks very similar. When an abnormal problem is discovered, it is difficult to quickly match the video to a specific location in the real tunnel.

Figure 16b shows the global top view monitoring effect after the fusion of the real video. It can be seen that the spatial correlation between 2D video and 3D scenes is directly established in the scene after fusion. Combined with auxiliary location labels, users can quickly locate, roam, interact, and view in 3D space. The 3D live video fusion tunnel scene can also be linked directly to the 2D video, so that two- and three-dimensional operations are associated and interact with each other.

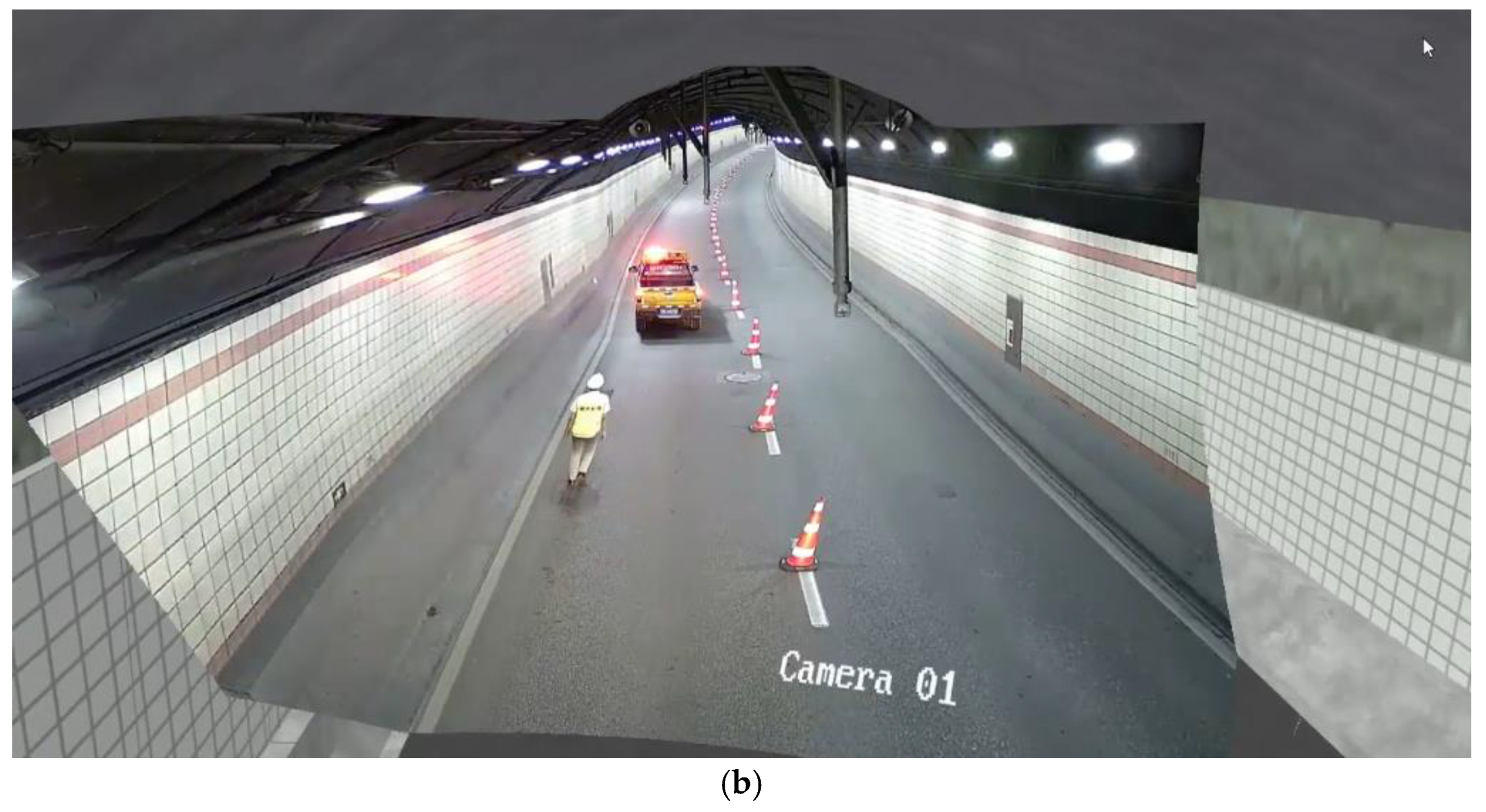

Figure 17a shows tunnel monitoring personnel manually operating a ball camera for inspection. In the demonstration tunnel, only four ball cameras are installed, at the entrance and exit of the tunnel, and they focus on monitoring the traffic conditions there. Inspection inside the tunnel is still carried out mainly by patrol vehicles.

Figure 17b shows the effect of automated 3D video patrol; the monitoring personnel can customize patrol lines, points, and frequencies, and the system can carry out automatic video rotation of the east and west lines of the tunnel in line with the spatial cognition of the monitoring personnel.

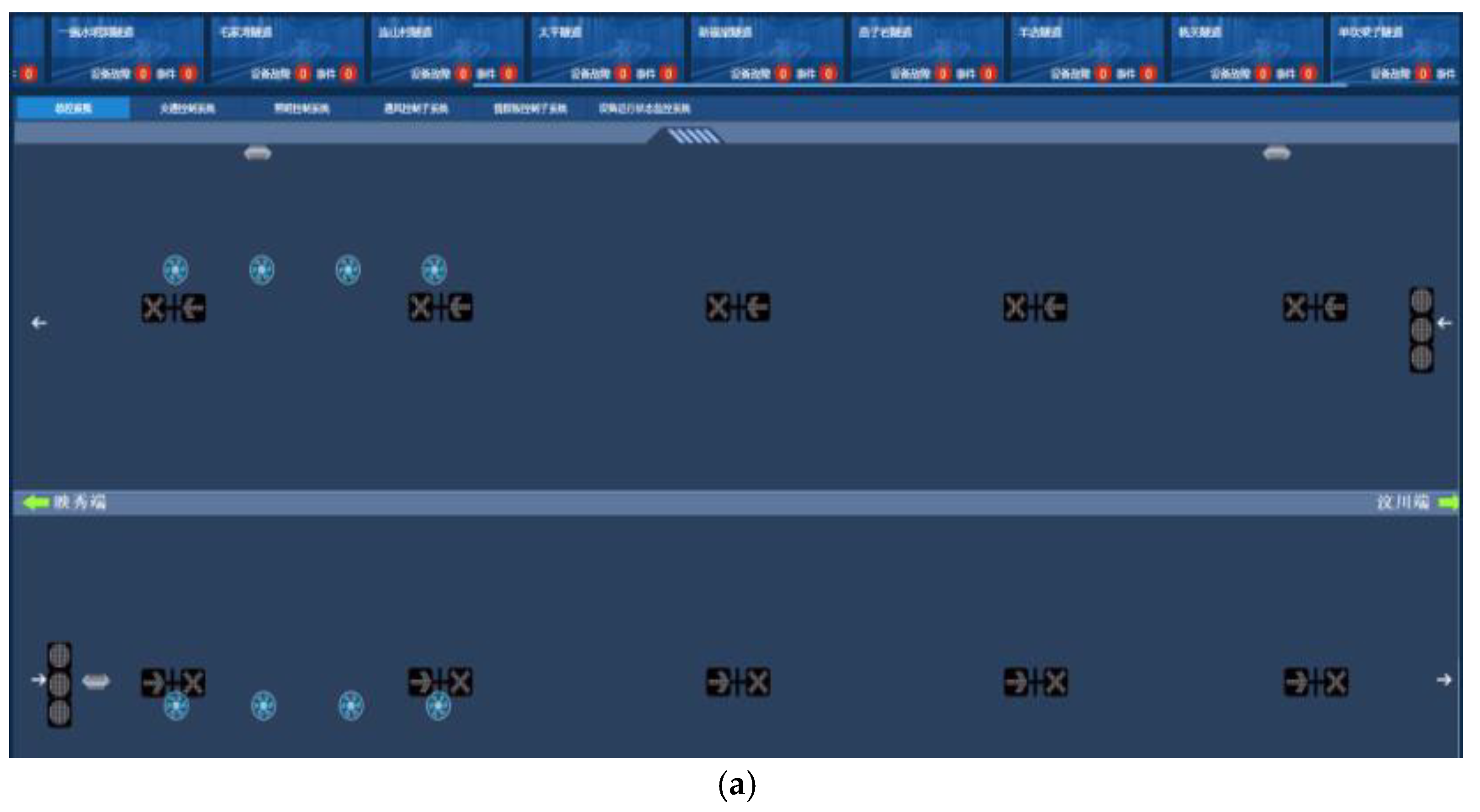

Figure 18a shows the 2D interfaces of subsystems such as signal, ventilation, and broadcast in the tunnel monitoring center. It can be seen that each professional subsystem and the video system are separated from each other, and the data are independent, with complex linkages. In Figure 18b, the fans and broadcasts in the tunnel are registered in 3D real space and displayed in real time, which realizes the 3D integration of tunnel IoT sensing data.

Through the demonstration application, it can be seen that the problem of video fragmentation is solved by the tunnel digital twin intelligent operations technology and system based on 3D video fusion. The system helps to resolve the separation of video and business data, and provides tool support for the 2D and 3D linkage response to emergency events. It also improves the efficiency of managing business requirements such as tunnel traffic flow, accident rescue, facility management, and emergency response.

In fact, construction and application cases of tunnel digital twins are still relatively rare at present. In this paper, we have outlined a useful exploration in this direction. The virtual-real fusion of multi-channel surveillance videos is used to replace all-element object modeling in tunnel scenes, which increases the realism of scene perception and reduces the workload of complex element modeling and simulation.

10.4. Demonstration Optimization Suggestions

Through the pilot application of the demonstration tunnel, it is found that there are still deficiencies, mainly including insufficient resolution of the analog camera, failure of the existing camera to achieve full coverage of the tunnel scene, reliance on other subsystems for the access of the IoT data, etc. In view of the above problems, the following suggestions for further optimization of the intelligent management of the demonstration tunnel scene are given:

(1) Improve the resolution of field monitoring cameras. The real scene fusion technology cannot solve the shortage of field monitoring resolution. It is suggested to improve the camera resolution of field monitoring terminals when conditions permit, and use high-definition digital cameras to replace the analog cameras currently in service, and therefore, improve the basic conditions of field monitoring cameras.

(2) Improve the coverage of field cameras. At present, the coverage area of the in-service cameras of the demonstration tunnel is limited and full coverage of the tunnel scene cannot be realized. It is suggested to increase the coverage of field cameras during the later upgrading of field terminals. The main methods are as follows: First, reduce the distance between cameras and deploy cameras in scenes at intervals of 50 or 70 m. Second, increase the coverage and flexibility of scene monitoring by combining gun cameras, ball cameras, fisheye lenses, etc. For example, use a fisheye or wide-angle camera for collection in curved areas, or install a ball camera in a key monitoring area for space tracking, ball camera linkage, etc.

(3) Form dynamic IoT sensing data access, analysis, and application standards. The method of data transmission in message mode and associations among systems in control mode are in line with the reality of tunnel information management. For various types of IoT sensing data such as traffic detection, environmental detection, and structure detection, it is necessary to form a standard mode and data standard for data access, analysis, and application in combination with the reality of managing business requirements.

(4) Deepen the innovation of the tunnel intelligent management mode based on 3D live video fusion. Building on the experience of the pilot application, continue to deepen the integration of business requirements with virtual-real fusion technology, and promote management mode innovation based on 3D real-time video fusion technology and systems, such as panoramic tunnel emergency command, tunnel subdivision, and hierarchical management.
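The effect of the spacing proposed in suggestion (2) can be estimated with simple arithmetic. The line lengths and the number of cameras in service are those reported in Section 10.1; the assumption of uniformly spaced cameras with one at each portal is ours and ignores curves, cross passages, and the differing fields of view of individual cameras.

```python
import math

LINE_LENGTHS_M = {"east": 2560, "west": 2550}   # from Section 10.1
CAMERAS_IN_SERVICE = 48                         # from Section 10.1


def cameras_needed(length_m, spacing_m):
    """Cameras for one line, assuming one at each portal and uniform spacing."""
    if spacing_m <= 0:
        raise ValueError("spacing_m must be positive")
    return math.ceil(length_m / spacing_m) + 1


def total_cameras(spacing_m):
    """Total cameras for both lines at the given spacing."""
    return sum(cameras_needed(length, spacing_m) for length in LINE_LENGTHS_M.values())


# Mean spacing implied by the cameras currently in service (about 106 m).
current_mean_spacing_m = sum(LINE_LENGTHS_M.values()) / CAMERAS_IN_SERVICE

if __name__ == "__main__":
    print(f"current mean spacing: {current_mean_spacing_m:.0f} m")
    for spacing in (70, 50):
        print(f"{spacing} m spacing: {total_cameras(spacing)} cameras")
```

Under these assumptions, a spacing of 70 m requires 76 cameras and a spacing of 50 m requires 105 cameras across both lines, compared with the 48 currently in service.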

11. Conclusions and Future Works

In this study, a digital twin scene of tunnel traffic was constructed and applied to tunnel traffic flow, accident rescue, facility management, emergency response, and other aspects. It provides new ideas and means for analyzing and solving complex problems in tunnel traffic. In response to the problems existing in tunnel digital operation, such as video fragmentation, separation of video and business data, and lack of 2D and 3D linkage response means, we focused on tunnel digital twin construction and virtual-real fusion operations based on 3D video fusion. After analyzing the demands and characteristics of operational business requirements such as tunnel traffic flow, accident rescue, facility management, and emergency response, BIM technology was used to form a 3D model of the tunnel body, lining, tunnel door, road, concealed works, ancillary facilities, electrical facilities, and other physical objects consistent with the actual scene of the tunnel. Through 3D registration and projection calculation, the multi-channel surveillance video in the tunnel scene and the tunnel geometry model were combined in real time according to topology to form a panoramic digital twin scene of the tunnel with 3D real scene fusion. We integrated multi-source IoT sensing data and associated the facilities and equipment in the actual tunnel scene (traffic detection, structural deformation monitoring, environmental monitoring, lighting, power, fire protection, ventilation, water supply and drainage, traffic signal, variable information board, broadcasting, and telephone) with virtual equipment in the digital twin to realize scene registration, data access, dynamic annotation, and real-time update.

Considering a tunnel in China as the example, we performed demonstration applications of tunnel operations management based on the digital twin, such as virtual top view global monitoring, automatic tunnel video patrol, two- and three-dimensional linkage emergency response, and 3D integration and linkage supervision of tunnel facilities. The application results showed that the tunnel digital twin intelligent operations technology and system based on 3D video fusion solved the problem of video fragmentation, helped to resolve the separation of video data and business data, and provided tool support for 2D and 3D linkage response to emergencies. It can help to improve the efficiency of managing business requirements such as tunnel traffic flow, accident rescue, facility management, and emergency response, and can support the accurate perception and fine management of tunnel infrastructure and traffic operation.

The disadvantages of the system and areas for improvement include the following: the camera position and attitude need to be calibrated one by one, the two-way interaction between virtual and real models has yet to be realized, and the video data structuring algorithm is not yet integrated. Future work should include multi-source online data-driven 3D scene updates; data-driven tunnel traffic operations simulation and predictive deduction; and tunnel digital twin perception and application scenario analysis based on virtual-real fusion.