5.1. Data Analysis in Relation to the Hotel Category

The relationship between various factors and the rating category of the accommodation is explored in order to gain a better understanding of the rating categories.

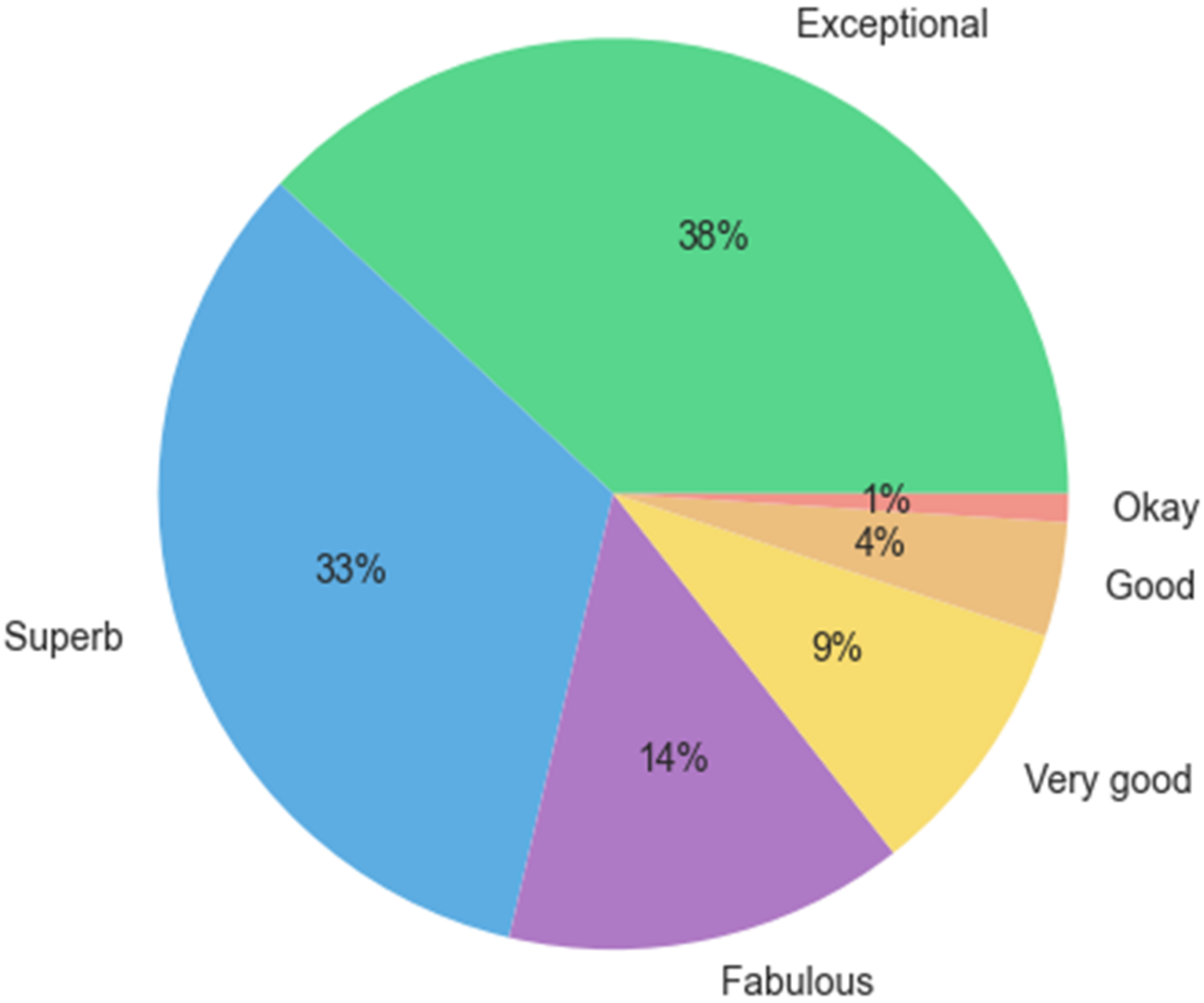

First, the general properties of the dataset are explored, including the proportion of accommodations in each category. The largest share of observations belongs to two categories, Exceptional and Superb. They are followed by the categories Fabulous and Very good, while the categories Good and Okay have the lowest number of accommodations (Figure 1).

The accommodations with the lowest ratings belong to the category Okay, with a minimum rating of 4.9 (the lowest rating in this dataset) and a maximum of 6.9. The next lowest category is Good, which includes accommodations with numerical ratings from 7.0 to 7.9, followed by Very good (8.0 to 8.5), Fabulous (8.6 to 8.9), Superb (9.0 to 9.4), and, lastly, Exceptional (9.5 to 10). An exploration of the average number of reviews and the average accommodation size shows that the middle categories (Very good, Fabulous, Superb) have, on average, more reviews than the categories at the low and high extremes (Okay, Good, and Exceptional), and that accommodation size correlates positively with the accommodation category (Table 2).
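The category ranges listed above can be expressed as a simple threshold lookup. The sketch below assumes that the observed rating ranges act as fixed category boundaries on ratings reported with one decimal place; the function name `rating_to_category` is hypothetical and not taken from the study.

```python
# Lower bound of each category, taken from the observed rating ranges
# (assumption: ratings are reported with one decimal place).
CATEGORY_THRESHOLDS = [
    (9.5, "Exceptional"),
    (9.0, "Superb"),
    (8.6, "Fabulous"),
    (8.0, "Very good"),
    (7.0, "Good"),
]


def rating_to_category(rating: float) -> str:
    """Map a numerical rating to its category (hypothetical helper)."""
    for lower_bound, category in CATEGORY_THRESHOLDS:
        if rating >= lower_bound:
            return category
    # Everything below 7.0 (down to 4.9 in this dataset) falls into "Okay".
    return "Okay"


for r in (4.9, 7.0, 8.5, 8.6, 9.4, 10.0):
    print(r, rating_to_category(r))
```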

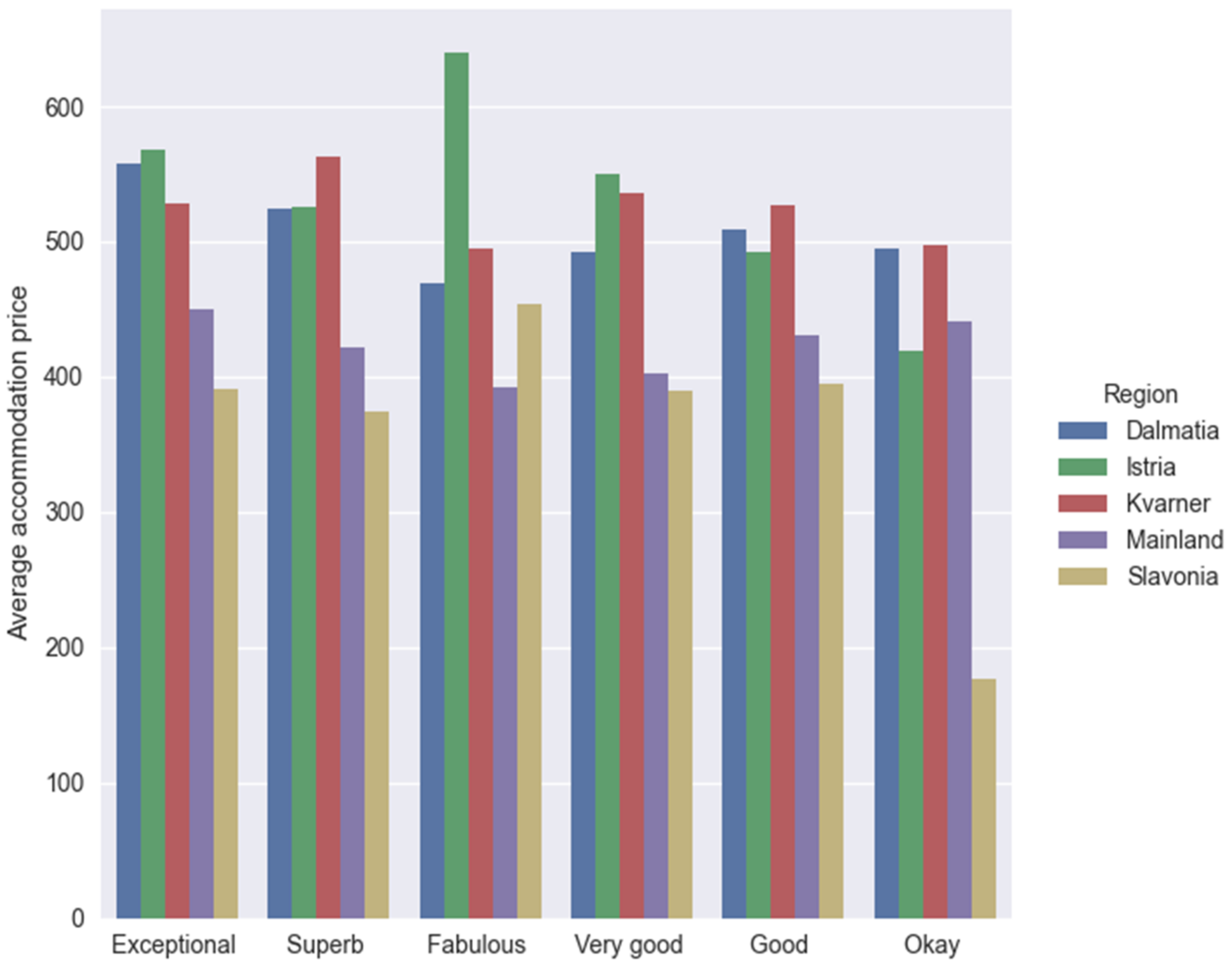

The analysis of average accommodation prices in relation to the region and category of the accommodation (Figure 2) reveals that the Mainland region, along with Kvarner and possibly Dalmatia, has the smallest price differences between accommodation categories. In the other regions, prices vary considerably between some of the categories. For example, in Istria, accommodations in the category Fabulous are on average more expensive (their average price is above HRK 600 per night, whilst all other categories have an average price well below HRK 600). Slavonia shows a related pattern: average prices are similar for all categories except Okay, which is the only category in the region with an average price below HRK 300 (in fact, below HRK 200).

Given the overall share of each category (Figure 1), the category ratio per region indicates what to expect. This analysis is important for verifying that the category ratio per region is not unbalanced (e.g., 90% of the category Exceptional belonging to one region, with the remainder dispersed across the other regions).

Table 3 shows that the distribution of accommodation categories in each region is approximately the same as the distribution in the overall dataset, with a slight exception in the region Slavonia, where more than 50% of accommodations are assigned to the category Exceptional.
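The per-region category ratio can be computed by counting (region, category) pairs and normalizing each region's counts. This is a minimal sketch with hypothetical toy records, not data from the study; comparing each region's shares with the overall shares reveals imbalances such as the one noted for Slavonia.

```python
from collections import Counter, defaultdict


def category_share_per_region(records):
    """Return {region: {category: share}} from (region, category) pairs."""
    counts = defaultdict(Counter)
    for region, category in records:
        counts[region][category] += 1
    shares = {}
    for region, counter in counts.items():
        total = sum(counter.values())
        shares[region] = {cat: n / total for cat, n in counter.items()}
    return shares


# Hypothetical toy records, not data from the study.
toy = [
    ("Slavonia", "Exceptional"), ("Slavonia", "Exceptional"), ("Slavonia", "Good"),
    ("Istria", "Superb"), ("Istria", "Exceptional"),
]
print(category_share_per_region(toy))
```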

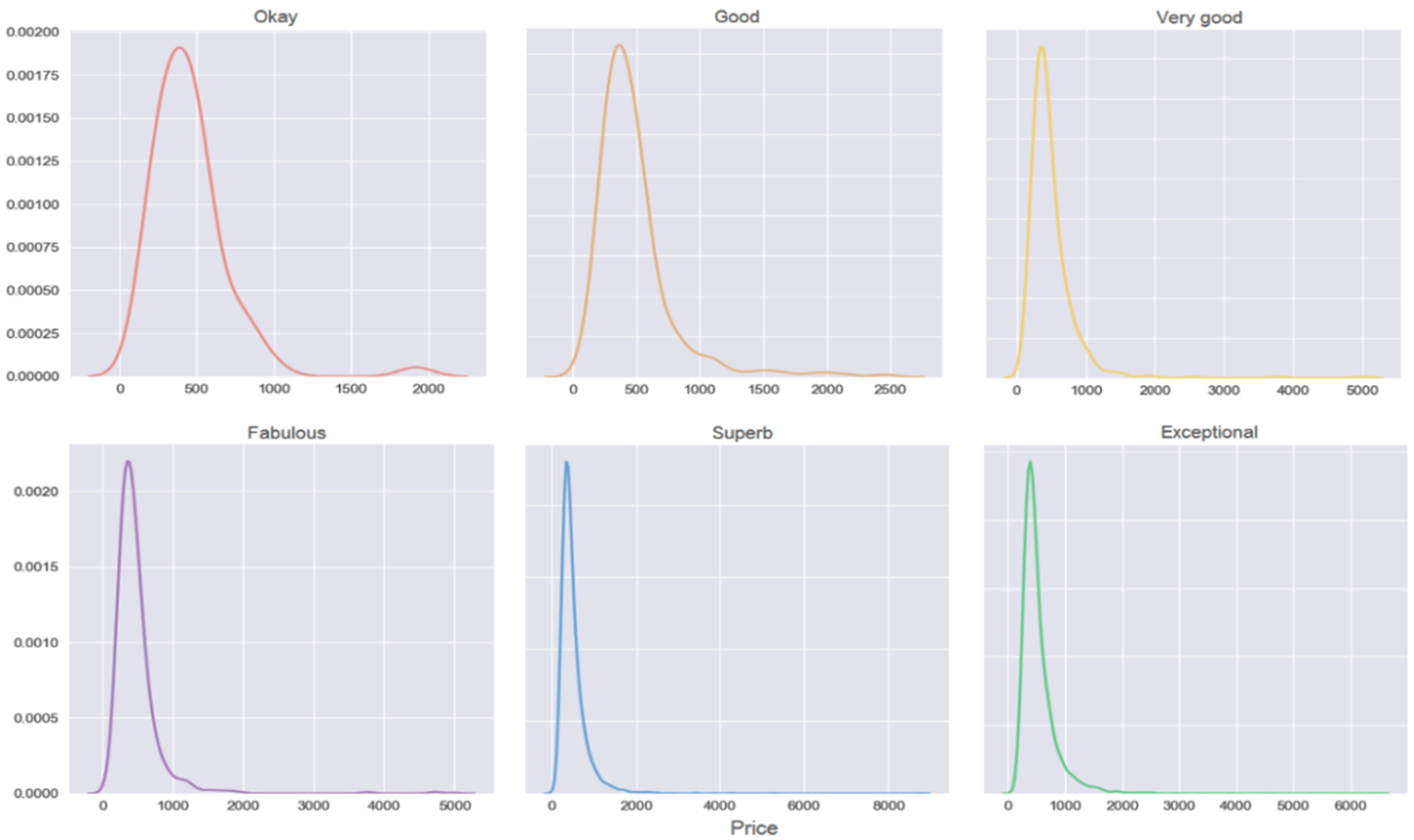

The price distribution per accommodation category is also explored. The distributions (Figure 3) show that all categories have approximately the same price distribution, which is right-skewed: the majority of accommodations in each category have an overnight price below HRK 1000, and only a minority have an overnight price of HRK 1000 or higher. Outliers (data points with an overnight price above HRK 1000) are examined further.

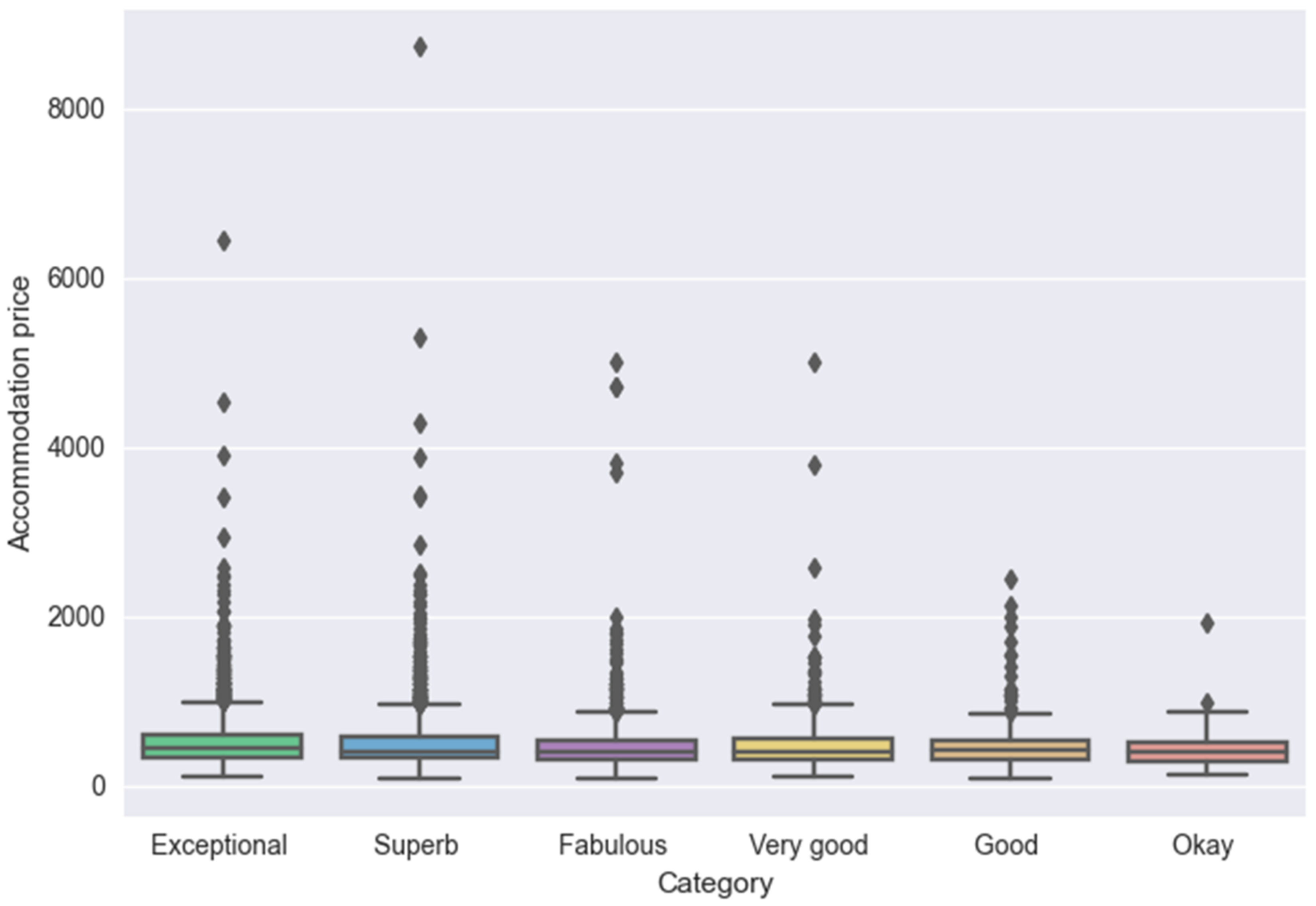

Figure 4 shows the interquartile price range for each accommodation category. The interquartile range (IQR) measures data dispersion: it is the range between the 25th percentile (first quartile, $Q_1$) and the 75th percentile (third quartile, $Q_3$), and the box spans this range. The lines (whiskers) cover the data points between the lower limit ($Q_1 - 1.5 \cdot IQR$) and the upper limit ($Q_3 + 1.5 \cdot IQR$). Data points outside the whiskers are outliers, i.e., observations that do not share the characteristics of the majority of the data in the category distribution (represented by the ‘tail’ of the distributions in Figure 3). In terms of outliers, the categories share similar characteristics, and the price distributions differ only slightly between categories, with the exception of the Okay category.
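The whisker limits can be reproduced with a few lines of code. This sketch assumes the common 1.5 × IQR convention (the default in plotting libraries such as matplotlib) and linear interpolation between order statistics for the quartiles; the prices are hypothetical.

```python
import statistics


def iqr_outliers(prices):
    """Return (lower, upper, outliers) using the 1.5 * IQR whisker rule."""
    # method="inclusive" interpolates linearly between order statistics.
    q1, _, q3 = statistics.quantiles(prices, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [p for p in prices if p < lower or p > upper]
    return lower, upper, outliers


# Hypothetical nightly prices (HRK) for one accommodation category.
prices = [300, 350, 400, 420, 450, 480, 500, 550, 600, 1800]
print(iqr_outliers(prices))
```

Here $Q_1 = 405$ and $Q_3 = 537.5$, so only the HRK 1800 stay falls outside the whiskers.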

Table 4 shows the percentage of accommodations with each property, by accommodation category. It highlights several interesting facts about accommodation properties with respect to the accommodation category:

Smoking, House pets and Parties have a higher approval rate in accommodations with considerably lower ratings (Okay, Good, Very good), although the difference in approval rate is more pronounced for Smoking and Parties.

Parking and Wi-Fi are widely available across all accommodation categories, although Wi-Fi availability increases with higher ratings.

The No house rules property relates to quiet hours; its percentage represents the accommodations that do not have such house rules. Accommodations with lower ratings often have no house rules, and the percentage progressively decreases as the rating increases. This means that accommodations with higher ratings are more likely to establish house rules that guests are obliged to respect.

5.2. One-Way Analysis of Variance (ANOVA) and Variable Correlation

ANOVA is a method for determining whether there are statistically significant differences between the means of three or more independent groups [20]. In this study, it is used to check for differences in mean review scores between regions. The test statistic is computed as follows:

$$F = \frac{MST}{MSE}$$

$$MST = \frac{1}{k-1}\left(\sum_{i=1}^{k} \frac{T_i^2}{n_i} - \frac{G^2}{n}\right), \qquad MSE = \frac{1}{n-k}\left(\sum_{i=1}^{k}\sum_{j=1}^{n_i} Y_{ij}^2 - \sum_{i=1}^{k} \frac{T_i^2}{n_i}\right)$$

where $F$ is the F statistic (a ratio of two variance estimates), the Mean Square of Treatments ($MST$) is the mean square between groups, and $MSE$ is the mean square within groups (residual mean square). $T_i$ represents the total of group $i$, $n_i$ the size of group $i$, $k$ the number of groups, $G$ the grand total of all observations, $n$ the total number of observations, and $Y_{ij}$ stands for the $j$-th observation in group $i$. To summarize, this analysis measures two sources of data variance and compares their relative sizes: the variance between groups (the difference between the mean of a specific group and the overall mean of all groups) and the variance within groups (the difference between a single value inside a group and the mean of that group). The following two hypotheses are examined:

H0 (null hypothesis): the difference in means is not statistically significant.

H1 (alternative hypothesis): the difference in means is statistically significant.

F measure: the ratio of the variance between groups to the variance within groups. A high F value provides evidence against the null hypothesis H0, because it indicates that the differences between groups are greater than the differences within groups. As is evident from Table 5, F equals 14.07, which is considered high, so the p value is checked to confirm that the null hypothesis can be rejected.

p value (probability value): a small p value, together with a high F value, provides sufficient evidence for rejecting the null hypothesis, i.e., the differences between group means are statistically significant. Since the p value equals 1.99·, which is low (any p value below 0.05 indicates statistical significance), the result is statistically significant.
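A minimal sketch of the F computation, assuming the standard computational formulas for one-way ANOVA, MST = (Σ T_i²/n_i − G²/n)/(k − 1) and MSE = (Σ Y_ij² − Σ T_i²/n_i)/(n − k), with k the number of groups. The function name and the toy scores are hypothetical. Obtaining the p value additionally requires the F distribution with k − 1 and n − k degrees of freedom, which a library such as SciPy provides (e.g., `scipy.stats.f_oneway`); it is not computed here.

```python
def one_way_anova_f(groups):
    """Compute (F, MST, MSE) for a one-way ANOVA from lists of observations."""
    k = len(groups)                                # number of groups
    n = sum(len(g) for g in groups)                # total number of observations
    totals = [sum(g) for g in groups]              # group totals T_i
    grand_total = sum(totals)                      # grand total G
    # Sum of T_i^2 / n_i, shared by both mean squares.
    between = sum(t * t / len(g) for t, g in zip(totals, groups))
    sum_sq = sum(y * y for g in groups for y in g)  # sum of Y_ij^2
    mst = (between - grand_total ** 2 / n) / (k - 1)
    mse = (sum_sq - between) / (n - k)
    return mst / mse, mst, mse


# Hypothetical review scores for three regions.
f, mst, mse = one_way_anova_f([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(f, mst, mse)
```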

One of the disadvantages of the ANOVA test is that it does not indicate which groups differ significantly. To overcome this issue, the Tukey HSD post hoc test can be applied; it tests the difference between every pair of groups used in the ANOVA test.

Table 6 shows the results of the Tukey HSD test. Each row shows the compared groups, the difference between the group means, the p value, and whether the null hypothesis should be rejected. A statistically significant difference between group means exists for the following group pairs:

Mainland and Dalmatia (p value of 0.001)

Mainland and Istria (p value of 0.001)

Mainland and Kvarner (p value of 0.001)

Dalmatia and Slavonia (p value of 0.001)

Istria and Slavonia (p value of 0.001)

Kvarner and Slavonia (p value of 0.001)

A closer look at a specific group such as Mainland reveals that it is statistically different from all regions except Slavonia, its neighboring region. Likewise, Dalmatia and Slavonia, which are geographically far apart, also prove to be statistically different. More generally, all regions that prove to be significantly different are located far apart from one another, whilst the regions that are not significantly different (e.g., Istria and Kvarner, Dalmatia and Kvarner) are geographical neighbors.

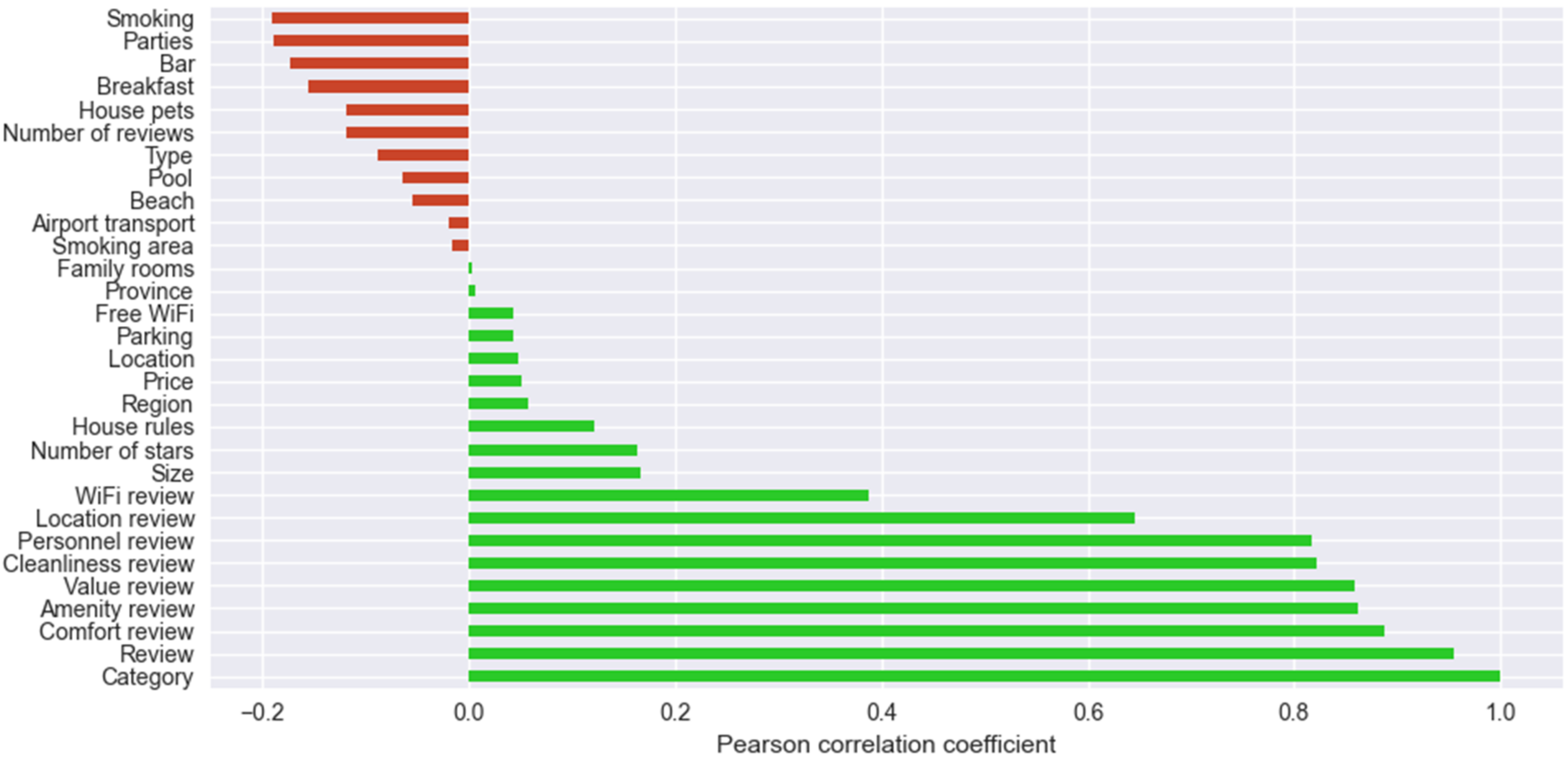

In order to define the subset of parameters (accommodation factors) to be used in model training, a correlation analysis is conducted on all accommodation factors, and their relationship with the accommodation category is explored (Figure 5). As expected, the highest correlation with the accommodation category belongs to the guest review, since the accommodation category is based on the review score. The review factor is therefore discarded from the dataset. Furthermore, high correlations (above 0.6, indicating a strong linear relationship) are detected for certain accommodation properties (personnel, hotel facilities, cleanliness, comfort, value, location, and internet), the highest being for comfort and the lowest for location.

Additionally, given these high correlation coefficients, a check is performed to determine whether the overall accommodation review is simply the mean of the more specific reviews. Since the two do not match, i.e., the overall review differs from the mean of the property reviews, it can be concluded that the accommodation category is not derived directly from the reviews of individual accommodation properties. The correlations nevertheless imply that higher or lower reviews for certain properties, such as accommodation comfort, have some influence on the overall accommodation category, and that, e.g., the comfort or cleanliness of the accommodation has a greater influence on the final category than its location or Wi-Fi availability.
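The check described above amounts to comparing each overall review with the mean of its property reviews. The sketch below is hypothetical: the tolerance `tol` (to absorb rounding of one-decimal scores) and the example scores are assumptions, not values from the study.

```python
from statistics import mean


def matches_subscore_mean(overall, subscores, tol=0.05):
    """True if the overall review equals the mean of its sub-scores within tol."""
    return abs(overall - mean(subscores)) <= tol


# Hypothetical accommodation: overall review 9.1 and seven property reviews
# (comfort, value, facilities, cleanliness, personnel, location, internet).
subscores = [9.4, 9.0, 9.3, 9.5, 8.9, 9.6, 9.2]
print(round(mean(subscores), 3), matches_subscore_mean(9.1, subscores))
```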

5.3. Guest Review Analysis

In addition to the accommodation factors, guest reviews in Croatian and English are scraped. Because the methods and tools available differ between the two languages, the data are analyzed separately for each language. The reviews for each accommodation unit are listed separately and can be reached by following the corresponding hyperlinks. Since the original dataset is imbalanced, reviews of the same number of accommodations are taken into account for each class, based on the category with the smallest number of accommodations. The aim of this filtering is to reduce the original imbalance: all categories end up with 68 review sets, which is the number of accommodations in the category Okay. The number of individual reviews fetched within each review set varies and depends solely on the accommodation. After scraping, two separate datasets of English and Croatian reviews are obtained, each containing the attributes numerical grade, title, and text.
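Equalizing the number of accommodations per category can be sketched as undersampling every category to the size of the smallest one (68 accommodations in the study). Random selection with a fixed seed is an assumption; the text does not state how the accommodations were chosen, and the function name and toy items are hypothetical.

```python
import random
from collections import defaultdict


def balance_by_undersampling(items, seed=0):
    """Keep the same number of items per category (the smallest class size).

    `items` is a list of (category, payload) pairs.
    """
    by_category = defaultdict(list)
    for category, payload in items:
        by_category[category].append(payload)
    target = min(len(v) for v in by_category.values())  # 68 in the study
    rng = random.Random(seed)
    return {cat: rng.sample(v, target) for cat, v in by_category.items()}


# Hypothetical accommodations: 5 in "Okay", 12 in "Superb".
items = [("Okay", f"okay-{i}") for i in range(5)] + [("Superb", f"sup-{i}") for i in range(12)]
balanced = balance_by_undersampling(items)
print({cat: len(v) for cat, v in balanced.items()})
```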

To extract important information, the data are cleaned prior to the review analysis. Lowercasing and stop word removal (e.g., “the”, “a”, “is” or “and”) are performed first. Next, Part of Speech (POS) tagging is conducted and all nouns are extracted. Finally, lemmatization is performed in order to remove inflectional endings and return the base forms of the nouns (lemmas) [21]. The description of the dataset is given in Table 7. The English and Croatian review datasets have 10,361 and 3857 observations, respectively.

5.3.1. Word Frequency Analysis

Unigram and bigram analysis is conducted in order to identify the words most frequently used in reviews with respect to the accommodation category [22].
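A minimal sketch of bigram counting, assuming a simple regex tokenizer and a tiny hypothetical stop-word list. POS tagging and lemmatization are omitted, and the character class covers English text only, so this only approximates the pipeline described in the previous subsection. Joining the two words with a hyphen mirrors the notation used in Tables 8 and 9 (e.g., “value-money”).

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (hypothetical; a real pipeline uses a full list).
STOP_WORDS = {"the", "a", "is", "and", "to", "for", "of", "was", "very", "in"}


def top_bigrams(reviews, k=10):
    """Count adjacent word pairs after lowercasing and stop-word removal."""
    counts = Counter()
    for text in reviews:
        tokens = [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOP_WORDS]
        counts.update(f"{a}-{b}" for a, b in zip(tokens, tokens[1:]))
    return counts.most_common(k)


reviews = [
    "Great value for money and close to the city center.",
    "The city center is a short walk, value for money!",
]
print(top_bigrams(reviews, k=3))
```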

Table 8 shows the results of the bigram word frequency analysis for English guest reviews (the top ten bigrams per category, in descending order of frequency). Approximately the same bigrams appear across all accommodation categories (“city-center”, “value-money”, “location-hotel”, etc.), but in a rather different order. For example, unlike in the other categories, the bigram “value-money” does not appear among the most frequent bigrams for the category Okay. Additionally, the bigram “staff-stay” (accommodation personnel) is among the most frequent bigrams in all categories, although its rank differs.

Table 9 presents the results of the bigram word frequency analysis for Croatian guest reviews. As in the English reviews, approximately the same bigrams appear across accommodation categories, though in a different order (e.g., in the category Okay, guests mostly refer to the accommodation location and personnel, while in categories with higher ratings, such as Superb and Exceptional, guests mostly refer to the proximity of the accommodation to the city center, value for money, and peace and quiet inside the accommodation).

Accommodation location appears in all categories; in some categories it is the most frequently mentioned accommodation property, while in others it appears only after personnel, parking, and peace and quiet. This indicates that comfort-related aspects are of the highest importance in certain categories. Further evidence that comfort has a strong influence on the accommodation category can be found in Figure 5, which shows that, of all guest review scores, the comfort review is the most strongly correlated with the accommodation category.

With respect to the accommodation category, the most frequent words in guest reviews do not differ much (Table 10). Similar words are used throughout the reviews (such as personnel, location, view, city, etc.), with a few exceptions (e.g., kindness in the category Exceptional and pool in the category Fabulous in English reviews) and a slightly different ordering. For instance, according to the guest reviews, the most important aspects of accommodations in the category Okay are location, room, view, and breakfast, while words related to the kindness of the host and rest in the accommodation start to appear among the top ten most frequent words, alongside accommodation location and personnel, in the categories with higher ratings (Fabulous, Superb and Exceptional).

5.3.2. Jaccard Index

The Jaccard index is a token-based (word-based) measure of similarity between texts. It is calculated as the number of words common to both texts divided by the total number of unique words in both texts [23]:

$$J(A, B) = \frac{|A \cap B|}{|A \cup B|}$$

where $A$ and $B$ represent the word sets of two different texts, $|A \cap B|$ is the number of words in the intersection of the sets (common words), and $|A \cup B|$ is the number of unique words in the union of the two sets. The value of the Jaccard index ranges from 0 to 1; the closer the value is to 1, the more similar the texts are.
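The index |A ∩ B| / |A ∪ B| maps directly onto Python set operations. In this sketch each text is reduced to its set of lowercased, whitespace-separated words; for comparing categories, one would presumably pool the words of all reviews in a category into one set, which is an assumption since the text does not specify this step. Returning 1.0 for two empty texts is likewise a chosen convention.

```python
def jaccard(text_a: str, text_b: str) -> float:
    """Jaccard index of the word sets of two texts: |A & B| / |A | B|."""
    a, b = set(text_a.lower().split()), set(text_b.lower().split())
    if not a and not b:
        return 1.0  # convention for two empty texts (assumption)
    return len(a & b) / len(a | b)


print(jaccard("clean room great location", "great location friendly staff"))
```

The two example texts share 2 of 6 unique words, giving an index of 1/3.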

The Jaccard index for each pair of accommodation categories for English reviews is given in Table 11. The greatest similarity between guest reviews is found among the categories with lower ratings (Okay, Good, and Very good) and Fabulous, with the lowest index among them being 0.89, which is very high. The categories Superb and Exceptional differ the most from the other four categories. In particular, the content of the Exceptional category differs greatly from the first four categories (Jaccard index of approximately 0.2). The category Superb is more similar to the other four categories, although its Jaccard index of approximately 0.5 is still low.

The Jaccard index for each pair of accommodation categories for Croatian reviews is given in Table 12, which differs greatly from Table 11. Guest reviews in the category Fabulous have the smallest similarity to the other categories, while the category Okay has the largest similarity to the other accommodation categories (followed by the category Superb).

5.4. Naïve Bayes Classification Based on Guest Reviews

The Multinomial Naïve Bayes algorithm is trained separately for English and Croatian guest reviews. Since verbs and adjectives proved to have high predictive potential, they are used along with nouns for predicting accommodation categories.

Prior to training, the bag-of-words (BOW) approach is applied in order to extract text features [8], and the dataset is split into a training set comprising 70% of the dataset (7252 observations and 8981 variables for the English dataset, and 2699 observations and 2000 variables for the Croatian dataset, where a variable/feature is a word extracted using the BOW vectorizer) and a test set comprising the remaining 30% (3109 observations for the English dataset and 1158 for the Croatian dataset).
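To illustrate how a multinomial Naïve Bayes classifier scores bag-of-words counts, the sketch below implements the model from scratch with additive smoothing controlled by `alpha` (the hyperparameter tuned later in this section). It is a simplified, hypothetical implementation, not the code used in the study; the class name and the toy reviews are invented. Scikit-learn's `MultinomialNB`, whose `alpha` defaults to 1.0, applies the same smoothing principle.

```python
import math
from collections import Counter, defaultdict


class TinyMultinomialNB:
    """Minimal multinomial Naive Bayes over bag-of-words counts (hypothetical)."""

    def __init__(self, alpha=1.0):
        self.alpha = alpha  # additive (Laplace/Lidstone) smoothing

    def fit(self, docs, labels):
        self.vocab = {w for d in docs for w in d.split()}
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(doc.split())
        return self

    def _log_posterior(self, doc, label):
        counts = self.word_counts[label]
        total = sum(counts.values()) + self.alpha * len(self.vocab)
        # Log prior of the class, then log likelihood of each known word.
        score = math.log(self.class_counts[label] / sum(self.class_counts.values()))
        for w in doc.split():
            if w in self.vocab:  # words outside the vocabulary are ignored
                score += math.log((counts[w] + self.alpha) / total)
        return score

    def predict(self, doc):
        return max(self.class_counts, key=lambda c: self._log_posterior(doc, c))


# Hypothetical training reviews (already lowercased and cleaned).
docs = ["dirty noisy room", "noisy street old room",
        "friendly staff great view", "great breakfast friendly host"]
labels = ["Okay", "Okay", "Exceptional", "Exceptional"]
model = TinyMultinomialNB(alpha=1.0).fit(docs, labels)
print(model.predict("friendly staff"), model.predict("noisy room"))
```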

Furthermore, the SMOTE procedure is applied to balance the observation ratios between categories. Since this oversampling method is applied to the training sets, they increase in size: the English training set has a total of 26,412 observations, and the Croatian training set has a total of 10,242 observations. Each category takes an equal share of the training set (16.667%), so there are 4402 observations per category in the English dataset and 1707 in the Croatian dataset. The algorithms are therefore trained on the oversampled, balanced training sets and tested on the imbalanced test sets.
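SMOTE creates synthetic minority samples by interpolating between a sample and one of its nearest neighbours from the same class. The sketch below is a simplified, stdlib-only illustration (Euclidean distance, k = 3 neighbours, hypothetical 2-D data), not the implementation used in the study; as described above, it would be applied to the training set only.

```python
import random


def smote(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic samples for one class, SMOTE-style.

    Each synthetic sample lies on the line segment between a randomly chosen
    sample and one of its k nearest neighbours from the same class.
    """
    rng = random.Random(seed)

    def dist2(p, q):
        # Squared Euclidean distance (sufficient for ranking neighbours).
        return sum((a - b) ** 2 for a, b in zip(p, q))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        x = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda p: dist2(x, p))[:k]
        nn = rng.choice(neighbours)
        gap = rng.random()  # uniform in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(x, nn)))
    return synthetic


# Hypothetical 2-D feature vectors of a minority class (4 samples).
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote(minority, n_new=2)
print(len(minority) + len(new_points), new_points)
```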

Table 13 and Table 14 show the results of the Multinomial Naïve Bayes models based on English and Croatian guest reviews. The tables report the following:

Model precision: the ratio of true positive cases (correctly predicted cases) to the overall number of cases predicted as a specific category (both true and false positives). The models achieve the greatest precision in the category Exceptional: approximately 80% for English and 90% for Croatian reviews, meaning that about 20% and 10%, respectively, of the reviews predicted as Exceptional are false positives.

Model recall: the ratio of correctly predicted cases of a specific category to the total number of cases of that category. The models have a recall of about 90% for the category Exceptional, meaning that they correctly identify about 90% of the Exceptional cases.

F1 score: the harmonic mean of precision and recall, calculated as follows:

$$F_1 = 2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$$

Support: the number of observations of a specific category in the test set. These numbers are imbalanced because the SMOTE procedure is applied only to the training set, ensuring that the model is trained on the same number of observations per class (in order to learn patterns for all classes).
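The four reported quantities can be computed per category from the actual and predicted labels. This is a minimal sketch with hypothetical labels; it returns 0.0 when a denominator is zero, which is one common convention.

```python
def per_class_metrics(y_true, y_pred, label):
    """Precision, recall, F1 and support for one category."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    predicted = sum(p == label for p in y_pred)  # true + false positives
    support = sum(t == label for t in y_true)    # actual cases of the category
    precision = tp / predicted if predicted else 0.0
    recall = tp / support if support else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1, support


y_true = ["Exceptional", "Exceptional", "Superb", "Superb", "Exceptional"]
y_pred = ["Exceptional", "Superb", "Exceptional", "Superb", "Exceptional"]
print(per_class_metrics(y_true, y_pred, "Exceptional"))
```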

The overall accuracy of the English model (based on all observations in the test set, irrespective of the accommodation category) equals 0.7125 (approximately 71%). Hyperparameter optimization is performed by varying one parameter (alpha) while keeping all other parameters fixed. The default value turns out to be optimal (Table 15).

Table 16 presents the confusion matrix of the Multinomial Naïve Bayes model for English reviews. This type of visualization shows correct and incorrect classifications: rows represent actual categories, columns represent the categories predicted by the model, and each cell gives the percentage of a row's observations assigned to the predicted category. For example, the model incorrectly predicted 1% of the category Superb as Okay, 6% as Good, 3% as Very good, 3% as Fabulous, and 35% as Exceptional, so it correctly predicted 52% of the observations in the Superb category. The diagonal represents correct predictions per category. The results indicate that the model predicts Exceptional with the highest accuracy (85%) and Fabulous with the lowest (33%).
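A row-normalized confusion matrix of this kind divides each cell by the number of actual observations in its row, so every non-empty row sums to 100%. The sketch below uses hypothetical labels for three categories.

```python
from collections import Counter


def row_normalized_confusion(y_true, y_pred, labels):
    """Percentage of each actual category (row) assigned to each predicted category."""
    pairs = Counter(zip(y_true, y_pred))
    matrix = {}
    for actual in labels:
        row_total = sum(pairs[(actual, pred)] for pred in labels)
        matrix[actual] = {
            pred: 100 * pairs[(actual, pred)] / row_total if row_total else 0.0
            for pred in labels
        }
    return matrix


labels = ["Okay", "Superb", "Exceptional"]
y_true = ["Superb"] * 4 + ["Exceptional"] * 2
y_pred = ["Superb", "Superb", "Exceptional", "Okay", "Exceptional", "Exceptional"]
m = row_normalized_confusion(y_true, y_pred, labels)
print(m["Superb"])
```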

Similarly, the algorithm is trained and tested on Croatian guest reviews in order to compare the accuracy on the two datasets. Hyperparameter optimization slightly improved the initial model accuracy, which ended up at 79% (Table 17).

Table 18 presents the confusion matrix of the Multinomial Naïve Bayes model for Croatian reviews. In line with the overall accuracy results, the accuracy is higher in several categories compared with the English guest reviews: by 19 percentage points for Okay, 43 for Fabulous, 16 for Superb, and 5 for Exceptional. In the remaining two categories (Good and Very good), the accuracy is 7 and 19 percentage points lower, respectively.

5.5. Classification Based on Accommodation Factors

All accommodation factors with an absolute correlation coefficient above 0.15 are selected for model training and preprocessed accordingly (SMOTE requires textual values to be encoded numerically). Before applying SMOTE, the dataset consists of 6133 observations and 14 variables, of which 4293 observations are used for training and 1840 for testing (the same splitting procedure is applied as for the guest review datasets).
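Selecting factors by the absolute value of their correlation with the category can be sketched as follows. The sketch assumes Pearson correlation and an ordinal numeric encoding of the category (0 = Okay, ..., 5 = Exceptional); both are assumptions, as is the toy data.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equally long sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant variable carries no linear signal
    return cov / (sx * sy)


def select_features(features, target, threshold=0.15):
    """Keep features whose |r| with the encoded target exceeds the threshold."""
    return [name for name, values in features.items()
            if abs(pearson(values, target)) > threshold]


# Hypothetical data: target is the ordinally encoded category.
target = [0, 1, 2, 3]
features = {"comfort": [6.5, 7.4, 8.3, 9.6], "allows_pets": [1, 0, 0, 1]}
print(select_features(features, target))
```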

The final dataset (after applying SMOTE) used for model training consists of 9654 accommodations (1609 per category) and the following 14 accommodation factors: guest reviews for comfort, accommodation value, accommodation facilities, cleanliness, personnel, location, and internet; accommodation size; number of stars; and variables based on accommodation properties (whether the accommodation provides breakfast, whether it has a bar, whether it has rooms for smokers, and whether it allows parties) (Table 19).

The initial Decision Tree (DT) model consists of 655 nodes and 328 leaves, with an accuracy of 79%. After excluding variables with low feature importance and tuning the hyperparameters (the maximum tree depth is changed from None to 10) (Table 20), the model accuracy equals 82%. The results per category are presented in Table 21. The confusion matrix (Table 22) reveals that the model tends to misclassify the categories with lower ratings, Okay and Good, into their neighboring categories (35% of the category Okay is misclassified as Good, and 22% of the category Good is misclassified as Very good). Categories with higher ratings are predicted with an accuracy above 75%.

In the Random Forest (RF) model, several parameters are changed: the number of estimators (decision trees) from 100 to 150; the criterion (the function measuring the quality of a split) from Gini to entropy; the maximum tree depth from None to 20; the maximum features parameter (the number of predictors considered when splitting) from auto to 3; and the OOB score parameter (whether out-of-bag samples are used to estimate the generalization score) from False to True (Table 20). The accuracy after parameter tuning equals 87.5%. The results per category are presented in Table 23.

According to the confusion matrix (Table 24), the RF model has a higher accuracy than the DT model in every category except Fabulous, for which its accuracy is 1% lower. Like the DT model, the RF model tends to misclassify categories with lower ratings into their neighboring categories (misclassification rates ranging from 13 to 30%), but its overall precision and recall (Table 23) are much higher than those of the DT model (Table 21).

The initial Support Vector Machine (SVM) model has an accuracy of 73%. Parameter optimization results in changing the values of two parameters: the kernel parameter (the type of kernel used in the algorithm) is changed from rbf (Radial Basis Function) to linear, since the rbf kernel is more appropriate when the data are not linearly separable, and the break ties parameter is changed from false to true (if true, ties in predictions are broken according to the confidence values of the decision function) (Table 20). After parameter optimization, the accuracy equals 90%. The results are given in Table 25. The confusion matrix (Table 26) shows that the SVM model has the best overall accuracy, correctly predicting approximately 80% or more of the cases in each category, except Okay, which is correctly classified in 70% of cases. The RF model performs better on accommodations with considerably lower ratings (Okay and Good), while the SVM model performs best on accommodations with considerably higher ratings (Superb and Exceptional).

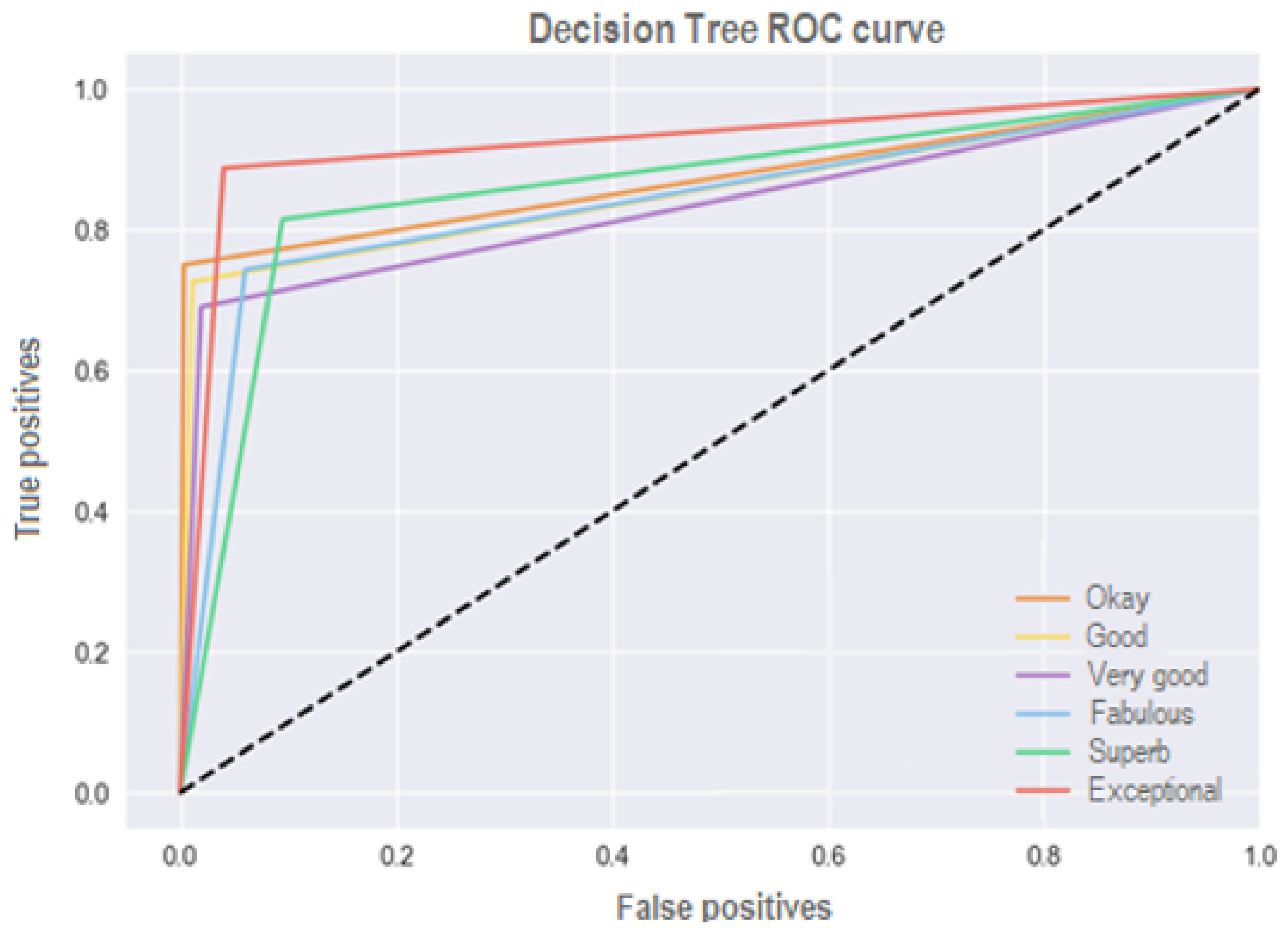

The Receiver Operating Characteristic (ROC) curve is used to visualize the true positive rate against the false positive rate [10]. The closer a category's curve lies to the upper left corner, the more accurate the test. Accuracy in this case is commonly summarized by the area under the curve (AUC): the larger the area under the curve, the more accurate the model is in predicting the specific category. A curve that follows the diagonal line means that the model performs no better than random guessing, like a coin toss.
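One way to compute a category's AUC, assuming a one-vs-rest setup in which the model outputs a score (e.g., a predicted probability) for each category, is the rank formulation: the AUC equals the probability that a randomly chosen positive observation receives a higher score than a randomly chosen negative one (the normalized Mann-Whitney U statistic). The scores below are hypothetical.

```python
def auc_one_vs_rest(scores, is_positive):
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win; the result equals the area under the ROC curve.
    """
    pos = [s for s, p in zip(scores, is_positive) if p]
    neg = [s for s, p in zip(scores, is_positive) if not p]
    wins = sum(1.0 if sp > sn else 0.5 if sp == sn else 0.0
               for sp in pos for sn in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predicted probabilities of "Exceptional" for four accommodations.
print(auc_one_vs_rest([0.1, 0.4, 0.35, 0.8], [False, False, True, True]))
```

An AUC of 0.5 corresponds to the diagonal (coin-toss) line, and 1.0 to perfect separation.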

The DT model has the largest AUC for the category Exceptional (0.92), followed by Okay (0.87), Good and Superb (0.86) and, lastly, Very good and Fabulous (0.84) (Figure 6).

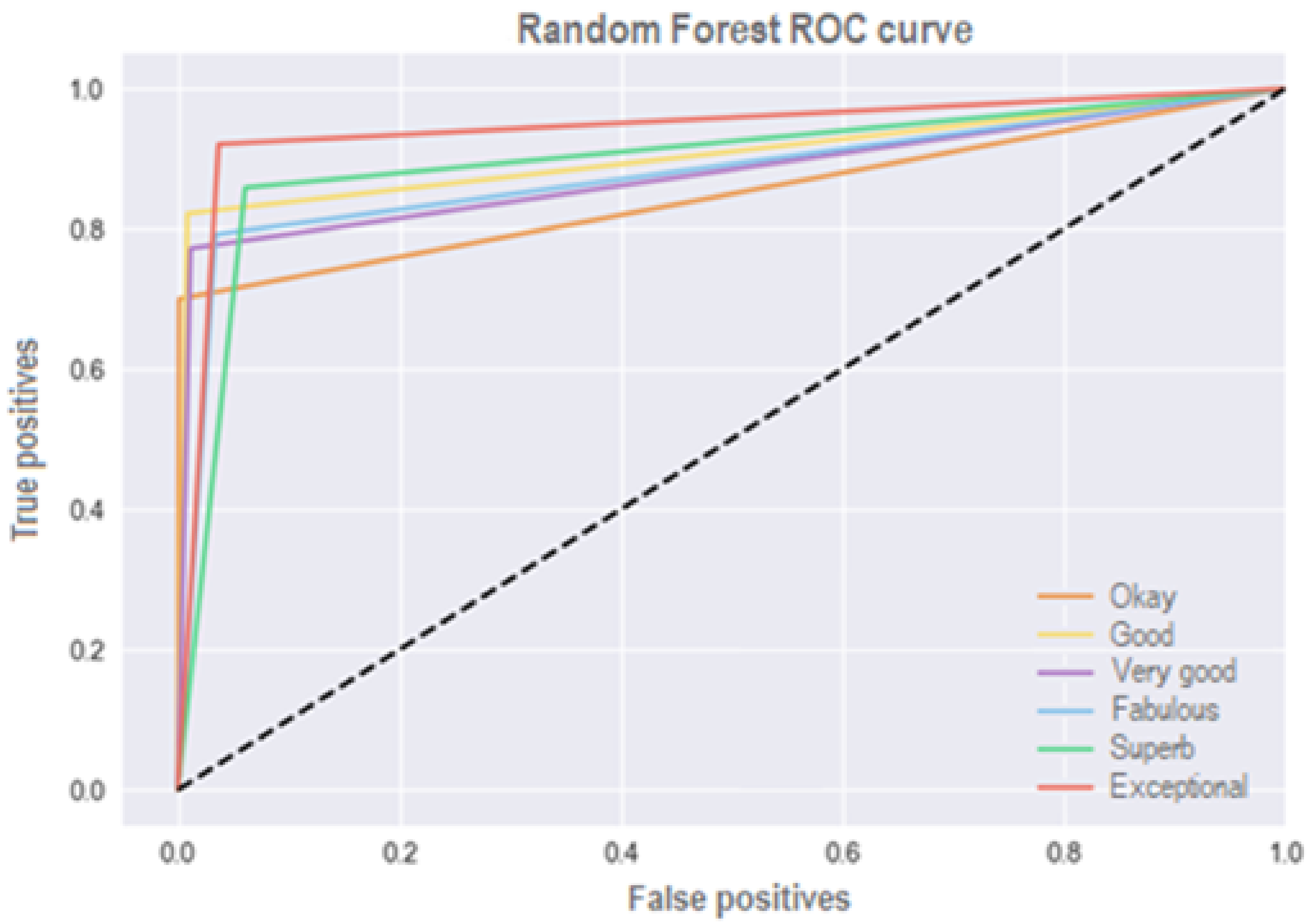

The RF model has the largest AUC for the category Exceptional (0.94), followed by Good (0.91), Superb (0.90), Very good and Fabulous (0.88) and, lastly, Okay with the lowest AUC (0.85) (Figure 7).

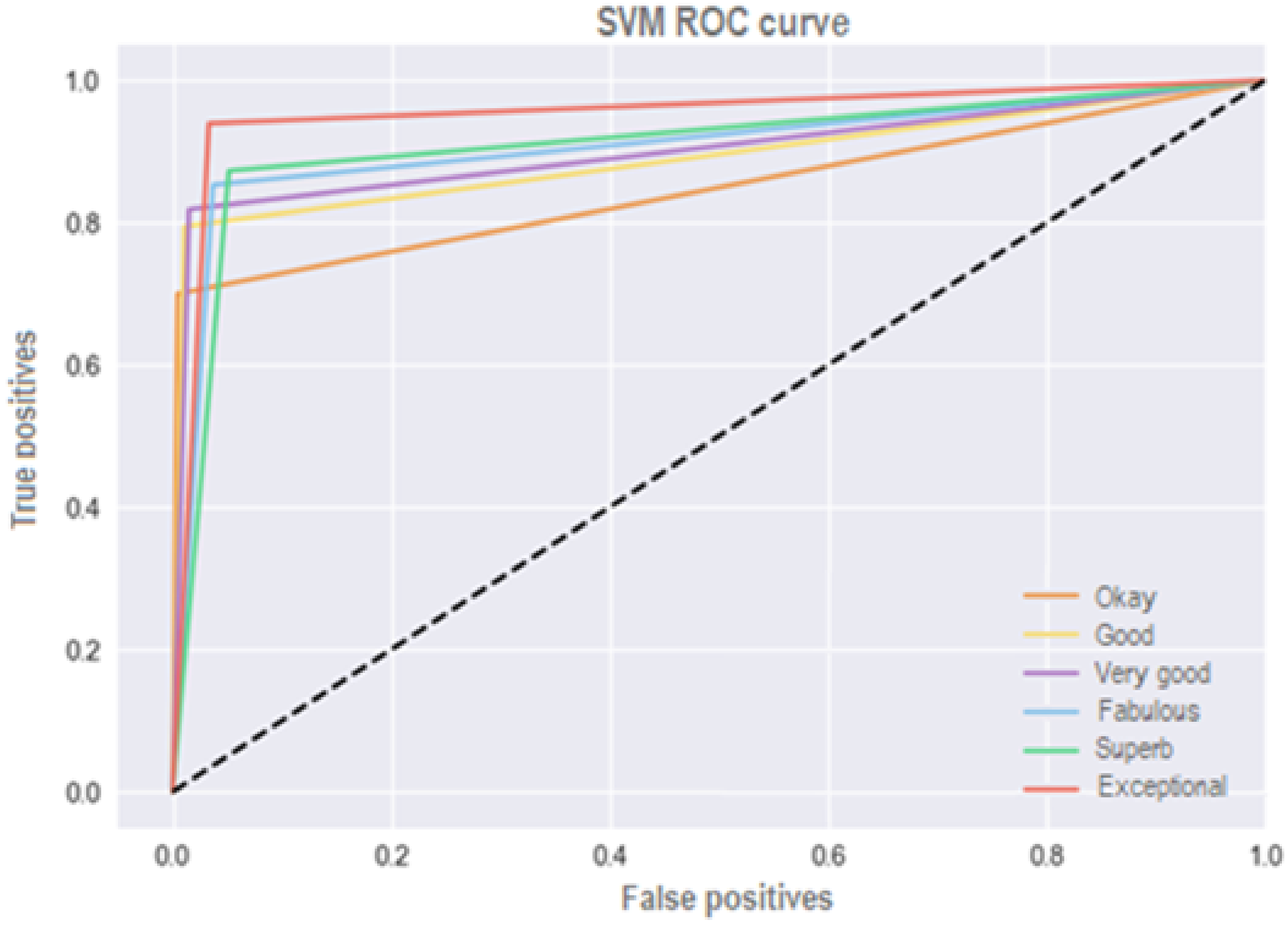

The ROC curve for the SVM model (Figure 8) somewhat resembles that of the RF model. Both models have the largest AUC for categories with higher ratings, while, of the three models, the DT model has the largest AUC for the category Okay. The final ranking is obtained by averaging the AUC scores over all categories for each model, i.e., summing a model's AUC scores and dividing the sum by the number of categories (Table 27).
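The averaging step can be illustrated with the rounded per-category AUCs reported above for the DT and RF models (Figures 6 and 7). Because these inputs are rounded to two decimals, the resulting averages may differ slightly from the values in Table 27; the SVM model is omitted because its per-category AUCs are not listed in the text.

```python
# Per-category AUCs of the DT and RF models as reported for Figures 6 and 7
# (rounded to two decimals, so averages may differ slightly from Table 27).
auc = {
    "DT": {"Okay": 0.87, "Good": 0.86, "Very good": 0.84,
           "Fabulous": 0.84, "Superb": 0.86, "Exceptional": 0.92},
    "RF": {"Okay": 0.85, "Good": 0.91, "Very good": 0.88,
           "Fabulous": 0.88, "Superb": 0.90, "Exceptional": 0.94},
}

# Average AUC = sum of the per-category AUCs divided by the number of categories.
average_auc = {model: sum(v.values()) / len(v) for model, v in auc.items()}
print({model: round(a, 3) for model, a in average_auc.items()})
```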

The RF model has higher precision and recall (and F1 score) in the categories with lower ratings (Okay, Good and Very good), while the SVM model outperforms the DT and RF models in the categories with higher ratings (Table 28). The DT model outperforms the other two models in terms of AUC for the category Okay, although not by much (a difference of about 0.02).