Class-GE2E: Speaker Verification Using Self-Attention and Transfer Learning with Loss Combination

Abstract

:1. Introduction

2. Related Works

2.1. Single-Head Attention (SHA) Layer

2.2. Multi-Head Attention (MHA) Layer

2.3. Generalized End-to-End Loss

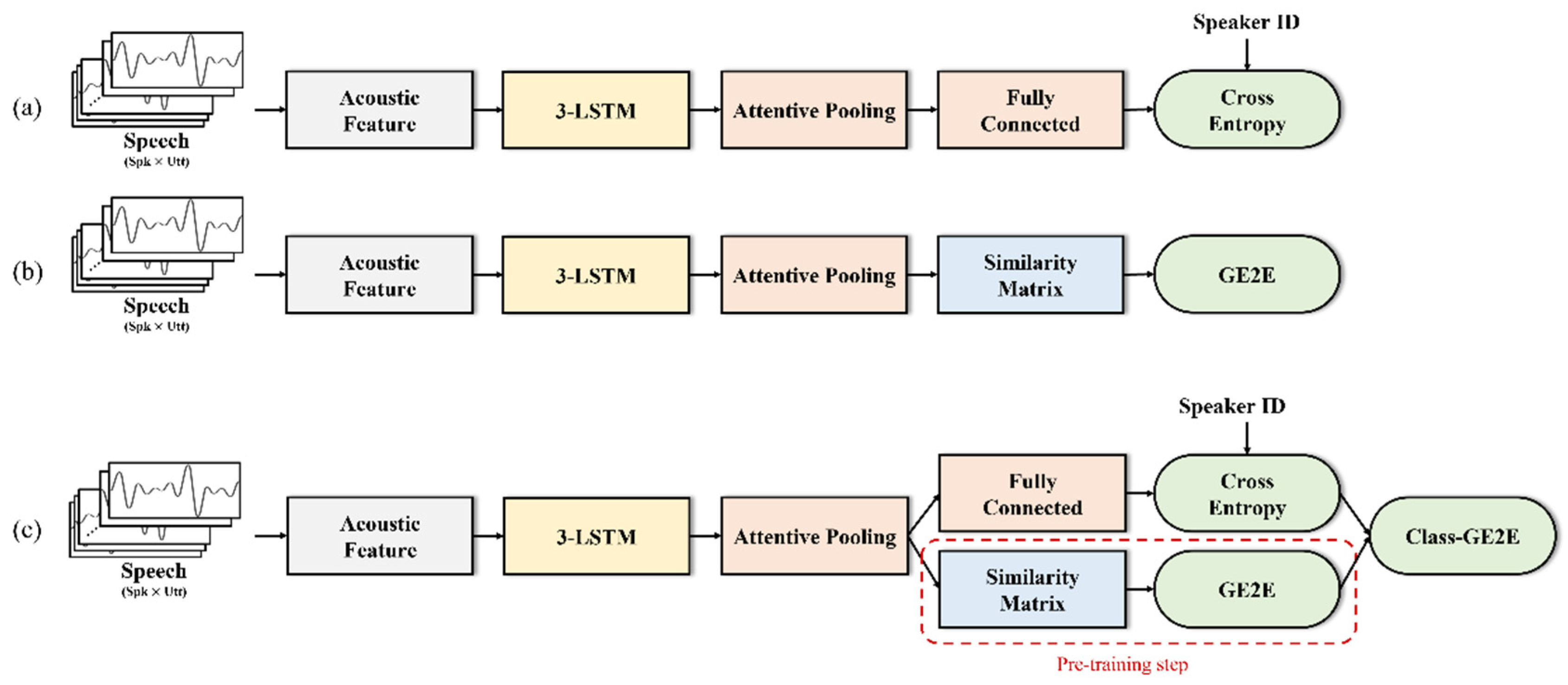

3. Proposed Methods

3.1. Sorted Multi-Head Attention (SMHA) Layer

3.2. Transfer Learning

4. Experiments

4.1. Dataset

4.2. Model Architecture

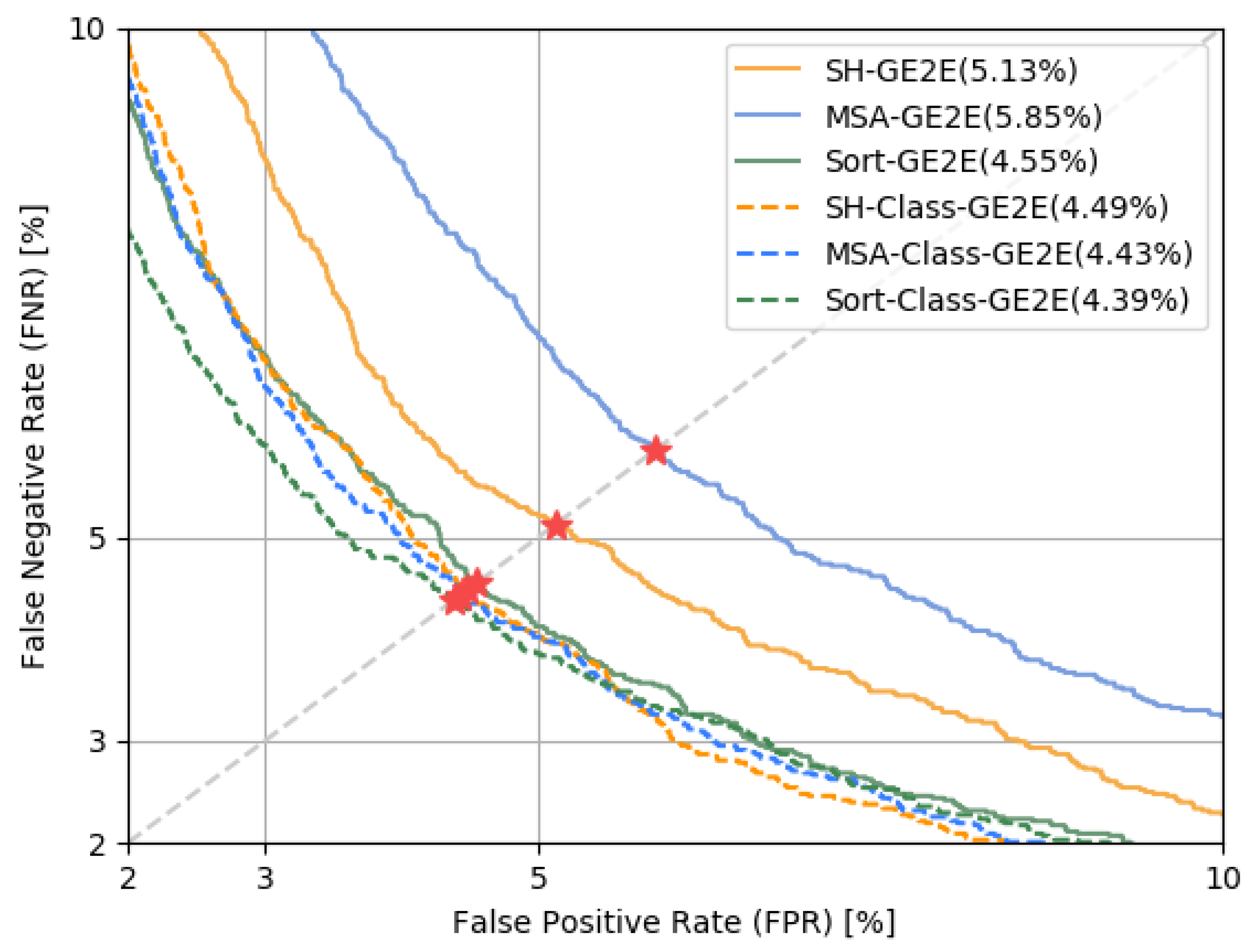

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Lee, H.-S.; Lu, Y.-D.; Hsu, C.-C.; Tsao, Y.; Wang, H.-M.; Jeng, S.-K. Discriminative Autoencoders for Speaker Verification. In Proceedings of the 2017 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017. [Google Scholar]

- Rohdin, J.; Silnova, A.; Diez, M.; Plchot, O.; Matejka, P.; Burget, L. End-to-End DNN Based Speaker Recognition Inspired by I-Vector and PLDA. In Proceedings of the 2018 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Variani, E.; Lei, X.; McDermott, E.; Moreno, I.L.; Gonzalez-Dominguez, J. Deep Neural Networks for Small Footprint Text-Dependent Speaker Verification. In Proceedings of the 2014 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014. [Google Scholar]

- Liu, Y.; Qian, Y.; Chen, N.; Fu, T.; Zhang, Y.; Yu, K. Deep Feature for Text-Dependent Speaker Verification. Speech Commun. 2015, 73, 1–13. [Google Scholar] [CrossRef]

- Heigold, G.; Moreno, I.; Bengio, S.; Shazeer, N. End-to-End Text-Dependent Speaker Verification. In Proceedings of the 2016 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016. [Google Scholar]

- Snyder, D.; Garcia-Romero, D.; Povey, D.; Khudanpur, S. Deep Neural Network Embeddings for Text-Independent Speaker Verification. In Proceedings of the Interspeech 2017, Stockholm, Sweden, 20–24 August 2017. [Google Scholar]

- Snyder, D.; Garcia-Romero, D.; Sell, G.; Povey, D.; Khudanpur, S. X-Vectors: Robust DNN Embeddings for Speaker Recognition. In Proceedings of the 2018 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Wang, Q.; Downey, C.; Wan, L.; Mansfield, P.A.; Moreno, I.L. Speaker Diarization with LSTM. In Proceedings of the 2018 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Lin, Q.; Yin, R.; Li, M.; Bredin, H.; Barras, C. LSTM Based Similarity Measurement with Spectral Clustering for Speaker Diarization. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019. [Google Scholar]

- Zhu, Y.; Ko, T.; Snyder, D.; Mak, B.; Povey, D. Self-Attentive Speaker Embeddings for Text-Independent Speaker Verification. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018. [Google Scholar]

- Rezaur, F.A.; Chowdhury, R.; Wang, Q.; Moreno, I.L.; Wan, L. Attention-Based Models for Text-Dependent Speaker Verification. In Proceedings of the 2018 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Okabe, K.; Koshinaka, T.; Shinoda, K. Attentive Statistics Pooling for Deep Speaker Embedding. In Proceedings of the Interspeech 2018, Hyderabad, India, 2–6 September 2018. [Google Scholar]

- You, L.; Guo, W.; Dai, L.-R.; Du, J. Deep Neural Network Embeddings with Gating Mechanisms for Text-Independent Speaker Verification. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019. [Google Scholar]

- India, M.; Safari, P.; Hernando, J. Self Multi-Head Attention for Speaker Recognition. In Proceedings of the Interspeech 2019, Graz, Austria, 15–19 September 2019. [Google Scholar]

- Fujita, Y.; Kanda, N.; Horiguchi, S.; Xue, Y.; Nagamatsu, K.; Watanabe, S. End-to-End Neural Speaker Diarization with Self-Attention. In Proceedings of the IEEE Automatic Speech Recognition and Understanding Workshop (ASRU) 2019, Singapore, 14–18 December 2019. [Google Scholar]

- Sankala, S.; Rafi, B.S.M.; Kodukula, S.R.M. Self Attentive Context Dependent Speaker Embedding for Speaker Verification. In Proceedings of the 2020 in National Conference on Communications (NCC), Kharagpur, India, 21–23 February 2020. [Google Scholar]

- Wan, L.; Wang, Q.; Papir, A.; Moreno, I.L. Generalized End-to-End Loss for Speaker Verification. In Proceedings of the 2018 in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018. [Google Scholar]

- Bae, A.; Kim, W. Speaker Verification Employing Combinations of Self-Attention Mechanisms. Electronics 2020, 9, 2201. [Google Scholar] [CrossRef]

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Chung, J.S.; Huh, J.; Mun, S.; Lee, M.; Heo, H.-S.; Choe, S.; Ham, C.; Jung, S.; Lee, B.-J.; Han, I. In Defence of Metric Learning for Speaker Recognition. In Proceedings of the Interspeech 2020, Shanghai, China, 25–28 October 2020. [Google Scholar]

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How Transferable Are Features in Deep Neural Networks? In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3320–3328. [Google Scholar]

- Nagrani, A.; Chung, J.S.; Xie, W.; Zisserman, A. Voxceleb: Large-Scale Speaker Verification in the Wild. Comput. Speech Lang. March 2020, 60, 101027. [Google Scholar] [CrossRef]

| Models | GE2E | Class-GE2E |

|---|---|---|

| Single-Head Attention (SHA) | 5.13 | 4.49 |

| Multi-Head Attention (MHA) | 5.85 | 4.43 |

| Sorted Multi-Head Attention (SMHA) | 4.55 | 4.39 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bae, A.; Kim, W. Class-GE2E: Speaker Verification Using Self-Attention and Transfer Learning with Loss Combination. Electronics 2022, 11, 893. https://doi.org/10.3390/electronics11060893

Bae A, Kim W. Class-GE2E: Speaker Verification Using Self-Attention and Transfer Learning with Loss Combination. Electronics. 2022; 11(6):893. https://doi.org/10.3390/electronics11060893

Chicago/Turabian StyleBae, Ara, and Wooil Kim. 2022. "Class-GE2E: Speaker Verification Using Self-Attention and Transfer Learning with Loss Combination" Electronics 11, no. 6: 893. https://doi.org/10.3390/electronics11060893

APA StyleBae, A., & Kim, W. (2022). Class-GE2E: Speaker Verification Using Self-Attention and Transfer Learning with Loss Combination. Electronics, 11(6), 893. https://doi.org/10.3390/electronics11060893