Abstract

Each sparse representation classifier achieves different classification accuracy on different samples, so it is difficult to achieve good performance with a single-feature classification model. To balance the large-scale information and global features of images, this paper proposes a robust dictionary learning method based on image multi-vector representation. First, the proposed method generates a reasonable virtual image for each original image and obtains the multi-vector representations of all images. Second, the same dictionary learning algorithm is applied to each vector representation to obtain multiple sets of image features. The proposed multi-vector representation provides a good global understanding of the whole image contour and enriches the content available for dictionary learning. Last, a weighted fusion algorithm is used to classify the test samples. Introducing an influence factor that automatically adjusts the weight of each classifier in the final decision has a significant positive effect on extracting image features. Experiments on several widely used image databases show that the proposed algorithm effectively improves image classification accuracy. The results also suggest that fully exploiting the diversity of possible representations of an image is a promising way to capture its varied appearances and achieve high classification accuracy.

1. Introduction

Image classification has long been one of the important research topics in computer vision. It analyzes the content of an image to extract its key information and make a correct judgment, which is of great significance for real-world applications and social development. Generally, image classification includes image preprocessing [1], image feature extraction [2,3], feature location, feature selection [4] and classifier design [5,6]. Image feature extraction is an important step in image classification and faces many challenges, such as intra-class variation, scale change, viewpoint change, illumination conditions and background interference. Therefore, how to effectively extract image features is an urgent problem.

In recent years, researchers have widely used sparse representation (SR) and dictionary learning (DL) to extract image features. These methods learn effective image representations and achieve good recognition results in image classification tasks [7,8,9]. The original idea of sparse representation classification (SRC) is to assume that a given test sample can be linearly represented by all training samples and to solve for the coefficients of this linear representation. In classification, the training samples and coefficients of each class are used to obtain a class-specific prediction of the test sample [10]. Each class prediction differs from the test sample to some extent, and the test sample is assigned to the class with the smallest difference. However, as the data volume increases, the optimization process may become very slow. There are generally two ways to speed up sparse decomposition: one is to reduce the scale of the problem by selecting training samples with certain properties (such as neighboring samples) [11,12,13], and the other is to learn, through DL, a more compact dictionary that contains a large amount of discriminative information, as in the classical K-Singular Value Decomposition algorithm (KSVD) [7], Discriminative K-SVD (D-KSVD) [14], Label Consistent K-SVD (LC-KSVD) [15] and Fisher discrimination dictionary learning (FDDL) [16]. It is worth noting that hybrid methods combining metaheuristics and machine learning form a novel and promising research field with computer vision and image processing applications. This field successfully combines machine learning and swarm intelligence approaches and has been shown to obtain outstanding results in different areas [17,18].
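The SRC decision rule described above can be illustrated with a minimal NumPy sketch. The l1-regularized coding step is solved here with ISTA (iterative soft-thresholding), a solver chosen for this illustration rather than taken from the cited works; the regularization weight `lam`, the iteration count and the function names `ista_lasso` and `src_predict` are assumptions.

```python
import numpy as np


def ista_lasso(A, y, lam=0.01, n_iter=500):
    """Approximately solve min_x 0.5*||y - A x||_2^2 + lam*||x||_1 with ISTA."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        z = x - step * (A.T @ (A @ x - y))                          # gradient step
        x = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)   # soft-thresholding
    return x


def src_predict(A, labels, y, lam=0.01):
    """SRC: code y over all training columns of A, then pick the class whose
    own coefficients reconstruct y with the smallest residual."""
    A = A / np.linalg.norm(A, axis=0, keepdims=True)  # unit-norm training samples
    y = y / np.linalg.norm(y)
    x = ista_lasso(A, y, lam)
    classes = np.unique(labels)
    residuals = np.array([
        np.linalg.norm(y - A[:, labels == c] @ x[labels == c]) for c in classes
    ])
    return classes[np.argmin(residuals)], residuals
```

Each column of `A` is one vectorized training image and `labels` (a NumPy array) holds its class; the class with the smallest residual is returned, which is exactly the "smallest difference" rule stated in the text.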

The key to dictionary learning is to obtain a robust dictionary, which reduces the difference between test samples and training samples of the same class. There are two ways to achieve robustness. One is to impose label consistency constraints on deep sparse features [19,20]. The other is the representation-based classification method (RBCM) [21,22,23]. When RBCM is applied to image data, the dictionary learning algorithm converts each image into a vector; references [24,25] also converted each image into a vector when processing images. For a fixed algorithm, fully exploiting the characteristics of the original samples can improve classification [26,27,28]. Similarly, multiple potential representations derived directly from the original data can provide different observations of the samples. Combining the features of multiple representations not only makes the sample categories sparse but also makes classification more stable. The information obtained by these methods is sparse and contains locally sensitive information about the sample, which can make the features robust to noise and outliers.

From a practical perspective, the main objective of the approach proposed in this study is to further improve RBCM, increase the classification performance of DL, and avoid missing features by obtaining novel representations of images. This study shows that the diversity of vector representations of an image can be further consolidated by matrix decomposition in dictionary learning, so that the resulting complementary information can be better exploited. The contribution of this research is threefold:

- (1)

- A robust dictionary learning method based on image multi-vector representation is proposed.

- (2)

- A novel representation of images is designed, which is represented by virtual samples and multi-vectors. Based on the original algorithm, four new vector representation methods are added to better mine the large-scale information and global features of images.

- (3)

- A reasonable weighted fusion image classification algorithm is proposed. The influence factor is introduced to automatically adjust the weight of each classifier in the final decision results, which has a significant effect on better extracting image features and can obtain very stable and accurate classification results.

The organizational structure of this paper is as follows. Section 2.1 describes in detail how the new image representations are generated, and Section 2.2 describes the weighted fusion algorithm based on SR. Section 3 analyzes the proposed algorithm and compares it with the original algorithm. Section 4 reports comparative experiments with other algorithms on several databases. The last section summarizes the findings of this research.

2. Proposed Method

2.1. To Obtain Novel Representations of Images

This paper proposes an improved image multi-vector representation algorithm, based on [21], to represent the original image. A multi-vector representation of an image is obtained with the following steps.

- (1)

- Generate a virtual image V from the original image I. Each pixel of V is generated as

$$V_{ij} = I_{ij}\,(m - I_{ij}), \tag{1}$$

where $I_{ij}$ and $V_{ij}$ are the pixel values in the i-th row and j-th column of I and V, and m is the maximum possible pixel value. The virtual image has the following properties:

Proposition 1.

When I is a gray-scale image, the maximum value of m is 255.

Proposition 2.

When $I_{ij} = 0$ or $I_{ij} = m$, $V_{ij} = 0$.

Proposition 3.

When $I_{ij} = m/2$, $V_{ij}$ reaches its maximum value $m^2/4$.

The pixel values of the virtual image V generated in this way are symmetric about $I_{ij} = m/2$, where V attains its maximum. This emphasizes medium-intensity pixels and suppresses other, less useful information. In other words, the generated virtual image not only contains stronger discriminative information but also maintains the local geometric features of the original image.
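A minimal sketch of the virtual-image step, assuming the quadratic mapping $V_{ij} = I_{ij}(m - I_{ij})$ with m = 255. This mapping has the properties described above: it is zero for black and white pixels, symmetric about m/2 and largest for medium intensities. The function name `virtual_image` and the optional rescaling back to the range [0, m] are assumptions made for illustration.

```python
import numpy as np


def virtual_image(I, m=255.0, rescale=True):
    """Assumed virtual-image mapping V = I * (m - I), applied pixel-wise."""
    I = np.asarray(I, dtype=float)
    V = I * (m - I)          # 0 at I = 0 and I = m, peak m**2 / 4 at I = m / 2
    if rescale:
        V = V * 4.0 / m      # optional (assumption): bring the peak back to m
    return V
```

The virtual images produced this way would then be added to the training set alongside the originals.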

- (2)

- Convert I and V into an improved multi-vector representation of the image.

I and V together constitute the experimental dataset. I is divided into training samples Y and test samples P. V is added to Y to enrich the training set, and then all images are converted into multi-vector representations using the method in [21]. This study increases the number of vector representations from t = 4 in the original algorithm to t = 8.

For the m-th vector representation, this paper uses the vector $x_i^m$ to represent the i-th training sample and the vector $z^m$ to represent the test sample. Both $x_i^m$ and $z^m$ are generated from the original image matrices $A_i$ and $B$, respectively. The structure of $x_i^m$ is shown in (2). There are many ways to generate $x_i^m$ and $z^m$; the proposed algorithm uses eight:

The first way concatenates the rows of the image matrices $A_i$ and $B$ in order, from the first row to the last, to obtain $x_i^1$ and $z^1$. The second way concatenates the rows from the last to the first. The third way concatenates the columns from the first to the last. The fourth way concatenates the columns from the last to the first. The fifth way connects the elements of the image matrix from left to right along the principal diagonal direction. The resulting $x_i^5$ and $z^5$ are shown in (3).

Similarly, the sixth way connects the elements of the image matrix from right to left along the principal diagonal direction to obtain $x_i^6$ and $z^6$. The structure after this vector transformation is shown in (4).

The seventh way connects the elements of the image matrix from right to left along the counter-diagonal direction. The resulting $x_i^7$ and $z^7$ are shown in (5).

The eighth way connects the elements of the image matrix from left to right along the counter-diagonal direction to obtain $x_i^8$ and $z^8$.
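The eight orderings can be sketched with NumPy as follows. The diagonal readings are one plausible interpretation (diagonals parallel to the principal or counter-diagonal, concatenated by offset), and the helper names are hypothetical. Every representation is a permutation of the same pixels, so no information is lost; only the ordering that dictionary learning sees changes.

```python
import numpy as np


def _diagonals(A):
    """Diagonals parallel to the principal diagonal, each read left to right."""
    p, q = A.shape
    return [A.diagonal(k) for k in range(-(p - 1), q)]


def _anti_diagonals(A):
    """Diagonals parallel to the counter-diagonal, each read right to left."""
    p, q = A.shape
    F = np.fliplr(A)
    return [F.diagonal(k) for k in range(-(p - 1), q)]


def eight_representations(A):
    """Return the eight vectorizations of a p x q image matrix A (hypothetical helper)."""
    A = np.asarray(A)
    diag, anti = _diagonals(A), _anti_diagonals(A)
    return [
        A.ravel(),                                # 1: rows, first to last
        A[::-1].ravel(),                          # 2: rows, last to first
        A.T.ravel(),                              # 3: columns, first to last
        A[:, ::-1].T.ravel(),                     # 4: columns, last to first
        np.concatenate(diag),                     # 5: principal-diagonal direction, left to right
        np.concatenate([d[::-1] for d in diag]),  # 6: principal-diagonal direction, right to left
        np.concatenate(anti),                     # 7: counter-diagonal direction, right to left
        np.concatenate([d[::-1] for d in anti]),  # 8: counter-diagonal direction, left to right
    ]
```

Applying the same function to every training and test image and stacking the m-th outputs as columns yields the m-th dataset.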

Each image representation forms a new dataset, which is then used in the dictionary learning image classification algorithm based on sparse representation. Besides the eight vector representations described above, many other vector representations are possible. For instance, the image can be divided into overlapping blocks of a fixed size, and each block, taken at a fixed step size (a fixed number of pixels per interval), is represented by a column vector. This approach is described in [29,30].
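The overlapping-block alternative mentioned above can be sketched as follows. The block size `b = 8` and step `step = 4` are illustrative values, not settings from [29,30], and `block_vectors` is a hypothetical name.

```python
import numpy as np


def block_vectors(A, b=8, step=4):
    """Split A into overlapping b x b blocks taken every `step` pixels and
    return one column vector per block (row-major block order)."""
    A = np.asarray(A)
    p, q = A.shape
    cols = [A[r:r + b, c:c + b].ravel()
            for r in range(0, p - b + 1, step)
            for c in range(0, q - b + 1, step)]
    return np.stack(cols, axis=1)  # shape (b * b, number_of_blocks)
```

For a 32 × 32 image with these settings, the blocks start at offsets 0, 4, ..., 24 in each direction, giving 49 column vectors of length 64.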

2.2. The Weighted Fusion Classification Algorithm

This section explains how to perform weighted fusion over the multi-vector representations of samples. After a variety of vector representations of the samples are obtained, they are fed to the dictionary learning image classification algorithm based on sparse representation. This paper constructs several sparse classifiers to verify the accuracy of the classifiers generated from different features. Weights are allocated according to the accuracy of the different classifiers over all features, and the weight proportions of the different feature classifiers are updated iteratively. Finally, the result is output by the final decision classifier. The specific process of the algorithm is as follows:

- (1)

- Suppose there are m classes of training samples. $X_i = [x_{i1}, x_{i2}, \dots, x_{in}]$ represents the set of training samples from the i-th class, where $x_{ij}$ is the image vector representation of the j-th training sample of the i-th class and n is the number of training samples of the i-th class. The multi-vector representation method proposed in Section 2.1 is used to extract features from all training samples and obtain the feature matrices D of the multiple sets of training samples, given in (6), where d is the feature dimension, k indexes the k-th kind of feature, and $d_i^k$ is the feature vector of the i-th training sample under the k-th kind of feature.

- (2)

- Based on the methods in [21], a sparse representation classifier is generated for each of the feature matrices in (6), and each classifier produces its own classification result. For each classifier, the residual of a sample $z$ with respect to the i-th class is calculated as $r_i(z) = \|z - D_i \alpha_i\|_2$, where $D_i$ and $\alpha_i$ are the training features and representation coefficients associated with the i-th class, $i = 1, \dots, m$. The degree of separation between different categories of the sparse classifier is then obtained by (7), where $S_i^k$ represents the degree of separation between the i-th sample of the k-th classifier and the other classes in the whole training sample set. The greater the separation degree, the more distinct the classification effect of the classifier on the training sample.

- (3)

- This study constructs each sparse representation classifier and assigns it an initial weight. The degree of separation S obtained from the initial classifier outputs is used as the initial feature weight coefficient, as given in (8), where $w_i^0$ represents the initial weight ratio assigned to the i-th sparse representation classifier.

- (4)

- According to the weight coefficients in (8), the classification results are fused to determine the image category. $w_j$ is the influence proportion of the j-th classifier on all samples in the multi-classifier fusion decision. The classification result of each classifier is linearly weighted by its proportion to obtain the final classification result.

- (5)

- This study iteratively optimizes the weight $w_j$ of each classifier on validation data drawn from the test samples and uses the influence factor to adjust the weight ratio of each classifier. If all classifiers fail to classify a sample correctly, that vector representation of the sample is discarded. In classification based on sparse representation, the weight coefficients are meaningful because they reflect the importance of each training sample.
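The five steps above can be sketched as follows, with hypothetical stand-ins for the separation degree, the initial weights, the fusion rule and the influence-factor update. In this sketch the separation degree is the relative gap between the two smallest class residuals, and residuals are turned into normalized inverse-residual scores. The influence factor `lam` scales an exponential reweighting by each classifier's validation accuracy and is chosen from a small candidate grid by fused validation accuracy. All function names and formulas here are assumptions made for illustration.

```python
import numpy as np


def separation(res):
    """Hypothetical separation degree per sample: relative gap between the
    smallest and second-smallest class residual. res: (n_samples, n_classes)."""
    r = np.sort(res, axis=1)
    return (r[:, 1] - r[:, 0]) / (r[:, 1] + 1e-12)


def class_scores(res):
    """Map residuals to per-sample scores summing to 1 (smaller residual, larger score)."""
    inv = 1.0 / (res + 1e-12)
    return inv / inv.sum(axis=1, keepdims=True)


def fuse(residuals, w):
    """Linearly weight each classifier's scores. residuals: (K, n_samples, n_classes)."""
    scores = sum(wk * class_scores(rk) for wk, rk in zip(w, residuals))
    return np.argmax(scores, axis=1)


def learn_weights(residuals, y_val, lams=(0.0, 0.5, 1.0, 2.0, 5.0)):
    """Initial weights from mean separation degree, then try each candidate
    influence factor lam and keep the weights with the best fused accuracy."""
    w0 = np.array([separation(rk).mean() for rk in residuals])
    w0 = w0 / w0.sum()
    acc = np.array([(np.argmin(rk, axis=1) == y_val).mean() for rk in residuals])
    best_acc, best_lam, best_w = -1.0, None, w0
    for lam in lams:
        w = w0 * np.exp(lam * (acc - acc.mean()))  # favor above-average classifiers
        w = w / w.sum()
        fused_acc = (fuse(residuals, w) == y_val).mean()
        if fused_acc > best_acc:
            best_acc, best_lam, best_w = fused_acc, lam, w
    return best_w, best_lam
```

Selecting `lam` by validation accuracy makes the influence factor's effect on the result explicit. A larger `lam` shifts more weight toward classifiers that were individually accurate on the validation data.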

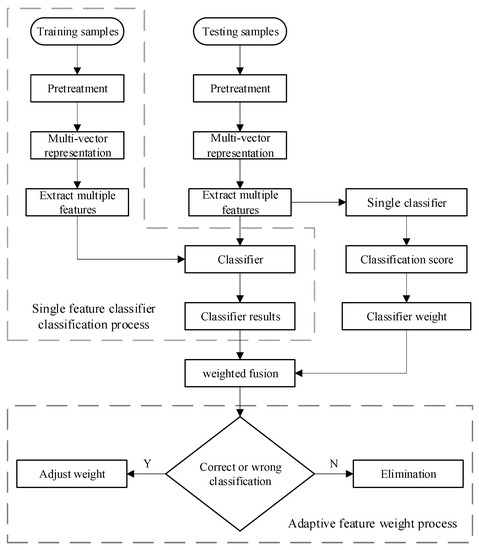

The main steps of this algorithm are shown in Figure 1:

Figure 1.

Flowchart of the proposed method.

Step 1. All the original samples are divided into training and test samples, and the corresponding preprocessing is performed.

Step 2. The original sample generates a virtual sample by (1). The virtual sample and the original sample make up the experimental dataset.

Step 3. Obtain the multi-vector representation of all original samples using the methods in Section 2.1.

Step 4. The same dictionary learning algorithm is applied to each vector representation of the original image to obtain multiple sets of features.

Step 5. The k-th class features of the samples are classified using the same classification algorithm to obtain the classification results.

Step 6. Finally, the weighted fusion algorithm is used to classify the test samples.

3. The Analysis of the Proposed Algorithm

This section mainly analyses the differences between the original algorithm [21] and the improved algorithm.

The method in Section 2.1 is used to generate multiple representations in vector form directly from the original image, each by a specific transformation scheme. If the original image has t vector representations, the dataset grows at least t-fold. This study adds four representation methods to the original algorithm, which increases the content available for dictionary learning and enriches the sample features. The multi-vector representation is reasonable because it reveals more possible variations of the original sample. In other words, multiple representations of an image can be understood as different observations of the same object, and using them together extracts more information about the object.

The fusion scheme of the original algorithm has also been improved. Because each sparse representation classifier has different classification accuracy on different samples, an influence factor is introduced to adaptively adjust the weight of each classifier in the final decision. The influence factor thus adjusts each classifier adaptively, and its value also affects the classification result. The optimal multi-classifier fusion decision model is obtained by iteratively and adaptively updating the parameters. It is difficult to achieve good performance with a single-feature classification model. The multi-vector representation provides a good global understanding of the overall image contour, but classifying images by global features alone is not ideal for the classification task. The proposed weighted fusion classification algorithm therefore focuses on extracting image contour and directional gradient features and balances global and local features. Under the multi-classifier fusion decision, it handles the image classification problem well.

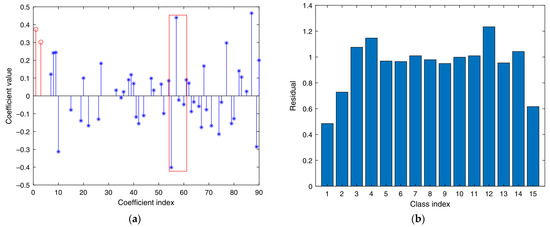

To illustrate intuitively how the improved algorithm performs in classification, this section analyzes an experiment on the Yale face database in detail, which shows the advantages of the improved algorithm over the original one. The first face image of the first person is chosen as the test sample. Without multi-vector representation, the sparse coefficients and residuals obtained by the SRC method for this test sample are shown in Figure 2. Each group of six coefficient indices corresponds to one category, and the red box corresponds to the 10th class. Figure 2a shows that none of the representation coefficients of the test sample associated with the first class of training samples are less than 0, while the training samples of the 15th class also have relatively large positive coefficients. In addition, Figure 2b shows that the residual of the first class differs little from that of the fifteenth class. Therefore, although the SRC method classifies the test sample correctly, the margin is small.

Figure 2.

(a) Coefficients obtained by SRC method; (b) residuals obtained by SRC method.

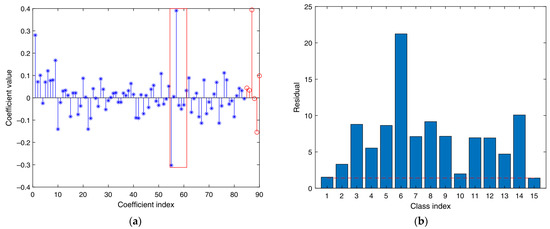

Figure 3a shows the coefficient values obtained by the original algorithm for this test sample; the coefficients of the fifteenth class are more significant. In Figure 3b, the residual of the 15th class is smaller than that of the first class, so the test sample is misclassified into the 15th class.

Figure 3.

(a) Coefficients obtained by the original algorithm; (b) residuals obtained by the original algorithm.

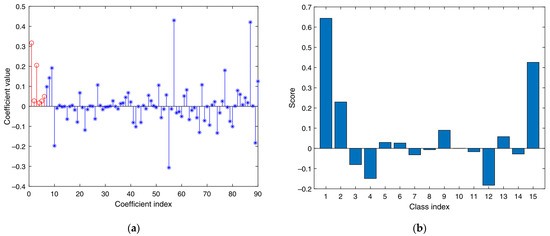

Figure 4 shows the coefficient values and classification scores obtained by the proposed algorithm. The first class has two coefficients greater than 0.2. Figure 4b shows that the classification score of the first class, computed by our algorithm, is the highest, so the test sample is correctly classified by the proposed algorithm.

Figure 4.

(a) Coefficients obtained by the proposed algorithm; (b) classification score values obtained by the proposed algorithm.

The above analysis shows that the “dense” vector representation in the original method produces multiple representation coefficients, which may lead to classification errors. The weighted fusion algorithm balances these coefficients and can avoid classification errors in some cases, which supports the effectiveness of the proposed algorithm in classification.

4. Experiments and Results

This section evaluates the proposed algorithm experimentally on four publicly available databases: three face databases and one object database, namely the Extended Yale B face database [31], the PIE face database [32], the AR face database [33] and the COIL-20 database [34]. Statistical tests and comparisons with deep learning methods are also conducted.

To illustrate the classification performance of the proposed algorithm, this paper compares it with classical dictionary learning algorithms and with the original algorithm [21]. The original algorithm applied to K-SVD, D-KSVD, LC-KSVD, FDDL and RDCDL [27] is denoted IKSVD, IDKSVD, ILCKSVD, IFDDL and IRDCDL, respectively. The experimental process is as follows. First, the sample sets are divided into training samples and test samples. Each original sample generates a virtual sample by (1), and the virtual and original samples constitute the sample set. The multi-vector representation of all original samples is obtained by the method in Section 2.1. The multi-vector representation is then fed to the same dictionary learning algorithm to obtain multiple sets of features. Finally, the weighted fusion algorithm is used to classify the test samples. Each dictionary learning algorithm is run 20 times, and its average accuracy is reported. The results show that the proposed algorithm is considerably more accurate than the original algorithm and other similar algorithms. The specific experimental analysis for each dataset follows.

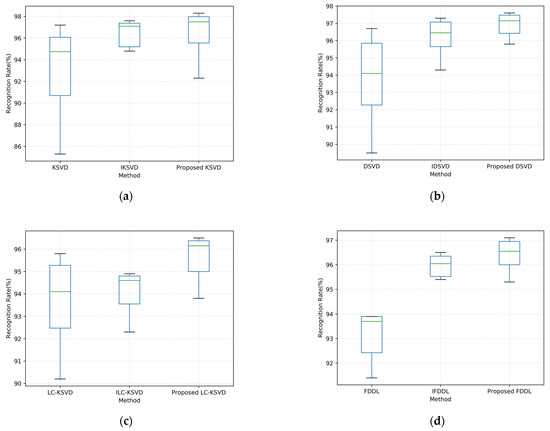

4.1. Experiments on the Extended Yale B Face Database

The Extended Yale B face database contains 2414 face images of 38 persons, captured under nine different poses and 64 illumination conditions. According to the lighting conditions, it is divided into five subsets, subsets I–V, with the light intensity increasing from subset I to subset V. Some experimental samples are shown in Figure 5. All images are normalized to 32 × 32 pixels. In the experiment, a total of 1170 face images from subsets I–III were selected as the test set, and the remaining images were used as the training set. Figure 6 and Table 1 show the average recognition rates of the different algorithms and improved algorithms for different numbers of atoms on the Extended Yale B face database with the extended training set.

Figure 5.

Examples from the Extended Yale B face database.

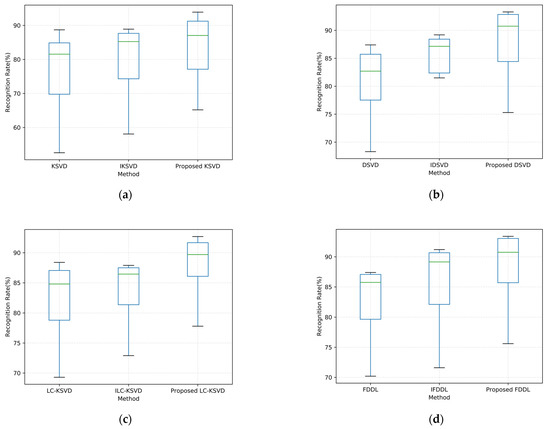

Figure 6.

(a) Comparison of different KSVD algorithms; (b) comparison of different DSVD algorithms; (c) comparison of different LC-KSVD algorithms; (d) comparison of different FDDL algorithms; (e) comparison of different RDCDL algorithms. The accuracy of image classification increases steadily with the number of atoms, and the proposed algorithm generally outperforms the other algorithms.

Table 1.

Average accuracy (%) on the Yale B face database.

Table 1 shows that, in most cases, the improved algorithm obtains a higher average recognition rate than the original algorithm. With 342 atoms, our FDDL algorithm achieves 93.4% accuracy, which is 2.2% higher than IFDDL and 6% higher than the classical FDDL algorithm. The most obvious improvement occurs with 38 atoms, where our improved FDDL algorithm is more than 10% more accurate than IFDDL and nearly 15% more accurate than the standard FDDL algorithm. On average, our KSVD is 3.51% higher than IKSVD, our DSVD is 3.72% higher than IDSVD, and our FDDL is 3.38% higher than IFDDL. Across the numbers of atoms (K = 38, 76, …, 342, 380), our LC-KSVD improves by 4.28% on average over the original algorithm. These comparisons show that our improved algorithm yields a consistent improvement that is more pronounced when the number of atoms is small, which is consistent with our algorithm exploiting different observations of the same object. The improved algorithm thus achieves better classification accuracy and stability.

Finally, to visualize the differences between the compared methods, box-and-whisker plots of the results over 20 runs for some benchmark instances and the better-performing methods are shown in Figure 6.

4.2. Experiments on the PIE Face Database

The PIE face database contains 41,368 images of 68 people, captured under 13 different poses, 43 different lighting conditions and four different expressions. Figure 7 shows several example images from the PIE face database. We normalized each image to 32 × 32, randomly selected ten images of each person as training samples, and used the remaining images as test samples.

Figure 7.

Examples of the PIE face database.

Table 2 shows the average recognition rates of different algorithms on the PIE face database. Our algorithm performs significantly better than the other algorithms. For example, with 68 atoms, our FDDL algorithm reaches 68.4% accuracy, which is 11% higher than IFDDL, and across 68 to 340 atoms it improves by more than 5.12% overall. For the LC-KSVD algorithm, the average recognition rate of our improved algorithm is 81.36%, versus 75.38% for the original algorithm, an improvement of 5.98%. Compared with other common algorithms, the improved algorithm also achieves higher accuracy.

Table 2.

Average accuracy (%) on the PIE face database.

4.3. Experiments on the AR Face Database

From the AR face database, images of 50 men and 50 women were selected to form a subset. For each person, 14 facial images with variations in lighting and expression were extracted; seven were used for training and the remaining seven as the test set. All images were resized to 40 × 50.

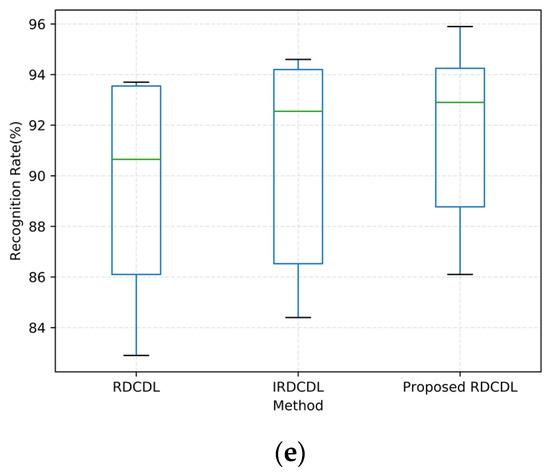

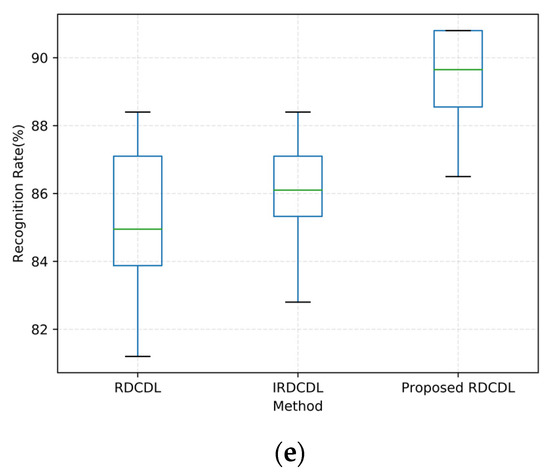

Table 3 compares the different algorithms on the AR face database. For all tested numbers of atoms, our algorithm achieves a higher recognition rate than the other algorithms. The most significant improvement occurs with 1080 atoms, where the average accuracy of our KSVD algorithm reaches 94.5%, which is 14.4% higher than IKSVD. For KSVD, our algorithm also has the highest overall average recognition rate, with an average improvement of 1.97%. For the FDDL algorithm, the improvement is small, but the accuracy is at least 88.7% (atoms from 120 to 1200) and the average accuracy is 95.72%. Figure 8b shows the average recognition rates of DSVD, IDSVD and our DSVD for different numbers of atoms. As the number of atoms increases, the average recognition rates of DSVD, IDSVD and our DSVD gradually increase, whereas the performance of K-SVD and IKSVD is not stable. Box-and-whisker plots of the results over 20 runs for some benchmark instances and the better-performing methods are shown in Figure 8.

Table 3.

Average accuracy (%) on the AR face database.

Figure 8.

(a) Comparison of different KSVD algorithms; (b) comparison of different DSVD algorithms; (c) comparison of different LC-KSVD algorithms; (d) comparison of different FDDL algorithms; (e) comparison of different RDCDL algorithms. The accuracy of image classification increases steadily with the number of atoms, and the proposed algorithm generally outperforms the other algorithms.

4.4. Experiments on the COIL-20 Database

The COIL-20 dataset is a collection of grayscale images of 20 objects photographed from different angles: one image is taken every five degrees, giving 72 images per object. The dataset contains two subsets. The first contains 720 unprocessed images of 10 objects, and the second contains 1440 processed images of all 20 objects. All images are resized to 32 × 32. Figure 9 shows several example images from the COIL-20 database. Ten images of each object are randomly selected as training samples, and the remaining images are used as test samples.

Figure 9.

Examples of the COIL-20 database.

Table 4 compares the classification accuracy of different algorithms on the COIL-20 database. Across the numbers of atoms (K = 40, 60, …, 180, 200), our KSVD algorithm improves by 2.74% on average over IKSVD, with an overall average accuracy of 91.19%. With 60 and 180 atoms, the improvement is smallest: our FDDL is only 0.4% higher than IFDDL. With 120 and 140 atoms, the improvement is largest: our KSVD is 3.8% higher than IKSVD. Thus, on this non-face database, the proposed algorithm also effectively improves image classification accuracy.

Table 4.

Average accuracy (%) on the COIL-20 database.

4.5. Statistical Tests

In this paper, the Friedman test [35] and the Nemenyi test [36] are applied to determine whether there are statistically significant differences between the performance of the classification algorithms. The null hypothesis $H_0$ states that there is no significant difference between the algorithms (all classification algorithms perform equally), and the alternative hypothesis $H_1$ states that there are significant differences between them. $H_0$ is assumed to hold, and the data are examined for evidence to reject it.

This paper compares all the algorithms on the Yale B, PIE, AR and COIL-20 datasets. Each algorithm runs 20 times, and the average is taken as the test result. On each dataset, the algorithms are then ranked by test performance from best to worst, with ranks 1, 2, 3, …, 15; algorithms with equal performance share the average of the tied ranks, as shown in Table 5. The Friedman test is then used to determine whether these algorithms perform equally. The Friedman statistic can be calculated as follows:

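$$F_F = \frac{(n-1)\,\chi_F^2}{n(k-1) - \chi_F^2}, \tag{10}$$

which is compared to an F-distribution with $k-1$ and $(k-1)(n-1)$ degrees of freedom, where n denotes the total number of experiments (here, datasets), k is the number of classification models, and $\chi_F^2$ is the Friedman statistic, which follows a chi-square distribution with $k-1$ degrees of freedom. The variable $\chi_F^2$ is calculated through (11):

$$\chi_F^2 = \frac{12n}{k(k+1)}\left(\sum_{j=1}^{k} R_j^2 - \frac{k(k+1)^2}{4}\right), \tag{11}$$

where $R_j$ is the average rank of the j-th algorithm over all datasets. When the number of datasets is 4 and the number of algorithms is 15, the critical value of the F test is 1.935; the computed $F_F$ exceeds this value, so $H_0$ is rejected and $H_1$ is accepted. Nemenyi's test is then applied, and the critical difference CD of the average ranks is calculated according to (12):

$$CD = q_\alpha \sqrt{\frac{k(k+1)}{6n}}, \tag{12}$$

where $q_\alpha$ is the critical value of the Nemenyi test at significance level $\alpha$.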

Table 5.

Ranks achieved by the Friedman and Nemenyi tests. Our DSVD and our RDCDL algorithms achieve the best ranks. The computed statistics and related p-values are also shown.

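The critical difference computed through Nemenyi’s test is CD = 3.049; algorithms whose average ranks differ by more than this value perform significantly differently, and together with the Friedman result this indicates significant differences among the algorithms considered. The proposed DSVD and RDCDL algorithms obtained the best rankings.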
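The rank-based Friedman and Nemenyi computations described in this section can be sketched in NumPy. The input is an (n datasets × k algorithms) accuracy table, and `q_alpha` must be looked up in a studentized-range table for the chosen k and α (for example, 2.343 for k = 3 at α = 0.05). The function names are hypothetical. The sketch computes only the statistics, not p-values.

```python
import numpy as np


def average_ranks(acc):
    """Rank algorithms on each dataset (1 = best); tied algorithms share the mean rank.
    acc: (n_datasets, k_algorithms) array of accuracies."""
    n, k = acc.shape
    ranks = np.empty((n, k))
    for r in range(n):
        order = np.argsort(-acc[r], kind="stable")
        vals = acc[r][order]
        start = 0
        while start < k:
            end = start
            while end + 1 < k and vals[end + 1] == vals[start]:
                end += 1
            ranks[r, order[start:end + 1]] = (start + end) / 2 + 1  # mean of tied positions
            start = end + 1
    return ranks


def friedman_nemenyi(acc, q_alpha):
    """Return mean ranks R_j, chi2_F, F_F and the Nemenyi CD for an (n, k) accuracy table."""
    acc = np.asarray(acc, dtype=float)
    n, k = acc.shape
    R = average_ranks(acc).mean(axis=0)
    chi2 = 12 * n / (k * (k + 1)) * (np.sum(R ** 2) - k * (k + 1) ** 2 / 4)
    denom = n * (k - 1) - chi2
    ff = np.inf if denom <= 1e-12 else (n - 1) * chi2 / denom  # identical rankings give inf
    cd = q_alpha * np.sqrt(k * (k + 1) / (6 * n))
    return R, chi2, ff, cd
```

The returned $F_F$ is compared with the F critical value for $k-1$ and $(k-1)(n-1)$ degrees of freedom. Pairs of algorithms whose mean ranks differ by more than `cd` are considered significantly different.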

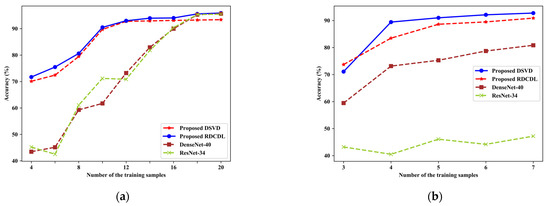

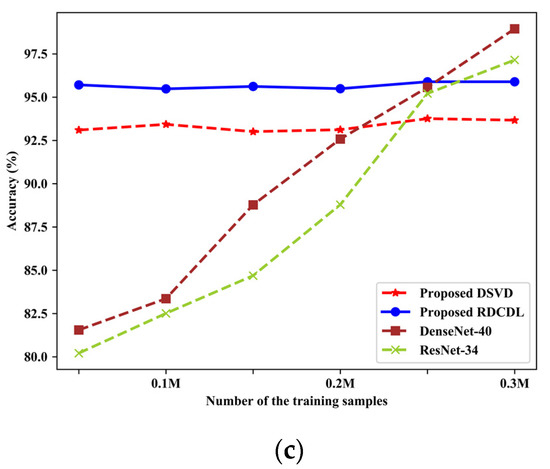

4.6. Comparison with Deep Learning Methods

To evaluate the proposed methods comprehensively, they are also compared with deep learning methods, namely ResNet-34 [37] and DenseNet-40 [38]. The two methods that perform best in the statistical tests (the proposed DSVD and RDCDL) are selected for this comparison. The experiments run on Ubuntu 18.04 with an Intel(R) Core(TM) i7-8700 CPU at 3.20 GHz and an NVIDIA GeForce GTX 1080 Ti 8 GB GPU. Training and testing are implemented in Python with the PyTorch deep learning framework. The batch size for training is 64 and the initial learning rate is 0.05; the dictionary size is 380 (10 atoms per class) for the Yale B database and 600 for the AR database. All methods are repeated 20 times, and their average accuracies are shown in Table 6.

Table 6.

Average accuracy (%) of various methods on the AR, Extended Yale B and CASIA-WebFace datasets.

Compared with the deep learning methods, the proposed DSVD and RDCDL perform better than ResNet-34 and DenseNet-40 on the Extended Yale B and AR databases, whereas the deep learning methods perform better on the CASIA-WebFace dataset [39]. To further compare dictionary learning and deep learning methods on these three databases, we investigated the accuracy of the proposed DSVD, the proposed RDCDL, ResNet-34 and DenseNet-40 with different numbers of training samples, as shown in Figure 10. Figure 10a shows that when the number of training samples is at most 16, the proposed DSVD and RDCDL have clear advantages over ResNet-34 and DenseNet-40. Together with Figure 10c, this indicates that ResNet-34 and DenseNet-40 are better suited to large-scale training sets: when the dataset is small, they cannot extract a large number of effective features. The figure also shows that when there are fewer than 16 training samples, the accuracy of ResNet-34 is very unstable. Because the discriminative information in small-scale training data is insufficient to update the parameters of ResNet-34 to a stable accuracy, the accuracy is often determined by the randomly initialized parameters. This indicates that the proposed method has a clear advantage over deep learning based methods when only a few training samples from a small dataset are available.

Figure 10.

(a) Average accuracy for different numbers of training samples on the Extended Yale B database; (b) Average accuracy for different numbers of training samples on the AR database; (c) Average accuracy for different numbers of training samples on the CASIA-WebFace dataset.

This paper also measured the time cost on the CASIA-WebFace dataset. Table 7 shows the average training and testing times of the different methods on this dataset. The dictionary learning methods extract features very quickly: the fastest takes less than 70 s, far less than ResNet-34 and DenseNet-40. Because deep learning methods have many parameters and train their convolution filters iteratively by gradient descent, they require not only a large number of training samples but also a large amount of computation.
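Average training and testing times such as those in Table 7 can be measured with a wall-clock timer around each phase. The sketch below assumes hypothetical `fit` and `predict` callables and is not the authors' benchmarking code. When timing PyTorch models on a GPU, CUDA kernels run asynchronously, so `torch.cuda.synchronize()` would need to be called before each clock reading for the measured times to be meaningful.

```python
import time
from typing import Any, Callable, Tuple


def average_times(fit: Callable[[], Any],
                  predict: Callable[[Any], Any],
                  n_repeats: int = 5) -> Tuple[float, float]:
    """Return (mean training seconds, mean testing seconds) over n_repeats runs.

    fit() trains a model from scratch and returns it; predict(model) classifies
    the test set. Both are hypothetical callables supplied by the caller.
    """
    if n_repeats < 1:
        raise ValueError("n_repeats must be at least 1")
    train_total = 0.0
    test_total = 0.0
    for _ in range(n_repeats):
        start = time.perf_counter()  # monotonic, high-resolution clock
        model = fit()
        mid = time.perf_counter()
        predict(model)
        end = time.perf_counter()
        train_total += mid - start
        test_total += end - mid
    return train_total / n_repeats, test_total / n_repeats


if __name__ == "__main__":
    def toy_fit():
        time.sleep(0.02)  # stand-in for dictionary learning
        return "model"

    def toy_predict(model):
        return [model] * 10  # stand-in for classifying the test samples

    print(average_times(toy_fit, toy_predict, n_repeats=3))
```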

Table 7.

Average training and testing times on the CASIA-WebFace dataset.

The experimental results show that deep learning and traditional methods each have advantages and disadvantages. Deep learning methods require a large number of training samples, consume a lot of computing time, and yield complex models that are difficult to deploy. By contrast, the proposed models do not require much iterative training, and their average test time is only 0.85 s.

5. Conclusions

In this paper, a new image classification algorithm is proposed that uses different observations of the original sample to generate multiple vector representations, thereby enhancing the latent correlation of the original sample. The paper combines the multi-vector representation of images with robust dictionary learning and designs a weighted fusion algorithm, so that dictionary learning can extract higher-dimensional and more abstract features from the data and effectively use the sample information shared across the multiple vector representations to improve discriminative ability. Comparative experiments show that the classification performance of the proposed algorithm is better than that of the original algorithm before improvement. The algorithm effectively improves classification accuracy, is simple to implement, and is highly automated. Because the dictionary for the same object is learned from both the original sample and its multi-vector representations, the proposed algorithm is more general. The results show that deeper mining of the original samples can yield more diverse and deeper features.
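The final decision step fuses the outputs of the classifiers learned on each vector representation. The paper's exact weighting rule and its influencing factors are not reproduced here, so the following is only a generic weighted residual fusion under stated assumptions: each representation yields one reconstruction residual per class (smaller is better), residuals are min-max normalized per representation so that no single representation dominates by scale, and the weights are supplied by the caller rather than adjusted automatically. The function name `fuse_residuals` is hypothetical.

```python
from typing import Sequence


def fuse_residuals(residuals: Sequence[Sequence[float]],
                   weights: Sequence[float]) -> int:
    """Weighted fusion of per-representation class residuals.

    residuals[k][c]: residual of class c under vector representation k
                     (smaller means the class reconstructs the sample better).
    weights[k]:      non-negative weight of representation k.
    Returns the index of the class with the smallest fused score.
    """
    if not residuals or len(weights) != len(residuals):
        raise ValueError("need one weight per representation")
    n_classes = len(residuals[0])
    if n_classes == 0 or any(len(r) != n_classes for r in residuals):
        raise ValueError("every representation must score the same classes")
    if any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("weights must be non-negative and not all zero")

    total = sum(weights)
    fused = [0.0] * n_classes
    for r, w in zip(residuals, weights):
        lo, hi = min(r), max(r)
        span = hi - lo if hi > lo else 1.0  # avoid dividing by zero
        for c, value in enumerate(r):
            # Min-max normalize, then accumulate with the normalized weight.
            fused[c] += (w / total) * (value - lo) / span
    # Ties resolve to the lowest class index.
    return min(range(n_classes), key=fused.__getitem__)


if __name__ == "__main__":
    # Two representations (e.g. original and virtual image), three classes.
    residuals = [[0.2, 0.5, 0.9],
                 [0.6, 0.3, 0.8]]
    print(fuse_residuals(residuals, [0.7, 0.3]))  # prints 0
    print(fuse_residuals(residuals, [0.2, 0.8]))  # prints 1
```

The two calls show why weighting matters: the same residuals lead to different decisions depending on how much each representation is trusted.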

A limitation of the proposed method is that its computational cost is higher than that of conventional dictionary learning algorithms. Since many kinds of representations are possible, future work will explore more effective representation and fusion strategies to improve classification performance while reducing computation.

Author Contributions

C.P. and Y.Z. conceptualized the methodology and the overall research and wrote large sections of the paper. Z.W., Y.Z. and Z.C. were involved in the interpretation of the results. C.P. was responsible for the visualization and presentation of the mobility indicators. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Research Foundation for Advanced Talents of Guizhou University under Grant: (2016) No. 49, Key Disciplines of Guizhou Province Computer Science and Technology (ZDXK[2018]007), Research Projects of Innovation Group of Education (QianJiaoHeKY[2021]022), Project supported by the Guizhou Province Graduate Research Fund (YJSCXJH[2020]53, YJSCXJH[2020]189), and supported by the National Natural Science Foundation of China (62062023).

Data Availability Statement

The Extended Yale B face database: http://vision.ucsd.edu/~leekc/ExtYaleDatabase/download.html (accessed on 10 December 2021); The PIE face database: http://web.mit.edu/emeyers/www/face_databases.html (accessed on 8 December 2021); The AR face database: http://web.mit.edu/emeyers/www/face_databases.html (accessed on 8 December 2021); The COIL-20 database: https://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php (accessed on 8 December 2021); The CASIA-WebFace dataset: https://paperswithcode.com/dataset/casia-webface (accessed on 7 February 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Zhang, J.; Liu, W.; Bo, L.; Zhang, H.; Li, H.; Xu, S. Joint Reflectance Field Estimation and Sparse Representation for Face Image Illumination Preprocessing and Recognition. Neural Process. Lett. 2020, 1–14.

2. Wang, L.; Li, T. Research on Image Feature Extraction Method Fusing HOG and Canny Algorithm. In Proceedings of the 2021 4th International Conference on Data Science and Information Technology, Shanghai, China, 23–25 July 2021; pp. 208–211.

3. Lacombe, T.; Favreliere, H.; Pillet, M. Modal features for image texture classification. Pattern Recognit. Lett. 2020, 135, 249–255.

4. Li, Y.; Liu, J.; Tang, Z.; Lei, B. Deep spatial-temporal feature fusion from adaptive dynamic functional connectivity for MCI identification. IEEE Trans. Med. Imaging 2020, 39, 2818–2830.

5. Zhu, X.; Li, X.; Zhang, S. Block-row sparse multiview multilabel learning for image classification. IEEE Trans. Cybern. 2015, 46, 450–461.

6. Luo, Y.; Liu, T.; Tao, D.; Xu, C. Multiview matrix completion for multilabel image classification. IEEE Trans. Image Process. 2015, 24, 2355–2368.

7. Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

8. Xu, Y.; Zhong, Z.; Yang, J.; You, J.; Zhang, D. A new discriminative sparse representation method for robust face recognition via l2 regularization. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2233–2242.

9. Li, Z.; Lai, Z.; Xu, Y.; Yang, J.; Zhang, D. A locality-constrained and label embedding dictionary learning algorithm for image classification. IEEE Trans. Neural Netw. Learn. Syst. 2015, 28, 278–293.

10. Zhang, H.; Wang, F.; Chen, Y.; Zhang, W.; Wang, K.; Liu, J. Sample pair based sparse representation classification for face recognition. Expert Syst. Appl. 2016, 45, 352–358.

11. Xian, Y.; Akata, Z.; Sharma, G.; Nguyen, Q.; Hein, M.; Schiele, B. Latent embeddings for zero-shot classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 69–77.

12. Gao, J.; Xu, L. A novel spatial analysis method for remote sensing image classification. Neural Process. Lett. 2016, 43, 805–821.

13. Albukhanajer, W.A.; Jin, Y.; Briffa, J.A. Classifier ensembles for image identification using multi-objective Pareto features. Neurocomputing 2017, 238, 316–327.

14. Zhang, Q.; Li, B. Discriminative K-SVD for dictionary learning in face recognition. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2691–2698.

15. Jiang, Z.; Lin, Z.; Davis, L.S. Label consistent K-SVD: Learning a discriminative dictionary for recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2651–2664.

16. Yang, M.; Zhang, L.; Feng, X.; Zhang, D. Fisher discrimination dictionary learning for sparse representation. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 543–550.

17. Bacanin, N.; Stoean, R.; Zivkovic, M.; Petrovic, A.; Rashid, T.A.; Bezdan, T. Performance of a novel chaotic firefly algorithm with enhanced exploration for tackling global optimization problems: Application for dropout regularization. Mathematics 2021, 9, 2705.

18. Malakar, S.; Ghosh, M.; Bhowmik, S.; Sarkar, R.; Nasipuri, M. A GA based hierarchical feature selection approach for handwritten word recognition. Neural Comput. Appl. 2020, 32, 2533–2552.

19. Hu, C.; Wu, X.J.; Shu, Z.Q. Discriminative feature learning via sparse autoencoders with label consistency constraints. Neural Process. Lett. 2019, 50, 1079–1091.

20. Huang, Y.; Quan, Y.; Liu, T.; Xu, Y. Exploiting label consistency in structured sparse representation for classification. Neural Comput. Appl. 2019, 31, 6509–6520.

21. Xu, Y.; Li, Z.; Tian, C.; Yang, J. Multiple vector representations of images and robust dictionary learning. Pattern Recognit. Lett. 2019, 128, 131–136.

22. Liu, Z.; Wu, X.J.; Shu, Z. Multi-resolution dictionary collaborative representation for face recognition. Pattern Anal. Appl. 2021, 24, 1793–1803.

23. Zheng, S.; Zhang, Y.; Liu, W.; Zou, Y.; Zhang, X. A dictionary learning algorithm based on dictionary reconstruction and its application in face recognition. Math. Probl. Eng. 2020, 2020, 8964321.

24. Liu, Z.; Wu, X.J.; Yin, H.; Xu, T.; Shu, Z. Locality-Constrained Collaborative Representation with Multi-resolution Dictionary for Face Recognition. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Beijing, China, 29 October–1 November 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 55–66.

25. Fan, J.; Yang, C.; Udell, M. Robust Non-Linear Matrix Factorization for Dictionary Learning, Denoising, and Clustering. IEEE Trans. Signal Process. 2021, 69, 1755–1770.

26. Xu, Y.; Zhang, B.; Zhong, Z. Multiple representations and sparse representation for image classification. Pattern Recognit. Lett. 2015, 68, 9–14.

27. Lin, G.; Yang, M.; Yang, J.; Shen, L.; Xie, W. Robust, discriminative and comprehensive dictionary learning for face recognition. Pattern Recognit. 2018, 81, 341–356.

28. Li, L.; Peng, Y.; Qiu, G.; Sun, Z.; Liu, S. A survey of virtual sample generation technology for face recognition. Artif. Intell. Rev. 2018, 50, 1–20.

29. Li, H.; He, X.; Tao, D.; Tang, Y.; Wang, R. Joint medical image fusion, denoising and enhancement via discriminative low-rank sparse dictionaries learning. Pattern Recognit. 2018, 79, 130–146.

30. Zhang, Y.; Wang, Q.; Xiao, L.; Cui, Z. An improved two-step face recognition algorithm based on sparse representation. IEEE Access 2019, 7, 131830–131838.

31. Georghiades, A.S.; Belhumeur, P.N. Illumination cone models for faces recognition under variable lighting. In Proceedings of the CVPR98, Santa Barbara, CA, USA, 23–25 June 1998.

32. Sim, T.; Baker, S.; Bsat, M. The CMU pose, illumination, and expression (PIE) database. In Proceedings of the Fifth IEEE International Conference on Automatic Face Gesture Recognition, Washington, DC, USA, 21–21 May 2002; pp. 53–58.

33. Martinez, A.; Benavente, R. The AR Face Database: CVC Technical Report, 24; Autonomous University of Barcelona: Barcelona, Spain, 1998.

34. Geusebroek, J.M.; Burghouts, G.J.; Smeulders, A.W. The Amsterdam library of object images. Int. J. Comput. Vis. 2005, 61, 103–112.

35. Iman, R.L.; Davenport, J.M. Approximations of the critical region of the Friedman statistic. Commun. Stat. Theory Methods 1980, 9, 571–595.

36. Derrac, J.; García, S.; Molina, D.; Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 2011, 1, 3–18.

37. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 630–645.

38. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

39. Yi, D.; Lei, Z.; Liao, S.; Li, S.Z. Learning face representation from scratch. arXiv 2014, arXiv:1411.7923.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).