Abstract

Motor rehabilitation exercises require many repetitions to improve patients’ gestures. However, these repetitive gestures usually decrease patients’ motivation and cause them stress. Virtual Reality (VR) exer-games (and serious games in general) could be an alternative solution to this problem. This technology encourages patients to train different gestures with less effort, since they are fully immersed in an easy-to-play exer-game. Despite this progress, patients using the available exer-games still struggle to perform their gestures correctly and without pain. The developed applications do not consider patients’ psychological states while they play an exer-game. Therefore, we believe that it is necessary to develop personalized and adaptive exer-games that take into consideration patients’ emotions during rehabilitation exercises. This paper proposes VR-PEER, an adaptive exer-game system based on emotion recognition. The platform contains three main modules: (1) a computing and interpretation module, (2) an emotion recognition module, and (3) an adaptation module. Furthermore, a virtual reality-based serious game is developed as a case study; it uses continuously updated facial expression data to dynamically provide the patient with an appropriate game to play during rehabilitation exercises. An experimental study was conducted on fifteen subjects, who reported that the proposed system is useful in the motor rehabilitation process.

1. Introduction

Motor rehabilitation helps people to overcome dysfunctions in various parts of the body, such as the lower or upper limbs [1]. The biggest challenge patients may face during training is having to repeat the exercises over and over. This may expose them to severe boredom, which later affects their performance in the exercises and disrupts their treatment program, which may then be prolonged. Recently, many researchers have been interested in exer-games and virtual reality, which they consider a viable solution to this issue. Exer-games are video games with user interfaces that require active participation and physical exertion from players. They are meant to detect body movement and provide participants with both enjoyment and exercise. Exer-games can be used to motivate older people to engage in physical activity [2,3]. Moreover, they can be used as an alternative to conventional exercise because they are widely available, allow for autonomous practice, and provide control and personalized adjustment possibilities. Exer-games have been shown to be effective in enhancing motor and cognitive skills, minimizing the risk of falling [4], and increasing the quality of life of older individuals [5] and people living with chronic diseases [6]. Furthermore, they may be used to enhance flexibility and balance [7] and to improve cognitive inhibition. These technologies have helped many patients with their motor rehabilitation programs. Researchers [8,9] have found that VR can improve motivation and engagement when used in combination with exer-games. The use of exer-games has become indispensable in several clinical cases. Thus, several projects have been developed and tested with patients [10,11,12,13]. Virtual reality exer-games facilitate the exercises and encourage patients to finish them as a source of pleasure rather than of pain and boredom during motor rehabilitation sessions [14].
Despite the great success of these exer-games, patients’ behavior and responses vary, even when they play the same exer-game. This was the reason for the appearance of personalized or adaptive exer-games, which can be used in several fields (health, training, learning…) for their ability to motivate the user [15]. According to [16], the differences in the physical and gaming capabilities of players require providing a personal experience to achieve the targeted positive results. In addition, many studies have demonstrated the effectiveness of personalized and adaptive exer-games through experiments in motor rehabilitation clinics. The game proposed by [17] was well accepted by the patients, and the results showed that the adaptive game is stimulating and attractive. In other experiments carried out by [18], adapting the gameplay parameters was observed to improve the physiological performance of the participants. Patients are sometimes faced with hard games that may stress them, which forces them to give up the game quickly. To avoid this tedious and hard situation, adaptive exer-games can be a relevant solution. In general, an adaptive system is one that automatically and continuously fits itself to any context of use while still guaranteeing its performance and capabilities. Consequently, an adaptive game becomes a breakthrough for rehabilitation specialists in clinical situations. This adaptation can be viewed from various perspectives. However, the biggest challenge facing researchers in this field is how to adapt the game to individual patients. End-users need access to adaptive interfaces to meet these requirements [19,20]. In this regard, the personalized exer-game addresses this issue.
Several studies in the literature have focused on such games. Some of them are interested in static adaptation [21,22], which makes changes only at the beginning of the game, as opposed to dynamic adaptation, which adapts the game throughout the period of play [17,22,23,24,25,26,27]. Even when the adaptation is dynamic, it either follows parameters that do not really express the patient’s situation, such as the score, or relies mainly on specialists to intervene in every adaptation process [20,24].

Many researchers, including [28,29,30], have confirmed the existence of a relationship between the patient’s feelings during training and the patient’s performance, as well as the results of physical therapy. In short-term group psychotherapy, [28] found that the experience and expression of positive emotions was related to positive therapeutic outcomes, whereas the intense expression of negative emotions was linked to negative outcomes. It was found in [29] that therapeutic change seemed to be related to the experience of positive emotions, while the experience of only negative emotions was associated with a bad therapeutic outcome. The goal of the research conducted by [30] was to look into the direct relationship between the patients’ performance quality and an artificially induced positive or negative emotional state. Statistical analysis of the resulting EMG and accelerometer signals revealed a significant difference in the quality of each subject’s physical exercise under the various emotional triggers. All observer-based emotion recognition tools use facial expressions as a reliable indicator of positive and negative emotions. Seven basic emotions are universal to human beings, namely: neutrality, anger, disgust, fear, happiness, sadness, and surprise [31]. One study [32] proposed a dimensional approach, in which any emotion is represented in terms of three basic dimensions: valence (positive/pleasurable or negative/unpleasurable), arousal (engaged or not engaged), and dominance (degree of control that a person has over their affective states). In this paper, we rely on the valence dimension (positive/negative). According to this model, we classify the emotions into three classes: positive (happy), negative (angry, disgust, fear, sad), and neutral (neutral). As for the feeling of surprise, some authors consider it positive [33,34], others negative [35], and others both [36,37].
Therefore, in this paper, we will divide the surprise probability into two halves. Thus, we will add half to the positive emotion probability and the other half to the negative emotion probability.
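The three-class grouping described above, including the even split of the surprise probability, can be sketched as follows. This is a minimal illustration rather than the authors’ implementation: the function name `to_valence_classes` and the dictionary keys are assumptions, and the input is assumed to hold one probability per basic emotion.

```python
from typing import Dict

# Basic emotions that the text assigns to the negative class.
NEGATIVE = ("angry", "disgust", "fear", "sad")


def to_valence_classes(probs: Dict[str, float]) -> Dict[str, float]:
    """Collapse seven basic-emotion probabilities into three valence classes.

    Half of the surprise probability is added to the positive class and
    the other half to the negative class, as described in the text.
    """
    half_surprise = probs.get("surprise", 0.0) / 2.0
    return {
        "positive": probs.get("happy", 0.0) + half_surprise,
        "negative": sum(probs.get(k, 0.0) for k in NEGATIVE) + half_surprise,
        "neutral": probs.get("neutral", 0.0),
    }


# Illustrative input only (not data from the study).
example = {"happy": 0.40, "surprise": 0.20, "sad": 0.10, "angry": 0.05,
           "disgust": 0.0, "fear": 0.05, "neutral": 0.20}
classes = to_valence_classes(example)
```

Because the surprise mass is only redistributed, the three class probabilities still sum to the same total as the seven inputs.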

The methods for recognizing facial emotions are divided in the literature into two groups: electrical and non-electrical methods [38]. The proposed solution adapts the exer-game to the patient based on his emotions. If he shows negative emotions, the system makes changes to reduce the difficulty of the game. On the other hand, if he is happy (positive emotions) and can perform the exercise easily, the system increases the difficulty. This is exactly what the doctor does when asking the patient to decrease or increase the exercise speed.

Thanks to advances in automatic facial expression analysis, computer vision researchers have developed approaches for automatically recognizing emotions from facial expressions. Therefore, we propose a case study in which the patient’s emotion is recognized with an artificial intelligence method (a CNN) that analyzes the patient’s facial expressions.

Thus, our contribution in this work is the development of the VR-PEER platform, in which we implement facial expression recognition and adaptive exer-game modules. The platform is divided into three phases: (1) the capturing and interpretation phase, in which we capture and interpret the contextual information of the patients; (2) the analysis phase, in which we analyze the interpreted information and recognize the emotions of the patient; and (3) the adaptation phase, the most important part of our architecture, in which we make changes and adjustments to the exer-game according to the patient’s mood. The difficulty level of the game varies according to the patient’s facial expression during the exercise. For example, if we detect that the patient is sad, angry, afraid, or disgusted, the system lowers the difficulty level of the exer-game, and it raises the level if the patient is happy.

The following sections provide more details about our contribution. Firstly, we review related work. Secondly, we present the proposed architecture. Thirdly, we present the case study of the VR-PEER architecture. Finally, we validate our solution with an experimental setup.

2. Related Work

The idea of introducing gamification into the therapeutic protocol of upper-limb rehabilitation arose from the need to motivate patients during rehabilitation programs. Numerous studies have looked for ways to develop serious games for rehabilitation, including our team’s work on creating serious games in a virtual reality environment [39]. Several serious-game projects for rehabilitation have appeared and have shown a wide general interest in improving and sustaining virtual reality technology [8,40,41]. The core idea of VR-based rehabilitation is to use sensing devices to capture and quantitatively assess the movements of patients under treatment in order to track their progress more accurately [42]. Two of the sensing devices that can also be considered natural user interfaces (NUI) are the Microsoft Kinect sensor and the Leap Motion Controller [43]. In the same context, several implementations principally use these two types of devices to control the games and provide feedback to patients. The developed games fall into two categories: non-personalized games and personalized games. Non-personalized games are those that can be used for a wide range of patients without making changes to the exer-game according to the patients’ states, while personalized games are designed to follow the changes in each patient’s state.

Several works were carried out on non-personalized games. In [44], a Stable-Hand VR system was developed for hand rehabilitation. It uses a VR device and a Leap Motion controller to track the hand’s movement. The exer-game focused on pinching gestures, supporting three tasks: (a) open-hand; (b) pinch position and (c) open-hand. The researchers in [39] developed fruit-based games and a runner game for the rehabilitation of post-stroke upper limbs using Kinect with the Oculus Rift HMD. The researchers in [23] were interested in cognitive rehabilitation and used only the Oculus Go HMD. The mechanics of the game require memorizing geometric shapes while the player works in one of two modes, autonomous or manual. They defined two levels of difficulty depending on the number of elements to remember. The exer-game developed in [45] targeted hand disabilities. Five hand gestures were selected to control a sphere in an environment composed of obstacles (increase speed, go backwards, jump, and make the sphere bigger or smaller). The goal is reached when the patient drops the ball in a basket. The system used a CNN for hand gesture recognition via EMG signals.

Previous work on serious games has found the need for personalization to deliver appropriate levels of exercise to individuals and to make the game progressively more challenging to keep people engaged [46,47], and offer a personalized experience, i.e., adapting the game to the learners’ characteristics, situation, and needs.

Consequently, some work was carried out on personalized games. The researchers in [26] proposed a VR framework for Parkinson’s disease rehabilitation. The proposed system is designed to capture various movements of the upper limbs, unilateral or bilateral, and the captured motion is represented one-to-one in the virtual world of the video game. The difficulty of the game can be monitored remotely by the clinician. One study [22] investigated the use of free-hand interaction using gesture tracking sensors such as leap motion to support hand rehabilitation of patients with strokes or trauma. The researchers in [17] developed a Tetris-like game for motor rehabilitation after stroke. This system uses the Oculus Rift display device with Razer Hydra Motion Controllers, where patients perform pronation/supination movements with their hands. The difficulty of the exer-game changed dynamically according to the score obtained at a given time. The Rehab Bots game was created in [24]. It is based on the virtual assistant identified by therapists and contains three main levels that are: (1) a virtual assistance robot that shows patients how to properly perform the exercises, (2) an intelligent movement notifications module that analyzes and represents patients in 3D, then, if necessary, adjusts the game to the appropriate level to achieve the best outcome, and (3) a dynamic correction module that takes into account both the level of game complexity and the virtual assistant readings in order to create a series of exercises that are more suited to the patients’ capacities. Personalized Exer-game Language (PEL) [20] was used to generate exer-games with a focus on young people [21]. Azure Kinect DK was used to analyze the movement of the entire body. One study [48] proposed an open-source framework to develop serious games using client/server architecture to facilitate the connection between different platforms. 
Two examples of serious games were played: the classical Gym-Tetris game and a problem-solving task game. The disadvantage of the last two strategies is that modification needs the involvement of kinesthetic rehabilitation experts. This means that the system is not able to adapt on its own. To offer a personalized serious game for rehabilitation, [49,50] propose a recommender system based on interaction analysis and the preferences of the user. Many proposals for adaptive serious games are directed at adults in general and do not consider young people in particular, even though they quickly get bored of rehabilitation exercises. That is why [21] proposes a serious game adaptation for young people, developing serious games that support plug-and-play metaphors for natural interaction. The author of that study insists on the need to personalize the rehabilitation process for each individual. Despite the effort made in the development of personalized exer-games, the emphasis remains on the progression of game difficulty, not on the patient’s state. With the personalized games proposed in the literature, it is difficult to know whether the patient is in a good or a bad situation. Thus, patients can experience stress, fatigue, or anxiety without the game detecting it, in which case the exer-game leads to a negative performance. Therefore, we are interested in studying the emotions of patients during the exer-game. Some studies have been interested in using this method to adapt serious games for learning; these studies did not specifically address motor rehabilitation. For example, in [51], the authors present a fruit-slicing exer-game, an adaptive serious game that works in real time, to help a hemiparetic person. It is based on Kinect to recognize facial expressions and adjust the game’s complexity according to the player’s emotions. The real-time adaptation is executed using the Facial Action Coding System (FACS) as described in [37]. FACS is a method of describing facial movements, which are used to infer the emotion of the person. Another study [52] was carried out to predict the players’ emotions. However, the authors did not use the prediction for adaptation, but only to assess the learner’s cognitive states during an educational video game; they used a binomial logistic regression method to predict the cognitive-affective state of flow.

The table below presents some previous studies and compares them to the proposed solution in this paper:

As we mentioned earlier, we aspire to find a way to develop serious games for physical rehabilitation that can be adapted to the patient’s mood dynamically and continuously during exercise. We notice from Table 1 that there are studies that have developed serious games for rehabilitation, but these games are not adapted to the patient [3,40]. In the same context, the games that are adapted are either personalized but not intended for motor rehabilitation [50], or adapted only at the beginning of the exercise, regardless of what happens during it [17,19,24]. Other works are oriented to kinesthetic rehabilitation and adapt dynamically to the game’s surroundings, but they do not take into account the virtual reality aspect [46,49]. There are works, however, that track the patient’s state while playing, but they are unrelated to virtual reality or kinesthetic rehabilitation [51,52]. Therefore, the solution we propose addresses all these shortcomings. It is suitable for virtual reality, directed to motor rehabilitation, and depends dynamically on the patient’s emotions for exer-game adaptation.

Table 1. Comparison between our proposition, VR-PEER, and a few existing methodologies.

Thus, we propose an approach for a dynamic exer-game depending on patients’ facial emotions. We can switch from a difficult to an easy level of an exer-game according to the patients’ face states (e.g., positive or negative). The main contribution of this paper will be detailed in the next section.

3. VR-PEER Architecture

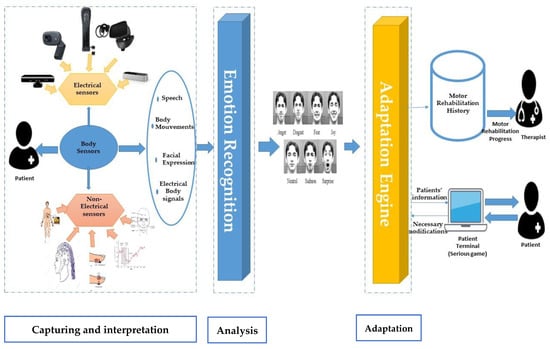

Figure 1. VR-PEER architecture.

Proposing an architecture for exer-games is a very challenging task, especially concerning the personalization aspect, due to the complexity of virtual reality systems. VR-PEER (Figure 1) has a highly modular structure in which an adaptive exer-game can be easily generated. Our architecture is divided into three main phases, namely: (i) Capturing and Interpretation, (ii) Analysis, and (iii) Adaptation.

Typically, the architecture of a personalized exercise includes observing the environment, analyzing and evaluating the captured data, and finally, selecting adaptations and executing them.

3.1. Capturing and Interpretation Phase

This phase forms the basis of our system. In order to intercept context data, the capture layer provides a collection of sensors. One study [38] proposes a classification of the sensors used to recognize human emotions automatically: (i) electrical sensors, which record signals such as EEG, ECG and EMG, and (ii) non-electrical sensors such as the Kinect, a web camera and the Oculus. Based on this classification, each type of sensor in our architecture must be attached to a software component that gives access to the intercepted data. This layer contains two parts: a data provider and a data interpreter. The data provider collects contextual information from the user’s runtime environment, called “meta information” (e.g., captured patient photo, patient speech), while the data interpreter uses this information to generate a high-level representation that describes the context information and makes it more exploitable and easier to manipulate (e.g., ECG signal, photo pixels).

3.2. Analysis Phase

In this paper, we seek to propose a system solution that adapts exer-games to the patient’s situation. During rehabilitation, the patient performs several exercises for his limbs, which may change his psychological state and his feelings. He may feel pain, fear, or happiness. We therefore recognize the patient’s emotions in order to adapt the exer-game he is playing. Emotion recognition is the process of identifying human emotions, most typically from facial expressions, human speech, etc. The use of multiple sources can lead to conflicting situations and contradictory results, which in turn lead to imprecise or even totally incorrect conclusions. Therefore, this layer must have some form of intelligence in order to intercept and resolve these conflicts. To accomplish this process, we propose the use of an artificial intelligence method such as recurrent neural networks (RNN) or convolutional neural networks (CNN), the latter being the method used in our case study.

3.3. Adaptation Phase

This is the most important stage. The objective of this phase is to adapt the exer-game according to the emotion transmitted by the analysis phase. It contains three parts: (i) Adaptation engine: responsible for defining the various adaptation mechanisms as well as the various reactions that the system must perform following a change in the patient’s emotions. In the field of rehabilitation, several decisions and changes must be made in the metrics of motor rehabilitation (rehabilitation time, number of repetitions, difficulty of the exercise…). (ii) Motor rehabilitation history: every change is saved in this part of the system, with the goal of following the progress of patients, preparing reports, and finally sending them to the therapists. With this part, the various data can be accessed whenever needed. (iii) Exercise or application: this part must subscribe to the adaptation engine layer in order to make the appropriate changes in the game and adapt it to the psychological state of the patient.

Mathematical Description of Adaptation Phase

We will briefly describe the adaptation process in the form of a mathematical equation that contains a number of inputs. Each input represents the law that is applied during the adaptation process, provided that it is approved by the therapist so that the development of the game in terms of difficulty is logical and appropriate for patients during motor rehabilitation. Each input contains, in its turn, two inputs that are the parameters to be changed and the patient’s emotions at the moment of the adaptation process.

The program contains three exits: the first defines what to do if the patient feels pain (negative emotions), the second what to do if he does not feel pain (positive emotions), and the third is the exit condition. The exit condition is useful when the exercise must be changed, i.e., it is executed if the patient is happy and has completed the exercise. We propose using some other parameters to decide whether the patient has completed the exercises or not. In our case, we take the average number of gestures completed every 30 s. Thus, the adaptation within the same exercise is based on the patient’s emotions, whereas the passage to another exercise relies on another parameter chosen by the therapist. It should be noted that each exercise has its own adaptation equation (see Equation (1)), with its own number of inputs and outputs, and that the equation is evaluated at every moment at which the system adapts to the patient in real time. In our case, for example, we chose to execute this equation every 10 s. This value can be increased or decreased depending on the exercise, game, or training period. An example of how to apply this function is given in the next section.

where Fn is the equation used to change the parameter to get an adaptive exer-game; Pn is the adaptation parameter; En is the patient’s emotion at the moment of adaptation; and APn is the value of the parameter after the adaptation process.
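Read with these definitions, each adaptation rule can be understood schematically as APn = Fn(Pn, En). The sketch below illustrates one such rule with the three exits described above. It is only an illustration under stated assumptions: the names `AdaptationResult` and `make_rule`, the fixed step size, the completion threshold, and the convention that a larger parameter value means a harder exercise are all hypothetical and would in practice be set by the therapist.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AdaptationResult:
    value: float         # APn: parameter value after the adaptation step
    next_exercise: bool  # True when the exit condition is met


def make_rule(step: float, target_gestures: float
              ) -> Callable[[float, str, float], AdaptationResult]:
    """Build a hypothetical rule Fn for a single parameter Pn."""

    def rule(p: float, emotion: str, avg_gestures_30s: float) -> AdaptationResult:
        if emotion == "negative":
            # Exit 1: the patient shows negative emotions, so ease the exercise.
            return AdaptationResult(max(p - step, 0.0), False)
        if emotion == "positive":
            if avg_gestures_30s >= target_gestures:
                # Exit 3: happy and exercise completed, move to another exercise.
                return AdaptationResult(p, True)
            # Exit 2: the patient shows positive emotions, so raise the difficulty.
            return AdaptationResult(p + step, False)
        # Neutral emotion: keep the parameter unchanged.
        return AdaptationResult(p, False)

    return rule


rule = make_rule(step=1.0, target_gestures=10.0)
easier = rule(5.0, "negative", 3.0)
harder = rule(5.0, "positive", 3.0)
finished = rule(5.0, "positive", 12.0)
```

Keeping the exit condition separate from the emotion-driven branches mirrors the text: the emotion drives adaptation within an exercise, while a therapist-chosen parameter drives the change of exercise.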

4. VR-PEER Case Study

In interpersonal communication, facial expressions (FE) are one of the most important information conduits. We can indicate (and recognize) a variety of emotions through our facial expressions. We have applied the VR-PEER architecture presented in the previous section, in which we chose the device used in the capturing phase and the method for recognizing emotions based on facial expressions.

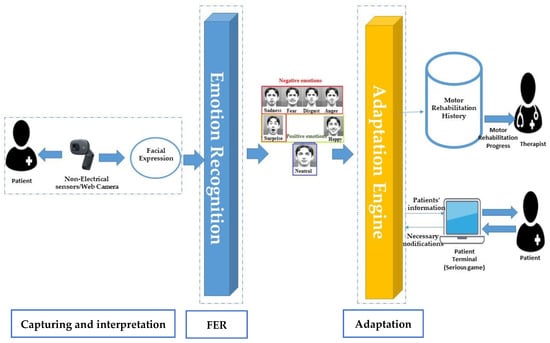

4.1. Adaptation Based Facial Emotion Recognition

Figure 2. Applying the VR-PEER architecture with facial emotion recognition using a web camera.

The architecture consists of three modules, as shown in Figure 2:

- Capturing and analysis module: At this stage, a video of the patient’s face is captured during the rehabilitation program, in the form of a set of images (frames). Each image is then analyzed to detect and extract the face that will be used later for recognition.

- Module for Facial Emotion Recognition (FER):

In this process, we focus on recognizing patients’ facial emotions using convolutional neural networks (CNN) and the DeepFace model proposed by [53]; our solution uses its training set.

DeepFace is one of the most popular facial recognition models. It consists of nine layers. The input is an image with dimensions of 152 × 152 × 3. The first layer (C1) convolves it with 32 filters of size 11 × 11 × 3 (denoted 32 × 11 × 11 × 3 @ 152 × 152 in [53]), giving 32 feature maps of size 142 × 142. The second layer (M2) is a max-pooling operation with a filter size of 3 × 3 and a stride of 2, applied separately to each channel; hence, the resulting feature maps have dimensions of 71 × 71 × 32. The third layer (C3) is another convolution, with 16 filters of size 9 × 9 × 16. The purpose of these first three layers is to extract low-level features such as texture and edges. They are followed by three locally connected layers (L4, L5 and L6) and, finally, by two fully connected layers (F7 and F8). The output of the last fully connected layer is fed to a K-way softmax (where K is the number of classes), which produces a distribution over the class labels. In our case, the final layer is a softmax output layer with 7 possible classes. These seven emotions are then grouped into three classes: “positive emotion”, which includes the happy emotion (Equation (1)); “negative emotion”, which includes the angry, sad, surprised, disgusted, and afraid emotions (Equation (2)); and “neutral emotion”.
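For the first three DeepFace layers mentioned above, the spatial sizes follow from the usual output-size arithmetic. The short check below assumes no padding for the convolutions and “same”-style padding for the pooling layer; these padding choices are assumptions made so that the result matches the 142 × 142, 71 × 71 and 63 × 63 feature-map sizes reported for DeepFace in [53], and the helper names are illustrative.

```python
import math


def conv_out(size: int, kernel: int, stride: int = 1) -> int:
    """Spatial output size of a convolution without padding ('valid')."""
    return (size - kernel) // stride + 1


def pool_out_same(size: int, stride: int) -> int:
    """Spatial output size of a pooling layer with 'same' padding."""
    return math.ceil(size / stride)


c1 = conv_out(152, 11)     # C1: 11 x 11 filters on a 152 x 152 input
m2 = pool_out_same(c1, 2)  # M2: 3 x 3 max-pooling with stride 2
c3 = conv_out(m2, 9)       # C3: 9 x 9 filters
print(c1, m2, c3)          # 142 71 63
```

With unpadded (“valid”) pooling the second size would be 70 instead of 71, which is why the padding mode is stated explicitly.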

We have $\text{negative} = \text{angry} \cup \text{sad} \cup \text{surprised} \cup \text{disgust} \cup \text{afraid}$, so the equation will be:

During training, the web camera captures the facial expressions, and P(negative), P(positive), and P(normal) (the neutral class) are calculated for each frame. Since the adaptation is done once every period T, we must calculate the average of each emotion (positive, negative, neutral) over each period T (see Equation (3)).

where, Average = {APos, ANeg, ANeu}, Emotion = {positive, negative, normal},

N: Number of frames in T.
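Based on these definitions, the per-window average can plausibly be written as below. This is a reconstruction from the surrounding text, not the original typeset equation; the frame index $i$ and the notation $P_i(e)$ for the probability of emotion class $e$ in frame $i$ are assumptions.

```latex
A_{e} \;=\; \frac{1}{N} \sum_{i=1}^{N} P_{i}(e),
\qquad e \in \{\text{positive},\ \text{negative},\ \text{normal}\}
```

Under this reading, APos, ANeg and ANeu are the values of $A_e$ for the positive, negative and normal (neutral) classes, respectively.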

Thus, the result of the process will be:

- Real-time-adaptation module:

This part receives the patient’s mood, which has three possible outcomes: positive, neutral, or negative emotion. The real-time adaptation module relies on this result to change the game so that it suits the patient’s emotion: the game becomes more difficult if the patient is in a good condition, and the difficulty decreases if the patient feels physical pain. However, if the patient is in a normal condition, the game is kept as it is. Because the 3D exer-game is adapted automatically based on the result of the previous process, the patient does not need to change the parameters of the exer-game manually. Our system is intelligent in the sense that it pays attention, in real time, to every change that occurs in the patient’s facial emotion. The adaptation process is executed with every change in the patient’s emotion.
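The three modules above can be tied together in a small sketch: per-frame class probabilities are averaged over one adaptation window, and the result is mapped to a difficulty action. This is an illustration, not the authors’ code. Selecting the class with the highest average is an assumption about how the mood is derived, and the function and key names are hypothetical.

```python
from statistics import mean
from typing import Dict, List

CLASSES = ("positive", "negative", "neutral")


def window_average(frames: List[Dict[str, float]]) -> Dict[str, float]:
    """Average each class probability over the N frames of one window T."""
    if not frames:
        raise ValueError("window must contain at least one frame")
    return {c: mean(f[c] for f in frames) for c in CLASSES}


def decide(avg: Dict[str, float]) -> str:
    """Map the dominant averaged emotion to a difficulty action."""
    dominant = max(CLASSES, key=lambda c: avg[c])
    actions = {"positive": "increase", "negative": "decrease", "neutral": "keep"}
    return actions[dominant]


# Illustrative per-frame probabilities (not data from the study).
frames = [
    {"positive": 0.7, "negative": 0.1, "neutral": 0.2},
    {"positive": 0.5, "negative": 0.2, "neutral": 0.3},
    {"positive": 0.6, "negative": 0.3, "neutral": 0.1},
]
avg = window_average(frames)
action = decide(avg)
```

Averaging before deciding smooths out single-frame misclassifications, so the game does not react to a momentary expression.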

4.2. Experimental Setup

In order to evaluate the system that we previously suggested, we developed a simple 3D exer-game for the rehabilitation of the upper limbs. We, first, determine the patient’s mood based on the detection of facial emotions (positive, negative, or neutral), and then increase or decrease the game difficulty.

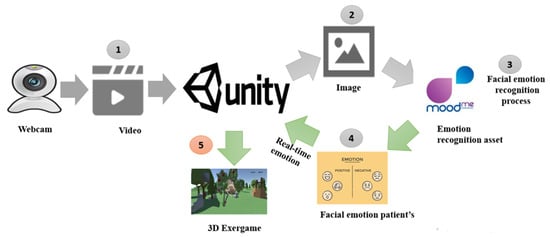

4.2.1. Logical Architecture of Our Serious Game

A serious game was developed using Unity 3D (Figure 3), based on the architecture proposed above. We used a web camera as the sensor that tracks the patient’s face. The video is used by Unity to detect the patient’s emotions in real time, based on the combination of the Mood Me [54] asset and the DeepFace framework [53]. DeepFace is a framework for use in Python, while Mood Me can be used in Unity. We extracted the neural network (weight model) from DeepFace and then included the model in the Mood Me asset. We relied on this asset to preprocess the real-time photos of the patient and to detect his face during training using the Barracuda package [55]. Then, Mood Me used the DeepFace model to predict the user’s emotions in real time. The emotion result is the input of the adaptation equation, which decides which actions are executed in the game.

Figure 3. The logical architecture of the developed game.

Our game, developed as a 3D application for rehabilitation, is based on 3D interaction (I3D) using the Leap Motion Controller [56]. In this work, a virtual reality headset is not used. The idea is to have an application that is easy to use in hospitals and rehabilitation centers. Thus, to avoid cognitive overload, we used a large screen and a Leap Motion controller to create a semi-immersive virtual environment and to ensure the 3D interaction of the patient.

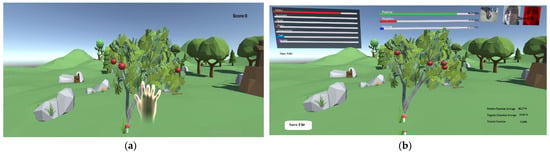

In this game, we considered only one gesture. The table below describes the gesture and the adaptation parameter (Figure 1). The player extends the arm completely and moves it from top to bottom, and similarly from bottom to top, to drop the apple onto the floor. One apple will appear every.

As mentioned earlier, the patient in this game tries to extend his arm to drop the apples to the ground (see Figure 4). We derived from the equation previously cited in the adaptation process another adaptation equation with predefined parameter and function (see Equation (6)), containing one entry and one exit (see Equation (5)). changed by the adaptation’s law (Equation (6)).

Figure 4. Adaptive serious game developed based on the VR-PEER architecture: (a) participant’s interface; (b) interface used to test the facial emotion recognition.

Our purpose is to make patients feel that the game is designed for them because it adapts to their facial emotions, whether they feel pain caused by pressure on their limbs and want the exercises to be easier, or are willing to do more difficult exercises.

4.2.2. Tests and Evaluation

We carried out two types of tests to assess the efficiency and performance of our system. The first assesses the efficacy of the first part, the facial emotion recognition process, while the second tests the adaptation process. This first evaluation phase of our system assesses the performance and robustness of the adaptation system when facial emotions are taken into account. We therefore seek to answer the following question: is the adaptation robust or not? Thus, the goal of this evaluation is to know whether the adaptation is done independently of the patients. We used a laptop with a 9th-generation i7 processor, an NVIDIA GeForce graphics card, an integrated web camera, and a Leap Motion controller.

- Emotion test

We ran a 10-min test. Facial emotion recognition (FER) using the DeepFace method is performed on every frame; at 24 frames per second, this gives 14,400 emotion recognitions over the test (24 × 600 s). The adaptation is executed every 10 s. For each 10 s period, we therefore calculate the average of the positive, negative, and neutral emotions (results shown in Figure 5). We obtain 60 values, calculated with the equation below:
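A minimal Python sketch of this per-period averaging follows. It is not the authors’ equation; it assumes that each frame has already been mapped to one of three classes (positive, negative, neutral) and that the "average" is the share of frames per class within each 10 s period. All names are hypothetical.

```python
from collections import Counter

FPS = 24             # frames per second, as stated in the text
TEST_SECONDS = 600   # 10-min test
WINDOW_SECONDS = 10  # adaptation period
CLASSES = ("positive", "negative", "neutral")


def window_shares(labels, fps=FPS, window_seconds=WINDOW_SECONDS):
    """Split per-frame labels into full windows and return class shares."""
    size = fps * window_seconds
    shares = []
    for start in range(0, len(labels) - size + 1, size):
        counts = Counter(labels[start:start + size])
        shares.append({c: counts.get(c, 0) / size for c in CLASSES})
    return shares


def predominant(share):
    """Return the class with the largest share in one window."""
    return max(CLASSES, key=lambda c: share[c])


if __name__ == "__main__":
    print(FPS * TEST_SECONDS)              # frames in the whole test
    print(TEST_SECONDS // WINDOW_SECONDS)  # number of 10 s periods
    # Placeholder labels for two windows; not data from the study.
    demo = ["positive"] * 240 + ["negative"] * 150 + ["neutral"] * 90
    print([predominant(s) for s in window_shares(demo)])
```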

Figure 5.

Emotion-state progression values for case study subject.

During testing, the system recognized the predominant user emotion every 10 s, and 57 of the 60 results were correct (95%). The three faulty results were confusions between happy and neutral emotions. We conclude that our system is effective in most cases; the few errors are understandable, as it is difficult to tell whether a person is happy or neutral.

- Adaptation test

Our system is divided into two parts: one determines the patient’s emotion and the other adapts the game according to their facial expressions. For the first part, we used a method that was validated in a previous study, so there is no need to re-test it. For the second part, we tested the response of the adaptation process to the output of the emotion recognition process, as reported in the table below.

We tested the adaptation process on a group of 15 subjects who tried the game (6 men and 9 women, aged 21 to 60). Overall, 70% of them had never used VR serious games, 15% had tried VR games before but were not used to them, and the rest were used to such games. Each test lasted 5 min. Subjects changed their facial expressions randomly during the exercises.

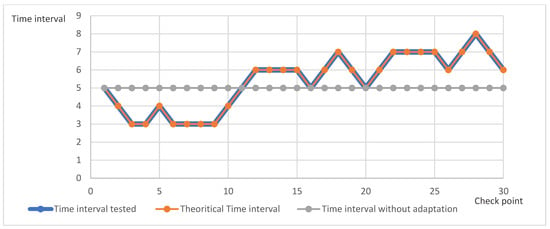

The adaptation process is executed every 10 s; thus, the number of adaptations (checkpoints) during the test is 30. Every 10 s, the game is adapted according to the subject’s emotion (positive, negative, or neutral). At each checkpoint, we save each subject’s emotion, the tested adaptation parameter, and the theoretical adaptation parameter (see Table 2).

Table 2.

Example of the data saved for each subject during the training.

The chart below (Figure 6) shows the value of the time interval when the adaptation system is applied (tested and theoretical) and when it is not applied.

Figure 6.

Time interval value at every checkpoint with and without adaptation.

We note that the time interval changes dynamically after each execution of the adaptation process, while it remains constant if it is not applied. We also note that the values we obtain in the test are the same as those calculated manually. Thus, we conclude that the function we used for adaptation is applied correctly in the game.

As stated above, the information shown in Table 3 below is recorded for each experimenter in our system. Based on the information from the 15 subjects, we create a comprehensive table that contains the number of successful adjustments as well as the accuracy, which is calculated in the following way:

Table 3.

Experimenter about system.

The table shows that all the adjustments applied by the system for each person exactly match those calculated manually. The number of adaptation operations for each person is 30, and the total number is 450. From these results, we conclude that the effectiveness of the adaptation process is 100%.
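The check described above can be sketched in Python, assuming that an adjustment counts as successful when the value applied in the game equals the manually calculated one, and that accuracy is the percentage of successful adjustments. The record layout mirrors the three fields saved at each checkpoint; the class name, function name, and placeholder values are hypothetical and are not the study’s data.

```python
from dataclasses import dataclass


@dataclass
class Checkpoint:
    emotion: str        # positive, negative, or neutral
    tested: float       # parameter value applied in the game
    theoretical: float  # parameter value calculated manually


def adaptation_accuracy(records, tol=1e-6):
    """Return (successful adjustments, accuracy in percent)."""
    if not records:
        raise ValueError("no checkpoints recorded")
    ok = sum(1 for r in records if abs(r.tested - r.theoretical) <= tol)
    return ok, 100.0 * ok / len(records)


if __name__ == "__main__":
    # Placeholder records: 15 subjects x 30 checkpoints, all matching,
    # which reproduces the totals reported in the text.
    records = [Checkpoint("neutral", 3.0, 3.0) for _ in range(15 * 30)]
    print(adaptation_accuracy(records))
```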

To assess the performance of the proposed system, we calculate its accuracy. Our system is divided into two parts: (1) the emotion recognition process (ERP), which uses the DeepFace framework and is applied at every checkpoint (10 s), and which gave 57 correct results out of 60 in the first test; and (2) the adaptation process (AP). The overall system accuracy is the average of the adaptation process accuracy, the accuracy of the DeepFace framework, reported as 97.35% [16], and the accuracy of real-time facial emotion recognition (Rt-FER).

The accuracy comes out to 97.45%. This means that our solution adapts the exer-game reliably according to the patient’s emotions.
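The reported figure can be reproduced if the overall accuracy is read as the arithmetic mean of three values given in the text: the adaptation process accuracy (100%), the DeepFace accuracy (97.35%), and the real-time recognition accuracy from the emotion test (57 correct out of 60). This reading is an assumption inferred from the numbers; the variable names are hypothetical.

```python
# Values taken from the text.
ap_accuracy = 100.0             # adaptation process: 450 of 450 adjustments
deepface_accuracy = 97.35       # DeepFace accuracy as cited in the text
rt_fer_accuracy = 100.0 * 57 / 60  # real-time FER: 57 correct of 60

# Assumed combination rule: unweighted mean of the three accuracies.
overall = (ap_accuracy + deepface_accuracy + rt_fer_accuracy) / 3

if __name__ == "__main__":
    print(round(rt_fer_accuracy, 2), round(overall, 2))
```

An unweighted mean treats the three components as equally important; it does not measure end-to-end accuracy on a single common test set.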

5. Conclusions

Developing a personalized exer-game is a very challenging problem because it requires considerable effort to enhance the performance measures of motor rehabilitation. The field of adaptive serious games is gaining attention owing to its applications in motivating people during training. This paper presented a detailed global architecture based on emotion recognition. It is divided into three main phases: (1) computing and interpretation, (2) emotion recognition, and (3) adaptation. Furthermore, a case study using facial emotion recognition and a web camera was presented. Finally, we presented an experimental setup to validate the proposed solution. We conducted two types of tests: the first tested the effectiveness of the system in tracking changes that occur in the patient, and the second involved 15 people to determine the effectiveness of adaptation based on facial emotions.

The results were very satisfying, as we reached an overall accuracy of 97.45%. However, this system is not suitable for use with a virtual reality headset, which requires other techniques for emotion recognition (ECG signal, EMG signal, speech, body movement, etc.). In addition, our system has not been tested in rehabilitation centers. These shortcomings provide opportunities for future work to evaluate the therapeutic side of the system by testing our application in real situations, introducing other methods of adaptation, and comparing them with the method presented in this paper. We plan to use other devices such as a VR headset, and other techniques for emotion recognition such as body movements, electrical body signals, and the history of the gestures made by the patient. Thus, our future work will be an extension of the global architecture presented in this paper.

Author Contributions

Conceptualization, Y.I.; methodology, Y.I.; software, Y.I.; formal analysis, S.O., S.B.; resources, Y.I., S.B., S.O.; writing—original draft preparation, Y.I., S.B.; writing—review and editing, Y.I., S.B., S.O., M.M.; supervision, S.O., S.B., A.K.; project administration, S.O., S.B., N.Z. All authors have read and agreed to the published version of the manuscript.

Funding

We would like to thank the IBISC Laboratory and the Ile de France region and CDTA research center for their funding support. This material is based upon work supported by the FEDER CESAAR-AVC project under Grant N° IF 001 1053.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tanaka, K.; Parker, J.R.; Baradoy, G.; Sheehan, D.; Holash, J.R.; Katz, L. A Comparison of Exergaming Interfaces for use in Rehabilitation Programs and Research. Loading… 2012, 6.

- Valenzuela, T.; Okubo, Y.; Woodbury, A.; Lord, S.R.; Delbaere, K. Adherence to Technology-Based Exercise Programs in Older Adults: A Systematic Review. J. Geriatr. Phys. Ther. 2018, 41, 49–61.

- Meekes, W.; Stanmore, E.K. Motivational Determinants of Exergame Participation for Older People in Assisted Living Facilities: Mixed-Methods Study. J. Med. Internet Res. 2017, 19, e238.

- Alhagbani, A.; Williams, A. Home-Based Exergames for Older Adults Balance and Falls Risk: A Systematic Review. Phys. Occup. Ther. Geriatr. 2021, 39, 1–17.

- Piech, J.; Czernicki, K. Virtual Reality Rehabilitation and Exergames—Physical and Psychological Impact on Fall Prevention Among the Elderly—A Literature Review. Appl. Sci. 2021, 11, 4098.

- Cugusi, L.; Prosperini, L.; Mura, G. Exergaming for Quality of Life in Persons Living with Chronic Diseases: A Systematic Review and Meta-Analysis. PM R 2020, 13, 756–780.

- Pacheco, T.B.F.; De Medeiros, C.S.P.; De Oliveira, V.H.B.; Vieira, E.; De Cavalcanti, F.A.C. Effectiveness of exergames for improving mobility and balance in older adults: A systematic review and meta-analysis. Syst. Rev. 2020, 9, 1–14.

- Yoo, S.; Kay, J. VRun: Running-In-Place Virtual Reality Exergame. In Proceedings of the 28th Australian Conference on Computer-Human Interaction, Association for Computing Machinery, Launceston, Australia, 29 November–2 December 2016; Volume 29, pp. 562–566.

- Mütterlein, J. The Three Pillars of Virtual Reality? Investigating the Roles of Immersion, Presence, And Interactivity. In Proceedings of the 51st Hawaii International Conference on System Sciences, Kauai, HI, USA, 3–6 January 2018.

- Kappen, D.L.; Mirza-Babaei, P.; Nacke, L.E. Older Adults’ Physical Activity and Exergames: A Systematic Review. Int. J. Hum. Comput. Interact. 2019, 35, 140–167.

- Skjæret, N.; Nawaz, A.; Morat, T.; Schoene, D.; Helbostad, J.L.; Vereijken, B. Exercise and Rehabilitation Delivered through Exergames in Older Adults: An Integrative Review of Technologies, Safety and Efficacy. Int. J. Med. Inform. 2016, 85, 1–16.

- Barry, G.; Galna, B.; Rochester, L. The Role of Exergaming in Parkinson’s Disease Rehabilitation: A Systematic Review of the Evidence. J. Neuroeng. Rehabil. 2014, 11, 1–10.

- Reis, E.; Postolache, G.; Teixeira, L.; Arriaga, P.; Lima, M.L.; Postolache, O. Exergames for Motor Rehabilitation in Older Adults: An Umbrella Review. Phys. Ther. Rev. 2019, 24, 84–99.

- Chen, Y.; Zhang, Y.; Guo, Z.; Bao, D.; Zhou, J. Comparison Between the Effects of Exergame Intervention and Traditional Physical Training on Improving Balance and Fall Prevention in Healthy Older Adults: A Systematic Review and Meta-Analysis. J. Neuroeng. Rehabil. 2021, 18, 1–17.

- Gamboa, E.; Ruiz, C.; Trujillo, M. Improving Patient Motivation Towards Physical Rehabilitation Treatments with Playtherapy Exergame. Stud. Health Technol. Inform. 2018, 249, 140–147.

- Streicher, A.; Smeddinck, J.D. Personalized and Adaptive Serious Games. In Entertainment Computing and Serious Games; Springer: Cham, Switzerland, 2016; Volume 9970, pp. 332–377.

- Ferreira, B.; Menezes, P. An Adaptive Virtual Reality-Based Serious Game for Therapeutic Rehabilitation. Int. J. Online Biomed. Eng. 2020, 16, 63–71.

- Palaniappan, S.M.; Suresh, S.; Haddad, J.M.; Duerstock, B.S. Adaptive Virtual Reality Exergame for Individualized Rehabilitation for Persons with Spinal Cord Injury. In Computer Vision—ECCV 2020 Workshops; Bartoli, A., Fusiello, A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 518–535.

- Cristian, G.-P.; David, V.; Ana-Isabel, C.-S.; Rodríguez-Hernández Marta, L.M.-C.J.; Santiago, S.-S. A Platform Based on Personalized Exergames and Natural User Interfaces to Promote Remote Physical Activity and Improve Healthy Aging in Elderly People. Sustainability 2021, 13, 7578.

- Vallejo, D.; Gómez-Portes, C.; Albusac, J.; Glez-Morcillo, C.; Castro-Schez, J.J. Personalized Exergames Language: A Novel Approach to The Automatic Generation of Personalized Exergames for Stroke Patients. Appl. Sci. 2020, 10, 7378.

- Cristian, G.-P.; Lacave Carmen, I.M.A.; David, V. Home Rehabilitation Based on Gamification and Serious Games for Young People: A Systematic Mapping Study. Appl. Sci. 2020, 10, 8849.

- Afyouni, I.; Qamar, A.M.; Hussain, S.O.; Ur Rehman, F.; Sadiq, B.; Murad, A. Motion-Based Serious Games for Hand Assistive Rehabilitation. In Proceedings of the 22nd International Conference on Intelligent User Interfaces Companion, Limassol, Cyprus, 13–16 March 2017; pp. 133–137.

- Varela-Aldás, J.; Palacios-Navarro, G.; Amariglio, R.; García-Magariño, I. Head-Mounted Display-Based Application for Cognitive Training. Sensors 2020, 20, 6552.

- Afyouni, I.; Murad, A.; Einea, A. Adaptive Rehabilitation Bots in Serious Games. Sensors 2020, 20, 7037.

- Guimarães, V.; Oliveira, E.; Carvalho, A.; Cardoso, N.; Emerich, J.; Dumoulin, C.; Swinnen, N.; De Jong, J.; de Bruin, E.D. An Exergame Solution for Personalized Multicomponent Training in Older Adults. Appl. Sci. 2021, 11, 7986.

- Paraskevopoulos, I.; Tsekleves, E. Use of Gaming Sensors and Customised Exergames for Parkinson’s Disease Rehabilitation. In Proceedings of the 2013 5th International Conference on Games and Virtual Worlds for Serious Applications, Poole, UK, 11–13 September 2013; pp. 1–5.

- Li, X.; Han, T.; Zhang, E.; Shao, W.; Li, L.; Wu, C. Memoride: An Exergame Combined with Working Memory Training to Motivate Elderly with Mild Cognitive Impairment to Actively Participate in Rehabilitation. In Human Aspects of IT for the Aged Population. Supporting Everyday Life Activities; Gao, Q., Zhou, J., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 90–105.

- Piper, W.E.; Ogrodniczuk, J.S.; Joyce, A.S.; McCallum, M.; Rosie, J.S. Relationships Among Affect, Work, And Outcome in Group Therapy for Patients with Complicated Grief. Am. J. Psychother. 2002, 56, 347–361.

- Mergenthaler, E. Shifts from Negative to Positive Emotional Tone: Facilitators of Therapeutic Change. In Proceedings of the Presentation at the 34th Annual Meeting of The Society for Psychotherapy Research, Weimar, Germany, 25–29 June 2003.

- Kritikos, J.; Caravas, P.; Tzannetos, G.; Douloudi, M.; Koutsouris, D. Emotional Stimulation During Motor Exercise: An Integration to The Holistic Rehabilitation Framework. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019.

- Ekman, P. Universal Facial Expressions of Emotion. Calif. Ment. Health 1970, 8, 151–158.

- Russell, J.A.; Mehrabian, A. Evidence for A Three-Factor Theory of Emotions. J. Res. Personal. 1977, 11, 273–294.

- Toisoul, A.; Kossaifi, J.; Bulat, A.; Tzimiropoulos, G.; Pantic, M. Estimation of continuous valence and arousal levels from faces in naturalistic conditions. Nat. Mach. Intell. 2021, 3, 42–50.

- Bălan, O.; Moise, G.; Petrescu, L.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Symmetry 2020, 12, 21.

- Hussain, S.; Alzoubi, O.; Calvo, R.; D’Mello, S. Affect Detection from Multichannel Physiology During Learning Sessions with AutoTutor. In International Conference on Artificial Intelligence in Education; Biswas, G., Bull, S., Kay, J., Mitrovic, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 131–138.

- Jin, X.; Wang, Z. An Emotion Space Model for Recognition of Emotions in Spoken Chinese. In Affective Computing and Intelligent Interaction; Tao, J., Tan, T., Picard, R.W., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3784, pp. 397–402.

- Ekman, P.; Friesen, W.V. Facial Action Coding System; American Psychological Association: Washington, DC, USA, 1978.

- Dzedzickis, A.; Kaklauskas, A.; Bucinskas, V. Human Emotion Recognition: Review of Sensors and Methods. Sensors 2020, 20, 592.

- Benrachou, D.E.; Masmoudi, M.; Djekoune, O.; Zenati, N.; Ousmer, M. Avatar-Facilitated Therapy and Virtual Reality: Next-Generation of Functional Rehabilitation Methods. In Proceedings of the 2020 1st International Conference on Communications, Control Systems and Signal Processing (CCSSP), El Oued, Algeria, 16–17 May 2020; pp. 298–304.

- Trombetta, M.; Henrique, P.P.B.; Brum, M.R.; Colussi, E.L.; De Marchi, A.C.B.; Rieder, R. Motion Rehab AVE 3D: A VR-Based Exergame for Post-Stroke Rehabilitation. Comput. Methods Programs Biomed. 2017, 151, 15–20.

- Miclaus, R.; Roman, N.; Caloian, S.; Mitoiu, B.; Suciu, O.; Onofrei, R.R.; Pavel, E.; Neculau, A. Non-Immersive Virtual Reality for Post-Stroke Upper Extremity Rehabilitation: A Small Cohort Randomized Trial. Brain Sci. 2020, 10, 655.

- Kim, W.-S.; Cho, S.; Ku, J.; Kim, Y.; Lee, K.; Hwang, H.-J.; Paik, N.-J. Clinical Application of Virtual Reality for Upper Limb Motor Rehabilitation in Stroke: Review of Technologies and Clinical Evidence. J. Clin. Med. 2020, 9, 3369.

- Bachmann, D.; Weichert, F.; Rinkenauer, G. Review of Three-Dimensional Human-Computer Interaction with Focus on the Leap Motion Controller. Sensors 2018, 18, 2194.

- Pereira, M.F.; Prahm, C.; Kolbenschlag, J.; Oliveira, E.; Rodrigues, N.F. A Virtual Reality Serious Game for Hand Rehabilitation Therapy. In Proceedings of the 2020 IEEE 8th International Conference on Serious Games and Applications for Health (SeGAH), Vancouver, BC, Canada, 12–14 August 2020; pp. 1–7.

- Nadia, N.; Sergio, O.-E.; Miguel, C. An sEMG-Controlled 3D Game for Rehabilitation Therapies: Real-Time Time Hand Gesture. Sensors 2020, 20, 6451.

- Dharia, S.; Eirinaki, M.; Jain, V.; Patel, J.; Varlamis, I.; Vora, J.; Yamauchi, R. Social Recommendations for Personalized Fitness Assistance. Pers. Ubiquitous Comput. 2018, 22, 245–257.

- Hagen, K.; Chorianopoulos, K.; Wang, A.I.; Jaccheri, L.; Weie, S. Gameplay as Exercise. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; pp. 1872–1878.

- Bernava, G.; Nucita, A.; Iannizzotto, G.; Caprì, T.; Fabio, R.A. Proteo: A Framework for Serious Games in Tele-Rehabilitation. Appl. Sci. 2021, 11, 5935.

- González-González, C.S.; Toledo-Delgado, P.A.; Muñoz-Cruz, V.; Torres-Carrion, P.V. Serious Games for Rehabilitation: Gestural Interaction in Personalized Gamified Exercises Through a Recommender System. J. Biomed. Inform. 2019, 97, 103266.

- Zhao, Z.; Arya, A.; Orji, R.; Chan, G. Effects of A Personalized Fitness Recommender System Using Gamification and Continuous Player Modeling: System Design and Long-Term Validation Study. JMIR Serious Games 2020, 8, e19968.

- Tadayon, R.; Amresh, A.; McDaniel, T.; Panchanathan, S. Real-Time Stealth Intervention for Motor Learning Using Player Flow-State. In Proceedings of the 2018 IEEE 6th International Conference on Serious Games and Applications for Health (SeGAH), Vienna, Austria, 16–18 May 2018; pp. 1–8.

- Verma, V.; Rheem, H.; Amresh, A.; Craig, S.D.; Bansal, A. Predicting Real-Time Affective States by Modeling Facial Emotions Captured During Educational Video Game Play. In Proceedings of the GALA 2020: Games and Learning Alliance, Laval, France, 9–10 December 2020; Springer: Cham, Switzerland; pp. 447–452.

- Taigman, Y.; Yang, M.; Ranzato, M.; Wolf, L. DeepFace: Closing the Gap to Human-Level Performance in Face Verification. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1701–1708.

- Mood Me. Available online: https://www.Mood-Me.Com/Products/Unity-Face-Ai-Ar-Sdk/ (accessed on 10 November 2021).

- Unity-Technologies. Available online: https://Github.Com/Unity-Technologies/Barracuda-Release (accessed on 10 November 2021).

- Ultraleap. Available online: https://www.Ultraleap.Com/Product/Leap-Motion-Controller/ (accessed on 10 November 2021).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).