Understanding Coding Behavior: An Incremental Process Mining Approach

Abstract

1. Introduction

- Presenting the application of incremental process mining techniques that could prospectively be used online to give programmers immediate feedback on wrong behaviors and to suggest corrective actions;

- Evaluating a specific incremental process mining system to assess its performance and scalability, and to understand whether it is a suitable candidate for supporting the previous goal;

- Studying specific cases of application of the proposed approach and system.

2. Related Work

3. Background

3.1. Process Mining

3.2. WoMan Framework

- Conformance Checking: to check whether a new process execution is compliant with a given process model;

- Workflow Discovery: to learn a process model starting from descriptions of specific executions;

- Activity Prediction: to guess the next activities that are likely to happen at a given point of a process execution, according to a given process model;

- Process Prediction: to guess the process that is likely to be enacted in a given (even partial) execution, among those specified in a given set of models;

- Simulation: to generate possible cases that are compliant with a given model, also considering the likelihood of the different tasks and of their allowed combinations.
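
To make the incremental flavour of these functions concrete, the following is a minimal sketch and not WoMan's actual algorithm or formalism. It swaps in a plain directly-follows count table that is refined one case at a time and can be queried at any moment for conformance and next-activity prediction. The names `IncrementalModel`, `learn`, `conforms`, `predict_next` and the `START` marker are all hypothetical.

```python
from collections import Counter, defaultdict

START = "<start>"  # hypothetical marker for the beginning of a case


class IncrementalModel:
    """Toy directly-follows model (an assumption, not the WoMan formalism)."""

    def __init__(self):
        # follows[a][b] counts how often task b directly followed task a.
        self.follows = defaultdict(Counter)

    def learn(self, trace):
        """Workflow discovery: refine the model with one more completed case."""
        previous = START
        for task in trace:
            self.follows[previous][task] += 1
            previous = task

    def conforms(self, trace):
        """Conformance checking: every step must already be in the model."""
        previous = START
        for task in trace:
            if self.follows.get(previous, {}).get(task, 0) == 0:
                return False
            previous = task
        return True

    def predict_next(self, partial_trace):
        """Activity prediction: known successors, most frequent first."""
        last = partial_trace[-1] if partial_trace else START
        successors = self.follows.get(last, Counter())
        return [task for task, _ in successors.most_common()]
```

Because `learn` only increments counters, the model never has to be rebuilt from the full log, which is the property that would make online use plausible; a real system would additionally track concurrency, timing and context.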

Each log entry is a 7-tuple made up of the following items:

- T is the timestamp of the event; timestamps must be unique for each log entry, and in principle should be directly proportional to the time passed from the start of the process execution, so as to allow the system to draw statistics about the timing and duration of the activities.

- E is the type of event; 6 types of events are handled by the system:

  - begin_process specifies that a process execution has been started;

  - begin_activity specifies that a task execution (i.e., an ‘activity’) has been started;

  - end_activity specifies that an activity has been completed;

  - atomic_activity specifies that an activity whose time span is not significant has been executed;

  - context_description specifies that contextual information is being provided, other than activity execution;

  - end_process specifies that a process execution has been completed.

- P is the identifier of the process the event refers to; each different process handled by the system must have a different identifier that will be used by the system to name the corresponding model.

- C is the identifier of the process execution (the ‘case’) the event refers to; each different case for a given process handled by the system must have a different identifier that will be used by the system to denote the corresponding trace and information.

- A depends on the event type. If E ∈ {begin_activity, end_activity}, it is the name of a task relevant to process P. If E = context_description, it is a set of first-order logic atoms built on domain-specific predicates that describe relevant contextual information associated with the timestamp, to be interpreted as the logical conjunction (AND) of the atoms.

- N depends on the event type. If E ∈ {begin_activity, end_activity}, it is a progressive integer distinguishing the activities in the same case associated with the same task; this is relevant in the case of many concurrent executions of the same task, to properly match their begin_activity and end_activity events. If E = begin_process, this parameter can be used to specify a noise tolerance threshold, such that the process model components with frequency less than N are considered as noise and ignored during compliance checks.

- R is the role of the agent that is carrying out the activity or an identifier of the specific agent (the ‘resource’); the system can be provided with the associations of resources to roles and with a taxonomy of roles, so as to allow generalizations over them. If agents are not relevant, or unknown, for the process model, this information can be dropped, and thus log items are 6-tuples rather than 7-tuples.
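
The entry format described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the class and field names (`LogEntry`, `occurrence`, `resource`, and so on), the use of integers for timestamps, and the helper `has_unique_timestamps` are hypothetical and say nothing about how WoMan actually stores or parses its log.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Union


class EventType(Enum):
    """The six event types named in the text."""
    BEGIN_PROCESS = "begin_process"
    BEGIN_ACTIVITY = "begin_activity"
    END_ACTIVITY = "end_activity"
    ATOMIC_ACTIVITY = "atomic_activity"
    CONTEXT_DESCRIPTION = "context_description"
    END_PROCESS = "end_process"


@dataclass(frozen=True)
class LogEntry:
    timestamp: int                                   # T: unique per entry
    event: EventType                                 # E
    process: str                                     # P
    case: str                                        # C
    activity: Optional[str] = None                   # A: task name
    occurrence: Optional[Union[int, float]] = None   # N: counter or threshold
    resource: Optional[str] = None                   # R: may be dropped

    def as_tuple(self):
        """Return a 7-tuple, or a 6-tuple when no resource is recorded."""
        fields = (self.timestamp, self.event.value, self.process,
                  self.case, self.activity, self.occurrence)
        return fields if self.resource is None else fields + (self.resource,)


def has_unique_timestamps(entries):
    """Check the requirement that no two log entries share a timestamp."""
    stamps = [entry.timestamp for entry in entries]
    return len(stamps) == len(set(stamps))
```

Dropping the last field when `resource` is `None` mirrors the remark that entries become 6-tuples when agents are irrelevant or unknown.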

- ok: the event is compliant with the process model;

- warning: the event is not compliant with the process model, for one of several reasons:

  - task: The event has started the execution of a task that is not accounted for in the current model;

  - transition: The event has started an activity for a task that is recognized by the model, but not applicable in the current status of the case;

  - loop: A task or transition was run more times than expected;

  - time: The event started or terminated a task, or a transition, outside the allowed period (with some tolerance) specified by the model;

  - step: The event started or terminated a task, or a transition, outside the allowed step interval required by the model (each activity in the case increments the number of steps carried out thus far);

  - agent: The activity was carried out by an agent whose role is not allowed by the current model for that task in general, or for that task in the specific transition that occurred;

  - pre-condition or post-condition: Various kinds of pre- or post-conditions (learned by the system from the structure or context of past process executions) for carrying out a task, or a transition, or a task in a specific transition, are not satisfied.

  Each warning carries a different degree of severity, expressed as a numeric weight. In particular, some warnings encompass others (e.g., a new task also implies a new transition), and thus have a greater severity degree than the others.

- error: the event cannot be processed, due to one of the following reasons:

  - Incorrect syntax of the entry;

  - Unknown process or case;

  - Termination of an activity that was never started;

  - Completion of a case while activities are still running.
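
The three outcomes listed above can be illustrated with a deliberately reduced checker. It is a sketch under strong assumptions, not WoMan's compliance procedure: entries are shortened to hypothetical `(event, case, task, n)` tuples, only the ‘task’ warning is modelled (against a given set of known task names), and the function name `check_entry` is invented for this example.

```python
def check_entry(state, known_tasks, entry):
    """Classify one (event, case, task, n) entry as 'ok', 'warning' or 'error'.

    `state` maps each open case id to the set of running (task, n) pairs and
    is updated in place, so entries can be checked one at a time as they arrive.
    """
    event, case, task, n = entry
    if event == "begin_process":
        state[case] = set()
        return "ok"
    if case not in state:
        return "error"                    # unknown case
    running = state[case]
    if event == "begin_activity":
        running.add((task, n))
        # 'task' warning: the task is not accounted for in the model.
        return "ok" if task in known_tasks else "warning"
    if event == "atomic_activity":
        return "ok" if task in known_tasks else "warning"
    if event == "context_description":
        return "ok"
    if event == "end_activity":
        if (task, n) not in running:
            return "error"                # terminated but never started
        running.remove((task, n))
        return "ok"
    if event == "end_process":
        if running:
            return "error"                # activities are still running
        del state[case]
        return "ok"
    return "error"                        # unrecognised event type
```

A warning still updates the state, since the event is processed even though it deviates from the model, whereas an error leaves the state untouched.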

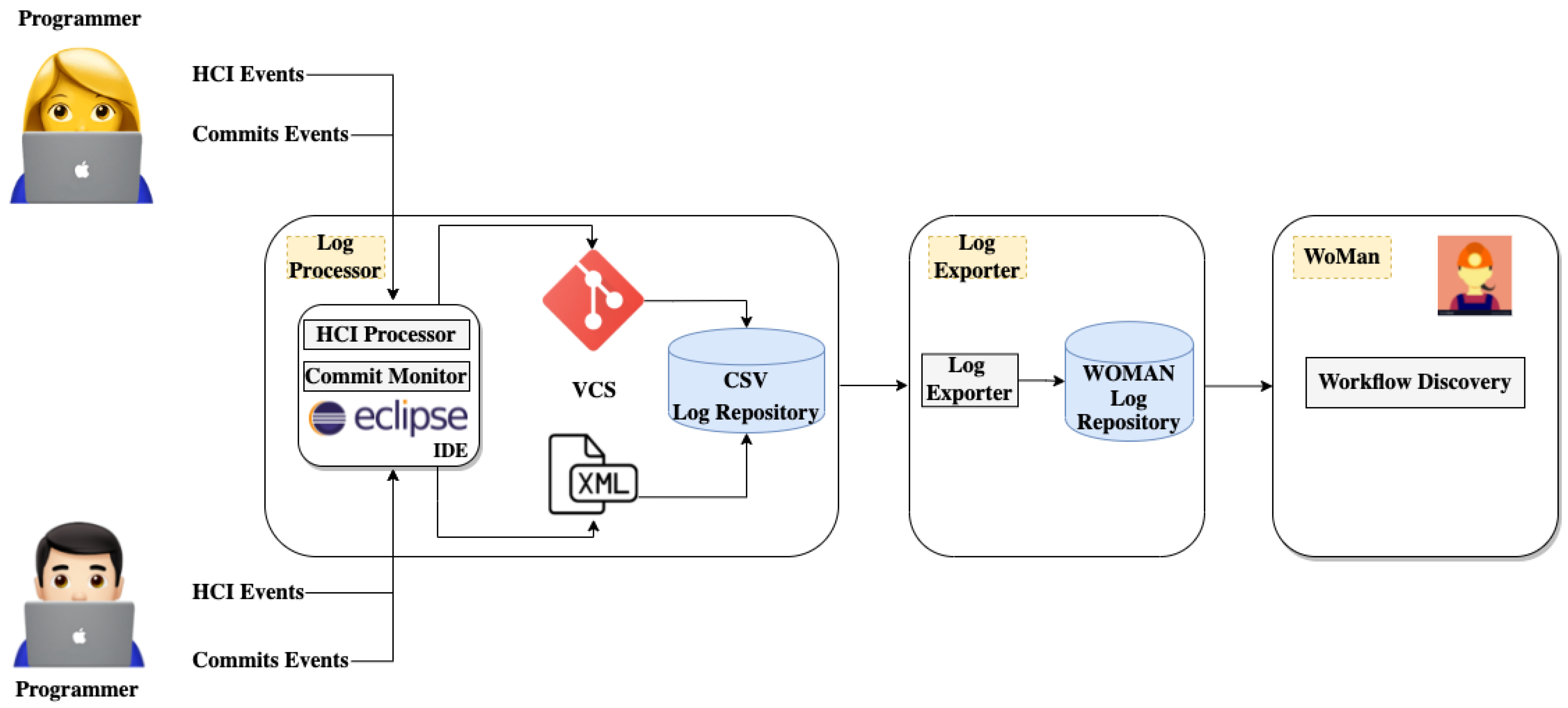

4. Proposed Approach

4.1. Log Processor

4.2. Log Exporter

Each entry produced by the Log Exporter is made up of the following items:

- T is the timestamp of the development session.

- E is the type of event; 5 types of events are handled by the Log Exporter:

  - begin_process specifies that a development session has been started;

  - begin_activity specifies that a command execution (i.e., an ‘activity’) has been started;

  - end_activity specifies that an activity has been completed;

  - atomic_activity specifies that an activity whose time span is not significant has been executed;

  - end_process specifies that a development session has been completed.

- P is the identifier of the programmer the event refers to; each different programmer handled by the system must have a different identifier that will be used by the system to name the corresponding model.

- C is the identifier of the development session (the ‘case’) the event refers to; each different case for a given programmer handled by the system must have a different identifier, which is composed of the identifier of the programmer and the initial timestamp of the session.

- A is the name of a command relevant to model P.

- N is a progressive integer distinguishing the commands in the same development session associated with the same main command; this is relevant in the case of many concurrent executions of the same command, to properly match their begin_activity and end_activity events.
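
The mapping from a development session to such entries can be sketched as follows. This is an illustrative assumption rather than the Log Exporter's code: the function name `export_session`, the `_` separator in the case identifier, the choice to emit every command as an `atomic_activity`, and the use of `None` for A and N on process-level events are all hypothetical.

```python
from collections import Counter


def export_session(programmer, start, commands, end):
    """Turn one development session into a list of (T, E, P, C, A, N) entries.

    `commands` is a list of (timestamp, command_name) pairs in temporal order;
    `start` and `end` are the timestamps that open and close the session.
    """
    # Case id: programmer identifier combined with the initial timestamp.
    case = f"{programmer}_{start}"
    seen = Counter()  # progressive counter per command name (the N field)
    entries = [(start, "begin_process", programmer, case, None, None)]
    for timestamp, command in commands:
        seen[command] += 1
        entries.append(
            (timestamp, "atomic_activity", programmer, case, command, seen[command])
        )
    entries.append((end, "end_process", programmer, case, None, None))
    return entries
```

For example, `export_session("A", 100, [(101, "edit"), (102, "save"), (103, "edit")], 104)` yields five entries, and the second `edit` command receives the progressive number 2.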

4.3. WoMan Miner Tool

5. Case Study and Lesson Learned

5.1. Evaluation Setting

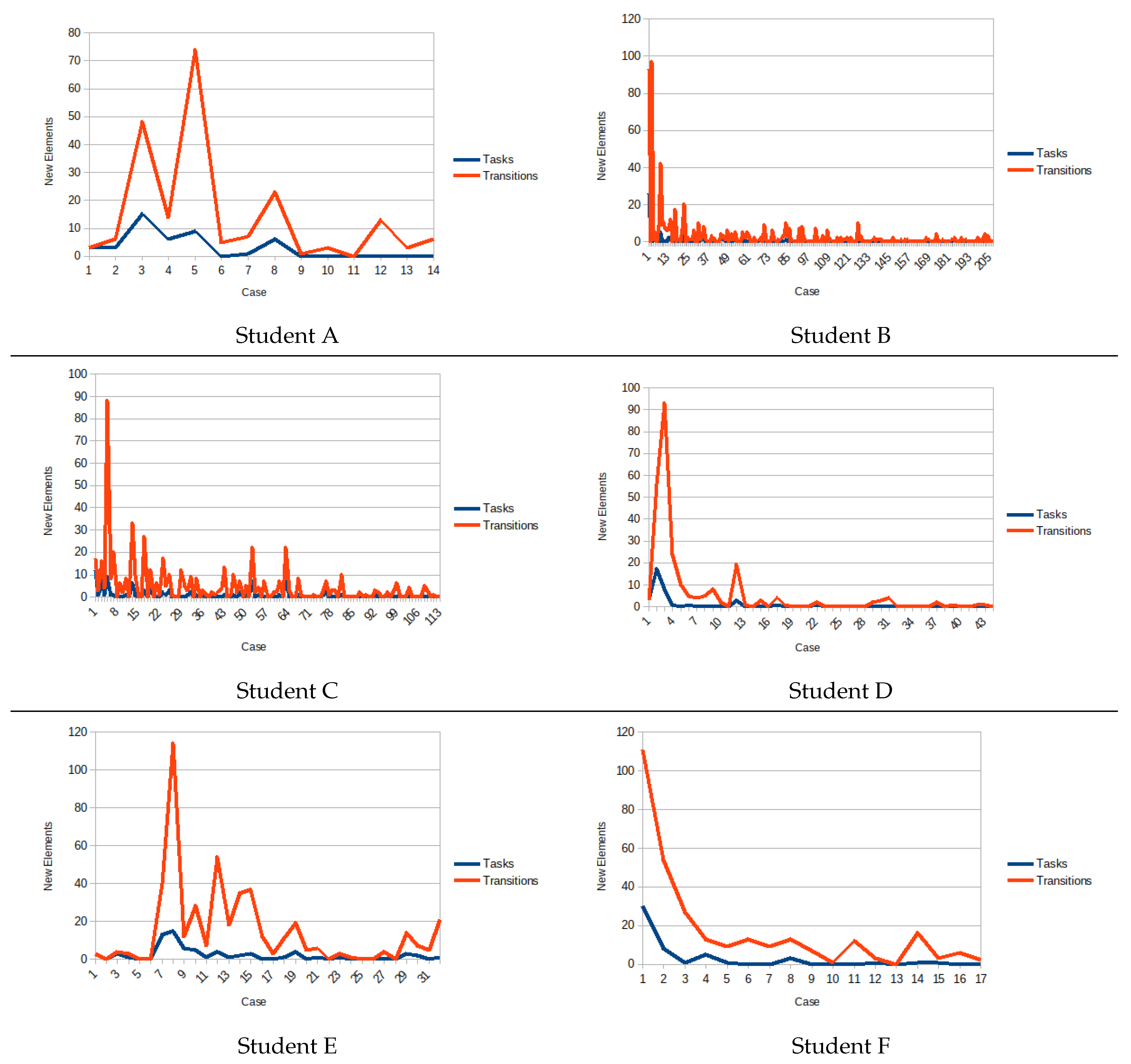

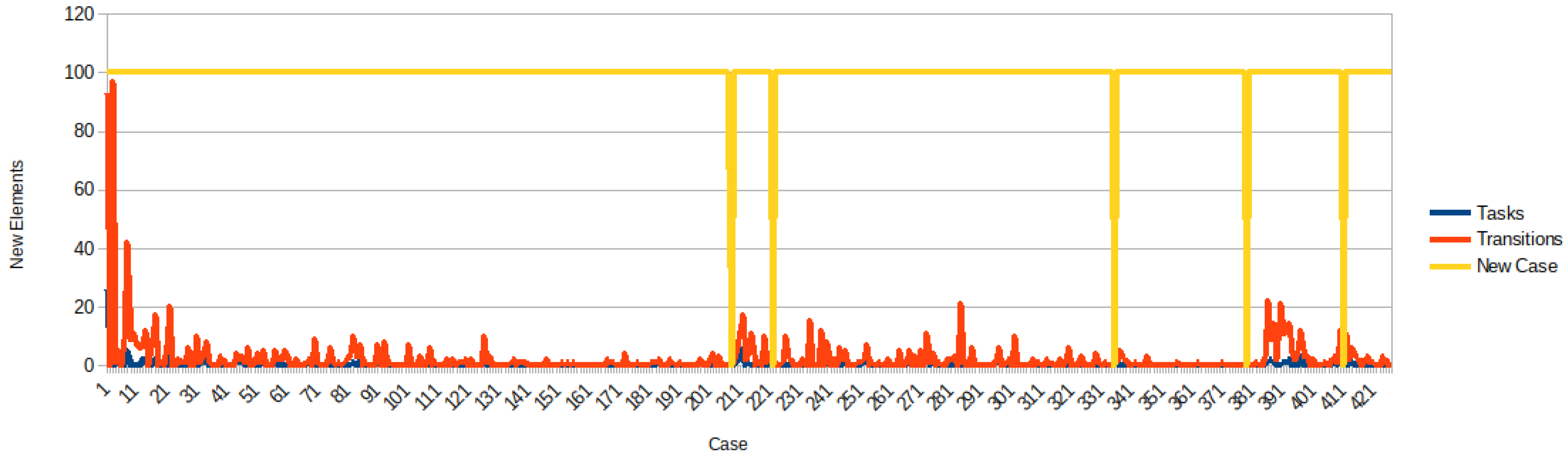

5.2. Discussion about the Coding Behavior

5.3. Discussion about Student’s Misconception

5.4. Programmer Learning Curve

6. Threats to Validity

6.1. External Validity

6.2. Internal Validity

6.3. Construct Validity

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| User | #cases | #activities | #activ./case | runtime | ms/activ. | #tasks | #transit. |
|---|---|---|---|---|---|---|---|
| A | 14 | 4078 | 291.29 | 51 s | 13 | 41 | 206 |
| B | 207 | 128,389 | 620.24 | 2 h 16 min 25 s | 64 | 71 | 580 |
| C | 113 | 60,725 | 537.39 | 44 min | 43 | 85 | 521 |
| D | 44 | 30,413 | 691.20 | 47 min 5 s | 93 | 33 | 254 |
| E | 32 | 20,881 | 652.53 | 24 min 58 s | 72 | 68 | 466 |
| F | 17 | 10,977 | 645.71 | 4 min 39 s | 25 | 49 | 299 |
| Average | 71.17 | 42,577.17 | 573.06 | 43 min | 52 | 57.83 | 387.67 |
| Total | 427 | 255,463 | - | 4 h 17 min 58 s | - | - | - |
| Overall | 427 | 255,463 | 598.27 | 4 h 21 min 41 s | 61 | 132 | 1078 |
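
The derived columns of the table above (activities per case and milliseconds per activity) follow from the raw counts and runtimes. The short sketch below recomputes them as a sanity check; the helper names are hypothetical, and the runtimes are the tabulated values converted to seconds by hand.

```python
# (cases, activities, runtime in seconds) per user, copied from the table.
ROWS = {
    "A": (14, 4078, 51),
    "B": (207, 128389, 2 * 3600 + 16 * 60 + 25),
    "C": (113, 60725, 44 * 60),
    "D": (44, 30413, 47 * 60 + 5),
    "E": (32, 20881, 24 * 60 + 58),
    "F": (17, 10977, 4 * 60 + 39),
}


def activities_per_case(cases, activities):
    """Average number of activities in one case, to two decimals."""
    return round(activities / cases, 2)


def ms_per_activity(activities, runtime_s):
    """Average processing time per activity, in whole milliseconds."""
    return round(1000 * runtime_s / activities)


def format_runtime(seconds):
    """Render seconds as 'H h M min S s' (all three parts always shown)."""
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours} h {minutes} min {secs} s"


total_runtime = sum(runtime for _, _, runtime in ROWS.values())
```

With these inputs, the per-user ratios match the table, and the summed runtime corresponds to the 4 h 17 min 58 s reported in the Total row.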

| User | Exam score | #insertions | #deletions | #insertions-deletions |
|---|---|---|---|---|
| A | low | 1761 | 130 | 1631 |
| B | high | 8014 | 4340 | 3674 |
| C | high | 4536 | 1131 | 3405 |
| D | medium | 4089 | 850 | 3239 |
| E | high | 5434 | 1448 | 3986 |
| F | medium | 4380 | 407 | 3973 |

| Measure | Min | Q1 | Average | Q3 | Max | IQR | Lower fence | Upper fence |
|---|---|---|---|---|---|---|---|---|
| #activ./case | 291.29 | 558.10 | 573.06 | 650.82 | 691.20 | 92.72 | 419.02 | 789.90 |
| #insertions-deletions | 1631 | 3280.5 | 3318 | 3898.25 | 3986 | 617.75 | 2353.87 | 4824.87 |
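
The lower and upper bounds in the table above are numerically consistent with Tukey's rule, Q1 − 1.5·IQR and Q3 + 1.5·IQR, with quartiles obtained by linear interpolation over the six per-user values. The sketch below reproduces them on that assumption; the source does not state which quantile method was used, and the function names are hypothetical.

```python
from statistics import quantiles


def tukey_fences(values, k=1.5):
    """Return (lower, upper) fences: Q1 - k*IQR and Q3 + k*IQR."""
    # method="inclusive" interpolates linearly between the observed values.
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def outliers(named_values, k=1.5):
    """Names whose value falls outside the Tukey fences, sorted."""
    low, high = tukey_fences(list(named_values.values()), k)
    return sorted(name for name, v in named_values.items() if v < low or v > high)


# Per-user values copied from the two preceding tables.
ACTIVITIES_PER_CASE = {"A": 291.29, "B": 620.24, "C": 537.39,
                       "D": 691.20, "E": 652.53, "F": 645.71}
NET_INSERTIONS = {"A": 1631, "B": 3674, "C": 3405,
                  "D": 3239, "E": 3986, "F": 3973}
```

Under this rule, user A is the only one falling below the lower bound for both measures, which is the kind of deviation the fences are meant to expose.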

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ardimento, P.; Bernardi, M.L.; Cimitile, M.; Redavid, D.; Ferilli, S. Understanding Coding Behavior: An Incremental Process Mining Approach. Electronics 2022, 11, 389. https://doi.org/10.3390/electronics11030389
