Abstract

With traffic congestion on the rise, accurately counting vehicles in surveillance images is becoming increasingly difficult. Current counting methods based on density maps have improved tremendously thanks to the success of convolutional neural networks. However, because dense images often contain heavily overlapping vehicles and complex, large-scale variations, neither traditional CNN methods nor transformer methods with fixed-size self-attention can count precisely. To address these issues, in this paper, we propose a novel vehicle counting approach, the synergism attention network (SAN), which unifies the benefits of transformers and convolutions to perform dense counting effectively. Specifically, a pyramid framework is designed to adaptively exploit multi-level features for counting. In addition, a synergism transformer (SyT) block is customized, in which a dual-transformer structure captures both global attention and location-aware information. Finally, a Location Attention Cumulation (LAC) module is presented to identify more efficient and meaningful weighting regions. Extensive experiments demonstrate that our model is competitive and achieves new state-of-the-art performance on the TRANCOS dataset.

1. Introduction

Nowadays, the intelligent transportation system (ITS) has become an indispensable part of daily life as vehicle ownership grows and the main traffic lanes become increasingly crowded. Among the tasks an ITS must address today, mitigating road traffic congestion is the most pressing, especially in urban regions. Traffic congestion often lowers citizens' productivity and increases pollution through gas emissions. Therefore, estimating the number of vehicles in complex scenes with heavy overlap plays a key role in preventing more severe congestion in the future. It is also a prerequisite for guiding traffic flow optimization, such as dynamically planning routes to avoid congested roads [1,2], automatically controlling traffic lights, and designing new transport corridors, to name just a few.

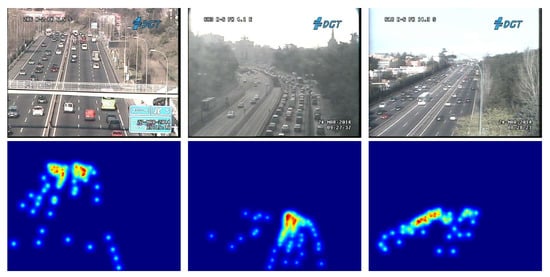

To count effectively, many traditional techniques first extract a suitable region and then count the objects in it using handcrafted features such as HOG [3] and SIFT. However, these handcrafted features are susceptible to external conditions. Moreover, traffic surveillance devices equipped with these techniques are mostly deployed outdoors in diverse geographical locations and suffer severely from challenges [4,5,6] such as large perspective gaps and differences in vehicle scale. As shown in Figure 1, dense traffic images contain multiple occlusions, such as pedestrian bridges, street lights, and road signs, and vehicles near the horizon are difficult to observe because of their tiny scale. For these reasons, vehicle counting based solely on traditional techniques performs poorly. To overcome these shortcomings, researchers have in recent years turned to convolutional neural networks (CNNs) [7,8,9], which excel in computer vision owing to their powerful ability to learn hierarchical representations.

Figure 1.

Examples of dense traffic images from the TRANCOS [10] dataset and their corresponding density maps.

Approaches that model vehicle counting as density map regression have been shown to achieve state-of-the-art results [11], and this formulation yields estimated density maps even for occluded images. Many estimation networks [12,13] use a convolutional structure [14] as the backbone to learn the mapping between the original image and its density map, from which the number of vehicles is then obtained. For instance, Sooksatra et al. [15] designed a multi-level estimation network for traffic images with complicated scales. More recently, Wan et al. [16] proposed an adaptive density map generator that avoids the errors caused by fixed Gaussian kernels and achieves better counting performance. These CNN-based methods have achieved remarkable improvements in counting, yet they remain constrained by the handcrafted design of the estimation network [17].

Meanwhile, transformer models rose to prominence in natural language processing [18] owing to their global self-attention mechanism. Recently, transformers have been successfully introduced into computer vision through vision transformers [19,20]. Although transformers can match or surpass CNNs in both upstream and downstream vision tasks [21], their application to counting is still in its infancy. In pioneering work, Wang et al. [22] proposed an estimation network in which a CNN and a transformer are connected in series. Furthermore, by considering global information to enhance feature association, Lin et al. [23] designed a robust local attention mechanism that explores the mapping between high-density regions and addresses the critical issue of large-scale variation. The strong results of these hybrid CNN-transformer models demonstrate their merit. However, these approaches use fixed-size attention and encode single-scale features directly as tokens, and single-scale tokens struggle to cope with complex scale variations.

Inspired by these insights, we propose a novel synergism attention network (SAN) that combines the strengths of CNNs and transformers in a new way. First, to address the aforementioned weakness under large-scale variations, we design an SAN stage block that adopts a pyramid structure to produce multi-scale feature maps. Unlike previous models that generate fixed-size CNN outputs, the SAN stage block produces feature maps with different receptive fields step by step. Consequently, the structure provides a reliable way to adapt to the dramatic scale variations in traffic images and achieves better counting performance.

Second, we design a synergism transformer (SyT) module whose backbone consists of two branches: a multi-head global branch and a multi-head mask-weighted branch. The two branches focus on different, complementary aspects: the former uses a multi-head attention mechanism to capture global information, while the latter focuses on the relevance of local location regions. On this basis, we realize a location attention mechanism with the Location Attention Cumulation (LAC) module, which concentrates on the most relevant information within a single-scale range. In addition, a penalty on location attention deviation is imposed to improve training. Finally, our algorithm achieves state-of-the-art performance in both qualitative and quantitative experiments. Our main contributions are as follows:

- A novel network architecture, the synergism attention network, is proposed; it adopts a pyramid structure to handle large-scale variations and effectively unifies the merits of CNNs and transformers;

- A synergism transformer (SyT) module is devised to incorporate global information and location region attention, improving the ability to allocate appropriate weights to salient targets;

- These modules are carefully integrated, and results on the TRANCOS dataset show that the resulting model achieves new state-of-the-art performance both qualitatively and quantitatively.

2. Methodology

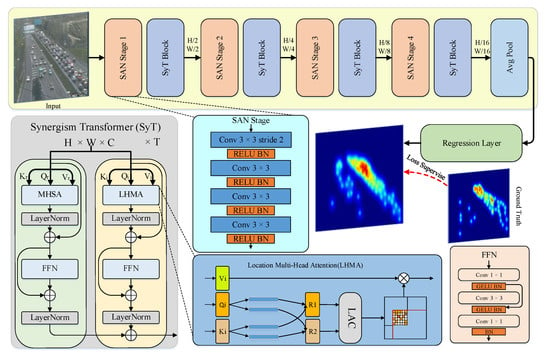

In this section, we elaborate on the proposed synergism attention network, which includes two main components: the synergism transformer and the SAN stage. A detailed network diagram is shown in Figure 2. Section 2.1 describes the SAN framework, Section 2.2 the synergism transformer, and Section 2.3 the loss function.

Figure 2.

Overview of our synergism attention network (SAN).

2.1. Framework Overview

An overview of the framework is given in Figure 2. The dense traffic image is first fed into the SAN stage block. For each input image $X$, the SAN stage block applies a series of convolution kernels to extract feature maps and uses a convolution with a stride of two, $G_{\mathrm{stride}=2}$, to downsample the image and obtain low-resolution features. The formula for this is given as follows:

where G(·) is the mapping function implemented by three convolution layers and μ(·) is the activation function.
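One SAN stage can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: we assume 3 × 3 kernels with padding 1, place the stride-2 convolution in the first of the three layers of G(·), and take μ(·) to be ReLU applied after each layer. The names `conv2d` and `san_stage` are hypothetical.

```python
import numpy as np


def conv2d(x: np.ndarray, w: np.ndarray, stride: int = 1, pad: int = 1) -> np.ndarray:
    """Naive multi-channel 2D convolution (cross-correlation, as in CNN layers).

    x: input of shape (C_in, H, W); w: kernels of shape (C_out, C_in, k, k).
    """
    c_out, _, k, _ = w.shape
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding on H and W
    h_out = (xp.shape[1] - k) // stride + 1
    w_out = (xp.shape[2] - k) // stride + 1
    out = np.zeros((c_out, h_out, w_out))
    for i in range(h_out):
        for j in range(w_out):
            patch = xp[:, i * stride:i * stride + k, j * stride:j * stride + k]
            # Contract over input channels and the k x k window for every output channel.
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2]))
    return out


def relu(x: np.ndarray) -> np.ndarray:
    return np.maximum(x, 0.0)


def san_stage(x: np.ndarray, weights) -> np.ndarray:
    """Hypothetical SAN stage: G(.) as three 3x3 conv layers (the first with stride 2),
    with the activation mu(.) assumed to be ReLU after each layer."""
    x = relu(conv2d(x, weights[0], stride=2))  # halves the spatial resolution
    x = relu(conv2d(x, weights[1]))
    x = relu(conv2d(x, weights[2]))
    return x


rng = np.random.default_rng(0)
image = rng.standard_normal((3, 32, 32))  # toy 3-channel input
weights = [
    rng.standard_normal((16, 3, 3, 3)),
    rng.standard_normal((16, 16, 3, 3)),
    rng.standard_normal((16, 16, 3, 3)),
]
features = san_stage(image, weights)
print(features.shape)  # (16, 16, 16)
```

In a PyTorch setting the explicit loops would be replaced by `torch.nn.Conv2d(..., stride=2, padding=1)`; the NumPy version only makes the downsampling arithmetic visible.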

Inspired by PVT [24], we stack multiple SAN stage blocks to reduce the computational burden of the transformer and to generate features at multiple scales, which adaptively capture objects of different sizes in dense images. These multi-scale features then enter the dual-branch synergism transformer to obtain hybrid attention that is more conducive to counting. Finally, a regression layer estimates the density map.
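To see why stacking stride-2 stages lightens the transformer's load, note that global self-attention over n flattened tokens builds an n × n score matrix, so halving both spatial dimensions cuts the token count by 4 and the attention matrix by 16. The Python sketch below tabulates this for a 480 × 640 input and four stages; both values are chosen for illustration and are not taken from the paper.

```python
def stage_resolutions(height: int, width: int, num_stages: int, stride: int = 2):
    """Spatial size after each stage, assuming every stage downsamples by `stride`.

    Ceiling division matches a 3x3 convolution with padding 1 and the given stride.
    """
    sizes = []
    h, w = height, width
    for _ in range(num_stages):
        h, w = -(-h // stride), -(-w // stride)
        sizes.append((h, w))
    return sizes


def attention_entries(h: int, w: int) -> int:
    """Number of entries in a global self-attention matrix over h*w flattened tokens."""
    n_tokens = h * w
    return n_tokens * n_tokens


# Hypothetical example: a 480x640 input and four stages.
for stage, (h, w) in enumerate(stage_resolutions(480, 640, 4), start=1):
    print(f"stage {stage}: {h}x{w} -> {h * w} tokens, {attention_entries(h, w):,} attention entries")
```

The first stage still yields 76,800 tokens, which is why hierarchical designs such as PVT [24] only attend globally at reduced resolutions.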

2.2. Synergism Transformer

Global Self-Attention. After the SAN stage blocks produce features at different hierarchical levels, the synergism transformer flattens these features to compute self-attention. The standard self-attention [19] branch models the pairwise relationships between positions and yields global information about the current feature:

where softmax(·) denotes the softmax function, $W^{Q}$, $W^{K}$, and $W^{V}$ are the weight matrices, and $Q$, $K$, and $V$ represent the query, key, and value vectors computed from the source input, respectively.
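Assuming the standard scaled dot-product form of [18,19], namely softmax(QKᵀ/√d)V with Q = XW^Q, K = XW^K, and V = XW^V, one head of the global branch can be sketched in NumPy as follows. The single-head simplification, the √d scaling, and the helper names are illustrative assumptions; the paper's branch is multi-head.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    z = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def flatten_feature_map(f: np.ndarray) -> np.ndarray:
    """Flatten a (C, H, W) feature map into an (H*W, C) token sequence."""
    c, h, w = f.shape
    return f.reshape(c, h * w).T


def global_self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: (N, C) tokens; w_q, w_k, w_v: (C, d) projection matrices.
    Returns the attended values (N, d) and the (N, N) attention map.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # pairwise scores between all token positions
    attn = softmax(scores, axis=-1)          # each row is a distribution over positions
    return attn @ v, attn
```

Because every token attends to every other token, the attention map is global; this is the property the location branch later complements with region-restricted weights.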

Location Multi-Head Attention. The SAN stage described above addresses the inefficient learning of cross-scale spatial information, but the preset attention window still does not match the counting task. Therefore, we adopt a modified transformer [23], location multi-head attention (LMHA), as one of the two branches because of its success in learning location attention. Specifically, we apply a distinct filtering mechanism that pushes the features to learn the most appropriate rectangular window.

First, given an input feature, LMHA maps it separately to query, key, and value vectors, two of which share parameters. Then, the attention maps of the two heads can be computed by

where the two outputs denote different attention maps and the projection matrices are trainable weights.

Second, an LAC module is introduced to select an appropriate region, focusing on objects at barely perceptible scales and thereby enabling more precise counting. The two attention maps serve as its input, which leads to the following formula:

This function is discussed in more detail in the following paragraphs.

Location Attention Cumulation. To obtain two-dimensional attention maps, the two attention maps are first reshaped into third-order tensors and then divided into multiple feature maps along the first axis. In general, a rectangular region can be determined as the intersection of two areas. Accordingly, we use a filter function, Location Attention Cumulation (LAC), that finds two areas by spreading attention along opposite directions and then selects their overlapping rectangular region as the location attention. More specifically, for each position in the attention map, LAC can be expressed as

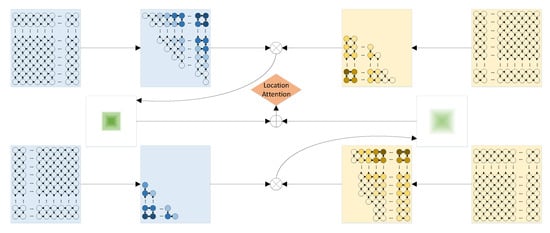

where the direction term indicates spreading from one end toward the diagonal, and the cumulative term denotes the accumulation of attention scores between the two positions. Figure 3 depicts the overall procedure of this module. After obtaining the area over which attention spreads in one fixed direction, we compute the corresponding map spreading in the opposite direction, as follows:

Figure 3.

Illustration of Location Attention Cumulation (LAC), showing how attention scores are spread along a fixed direction.
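The intersection principle behind LAC can be illustrated with a toy example. The NumPy sketch below is a simplification made under explicit assumptions, not the exact LAC formulation: it treats each of the two attention maps as a non-negative score map, spreads scores by 2D cumulative summation from one corner toward the diagonally opposite corner (the first map from the top-left, the second from the bottom-right), and multiplies the results element-wise, as in the Hadamard-product overlap that defines the location attention (Equation (7)). The function name `cumulate` is hypothetical.

```python
import numpy as np


def cumulate(scores: np.ndarray, from_bottom: bool = False, from_right: bool = False) -> np.ndarray:
    """Spread attention scores by 2D cumulative summation.

    By default, scores accumulate from the top-left corner toward the bottom-right;
    the flags make the accumulation start from the bottom and/or right instead.
    """
    s = scores[::-1, :] if from_bottom else scores
    s = s[:, ::-1] if from_right else s
    c = s.cumsum(axis=0).cumsum(axis=1)
    c = c[::-1, :] if from_bottom else c  # undo the flips so indices match the input
    c = c[:, ::-1] if from_right else c
    return c


# Toy attention maps: one peak marks the top-left corner of an object,
# the other marks its bottom-right corner.
a1 = np.zeros((6, 6))
a1[1, 2] = 1.0
a2 = np.zeros((6, 6))
a2[4, 4] = 1.0

spread_fwd = cumulate(a1)                                     # nonzero for rows >= 1, cols >= 2
spread_bwd = cumulate(a2, from_bottom=True, from_right=True)  # nonzero for rows <= 4, cols <= 4
region = spread_fwd * spread_bwd                              # Hadamard product: the overlap
print((region > 0).astype(int))
```

The printed mask is 1 only in rows 1 to 4 and columns 2 to 4: each one-directional spread alone covers an unbounded quadrant, and only their product isolates a rectangle.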

Thus, we finally obtain the location attention by overlapping two known regions:

where ∗ denotes the Hadamard product. However, the location attention computed in this way contains only a single-scale object and may assign zeros to too many other positions, leaving few overlapping regions. To avoid this issue, when computing the cumulative attention scores, we also apply the opposite operations directly to the original map and add the results, so that the required rectangular region is obtained regardless of the direction from which the diffusion starts. Therefore, we redefine Equation (7) as follows:

Finally, after obtaining the results of the two SyT branches, we combine the location attention and global self-attention scores, and the output is computed as follows:

2.3. Loss

In this work, we model the counting task as the estimation of a regression density map. Therefore, we cannot directly use the annotations of the TRANCOS dataset [10] as the ground truth; instead, we first convert the dot-level annotations into a density map through a Gaussian kernel function to supervise the training process. In this setting, for each traffic image $X$, the ground-truth density map can be represented as follows [25]:

$$D_{X}(q)=\sum_{\mu\in\mathcal{A}_{X}}\mathcal{N}(q;\mu,\Sigma)\tag{10}$$

where $q$ denotes a position in $X$ and $\mathcal{N}(q;\mu,\Sigma)$ is a normalized two-dimensional Gaussian function. $\mathcal{A}_{X}$ is the set of annotated points of the image, $\mu$ is the mean value, and $\Sigma$ denotes an isotropic covariance matrix. With the density map $D_{X}$, the Euclidean distance is used to measure the difference between the ground-truth density map and the predicted one, whose formulation can be written as follows:

where N is the total number of training images, $X_j$ denotes the jth input image, $D_j$ is the ground-truth density map of $X_j$, and $F(\cdot)$ denotes the synergism attention network described above.
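The ground-truth construction of Equation (10) and the Euclidean loss can be sketched in NumPy as follows. Several details are assumptions: the Gaussian width `sigma` is a placeholder (the authors' value is not given in this section), each kernel is normalized on the pixel grid so that the map sums exactly to the number of annotated vehicles even near image borders, and the loss uses the 1/(2N) scaling common in density-regression work such as MCNN [13], which may differ from the authors' exact normalization.

```python
import numpy as np


def gaussian_density_map(points, height, width, sigma=4.0):
    """Sum of isotropic 2D Gaussians centred on dot annotations given as (row, col)."""
    rows, cols = np.mgrid[0:height, 0:width]
    density = np.zeros((height, width))
    for r, c in points:
        kernel = np.exp(-((rows - r) ** 2 + (cols - c) ** 2) / (2.0 * sigma ** 2))
        density += kernel / kernel.sum()  # each vehicle contributes a total mass of 1
    return density


def euclidean_loss(pred, gt):
    """1/(2N) * sum_j ||pred_j - gt_j||_2^2 over a batch of N density maps (N, H, W)."""
    n = pred.shape[0]
    return float(((pred - gt) ** 2).sum() / (2.0 * n))


dots = [(10, 12), (20, 30), (35, 45)]  # hypothetical vehicle annotations
gt = gaussian_density_map(dots, 40, 50)
print(round(gt.sum(), 6))              # the count is recovered by summing the density map
```

Summing a predicted density map in the same way yields the estimated vehicle count used for evaluation.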

In addition, to ensure that LMHA frames the appropriate object and that the generated rectangle is neither too large nor too small, a further loss is added to regularize LMHA, formulated as follows:

where the mean term denotes the average of the location attention values over all regions. Finally, this regularization loss is optimized together with the Euclidean loss.

3. Experiment

In this section, extensive experiments on the TRANCOS dataset demonstrate the effectiveness and superiority of the proposed model. Throughout, we used the density maps generated from the dataset annotations as the ground truth (see Equation (10)). For optimization, stochastic gradient descent (SGD) was used during training with a constant learning rate.

3.1. Dataset

We used TRANCOS, a benchmark dataset of heavy-traffic vehicle images, to train, validate, and test our model. This dataset provides 1244 static traffic images captured by surveillance cameras from different perspectives, with a total of 46,796 labeled cars. Traffic scenes under diverse surveillance conditions (e.g., varying illumination and multiple perspectives) make vehicle counting challenging. In preprocessing, we followed the common split into training, validation, and test sets: 403 images for training, 420 for validation, and 421 for testing. To improve generalization, data augmentation was adopted: in each epoch, every image was randomly cropped and randomly flipped.
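The per-epoch augmentation can be sketched as below. The key point is that the crop window and the flip must be applied identically to the image and its density map, so that the density still integrates to the vehicle count inside the crop. The crop size, the 0.5 flip probability, and the choice of a horizontal flip are assumptions, since the text does not specify them; `augment_pair` is a hypothetical name.

```python
import numpy as np

# TRANCOS split described in the text (images per subset).
SPLIT = {"train": 403, "val": 420, "test": 421}


def augment_pair(image, density, crop_h, crop_w, rng):
    """Random crop and random horizontal flip applied jointly.

    image: (H, W, C) array; density: (H, W) ground-truth density map.
    """
    h, w = density.shape
    top = int(rng.integers(0, h - crop_h + 1))
    left = int(rng.integers(0, w - crop_w + 1))
    img = image[top:top + crop_h, left:left + crop_w]
    den = density[top:top + crop_h, left:left + crop_w]
    if rng.random() < 0.5:
        img, den = img[:, ::-1], den[:, ::-1]  # flip both along the width axis
    return np.ascontiguousarray(img), np.ascontiguousarray(den)


rng = np.random.default_rng(0)
image = rng.random((48, 64, 3))   # toy image
density = rng.random((48, 64))    # toy density map
img_c, den_c = augment_pair(image, density, 32, 32, rng)
```

A flip only permutes pixels, so it never changes the count; cropping changes it to the mass inside the crop, which is exactly what the cropped density map encodes.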

3.2. Experimental Settings

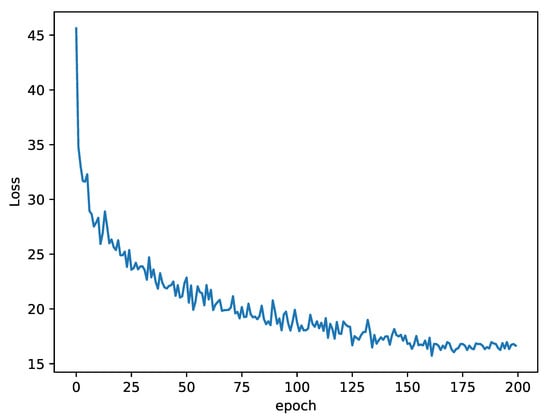

All experiments were conducted using the PyTorch framework with a batch size of eight. Figure 4 shows the convergence of the SAN, plotting the loss value (vertical axis) against the training epoch (horizontal axis). The loss dropped rapidly in the initial phase, and after 150 epochs the overall loss stabilized, although some fluctuations remained. The learning rate of the optimizer was fixed at . To compare the estimation performance of our approach numerically with that of competing methods, the mean absolute error (MAE) and the mean squared error (MSE) were used as evaluation metrics, computed as follows:

where $\hat{C}_i$ denotes the predicted count of the ith traffic image, $C_i$ denotes the corresponding ground-truth count, and N is the number of test images. The proposed approach was compared with five previously reported state-of-the-art models:

Figure 4.

Illustration of the convergence speed of the SAN.

- MCNN: Single-image crowd counting via a multi-column convolutional neural network, proposed by Zhang et al. [13];

- P2PNet: Rethinking counting and localization in crowds through a purely point-based framework, proposed by Song et al. [26];

- DMcount: Distribution matching for crowd counting, proposed by Wang et al. [27];

- CCNN: Towards perspective-free object counting with deep learning, proposed by Onoro-Rubio and López-Sastre [25];

- TraCount: A deep convolutional neural network for highly overlapping vehicle counting, proposed by Surya [28].
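The two metrics can be computed as in the following Python sketch. Following the convention of counting papers such as MCNN [13], we assume that the reported "MSE" is the square root of the mean squared count error; this is an assumption about the paper's exact definition, and the function names are illustrative.

```python
import math


def mae(pred, gt):
    """Mean absolute error between predicted and ground-truth counts."""
    return sum(abs(p - g) for p, g in zip(pred, gt)) / len(gt)


def mse(pred, gt):
    """Root of the mean squared count error (the usual "MSE" in counting papers)."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))


pred_counts = [10.0, 12.0, 20.0]  # hypothetical predictions
gt_counts = [11, 12, 17]          # hypothetical ground truth
print(mae(pred_counts, gt_counts), mse(pred_counts, gt_counts))
```

Because the squared term weights large errors more heavily, MSE exceeds MAE whenever errors are uneven across images, as in this toy example.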

3.3. Quantitative Comparisons

At the testing phase, the images were divided into five spans based on the number of vehicles: 0–10, 11–20, 21–30, 31–40, and 41–50. Table 1 shows that, as the number of vehicles per image increases, both the MAE and the MSE rise markedly. This is expected: absolute counting errors tend to grow with the number of targets, and denser images contain more occlusion and overlap. Encouragingly, the SAN ranked at or near the top at both low and high vehicle densities. Overall, the average MAE (MSE) improvements of the SAN over MCNN, P2PNet, DMcount, TraCount, and CCNN were 2.644 (4.542), 0.24 (0.76), 0.284 (0.956), 2.804 (4.374), and 4.712 (7.51), respectively. These gains are notable, as these baselines reported state-of-the-art performance in their respective papers. Across spans, the SAN achieved the best results in most cases, while P2PNet was most often second best. Moreover, as the number of vehicles rose, the performance gap between the SAN and P2PNet widened.
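The span-wise evaluation can be sketched as follows: test images are grouped by their ground-truth vehicle count into the five inclusive spans, and the MAE is computed within each span. The helper name `span_mae` is hypothetical, and images whose counts fall outside the listed spans are simply ignored here.

```python
SPANS = ((0, 10), (11, 20), (21, 30), (31, 40), (41, 50))


def span_mae(pred, gt, spans=SPANS):
    """MAE per ground-truth count span; None for spans that contain no images."""
    result = {}
    for lo, hi in spans:
        errors = [abs(p - g) for p, g in zip(pred, gt) if lo <= g <= hi]
        result[(lo, hi)] = sum(errors) / len(errors) if errors else None
    return result


# Hypothetical predictions and ground-truth counts for four test images.
print(span_mae([5, 9, 15, 33], [4, 10, 18, 35]))
```

Grouping by the ground-truth count rather than the predicted count keeps each image in a fixed span regardless of which model is being evaluated.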

Table 1.

Numerical comparison with multiple methods on the TRANCOS dataset (↑: higher is better; ↓: lower is better). The best values are highlighted in boldface, and the second-best values are underlined.

We also compared the computational efficiency of the models; the results are shown in Table 2. Because it builds on a transformer framework, our model has 30.95 M parameters, more than its competitors. In terms of FLOPs, however, the SAN is at a medium level. Given the strong performance of our model, we consider the larger parameter count an acceptable trade-off.

Table 2.

Parameter comparison with multiple methods. The best values are highlighted in boldface.

In summary, the quantitative evaluation shows that the SAN outperforms existing algorithms, further supporting the effectiveness of the proposed modules.

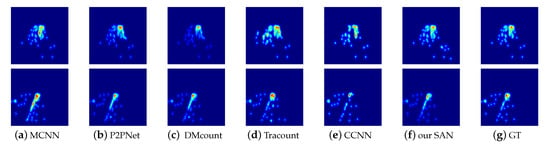

3.4. Qualitative Comparisons

In this subsection, to further verify the counting performance of our model, we visualize the density maps generated by all competing models. The results are shown in Figure 5, where the density maps are rendered mainly in blue and green to indicate the locations of vehicles; the more vehicles a region contains, the redder it appears.

Figure 5.

Qualitative comparisons with baselines and the ground truth (GT) on the TRANCOS dataset: (a) MCNN, (b) P2PNet, (c) DMcount, (d) TraCount, (e) CCNN, (f) our SAN, and (g) GT.

In the first column of Figure 5, the ground truth shows many high-density areas across the whole image. MCNN did not accurately predict the vehicles in the lower-right area and only partially detected those on the left, producing a less precise density representation than its competitors. Although P2PNet and DMcount generated density maps closer to the ground truth, a careful look reveals that they lack fine detail and give only approximately correct results in the relevant regions. The density map generated by TraCount was unsatisfactory in both dense and sparse regions. By comparison, the results of our SAN were much closer to the GT and more credible than those of all the state-of-the-art competitors, indicating that the SAN captured target features at different scales and retained finer details. These qualitative results further demonstrate the superiority of the proposed modules.

4. Discussion

Unlike most previous studies, the SAN focuses on the variable object scale in the counting task. Note that a single type of network architecture, whether convolutional or transformer-based, mainly explores either the global or the local relationships of the input data. Accordingly, we devised a novel network structure with dual branches that can adapt to the large-scale variations of surveillance targets. In the current state of the field, limited data types, small dataset volumes, and poor annotation all constrain algorithms from achieving better performance.

In future work, we plan to explore a lightweight network structure that alleviates the large number of parameters. One rough idea is to use 1 × 1 convolutions to unify part of the dual-branch operations. In addition, we will evaluate the SAN on other datasets to assess its generalizability, and we will validate the efficacy and efficiency of the proposed components in other visual applications.

5. Conclusions

In this paper, we proposed a novel synergism attention network that enhances the ability of transformers to handle large-scale variations in dense vehicle counting. Specifically, a pyramid structure was proposed to capture object features at multiple scales. On this basis, the SAN's multi-stage blocks perform a lightweight downscaling operation, and the synergism transformer block produces attention features that blend global information with location-aware local information. To obtain trainable, location-aware local information in SyT, we devised a Location Attention Cumulation module that performs weighted localization of regions through the diffusion of accumulated attention. Extensive quantitative and qualitative comparisons demonstrated the superiority of the proposed SAN in dense vehicle counting tasks.

Author Contributions

Conceptualization, J.W.; methodology, Y.J.; writing—original draft preparation, J.W.; formal analysis, W.W.; validation, Y.W.; supervision, X.Y.; resources, Y.J.; data curation, J.Z.; project administration, Y.J.; funding acquisition, X.Y.; investigation, J.W.; writing—review and editing, J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Key Research and Development Program of China (No. 2018YFE0126100), the National Natural Science Foundation of China (No. 62276232), the National Natural Science Foundation of China (No. 61873240), the Research Foundation of the Department of Education of Zhejiang Province (No. Y202145927), the Open Project Program of the State Key Lab of CAD&CG (No. A2210), and the Zhejiang Provincial Natural Science Foundation (No. LY20F030018).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ke, R.; Li, Z.; Tang, J.; Pan, Z.; Wang, Y. Real-time traffic flow parameter estimation from UAV video based on ensemble classifier and optical flow. IEEE Trans. Intell. Transp. Syst. 2018, 20, 54–64.

- Bas, E.; Tekalp, A.M.; Salman, F.S. Automatic vehicle counting from video for traffic flow analysis. In Proceedings of the 2007 IEEE Intelligent Vehicles Symposium, Istanbul, Turkey, 13–15 June 2007; pp. 392–397.

- Khairdoost, N.; Monadjemi, S.A.; Jamshidi, K. Front and rear vehicle detection using hypothesis generation and verification. Signal Image Process. 2013, 4, 31.

- Kong, X.; Duan, G.; Hou, M.; Shen, G.; Wang, H.; Yan, X.; Collotta, M. Deep Reinforcement Learning based Energy Efficient Edge Computing for Internet of Vehicles. IEEE Trans. Ind. Inform. 2022, 18, 6308–6316.

- Shen, G.; Han, X.; Chin, K.; Kong, X. An Attention-Based Digraph Convolution Network Enabled Framework for Congestion Recognition in Three-Dimensional Road Networks. IEEE Trans. Intell. Transp. Syst. 2022, 23, 14413–14426.

- Zhang, S.; Wu, G.; Costeira, J.P.; Moura, J.M. Fcn-rlstm: Deep spatio-temporal neural networks for vehicle counting in city cameras. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3667–3676.

- Kong, X.; Wu, Y.; Wang, H.; Xia, F. Edge Computing for Internet of Everything: A Survey. IEEE Internet Things J. 2022, 1–14, Early Access.

- Zheng, J.; Feng, Y.; Bai, C.; Zhang, J. Hyperspectral Image Classification Using Mixed Convolutions and Covariance Pooling. IEEE Trans. Geosci. Remote. Sens. 2021, 59, 522–534.

- Xu, H.; Zheng, J.; Yao, X.; Feng, Y.; Chen, S. Fast Tensor Nuclear Norm for Structured Low-Rank Visual Inpainting. IEEE Trans. Circ. Syst. Video Technol. 2022, 32, 538–552.

- Guerrero-Gómez-Olmedo, R.; Torre-Jiménez, B.; López-Sastre, R.; Maldonado-Bascón, S.; Onoro-Rubio, D. Extremely overlapping vehicle counting. In Pattern Recognition and Image Analysis; Springer: Cham, Switzerland, 2015; pp. 423–431.

- Zhang, C.; Li, H.; Wang, X.; Yang, X. Cross-scene crowd counting via deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 833–841.

- Ma, Z.; Hong, X.; Wei, X.; Qiu, Y.; Gong, Y. Towards a universal model for cross-dataset crowd counting. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 11–17 October 2021; pp. 3205–3214.

- Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-image crowd counting via multi-column convolutional neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 589–597.

- Feng, Y.; Zheng, J.; Qin, M.; Bai, C.; Zhang, J. 3D Octave and 2D Vanilla Mixed Convolutional Neural Network for Hyperspectral Image Classification with Limited Samples. Remote Sens. 2021, 13, 4407.

- Sooksatra, S.; Yoshitaka, A.; Kondo, T.; Bunnun, P. The Density-Aware Estimation Network for Vehicle Counting in Traffic Surveillance System. In Proceedings of the 2019 15th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Sorrento, Italy, 26–29 November 2019; pp. 231–238.

- Wan, J.; Wang, Q.; Chan, A.B. Kernel-based density map generation for dense object counting. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 1357–1370.

- Sindagi, V.A.; Patel, V.M. A survey of recent advances in cnn-based single image crowd counting and density estimation. Pattern Recognit. Lett. 2018, 107, 3–16.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Feng, Y.; Xu, H.; Jiang, J.; Liu, H.; Zheng, J. ICIF-Net: Intra-Scale Cross-Interaction and Inter-Scale Feature Fusion Network for Bitemporal Remote Sensing Images Change Detection. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–13.

- Wang, F.; Liu, K.; Long, F.; Sang, N.; Xia, X.; Sang, J. Joint CNN and Transformer Network via weakly supervised Learning for efficient crowd counting. arXiv 2022, arXiv:2203.06388.

- Lin, H.; Ma, Z.; Ji, R.; Wang, Y.; Hong, X. Boosting Crowd Counting via Multifaceted Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–20 June 2022; pp. 19628–19637.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

- Onoro-Rubio, D.; López-Sastre, R.J. Towards Perspective-Free Object Counting with Deep Learning. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 615–629.

- Song, Q.; Wang, C.; Jiang, Z.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wu, Y. Rethinking counting and localization in crowds: A purely point-based framework. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3365–3374.

- Wang, B.; Liu, H.; Samaras, D.; Nguyen, M.H. Distribution matching for crowd counting. Adv. Neural Inf. Process. Syst. 2020, 33, 1595–1607.

- Surya, S. TraCount: A deep convolutional neural network for highly overlapping vehicle counting. In Proceedings of the Tenth Indian Conference on Computer Vision, Graphics and Image Processing, Guwahati Assam, India, 18–22 December 2016; pp. 1–6.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).