Accelerated Chambolle Projection Algorithms for Image Restoration

Abstract

1. Introduction

2. Related Works

2.1. Chambolle Projection Algorithm

Algorithm 1 Chambolle projection algorithm (CP)

Input: f, …
Initialization: …
1: for … do
2: …
3: end for
4: …
5: return u
6: Output: The restored clean image u.
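As an illustration of the kind of iteration Algorithm 1 refers to, the following is a minimal NumPy sketch of the fixed-point scheme from Chambolle's 2004 paper. It is not the authors' code. It assumes the ROF model min_u ||u - f||^2 / (2*lam) + TV(u), forward differences with Neumann boundary conditions, and a step size of 1/8. The names `grad`, `div`, `total_variation`, `chambolle_denoise`, `lam`, `tau`, and `n_iter` are hypothetical choices made for this example.

```python
import numpy as np


def grad(u):
    """Forward differences; the last row/column difference is set to zero."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return gx, gy


def div(px, py):
    """Discrete divergence, chosen so that div is the negative adjoint of grad."""
    dx = np.zeros_like(px)
    dy = np.zeros_like(py)
    dx[0, :] = px[0, :]
    dx[1:-1, :] = px[1:-1, :] - px[:-2, :]
    dx[-1, :] = -px[-2, :]
    dy[:, 0] = py[:, 0]
    dy[:, 1:-1] = py[:, 1:-1] - py[:, :-2]
    dy[:, -1] = -py[:, -2]
    return dx + dy


def total_variation(u):
    """Isotropic discrete total variation of u."""
    gx, gy = grad(u)
    return float(np.sum(np.sqrt(gx**2 + gy**2)))


def chambolle_denoise(f, lam, tau=0.125, n_iter=100):
    """Semi-implicit dual iteration; tau <= 1/8 is the classical step bound."""
    f = np.asarray(f, dtype=float)
    px = np.zeros_like(f)
    py = np.zeros_like(f)
    for _ in range(n_iter):
        # Gradient of (div p - f / lam) drives the dual update.
        gx, gy = grad(div(px, py) - f / lam)
        norm = np.sqrt(gx**2 + gy**2)
        # The division keeps |p| <= 1 at every pixel.
        px = (px + tau * gx) / (1.0 + tau * norm)
        py = (py + tau * gy) / (1.0 + tau * norm)
    # Recover the primal image from the dual variable.
    return f - lam * div(px, py)
```

In this sketch a larger `lam` gives stronger smoothing, and the image mean is preserved because the discrete divergence sums to zero.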

2.2. Frank–Wolfe Algorithm

Algorithm 2 Frank–Wolfe Algorithm (FW)

Initialization: …
1: for … do
2: …
3: …
4: end for
5: return …
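For reference, the generic Frank–Wolfe iteration can be sketched as follows. The sketch uses the standard form described by Jaggi: a linear minimization step over the feasible set, followed by a convex combination with step size 2/(k+2). It may differ in detail from Algorithm 2. The names `frank_wolfe` and `simplex_lmo`, and the toy least-squares problem on the probability simplex, are illustrative assumptions.

```python
import numpy as np


def frank_wolfe(grad_f, lmo, x0, n_iter=1000):
    """Projection-free minimization of a smooth convex function.

    grad_f: callable returning the gradient at x.
    lmo:    linear minimization oracle, returns argmin_{s in D} <g, s>.
    """
    x = np.asarray(x0, dtype=float)
    for k in range(n_iter):
        s = lmo(grad_f(x))            # best feasible point for the linearized objective
        gamma = 2.0 / (k + 2.0)       # classical open-loop step size
        x = (1.0 - gamma) * x + gamma * s
    return x


def simplex_lmo(g):
    """LMO for the probability simplex: the vertex with the smallest gradient entry."""
    s = np.zeros_like(g)
    s[np.argmin(g)] = 1.0
    return s
```

For example, with `b = np.array([0.2, 0.3, 0.5])`, calling `frank_wolfe(lambda x: x - b, simplex_lmo, np.array([1.0, 0.0, 0.0]))` minimizes 0.5 * ||x - b||^2 over the simplex. Every iterate stays feasible without any projection.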

3. Chambolle Projection Algorithms Based on Frank–Wolfe

Algorithm 3 Chambolle projection based on Frank–Wolfe (CP–FW)

Input: f, …
Initialization: …
1: for … do
2: …
3: …
4: …
5: end for
6: …
7: return u
8: Output: The restored clean image u.
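One plausible reading of CP–FW is Frank–Wolfe applied to the dual problem that Chambolle's projection solves: minimize 0.5 * ||lam * div p - f||^2 subject to |p| <= 1 at every pixel. The sketch below follows that reading and is an assumption, not the authors' implementation. Under it, the linear minimization step has a closed form (the normalized gradient field), so no projection or division-based rescaling is needed. All names (`grad`, `div`, `cp_fw_denoise`, `lam`, `n_iter`) and the step size 2/(k+2) are hypothetical choices for this example.

```python
import numpy as np


def grad(u):
    """Forward differences; the last row/column difference is set to zero."""
    gx = np.zeros_like(u)
    gy = np.zeros_like(u)
    gx[:-1, :] = u[1:, :] - u[:-1, :]
    gy[:, :-1] = u[:, 1:] - u[:, :-1]
    return gx, gy


def div(px, py):
    """Discrete divergence, the negative adjoint of grad."""
    dx = np.zeros_like(px)
    dy = np.zeros_like(py)
    dx[0, :] = px[0, :]
    dx[1:-1, :] = px[1:-1, :] - px[:-2, :]
    dx[-1, :] = -px[-2, :]
    dy[:, 0] = py[:, 0]
    dy[:, 1:-1] = py[:, 1:-1] - py[:, :-2]
    dy[:, -1] = -py[:, -2]
    return dx + dy


def cp_fw_denoise(f, lam, n_iter=300):
    """Frank-Wolfe on the dual of the ROF model (assumed formulation)."""
    f = np.asarray(f, dtype=float)
    px = np.zeros_like(f)
    py = np.zeros_like(f)
    for k in range(n_iter):
        # Negative dual gradient, up to the positive factor lam.
        gx, gy = grad(lam * div(px, py) - f)
        norm = np.sqrt(gx**2 + gy**2)
        safe = np.where(norm > 0, norm, 1.0)   # avoid 0/0 where the gradient vanishes
        # LMO over the product of unit balls: a unit vector along the descent direction.
        sx, sy = gx / safe, gy / safe
        gamma = 2.0 / (k + 2.0)
        # Convex combination keeps |p| <= 1 without any projection.
        px = (1.0 - gamma) * px + gamma * sx
        py = (1.0 - gamma) * py + gamma * sy
    # Recover the primal image from the dual variable.
    return f - lam * div(px, py)
```

Compared with the semi-implicit Chambolle update, this variant has no step-size parameter to tune, at the cost of the slower sublinear convergence typical of plain Frank–Wolfe.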

Algorithm 4 Chambolle projection based on accelerated Frank–Wolfe (CP–AFW)

Input: f, …
Initialization: …
1: for … do
2: …
3: …
4: …
5: …
6: …
7: end for
8: …
9: return u
10: Output: The restored clean image u.

4. Accelerated Chambolle Projection for Poisson Denoising

Algorithm 5 Chambolle projection algorithm (CP (Poisson))

Input: …
Initialization: …
1: while … do
2: for … do
3: …
4: end for
5: …
6: … and let …
7: end while
8: return u
9: Output: The restored clean image u.

Algorithm 6 Chambolle projection based on Frank–Wolfe for Poisson noise (CP–FW (Poisson))

Input: …
Initialization: …
1: while … do
2: for … do
3: …
4: …
5: …
6: end for
7: …
8: … and let …
9: end while
10: return u
11: Output: The restored clean image u.

Algorithm 7 Chambolle projection based on accelerated Frank–Wolfe for Poisson noise (CP–AFW (Poisson))

Input: f, …, …
Initialization: …
1: while … do
2: for … do
3: …
4: …
5: …
6: …
7: …
8: end for
9: …
10: … and let …
11: end while
12: return u
13: Output: The restored clean image u.

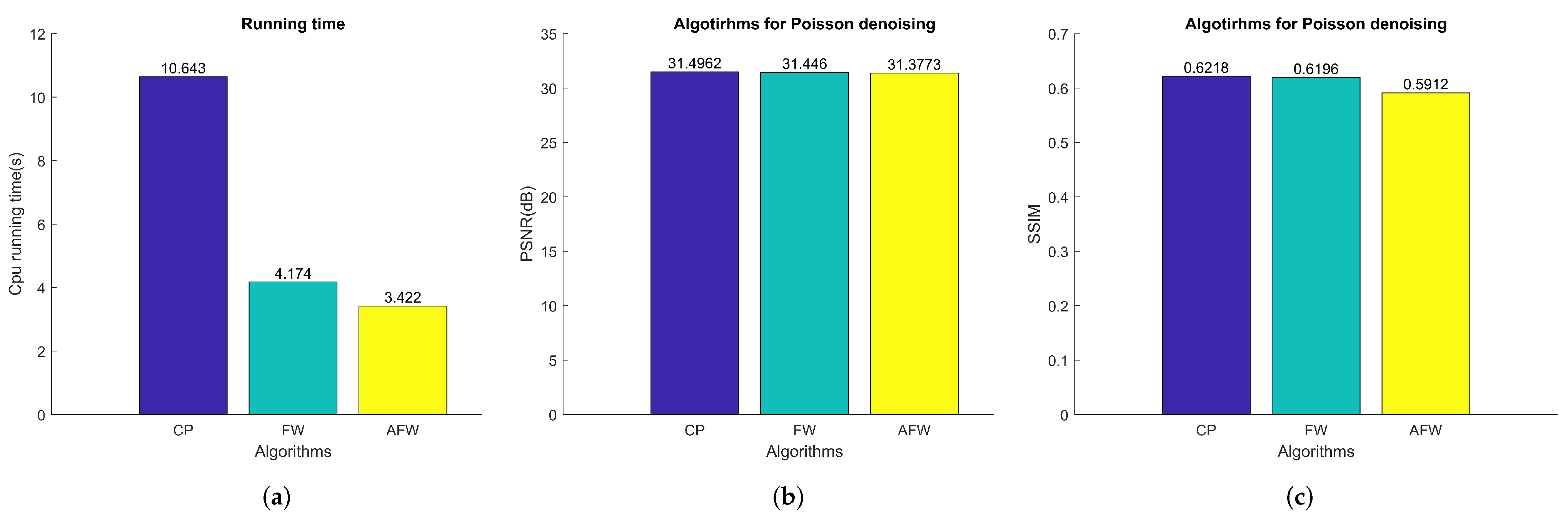

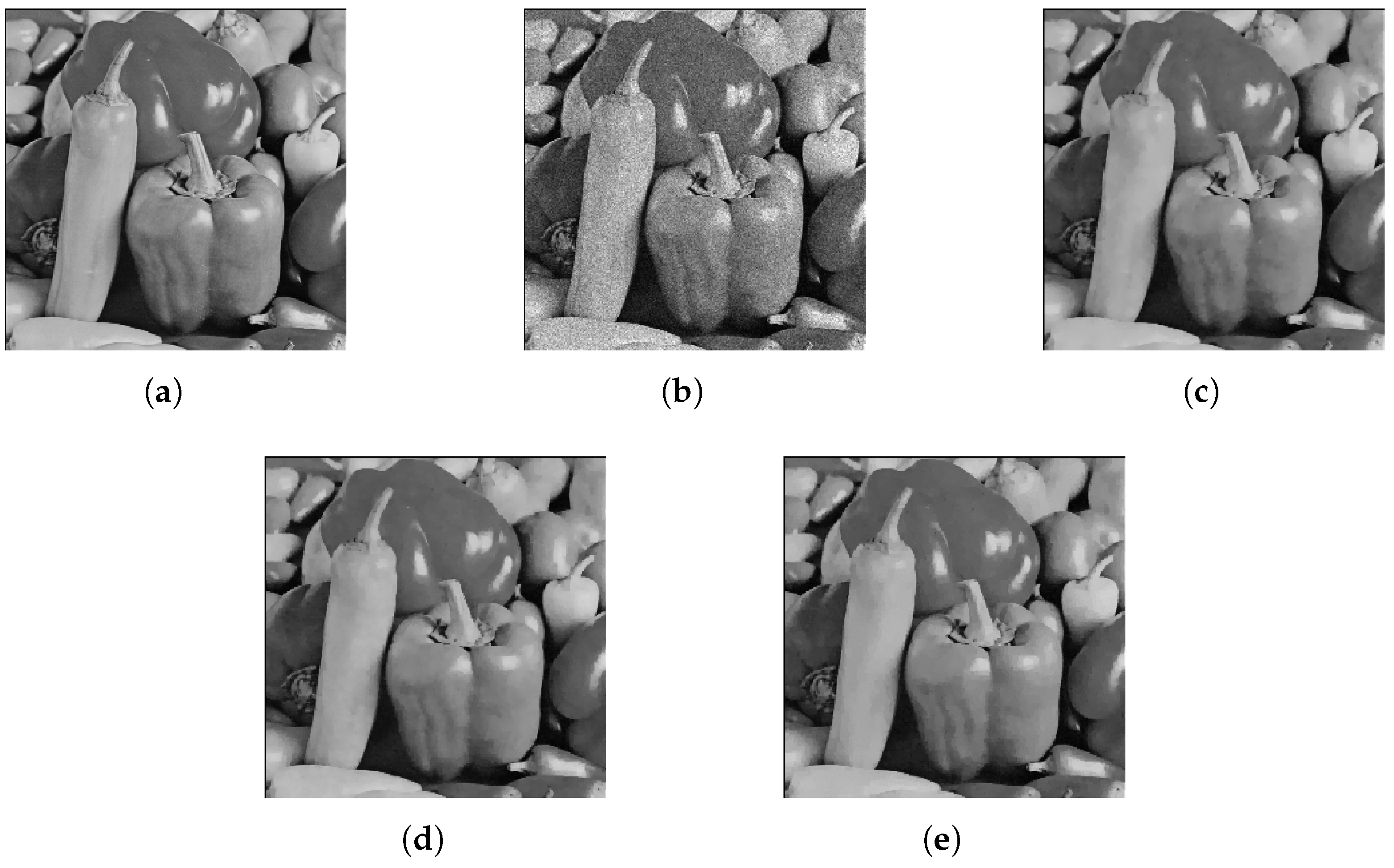

5. Experiments

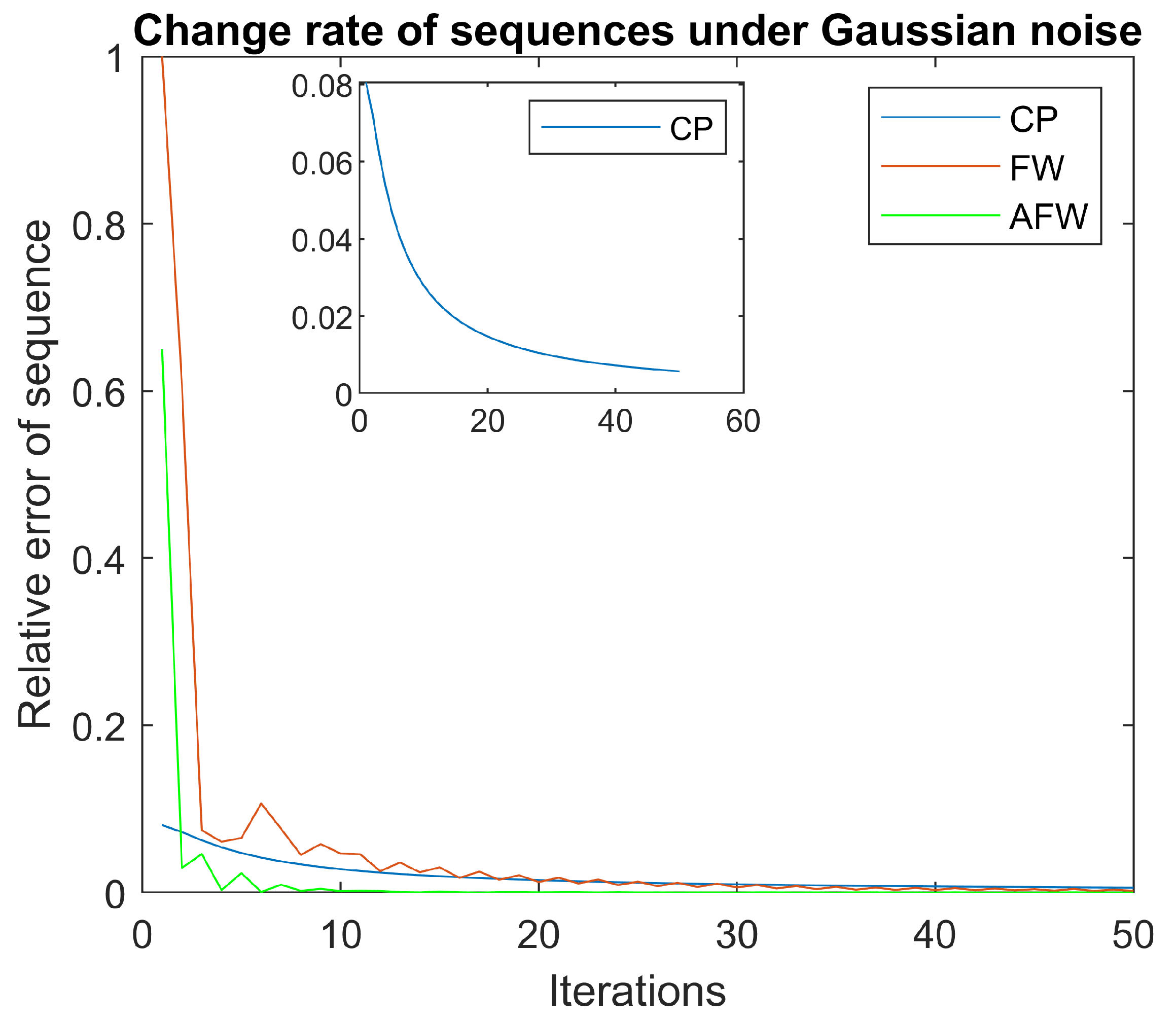

5.1. Change Rate of Sequence and Value of Energy Functional

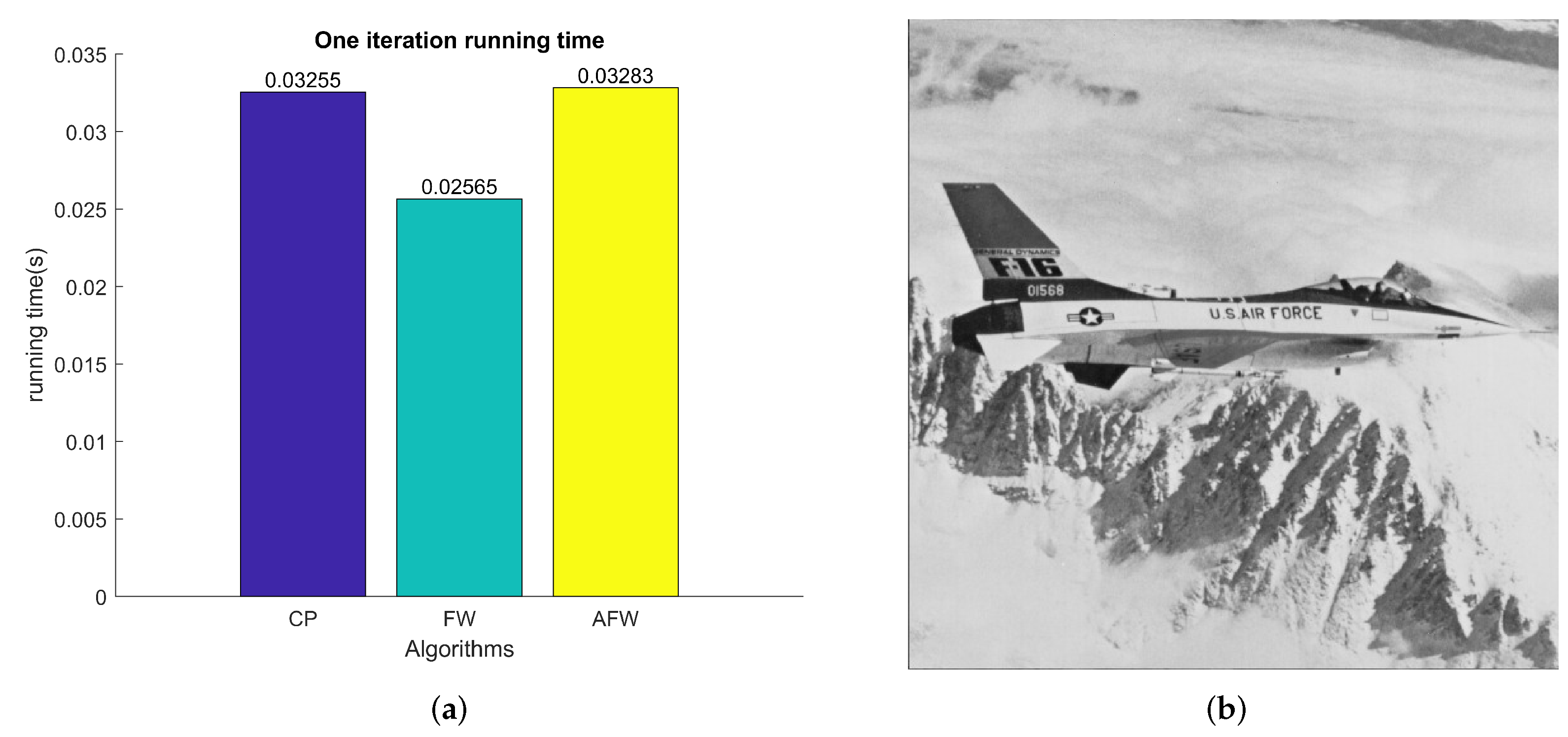

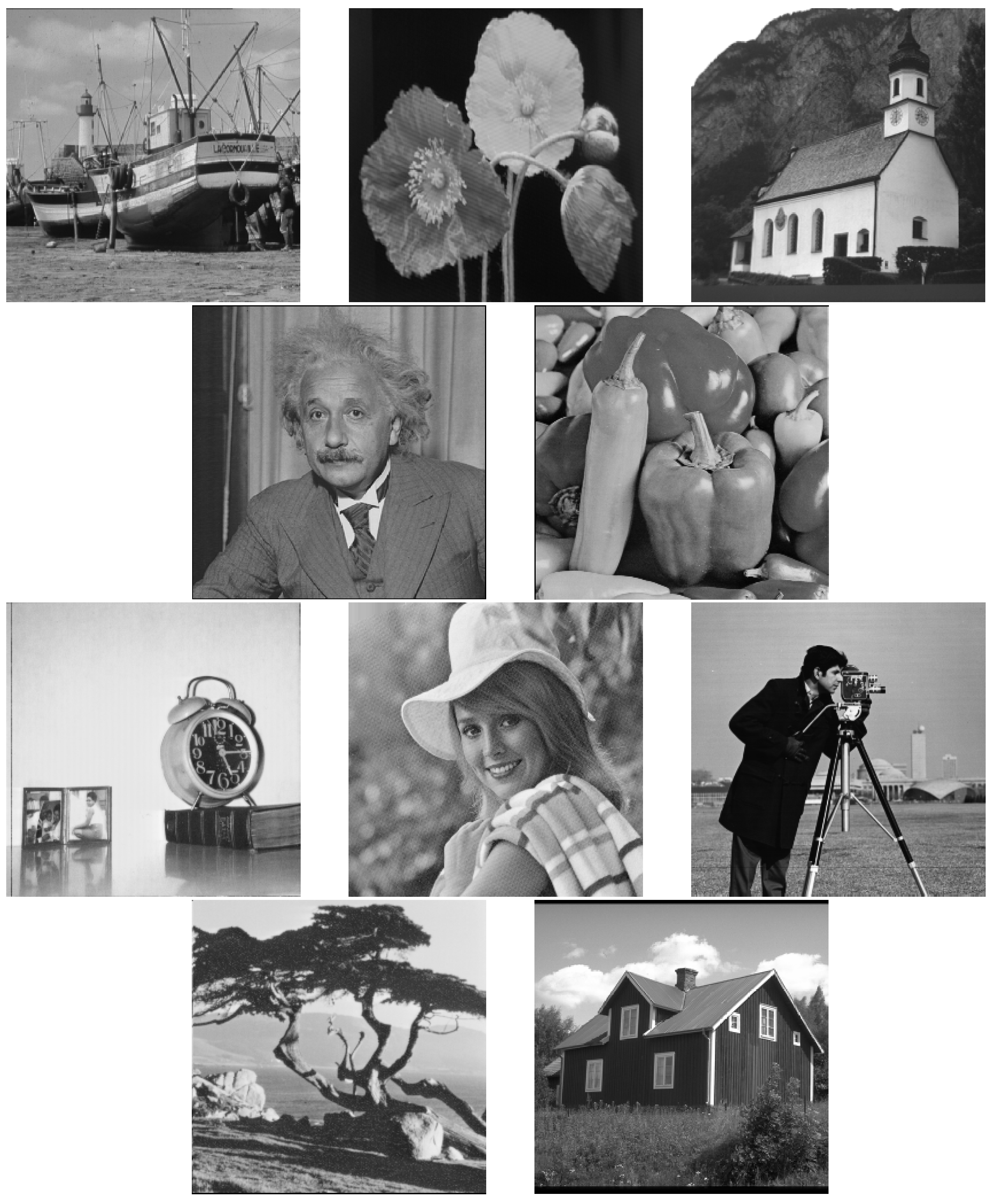

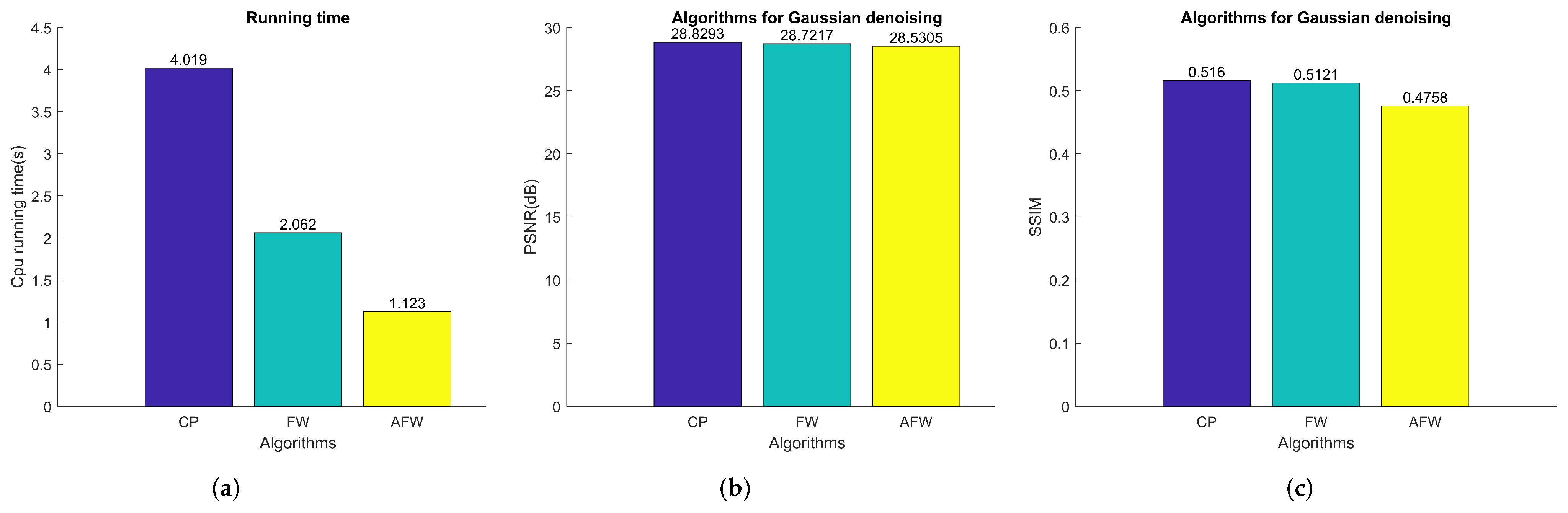

5.2. Test Algorithms on Dataset

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wei, W.; Feng, X. Accelerated Chambolle Projection Algorithms for Image Restoration. Electronics 2022, 11, 3751. https://doi.org/10.3390/electronics11223751
