Abstract

Advances in depth sensor technologies have positively impacted studies of human-computer interaction and activity recognition. This study proposes a novel 3D action template generated from depth sequence data and two methods that use this template to classify single-person activities. First, three-dimensional volumetric templates are constructed from the joint skeletons obtained from depth information. In the first method, images of these three-dimensional templates are captured from various view angles, and deep features are extracted from them with a pre-trained convolutional neural network. In our experiments, an AlexNet model pre-trained on the ImageNet dataset is used as the feature extractor. Activities are classified by combining the deep features with Histogram of Oriented Gradients (HOG) features. The second method is a three-dimensional convolutional neural network that takes the volumetric templates as input for activity classification. The proposed methods have been tested on two publicly available datasets, and the experiments yielded promising results compared with other studies in the literature.

1. Introduction

The classification and prediction of human actions is a complex problem that has been studied broadly in the computer vision literature. There are many studies and applications on this subject; most current implementations target digital gaming, entertainment, or security systems. Methods that use depth sensors are relatively new compared with video-based methods. Early studies [1] focused on RGB video datasets. Some studies [1,2] are based on temporal patterns: the extracted person silhouettes are combined in various ways to create two-dimensional templates, and template matching or classifiers trained on features extracted from these templates are used for action classification. Key-pose selection and sequence-matching techniques are also used for motion recognition [3]. After the key poses are selected, they are converted into a sequence, and sequence-matching or string-matching approaches are applied to classify these sequences. Several methods use invariant interest-point properties to create temporal patterns [4,5]. These methods aim to find invariant features in a 3D temporal template obtained by stacking 2D frames sequentially along the third dimension. In this study, inspired by silhouette- and sequence-based methods, we propose a 3D template that represents action sequences compactly.

Deep learning is an increasingly popular approach in computer vision research. Rather than relying on features generalized from a limited set of observations, it learns model parameters and representations from a large set of observations. Deep learning-based motion recognition studies generally use 2D image sequences [6]. These methods try to cope with challenges arising from different camera view angles, very complex crowd activity patterns, and other variations. However, most of them fail to learn features that remain discriminative under the complex viewpoint, scale, and occlusion variations of human actions. This study presents motion recognition approaches based on deep learning, in which a 3D template is created from joint skeleton data. In the literature, most skeleton-based methods [7,8] combine 2D skeletons as a sequence or create a template from the positions of joint coordinates. In our approach, we first convert 2D joint skeletons into 3D isosurfaces and then stack the isosurfaces around the hip joint to create the template.

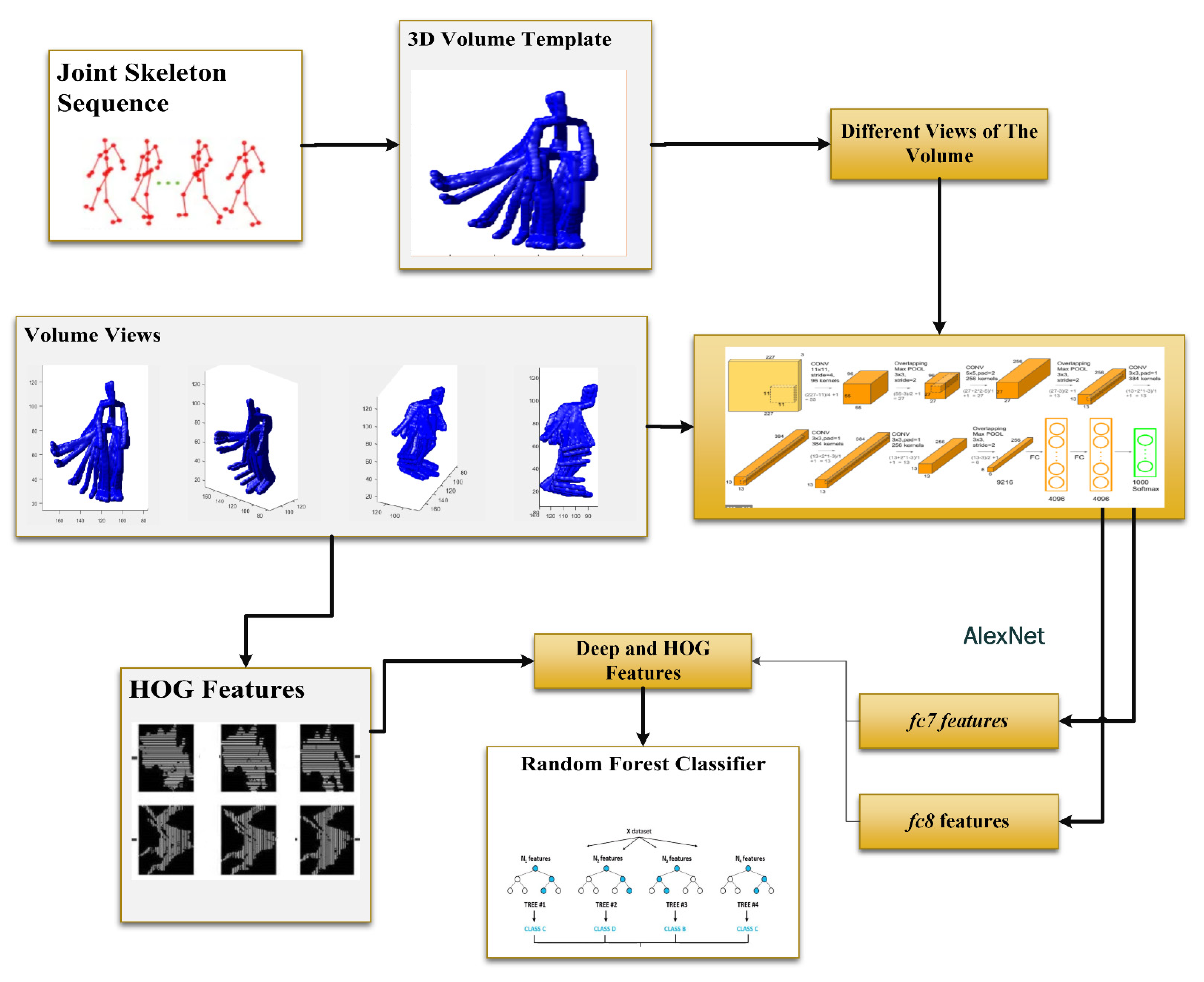

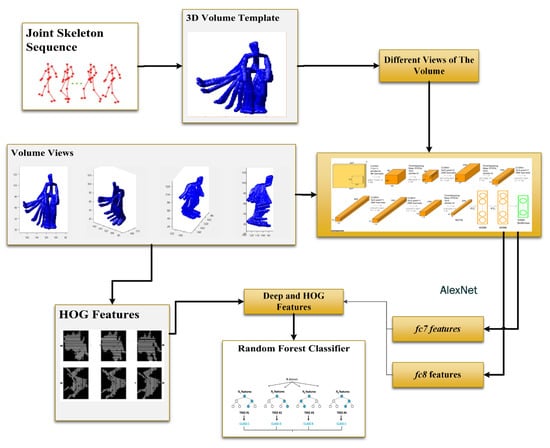

Two different pipelines are proposed to demonstrate the effectiveness of the proposed template. In the first, the template volumes are used for feature extraction with a pre-trained deep model, and classical HOG features and deep features obtained from these templates are combined for action classification to achieve better results. The working steps of this transfer learning-based method are presented in Figure 1. In the second, a 3D CNN is employed to classify actions directly from the templates. The results show that the proposed method is effective in single-person action recognition. The proposed method was tested on two well-known datasets, MSRAction3D and UTKinect. MSRAction3D is the most commonly used benchmark for evaluating action recognition methods, and the results obtained on it are comparable with those of other studies in the literature.

Figure 1.

General Workflow of the proposed classical method.

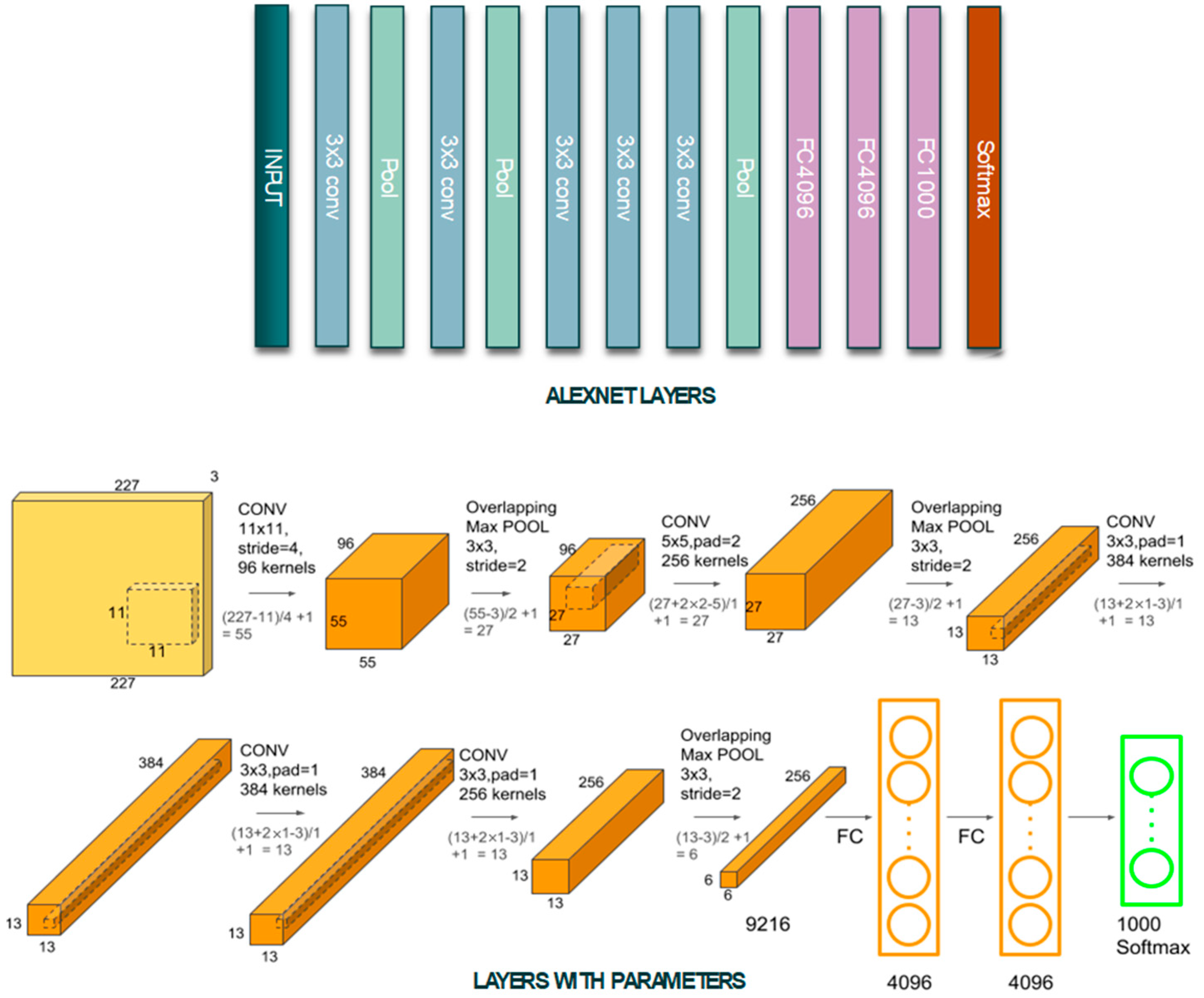

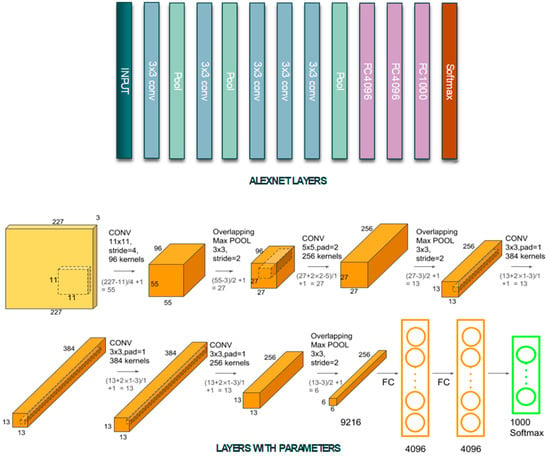

Using skeleton information [7] instead of raw depth information provides more accurate spatial information about the motion. Images of this 3D template were captured from various angles, and deep features were extracted from them using AlexNet [9], a deep convolutional neural network trained on a large-scale dataset (ImageNet). The components of AlexNet are shown in Figure 2. In addition, HOG features were extracted from the same images to increase classification performance. In the first method, a Random Forest classifier was trained with both the HOG features and the deep features.

Figure 2.

Layers and architecture of the AlexNet.

In addition, the generated 3D templates are classified with an end-to-end 3D CNN model as a second approach. The results obtained from transfer learning and from the 3D CNN are compared with other studies in the literature. The proposed methods were tested on two widely used, publicly available datasets, and the experimental results are comparable to those of current state-of-the-art studies. The main contributions of the method are listed below:

- A novel 3D skeletal template is constructed to capture both high- and low-level representations of the frames and to obtain discriminative features. Most studies in the literature combine 2D skeletal templates as a sequence for classification; in our approach, we stack skeletons to create a 3D spatio-temporal template. Rather than using a sequential model, an end-to-end 3D CNN model is applied to this template, and high classification accuracies are achieved.

- Because of the inherent complexity of the problem, activity recognition is a challenging task, and traditional hand-crafted features have limited ability to discriminate between these complex action patterns. Two different models are therefore proposed: the first combines features learned by a pre-trained deep network with HOG features, and the second is an end-to-end 3D CNN.

In summary, a 3D skeletal volume is constructed to capture temporal information, and two different pipelines are implemented on top of it. The rest of this manuscript is organized as follows. Section 2 reviews the related literature. Section 3 explains the proposed methods: two models that utilize this 3D representation, the first combining transfer learning with classical HOG features and the second an end-to-end 3D CNN. Section 4 presents the experimental results obtained on the MSRAction3D and UTKinect-Action3D datasets. MSRAction3D, which is widely used to evaluate action recognition studies, contains 20 different activities, and UTKinect-Action3D contains ten. The last section provides conclusions and future research directions.

2. Related Work

There are many studies on activity recognition in the literature, most of which are based on color, intensity, and motion attributes obtained from video images. The use of depth information in motion recognition is a relatively new field. While some studies use raw depth information, others use joint skeletons obtained from depth information [5,10,11], and some studies use features obtained from RGB and depth information together [12].

Xia et al. [13] mapped joint positions into a global coordinate system to achieve view invariance. Throughout a movement, the positions of the joints are recorded, and a histogram of their positions in the global coordinate system is built. These histograms are used as feature vectors to predict activities. Oreifej and Liu [14] proposed a histogram-based descriptor for depth sequences. After a 4D volume is created from the depth sequence, surface normals are computed at every voxel of the 4D volume using finite differences of the depth values, and a histogram of oriented 4D surface normals (HON4D) is built.

Further projectors are derived from the vertex (corner) vectors of a polytope in 4D space, making the 4D normal histograms more discriminative. Finally, a Random Forest classifier is trained for classification. Raptis et al. [15] used a skeletal model to recognize dance movements, applying PCA to joint features and dynamic time warping (DTW) to recognize the movements. Another method that utilizes depth and joint skeletons was proposed by Ji et al. [16], who introduced a machine learning approach named the Contrastive Feature Distribution Model (CFDM) for prediction. Ji et al. [17] proposed a joint skeleton-based action recognition method in which, after skeletal joint features are extracted, a contrast-mining approach is used to recognize basic poses. Keçeli et al. [18] combined 2D and 3D features to predict single and dyadic human activities.

Deep learning is also widely used in action recognition, although it remains a relatively new approach in this field. Ji et al. [6] applied a 3D CNN model for activity classification from RGB videos: multiple channels are first generated from the input video frames, and 3D convolution is then applied to successive frames to encode the action. Le et al. [19] used independent subspace analysis (ISA) with a convolutional neural network for motion recognition, where ISA was used to learn high-level, abstract temporal representations with the CNN model. Wu and Shao [20] used deep neural networks and skeletal features. Baccouche et al. [21] used deep learning to recognize action sequences; their method takes motion videos as 3D input data, which are classified with a 3D CNN model. Wang et al. [22] used a CNN operating on depth image sequences: the 3D depth arrays are converted into weighted hierarchical depth motion map (WHDMM) color images, and transfer learning is then applied for classification. Valle and Starostenko [23] used a 2D CNN to recognize walking and running movements. Tran et al. [24] used a 3D CNN on RGB video sequences to learn the temporal properties of actions. Zhao [25] proposed a method that combines a CNN with an LSTM, and Shen et al. [26] used skeleton graphs with an LSTM classifier.

3. Method

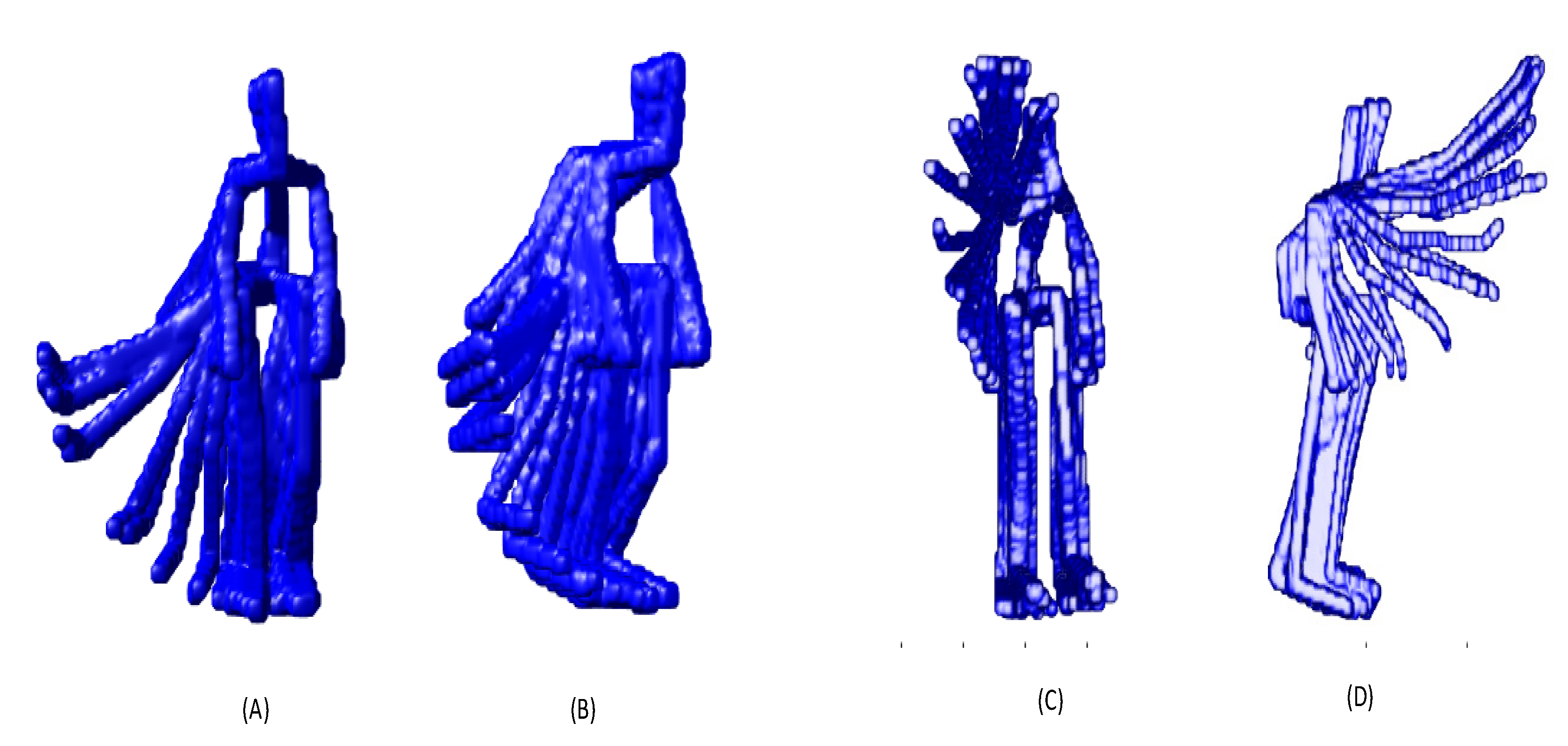

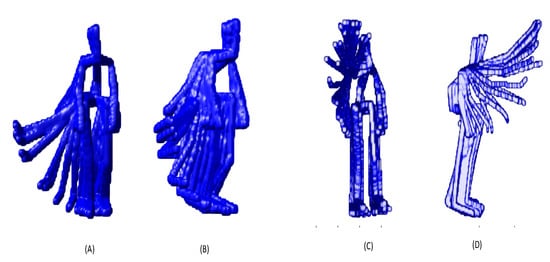

The proposed methods utilize a 3D skeletal motion template constructed from the joint skeletons obtained from depth information. Each joint skeleton is placed in a three-dimensional coordinate space centered on the hip joint. The three-dimensional templates shown in Figure 3 were obtained by superimposing the hip-centered skeletons over the entire motion sequence. The resulting volume was smoothed by softening (blurring) the joint skeletons, and the isosurface of the shape formed by the overlapping skeletal joints was then extracted to obtain the 3D template. The joint skeletons are obtained using the method proposed by Shotton et al. [7].
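The template construction described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the skeleton sequence is assumed to be a `(T, J, 3)` array, and the `bones` list of joint-index pairs, the hip index, the grid size, the cube extent, and the Gaussian smoothing width are all hypothetical choices. Bones are rasterized by sampling points along each segment. The isosurface of the smoothed volume could then be extracted with a marching-cubes routine such as scikit-image's `skimage.measure.marching_cubes`, which is omitted here.

```python
import numpy as np

def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth3d(vol: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur applied along each of the three axes."""
    k = gaussian_kernel1d(sigma)
    out = vol.astype(float)
    for axis in range(3):
        out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), axis, out)
    return out

def skeleton_volume(frames, bones, hip=0, grid=64, extent=1.0, sigma=1.0, samples=20):
    """Superimpose the hip-centred skeletons of all frames in one voxel grid.

    frames: (T, J, 3) joint coordinates (hypothetical layout).
    bones:  list of (i, j) joint index pairs to rasterize (hypothetical).
    extent: half-width of the cube, in the units of `frames`, mapped onto the grid.
    """
    frames = np.asarray(frames, dtype=float)
    vol = np.zeros((grid, grid, grid))
    t = np.linspace(0.0, 1.0, samples)[:, None]
    for joints in frames:
        centred = joints - joints[hip]                    # hip joint at the origin
        for i, j in bones:
            pts = centred[i] + t * (centred[j] - centred[i])   # points along the bone
            idx = np.round((pts + extent) / (2 * extent) * (grid - 1)).astype(int)
            idx = idx[np.all((idx >= 0) & (idx < grid), axis=1)]  # drop out-of-cube points
            vol[idx[:, 0], idx[:, 1], idx[:, 2]] = 1.0
    return smooth3d(vol, sigma)                           # softened occupancy volume

# Toy example: 3 joints (hip, neck, hand), 2 bones, 2 frames (hypothetical data).
frames = np.array([
    [[0.0, 0.0, 0.0], [0.0, 0.0, 0.5], [0.4, 0.0, 0.5]],
    [[0.1, 0.0, 0.0], [0.1, 0.0, 0.5], [0.1, 0.3, 0.8]],
])
vol = skeleton_volume(frames, bones=[(0, 1), (1, 2)])
```

Centering every frame on the hip before accumulation is what makes the template insensitive to where the subject stands in the scene.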

Figure 3.

3D Skeletal volume templates. (A–D) are different views of hand wave action template.

The method proposed by Shotton et al. [7] acquires a 3D joint skeleton from the depth images of RGB-D sensors. It is based on recognizing different human body parts; after the body parts are recognized, the body joints are estimated from them. The human body is represented as 31 parts in this method. These parts are the head, neck, trunk, waist, and the left and right foot, hand, wrist, elbow, shoulder, knee, and calf, together with their sub-components. A Random Forest model for body part recognition is trained using depth-comparison features computed at pixel offsets in the depth images, and 300,000 depth images were used during training. After each pixel is assigned to a body part, the joint locations are estimated with a mean-shift algorithm using the pixels' 2D coordinates and depth values.
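The mean-shift step can be illustrated with a simplified, weighted mean shift over 3D points. This is a sketch under assumptions, not Shotton et al.'s implementation: the per-pixel body-part probabilities are used directly as weights, the Gaussian bandwidth is a hypothetical value in meters, the input points are synthetic, and only one mode reached from the highest-probability point is returned, whereas a full implementation would search for several modes per body part.

```python
import numpy as np

def mean_shift_mode(points, weights, start, bandwidth=0.06, iters=100, tol=1e-6):
    """Follow weighted mean-shift iterations from `start` to a density mode."""
    points = np.asarray(points, dtype=float)
    weights = np.asarray(weights, dtype=float)
    x = np.asarray(start, dtype=float)
    for _ in range(iters):
        d2 = np.sum((points - x) ** 2, axis=1)
        k = weights * np.exp(-d2 / (2.0 * bandwidth ** 2))   # Gaussian kernel weights
        new_x = (k[:, None] * points).sum(axis=0) / k.sum()  # weighted mean of neighbours
        if np.linalg.norm(new_x - x) < tol:                  # converged to a mode
            return new_x
        x = new_x
    return x

# Hypothetical data: pixels back-projected to 3D (x, y, depth) together with
# the probability that each one belongs to the "right hand" body part.
rng = np.random.default_rng(0)
hand = rng.normal([0.30, 0.10, 1.50], 0.01, size=(200, 3))
clutter = rng.uniform([-0.5, -0.5, 1.0], [0.5, 0.5, 2.0], size=(100, 3))
points = np.vstack([hand, clutter])
probs = np.concatenate([np.full(200, 0.9), np.full(100, 0.05)])
joint = mean_shift_mode(points, probs, start=points[np.argmax(probs)])
```

Low-probability pixels far from the cluster receive negligible kernel weight, so the estimated joint settles at the center of the confidently labeled pixels.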

After the 3D template was constructed, images from different angles were obtained by rotating the template for use in the first approach. Rotating the template is equivalent to viewing it from different viewpoints as a virtual camera moves along two angular axes, so the viewpoint is specified by an azimuth and an elevation angle. The first parameter, azimuth (A), is the horizontal rotation about the z-axis, measured in degrees from the negative y-axis; positive values rotate the viewpoint counterclockwise. The second parameter, elevation (E), is the vertical angle of the viewpoint: positive values move the viewpoint above the volume, and negative values move it below. The origin of the coordinate system is the center of the generated 3D template, and rotation is performed with the given azimuth and elevation angle pairs. In the 3D CNN-based approach, the template volumes are fed directly to the model for classification. The next two subsections describe the first approach in detail, and the last subsection explains the architecture of the 3D CNN model used in the second approach.
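The azimuth/elevation convention described above is the one used by MATLAB's `view(az, el)` function. The sketch below assumes that convention, treats the volume's array axes as (x, y, z), and produces an orthographic binary silhouette of the voxels above a threshold rather than a shaded rendering of the isosurface; the image size and threshold are hypothetical parameters.

```python
import numpy as np

def view_basis(azimuth_deg, elevation_deg):
    """Image axes for a viewpoint given by azimuth/elevation.

    Azimuth rotates about the z-axis, measured from the negative y-axis,
    counterclockwise; positive elevation places the viewer above the x-y plane.
    """
    a, e = np.radians(azimuth_deg), np.radians(elevation_deg)
    # Unit vector from the template centre towards the virtual camera.
    eye = np.array([np.sin(a) * np.cos(e), -np.cos(a) * np.cos(e), np.sin(e)])
    right = np.array([np.cos(a), np.sin(a), 0.0])   # horizontal image axis
    up = np.cross(eye, right)                       # vertical image axis
    return right, up, eye

def project_volume(vol, azimuth_deg, elevation_deg, size=128, level=0.5):
    """Orthographic silhouette of the voxels above `level` seen from a viewpoint."""
    idx = np.argwhere(vol > level).astype(float)
    pts = idx - (np.array(vol.shape) - 1) / 2.0     # template centre at the origin
    right, up, _ = view_basis(azimuth_deg, elevation_deg)
    u, v = pts @ right, pts @ up
    r = np.linalg.norm(np.array(vol.shape)) / 2.0   # radius enclosing any rotation
    cols = np.clip(np.round((u + r) / (2 * r) * (size - 1)).astype(int), 0, size - 1)
    rows = np.clip(np.round((r - v) / (2 * r) * (size - 1)).astype(int), 0, size - 1)
    img = np.zeros((size, size), dtype=np.uint8)
    img[rows, cols] = 255
    return img

# Hypothetical example: a rod of voxels along the x-axis.
rod = np.zeros((32, 32, 32))
rod[4:28, 16, 16] = 1.0
front = project_volume(rod, 0, 0)    # viewer on the -y side: the rod is a line
side = project_volume(rod, 90, 0)    # viewer on the +x side: the rod is a dot
```

Normalizing by the radius of the enclosing sphere keeps the scale identical across viewpoints, so images taken at different angles remain comparable as CNN inputs.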

3.1. Feature Extraction

CNNs trained on large-scale image databases may provide better results than hand-crafted features [8], and using a deep neural network pre-trained on a large dataset as a feature extractor can yield very effective results [27,28,29]. CNNs trained on large-scale datasets are known to capture spatial structures in input images. In this study, AlexNet [9], a CNN trained on ImageNet, was used, and the images obtained from the three-dimensional volume were given to it as input.

AlexNet has a fixed-size input layer, so all input images are resized to 227 × 227 pixels before the multiple images of the 3D volume are passed to the model. Front and side views of the 3D volume from different angles were used for feature extraction, since combining features from different views yields a more discriminative classifier. The images of a three-dimensional template taken from different angles for feature extraction are shown in Figure 3; they were obtained using the elevation and rotation angle values (180.0) (−45.0) (−90.0) (−135.0), respectively. For every input image, the activations of AlexNet's fc7 and fc8 layers were used as features, since the representations learned at different layers of a CNN correspond to different levels of abstraction [29].
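For a sense of scale, the deep feature dimension can be worked out from AlexNet's layer widths (4096 units in fc7 and 1000 in fc8, one per ImageNet class). Assuming, since the text does not say, that the fc7 and fc8 activations of the four views are concatenated into a single vector per template:

$$d_{\text{deep}} = N_{\text{views}} \times \left(d_{\text{fc7}} + d_{\text{fc8}}\right) = 4 \times (4096 + 1000) = 20{,}384$$

If the views were instead averaged or treated as separate samples, the dimension would remain 5096 per feature vector.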

After the deep features were extracted, HOG features were extracted from the same images to further increase classification performance. The histogram of oriented gradients (HOG) counts occurrences of gradient orientations in local regions of an image, where the gradient measures the rate of change of intensity in a given direction. To compute HOG features, the gradients $G_x$ and $G_y$ in the x and y directions are first obtained with derivative operators, as in edge detection. Next, the gradient orientation at each pixel is calculated by Equation (1):

$$\theta(x,y) = \tan^{-1}\left(\frac{G_y(x,y)}{G_x(x,y)}\right) \qquad (1)$$

In Equation (1), $G_x(x,y)$ is the gradient in the x-direction, $G_y(x,y)$ is the gradient in the y-direction, and $\theta(x,y)$ is the orientation at point (x, y). When the quadrant-aware arctangent is used, orientation values lie between 0 and 360 degrees. The results obtained using deep features, HOG features, and both feature groups together are shown in Tables 2 and 3. As these results show, although deep features perform better than HOG features, combining the two feature groups increases performance significantly.
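A minimal NumPy version of the HOG pipeline described above, for illustration only: it uses centered [-1, 0, 1] differences for the x and y gradients, computes the orientation of Equation (1) with the quadrant-aware `np.arctan2`, accumulates magnitude-weighted votes into hard-assigned orientation bins per cell, and L2-normalizes overlapping blocks of cells. The cell size, bin count, and block size are common defaults assumed here, not values reported in the paper; in practice a library routine such as `skimage.feature.hog` would normally be used.

```python
import numpy as np

def hog(image, cell=8, bins=9, block=2, signed=False, eps=1e-6):
    """Simplified HOG: gradients, per-cell orientation histograms, block normalization."""
    img = np.asarray(image, dtype=float)
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]          # centred difference in x
    gy[1:-1, :] = img[2:, :] - img[:-2, :]          # centred difference in y
    mag = np.hypot(gx, gy)                           # gradient magnitude
    span = 360.0 if signed else 180.0
    theta = np.degrees(np.arctan2(gy, gx)) % span    # orientation, Equation (1)
    bin_idx = np.minimum((theta / span * bins).astype(int), bins - 1)

    ny, nx = img.shape[0] // cell, img.shape[1] // cell
    hist = np.zeros((ny, nx, bins))
    for i in range(ny):
        for j in range(nx):
            b = bin_idx[i * cell:(i + 1) * cell, j * cell:(j + 1) * cell].ravel()
            m = mag[i * cell:(i + 1) * cell, j * cell:(j + 1) * cell].ravel()
            hist[i, j] = np.bincount(b, weights=m, minlength=bins)  # magnitude votes

    feats = []
    for i in range(ny - block + 1):                  # overlapping blocks of cells
        for j in range(nx - block + 1):
            v = hist[i:i + block, j:j + block].ravel()
            feats.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))   # L2 normalization
    return np.concatenate(feats)

# Hypothetical input: a vertical step edge, whose gradients all point along +x.
edge = np.zeros((64, 64))
edge[:, 32:] = 1.0
desc = hog(edge)
```

For a 64 × 64 image this yields 7 × 7 blocks of 2 × 2 cells with 9 bins each, i.e. 1764 values; all of the step edge's energy falls into the 0° bin.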

Table 1.

Training Parameters.

3.2. Random Forest Training

The Random Forest algorithm is a well-known and widely used ensemble model [30] based on multiple decision trees. Each decision tree is trained on a subset of the dataset drawn by bootstrap sampling (bagging). Bagging reduces variance and thus yields a more generalizable model, and combining it with random feature subsets further increases the model's accuracy. Because each tree is trained on a different randomly sampled sub-dataset, roughly one third of the data is left out of each tree's bootstrap sample during training, and these out-of-bag samples serve as a validation set that supports better generalization. This training mechanism makes Random Forests effective and robust against overfitting. Our method uses the Random Forest algorithm because it produces better results on unbalanced datasets than most traditional classification methods. Forest sizes between 100 and 200 trees were tested, and no performance improvement was observed beyond 150 trees.
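The one-third validation fraction mentioned above follows from bootstrap sampling: with n draws with replacement, a given sample is missed with probability $(1 - 1/n)^n$, which tends to $e^{-1} \approx 0.368$. The short simulation below checks this numerically; the sample count and seed are arbitrary. In a library such as scikit-learn, a comparable classifier would be configured along the lines of `RandomForestClassifier(n_estimators=150, oob_score=True)`, which is an assumption about tooling rather than a detail reported by the authors.

```python
import numpy as np

def oob_fraction(n_samples, n_trees=150, seed=0):
    """Average fraction of samples left out of each tree's bootstrap sample."""
    rng = np.random.default_rng(seed)
    fractions = []
    for _ in range(n_trees):
        drawn = rng.integers(0, n_samples, size=n_samples)  # sampling with replacement
        in_bag = np.zeros(n_samples, dtype=bool)
        in_bag[drawn] = True
        fractions.append(1.0 - in_bag.mean())               # out-of-bag share
    return float(np.mean(fractions))

estimate = oob_fraction(1000)
expected = (1 - 1 / 1000) ** 1000   # about 0.368
```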

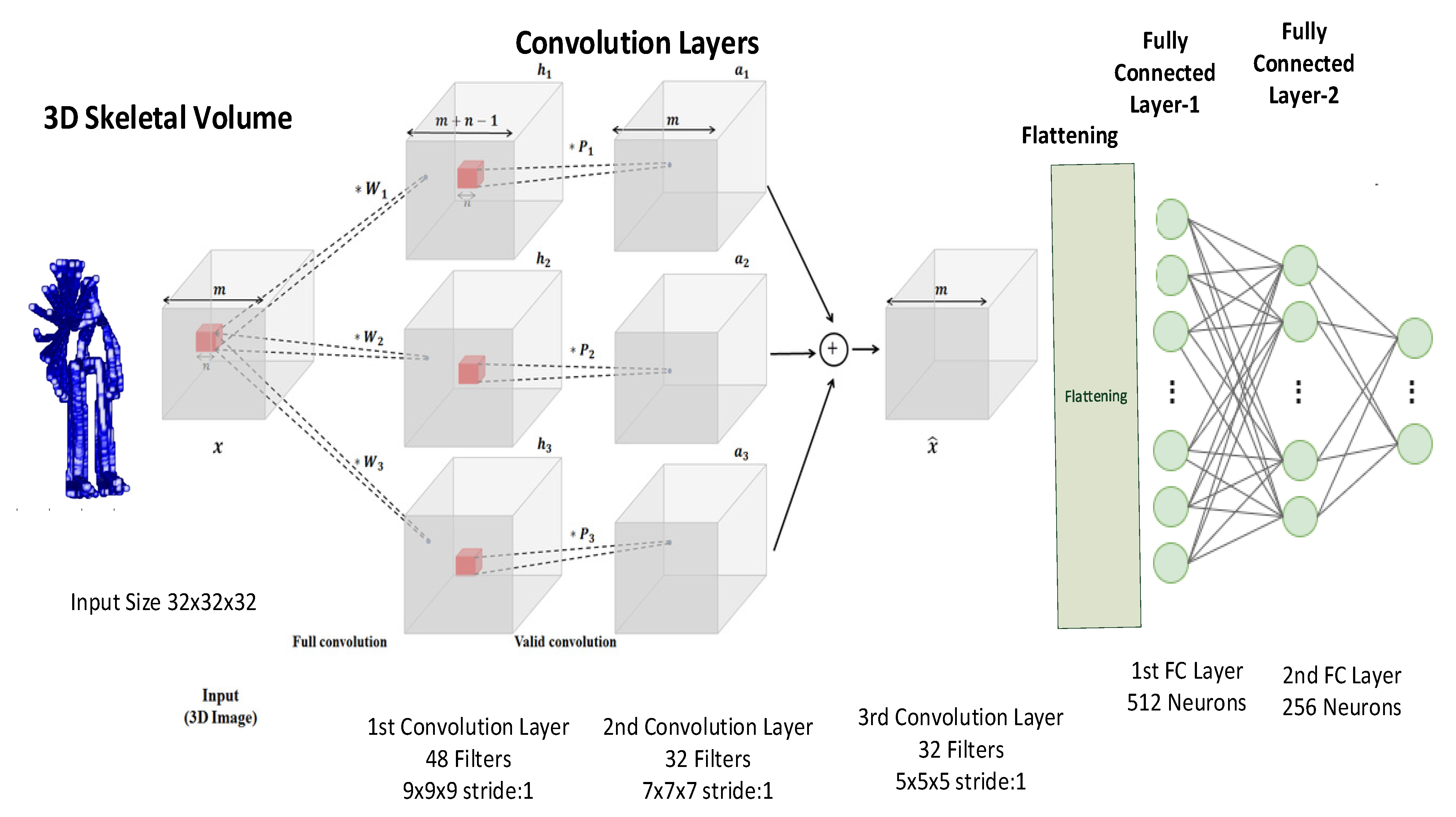

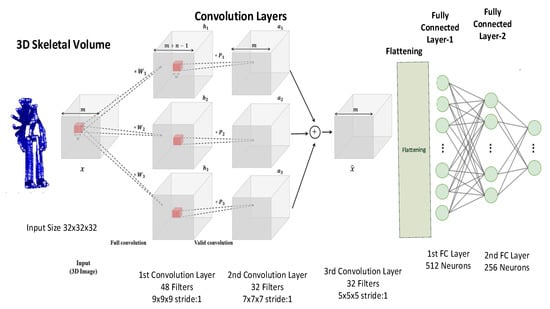

3.3. 3D CNN

An end-to-end 3D CNN model is also proposed as an alternative for experiments with the 3D skeletal templates. A five-layer 3D CNN was built, containing three 3D convolution layers and two dense layers; its general structure is presented in Figure 4. Three-dimensional CNNs are based on the 3D convolution operation, in which a 3D kernel (filter) is used. The activation map of a CNN layer was obtained with Equation (2):

$$v_{ij}^{xyz} = \tanh\left(b_{ij} + \sum_{m}\sum_{p=0}^{P-1}\sum_{q=0}^{Q-1}\sum_{r=0}^{R-1} w_{ijm}^{pqr}\, v_{(i-1)m}^{(x+p)(y+q)(z+r)}\right) \qquad (2)$$

In this equation, $v_{ij}^{xyz}$ is the value of the j-th activation map of layer i at position (x, y, z), $v_{(i-1)m}$ is the m-th feature map of the previous layer of the network, $w_{ijm}^{pqr}$ is the kernel weight at offset (p, q, r) connecting that map to the current one, tanh is the hyperbolic tangent function that introduces nonlinearity into the activations, $b_{ij}$ is the bias value, and P, Q, and R denote the spatial dimensions of the kernel. The network is thus a set of weighted multiplication and addition operations, and its kernels (filters) are used in the convolution operations to build features at different levels of abstraction.
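Equation (2) can be evaluated directly with nested loops: each output voxel is the tanh of a bias plus the sum, over all previous-layer maps and all P × Q × R kernel offsets, of kernel weights times the corresponding input values. The following NumPy sketch computes one output feature map that way, using a "valid" (no padding), stride-1 cross-correlation as most deep learning frameworks do; the `(M, D, H, W)` array layout and the random inputs are assumptions made for the example. It is meant to make the indices concrete, not to be efficient.

```python
import numpy as np

def conv3d_tanh(prev_maps, kernels, bias):
    """One output activation map of a 3D convolution layer with tanh.

    prev_maps: (M, D, H, W) feature maps of the previous layer.
    kernels:   (M, P, Q, R) weights linking each previous map to this map.
    bias:      scalar bias for this map.
    """
    M, D, H, W = prev_maps.shape
    _, P, Q, R = kernels.shape
    out = np.empty((D - P + 1, H - Q + 1, W - R + 1))
    for x in range(out.shape[0]):
        for y in range(out.shape[1]):
            for z in range(out.shape[2]):
                patch = prev_maps[:, x:x + P, y:y + Q, z:z + R]
                out[x, y, z] = np.sum(patch * kernels)   # sum over m, p, q, r
    return np.tanh(bias + out)

# Hypothetical example: two 8x8x8 input maps and a 3x3x3 kernel per input map.
rng = np.random.default_rng(0)
maps = rng.standard_normal((2, 8, 8, 8))
w = rng.standard_normal((2, 3, 3, 3)) * 0.1
act = conv3d_tanh(maps, w, bias=0.1)   # shape (6, 6, 6)
```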

Figure 4.

Structure of the 3D CNN. * represents multiplication.

The input volume obtained from the concatenation of skeleton sequences is processed by the first pair of convolution and pooling layers, and the second pair of convolution and pooling layers then repeats the same operation. The first convolution layer contains 48 filters, and the second and third contain 32 filters each; their kernel sizes are 9 × 9 × 9, 7 × 7 × 7, and 5 × 5 × 5, respectively. The outputs of the convolution layers are flattened and passed to the fully connected layers, which have 512 and 256 units. The training parameters of the proposed 3D CNN model are given in Table 1.
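To make the layer description concrete, the helper below tallies output shapes and parameter counts. Several details are not given in the text and are assumptions here, labeled in the code: a 64 × 64 × 64 single-channel input, "valid" stride-1 convolutions, 2 × 2 × 2 max pooling after the first two convolutions only, and a final softmax layer with one unit per class (20 for MSRAction3D).

```python
def conv_out(size, kernel):
    """Spatial size after a 'valid', stride-1 convolution."""
    return size - kernel + 1

def cnn3d_summary(input_size=64, in_channels=1, num_classes=20, pool=2):
    """(name, output shape, parameter count) for each layer of the 3D CNN.

    Assumptions not stated in the text: cubic input of side `input_size`,
    'valid' stride-1 convolutions, max pooling only after conv1 and conv2,
    and an output layer with `num_classes` units.
    """
    convs = [(48, 9), (32, 7), (32, 5)]   # (filters, kernel side) from the text
    size, channels, layers = input_size, in_channels, []
    for k, (filters, kernel) in enumerate(convs):
        size = conv_out(size, kernel)
        params = filters * (kernel ** 3 * channels + 1)   # weights + one bias per filter
        layers.append((f"conv{k + 1}", (filters, size, size, size), params))
        channels = filters
        if k < 2:                                         # assumed pooling placement
            size //= pool
    features = channels * size ** 3                       # flattened conv output
    for name, units in [("fc1", 512), ("fc2", 256), ("out", num_classes)]:
        layers.append((name, (units,), units * (features + 1)))
        features = units
    return layers

layers = cnn3d_summary()
params = {name: p for name, _, p in layers}
```

Under these assumptions the three convolution layers hold about 0.69 million parameters, while the first fully connected layer alone holds about 5.6 million of roughly 6.4 million in total, which illustrates how quickly the parameter count, and hence training time, grows with the input size.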

4. Experiments

The proposed methods were tested on the MSRAction-3D and UTKinect-Action3D datasets. Different evaluation protocols have been used in similar studies on these datasets. For MSRAction-3D, samples of half of the actors were used for training and samples of the other half for testing. For UTKinect-Action3D, the leave-one-subject-out protocol was used: the actions of one actor are used for testing, while the actions of the other actors are used for training. The results obtained on these datasets are shown in Tables 2 and 3, where classification accuracy is denoted CA.
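Both evaluation protocols can be expressed as index splits over per-sample subject IDs. In this pure-Python sketch the sample-to-subject list is hypothetical, and training on the odd-numbered subjects in the cross-subject split is an assumption (a common convention for MSRAction3D); the text only states that half of the actors are used for training. For leave-one-subject-out, the reported accuracy would be the mean of the per-fold accuracies.

```python
def cross_subject_split(subjects, train_subjects):
    """Split sample indices by subject: train on `train_subjects`, test on the rest."""
    train = [i for i, s in enumerate(subjects) if s in train_subjects]
    test = [i for i, s in enumerate(subjects) if s not in train_subjects]
    return train, test

def leave_one_subject_out(subjects):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for held_out in sorted(set(subjects)):
        train = [i for i, s in enumerate(subjects) if s != held_out]
        test = [i for i, s in enumerate(subjects) if s == held_out]
        yield held_out, train, test

def mean_accuracy(fold_accuracies):
    """Classification accuracy (CA) averaged over folds."""
    return sum(fold_accuracies) / len(fold_accuracies)

# Hypothetical labels: 10 subjects with 2 samples each.
subjects = [s for s in range(1, 11) for _ in range(2)]
train_idx, test_idx = cross_subject_split(subjects, train_subjects={1, 3, 5, 7, 9})
folds = list(leave_one_subject_out(subjects))
```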

Table 2.

Results Obtained from MSRAction3D with Different Features.

Table 3.

Results Obtained from UTKinect-Action3D Dataset with Different Features.

Table 4 compares the results on the MSRAction-3D dataset with those of other methods in the literature, including both foundational and recent studies; the proposed method is comparable with these related studies. This dataset contains 20 actions, including high arm wave, horizontal arm wave, two hand wave, hand clap, hand catch, bend, forward kick, side kick, tennis swing, and golf swing. The actions are performed by subjects facing the camera and positioned in the center of the scene. The main challenges of this dataset are the high similarity between groups of action classes and the variation in the subjects' speed during an activity.

Table 4.

Comparison of the Results Obtained from MSRAction3D Dataset with the Other Studies in the Literature.

Table 5 compares the results obtained on UTKinect-Action3D, where the performance of our method is similar to that of other methods in the literature. The action types in this dataset are walk, sit down, stand up, pick up, carry, throw, push, pull, wave, and clap hands, which are similar to those in MSRAction3D. The challenges of this dataset are that actions are recorded from different angles and that occlusions arise from object interaction or from body parts lying outside the field of view. To assess the robustness of our method, we tested it on this dataset because it contains actions captured from different viewpoints, and the method achieved good results here as well. Compared with the other studies in Table 5, our approach reached an average accuracy of 93%, slightly higher than that of the closest study.

Table 5.

Comparison of the Results Obtained From UTKinect-Action3D Dataset with the Other Studies in the Literature.

The 3D CNN achieved lower classification accuracy than the transfer learning-based pipeline, in which a Random Forest classifier is trained on deep and HOG features. In most cases, deep features are superior to traditional ones [31,32], and by using AlexNet we can transfer the knowledge obtained from a large-scale dataset (ImageNet) to our problem.

On both datasets, our method outperformed most of the methods in the literature. The exception is the method proposed in [36] on MSRAction3D, which uses both raw depth information and skeletal information, whereas the other related studies commonly use only skeletal information. When only skeletal information is used, its accuracy drops to 83.5%.

5. Conclusions

This paper proposes action recognition methods that utilize 3D volumetric skeleton templates. First, a volume is generated from the skeleton sequence, and images of this volume are captured from different angles. Second, deep features from a pre-trained CNN and HOG features are extracted from these images. Finally, a Random Forest classifier is trained with these features to recognize actions. In addition, a 3D convolutional neural network that takes the volumetric templates as input is proposed.

The proposed methods have been evaluated on the publicly available MSRAction3D and UTKinect-Action3D datasets, and experiments on these frequently used datasets show the efficiency and effectiveness of the methods. The experiments also showed that extracting features with a pre-trained CNN is more beneficial than training a 3D CNN. Although the 3D CNN classifier produced promising results, they are not at the desired level, mainly because of the limited amount of input data: a CNN trained on a large amount of data yields more robust features than a 3D CNN trained on limited data. In most cases, training a CNN from scratch has not provided promising results when training data are limited, and for such problems, using a network pre-trained on a large dataset as a feature extractor may be a better approach.

The main contribution of this study is the creation of a 3D skeletal action template and the mapping of different views of this template to 2D deep features using a pre-trained CNN. The deep features are combined with HOG features to construct a more robust feature set. The experiments showed that the proposed methods provide promising results on both the UTKinect-Action3D and MSRAction3D datasets and are comparable with state-of-the-art methods.

One of the main limitations of the proposed method is that it does not handle dyadic and crowd actions. For such actions, different views of the same volume can have high intra-class variability, which can limit the classification accuracy of the proposed method. Another limitation is the high computational cost of the 3D CNN model: the large number of parameters in 3D CNN models leads to long training times. The input size may be reduced to cope with this problem, although this may also reduce the model's accuracy.

In future work, we aim to combine features extracted from raw depth images and joint skeletons. More snapshots can be acquired at smaller azimuth and elevation intervals, and feature reduction methods for the resulting high-dimensional combined features can also be examined. We also plan to implement a multi-stream deep network that simultaneously takes as input a cube of pre-trained features extracted from each frame and the 3D video sequence.

Author Contributions

Conceptualization, A.S.K.; methodology, A.S.K.; software, A.S.K. and A.K.; validation, A.S.K., A.K. and A.B.C.; formal analysis, A.B.C.; investigation, A.S.K. and A.K.; resources, A.B.C.; data curation, A.K. and A.B.C.; writing—original draft preparation, A.S.K.; writing—review and editing, A.S.K., A.K.; visualization, A.S.K.; supervision, A.B.C.; project administration, A.S.K.; funding acquisition, none. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bobick, A.F.; Davis, J.W. The recognition of human movement using temporal templates. IEEE Trans. Pattern Anal. 2001, 23, 257–267.

- Schuldt, C.; Laptev, I.; Caputo, B. Recognising human actions: A local SVM approach. In Proceedings of the 17th International Conference on Pattern Recognition, Cambridge, UK, 23–26 August 2004; pp. 32–36.

- Yuan, X.; Yang, X. A robust human action recognition system using single camera. In Proceedings of the Computational Intelligence and Software Engineering (CiSE), Wuhan, China, 11–13 December 2009; pp. 1–4.

- Ghamdi, M.A.; Zhang, L.; Gotoh, Y. Spatio-temporal SIFT and its application to human action classification. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 301–310.

- Noguchi, A.; Yanai, K. A surf-based spatio-temporal feature for feature-fusion-based action recognition. In Proceedings of the European Conference on Computer Vision, Crete, Greece, 5–11 September 2010; pp. 153–167.

- Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. 2013, 35, 221–231.

- Shotton, J.; Sharp, T.; Kipman, A.; Fitzgibbon, A.; Finocchio, M.; Blake, A.; Cook, M.; Moore, R. Real-time human pose recognition in parts from single depth images. Commun. ACM 2013, 56, 116–124.

- Yang, F.; Wu, Y.; Sakti, S.; Nakamura, S. Make skeleton-based action recognition model smaller, faster and better. In Proceedings of the ACM Multimedia Asia, Beijing, China, 16–18 December 2019; pp. 1–6.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- Yang, X.; Tian, Y.L. Eigenjoints-based action recognition using naive-bayes-nearest-neighbor. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 14–19.

- Yang, X.; Zhang, C.; Tian, Y. Recognising actions using depth motion maps-based histograms of oriented gradients. In Proceedings of the 20th ACM International Conference on Multimedia, Nara, Japan, 29 October–2 November 2012; pp. 1057–1060.

- Popa, M.; Koc, A.K.; Rothkrantz, L.J.; Shan, C.; Wiggers, P. Kinect sensing of shopping related actions. In Proceedings of the International Joint Conference on Ambient Intelligence, Amsterdam, The Netherlands, 16–18 November 2011; pp. 91–100.

- Xia, L.; Chen, C.-C.; Aggarwal, J. View invariant human action recognition using histograms of 3d joints. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 20–27.

- Oreifej, O.; Liu, Z. Hon4d: Histogram of oriented 4d normals for activity recognition from depth sequences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 716–723.

- Raptis, M.; Kirovski, D.; Hoppe, H. Real-time classification of dance gestures from skeleton animation. In Proceedings of the 2011 ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Vancouver, BC, Canada, 5–7 August 2011; pp. 147–156.

- Ji, Y.; Cheng, H.; Zheng, Y.; Li, H. Learning contrastive feature distribution model for interaction recognition. J. Vis. Commun. Image R 2015, 33, 340–349.

- Ji, Y.; Ye, G.; Cheng, H. Interactive body part contrast mining for human interaction recognition. In Proceedings of the Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–6.

- Keçeli, A.S.; Kaya, A.; Can, A.B. Combining 2D and 3D deep models for action recognition with depth information. Signal Image Video Proc. 2018, 12, 1197–1205.

- Le, Q.V.; Zou, W.Y.; Yeung, S.Y.; Ng, A.Y. Learning hierarchical invariant spatio-temporal features for action recognition with independent subspace analysis. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, 20–25 June 2011; pp. 3361–3368.

- Wu, D.; Shao, L. Leveraging hierarchical parametric networks for skeletal joints based action segmentation and recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 724–731.

- Baccouche, M.; Mamalet, F.; Wolf, C.; Garcia, C.; Baskurt, A. Sequential deep learning for human action recognition. In Proceedings of the International Workshop on Human Behavior Understanding, Amsterdam, The Netherlands, 16 November 2011; pp. 29–39.

- Wang, P.; Li, W.; Gao, Z.; Zhang, J.; Tang, C.; Ogunbona, P.O. Action recognition from depth maps using deep convolutional neural networks. IEEE Trans. Hum. Mach. Syst. 2015, 46, 498–509.

- Valle, E.A.; Starostenko, O. Recognition of human walking/running actions based on neural network. In Proceedings of the Electrical Engineering, Computing Science and Automatic Control (CCE 2013), Mexico City, Mexico, 30 September–4 October 2013; pp. 239–244.

- Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning spatiotemporal features with 3d convolutional networks. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 4489–4497.

- Zhao, X. Research on Athlete Behavior Recognition Technology in Sports Teaching Video Based on Deep Neural Network. Comput. Intell. Neurosci. 2022, 2022, 7260894.

- Shen, X.; Ding, Y. Human skeleton representation for 3D action recognition based on complex network coding and LSTM. J. Vis. Commun. Image Represent. 2022, 82, 103386.

- Kaya, A.; Keceli, A.S.; Catal, C.; Yalic, H.Y.; Temucin, H.; Tekinerdogan, B. Analysis of transfer learning for deep neural network based plant classification models. Comput. Electr. Agric. 2019, 158, 20–29.

- Understanding AlexNet. Available online: https://www.learnopencv.com/understanding-alexnet/ (accessed on 30 October 2022).

- Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. In Proceedings of the ICML, Beijing, China, 21–26 June 2014; pp. 647–655.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Shie, C.K.; Chuang, C.H.; Chou, C.N.; Wu, M.H.; Chang, E.Y. Proceedings of the 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 711–714. Available online: https://ieeexplore.ieee.org/xpl/conhome/7302811/proceeding (accessed on 30 October 2022).

- Li, W.; Zhang, Z.; Liu, Z. Action recognition based on a bag of 3d points. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 9–14.

- Zanfir, M.; Leordeanu, M.; Sminchisescu, C. The moving pose: An efficient 3d kinematics descriptor for low-latency action recognition and detection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2752–2759.

- Luo, J.; Wang, W.; Qi, H. Spatio-temporal feature extraction and representation for RGB-D human action recognition. Pattern Recogn. Lett. 2014, 50, 139–148.

- Ohn-Bar, E.; Trivedi, M. Joint angles similarities and HOG2 for action recognition. In Proceeding of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Portland, OR, USA, 23–28 June 2013; pp. 465–470.

- Devanne, M.; Wannous, H.; Berretti, S.; Pala, P.; Daoudi, M.; Bimbo, A.D. Space-time pose representation for 3D human action recognition. In Proceedings of the International Conference on Image Analysis and Processing, Genova, Italy, 9–13 September 2013; pp. 456–464.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).