Abstract

Semantic segmentation of mobile LiDAR point clouds is an essential task in many fields, such as road network management, mapping, urban planning, and 3D High Definition (HD) city maps for autonomous vehicles. This study presents an approach to improve the evaluation metrics of deep-learning-based point cloud semantic segmentation using 3D geometric features and filter-based feature selection. Information gain (IG), Chi-square (Chi2), and ReliefF algorithms are used to select relevant features. RandLA-Net and Superpoint Graph (SPG), two recent and effective deep learning networks, were chosen for semantic segmentation. The geometric features were fed to RandLA-Net and SPG in addition to the 3D coordinates (x, y, z), without any change to the structure of the point clouds. Experiments were carried out on three challenging mobile LiDAR datasets: Toronto3D, SZTAKI-CityMLS, and Paris. The results demonstrate that selecting relevant features improved accuracy on all datasets. For RandLA-Net, a mean Intersection-over-Union (mIoU) of 70.1% was obtained with the features selected by Chi2 on Toronto3D, 84.1% with the features selected by IG on SZTAKI-CityMLS, and 55.2% with the features selected by IG and ReliefF on Paris. For SPG, 69.8% mIoU was obtained with Chi2 on Toronto3D, 77.5% with IG on SZTAKI-CityMLS, and 59.0% with IG and ReliefF on Paris.

1. Introduction

Three-dimensional (3D) point clouds are one of the most significant data sources for an accurate 3D representation of the world and have been deployed in various applications, such as urban geometry mapping, autonomous driving, virtual reality, cultural heritage, augmented reality, and more [1,2,3,4]. Point clouds are a useful data type for representing and processing objects in the environment because they carry 3D information such as geometry, color, intensity, and surface normals. Additionally, 3D point clouds provide more information about the geometric structure of objects than 2D images and allow sensor systems to perceive the environment better [5].

The development of artificial intelligence technologies has recently enabled the widespread use of autonomous systems [6]. Robust, real-time sensing of the environment with high spatial accuracy is an important requirement for autonomous driving [7], as is precise positioning. To fulfill these tasks, autonomous vehicles are equipped with many sensors, such as RGB cameras for detection, light detection and ranging (LiDAR) sensors, depth cameras, and radar. Because they provide direct spatial measurements and precise, fast 3D representations of the world, LiDAR sensors have become a critical component of perception systems. LiDAR sensors are widely used to reconstruct the shape and surface of objects [8]. Mobile LiDAR point clouds are acquired by laser scanners mounted on a moving vehicle. They provide useful data for many applications, such as road network management, architecture and urban planning, and 3D HD city maps for autonomous vehicles [9]. In particular, mobile LiDAR point clouds are expected to be the main data source for producing the detailed 3D HD maps used in autonomous driving and by decision makers. All of these tasks depend on assigning each point in the point cloud the correct semantic label and thus performing the 3D scene analysis correctly [10]. Evaluating the individual features of the points jointly and grouping the points into meaningful classes is called semantic segmentation [11].

Point cloud semantic segmentation has become an important research topic in the last decade. Traditionally, it has relied on machine learning algorithms and rule-based methods that define a set of discriminative rules to distinguish the points of each class. However, these methods are insufficient for accurate semantic segmentation of the complex, irregular, and large point clouds generated in dynamic environments [12]. Deep learning (DL) methods, which achieved successful results in the classification, detection, and segmentation of 2D images [13,14,15,16,17], have also been applied to point cloud semantic segmentation. Initially, projection and voxelization methods were developed to regularize the point cloud in a preprocessing step so that image-based techniques could be used. Voxelization incurs a significant computational cost for large-scale dense 3D data because memory and compute requirements grow cubically as the grid resolution or extent increases. In projection-based methods, 3D information may be lost when the point cloud is projected onto a two-dimensional plane. Point-based deep learning models have been developed in recent years to overcome these difficulties [9]. Although the irregular, unordered structure of point clouds makes them challenging for DL approaches to process directly, point-based methods have the advantages of avoiding information loss and requiring no such preprocessing step.

This study examines the effect of 3D geometric features and appropriate feature selection on the accuracy of deep-learning-based point cloud semantic segmentation. Two recent approaches, RandLA-Net [18] and SPG [19], are used for semantic segmentation. Each point in the point cloud is described by a feature vector of 3D geometric features, which provides the deep learning networks with additional informative input. Furthermore, the most effective features were identified with filter-based feature selection algorithms, which improved the semantic segmentation results. The study compares the performance of the filter-based information gain (IG), Chi-squared (Chi2) [20], and ReliefF [21] algorithms for point cloud semantic segmentation. Experiments were carried out on mobile LiDAR point clouds, which are an important data source for autonomous driving. The large-scale outdoor mobile laser scanning (MLS) benchmark datasets Toronto3D [22], SZTAKI-CityMLS [23], and Paris-CARLA-3D [24] were chosen.

2. Related Works

Deep learning approaches are used for point cloud semantic segmentation because traditional machine learning approaches cannot deliver sufficient performance on large and complex data. The first deep learning studies were usually based on 2D projection [25] or voxelization [26]. These were followed by DL approaches that consume the point cloud directly. Semantic segmentation approaches can therefore be grouped under three main headings: point-based, voxel-based, and projection-based.

2.1. Point Cloud Semantic Segmentation with Point-Based Methods

The first point-based methods are point-wise multi-layer perceptrons (MLPs), which learn the features of each point through shared MLPs. However, because the points are processed individually, the relationships between them are ignored. PointNet [27] was the first method to consume the raw point cloud directly. PointNet++ [28], an enhanced version of PointNet, uses a hierarchical neural network that applies PointNet recursively to nested partitions of the input point set. PointSIFT [29] is a PointNet-like algorithm inspired by the Scale-Invariant Feature Transform (SIFT) algorithm [30] used on 2D images. Engelmann et al. [31] proposed combining k-means clustering and KNN to define two neighborhoods, one in world space and one in feature space. PointWeb [32] models the relationships between points using an Adaptive Feature Adjustment (AFA) module. RandLA-Net [18] proposed a local feature aggregation module to capture complex local features and spatial relationships. ShellNet [33] is a permutation-invariant convolution that works directly on the point cloud.

To go beyond the fixed, shared MLPs of point-wise approaches, point convolution methods learn convolution weights that adapt to the features and spatial arrangement of neighboring points. Thomas et al. [34] presented Kernel Point Convolution (KPConv), which is inspired by image-based convolution but uses kernel points, instead of the kernel pixels of image convolution, to define where each kernel weight is applied. PointCNN [35] learns a transformation of the input points before convolving them, which prevents the loss of shape information and the sensitivity to point order that result from applying convolution directly. SpiderCNN [36] orders the neighboring points by distance and generates a family of polynomial functions with different weights for each neighbor. ConvPoint [37] introduces a continuous convolution that computes a weighted sum of the input features, with the weights obtained by simple MLP operations on the spatial features.

Graph-based methods represent point clouds as graphs and feed them to graph convolution networks that extract local shape information from neighbors. They treat points as the nodes of a graph and define the relationships between points as edges [38]. DGCNN [39] obtains local features from the nearest points and then applies EdgeConv operators, i.e., edge convolutions, to extract global shape features from these local features. 3D-GCN [40] proposes deformable kernels for shift- and scale-invariant features. The SPG method [19], similar to DGCNN, represents the point cloud as a superpoint graph and encodes the relationships between superpoints as edges. PointNet is used to embed the superpoints before the final prediction. DPAM [41] offers a deep learning architecture designed to dynamically sample and group points.

2.2. Point Cloud Semantic Segmentation with Voxel-Based Methods

Voxel-based approaches do not process points individually; instead, they group them into regular geometric cells, transforming the point cloud into structured data. This transformation causes a loss of information and resolution. The first algorithms performed semantic segmentation by applying 3D convolutions to the generated voxels [23]. VoxNet [42], one of the most popular methods, represents the point cloud as an occupancy grid that is fed to CNNs. CNN models are widely preferred for voxel-based semantic segmentation. In addition, some methods perform semantic segmentation with hand-crafted features calculated within voxels [43]. A disadvantage of voxel-based approaches is the memory wasted on empty voxels, and several approaches have been proposed in the literature to overcome this problem [44].

2.3. Point Cloud Semantic Segmentation with Projection-Based Methods

Projection-based methods project the point cloud onto a two-dimensional plane. As in voxel-based approaches, the point cloud is transformed from an irregular structure into a regular one. Inspired by the SqueezeNet [45] architecture, SqueezeSeg [46] generates a range image by applying a spherical projection to the point cloud for semantic segmentation. SqueezeSegV2 [7] introduced several improvements to SqueezeSeg. Another recent approach is the RangeNet architecture [47], which is inspired by Darknet53. SalsaNext [48], the enhanced version of SalsaNet [49], adds a dilated convolution stack with 1 × 1 and 3 × 3 kernels to the head of the network to improve context information. Additionally, some studies apply classical image segmentation algorithms after projecting the point cloud onto the image plane [50,51]. Multi-view PointNet [52] proposes aggregating 2D multi-view image features into 3D point clouds. There are also algorithms that perform object detection and labeling from a bird’s-eye view [53].

3. Materials and Methods

3.1. Datasets

3.1.1. Toronto-3D

The Toronto-3D dataset [22] is a large-scale urban outdoor mobile LiDAR dataset for semantic segmentation. The point cloud was captured by a vehicle-mounted Teledyne Optech Maverick MLS system. The dataset contains approximately 78.3 million points along a 1 km road segment, with a point density of approximately 1000 points/m². Each point has 10 attributes: 3D coordinates (x, y, z), color (red, green, blue), intensity, GPS time, scan angle rank, and label. The object classes are road, road marking, natural, building, utility line, pole, car, fence, and unclassified. The dataset is divided into four parts (L001, L002, L003, and L004), each covering 250 m. Following the original Toronto-3D paper, L001, L003, and L004 are used for training and L002 for testing.

3.1.2. SZTAKI-CityMLS

The SZTAKI-CityMLS point cloud dataset [23] is intended for evaluating 3D semantic point cloud segmentation algorithms in urban environments and is based on mobile laser scanning (MLS) measurements from a Riegl VMX-450 mobile mapping system. It contains around 327 million annotated points from various urban scenes, including main roads with both heavy and solid traffic, public squares, parks, sidewalk regions, various types of cars, trams and buses, several pedestrians, and diverse vegetation. Since there is no official split, the dataset, which consists of six parts, was divided into four parts for training, one for validation, and one for testing.

3.1.3. Paris-CARLA-3D

The Paris-CARLA-3D (PC3D) dataset [24] was created with a mobile mapping system comprising a LiDAR sensor (Velodyne HDL32) inclined at 45° to the horizon and a 360° poly-dioptric Ladybug5 camera. Paris-CARLA-3D consists of two datasets, a real one (Paris) and a synthetic one (CARLA), collected along a 550 m route in Paris and a 5.8 km route in CARLA, respectively. Only the real part, Paris, was used in this study. The Paris dataset consists of six point clouds (S0 to S5) of 10 million points each, for a total of 60 million points, labeled under 23 classes. Because the mobile mapping system also includes a camera, the point cloud is colored (RGB) after the necessary orientation processes. Although the real part does not cover a large area (it includes three streets in the center of Paris), it was captured in areas with a high number and variety of urban objects, pedestrians, and vehicles, allowing various analyses.

3.2. Filter-Based Feature Selection

Although additional features are used to compensate for missing information and to increase the discriminative power of algorithms, not all features have the same effect: some may be well suited to semantic segmentation, while others may be irrelevant. Feature selection is defined as the task of determining the minimum number of features that accurately represent the data [54]. Feature selection algorithms find compact and robust subsets of relevant, informative features in order to enhance accuracy and improve computational efficiency in terms of both time and memory. Feature selection methods can be grouped into filter-based, wrapper-based, and embedded methods. Because wrapper-based and embedded methods incorporate a classifier, they can achieve better selection performance than filter-based methods. However, this performance depends on the classifier used, and the optimal feature subset may change when the classifier changes. Filter-based methods, in contrast, are independent of the classifier. They are simple and efficient because they calculate the feature importance scores from the training data alone [10].

3.2.1. Information Gain

Information Gain (IG), an entropy-based feature selection algorithm widely used in machine learning, can be defined as the amount of information a feature provides about the class. IG measures the importance of each feature for classification and thus indicates which features are appropriate to use [55]. It is widely used in the literature, especially for text classification.

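$$IG(t) = -\sum_{i=1}^{m} P(c_i)\log P(c_i) + P(t)\sum_{i=1}^{m} P(c_i \mid t)\log P(c_i \mid t) + P(\bar{t})\sum_{i=1}^{m} P(c_i \mid \bar{t})\log P(c_i \mid \bar{t}) \tag{1}$$

where $C = \{c_1, \dots, c_m\}$ is the set of classes, $t$ denotes the presence of feature $t$ and $\bar{t}$ its absence, and m is the number of classes. The value $IG(t)$ expresses the importance of feature $t$; the features with the highest $IG(t)$ are selected.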
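The following minimal NumPy sketch computes IG as the reduction in class entropy, $H(C) - H(C \mid t)$, which for a binary present/absent feature is the same quantity as Equation (1). It assumes that the continuous geometric features have already been discretized (the binning step is not described in the text), and the function names `entropy` and `information_gain` are hypothetical. The logarithm base (base 2 here) scales all scores equally, so it does not change the feature ranking.

```python
import numpy as np


def entropy(labels: np.ndarray) -> float:
    """Shannon entropy (in bits) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def information_gain(feature: np.ndarray, labels: np.ndarray) -> float:
    """IG = H(C) - H(C | t) for a discretized (e.g. binned) feature."""
    h_c = entropy(labels)
    h_c_given_t = 0.0
    for value in np.unique(feature):
        mask = feature == value
        # weight each conditional entropy by P(t = value)
        h_c_given_t += mask.mean() * entropy(labels[mask])
    return float(h_c - h_c_given_t)


# Toy data: feature_a separates the two classes perfectly, feature_b carries no information.
labels = np.array([0, 0, 1, 1])
feature_a = np.array([0, 0, 1, 1])
feature_b = np.array([0, 1, 0, 1])
print(information_gain(feature_a, labels))  # 1.0
print(information_gain(feature_b, labels))  # 0.0
```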

3.2.2. Chi2 Algorithm

The Chi2 feature selection algorithm [20] is a filter-based feature selection technique based on the chi-squared ($\chi^2$) statistic. Its basic principle is to measure how much the observed frequencies deviate from the frequencies expected under independence. The $\chi^2$ test thus assesses whether two variables are dependent on each other. When Chi2 is used for feature selection, it tests whether the class is independent of a particular feature in the dataset [56]. The null hypothesis states that the two variables are unrelated, i.e., independent. The $\chi^2$ value is calculated over every feature value i and class j using Equation (2).

$$\chi^2 = \sum_{i=1}^{r}\sum_{j=1}^{k}\frac{(O_{ij}-E_{ij})^2}{E_{ij}} \tag{2}$$

where r is the number of distinct values of the feature and k is the number of classes. The number of samples with value i in class j is denoted $O_{ij}$, and the expected number of samples with value i in class j is denoted $E_{ij}$.
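As a hedged illustration of Equation (2), the sketch below builds the r × k contingency table of a discretized feature against the class labels and sums $(O_{ij}-E_{ij})^2/E_{ij}$, taking the expected counts as row total × column total / n under the independence hypothesis. The function name is hypothetical. Note that scikit-learn's `sklearn.feature_selection.chi2` computes its score from per-class sums of non-negative feature values rather than from this contingency table, so its values are not directly comparable.

```python
import numpy as np


def chi2_statistic(feature: np.ndarray, labels: np.ndarray) -> float:
    """Chi-squared statistic between a discretized feature and the class labels."""
    _, v_idx = np.unique(feature, return_inverse=True)   # r distinct feature values
    _, c_idx = np.unique(labels, return_inverse=True)    # k classes
    observed = np.zeros((v_idx.max() + 1, c_idx.max() + 1))
    np.add.at(observed, (v_idx, c_idx), 1)               # O_ij: counts per (value, class)
    n = observed.sum()
    # E_ij under independence: (row total * column total) / n
    expected = observed.sum(axis=1, keepdims=True) * observed.sum(axis=0, keepdims=True) / n
    return float(np.sum((observed - expected) ** 2 / expected))


labels = np.array([0, 0, 1, 1])
print(chi2_statistic(np.array([0, 0, 1, 1]), labels))  # 4.0 (feature depends on the class)
print(chi2_statistic(np.array([0, 1, 0, 1]), labels))  # 0.0 (feature independent of the class)
```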

3.2.3. ReliefF

The ReliefF algorithm [21] extends the Relief method of [57], which was designed for two-class problems. ReliefF assigns higher weights to features that are related to the classes, quickly eliminates irrelevant features, and handles multi-class problems efficiently. It measures the quality of features by how well they distinguish between samples that are close to each other, using randomly selected samples from the training set [56]. For each selected sample, the k nearest neighbors with the same label (near hits) and the k nearest neighbors from each different class (near misses) are found. If neighbors with the same label have different values for a feature, the weight of that feature is decreased; if neighbors with different labels have different values for a feature, its weight is increased. The score is computed using Equation (3).

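$$W(f_i) = \frac{1}{N}\sum_{j=1}^{N}\left(-\frac{1}{k}\sum_{x_r \in NH(j)} d\big(X(j,i), X(r,i)\big) + \sum_{y \neq y_j}\frac{p(y)}{1-p(y_j)}\,\frac{1}{k}\sum_{x_r \in NM(j,y)} d\big(X(j,i), X(r,i)\big)\right) \tag{3}$$

where $W(f_i)$ is the score of feature $f_i$, $y_j$ is the class label of sample $x_j$, and $p(y)$ is the probability of a sample being from class y. $X(j,i)$ is the value of sample $x_j$ on feature $f_i$, and $d(\cdot)$ is the function used to calculate the difference between $X(j,i)$ and $X(r,i)$. $NH(j)$ denotes the neighbors of $x_j$ having the same class label, and $NM(j,y)$ denotes its neighbors from a different class $y$ [54]. N is the number of samples in the input data.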
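A compact NumPy sketch of Equation (3) follows. It assumes numeric features, range-normalized absolute differences for $d(\cdot)$, Manhattan distance for the neighbor search, and that all N samples serve as references instead of a random subset. `relieff` is a hypothetical name; it is not the WEKA or scikit-learn implementation used in the study.

```python
import numpy as np


def relieff(X: np.ndarray, y: np.ndarray, k: int = 3) -> np.ndarray:
    """ReliefF feature weights following Equation (3), with every sample used as a reference."""
    n, n_features = X.shape
    lo, span = X.min(axis=0), X.max(axis=0) - X.min(axis=0)
    span[span == 0] = 1.0                      # constant features: avoid division by zero
    Xn = (X - lo) / span                       # d(.) becomes a difference in [0, 1]
    classes, counts = np.unique(y, return_counts=True)
    prior = dict(zip(classes.tolist(), (counts / n).tolist()))
    weights = np.zeros(n_features)
    for j in range(n):
        dist = np.abs(Xn - Xn[j]).sum(axis=1)  # Manhattan distance to the reference sample
        others = np.arange(n) != j
        # Near hits NH(j): k nearest neighbours with the same label
        same = np.where((y == y[j]) & others)[0]
        hits = same[np.argsort(dist[same])[:k]]
        if hits.size:
            weights -= np.abs(Xn[hits] - Xn[j]).mean(axis=0) / n
        # Near misses NM(j, c): k nearest neighbours of every other class, weighted by its prior
        for c in classes.tolist():
            if c == y[j]:
                continue
            diff = np.where(y == c)[0]
            misses = diff[np.argsort(dist[diff])[:k]]
            scale = prior[c] / (1.0 - prior[y[j]])
            weights += scale * np.abs(Xn[misses] - Xn[j]).mean(axis=0) / n
    return weights


# Toy data: feature 0 separates the classes, feature 1 has the same values in both classes.
X = np.array([[0.0, 0.5], [0.1, 0.1], [0.2, 0.9], [0.1, 0.3],
              [1.0, 0.5], [0.9, 0.1], [0.8, 0.9], [0.9, 0.3]])
y = np.array([0, 0, 0, 0, 1, 1, 1, 1])
w = relieff(X, y, k=3)
print(w[0] > w[1])  # True: the class-related feature receives the larger weight
```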

3.3. 3D Geometric Features

Eigen-based features describe the local geometry around the point and are commonly used in LiDAR processing. Neighboring points around a point can be determined using a sphere or other geometric shape, with that point as the center. This neighborhood area is called the support area [58]. In this study, the support area was determined with a sphere. The critical parameter when creating the support area is the radius of the sphere. The sphere radius that best describes the local geometry should be determined.

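Eigen-based features are calculated from the eigenvalues ($\lambda_1$, $\lambda_2$, $\lambda_3$) and the corresponding eigenvectors ($\mathbf{e}_1$, $\mathbf{e}_2$, $\mathbf{e}_3$) of the covariance matrix of the neighborhood N of a point p of the point cloud [10]:

$$\mathbf{C}_p = \frac{1}{|N|}\sum_{q \in N}(q - \bar{p})(q - \bar{p})^{\top} \tag{4}$$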

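where $\bar{p}$ is the centroid of the neighborhood N. With the eigenvalues ordered as $\lambda_1 \geq \lambda_2 \geq \lambda_3 \geq 0$, the following eigen-based features are calculated: linearity (5), planarity (6), sphericity (7), omnivariance (8), anisotropy (9), eigenentropy (10), surface variation (11), and verticality (12). In addition to the eigen-based features, the height difference (13) within the support area of a point is added.

$$L_\lambda = \frac{\lambda_1 - \lambda_2}{\lambda_1} \tag{5}$$

$$P_\lambda = \frac{\lambda_2 - \lambda_3}{\lambda_1} \tag{6}$$

$$S_\lambda = \frac{\lambda_3}{\lambda_1} \tag{7}$$

$$O_\lambda = \sqrt[3]{\lambda_1 \lambda_2 \lambda_3} \tag{8}$$

$$A_\lambda = \frac{\lambda_1 - \lambda_3}{\lambda_1} \tag{9}$$

$$E_\lambda = -\sum_{i=1}^{3} \hat{\lambda}_i \ln \hat{\lambda}_i, \qquad \hat{\lambda}_i = \frac{\lambda_i}{\lambda_1 + \lambda_2 + \lambda_3} \tag{10}$$

$$C_\lambda = \frac{\lambda_3}{\lambda_1 + \lambda_2 + \lambda_3} \tag{11}$$

$$V = 1 - \left|\langle [0, 0, 1], \mathbf{e}_3 \rangle\right| \tag{12}$$

$$\Delta Z = Z_{\max} - Z_{\min} \tag{13}$$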
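The sketch below computes the covariance matrix and the nine features for a single support area with NumPy. It assumes the common definitions of these features: eigenvalues sorted so that $\lambda_1 \geq \lambda_2 \geq \lambda_3$, sum-normalized eigenvalues only inside the eigenentropy, and $\mathbf{e}_3$ (the eigenvector of the smallest eigenvalue) as the normal direction for verticality. The small clipping constant that keeps the ratios and the logarithm finite for degenerate neighborhoods is our own assumption.

```python
import numpy as np


def eigen_features(neighbors: np.ndarray, eps: float = 1e-12) -> dict:
    """Eigen-based features and height difference of one support area (neighbors: (n, 3))."""
    centered = neighbors - neighbors.mean(axis=0)            # q - p_bar
    cov = centered.T @ centered / len(neighbors)             # covariance matrix C_p
    evals, evecs = np.linalg.eigh(cov)                       # eigenvalues in ascending order
    l3, l2, l1 = np.clip(evals, eps, None)                   # so that l1 >= l2 >= l3 > 0
    e3 = evecs[:, 0]                                         # eigenvector of the smallest eigenvalue
    total = l1 + l2 + l3
    norm = np.array([l1, l2, l3]) / total                    # sum-normalized eigenvalues
    return {
        "linearity": (l1 - l2) / l1,
        "planarity": (l2 - l3) / l1,
        "sphericity": l3 / l1,
        "omnivariance": (l1 * l2 * l3) ** (1.0 / 3.0),
        "anisotropy": (l1 - l3) / l1,
        "eigenentropy": float(-np.sum(norm * np.log(norm))),
        "surface_variation": l3 / total,
        "verticality": 1.0 - abs(e3[2]),                     # 1 - |<[0, 0, 1], e3>|
        "height_difference": neighbors[:, 2].max() - neighbors[:, 2].min(),
    }


# A horizontal 5 x 5 grid is planar, so planarity is ~1 and verticality ~0.
xx, yy = np.meshgrid(np.arange(5.0), np.arange(5.0))
plane = np.column_stack([xx.ravel(), yy.ravel(), np.zeros(25)])
f = eigen_features(plane)
print(round(f["planarity"], 3), round(f["verticality"], 3))  # 1.0 0.0
```

In practice the function would be called once per point, on the neighbors returned by the neighborhood search.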

3.4. RandLA-Net

RandLA-Net (random sampling and an effective local feature aggregator) [18] was used as one of the segmentation algorithms. Processing a large-scale point cloud with millions of points by a deep neural network inevitably requires down-sampling the points efficiently without losing their useful properties. RandLA-Net uses simple and fast random sampling to drastically reduce point density, while a carefully designed local feature aggregator preserves prominent features. The computational cost of random sampling is independent of the total number of input points, so the approach is inherently scalable. The local feature aggregation module is designed to preserve complex local structures effectively by explicitly considering neighboring geometries and progressively increasing the receptive field. Because this module consists of feed-forward MLPs, it is computationally efficient.
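To make the random down-sampling step concrete, here is a minimal NumPy sketch that keeps a random fraction of the points and their features at each encoder layer. The 1/4 keep ratio per layer, the point and feature counts, and the helper name `random_sample` are assumptions for illustration; the sketch omits the local feature aggregation that RandLA-Net applies between sampling steps.

```python
import numpy as np


def random_sample(points: np.ndarray, features: np.ndarray, ratio: float, rng: np.random.Generator):
    """Randomly keep int(ratio * N) points (without replacement) and their features."""
    n_keep = max(1, int(len(points) * ratio))
    idx = rng.choice(len(points), size=n_keep, replace=False)
    return points[idx], features[idx], idx


rng = np.random.default_rng(0)
points = rng.random((40_000, 3))
features = rng.random((40_000, 8))   # hypothetical: RGB + five selected geometric features
sizes = [len(points)]
for _ in range(4):                   # four encoder layers, each keeping 1/4 of the points
    points, features, _ = random_sample(points, features, 0.25, rng)
    sizes.append(len(points))
print(sizes)  # [40000, 10000, 2500, 625, 156]
```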

The local feature aggregation module is applied to each 3D point in parallel and consists of three neural units: (1) local spatial encoding (LocSE), (2) attentive pooling, and (3) a dilated residual block. Given a point cloud in which each point has certain features (RGB and 3D geometric features in this study), the LocSE unit encodes the coordinates of all neighboring points of each point. The LocSE unit thereby explicitly observes local geometric patterns, which helps the entire network learn complex local structures effectively. The neighborhood is determined with the K-nearest neighbors (KNN) algorithm based on Euclidean distance. For each of the K nearest points $\{p_i^1, \dots, p_i^K\}$ of the center point $p_i$, the relative point position is encoded as follows:

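$$\mathbf{r}_i^k = \mathrm{MLP}\left(p_i \oplus p_i^k \oplus (p_i - p_i^k) \oplus \lVert p_i - p_i^k \rVert\right)$$

where $p_i$ and $p_i^k$ are the 3D coordinates of the points, $\oplus$ denotes concatenation, and $\lVert \cdot \rVert$ computes the Euclidean distance. For each neighboring point $p_i^k$, the encoded relative point position $\mathbf{r}_i^k$ is concatenated with the corresponding point features $\mathbf{f}_i^k$ to create the augmented feature vector $\hat{\mathbf{f}}_i^k$. The LocSE unit thus produces a new set of local features $\hat{\mathbf{F}}_i = \{\hat{\mathbf{f}}_i^1, \dots, \hat{\mathbf{f}}_i^K\}$.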
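A NumPy sketch of the LocSE input follows: it gathers the K nearest neighbors of every point and builds the 10-dimensional vector (center, neighbor, offset, distance) that the shared MLP consumes, then appends the neighbors' point features. The single random linear layer with ReLU stands in for the learned MLP, the brute-force KNN is only practical for small clouds, and all function names and sizes are hypothetical.

```python
import numpy as np


def knn(points: np.ndarray, k: int) -> np.ndarray:
    """Brute-force K nearest neighbours by Euclidean distance (the point itself comes first)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    return np.argsort(d2, axis=1)[:, :k]


def relative_position_input(points: np.ndarray, nbr_idx: np.ndarray) -> np.ndarray:
    """Concatenate p_i, p_i^k, p_i - p_i^k and ||p_i - p_i^k|| for every neighbour: (N, K, 10)."""
    center = np.repeat(points[:, None, :], nbr_idx.shape[1], axis=1)   # p_i, (N, K, 3)
    neighbors = points[nbr_idx]                                          # p_i^k, (N, K, 3)
    offset = center - neighbors                                          # p_i - p_i^k
    dist = np.linalg.norm(offset, axis=-1, keepdims=True)                # Euclidean distance
    return np.concatenate([center, neighbors, offset, dist], axis=-1)


rng = np.random.default_rng(0)
pts = rng.random((100, 3))
feats = rng.random((100, 4))                       # hypothetical per-point features f_i
idx = knn(pts, k=16)
r_in = relative_position_input(pts, idx)           # (100, 16, 10)
W = rng.standard_normal((10, 8))                   # stand-in for the shared MLP weights
r = np.maximum(r_in @ W, 0.0)                      # encoded relative positions r_i^k
f_hat = np.concatenate([r, feats[idx]], axis=-1)   # augmented features f_hat_i^k
print(f_hat.shape)  # (100, 16, 12)
```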

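In the attentive pooling unit, a unique attention score $\mathbf{s}_i^k$ is calculated for each local feature $\hat{\mathbf{f}}_i^k$ using a shared function $g(\cdot)$:

$$\mathbf{s}_i^k = g\left(\hat{\mathbf{f}}_i^k, \mathbf{W}\right)$$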

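where $\mathbf{W}$ denotes the learnable weights of a shared MLP (followed by a softmax in RandLA-Net). The features are summed with the attention scores as weights, producing the informative feature vector $\tilde{\mathbf{f}}_i$:

$$\tilde{\mathbf{f}}_i = \sum_{k=1}^{K}\left(\hat{\mathbf{f}}_i^k \cdot \mathbf{s}_i^k\right)$$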
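The attentive pooling step can be sketched in NumPy as follows, assuming that the shared function $g$ is a single linear layer followed by a softmax over the K neighbors, computed separately for each feature channel. The random weight matrix stands in for learned parameters, and the further shared MLP that RandLA-Net applies to the pooled vector is omitted.

```python
import numpy as np


def attentive_pooling(f_hat: np.ndarray, W: np.ndarray) -> np.ndarray:
    """Pool local features f_hat (N, K, D) into one vector per point (N, D)."""
    logits = f_hat @ W                                    # shared linear map applied to every neighbour
    logits = logits - logits.max(axis=1, keepdims=True)   # stabilise the softmax
    scores = np.exp(logits)
    scores = scores / scores.sum(axis=1, keepdims=True)   # softmax over the K neighbours, per channel
    return (f_hat * scores).sum(axis=1)                   # attention-weighted sum


rng = np.random.default_rng(0)
f_hat = rng.random((100, 16, 12))        # e.g. augmented features from LocSE
W = rng.standard_normal((12, 12))        # stand-in for the learned shared MLP weights
pooled = attentive_pooling(f_hat, W)
print(pooled.shape)  # (100, 12)
```

Because the scores sum to one over the K neighbors in every channel, each output channel is a convex combination of that channel's neighbor values, unlike max pooling, which keeps only one neighbor per channel.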

Within a dilated residual block, two sets of LocSE and attentive pooling are stacked to increase the receptive field. After the first LocSE/attentive pooling operation, each point receives information from its K neighboring points; after the second, it can receive information from up to $K^2$ points, because each of those K neighbors has in turn aggregated its own K neighbors. Expanding the receptive field in this way increases the effectiveness of the algorithm [18].
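The growth of the receptive field can be checked with a small NumPy experiment. With the K nearest neighbors of each point (including the point itself), one aggregation step reaches K points, and two stacked steps reach the union of the neighbors' neighborhoods, which is at most $K^2$ points and usually fewer because neighborhoods overlap. The random cloud and helper names are illustrative assumptions.

```python
import numpy as np


def knn(points: np.ndarray, k: int) -> np.ndarray:
    """Brute-force K nearest neighbours (each point is its own first neighbour)."""
    d2 = ((points[:, None, :] - points[None, :, :]) ** 2).sum(-1)
    return np.argsort(d2, axis=1)[:, :k]


def receptive_field_sizes(nbr_idx: np.ndarray):
    """Number of distinct points reachable after one and after two aggregation steps."""
    one_hop = np.array([len(set(row.tolist())) for row in nbr_idx])
    two_hop = np.array([len(set(nbr_idx[row].ravel().tolist())) for row in nbr_idx])
    return one_hop, two_hop


rng = np.random.default_rng(0)
points = rng.random((500, 3))
K = 16
one_hop, two_hop = receptive_field_sizes(knn(points, K))
# one-hop size is K for every point; the two-hop mean lies between K and K * K
print(one_hop.max(), two_hop.mean(), K * K)
```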

3.5. Superpoint Graph (SPG)

The Superpoint Graph (SPG) [19] is a deep-learning-based approach for the semantic segmentation of large-scale point clouds that relies on partitioning the point cloud into simple shapes. The point cloud is represented by a superpoint graph built from geometrically homogeneous elements. The advantages of SPG are that it classifies object parts rather than individual points or voxels, that it can model long-range context because the size of the graph is determined by the object parts in the scene, and that it describes the relationships between adjacent objects in detail. Because the SPG is compact yet rich, a deep learning architecture consisting of PointNets and graph convolutions can be applied to it without significant loss of information.

SPG consists of three main steps. In the first step, the point cloud is partitioned into small, meaningful superpoints. This geometrically homogeneous partition is defined as the constant connected components of the solution of a generalized minimal partition problem, and the SPG is computed from it. The SPG is a representation of the point cloud defined as a directed graph $\mathcal{G} = (\mathcal{S}, \mathcal{E}, F)$ consisting of the set of superpoints $\mathcal{S}$, the set of superedges $\mathcal{E}$ (the adjacency relationships between superpoints), and the features F characterizing these superedges. Let $E_{\mathrm{vor}}$ be the symmetric Voronoi adjacency graph of the input point cloud C. Superpoints S and T are adjacent if at least one edge of $E_{\mathrm{vor}}$ connects a point of S to a point of T.
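The adjacency rule for superpoints can be sketched in a few lines of NumPy. Here a plain edge list stands in for the Voronoi adjacency graph (building that graph, for example from a Delaunay triangulation, is omitted), `superpoint_of` is a hypothetical array giving the superpoint index of each point, and the superedge features F are not computed.

```python
import numpy as np


def superedges(point_edges: np.ndarray, superpoint_of: np.ndarray) -> set:
    """Directed superedges (S, T): some point edge links a point of S to a point of T."""
    s = superpoint_of[point_edges[:, 0]]
    t = superpoint_of[point_edges[:, 1]]
    cross = s != t                                   # edges inside one superpoint are ignored
    pairs = np.stack([s[cross], t[cross]], axis=1)
    pairs = np.concatenate([pairs, pairs[:, ::-1]])  # the point adjacency graph is symmetric
    return {tuple(p) for p in pairs.tolist()}


superpoint_of = np.array([0, 0, 1, 1, 2, 2])         # six points grouped into three superpoints
point_edges = np.array([[0, 1], [1, 2], [2, 3], [3, 4], [4, 5]])
print(sorted(superedges(point_edges, superpoint_of)))  # [(0, 1), (1, 0), (1, 2), (2, 1)]
```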

In the second step, each superpoint is subsampled to a smaller, fixed number of points and embedded with PointNet. Finally, contextual segmentation is performed. Because the SPG is much smaller than a graph built on the entire point cloud, deep learning algorithms based on graph convolutions can classify its nodes using superedge features that capture long-range interactions.

3.6. Experimental Details

As a preprocessing step, local geometric features were calculated for each dataset. To do so, the neighborhood of each point was determined with a k-nearest-neighbor approach, and a distance threshold was applied to prevent unrelated points from being included as neighbors. The optimal parameters, determined experimentally, are 100 nearest neighbors and a 0.5 m distance threshold. Feature selection algorithms were then applied to the geometric features to determine the most suitable features for training and testing. The importance of the features was determined with the Information Gain, Chi2, and ReliefF algorithms, and subsets were created from the selected features. The feature selection algorithms were implemented with the Scikit-learn library in Python [59] and the WEKA workbench [60].
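The neighborhood definition described above (at most 100 nearest neighbors, discarded beyond 0.5 m) can be sketched with brute-force NumPy for a small cloud. The function name is hypothetical. For millions of MLS points a spatial index would be used instead, for example SciPy's `cKDTree.query(x, k=100, distance_upper_bound=0.5)`, which reports missing neighbors with an infinite distance.

```python
import numpy as np


def knn_within_radius(points: np.ndarray, k: int = 100, max_dist: float = 0.5) -> list:
    """For every point, indices of up to k nearest neighbours no farther than max_dist."""
    dist = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    nearest = np.argsort(dist, axis=1)[:, :k]          # k nearest, including the point itself
    return [row[dist[i, row] <= max_dist] for i, row in enumerate(nearest)]


# Five points on a line (metres); with k = 3 the distance threshold trims the isolated point.
pts = np.array([[0.0, 0, 0], [0.2, 0, 0], [0.4, 0, 0], [0.6, 0, 0], [1.0, 0, 0]])
nbrs = knn_within_radius(pts, k=3, max_dist=0.5)
print(sorted(nbrs[0].tolist()), sorted(nbrs[4].tolist()))  # [0, 1, 2] [3, 4]
```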

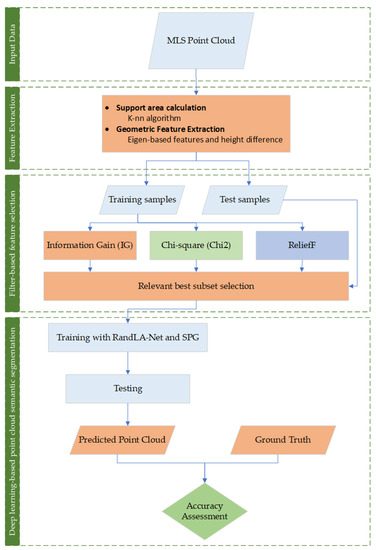

The Toronto3D and Paris datasets contain 3D coordinates (x, y, z) and RGB information, whereas the SZTAKI-CityMLS dataset contains only 3D coordinates. In Toronto3D and Paris, the RGB values of the points are also used as features in training. All deep learning experiments were implemented in Python and run on a single GPU. RandLA-Net was applied using the Open3D-ML library [61], with 200 epochs of 50 iterations each, a learning rate of 0.001, and a batch size of 2. For SPG, we used 500 epochs with 1 iteration, a learning rate of 0.01, and a batch size of 2. The experiments were run on an Intel i7-11800H 2.30 GHz processor with an RTX 3070 graphics card and 32 GB of RAM. The workflow of the study is shown in Figure 1. The results are evaluated by the mean Intersection-over-Union (mIoU) and the mean accuracy (MA).

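$$\mathrm{mIoU} = \frac{1}{N}\sum_{c=1}^{N}\frac{|P_c \cap G_c|}{|P_c \cup G_c|}, \qquad \mathrm{MA} = \frac{1}{N}\sum_{c=1}^{N}\frac{T_c}{|G_c|}$$

where $P_c$ and $G_c$ refer to the predicted and ground-truth points belonging to class c, respectively, $c \in \{1, 2, \dots, N\}$ is the class index, and $T_c$ is the number of correct predictions for class c.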
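The two metrics can be computed from point-wise predictions with the NumPy sketch below. Classes that do not occur in the ground truth are skipped, which is a common convention but an assumption here; the function name and the toy labels are illustrative.

```python
import numpy as np


def miou_and_mean_accuracy(pred: np.ndarray, gt: np.ndarray, n_classes: int):
    """Mean IoU and mean per-class accuracy over the classes present in gt."""
    ious, accs = [], []
    for c in range(n_classes):
        p, g = pred == c, gt == c
        if not g.any():                            # class absent from the ground truth: skipped
            continue
        tp = np.logical_and(p, g).sum()            # T_c = |P_c ∩ G_c|
        ious.append(tp / np.logical_or(p, g).sum())
        accs.append(tp / g.sum())
    return float(np.mean(ious)), float(np.mean(accs))


gt = np.array([0, 0, 1, 1, 2, 2])
pred = np.array([0, 1, 1, 1, 2, 0])
miou, ma = miou_and_mean_accuracy(pred, gt, n_classes=3)
print(round(miou, 3), round(ma, 3))  # 0.5 0.667
```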

Figure 1.

Workflow of the study.

4. Results

4.1. Feature Selection and Creating Subsets

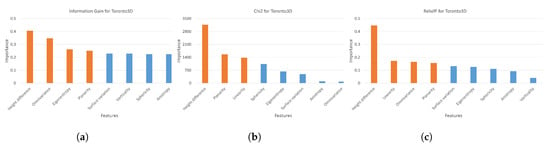

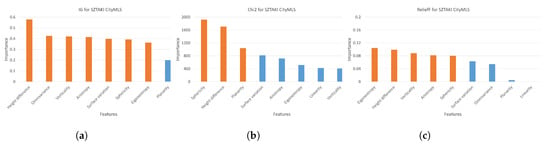

The most suitable feature subsets were determined with the filter-based feature selection algorithms. In addition, training was conducted with all features and with only the 3D coordinates to highlight the effect of feature selection. The feature importance values were calculated and sorted in descending order. Specific breakpoints were chosen as threshold values, and features with importance below the threshold were eliminated, so a different number of features was selected by each algorithm. In the Toronto3D dataset, the four features with the highest importance were selected with IG, three with Chi2, and three with ReliefF (Figure 2). Height difference has the highest importance in all three methods, whereas linearity was eliminated. In the SZTAKI-CityMLS dataset, seven features were selected with IG, three with Chi2, and five with ReliefF (Figure 3). In the Paris dataset, IG and ReliefF ranked the same five features highest, and three features were selected with Chi2 (Figure 4).
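The ranking-and-threshold procedure can be illustrated with the short sketch below. The importance scores and the threshold are made-up placeholder values, not those shown in the figures; only the procedure itself (sort in descending order, drop features below the breakpoint) follows the text.

```python
import numpy as np


def select_features(names: list, scores: np.ndarray, threshold: float):
    """Sort features by importance (descending) and keep those at or above threshold."""
    order = np.argsort(scores)[::-1]
    ranked = [(names[i], float(scores[i])) for i in order]
    selected = [name for name, score in ranked if score >= threshold]
    return ranked, selected


# Hypothetical importance scores, for illustration only.
names = ["linearity", "planarity", "verticality", "height_difference"]
scores = np.array([0.05, 0.30, 0.50, 0.90])
ranked, selected = select_features(names, scores, threshold=0.2)
print(selected)  # ['height_difference', 'verticality', 'planarity']
```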

Figure 2.

Importance of each feature calculated with filter-based feature selection algorithms for Toronto3D. Selected features are marked in orange. (a) Feature importance calculated with IG. (b) Feature importance calculated with Chi2. (c) Feature importance calculated with ReliefF.

Figure 3.

Importance of each feature calculated with filter-based feature selection algorithms for SZTAKI-CityMLS. Selected features are marked in orange. (a) Feature importance calculated with IG. (b) Feature importance calculated with Chi2. (c) Feature importance calculated with ReliefF.

Figure 4.

Importance of each feature calculated with filter-based feature selection algorithms for Paris. Selected features are marked in orange. (a) Feature importance calculated with IG. (b) Feature importance calculated with Chi2. (c) Feature importance calculated with ReliefF.

After feature selection, subsets were created for training and testing. To examine the effect of the geometric features, training and testing were carried out with different feature subsets. Since Toronto3D and Paris also contain RGB information, more feature combinations were created for them than for the SZTAKI-CityMLS dataset. The ten subsets obtained from Toronto3D are named to . Five subsets from to were produced from the SZTAKI-CityMLS dataset. Ten subsets to were obtained from Paris. The subsets are described below.

For Toronto3D dataset:

- : only 3D coordinates (x, y, z).

- : 3D coordinates and RGB.

- : 3D coordinates and all geometric features.

- : 3D coordinates and 4 selected features with IG.

- : 3D coordinates and 3 selected features with Chi2.

- : 3D coordinates and 4 selected features with ReliefF.

- : 3D coordinates, RGB and all geometric features.

- : 3D coordinates, RGB, and 4 selected features with IG.

- : 3D coordinates, RGB, and 3 selected features with Chi2.

- : 3D coordinates, RGB, and 4 selected features with ReliefF.

For SZTAKI-CityMLS dataset:

- : only 3D coordinates (x, y, z).

- : 3D coordinates and all geometric features.

- : 3D coordinates and 7 selected features with IG.

- : 3D coordinates and 3 selected features with Chi2.

- : 3D coordinates and 5 selected features with ReliefF.

For Paris dataset:

- : only 3D coordinates (x, y, z).

- : 3D coordinates and RGB.

- : 3D coordinates and all geometric features.

- : 3D coordinates and 5 selected features with IG.

- : 3D coordinates and 3 selected features with Chi2.

- : 3D coordinates and 5 selected features with ReliefF.

- : 3D coordinates, RGB and all geometric features.

- : 3D coordinates, RGB, and 5 selected features with IG.

- : 3D coordinates, RGB, and 3 selected features with Chi2.

- : 3D coordinates, RGB, and 5 selected features with ReliefF.

4.2. Results of Semantic Segmentation on Toronto3D

RandLA-Net and SPG algorithms were trained separately using each subset. The aim was to determine the most suitable feature subset for point cloud semantic segmentation. The process was repeated in the same way for each case.

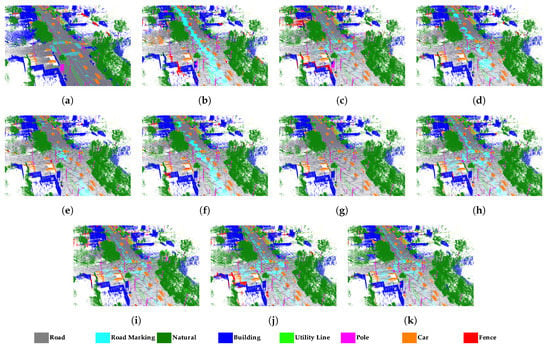

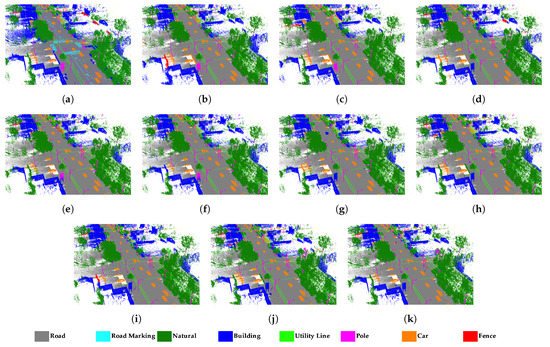

For RandLA-Net, the IoU of the road marking class remained below 10% when only 3D coordinates and geometric features were used, whereas close to 50% IoU was obtained when RGB information was included. The highest IoU values were achieved for the natural class, which exceeds 90% IoU in all subsets. Buildings are also classified with an IoU of 92.2%. The highest IoU for the fence class, 31.9%, was obtained with the subset. The highest mean accuracy, 87.4%, was obtained using the subset. The second-best result was obtained with and , both with a mean accuracy of 87.2%. The subset containing only 3D coordinates yields the lowest mIoU and MA; the mIoU and MA for are 60.8% and 74.2%, respectively. The features selected with Chi2 yield a larger increase in accuracy. Although this advantage was slight when combined with RGB, the mean accuracy was 2.6% higher than and 4.2% higher than when using the subset. Class-based results are presented in Table 1, and the qualitative assessment is illustrated in Figure 5.

Table 1.

Class-based results of RandLA-Net on subsets of Toronto3D. Highest values are marked in bold.

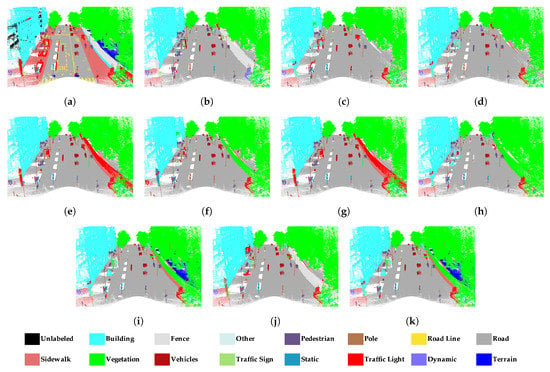

Figure 5.

Qualitative results of the methods for Toronto3D with RandLA-Net. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) ; (j) ; (k) .

With SPG, the road class exceeds 94% IoU in all subsets, whereas the road marking class could not be detected at all. As with RandLA-Net, SPG does not achieve good results for the fence class; its highest IoU for this class was reached with the subset. SPG was successful for the building, natural, and car classes. Chi2 outperforms the other methods in the semantic segmentation of Toronto3D with SPG: the highest mean accuracy and mIoU are both achieved using the three features selected with Chi2. With the subset, 75.9% MA and 69.8% mIoU were obtained. Subset has the second-highest mIoU at 67.5%, and has the lowest MA and mIoU values, at 68.8% and 63.4%, respectively. The subsets and containing the geometric features selected by ReliefF have lower accuracy than the other subsets. and , containing the features selected by IG and ReliefF, have 5.7% higher mIoU than . Subset , which includes the features selected with Chi2, also has 3.1% higher mIoU than . Although adding the RGB values improves the accuracy metrics, adding geometric features increases accuracy further. Class-based results are presented in Table 2, and the qualitative assessment is illustrated in Figure 6.

Table 2.

Class-based results of SPG on subsets of Toronto3D. Highest values are marked in bold.

Figure 6.

Qualitative results of the methods for Toronto3D with SPG. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) ; (j) ; (k) .

4.3. Results of Semantic Segmentation on SZTAKI-CityMLS

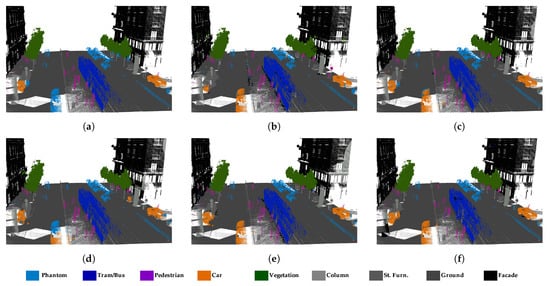

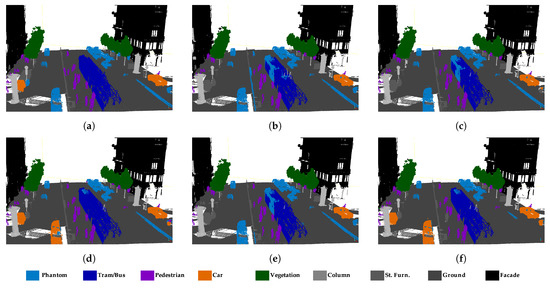

In the SZTAKI-CityMLS dataset, the IoU values of the vegetation, ground, and facade classes exceed 97% in all generated subsets with RandLA-Net. Results for the tram/bus class are similarly high, although an IoU of 89.8% was obtained in the subset. Phantom objects have the lowest IoU values, followed by the pedestrian and car classes. Adding geometric features yields significant improvements in the car class, for which the feature subsets generated with IG achieve among the highest IoU values, at 73.0%. Compared with the subset, both mIoU and mean accuracy increased in all subsets to which geometric features were added. The IG algorithm clearly outperforms the other algorithms on the SZTAKI-CityMLS dataset in all metrics. The subset generated with IG achieves the highest mIoU and mean accuracy, 84.1% and 93.7%, respectively. The second-highest evaluation metrics are obtained with , which includes 3D coordinates and all geometric features. In , created with the ReliefF algorithm, the mean accuracy is lower than with the other methods. The results obtained on the SZTAKI-CityMLS dataset are presented in Table 3. The qualitative assessment of SZTAKI-CityMLS is illustrated in Figure 7.
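Information gain measures how much knowing a feature reduces the entropy of the class labels, IG = H(class) - H(class | feature). Because the geometric features are continuous, they must be discretised first; the sketch below assumes equal-width bins, which may not match the discretisation used in the study. All function names are hypothetical.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (bits) of a label array."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(feature, labels, bins=10):
    """IG(class; feature) = H(class) - H(class | binned feature)."""
    feature = np.asarray(feature, dtype=float)
    labels = np.asarray(labels)
    edges = np.histogram_bin_edges(feature, bins=bins)   # equal-width bins (assumption)
    binned = np.digitize(feature, edges[1:-1])           # bin index 0 .. bins-1
    h_cond = 0.0
    for b in np.unique(binned):
        mask = binned == b
        h_cond += mask.mean() * entropy(labels[mask])    # weight by bin frequency
    return entropy(labels) - h_cond

def rank_by_ig(features, labels, names, bins=10):
    """Rank feature columns by information gain, highest first."""
    gains = [information_gain(features[:, j], labels, bins) for j in range(features.shape[1])]
    order = np.argsort(gains)[::-1]
    return [(names[j], gains[j]) for j in order]
```

A feature whose bins each contain a single class reaches the maximum gain H(class), while a constant feature has zero gain; keeping the top-ranked columns gives an IG-based subset.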

Table 3.

Class-based results of RandLA-Net on subsets of SZTAKI-CityMLS. Highest values are marked as bold.

Figure 7.

Qualitative results of the methods for SZTAKI-CityMLS with RandLA-Net. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) .

SPG extracted the vegetation, ground, and facade classes with IoU above 98%. The highest IoU for the phantom class, 68.0%, was obtained in the subset. The tram/bus class is extracted with 99% IoU using the features selected with ReliefF. In the subset, the IoU of the car class decreased sharply. The highest mIoU, 77.5%, belongs to the subset, which contains features selected with IG. The lowest mIoU was obtained with the subset containing only the 3D coordinates. With 50.1% IoU for street furniture, SPG performed worse on this class than RandLA-Net. Subset , created with Chi2, has a lower mIoU than and . The evaluation metrics of SPG on the SZTAKI-CityMLS dataset are presented in Table 4. The predicted point clouds are shown in Figure 8.

Table 4.

Class-based results of SPG on subsets of SZTAKI-CityMLS. Highest values are marked as bold.

Figure 8.

Qualitative results of the methods for SZTAKI-CityMLS with SPG. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) .

4.4. Results of Semantic Segmentation on Paris

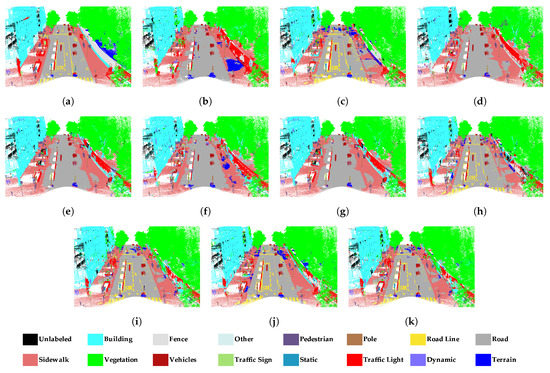

The Paris dataset contains many classes with different geometric structures. In all subsets, the building, road, and vegetation classes achieved better results than the other classes. Since IG and ReliefF select the same features, the subsets created by the two methods produce the same results. The road line class has 0.0% IoU when only geometric features are used, but the network extracts it with up to 70% IoU when RGB information is added. In the traffic light class, IoU increases up to 10% in the subsets created by feature selection. and achieve the highest mIoU, 55.2%. The lowest mIoU, 42.7%, was obtained in the subset containing only 3D coordinates (x, y, z). The results of RandLA-Net on the Paris dataset are presented in Table 5. The qualitative assessment of the Paris dataset with RandLA-Net is illustrated in Figure 9.
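ReliefF scores a feature by how well it separates each sampled point from its nearest neighbours of other classes (near misses) while staying similar to its nearest neighbours of the same class (near hits). The sketch below follows that published scheme (Kononenko 1994; Robnik-Šikonja and Kononenko 2003, both in the reference list): k nearest hits and misses per sampled point, feature differences scaled to [0, 1], and misses weighted by class priors. It is a simplified, hypothetical helper; the implementation the authors used may differ in distance metric, sampling, and neighbour count.

```python
import numpy as np

def relieff(X, y, k=5, n_samples=None, seed=0):
    """Simplified ReliefF weights for numeric features (higher = more relevant)."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    n, d = X.shape
    lo = X.min(axis=0)
    span = X.max(axis=0) - lo
    span[span == 0] = 1.0                      # constant features end with weight 0
    Xn = (X - lo) / span                       # diff(A, a, b) = |a - b| on a [0, 1] scale
    classes, counts = np.unique(y, return_counts=True)
    prior = dict(zip(classes, counts / n))
    m = n if n_samples is None else min(n_samples, n)
    rng = np.random.default_rng(seed)
    sample = rng.choice(n, size=m, replace=False)
    W = np.zeros(d)
    for i in sample:
        diffs = np.abs(Xn - Xn[i])             # per-feature differences to every point
        dist = diffs.sum(axis=1)               # Manhattan distance on normalised features
        dist[i] = np.inf                       # never pick the sampled point itself
        for c in classes:
            members = np.flatnonzero((y == c) & np.isfinite(dist))
            if members.size == 0:
                continue
            nearest = members[np.argsort(dist[members])[:k]]
            mean_diff = diffs[nearest].mean(axis=0)   # average over the k nearest neighbours
            if c == y[i]:
                W -= mean_diff / m             # near hits that differ lower the weight
            else:                              # near misses that differ raise it, prior-weighted
                W += prior[c] / (1.0 - prior[y[i]]) * mean_diff / m
    return W
```

To check whether two methods select the same features, as observed for IG and ReliefF on Paris, one can compare the sets `set(np.argsort(w)[::-1][:k])` obtained from each method's scores.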

Table 5.

Class-based results of RandLA-Net on subsets of Paris. Highest values are marked as bold.

Figure 9.

Qualitative results of the methods for Paris dataset with RandLA-Net. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) ; (j) ; (k) .

On the Paris dataset, SPG achieved high metrics for the building and vegetation classes. The road class IoU is lower than with RandLA-Net. The sidewalk class was barely detected in any subset and is usually assigned to the road class. SPG could not detect the road line class in any subset; like sidewalk, road line is confused with the road class. The terrain class reached 55.8% IoU in the and subsets, which consist of features selected with the IG and ReliefF algorithms. The IoU of the fence class decreased when all geometric features were used, but increased when feature selection was applied. The vehicle class reached its highest IoU values, 87.0% and 91.6%, in the and subsets, respectively, which contain features selected with Chi2. The highest accuracy was achieved with the and subsets, at 59.0% mIoU. The lowest mIoU (52.3%) was obtained with the subset, which uses 3D coordinates and all geometric features. As with RandLA-Net, Chi2 yields lower metrics than IG and ReliefF for SPG. The results of SPG on the Paris dataset are presented in Table 6. The qualitative assessment of the Paris dataset with SPG is illustrated in Figure 10.

Table 6.

Class-based results of SPG on subsets of Paris. Highest values are marked as bold.

Figure 10.

Qualitative results of the methods for Paris dataset with SPG. (a) Ground Truth; (b) ; (c) ; (d) ; (e) ; (f) ; (g) ; (h) ; (i) ; (j) ; (k) .

5. Discussion

The results allow general inferences to be drawn about the use of suitable 3D geometric features for deep-learning-based point cloud segmentation.

- Tables 1–6 show that using geometric features improves IoU and mean accuracy. RandLA-Net performed better on the , , and subsets, where 3D coordinates and all 3D geometric features are used together, than on the , , and subsets, which contain only 3D point coordinates. In Toronto3D, the accuracy metrics increased in the subsets that combine RGB and 3D geometric features, except for the subset, because some of the 3D geometric features do not improve accuracy. This is confirmed by the fact that the highest mean accuracies were obtained with the , and subsets, to which feature selection was applied. Adding the RGB information of the points also improves accuracy: has a higher mIoU than , and , which include added 3D geometric features. The main reason is that the road marking class is detected more accurately in . When mIoU is averaged over the classes excluding road marking, has 73.4% mIoU, and , and have 72.4%, 76.0%, and 75.0% mIoU, respectively. Geometric features improve accuracy in many classes, especially when the Chi2 and ReliefF algorithms are applied. In the SZTAKI-CityMLS dataset, although the IG method eliminated two features in the subset, this subset outperformed , which includes all geometric features, by 3.4% in mIoU and 1.6% in MA. Despite the large number of classes, RandLA-Net achieves good results on the Paris dataset. In the subset, which includes all RGB and 3D geometric features, accuracy decreases compared to . However, the highest mIoU is obtained in and , using the features selected with IG and ReliefF. When 3D coordinates and geometric features are used together, there is no notable difference in the metrics between the filter-based algorithms. Because RGB information helps identify classes such as road line, the subsets , , , , and , which contain RGB information, have substantially higher IoU than those without RGB.

- RandLA-Net performed well on the road, building, and natural classes in Toronto3D. However, road marking was confused with road. As seen in Table 1, road marking IoU values are very low, especially in subsets , , , , and , which lack RGB. Road markings include pavement markings such as driving lines, arrows, and pedestrian crossings. Because these markings do not differ geometrically from the road surface, they cannot be distinguished from it using 3D geometric features alone; the main difference between road and road markings lies in the RGB information. Therefore, road marking has higher IoU in the subsets with RGB than in the others. In the subset, the road marking IoU reached approximately 50%. IoU values for the fence class are generally below 30%; because this class has fewer points than the others, it was predicted with lower IoU. In the SZTAKI-CityMLS dataset, the tram/bus, vegetation, column, ground, and facade classes are predicted accurately in all cases, whereas phantom objects are usually the class with the lowest IoU. Classifying phantom objects is a challenge in MLS point clouds. Phantom objects, which represent temporary objects (vehicles, people, or animals) in the point cloud, cannot be used for mapping purposes, and they are confused with other objects because of their irregular geometric structure. It is therefore quite difficult to detect phantom objects using 3D geometric features. When all geometric features are used in the subset, the IoU of the phantom class decreases; however, it increases substantially with the seven features selected by the IG method. This suggests that the planarity and linearity features negatively affect the accuracy of the phantom class, and that appropriate feature selection significantly improves it. The pedestrian class is often confused with nearby classes.
According to Table 5, RandLA-Net extracted the building, road, and vegetation classes successfully in all subsets of the Paris dataset. As in Toronto3D, adding RGB information to the feature vector increases IoU for the road line class. The classes with the lowest IoU are fence, other, static, dynamic, and terrain, which are often confused with other classes of similar characteristics. Dynamic objects can be assigned to other classes because they are geometrically complex and diverse. The terrain class is almost never detected in the other subsets, but its IoU reaches 55.8% with the and subsets. It is often confused with vegetation and road; the and subsets, selected with Chi2, are not sufficient to distinguish terrain.

- For SPG, feature selection improves the evaluation metrics on all datasets. In the Toronto3D dataset, adding RGB or geometric features increases the accuracy of semantic segmentation, and Chi2 is superior to the other methods. The mIoU of is 1.2% higher than , which includes all geometric features, and the mIoU of is 2.8% higher than , which includes RGB and all geometric features. Features selected with ReliefF reduce the semantic segmentation accuracy of SPG in Toronto3D. In SZTAKI-CityMLS, the highest accuracy was obtained in the subset created with the features selected by IG. In the Paris dataset, as with RandLA-Net, the highest mIoU was obtained with and , created with IG and ReliefF. Overall, the subset results are similar to those of RandLA-Net, which suggests that features selected by filter-based methods have similar effects across different networks.

- SPG assigns road markings to the road class. Since road and road marking (line) have the same geometric structure, they cannot be distinguished by SPG, which relies mostly on geometric relationships. The largest differences between the subsets in the Toronto3D dataset occur in the fence class, and adding RGB information in particular increases the IoU of the car class. Although SPG identifies the phantom class better in SZTAKI-CityMLS, it assigns some points belonging to the tram/bus class to the phantom class. IoU increased in most classes with IG, whereas it decreased with ReliefF.

- In the Toronto3D dataset, the IG and Chi2 algorithms selected more effective features, and semantic segmentation with the features determined by ReliefF has lower accuracy. As shown in Figure 2, ReliefF assigned very low and nearly equal weights to all features other than height difference. In addition, even when similar features are ranked as highly important, the particular combination of features matters for semantic segmentation. Although and differ by only one feature, achieves approximately 3% higher mIoU and 1.6% higher MA. Adding only the omnivariance feature in decreased mIoU by 1.2% and MA by 4.2% compared to . When RGB is added to the selected features, the highest metrics are obtained with the features selected by Chi2. In the semantic segmentation of the SZTAKI-CityMLS dataset, the positive effect of 3D geometric features on accuracy is seen more clearly: all subsets containing 3D geometric features outperform the subset , which contains only the 3D coordinates of the points. In the Paris dataset, there is no notable difference between the filter-based algorithms when only the selected geometric features are used; when RGB information is added, however, the subsets created with features selected by IG and ReliefF achieve approximately 2.5% higher mIoU than those created with Chi2.

- However, results are better when the most suitable geometric features are chosen with feature selection than when all of them are used. The subsets , , , , and , which use all geometric features, did not achieve the highest metrics. Some of the features can negatively affect semantic segmentation, so applying feature selection improved the segmentation results by eliminating unnecessary features, as the results of this study confirm.
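The discussion refers to eigenvalue-based features such as linearity, planarity, and omnivariance, together with height difference. The exact definitions and neighbourhood used in this study are given in its methodology, not in this section, so the sketch below assumes the widely used covariance-eigenvalue formulation (as in Weinmann et al. 2015, listed in the references) with a k-nearest-neighbour neighbourhood of hypothetical size k = 10. Height difference is computed within the same neighbourhood, which is also an assumption.

```python
import numpy as np

def geometric_features(points, k=10):
    """Eigenvalue-based features per point from its k nearest neighbours (self included)."""
    pts = np.asarray(points, dtype=float)
    # Brute-force neighbour search keeps the sketch dependency-free; a k-d tree
    # would be used for real mobile LiDAR clouds.
    d2 = ((pts[:, None, :] - pts[None, :, :]) ** 2).sum(axis=-1)
    nn = np.argsort(d2, axis=1)[:, :k]
    names = ("linearity", "planarity", "sphericity", "omnivariance", "height_diff")
    feats = {name: np.empty(len(pts)) for name in names}
    for i, idx in enumerate(nn):
        nb = pts[idx]
        cov = np.cov(nb, rowvar=False, bias=True)          # 3 x 3 local covariance
        lam = np.sort(np.linalg.eigvalsh(cov))[::-1]       # lambda1 >= lambda2 >= lambda3
        lam = np.clip(lam, 1e-12, None)                    # guard against zero / tiny negatives
        e = lam / lam.sum()                                # normalised eigenvalues
        feats["linearity"][i] = (lam[0] - lam[1]) / lam[0]
        feats["planarity"][i] = (lam[1] - lam[2]) / lam[0]
        feats["sphericity"][i] = lam[2] / lam[0]
        feats["omnivariance"][i] = np.cbrt(e.prod())
        feats["height_diff"][i] = nb[:, 2].max() - nb[:, 2].min()
    return feats
```

Points on a straight line give linearity close to 1 and planarity close to 0, while points on a flat patch give high planarity and near-zero sphericity; these columns are the candidates that the filter-based methods then rank.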

6. Conclusions

In this study, the effect of using 3D geometric features for deep-learning-based semantic segmentation of mobile point clouds was examined, and appropriate feature selection was carried out. The performance of three filter-based feature selection methods (IG, Chi2, and ReliefF) was compared on three different datasets, using RandLA-Net and SPG as the deep learning networks. By comparing 3D coordinate information, geometric features, spectral features, and feature subsets created using filter-based methods for the semantic segmentation of mobile LiDAR point clouds, we reached the following conclusions.

- Using all geometric features does not guarantee better results. Feature selection methods improve semantic segmentation accuracy by identifying suitable features, and this improvement is most evident when the classes differ geometrically. Using effective geometric features is advantageous for semantic segmentation.

- Feature selection with the IG method produced successful results on all datasets. Chi2 yielded the highest mean accuracy on Toronto3D, whereas IG was more successful than Chi2 on the SZTAKI-CityMLS and Paris datasets. The most suitable feature selection method may therefore depend on the dataset: because the datasets differ, and their features relate to each other differently, the appropriate method changes. Similar feature selection algorithms can be used for datasets with similar features.

- Evaluation metrics increase when spectral information is used together with 3D geometric features. Spectral features are useful for separating classes such as road lines.

- Mobile point clouds are often captured in a dynamic environment. Future studies will focus on eliminating the noise caused by dynamic objects (moving cars, moving pedestrians, other living beings, etc.) in mobile LiDAR point clouds.

Author Contributions

Conceptualization, M.E.A. and Z.D.; methodology, M.E.A.; software, M.E.A.; validation, M.E.A.; formal analysis, M.E.A.; investigation, M.E.A.; resources, Z.D.; data curation, M.E.A.; writing—original draft preparation, M.E.A.; writing—review and editing, Z.D.; visualization, M.E.A.; supervision, Z.D.; project administration, Z.D.; funding acquisition, Z.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Istanbul Technical University Scientific Research Office (BAP) grant number MDK-2021-42992.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The research presented in this article constitutes a part of the first author’s Ph.D. thesis study at the Graduate School of Istanbul Technical University (ITU).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bello, S.A.; Yu, S.; Wang, C.; Adam, J.M.; Li, J. Review: Deep learning on 3D point clouds. Remote Sens. 2020, 12, 1729.

- Griffiths, D.; Boehm, J. A Review on deep learning techniques for 3D sensed data classification. Remote Sens. 2019, 11, 1499.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

- Duran, Z.; Aydar, U. Digital modeling of world’s first known length reference unit: The Nippur cubit rod. J. Cult. Herit. 2012, 13, 352–356.

- Hoang, L.; Lee, S.H.; Lee, E.J.; Kwon, K.R. GSV-NET: A Multi-Modal Deep Learning Network for 3D Point Cloud Classification. Appl. Sci. 2022, 12, 483.

- He, Y.; Chen, W.; Li, C.; Luo, X.; Huang, L. Fast and accurate lane detection via graph structure and disentangled representation learning. Sensors 2021, 21, 4657.

- Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. SqueezeSegV2: Improved model structure and unsupervised domain adaptation for road-object segmentation from a LiDAR point cloud. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382.

- Akyol, O.; Duran, Z. Low-Cost Laser Scanning System Design. J. Russ. Laser Res. 2014, 35, 244–251.

- Rim, B.; Lee, A.; Hong, M. Semantic segmentation of large-scale outdoor point clouds by encoder–decoder shared MLPs with multiple losses. Remote Sens. 2021, 13, 3121.

- Weinmann, M.; Jutzi, B.; Hinz, S.; Mallet, C. Semantic point cloud interpretation based on optimal neighborhoods, relevant features and efficient classifiers. ISPRS J. Photogramm. Remote Sens. 2015, 105, 286–304.

- Atik, M.E.; Duran, Z.; Seker, D.Z. Machine learning-based supervised classification of point clouds using multiscale geometric features. ISPRS Int. J. Geo-Inf. 2021, 10, 187.

- Atik, M.E.; Duran, Z. Classification of Aerial Photogrammetric Point Cloud Using Recurrent Neural Networks. Fresenius Environ. Bull. 2021, 30, 4270–4275.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Atik, S.O.; Ipbuker, C. Building Extraction in VHR Remote Sensing Imagery Through Deep Learning. Fresenius Environ. Bull. 2022, 31, 8468–8473.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Atik, S.O.; Ipbuker, C. Integrating convolutional neural network and multiresolution segmentation for land cover and land use mapping using satellite imagery. Appl. Sci. 2021, 11, 5551.

- Atik, S.O.; Atik, M.E.; Ipbuker, C. Comparative research on different backbone architectures of DeepLabV3+ for building segmentation. J. Appl. Remote Sens. 2022, 16, 024510.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11108–11117.

- Landrieu, L.; Simonovsky, M. Large-Scale Point Cloud Semantic Segmentation with Superpoint Graphs. arXiv 2017, arXiv:1711.09869.

- Liu, H.; Setiono, R. Chi2: Feature selection and discretization of numeric attributes. In Proceedings of the Seventh IEEE International Conference on Tools with Artificial Intelligence, Herndon, VA, USA, 29–31 May 1995; pp. 388–391.

- Robnik-Šikonja, M.; Kononenko, I. Theoretical and Empirical Analysis of ReliefF and RReliefF. Mach. Learn. 2003, 53, 23–69.

- Tan, W.; Qin, N.; Ma, L.; Li, Y.; Du, J.; Cai, G.; Yang, K.; Li, J. Toronto-3D: A large-scale mobile LiDAR dataset for semantic segmentation of urban roadways. arXiv 2020, arXiv:2003.08284.

- Nagy, B.; Benedek, C. 3D CNN-based semantic labeling approach for mobile laser scanning data. IEEE Sens. J. 2019, 19, 7269.

- Deschaud, J.E.; Duque, D.; Richa, J.P.; Velasco-Forero, S.; Marcotegui, B.; Goulette, F. Paris-CARLA-3D: A Real and Synthetic Outdoor Point Cloud Dataset for Challenging Tasks in 3D Mapping. Remote Sens. 2021, 13, 4713.

- Lawin, F.J.; Danelljan, M.; Tosteberg, P.; Bhat, G.; Khan, F.S.; Felsberg, M. Deep projective 3D semantic segmentation. In Proceedings of the 2017 International Conference on Computer Analysis of Images and Patterns, Ystad, Sweden, 22–24 August 2017; pp. 95–107.

- Meng, H.Y.; Gao, L.; Lai, Y.K.; Manocha, D. VV-Net: Voxel VAE net with group convolutions for point cloud segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 8500–8508.

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. PointNet: Deep learning on point sets for 3D classification and segmentation. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 77–85.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5105–5114.

- Jiang, M.; Wu, Y.; Zhao, T.; Zhao, Z.; Lu, C. PointSIFT: A SIFT-like Network Module for 3D Point Cloud Semantic Segmentation. arXiv 2018, arXiv:1807.00652.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Engelmann, F.; Kontogianni, T.; Schult, J.; Leibe, B. Know what your neighbors do: 3D semantic segmentation of point clouds. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zhao, H.; Jiang, L.; Fu, C.W.; Jia, J. PointWeb: Enhancing local neighborhood features for point cloud processing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5565–5573.

- Zhang, Z.; Hua, B.S.; Yeung, S.K. ShellNet: Efficient point cloud convolutional neural networks using concentric shells statistics. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2019; pp. 1607–1616.

- Thomas, H.; Qi, C.R.; Deschaud, J.E.; Marcotegui, B.; Goulette, F.; Guibas, L. KPConv: Flexible and deformable convolution for point clouds. arXiv 2019, arXiv:1904.08889.

- Li, Y.; Bu, R.; Sun, M.; Wu, W.; Di, X.; Chen, B. PointCNN: Convolution on X-transformed points. In Advances in Neural Information Processing Systems 31; Bengio, S., Wallach, H., Larochelle, H., Grauman, K., Cesa-Bianchi, N., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2018; pp. 820–830.

- Xu, Y.; Fan, T.; Xu, M.; Zeng, L.; Qiao, Y. SpiderCNN: Deep learning on point sets with parameterized convolutional filters. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018.

- Boulch, A. ConvPoint: Continuous convolutions for point cloud processing. Comput. Graph. 2020, 88, 24–34.

- Zhou, H.; Feng, Y.; Fang, M.; Wei, M.; Qin, J.; Lu, T. Adaptive Graph Convolution for Point Cloud Analysis. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual, 10–17 October 2021; pp. 4965–4974.

- Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S.E.; Bronstein, M.M.; Solomon, J.M. Dynamic Graph CNN for learning on point clouds. ACM Trans. Graph. 2019, 38, 1–12.

- Lin, Z.H.; Huang, S.Y.; Wang, Y.C.F. Convolution in the cloud: Learning deformable kernels in 3D graph convolution networks for point cloud analysis. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1800–1809.

- Liu, J.; Ni, B.; Li, C.; Yang, J.; Tian, Q. Dynamic points agglomeration for hierarchical point sets learning. Proc. IEEE Int. Conf. Comput. Vis. 2019, 2019, 7545–7554.

- Maturana, D.; Scherer, S. VoxNet: A 3D Convolutional Neural Network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 922–928.

- Liao, L.; Tang, S.; Liao, J.; Li, X.; Wang, W.; Li, Y.; Guo, R. A Supervoxel-Based Random Forest Method for Robust and Effective Airborne LiDAR Point Cloud Classification. Remote Sens. 2022, 14, 1516.

- Choy, C.; Gwak, J.; Savarese, S. 4D Spatio-Temporal ConvNets: Minkowski Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3075–3084.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360.

- Wu, B.; Wan, A.; Yue, X.; Keutzer, K. SqueezeSeg: Convolutional Neural Nets with Recurrent CRF for Real-Time Road-Object Segmentation from 3D LiDAR Point Cloud. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 1887–1893.

- Milioto, A.; Vizzo, I.; Behley, J.; Stachniss, C. RangeNet++: Fast and Accurate LiDAR Semantic Segmentation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Macau, China, 4–8 November 2019; IEEE: New York, NY, USA, 2019; pp. 4213–4220.

- Cortinhal, T.; Tzelepis, G.; Aksoy, E.E. SalsaNext: Fast, Uncertainty-Aware Semantic Segmentation of LiDAR Point Clouds. In Advances in Visual Computing, ISVC 2020, Lecture Notes in Computer Science; Bebis, G., Ed.; Springer: Cham, Switzerland, 2020; Volume 12510, pp. 207–222.

- Aksoy, E.E.; Baci, S.; Cavdar, S. SalsaNet: Fast Road and Vehicle Segmentation in LiDAR Point Clouds for Autonomous Driving. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019; pp. 926–932.

- Biasutti, P.; Lepetit, V.; Aujol, J.F.; Bredif, M.; Bugeau, A. LU-net: An efficient network for 3D LiDAR point cloud semantic segmentation based on end-to-end-learned 3D features and U-net. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Korea, 27–28 October 2019.

- Atik, M.E.; Duran, Z. An Efficient Ensemble Deep Learning Approach for Semantic Point Cloud Segmentation Based on 3D Geometric Features and Range Images. Sensors 2022, 22, 6210.

- Jaritz, M.; Gu, J.; Su, H. Multi-view PointNet for 3D Scene Understanding. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Seoul, Korea, 1 October 2019; pp. 3995–4003.

- Meng, Q.; Wang, W.; Zhou, T.; Shen, J.; Jia, Y.; Van Gool, L. Towards a Weakly Supervised Framework for 3D Point Cloud Object Detection and Annotation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 4454–4468.

- Wu, B.; Chen, C.; Kechadi, T.M.; Sun, L. A comparative evaluation of filter-based feature selection methods for hyper-spectral band selection. Int. J. Remote Sens. 2013, 34, 7974–7990.

- Lei, S. A feature selection method based on information gain and genetic algorithm. In Proceedings of the 2012 International Conference on Computer Science and Electronics Engineering, Hangzhou, China, 23–25 March 2012; Volume 2, pp. 355–358.

- Colkesen, I.; Kavzoglu, T. Selection of Optimal Object Features in Object-Based Image Analysis Using Filter-Based Algorithms. J. Indian Soc. Remote Sens. 2018, 46, 1233–1242.

- Kononenko, I. Estimating attributes: Analysis and extensions of RELIEF. In Proceedings of the European Conference on Machine Learning; Springer: Catania, Italy, 1994; pp. 171–182.

- Duran, Z.; Ozcan, K.; Atik, M.E. Classification of photogrammetric and airborne lidar point clouds using machine learning algorithms. Drones 2021, 5, 104.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; Vanderplas, J.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Holmes, G.; Donkin, A.; Witten, I.H. WEKA: A machine learning workbench. In Proceedings of ANZIIS ’94, Australian New Zealand Intelligent Information Systems Conference, Brisbane, Australia, 29 November–2 December 1994.

- Zhou, Q.Y.; Park, J.; Koltun, V. Open3D: A Modern Library for 3D Data Processing. arXiv 2018, arXiv:1801.09847.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).