1. Introduction

The modern world relies heavily on digital imaging, both motion and still, captured by a myriad of devices of all kinds, and the trend is growing rapidly. All these images must be processed, which implies understanding their content and making decisions based on it. In the end, most digital content is irrelevant to the decision-making process, but it must nevertheless be examined in order to be categorized. While human workers are still the best choice for understanding an image, the sheer amount of digital content to be processed is far beyond their capabilities: nobody can afford to hire and retain the army of people needed to analyze all the digital imagery that must be processed. Besides being huge, the task is also repetitive and, ultimately, menial, which makes it prone to errors. This is where computers can step in, performing the menial, repetitive tasks that make up the bulk of the processing and leaving only the final decision-making steps to humans. Even some simpler decisions can be entrusted to computers.

The understanding of digital imagery content by computers is generally referred to as “computer vision”, an umbrella term that covers all the research conducted in this field.

The processing power of computers grows constantly, but so does the amount of processing needed, which leads to an “arms race”. Time is a critical resource, and reducing processing time is the main goal. Computers need better arms in this fight, and these take the form of better algorithms. Technological advances translate into images with higher resolution, so more and more pixels are available for computers to process, yet for many tasks not all of those pixels are relevant. One of the “weapons” in the arsenal is to use lower-resolution images during processing and then apply the results to the full-scale image. The lower-resolution image retains the information relevant to the processing algorithm and limits the consumption of computing power to what matters. However, it cannot be known in advance which reduced scale is best suited to a given process. Finding the right balance between time consumed and quality of results can be difficult or even impossible, and the search itself may take so long that it defeats the initial goal of reducing processing time. A way around this conundrum is to use several reduced-scale images with different scaling factors. In the literature, this is called the “multi-scale” or “pyramid” strategy, and research indicates that it can be used successfully in many tasks.

This paper is structured in sections as follows.

Section 2 provides a brief review of state-of-the-art works regarding multi-scale processing techniques.

Section 3 summarizes the memetic approach to image registration and the algorithm we introduced in [1].

Section 4 is the main part of this article, in which we describe in detail the new method that extends the bio-inspired clustered technique presented in Section 3 to a multi-scale approach. Computation is carried out in different reduced representations to obtain promising initial solutions and to identify search directions leading to the global optimum. The section also covers the computation of the search space, a justification for using multi-scale images, and the proposed methodology. The proposed algorithm was tested on various images, and the experimental results are reported in Section 5. A comparative analysis against classical image registration methods confirms the validity of the proposed algorithm, which outperforms those methods in terms of accuracy. In addition, the results show significant runtime improvements compared to the algorithm presented in Section 3. In the last section, we summarize our conclusions and future development directions.

2. Literature Review

The idea of multi-scale processing is not new; the reasons for it became apparent early in the development of computers and computer vision. Key articles at the base of multi-scaling [2,3,4] indicate two main reasons for using lower-resolution images in various processing stages of computer vision: first, the obvious reduction in the processing power needed; second, a lower resolution eliminates distracting pixels, so the context of the image is better understood and can then be applied to the high-resolution image.

Multi-scale images have been used successfully for various resource-intensive tasks. The identification of elements in images is one such task, and it has been studied intensively. One research direction looks into identifying objects of interest in an image (salient objects), while another aims to identify the same object in a set of images acquired by various sources. In [5], the authors show that multi-scale images are related to the way biological vision segments and identifies objects and understands the perceived image.

The detection of salient segments using adapted graph algorithms and numerical techniques applied to multi-scale versions of an image is explored in [6]. In [7,8], Convolutional Neural Networks (CNNs) are used to build models for salient object detection (objects that attract the attention of the eye) with the purpose of object detection. The CNNs are trained on reduced-scale images, which provide the needed information without wasting computing time on irrelevant details. The trained networks can then be used to detect objects of interest in high-resolution images for various purposes, including personal identification. In [9], multiple scaled versions of an image are also used to identify targets of various sizes (both large and small) with the YOLO (You Only Look Once) v3 CNN.

Re-identification (i.e., identifying the same subject in multiple images) is an important task when processing images captured by different cameras. In [10], the meaningful information contained in reduced-scale images is harnessed for vehicle re-identification in images captured by non-overlapping security cameras. In [11,12], the same idea is used for person re-identification, with the various reduced-scale images providing useful explicit information for this goal. The identification of objects in still or motion images is also accomplished using multi-scale image processing in [13].

From a more engineering-oriented perspective, multi-scale images have been used to detect and classify specific elements of importance. In [14], reduced-scale images (scales 2 and 4) are used to train a CNN to identify and outline six types of damage in civil infrastructure, thus relieving human inspectors of a huge workload that is usually beyond their physical capabilities. An improved image denoising method that combines multi-scaling with a Normalized Attention Neural Network is proposed in [15]. In [16], the authors use multi-scaling to overcome the difficulties of detecting and identifying very specific objects (ships) in single-sensor images in the infrared and visible spectra.

The biomedical field also benefits from the additional information found in reduced-scale images. In [17], the authors show that machine learning performs very well in image recognition, thus helping diagnosis, but less well in prognosis. They also discuss integrating machine learning with multi-scale modelling to improve prognostic performance.

3. Memetic Approach of Image Registration

Hybrid and memetic algorithms are among the most commonly used metaheuristics for image alignment, since the evolutionary process is enhanced with local search techniques that reduce the risk of premature convergence and speed up computation. In the following, we present the basic method we proposed in [1]. In Section 4, this method is extended to multi-scale processing to speed up computation and is equipped with specialized mechanisms to avoid premature convergence.

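The degradation model is geometric, consisting of translations, rotations, and non-uniform scaling. We denote the target image by T and let S be the observed image, i.e., the perturbed version of T, where

$$S(f_p(x, y)) = T(x, y) \quad (1)$$

and

$$f_p(x, y) = \begin{pmatrix} s_x & 0 \\ 0 & s_y \end{pmatrix} \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix} + \begin{pmatrix} a \\ b \end{pmatrix}. \quad (2)$$

The parameter vector defining (2) is p = (a, b, s_x, s_y, θ), where (a, b) is the translation vector, s_x and s_y are the scale factors, s_x, s_y > 0, and θ is the rotation angle. Note that the rotation is relative to the upper left corner of the image. From a mathematical point of view, aligning S to T means computing g = f_p^{-1}, the transformation that reverses (2), that is:

$$T(g(x, y)) = S(x, y). \quad (3)$$

The inverse transformation g is computed as:

$$g(x, y) = \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} 1/s_x & 0 \\ 0 & 1/s_y \end{pmatrix} \begin{pmatrix} x - a \\ y - b \end{pmatrix}. \quad (4)$$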

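To solve the image alignment problem using evolutionary computing, one has to define the chromosome representation, the search space, and the fitness function. In our work, the images are binarized by applying an edge detector; we denote by B(·) the binarized version of an image. The chromosome space coincides with the phenotype space, and it is defined by the box of admissible values of the parameter vector p = (a, b, s_x, s_y, θ). The boundaries of the translation parameters are evaluated based on the ranges of the remaining parameters and on the object pixels in T and S [1]. The quality of a chromosome corresponding to a parameter vector p1 is defined in our work by the Dice similarity between the target and the result computed by (3):

$$\mathrm{fitness}(p_1) = \mathrm{Dice}\big(B(T), B(\hat{S}_{p_1})\big), \quad (5)$$

where Ŝ_{p1} denotes the image obtained by aligning S with the inverse transformation corresponding to p1.

The Dice coefficient of the binary images A and B, viewed as sets of object pixels, is defined by [18]:

$$\mathrm{Dice}(A, B) = \frac{2\,|A \cap B|}{|A| + |B|}, \quad (6)$$

where |·| is the cardinal function.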
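As a minimal sketch (Python with NumPy, chosen here for illustration and not the authors' implementation), the Dice coefficient of two binary edge maps of equal size can be computed directly, treating each map as the set of its nonzero pixels. The convention that two empty maps have a Dice value of 1 is an assumption of this sketch.

```python
import numpy as np


def dice(a: np.ndarray, b: np.ndarray) -> float:
    """Dice similarity 2|A & B| / (|A| + |B|) of two same-shape binary images."""
    a = np.asarray(a, dtype=bool)
    b = np.asarray(b, dtype=bool)
    total = int(a.sum()) + int(b.sum())
    if total == 0:
        return 1.0  # assumed convention: two empty edge maps are identical
    return 2.0 * int(np.logical_and(a, b).sum()) / total


# Two 4 x 4 edge maps with 3 pixels each, 2 of which coincide.
A = np.zeros((4, 4), dtype=bool)
B = np.zeros((4, 4), dtype=bool)
A[0, 0] = A[1, 1] = A[2, 2] = True
B[0, 0] = B[1, 1] = B[3, 3] = True
print(dice(A, B))  # 2 * 2 / (3 + 3) = 0.666...
```

Because the value lies in [0, 1] and reaches 1 only when the two contour sets coincide, it can be used directly as a fitness to be maximized.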

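The registration mechanism combines a version of Firefly Algorithm (FA) global optimization [19,20] with the local search implemented by the Two-Membered Evolution Strategy (2MES) [21] applied to clustered data. Let x^{(0)} be an input individual, and denote the initial step size by σ^{(0)}. At each moment t, 2MES iteratively updates the point x^{(t)} using

$$x^{(t+1)} = \begin{cases} x^{(t)} + z, & \text{if } \mathrm{fitness}(x^{(t)} + z) > \mathrm{fitness}(x^{(t)}) \\ x^{(t)}, & \text{otherwise} \end{cases} \quad (7)$$

where z is a random value drawn from N(0, (σ^{(t)})² I). The step-size parameter σ^{(t)} is updated according to the celebrated 1/5 success rule [21]:

$$\sigma^{(t+1)} = \begin{cases} c\,\sigma^{(t)}, & \text{if } \varphi(k) < 1/5 \\ \sigma^{(t)}/c, & \text{if } \varphi(k) > 1/5 \\ \sigma^{(t)}, & \text{if } \varphi(k) = 1/5 \end{cases} \quad (8)$$

where 0 < c < 1 and φ(k) is the success rate, i.e., the rate of accepted displacements over the last k updates.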
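The local search step can be sketched as a (1+1) evolution strategy with the 1/5 success rule. This is a minimal Python illustration under our own assumptions: Gaussian mutations clipped to the search box, acceptance of strict improvements only, a step-size update every k mutations, and the illustrative constant c = 0.85. The function name and its arguments are hypothetical and do not reproduce the authors' code.

```python
import numpy as np


def two_membered_es(fitness, x0, sigma0, lower, upper,
                    max_iter=1000, k=10, c=0.85, seed=0):
    """(1+1)-ES maximizing `fitness` inside the box [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    x = np.asarray(x0, dtype=float)
    fx = fitness(x)
    sigma = float(sigma0)
    outcomes = []  # 1 for an accepted mutation, 0 for a rejected one
    for t in range(max_iter):
        z = rng.normal(0.0, sigma, size=x.shape)  # z ~ N(0, sigma^2 I)
        y = np.clip(x + z, lower, upper)          # stay inside the search space
        fy = fitness(y)
        accepted = fy > fx
        if accepted:
            x, fx = y, fy
        outcomes.append(int(accepted))
        if (t + 1) % k == 0:
            phi = sum(outcomes[-k:]) / k          # success rate of the last k updates
            if phi > 0.2:
                sigma /= c                        # many successes: enlarge the step
            elif phi < 0.2:
                sigma *= c                        # few successes: shrink the step
    return x, fx, sigma


# Usage: maximize a concave test function whose optimum is (1, 2).
target = np.array([1.0, 2.0])
best, best_val, final_sigma = two_membered_es(
    lambda v: -np.sum((v - target) ** 2), x0=[0.0, 0.0], sigma0=1.0,
    lower=[-5.0, -5.0], upper=[5.0, 5.0])
```

The 1/5 rule keeps the step size proportional to the distance to the optimum: when most mutations succeed the steps are too timid and are enlarged, and when few succeed they are shrunk.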

FA is a nature-inspired search that simulates the behavior of fireflies in terms of bioluminescence. The position of a firefly corresponds to a candidate solution of (1), its fitness being measured by the corresponding light intensity. Each firefly j attracts less bright fireflies i, i.e., j modifies the positions x_i according to:

$$x_i \leftarrow x_i + \beta_{ij}\,(x_j - x_i) + \alpha\,\varepsilon_i, \quad (9)$$

where α is the randomness parameter, ε_i is a random vector, and β_{ij} is the attractiveness of firefly j as seen by firefly i. The attractiveness function is defined as:

$$\beta(r_{ij}) = \beta_0\, e^{-\gamma r_{ij}^2}, \quad (10)$$

where r_{ij} is the distance between fireflies i and j, β_0 is the attractiveness at r_{ij} = 0, and γ stands for the light absorption coefficient. In our work, we use the updating rule and the border reflection mechanism introduced in [22].
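One FA iteration, following the update and attractiveness rules above, can be sketched in Python as follows. This is an illustration rather than the authors' implementation: the uniform noise on [-0.5, 0.5], the Euclidean distance, and the simple mirror-style border reflection are assumptions of this sketch and may differ from the reflection mechanism of [22].

```python
import numpy as np


def reflect(x, lower, upper):
    """Mirror coordinates that leave the box back inside it (assumed scheme)."""
    x = np.where(x > upper, 2 * upper - x, x)
    x = np.where(x < lower, 2 * lower - x, x)
    return np.clip(x, lower, upper)  # guard against very large overshoots


def firefly_iteration(pop, fit, alpha, beta0, gamma, lower, upper, rng):
    """Move every firefly toward each brighter one (maximization)."""
    new = pop.copy()
    for i in range(len(pop)):
        for j in range(len(pop)):
            if fit[j] > fit[i]:                      # j is brighter than i
                r2 = np.sum((new[i] - pop[j]) ** 2)  # squared distance r_ij^2
                beta = beta0 * np.exp(-gamma * r2)   # attractiveness beta(r_ij)
                eps = rng.uniform(-0.5, 0.5, size=pop.shape[1])
                new[i] = new[i] + beta * (pop[j] - new[i]) + alpha * eps
        new[i] = reflect(new[i], lower, upper)
    return new


rng = np.random.default_rng(1)
lower, upper = np.array([-5.0, -5.0]), np.array([5.0, 5.0])
pop = rng.uniform(lower, upper, size=(8, 2))
fit = -np.sum(pop ** 2, axis=1)  # brighter = closer to the origin
moved = firefly_iteration(pop, fit, alpha=0.2, beta0=1.0, gamma=0.1,
                          lower=lower, upper=upper, rng=rng)
```

The brightest firefly is never attracted by another one, so it keeps its position; the others drift toward brighter neighbours, more strongly when they are close, which is the role of the absorption coefficient γ.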

The registration algorithm reported in [1] is summarized below as Algorithm 1, whose inputs include the FA parameters, the 2MES input arguments, and MAX, the size of the sequence of individuals.

| Algorithm 1 Cluster-Based Memetic Algorithm |

| 1. | Input: the binarized versions of S and T, 2MES parameters, FA parameters, the number of iterations NMax, K (K < NMax), and the fitness value threshold |

| 2. | Compute the boundaries of the search space |

| 3. | Compute the initial population: randomly generate the candidate solutions and apply the 2MES procedure to locally improve a small number of individuals |

| 4. | Evaluate the initial population and find the best; t = 0 |

| 5. | while t < NMax and the highest fitness value < threshold do |

| 6. | Execute one FA iteration |

| 7. | Compute the best individual |

| 8. | if the best fitness value has not been improved |

| 9. | if the best fitness value has not been improved during the last K iterations, apply the premature convergence avoidance mechanism: |

| 10. | Increase the step size of 2MES, |

| 11. | Increase the number of clusters |

| 12. | Replace a small number of individuals with randomly generated and locally improved ones |

| 13. | end if |

| 14. | Apply k-means to split the population into clusters and locally improve the best individual from each class using 2MES |

| 15. | Keep the best individual in the current population |

| 16. | end if |

| 17. | t = t + 1 |

| 18. | end while |

| 19. | Output: The best individual corresponding to the perturbation parameter vector |

4. The New Multi-Scale Methodology

The algorithm aligns an observed image S to the target T by extending the bio-inspired cluster-based technique described in Section 3 to a multi-scale approach that includes mechanisms especially tailored to avoid premature convergence. The underlying idea of the proposed methodology is that computation carried out in different reduced representations can produce both promising initial solutions and individuals able to redirect the search toward the global optimum. Furthermore, multi-scale processing may significantly improve registration accuracy and lead to faster algorithms.

4.1. The Geometric Degradation Model and the Search Space

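The proposed approach aims to register images perturbed by translations, rotations, and non-uniform scaling according to (1). The translation domain can be narrowed down by considering the following transformation instead of (2):

$$f_p(x, y) = \begin{pmatrix} m \\ n \end{pmatrix} + \begin{pmatrix} s_x & 0 \\ 0 & s_y \end{pmatrix} \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} x - m \\ y - n \end{pmatrix} + \begin{pmatrix} a \\ b \end{pmatrix}, \quad (11)$$

where the rotation is relative to the center of the image (m, n). In this case, the inverse transformation g is given by:

$$g(x, y) = \begin{pmatrix} m \\ n \end{pmatrix} + \begin{pmatrix} \cos\theta & \sin\theta \\ -\sin\theta & \cos\theta \end{pmatrix} \begin{pmatrix} 1/s_x & 0 \\ 0 & 1/s_y \end{pmatrix} \begin{pmatrix} x - m - a \\ y - n - b \end{pmatrix}. \quad (12)$$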

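The search space boundaries are set as follows. We assume that the scale factors and the rotation angle lie in known bounded intervals. The alignment is performed using binarized versions of S and T computed by the Canny edge detector [23]; therefore, the input images are represented by sets of contour pixels. We denote by B(T) and B(S) the binarized versions of T and S, respectively. Since the elements in B(S) are obtained from those in B(T) using (11), the translation vector (a, b) equals the difference between a contour pixel of S and the image of the corresponding contour pixel of T under the rotation and scaling part of (11). By straightforward computation, we obtain the bounds (13)–(16) for a and b, expressed in terms of the contour pixels in B(S) and B(T) and of two functions of the scale and rotation parameters. Note that these two functions are bounded and attain their extreme values over the parameter domain. From a practical point of view, these extreme values can be computed in many ways. In our work, we used the pattern search method implemented by the MATLAB function patternsearch [24].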
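To make the center-relative model concrete, the following Python sketch applies a transformation of the form f(u) = c + A(u − c) + t to a set of pixel coordinates and then inverts it, where c = (m, n) is the image center, t = (a, b), and A = diag(s_x, s_y)·R(θ). The order of the scaling and rotation factors inside A and the helper names are assumptions made for illustration.

```python
import numpy as np


def linear_part(sx, sy, theta):
    """A = diag(sx, sy) @ R(theta); the order of the factors is an assumption."""
    c, s = np.cos(theta), np.sin(theta)
    return np.diag([sx, sy]) @ np.array([[c, -s], [s, c]])


def perturb(points, p, center):
    """Map (N, 2) coordinates u to c + A (u - c) + t, with p = (a, b, sx, sy, theta)."""
    a, b, sx, sy, theta = p
    A = linear_part(sx, sy, theta)
    return (points - center) @ A.T + center + np.array([a, b])


def invert(points, p, center):
    """Inverse map g(v) = c + A^{-1} (v - c - t)."""
    a, b, sx, sy, theta = p
    A_inv = np.linalg.inv(linear_part(sx, sy, theta))
    return (points - center - np.array([a, b])) @ A_inv.T + center


center = np.array([159.5, 121.0])  # center of a 320 x 243 image, 0-based (x, y)
p = (12.0, -7.0, 1.1, 0.9, np.deg2rad(15.0))
pts = np.array([[0.0, 0.0], [319.0, 242.0], [159.5, 121.0]])
restored = invert(perturb(pts, p, center), p, center)
```

Rotating and scaling about the center, rather than about the upper left corner, keeps the center fixed when the translation is zero, which is what narrows the admissible translation domain.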

4.2. The Multi-Scale Representation of Images

A long series of research works on multi-scale image processing using various mathematical tools has been reported in the literature [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17]. In our approach, scaling refers to the uniform change of object sizes in the processed images and corresponds to the standard geometric transformation (11) with zero translation, zero rotation, and both scale factors equal to 1/s. Note that the dimensions of the input images remain unchanged, while the sizes of the binary representations B(S) and B(T) decrease proportionally to s.

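Let s > 1 be a stretching factor, T the target image, S the version of T perturbed by (11) with parameter vector p = (a, b, s_x, s_y, θ), and D the search space corresponding to the inputs S and T. The representations of S and T at scale s, denoted by S_s and T_s, lead to a narrowed search space D_s. Indeed, denoting by h_s the uniform scaling by 1/s relative to the image center (m, n),

$$h_s(x, y) = \begin{pmatrix} m \\ n \end{pmatrix} + \frac{1}{s}\begin{pmatrix} x - m \\ y - n \end{pmatrix},$$

we obtain:

$$S_s(h_s(x, y)) = S(x, y), \qquad T_s(h_s(x, y)) = T(x, y).$$

Denoting by f_p the transformation (11), a direct computation gives h_s ∘ f_p ∘ h_s^{-1} = f_{p'}, where p' = (a/s, b/s, s_x, s_y, θ). Since, by (1), S(f_p(x, y)) = T(x, y), the following relation holds:

$$S_s(f_{p'}(x, y)) = T_s(x, y). \quad (20)$$

Consequently, aligning S to T can be reduced to registering S_s and T_s, that is, to computing p'. The translation ranges of D_s are those of D divided by s, while the scale and rotation ranges are unchanged.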
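The reduction to the scaled problem can be checked numerically. The sketch below assumes the center-relative form f_p(u) = c + A(u − c) + (a, b) for (11), with A = diag(s_x, s_y)·R(θ), and models the scale-s representation as the map h_s(u) = c + (u − c)/s. Under these assumptions, shrinking both point sets by s maps p = (a, b, s_x, s_y, θ) to p' = (a/s, b/s, s_x, s_y, θ): only the translation is divided by s. The helper names and the random stand-in for B(T) are hypothetical.

```python
import numpy as np


def perturb(points, p, center):
    """c + A (u - c) + (a, b), with A = diag(sx, sy) @ R(theta) (assumed form)."""
    a, b, sx, sy, theta = p
    c, s = np.cos(theta), np.sin(theta)
    A = np.diag([sx, sy]) @ np.array([[c, -s], [s, c]])
    return (points - center) @ A.T + center + np.array([a, b])


def shrink(points, s, center):
    """Scale-s representation: uniform scaling by 1/s about the image center."""
    return center + (points - center) / s


def scaled_params(p, s):
    """Parameters of the same perturbation expressed at scale s."""
    a, b, sx, sy, theta = p
    return (a / s, b / s, sx, sy, theta)


rng = np.random.default_rng(0)
center = np.array([159.5, 121.0])
edge_T = rng.uniform([0, 0], [319, 242], size=(50, 2))  # stand-in for B(T)
p = (20.0, -9.0, 1.2, 0.8, np.deg2rad(-10.0))
s = 12.0

# Shrinking the perturbed points equals perturbing the shrunk points with p'.
lhs = shrink(perturb(edge_T, p, center), s, center)
rhs = perturb(shrink(edge_T, s, center), scaled_params(p, s), center)
```

Under the same assumptions, a chromosome found at scale s2 is converted to scale s1 by multiplying its translation components by s2/s1 and keeping its scale and rotation components.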

From an implementation point of view, scaling digital images by large factors s involves information loss due to object shrinkage. On the other hand, the binary representations of the scaled images contain fewer pixels than B(S) and B(T), which yields faster registration algorithms.

The multi-scale memetic algorithm introduced in the next section involves stages that use images represented at different scale factors. The algorithm moves from one stage to another by converting the chromosome values and the boundaries of the translation parameters according to (20).

4.3. The Proposed Multi-Scale Registration Method

The extension of the cluster-based memetic algorithm described in Section 3 registers pairs of monochrome images (S, T) under the perturbation model defined by (11). If S and T are color images, their monochrome representations can be used instead to compute the inverse of transformation (11).

The proposed algorithm involves a pre-processing stage, as follows. First, the binary representations B(S) and B(T) are computed using a noise-insensitive edge detector, and the search space boundaries are evaluated according to (13)–(16). Then, the data are represented at two scales, s1 and s2, with s1 < s2. The scale s1 is used in the main registration procedure (Algorithm 2), while s2 is used to generate the initial population (Algorithm 3) and to avoid getting stuck in a local optimum (Algorithm 4). The genotypes computed in the search space defined by s2 are converted to the algorithm's scale s1 using transformation (2). One can generalize the multi-scale approach by using multiple scale parameters in Algorithms 3 and 4. The fitness function that controls the evolution of the memetic algorithm is defined by (6), while the method used to cluster the current population of individuals is k-means. Note that the fitness values lie in [0, 1].

First, the population is randomly initialized. Then, a few chromosomes computed by Algorithm 1 at scale s2 are added to it. The memetic registration is an iterative process that applies an FA iteration to compute the new generation, followed by a 2MES-based local improvement of the best chromosome in each cluster. The data are grouped into k clusters, where k varies with the population quality and is bounded by a constant that controls the maximum number of clusters; c_number stands for the initial number of clusters.

To avoid premature convergence, we apply the following procedure. If the best fitness value bval has not changed during the last it iterations, the step size of 2MES is increased by multiplying it by a constant factor that tunes the perturbation size z in (7). If bval has not changed during the last it' > it iterations, new locally improved individuals, together with the result computed by Algorithm 1 at scale s2, replace individuals belonging to the current population.

The proposed method is summarized by Algorithm 2, where P_t denotes the population at moment t. The input arguments are grouped depending on their use, as follows:

general parameters: the input images S and T; the maximum number of iterations, NMax; the population size, n; the threshold fitness value; the scales s1 and s2; the constants c_number, ct1, and ct2; the 2MES inputs; the FA parameters;

Algorithm 3 parameters, corresponding to the Algorithm 1 arguments: the 2MES parameters and MAX; the FA parameters (the same as those in the list of general parameters); NMax1, K1, and the threshold value;

Algorithm 4 parameters: cp, the number of non-effective successive iterations, i.e., iterations without changes in the highest fitness value; it, it', and the step-size multiplier described above; the 2MES parameters (the same as those in the list of general parameters); the Algorithm 1 parameters (the same as those in the list of Algorithm 3 parameters); and the population C_Pop.

| Algorithm 2 Multi-Scale Memetic Algorithm |

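| 1. | Input: S, T, NMax, n, the threshold fitness value, s1, s2, c_number, ct1, ct2, the 2MES inputs, the FA parameters, and the Algorithm 3 and Algorithm 4 parameters |

| 2. | Compute B(S), B(T), their representations at scales s1 and s2, and the corresponding boundaries of each search space |

| 3. | t = 0; cp = 0 |

| 4. | Compute the initial population using Algorithm 3 with the Algorithm 3 parameters |

| 5. | Obtain the representation of the initial population in the s1 scale search space |

| 6. | Evaluate the initial population and compute the best fitness value bval |

| 7. | while t < NMax and bval < threshold do |

| 8. | Apply an FA iteration and get the new population |

| 9. | Compute the best fitness value bval |

| 10. | if |

| 11. | Apply the premature convergence avoiding mechanism (Algorithm 4) with the Algorithm 4 parameters and the current population represented in the s2 scale search space |

| 12. | Get the representation of the resulting population in the s1 scale search space |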

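| 13. | Get the clusters using k-means |

| 14. | for each cluster |

| 15. | Compute the best candidate solution in the cluster |

| 16. | Apply 2MES with the 2MES parameters to locally improve this candidate solution |

| 17. | end for |

| 18. | Compute the best fitness value of the current population |

| 19. | if the best fitness value has been improved |

| 20. | Update bval and the best individual; cp = 0 |

| 21. | else |

| 22. | Keep the best individual in the current population |

| 23. | cp = cp + 1 |

| 24. | end if |

| 25. | end if |

| 26. | t = t + 1 |

| 27. | end while |

| 28. | Output: the best individual, corresponding to the perturbation parameter vector |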

| Algorithm 3 Population at t = 0 |

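| 1. | Input: the Algorithm 3 parameters, the population size n, and the number nr of individuals computed by Algorithm 1 |

| 2. | Randomly generate a set of n - nr individuals |

| 3. | for i = 1, ..., nr |

| 4. | Apply Algorithm 1 at scale s2 to compute the i-th additional individual |

| 5. | end for |

| 6. | Output: the initial population, consisting of the n - nr random individuals and the nr individuals computed by Algorithm 1 |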

| Algorithm 4 Premature Convergence Avoiding Mechanism |

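| 1. | Inputs: the Algorithm 4 parameters (cp, it, it', the step-size multiplier, the 2MES parameters, and the Algorithm 1 parameters) and the population C_Pop |

| 2. | if cp = it |

| 3. | Multiply the 2MES step size by the step-size multiplier |

| 4. | end if |

| 5. | if cp = it' |

| 6. | for each new individual |

| 7. | Randomly generate an individual |

| 8. | Apply 2MES with the 2MES parameters to locally improve it |

| 9. | end for |

| 10. | Apply Algorithm 1 at scale s2 to compute an additional individual |

| 11. | Apply 2MES with the 2MES parameters to locally improve it |

| 12. | Replace individuals in C_Pop by the new individuals |

| 13. | end if |

| 14. | Output: the updated population C_Pop |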

5. Experimental Results and Discussion

A long series of tests on binary, monochrome, and color images has been performed to assess the performance of the new registration algorithm. The computer used for testing has the following configuration: Intel Core i7-10870H, 16 GB DDR4 RAM, 512 GB SSD, NVIDIA GeForce GTX 1650 Ti 4 GB GDDR6.

The performance of the algorithm has been measured in terms of runtime and registration accuracy. The accuracy has been evaluated by a series of measures that reflect the effectiveness of Algorithm 2 comprehensively. The main indicator is the mean success rate recorded over NR runs of Algorithm 2, where a successful run is one that produces an individual whose quality exceeds a given limit. This indicator measures the capability of Algorithm 2 to compute approximations of the global optimum of the fitness function. The success rate of the algorithm that aligns the image S to the target T is computed by:

$$SR(S, T) = \frac{NS}{NR},$$

where NS represents the number of runs with correct registration, and S and T are of the same size, M × N.

We also evaluated the accuracy of Algorithm 2 through similarity indicators computed between the images T and Ŝ, where Ŝ is the image obtained by aligning S using the result of Algorithm 2. Denoting the density function by P, the similarity measures are the following:

Signal-to-Noise Ratio (SNR).

Shannon normalized mutual information [25,26].

Tsallis normalized mutual information of order α [27,28].

A good approximation Ŝ is such that the value of SNR(T, Ŝ) is very large (infinite for Ŝ = T), while the Shannon and Tsallis normalized mutual information values are both near 1. Note that, in the case of significant perturbations, the information in the observed image S is not enough to completely reconstruct T; that is, reversing the exact geometric transformation leads to an image T' possibly different from T. For this reason, the correct way to measure the quality of the registration is to evaluate the ratio

$$RSNR = \frac{SNR(T, \hat{S})}{SNR(T, T')},$$

where T' is the image obtained by aligning S with the exact inverse transformation; the theoretical maximum value is 1.

If T can be completely reconstructed using a geometric transformation, the fitness threshold value is usually set above 0.8. In the case of significant perturbations, the threshold value is set between 0.5 and 0.6.
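The similarity indicators can be sketched in Python as below. The exact formulas are assumptions of this sketch, since several variants exist in the literature: SNR is taken as 10·log10(Σ T² / Σ (T − Ŝ)²), the Shannon normalized mutual information as 2·I(T; Ŝ)/(H(T) + H(Ŝ)) estimated from a joint gray-level histogram, and RSNR as SNR(T, Ŝ)/SNR(T, T'). With these choices, SNR is infinite and the normalized mutual information equals 1 when Ŝ = T; the Tsallis variant is not shown.

```python
import numpy as np


def snr_db(t, s_hat):
    """SNR in dB of s_hat with respect to the reference t (assumed definition)."""
    t = np.asarray(t, dtype=float)
    s_hat = np.asarray(s_hat, dtype=float)
    noise = np.sum((t - s_hat) ** 2)
    if noise == 0:
        return np.inf  # perfect reconstruction
    return 10.0 * np.log10(np.sum(t ** 2) / noise)


def _entropy(p):
    p = p[p > 0]
    return -np.sum(p * np.log2(p))


def shannon_nmi(t, s_hat, bins=256):
    """2 I(T; S) / (H(T) + H(S)) from a joint gray-level histogram (assumed form)."""
    joint, _, _ = np.histogram2d(np.ravel(t), np.ravel(s_hat), bins=bins)
    pxy = joint / joint.sum()
    h_t = _entropy(pxy.sum(axis=1))
    h_s = _entropy(pxy.sum(axis=0))
    mutual_info = h_t + h_s - _entropy(pxy.ravel())
    return 2.0 * mutual_info / (h_t + h_s)


def rsnr(t, s_hat, t_prime):
    """Ratio of the SNR of s_hat to the SNR of the exact-inverse image t_prime."""
    return snr_db(t, s_hat) / snr_db(t, t_prime)


rng = np.random.default_rng(0)
T = rng.integers(0, 256, size=(64, 64)).astype(float)
noisy = np.clip(T + rng.normal(0.0, 10.0, size=T.shape), 0, 255)
noisier = np.clip(T + rng.normal(0.0, 20.0, size=T.shape), 0, 255)
```

The normalized mutual information is undefined for a constant image (both entropies are zero), and RSNR is undefined when both SNR values are infinite; a practical implementation would need to handle these edge cases.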

Since the proposed method is stochastic, the above-mentioned measures are applied NR times to each pair of images, and the recorded result is the corresponding mean value. Consequently, if we denote by Ŝ_1, ..., Ŝ_NR the images obtained when S is aligned using the geometric transformations computed by Algorithm 2 in the NR runs, each accuracy measure m is defined by:

$$\overline{m}(T, S) = \frac{1}{NR}\sum_{i=1}^{NR} m(T, \hat{S}_i).$$

The computational complexity is assessed by the mean runtime:

$$\overline{t} = \frac{1}{NR}\sum_{i=1}^{NR} t_i,$$

where t_1, ..., t_NR are the corresponding runtimes.
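The per-pair summary used in the tables can be sketched as follows: over NR runs, a run counts as a success when its best fitness exceeds the threshold, SR = NS/NR, and each accuracy measure and the runtime are reported by their mean and standard deviation. The function name, the dictionary keys, and the sample numbers are hypothetical, and the use of the population standard deviation (ddof = 0) is an assumption.

```python
import numpy as np


def summarize_runs(best_fitness, threshold, measures, runtimes):
    """Success rate plus mean/std of each measure and of the runtime over NR runs."""
    best_fitness = np.asarray(best_fitness, dtype=float)
    nr = best_fitness.size
    ns = int(np.sum(best_fitness > threshold))  # runs with correct registration
    summary = {"SR": ns / nr}
    for name, values in measures.items():
        values = np.asarray(values, dtype=float)
        summary[name] = (values.mean(), values.std())  # population std (ddof=0)
    runtimes = np.asarray(runtimes, dtype=float)
    summary["runtime"] = (runtimes.mean(), runtimes.std())
    return summary


# Hypothetical values for NR = 4 runs on one image pair.
result = summarize_runs(
    best_fitness=[0.91, 0.86, 0.74, 0.95], threshold=0.8,
    measures={"RSNR": [0.98, 0.97, 0.80, 0.99]},
    runtimes=[3.2, 2.9, 3.5, 3.0])
```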

We used various parameter settings and uniform scaling factors to implement the proposed multi-scale memetic approach and optimize the alignment accuracy and the execution times.

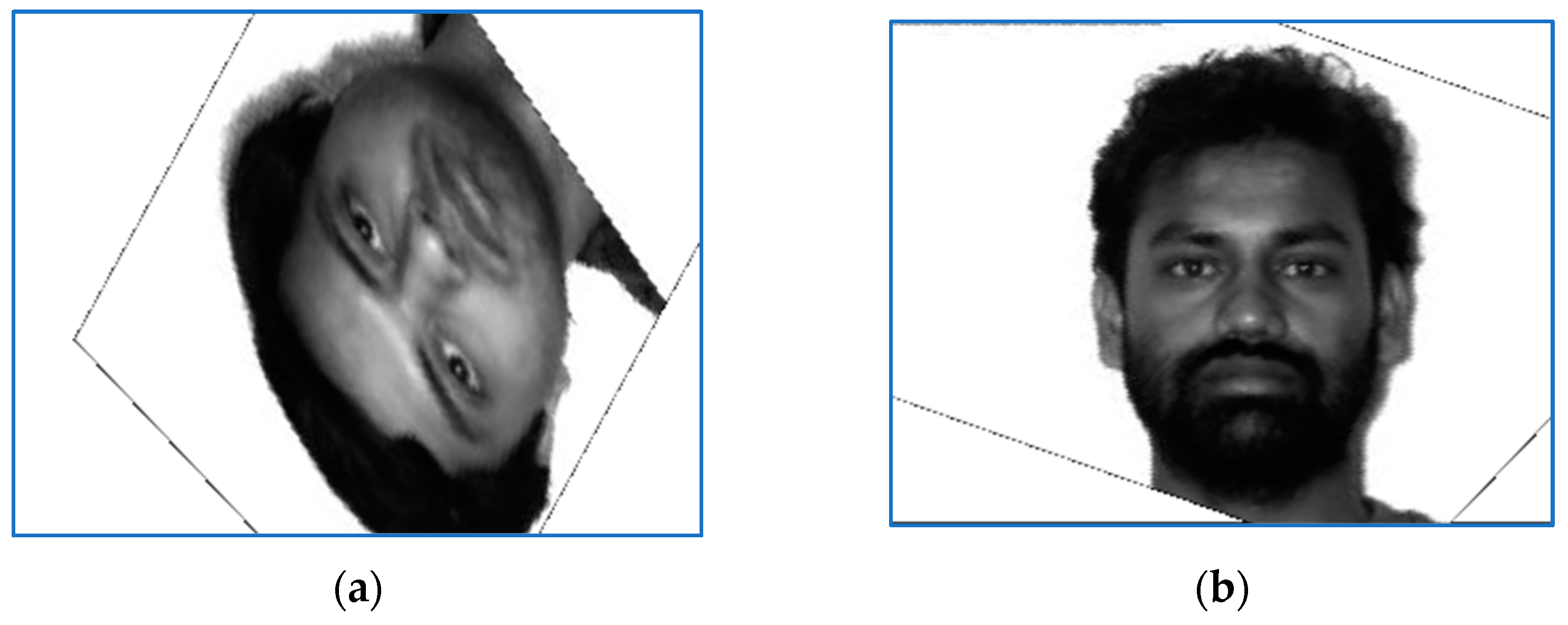

Below, we provide a summary of the registration results obtained for images from the Yale Face Database [29]. The database consists of 165 monochrome face images of 15 persons, 11 samples for each. The spatial resolution of all images is 320 × 243 pixels. Table 1, Table 2, Table 3 and Table 4 present results for 30 test images (two for each person).

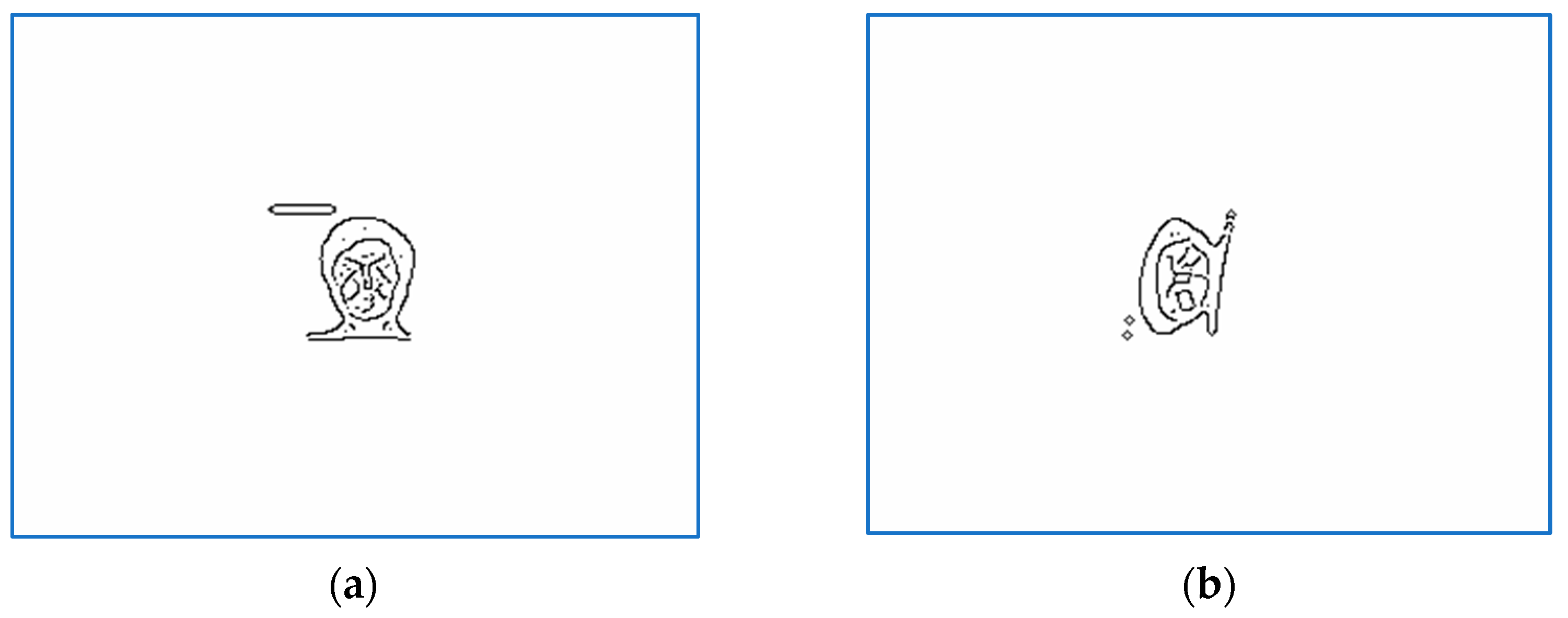

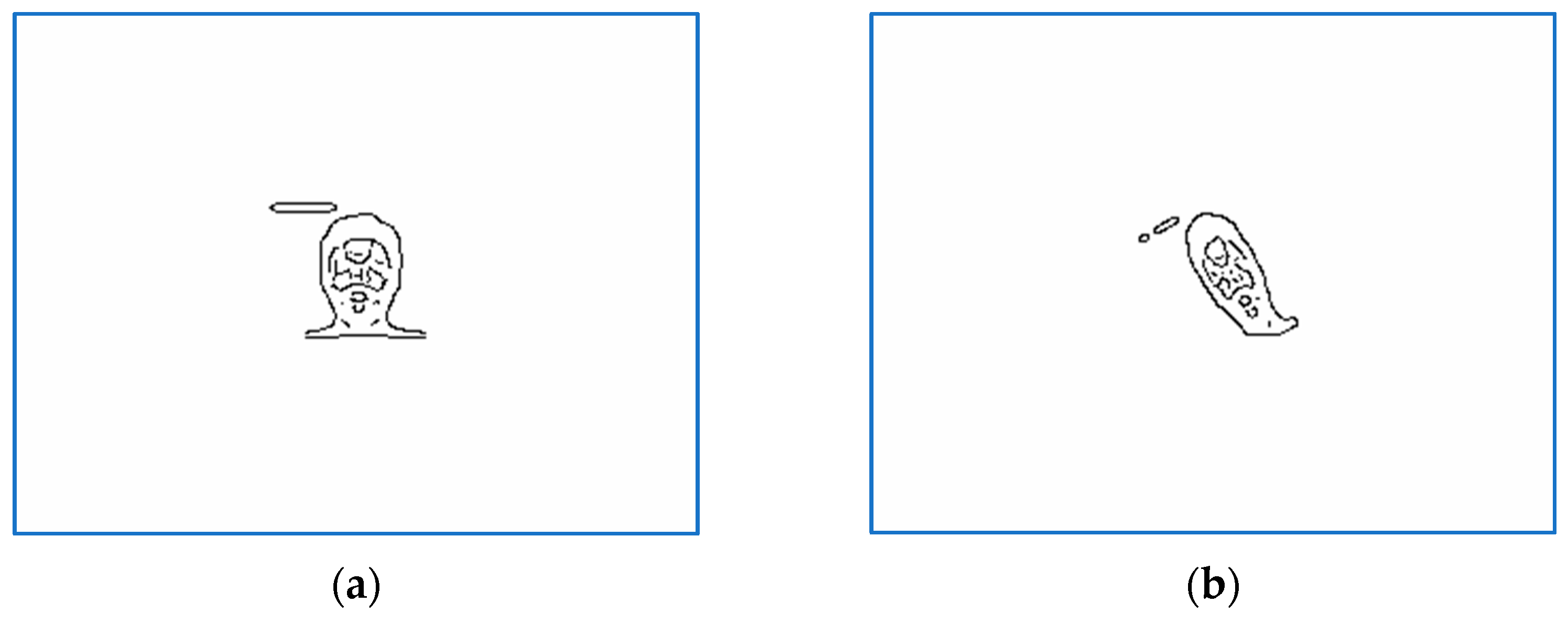

Figure 1, Figure 2, Figure 3, Figure 4, Figure 5, Figure 6 and Figure 7 show a selection of images for two persons, including target, perturbed, and aligned pictures, as well as the binarized and scaled versions used during computation.

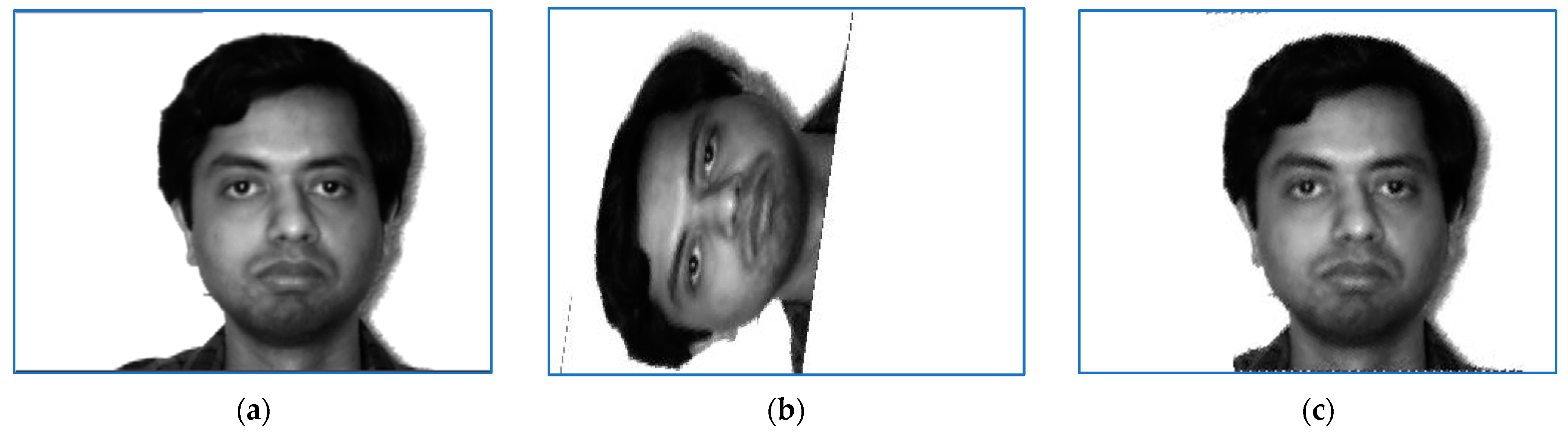

The perturbation model is given by (11). The sensed images shown in Figure 1 and Figure 2 are perturbed by two different parameter vectors.

The proposed alignment procedure computes an approximation of the perturbation parameter vector in the search space narrowed down by the stretching factor s1, while significantly larger scaling values s2 are used to generate the initial population and to prevent the search from becoming stuck in a local optimum. In our work, the scaling parameter s2 was between 11 and 15.

The results of applying Algorithm 2 are summarized below.

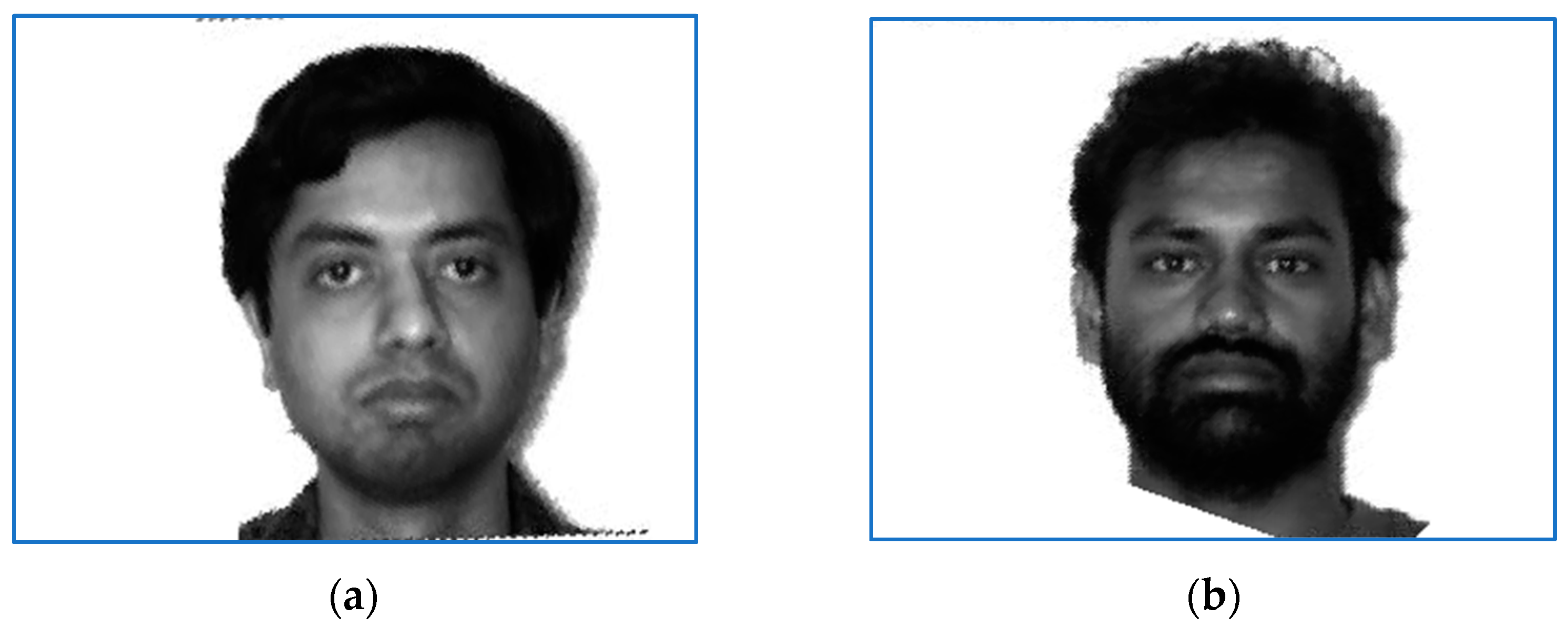

Figure 1 and Figure 2 show the recorded, sensed, and registered images for two test samples, subjects 10 and 7. The corresponding numerical results are presented in rows 10 and 7 of Table 1, Table 2, Table 3 and Table 4.

With the Algorithm 2 parameter setting used in our tests, each time the algorithm gets caught in a local optimum, new locally improved individuals and the result computed by Algorithm 1 at scale s2 replace individuals belonging to the current population. Note that the parameter values used in the 2MES and FA algorithms are in line with values widely used in various reported works [19,20,21], which constitute a de facto standard.

Figure 5 presents the alignment results of Algorithm 2.

The proposed algorithm achieved a perfect success rate, correctly aligning all the test image pairs. Note that Algorithm 1 also correctly aligns the considered images, but its runtimes are substantially larger than those of the proposed method. The numerical results reported below refer to the mean value and the standard deviation of the runtimes computed for Algorithms 2 and 1. The data in Table 1 show that Algorithm 2 is significantly faster than Algorithm 1.

The mean values and standard deviations computed for the accuracy measures are displayed in Table 2, Table 3 and Table 4. Note that the Tsallis mutual information was computed for a fixed order α. The maximum value of the functions defined by (31) is 1, but due to rounding and computation errors, slightly larger values may be obtained.

Additionally, in order to draw comprehensive conclusions regarding the performance of Algorithm 2, we tested it against two classical methods for monomodal image registration: regular step gradient descent optimization (RS-GD) based on the mean squares (MS) image similarity metric [30,31], and the Principal Axes Transform (PAT) [18]. RS-GD-based registration adjusts the geometric transformation parameters so that the considered metric evolves toward its optimum (for MS, a minimum). PAT is an image registration technique based on features automatically extracted from images, where the image features are defined by the corresponding set of principal axes.
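To illustrate the idea behind PAT, the sketch below estimates the translation, rotation, and per-axis scale between two binary images from the centroids and the eigen-decomposition of the second-order moments of their object pixels. This is a textbook-style simplification written for illustration, not the PAT implementation used in our comparison; in particular, a principal axis is only defined modulo π, so the rotation is wrapped to [−π/2, π/2).

```python
import numpy as np


def principal_axes(mask):
    """Centroid, eigenvalues (ascending), and major-axis angle of a binary image."""
    ys, xs = np.nonzero(mask)
    pts = np.column_stack([xs, ys]).astype(float)
    centroid = pts.mean(axis=0)
    cov = np.cov((pts - centroid).T)          # 2 x 2 second-order moments
    evals, evecs = np.linalg.eigh(cov)        # eigenvalues in ascending order
    major = evecs[:, 1]                       # direction of largest spread
    return centroid, evals, np.arctan2(major[1], major[0])


def pat_estimate(mask_t, mask_s):
    """Estimate (translation, rotation, scales) mapping mask_t onto mask_s."""
    c_t, ev_t, ang_t = principal_axes(mask_t)
    c_s, ev_s, ang_s = principal_axes(mask_s)
    rotation = (ang_s - ang_t + np.pi / 2) % np.pi - np.pi / 2
    scales = np.sqrt(ev_s[::-1] / ev_t[::-1])  # along major, then minor axis
    return c_s - c_t, rotation, scales


target = np.zeros((64, 64), dtype=bool)
target[20:26, 10:40] = True                   # a 6 x 30 bar
sensed = np.zeros_like(target)
sensed[23:29, 15:45] = True                   # same bar shifted by (5, 3)
shift, angle, scales = pat_estimate(target, sensed)
```

Because the estimate depends only on global moments of the object pixels, it is fast but sensitive to contour pixels that appear or disappear under strong perturbations, which is consistent with the misregistrations of PAT reported below.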

Figure 6 presents the results of applying the PAT method, and Figure 7 presents the results of applying the RS-GD algorithm.

The accuracy results of all tested methods are reported in Table 2, Table 3 and Table 4. Table 2 provides the mean and standard deviation of RSNR for Algorithm 2, together with the RSNR values recorded for PAT and RS-GD. In addition, Table 2 shows the success rates of Algorithm 2 and whether the classical methods managed to correctly align the tested pairs of images. Note that, in the case of severely perturbed sensed images, both classical methods may misregister the inputs. The resulting success rate of PAT is only 26.7% (8 of the 30 image pairs), while that of RS-GD is 53.3% (16 of 30). Algorithm 2 had a 100% success rate, correctly registering all tested images in all runs.

The mean and standard deviation values of the Shannon and Tsallis normalized mutual information measures computed for Algorithm 2 are displayed in Table 3 and Table 4, respectively. The tables also present the corresponding values for the PAT and RS-GD methods.

The numerical results indicate that Algorithm 2 produces more accurate results than PAT and RS-GD according to all the informational and quantitative indicators used. In addition, the new method is considerably faster than the method reported in [1].

6. Conclusions

The aim of the paper was to propose a new comprehensive multi-scale method that extends the approach reported in [1] to obtain accurate and efficient registration algorithms. The input images were pre-processed by a noise-insensitive edge detector to obtain binarized versions, i.e., the sets containing contour pixels. Isotropic scaling transformations were used to compute multi-scale representations of the binarized inputs. The registration was then carried out in different reduced representations to obtain promising initial solutions and to identify search directions leading to the global optimum. The process combined bio-inspired and evolutionary computation techniques with clustered search and implemented a procedure specially tailored to address the premature convergence issue.

A long series of tests involving monochrome images was conducted to draw meaningful conclusions regarding the registration capabilities of the proposed method. The experiments involved accuracy and efficiency measures, expressed in terms of SNR, Shannon and Tsallis normalized mutual information, and runtime. We compared Algorithm 2 against the basic method introduced in [1] and two of the most commonly used alignment procedures for monomodal images, namely regular step gradient descent optimization based on the MS image similarity metric and PAT registration. In terms of accuracy, Algorithm 2 is similar to Algorithm 1, with a success rate of 100%, which means that it always managed to correctly align the input images. In contrast, both RS-GD and PAT failed on severely perturbed sensed images, their success rates being far below 100%. In terms of efficiency, there were significant improvements over Algorithm 1, with the proposed method being at least two times faster.

The experimental results validate the proposed method and open the path for further developments and extensions to more complex transformations. Metaheuristics involving other promising bio-inspired techniques, such as the flower pollination algorithm, cuckoo search, and the bat algorithm, will also be considered for the population-based optimization component. In addition, an experimental study on the influence of parameter values on the performance of the proposed method is in progress.