Multilevel Features-Guided Network for Few-Shot Segmentation

Abstract

1. Introduction

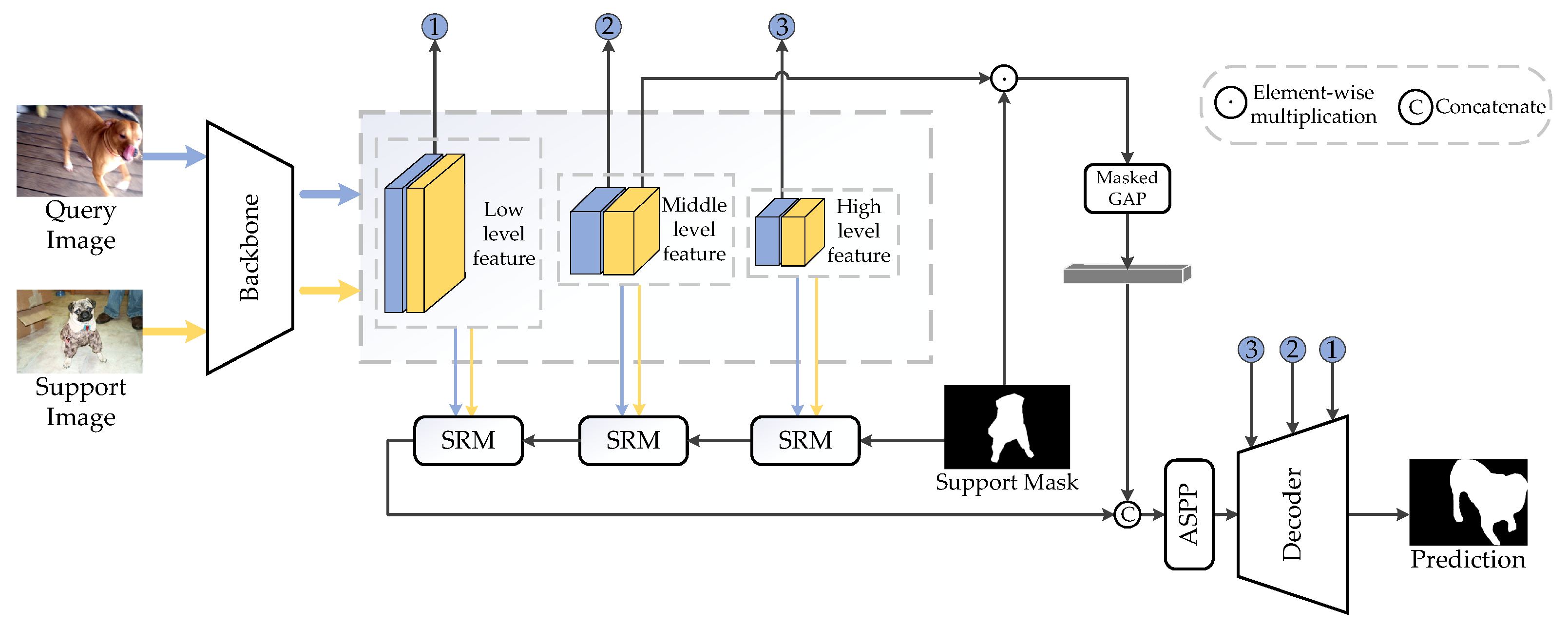

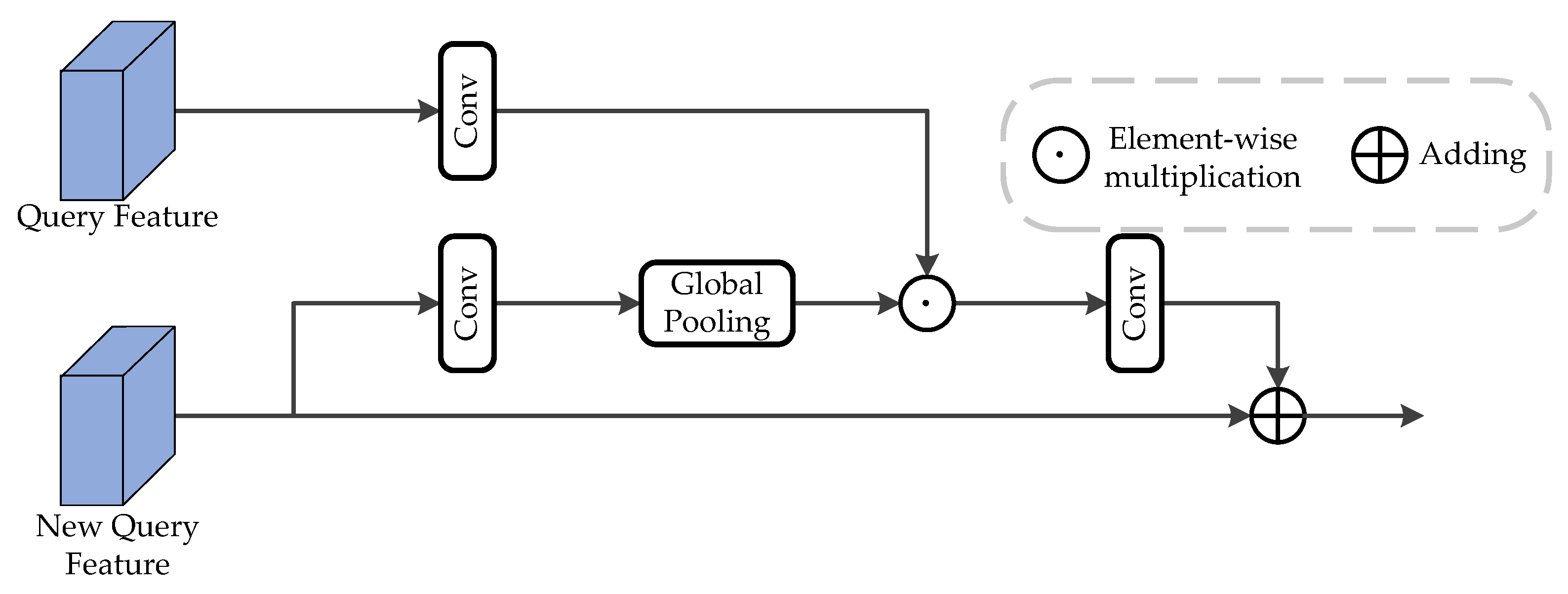

- We propose a similarity-guided feature reinforcement module (SRM) that enriches the query features and makes the guidance from support features to query features more coherent, so that more semantic information is available when predicting the segmentation of the query image.

- We connect the multilevel features of the encoder to the decoder, which gives the decoder more accurate guidance during upsampling and effectively alleviates the accuracy loss caused by the depthwise convolution process. Using these cross-connected features only in a limited way also avoids the negative impact of non-critical information on segmentation.

- Compared with previous work, our method improves performance on the PASCAL-$5^i$ dataset and also performs well on the COCO-$20^i$ dataset.
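The internals of the SRM are not given in this outline, so the following is only a minimal sketch of the general idea of similarity guidance in few-shot segmentation, assuming a common recipe: average the support features under the foreground mask into a prototype, then attach each query location's cosine similarity to that prototype as an extra channel. The function names (`cosine`, `masked_average`, `reinforce_query`) and the flat list-of-vectors layout are hypothetical and are not taken from the paper.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector, eps: float = 1e-8) -> float:
    """Cosine similarity between two feature vectors (eps avoids division by zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + eps)


def masked_average(features: Sequence[Vector], mask: Sequence[int]) -> List[float]:
    """Average the support vectors at foreground locations (mask == 1) into a prototype."""
    foreground = [f for f, m in zip(features, mask) if m == 1]
    if not foreground:
        raise ValueError("support mask has no foreground location")
    dim = len(foreground[0])
    return [sum(f[d] for f in foreground) / len(foreground) for d in range(dim)]


def reinforce_query(query: Sequence[Vector], prototype: Vector) -> List[List[float]]:
    """Append each query location's similarity to the prototype as one extra channel."""
    return [list(q) + [cosine(q, prototype)] for q in query]


# Toy example: three support locations (two foreground) and two query locations.
support = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
support_mask = [1, 0, 1]
query = [[2.0, 0.0], [0.0, 3.0]]

prototype = masked_average(support, support_mask)
reinforced = reinforce_query(query, prototype)
```

In this toy case the first query location points in the same direction as the prototype and receives a similarity close to 1, while the second is orthogonal and receives a similarity of 0. A real module would operate on backbone feature maps rather than hand-written vectors.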

2. Related Work

2.1. Semantic Segmentation

2.2. Few-Shot Learning

2.3. Few-Shot Semantic Segmentation

3. Material and Methods

3.1. Problem Definition

3.2. The Proposed Model

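| Algorithm 1: The main process of training the multilevel features-guided network |
|---|
| Input: support set, query set |
| 1: Initialize the network parameters (the backbone is initialized with pre-trained weights) |
| 2: for each iteration do |
| 3: Feed the support and query images to the backbone to get the support and query features. |
| 4: Feed the support and query features to the SRMs to get the reinforced features. |
| 5: Feed the resulting features to the ASPP to get the multi-scale features. |
| 6: Feed these features to the decoder to get the prediction. |
| 7: Compare the prediction with the ground-truth mask and update the network parameters except the backbone. |
| 8: end for |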
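The control flow of Algorithm 1 can be illustrated with a deliberately tiny sketch. It assumes every stage (backbone, SRM, ASPP, decoder) is a single scalar weight and every image is a single number, so the gradients can be written by hand. The stage wiring, the squared-error loss, and the `train` function are illustrative assumptions, not the paper's implementation. The one property it is meant to show is step 7: all stages are updated except the backbone.

```python
from typing import Dict, Iterable, Tuple

Episode = Tuple[float, float, float]  # (support, query, ground truth) as toy scalars


def train(weights: Dict[str, float], episodes: Iterable[Episode],
          lr: float = 0.05) -> Dict[str, float]:
    """Toy version of Algorithm 1 in which each stage is one scalar weight."""
    w = dict(weights)  # step 1: initial weights are supplied by the caller
    for support, query, target in episodes:      # step 2
        f_s = w["backbone"] * support            # step 3: backbone features
        f_q = w["backbone"] * query
        fused = f_s + f_q
        reinforced = w["srm"] * fused            # step 4: SRM stand-in
        context = w["aspp"] * reinforced         # step 5: ASPP stand-in
        pred = w["decoder"] * context            # step 6: decoder prediction
        err = pred - target                      # step 7: gradient of 0.5 * err**2 w.r.t. pred
        grads = {
            "decoder": err * context,
            "aspp": err * w["decoder"] * reinforced,
            "srm": err * w["decoder"] * w["aspp"] * fused,
        }
        # The backbone has no entry in grads, so it stays frozen.
        for name, grad in grads.items():
            w[name] -= lr * grad
    return w


start = {"backbone": 2.0, "srm": 0.5, "aspp": 0.5, "decoder": 0.5}
episodes = [(1.0, 1.0, 1.0)] * 200
end = train(start, episodes)
final_pred = end["decoder"] * end["aspp"] * end["srm"] * (end["backbone"] * 2.0)
```

After training, `end["backbone"]` is still the initial value while the other three weights have moved so that `final_pred` approaches the target. In a deep learning framework the same effect is usually obtained by excluding the backbone parameters from the optimizer.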

3.3. Similarity-Guided Feature Reinforcement Module (SRM)

3.4. Multilevel Features-Guided Decoder

3.5. Loss Function

4. Implementation Details

4.1. Datasets

4.2. Experimental Setting

4.3. Evaluation Metrics

5. Results

5.1. Comparison with Other Methods

5.2. Ablation Study

5.2.1. Number of SRMs

5.2.2. Number of Features Cross-Connect to the Decoder

5.2.3. Different Methods of Using Multilevel Features to Guide Segmentation

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2016), Las Vegas, NV, USA, 27–30 June 2016; IEEE Computer Society: Piscataway, NJ, USA, 2016; pp. 770–778.

2. Hu, J.; Shen, L.; Albanie, S.; Sun, G.; Wu, E. Squeeze-and-Excitation Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2011–2023.

3. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

4. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations (ICLR 2015), San Diego, CA, USA, 7–9 May 2015; Bengio, Y., LeCun, Y., Eds.; Conference Track Proceedings.

5. Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.E.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2015), Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Piscataway, NJ, USA, 2015; pp. 1–9.

6. Xu, Y.; Du, B.; Zhang, L. Robust Self-Ensembling Network for Hyperspectral Image Classification. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–14, in press.

7. Xu, Y.; Du, B.; Zhang, L. Self-Attention Context Network: Addressing the Threat of Adversarial Attacks for Hyperspectral Image Classification. IEEE Trans. Image Process. 2021, 30, 8671–8685.

8. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

9. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Navab, N., Hornegger, J., Wells, W.M., III, Frangi, A.F., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2015; Volume 9351, pp. 234–241.

10. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2015), Boston, MA, USA, 7–12 June 2015; IEEE Computer Society: Piscataway, NJ, USA, 2015; pp. 3431–3440.

11. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Piscataway, NJ, USA, 2017; pp. 6230–6239.

12. Everingham, M.; Gool, L.V.; Williams, C.K.I.; Winn, J.M.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

13. Lin, T.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Computer Vision—ECCV 2014; Fleet, D.J., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2014; Volume 8693, pp. 740–755.

14. Shaban, A.; Bansal, S.; Liu, Z.; Essa, I.; Boots, B. One-Shot Learning for Semantic Segmentation. In Proceedings of the British Machine Vision Conference 2017, London, UK, 4–7 September 2017.

15. Satorras, V.G.; Estrach, J.B. Few-Shot Learning with Graph Neural Networks. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. Conference Track Proceedings.

16. Graves, A.; Wayne, G.; Danihelka, I. Neural Turing Machines. arXiv 2014, arXiv:1410.5401.

17. Wang, P.; Liu, L.; Shen, C.; Huang, Z.; van den Hengel, A.; Shen, H.T. Multi-attention Network for One Shot Learning. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Piscataway, NJ, USA, 2017; pp. 6212–6220.

18. Rakelly, K.; Shelhamer, E.; Darrell, T.; Efros, A.A.; Levine, S. Conditional Networks for Few-Shot Semantic Segmentation. In Proceedings of the 6th International Conference on Learning Representations, Vancouver, BC, Canada, 30 April–3 May 2018. Workshop Track Proceedings.

19. Bhunia, A.K.; Bhunia, A.K.; Ghose, S.; Das, A.; Roy, P.P.; Pal, U. A deep one-shot network for query-based logo retrieval. Pattern Recognit. 2019, 96, 106965.

20. Park, Y.; Seo, J.; Moon, J. CAFENet: Class-Agnostic Few-Shot Edge Detection Network. In Proceedings of the 32nd British Machine Vision Conference 2021, Online, 22–25 November 2021; p. 275.

21. Yang, Y.; Meng, F.; Li, H.; Ngan, K.N.; Wu, Q. A New Few-shot Segmentation Network Based on Class Representation. In Proceedings of the 2019 IEEE Visual Communications and Image Processing (VCIP 2019), Sydney, Australia, 1–4 December 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–4.

22. Zhu, K.; Zhai, W.; Cao, Y. Self-Supervised Tuning for Few-Shot Segmentation. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Yokohama, Japan, 11–17 July 2020; Bessiere, C., Ed.; pp. 1019–1025.

23. Wu, Z.; Shi, X.; Lin, G.; Cai, J. Learning Meta-class Memory for Few-Shot Semantic Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 497–506.

24. Liu, W.; Wu, Z.; Ding, H.; Liu, F.; Lin, J.; Lin, G. Few-Shot Segmentation with Global and Local Contrastive Learning. arXiv 2021, arXiv:2108.05293.

25. Zhang, B.; Xiao, J.; Qin, T. Self-Guided and Cross-Guided Learning for Few-Shot Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2021), Virtual, 19–25 June 2021; pp. 8312–8321.

26. Lu, Z.; He, S.; Zhu, X.; Zhang, L.; Song, Y.; Xiang, T. Simpler is Better: Few-shot Semantic Segmentation with Classifier Weight Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV 2021), Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8721–8730.

27. Yang, L.; Zhuo, W.; Qi, L.; Shi, Y.; Gao, Y. Mining Latent Classes for Few-shot Segmentation. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV 2021), Montreal, QC, Canada, 10–17 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 8701–8710.

28. Min, J.; Kang, D.; Cho, M. Hypercorrelation Squeeze for Few-Shot Segmentation. arXiv 2021, arXiv:2104.01538.

29. Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

30. Chen, L.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2018; Volume 11211, pp. 833–851.

31. Li, H.; Xiong, P.; Fan, H.; Sun, J. DFANet: Deep Feature Aggregation for Real-Time Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2019), Long Beach, CA, USA, 16–20 June 2019; pp. 9522–9531.

32. Xu, Y.; Ghamisi, P. Consistency-Regularized Region-Growing Network for Semantic Segmentation of Urban Scenes With Point-Level Annotations. IEEE Trans. Image Process. 2022, 31, 5038–5051.

33. Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. arXiv 2016, arXiv:1606.02147.

34. Snell, J.; Swersky, K.; Zemel, R.S. Prototypical Networks for Few-shot Learning. In Proceedings of the Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., von Luxburg, U., Bengio, S., Wallach, H.M., Fergus, R., Vishwanathan, S.V.N., Garnett, R., Eds.; pp. 4077–4087.

35. Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.S.; Hospedales, T.M. Learning to Compare: Relation Network for Few-Shot Learning. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1199–1208.

36. Li, W.; Wang, L.; Xu, J.; Huo, J.; Gao, Y.; Luo, J. Revisiting Local Descriptor Based Image-To-Class Measure for Few-Shot Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7260–7268.

37. Santoro, A.; Bartunov, S.; Botvinick, M.M.; Wierstra, D.; Lillicrap, T.P. Meta-Learning with Memory-Augmented Neural Networks. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Volume 48, pp. 1842–1850.

38. Thrun, S.; Pratt, L.Y. Learning to Learn: Introduction and Overview. In Learning to Learn; Thrun, S., Pratt, L.Y., Eds.; Springer: Cham, Switzerland, 1998; pp. 3–17.

39. Finn, C.; Abbeel, P.; Levine, S. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Precup, D., Teh, Y.W., Eds.; Volume 70, pp. 1126–1135.

40. Wang, X.; Yu, F.; Wang, R.; Darrell, T.; Gonzalez, J.E. TAFE-Net: Task-Aware Feature Embeddings for Low Shot Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 1831–1840.

41. Yu, M.; Guo, X.; Yi, J.; Chang, S.; Potdar, S.; Cheng, Y.; Tesauro, G.; Wang, H.; Zhou, B. Diverse Few-Shot Text Classification with Multiple Metrics. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, New Orleans, LA, USA, 1–6 June 2018; Volume 1 (Long Papers), pp. 1206–1215.

42. Gidaris, S.; Komodakis, N. Dynamic Few-Shot Visual Learning Without Forgetting. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4367–4375.

43. Siam, M.; Oreshkin, B.N.; Jägersand, M. AMP: Adaptive Masked Proxies for Few-Shot Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 5248–5257.

44. Wang, K.; Liew, J.H.; Zou, Y.; Zhou, D.; Feng, J. PANet: Few-Shot Image Semantic Segmentation With Prototype Alignment. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9196–9205.

45. Zhang, C.; Lin, G.; Liu, F.; Guo, J.; Wu, Q.; Yao, R. Pyramid Graph Networks With Connection Attentions for Region-Based One-Shot Semantic Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9586–9594.

46. Yang, B.; Liu, C.; Li, B.; Jiao, J.; Ye, Q. Prototype Mixture Models for Few-Shot Semantic Segmentation. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12353, pp. 763–778.

47. Zhang, X.; Wei, Y.; Yang, Y.; Huang, T.S. SG-One: Similarity Guidance Network for One-Shot Semantic Segmentation. IEEE Trans. Cybern. 2020, 50, 3855–3865.

48. Zhang, C.; Lin, G.; Liu, F.; Yao, R.; Shen, C. CANet: Class-Agnostic Segmentation Networks With Iterative Refinement and Attentive Few-Shot Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5217–5226.

49. Nguyen, K.; Todorovic, S. Feature Weighting and Boosting for Few-Shot Segmentation. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 622–631.

50. Wang, H.; Zhang, X.; Hu, Y.; Yang, Y.; Cao, X.; Zhen, X. Few-Shot Semantic Segmentation with Democratic Attention Networks. In Computer Vision—ECCV 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J., Eds.; Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2020; Volume 12358, pp. 730–746.

51. Yang, X.; Wang, B.; Zhou, X.; Chen, K.; Yi, S.; Ouyang, W.; Zhou, L. BriNet: Towards Bridging the Intra-class and Inter-class Gaps in One-Shot Segmentation. In Proceedings of the 31st British Machine Vision Conference 2020, Virtual Event, UK, 7–10 September 2020.

52. Xie, G.; Liu, J.; Xiong, H.; Shao, L. Scale-Aware Graph Neural Network for Few-Shot Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 5475–5484.

53. Tian, Z.; Zhao, H.; Shu, M.; Yang, Z.; Li, R.; Jia, J. Prior Guided Feature Enrichment Network for Few-Shot Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 44, 1050–1065.

54. Ao, W.; Zheng, S.; Meng, Y. Few-shot semantic segmentation via mask aggregation. arXiv 2022, arXiv:2202.07231.

55. Lang, C.; Tu, B.; Cheng, G.; Han, J. Beyond the Prototype: Divide-and-conquer Proxies for Few-shot Segmentation. In Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 23–29 July 2022; pp. 1024–1030.

56. Hariharan, B.; Arbelaez, P.; Bourdev, L.D.; Maji, S.; Malik, J. Semantic contours from inverse detectors. In Proceedings of the IEEE International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 991–998.

57. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.S.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

58. Xie, G.; Xiong, H.; Liu, J.; Yao, Y.; Shao, L. Few-Shot Semantic Segmentation with Cyclic Memory Network. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 7273–7282.

| Backbone | Method | 1-Shot Fold-0 | 1-Shot Fold-1 | 1-Shot Fold-2 | 1-Shot Fold-3 | 1-Shot Mean | 1-Shot FB-IoU | 5-Shot Fold-0 | 5-Shot Fold-1 | 5-Shot Fold-2 | 5-Shot Fold-3 | 5-Shot Mean | 5-Shot FB-IoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | CANet [48] | 52.5 | 65.9 | 51.3 | 51.9 | 55.4 | 66.2 | 55.5 | 67.8 | 51.9 | 53.2 | 57.1 | 69.6 |
| ResNet50 | BriNet [51] | 56.5 | 67.2 | 51.6 | 53.0 | 57.1 | - | - | - | - | - | - | - |
| ResNet50 | PFENet [53] | 61.7 | 69.5 | 55.4 | 56.3 | 60.8 | 73.3 | 63.1 | 70.7 | 55.8 | 57.9 | 61.9 | 73.9 |
| ResNet50 | CMN [58] | 64.3 | 70.0 | 57.4 | 59.4 | 62.8 | 72.3 | 65.8 | 70.4 | 57.6 | 60.8 | 63.7 | 72.8 |
| ResNet50 | HSNet [28] | 64.3 | 70.7 | 60.3 | 60.5 | 64.0 | 76.7 | 70.3 | 73.2 | 67.4 | 67.1 | 69.5 | 80.6 |
| ResNet50 | MANet [54] | 62.0 | 69.4 | 51.8 | 58.2 | 60.3 | 71.4 | 66.0 | 71.6 | 55.1 | 64.5 | 64.3 | 75.2 |
| ResNet50 | DCP [55] | 63.8 | 70.5 | 61.2 | 55.7 | 62.8 | 75.6 | 67.2 | 73.2 | 66.4 | 64.5 | 67.8 | 79.7 |
| ResNet50 | Ours | 64.4 | 70.8 | 63.4 | 60.3 | 64.7 | 76.4 | 67.3 | 73.7 | 66.2 | 64.9 | 68.0 | 79.3 |
| ResNet101 | FWB [49] | 51.3 | 64.5 | 56.7 | 52.2 | 56.2 | - | 54.8 | 67.4 | 62.2 | 55.3 | 59.9 | - |
| ResNet101 | PFENet [53] | 60.5 | 69.4 | 54.4 | 55.9 | 60.1 | 72.9 | 62.8 | 70.4 | 54.9 | 57.6 | 61.4 | 73.5 |
| ResNet101 | DAN [50] | 54.7 | 68.6 | 57.8 | 51.6 | 58.2 | 71.9 | 57.9 | 69.0 | 60.1 | 54.9 | 60.5 | 72.3 |
| ResNet101 | HSNet [28] | 67.3 | 72.3 | 62.0 | 63.1 | 66.2 | 77.6 | 71.8 | 74.4 | 67.0 | 68.3 | 70.4 | 80.6 |
| ResNet101 | MANet [54] | 63.9 | 69.2 | 52.5 | 59.1 | 61.2 | 71.4 | 67.0 | 70.8 | 54.8 | 65.5 | 64.5 | 74.1 |
| ResNet101 | Ours | 66.1 | 72.8 | 64.9 | 62.0 | 66.5 | 76.8 | 67.6 | 74.5 | 67.2 | 65.4 | 68.7 | 79.6 |
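The Mean column in the table above is consistent with a plain arithmetic average of the four fold scores. As a small worked check, the snippet below recomputes it for the ResNet50 "Ours" row; the helper name `mean_over_folds` is hypothetical, and the tolerance of 0.05 reflects the one-decimal rounding used in the table.

```python
from typing import Sequence


def mean_over_folds(fold_scores: Sequence[float]) -> float:
    """Arithmetic mean of the per-fold IoU scores."""
    return sum(fold_scores) / len(fold_scores)


# Fold-0..Fold-3 scores copied from the ResNet50 "Ours" row.
ours_1shot = [64.4, 70.8, 63.4, 60.3]
ours_5shot = [67.3, 73.7, 66.2, 64.9]

mean_1shot = mean_over_folds(ours_1shot)  # reported in the table as 64.7
mean_5shot = mean_over_folds(ours_5shot)  # reported in the table as 68.0

# Each recomputed mean agrees with the reported value up to rounding.
matches = [abs(mean_1shot - 64.7) < 0.05, abs(mean_5shot - 68.0) < 0.05]
```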

| Backbone | Method | 1-Shot Fold-0 | 1-Shot Fold-1 | 1-Shot Fold-2 | 1-Shot Fold-3 | 1-Shot Mean | 1-Shot FB-IoU | 5-Shot Fold-0 | 5-Shot Fold-1 | 5-Shot Fold-2 | 5-Shot Fold-3 | 5-Shot Mean | 5-Shot FB-IoU |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ResNet50 | BriNet [51] | 32.9 | 36.2 | 37.4 | 30.9 | 34.4 | - | - | - | - | - | - | - |
| ResNet50 | CMN [58] | 37.9 | 44.8 | 38.7 | 35.6 | 39.3 | 61.7 | 42.0 | 50.5 | 41.0 | 38.9 | 43.1 | 63.3 |
| ResNet50 | HSNet [28] | 36.3 | 43.1 | 38.7 | 38.7 | 39.2 | 68.2 | 43.3 | 51.3 | 48.2 | 45.0 | 46.9 | 70.7 |
| ResNet50 | MANet [54] | 33.9 | 40.6 | 35.7 | 35.2 | 36.4 | - | 41.9 | 49.1 | 43.2 | 42.7 | 44.2 | - |
| ResNet50 | DCP [55] | 40.9 | 43.8 | 42.6 | 38.3 | 41.4 | - | 45.9 | 49.7 | 43.7 | 46.7 | 46.5 | - |
| ResNet50 | Ours | 40.8 | 45.5 | 41.1 | 39.1 | 41.6 | 65.2 | 46.1 | 52.3 | 46.2 | 44.3 | 47.2 | 69.1 |
| ResNet101 | FWB [49] | 17.0 | 18.0 | 21.0 | 28.9 | 21.2 | - | 19.1 | 21.5 | 23.9 | 30.1 | 23.7 | - |
| ResNet101 | DAN [50] | - | - | - | - | 24.4 | 62.3 | - | - | - | - | 29.6 | 63.9 |
| ResNet101 | PFENet [53] | 36.8 | 41.8 | 38.7 | 36.7 | 38.5 | 63.0 | 40.4 | 46.8 | 43.2 | 40.5 | 42.7 | 65.8 |
| ResNet101 | HSNet [28] | 37.2 | 44.1 | 42.4 | 41.3 | 41.2 | 69.1 | 45.9 | 53.0 | 51.8 | 47.1 | 49.5 | 72.4 |
| ResNet101 | Ours | 41.0 | 45.6 | 40.6 | 39.6 | 41.7 | 65.6 | 46.5 | 53.1 | 45.6 | 43.2 | 47.1 | 69.1 |
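The comparison tables report IoU-based scores, including an FB-IoU column. As a minimal sketch, assuming the usual definitions (IoU as intersection over union for one class, FB-IoU as the average of the foreground and background IoU), both quantities can be computed on flattened binary masks as follows. The helper names are hypothetical, and computing the score per image is a simplification: evaluation code commonly accumulates intersections and unions over many test episodes before dividing.

```python
from typing import Sequence


def iou(pred: Sequence[int], target: Sequence[int], cls: int) -> float:
    """Intersection over union of class `cls` between two flattened label masks."""
    inter = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
    union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
    if union == 0:
        raise ValueError(f"class {cls} is absent from both masks")
    return inter / union


def fb_iou(pred: Sequence[int], target: Sequence[int]) -> float:
    """Average of the foreground (1) and background (0) IoU."""
    return (iou(pred, target, 1) + iou(pred, target, 0)) / 2


# Toy 4-pixel masks: 1 = foreground, 0 = background.
pred = [1, 1, 0, 0]
target = [1, 0, 0, 0]

fg = iou(pred, target, 1)      # 1 shared foreground pixel, 2 pixels in the union
bg = iou(pred, target, 0)      # 2 shared background pixels, 3 pixels in the union
score = fb_iou(pred, target)
```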

| SRM: Low-Level | SRM: Mid-Level | SRM: High-Level | Mean-IoU |
|---|---|---|---|
| - | - | - | 57.5 |
| - | ✓ | ✓ | 63.1 |
| ✓ | - | ✓ | 64.4 |
| ✓ | ✓ | - | 58.5 |
| ✓ | - | - | 58.2 |
| - | - | ✓ | 63.6 |
| ✓ | ✓ | ✓ | 65.7 |

| Query Features: Low-Level | Query Features: Mid-Level | Query Features: High-Level | Mean-IoU |
|---|---|---|---|
| - | - | - | 64.2 |
| - | ✓ | ✓ | 63.9 |
| ✓ | ✓ | - | 64.4 |
| - | - | ✓ | 62.8 |
| ✓ | - | - | 64.9 |
| ✓ | ✓ | ✓ | 65.7 |

| Feature-Guided Method | Mean-IoU (Three-Level) | Mean-IoU (Low-Level) |
|---|---|---|
| - | 64.2 | 64.2 |
| Concatenate by channel | 61.1 | 62.5 |
| Element-wise product | 63.6 | 63.3 |
| Matmul product | 64.4 | 63.1 |
| Limited use of features | 65.7 | 64.9 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xin, C.; Li, X.; Yuan, Y. Multilevel Features-Guided Network for Few-Shot Segmentation. Electronics 2022, 11, 3195. https://doi.org/10.3390/electronics11193195
