1. Introduction

Realistic media technology, which lets users experience or appreciate specific scenes in the media through user interaction, is being developed. A variety of realistic media content is being produced based on hand motions to combine the virtual space with the real space [1,2,3,4]. Users can replace and create plots, and experience reactions to their interactions while watching movies. Interactive media production platforms provide tools to create active interactions between media and users based on a variety of multimedia content (video/photos/text) and enable the editing of story branches. In addition, they provide video segment editing, an intuitive interface that allows the creator to easily create images, and embed codes and APIs for inserting web-based interactive video [5,6,7,8,9].

However, until now, there has been no web-based authoring platform that can create interactions between the media and the users based on hand motions, especially complex hand motions. The hand can be an effective tool in an authoring platform for creating various interactions between the media and its users. Hand motion is recognized via a variety of sensors, from wearable to non-contact ones [10,11,12].

In this paper, we propose a web-based authoring platform that can create scene-specific interactions with complex hand motions. For example, the proposed method makes it easy to create an interaction that provides a life-like driving experience when watching a driving scene. This kind of interaction was formerly not easy to create with programmable authoring platforms. In other words, we propose an interactive complex hand interaction authoring tool that can compose diverse two-hand interactions from several one-hand interactive components. Many other scene-specific actions can also be created easily with our complex hand motion editor.

We summarize the main contributions of the proposed method as follows:

- To our knowledge, the proposed system is the first to author complex two-hand motions from single hand motions. Even though some works [12,13,14] propose authoring tools for human interaction, these systems do not compose complex interactions from single ones;

- The authoring tool is web-based and can also control a touchless sensor, so that there is no need for any wearable device. There are only a few web-based authoring tools, and they do not use motion control devices [5,6,7,8,9];

- The proposed platform can create very complex hand motions using motion sensors that can only recognize rather simple hand motions;

- The authoring tool allows content creators to create interactive media very easily without programming skills;

- This tool can contribute to creating a new interactive platform, similar to YouTube, that is specialized for interactive content.

The user hand interaction proposed in this paper can be applied to various applications, in particular to applications in the field of NUI (Natural User Interface), which is being actively studied. The paper is organized as follows: in Section 2, we introduce the configuration of the complex hand interaction (ComplexHI) authoring tool. In particular, we introduce the configuration of the interaction metadata for editing, creating, and playing complex hand interactions, and the Interactive Media Player (IMP) for operating complex hand interactions. In Section 3, we describe the types and recognition algorithms of single hand interactions and the generation of complex two-hand interactions based on the single hand ones. Finally, in Section 4, we show the environment in which the complex hand interaction operates and the results of the user tests, and mention future research challenges for the expansion of the complex hand interaction.

2. Complex Hand Interaction Authoring Tool

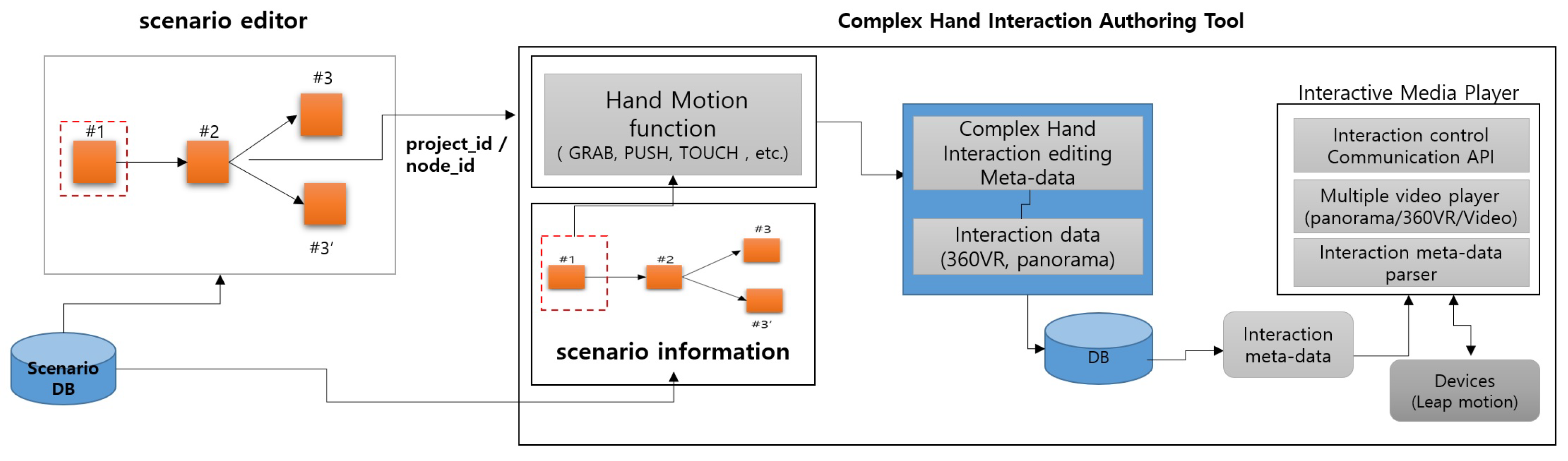

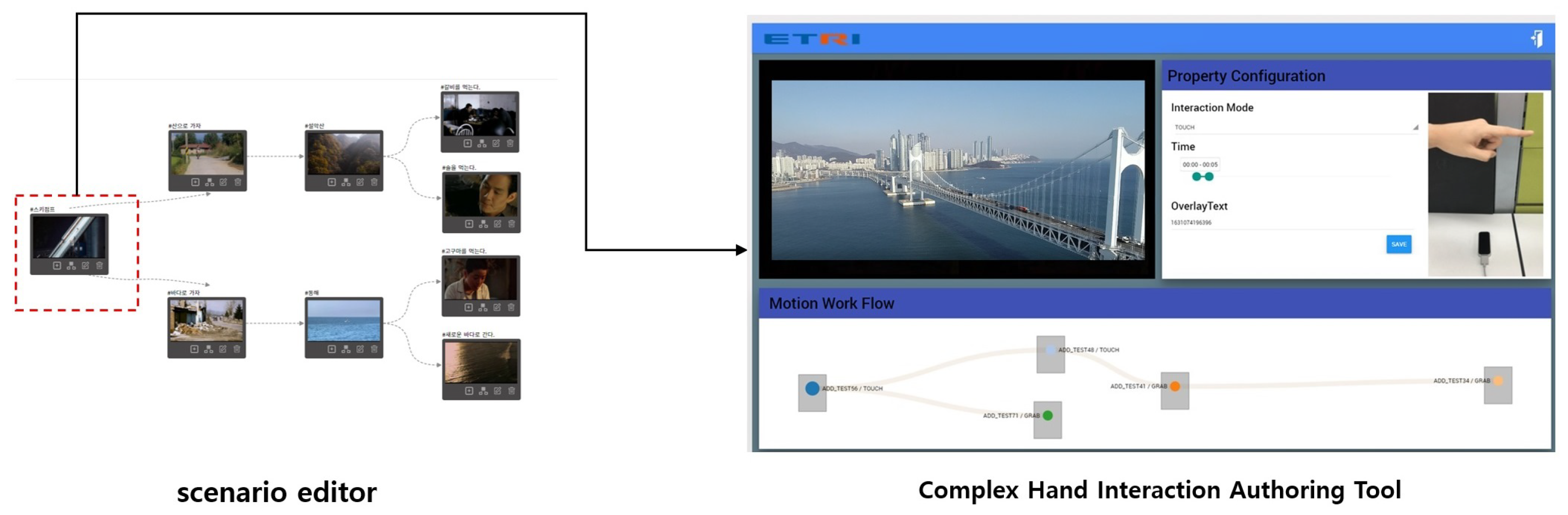

Figure 1 shows the overall function diagram of the proposed complex hand interaction (ComplexHI) authoring tool. The ComplexHI authoring tool provides functions for creating complex motions by combining several single motions. For instance, let us assume that we want to design a driving motion and a stopping motion. A driving motion can be seen as a two-hand motion composed of two single hand motions in which both hands are clenched (grabbing) and move in opposite directions to each other. Meanwhile, we can design the stopping motion as an opening of the grabbing hands.

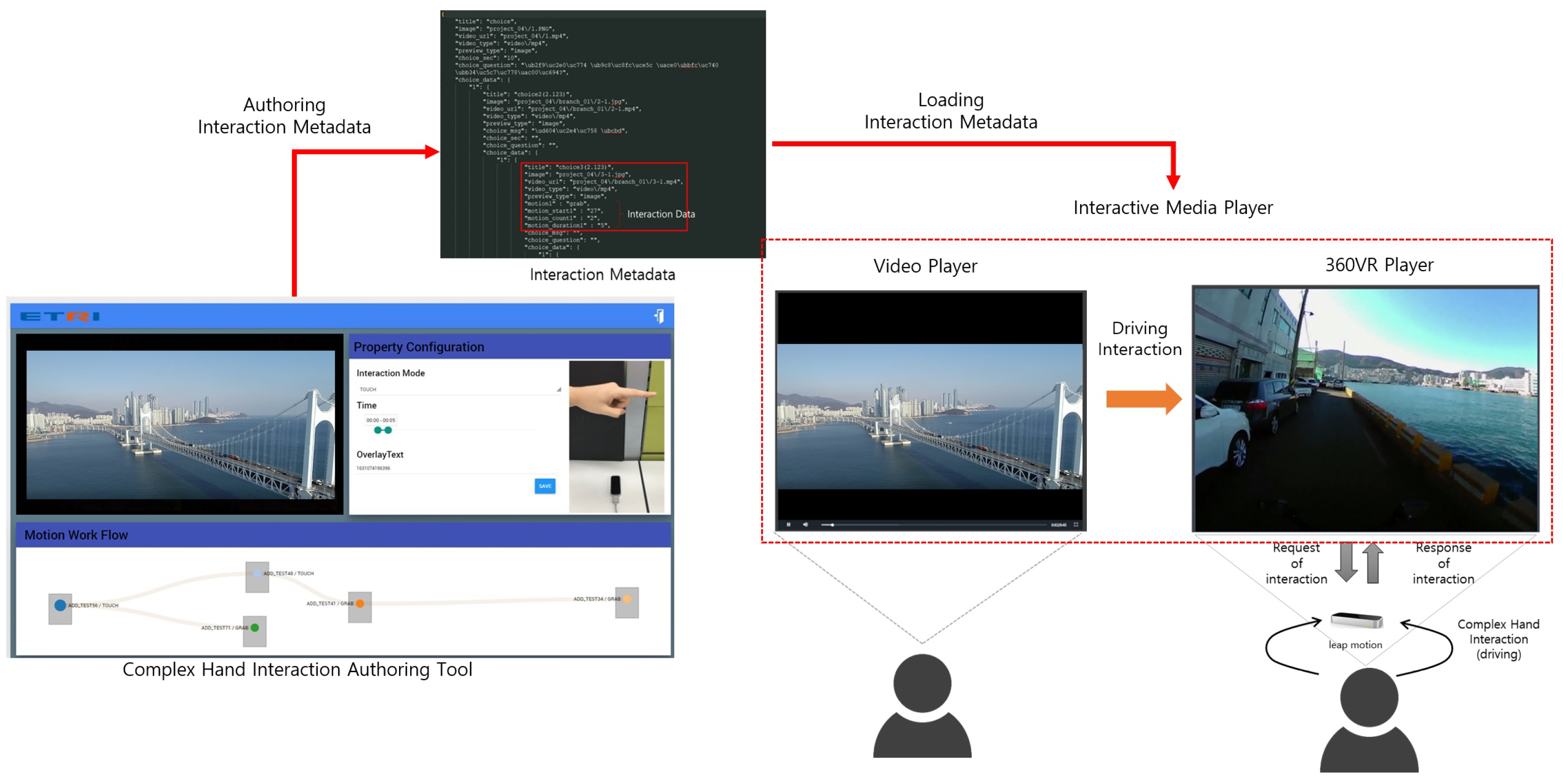

Figure 2 explains how the ComplexHI authoring tool is linked to the scenario editor and how the complex motion is generated in conjunction with the scenario editor. A scene in the scenario editor is represented by a node. When the creator clicks on a specific scene node, the ComplexHI authoring tool starts and a screen appears where the creator can author an interaction. The creator configures the user's device and edits the story by editing the complex hand interactions. The edited interaction information is stored in the form of metadata, which are parsed when the IMP plays the media.

As shown in

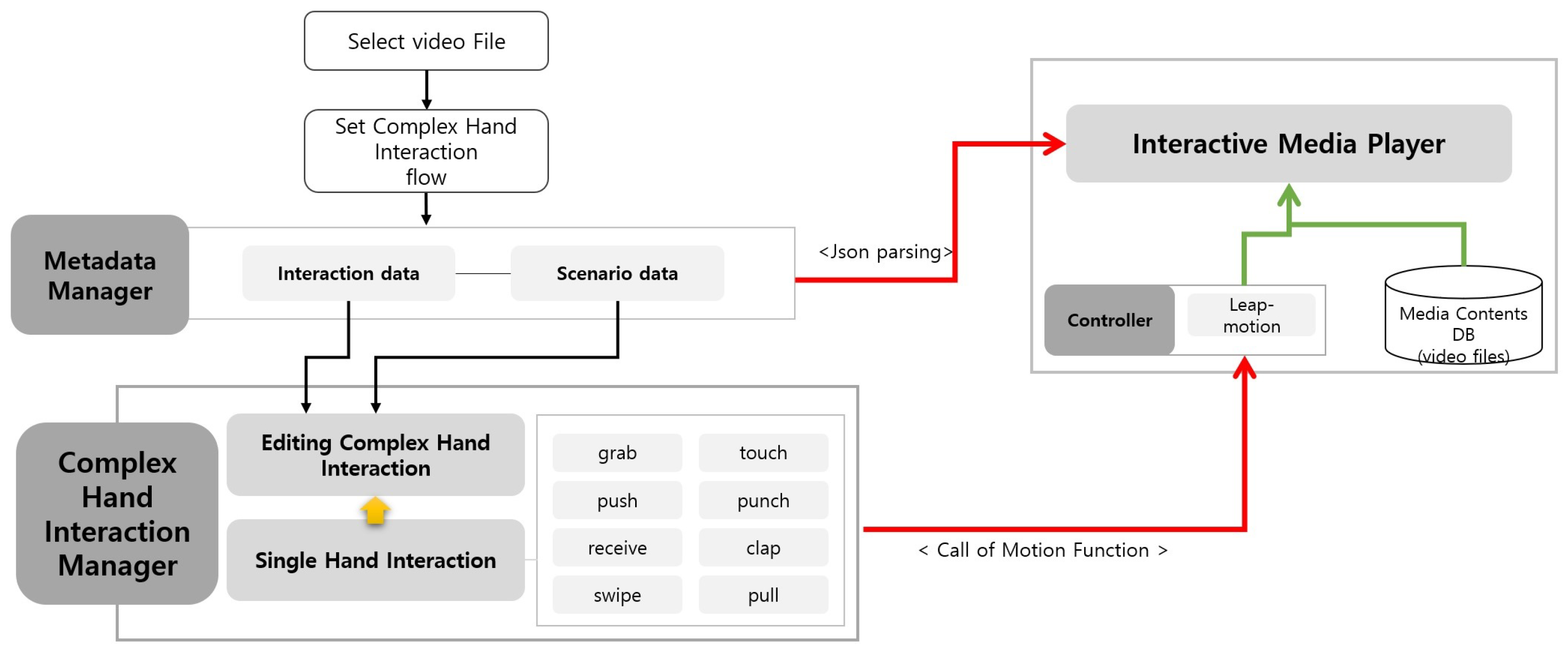

Figure 3, the ComplexHI consists of a metadata manager for generating and managing metadata for complex motion behavior, a complex motion manager for user hand motion recognition, and an interactive media player for recognizing and viewing user interactions. After the creator executes on the composite motion editor, the interaction metadata and the scenario data are generated for the creation of the composite motion. This is automatically generated when the user sets the motion flow in the GUI. The generated metadata, in turn, automatically parse the metadata when the user watches the media and goes through a motion recognition process in conjunction with the complex motion manager when the corresponding motion interaction occurs. The Interactive Media Player (complex motion player) is equipped with a parser that can parse the metadata produced by the composite motion editor, so that it interprets the composite motion metadata and recognizes them in conjunction with the motion function when a specific user interaction occurs according to the metadata information.

Figure 4 shows the operation flow chart of the complex hand interaction authoring tool and the GUI. The video file to which the complex hand interactions are to be applied is loaded, and a list of single hand interactions is placed in the motion flow editor for producing complex hand interactions. A single hand interaction is expressed as a single node, and a complex motion can be created by connecting multiple nodes. After arranging a single motion, one can set the properties of the motion and preview each motion. In addition, a preview function makes it possible to confirm that the motions composed of multiple nodes are actually applied to the video. Furthermore, the Motion Flow Editor lets one freely edit and create complex motions on a node-by-node basis.

The ComplexHI is operated in the following sequence:

(1) The image to which the complex hand interaction is applied is loaded from the scenario editor;

(2) Move to the Motion Workflow area to create the complex hand interaction;

(3) Create a new one- or two-hand interaction in the Motion Workflow area. Each interaction is represented by a single node in the Motion Workflow area;

(4) Set the properties of the hand interaction. That is, set the properties of the interaction mode, the occurrence time, the delay time, and the overlay text;

(5) Repeat step 4 for other hand interactions. Every new hand interaction will appear as a new node in the Motion Workflow area;

(6) After the complex hand interaction has been generated, save the Motion Workflow and check the hand interaction information;

(7) Right-click on the Motion Workflow to check the operation of the complex hand interaction as a video with the preview function;

(8) Finally, using the preview, the complex hand interaction operation is checked with the video file;

(9) Hereafter, the generated interaction can be further modified in the Motion Workflow area at any time.
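The sequence above amounts to building an ordered chain of single hand nodes, each carrying its own properties. The following is a minimal sketch of such a structure, not the tool's actual implementation: the class names `MotionNode` and `MotionWorkflow`, the field names, and the overlay text are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MotionNode:
    """One single hand interaction node (hypothetical structure)."""
    motion: str                 # e.g. "GRAB" or "PUSH"
    mode: str = "single"        # "single" (one hand) or "multiple" (two hands)
    start_time: float = 0.0     # occurrence time in seconds
    delay_time: float = 0.0     # waiting time in seconds
    overlay_text: str = ""


@dataclass
class MotionWorkflow:
    """An ordered chain of nodes that together form one complex interaction."""
    name: str
    nodes: List[MotionNode] = field(default_factory=list)

    def add(self, node: MotionNode) -> "MotionWorkflow":
        # Each new hand interaction becomes a new node at the end of the chain.
        self.nodes.append(node)
        return self

    def motions(self) -> List[str]:
        return [node.motion for node in self.nodes]


# Steps 3 to 5: create nodes and set their properties (values are illustrative).
drive = (
    MotionWorkflow("drive")
    .add(MotionNode("GRAB", mode="multiple", delay_time=5, overlay_text="Grab the wheel"))
    .add(MotionNode("PUSH", mode="multiple", delay_time=5))
)
print(drive.motions())
```

Keeping the nodes in a plain list mirrors the node-by-node editing described for the Motion Workflow area: a node can be inserted, removed, or modified without touching the others.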

2.1. Interaction Metadata

To specify settings for interactive functions in general media files without any special equipment or encoding, separate metadata are required. In this paper, for compatibility with the interactive media authoring tool provided as a web service, we propose to generate the metadata in the form of JavaScript Object Notation (JSON), a data format derived from JavaScript object syntax. Using the user interaction metadata, the user can add any kind of new interactive function. The user interaction metadata include setting information such as the type of device to which the user interaction is applied, interaction mode, interaction overlay text, interaction start time, interaction waiting time, image resource information, single hand interaction mode setting, complex hand interaction mode setting, etc. Metadata are stored in the form defined in Table 1, and users can experience interactive media according to the user motion, motion recognition, and waiting time information recorded in these data.

For example, the metadata for a driving interaction become: {"mode":"hand", "device":"leap", "video_path":"test.mp4", "start_time":"0.00", "delay_time":5, "player_mode":"360VR", "data":"360VR.mp4", "single hand interaction mode":["GRAB","PUSH"], "complex hand interaction mode":"drive"}. The value of the "mode" field is set to "hand" because the interactive motion is a hand motion. Likewise, "player_mode" is set to "360VR", which means that a 360 VR video will be played during the driving interaction. The single hand interactions are "GRAB" and "PUSH", which are composed into the complex hand interaction mode "drive". The value of "data" is the path to the 360 VR video that will be played during the driving interaction.
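To make the structure of this example concrete, the sketch below loads the driving metadata with Python's standard `json` module and performs a light sanity check. The set of keys treated as required and the helper name `load_interaction` are assumptions made for illustration; the paper defines the full field list in Table 1.

```python
import json

# The driving-interaction metadata from the text, written as a JSON string.
RAW = """{"mode": "hand", "device": "leap", "video_path": "test.mp4",
 "start_time": "0.00", "delay_time": 5, "player_mode": "360VR",
 "data": "360VR.mp4",
 "single hand interaction mode": ["GRAB", "PUSH"],
 "complex hand interaction mode": "drive"}"""

# Assumption: these keys are treated as mandatory in this sketch only.
REQUIRED_KEYS = {"mode", "device", "video_path", "start_time", "delay_time"}


def load_interaction(raw: str) -> dict:
    """Parse interaction metadata and normalize the start time to seconds."""
    meta = json.loads(raw)
    missing = REQUIRED_KEYS - set(meta)
    if missing:
        raise ValueError(f"missing metadata keys: {sorted(missing)}")
    # "start_time" is stored as a string in the example, so convert it.
    meta["start_time"] = float(meta["start_time"])
    return meta


meta = load_interaction(RAW)
is_complex = bool(meta.get("complex hand interaction mode"))
print(meta["single hand interaction mode"], is_complex)
```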

2.2. Interactive Media Player (IMP)

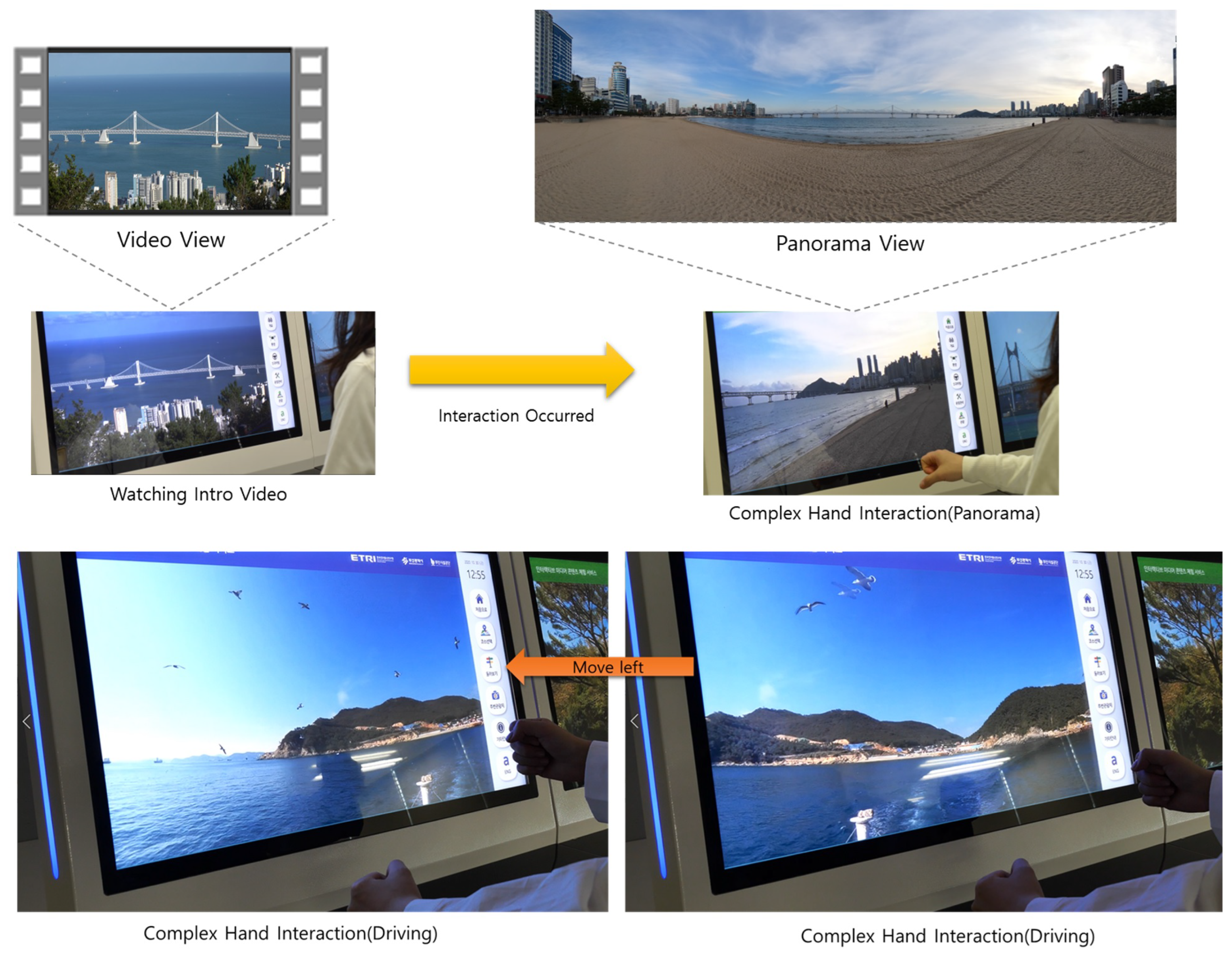

The ComplexHI is equipped with an Interactive Media Player. The Interactive Media Player is an image control player that reads the user-generated interaction metadata and operates according to the user's interaction at the time that the interaction occurs. For example, Figure 5 shows the case of a driving scene where the video interacts with the user based on complex hand interactions. When an interaction occurs during video playback, the video turns into a 360 VR video that responds to complex hand interactions. More precisely, it is the video player that changes to a 360 VR video player. After the user has interacted with the video for a predefined time, that is, when the user's interaction operation time ends, the interactive 360 VR player is replaced by the regular video player again.
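The player switch described here can be pictured as a simple time-window check. The sketch below is illustrative only: it assumes that the interaction window starts at `start_time` and lasts `delay_time` seconds, which is one possible reading of the metadata fields, and the function name `active_player` and the label `"regular"` are hypothetical.

```python
def active_player(t: float, start_time: float, delay_time: float,
                  player_mode: str = "360VR") -> str:
    """Return which player should be shown at playback time t (in seconds).

    Assumption: the interactive player is active during the half-open
    window [start_time, start_time + delay_time).
    """
    if start_time <= t < start_time + delay_time:
        return player_mode
    # Outside the interaction window the regular video player is restored.
    return "regular"


for t in (0.0, 2.5, 5.0):
    print(t, active_player(t, start_time=0.0, delay_time=5))
```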

3. Composing Complex Hand Interactions

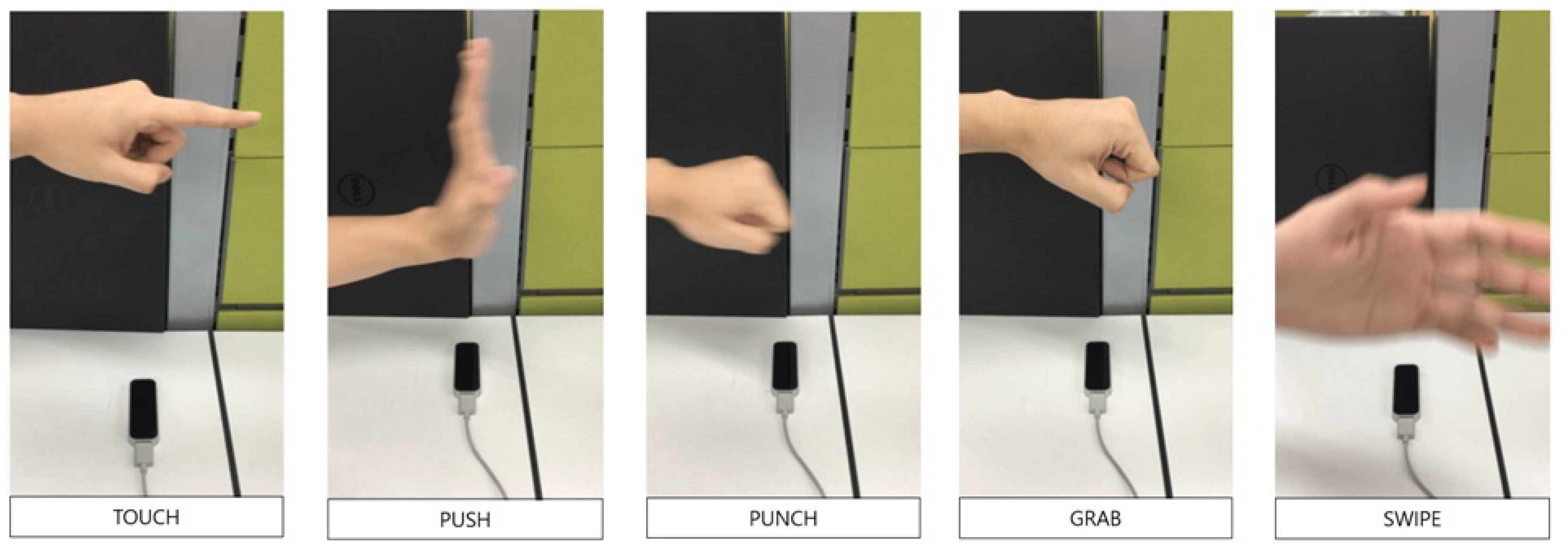

A complex hand interaction can be created by simply composing a set of single hand motions. However, to compose a complex hand interaction, some basic single hand motions first have to be defined and developed. To this end, we first developed a variety of recognition processes for single hand motions. In particular, for the development of touchless user interaction, we used a Leap Motion sensor, which is suitable for low-cost hand tracking.

Table 2 briefly illustrates various single hand interaction processes, which are used to create other complex motions by connecting the single motions in a continuous flow.

The basic single hand interactions have to be defined by an algorithm, which has to be programmed. The single hand interactions that Algorithm 1 can recognize from the sensed Leap Motion data are GRAB, SWIPE, TOUCH, PUNCH, and PUSH. The interaction recognition time is the time specified by the input interaction metadata. Furthermore, the motion data, the motion latency, and the motion count are passed as parameters to each recognition function. They serve to match the motion name in the conditional statement within this function and to call the corresponding motion function. In addition, the motion waiting time may be measured by setting the interaction start time just before entering the corresponding function. When the function is called, the interactive media player is paused, and when the user motion is recognized for a specific interaction, the paused interactive media player resumes with the next video. In the case of GRAB, the number of fists is limited to one using grabflag, and in the case of TOUCH, PUSH, and PUNCH, each is recognized separately when the movement of the hand along its axis falls within a specific value range.

A single hand interaction can be developed for either a one-hand or a two-hand interaction. Whether it is used for a one-hand or a two-hand interaction can be configured by setting the mode to 'single' or 'multiple'. When a single hand interaction has been developed for a two-hand interaction, it can be combined with any other single hand interaction in the Motion Workflow area of the authoring tool. The single hand interactions described in Table 2 recognize the user hand motions according to Algorithm 1.

Figure 6 shows the cases where the hand motions are recognized for each single hand interaction.

| Algorithm 1 GRAB, TOUCH, PUSH, PUNCH single hand interaction. |

procedure Motion(,) ▹ The motion function
    while do ▹ Motion standby
        if then
            if then ; end if
            if then
                if AND then
                    for do
                        if then ; end if
                    end for
                end if
                if OR then
                    if then
                        if then ; end if
                    end if
                    if then
                        if then ; end if
                    end if
                end if
            end if
        end if
    end while
    return ▹ Single hand interaction Finish
end procedure

|
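As a rough illustration of the kind of decision logic described for single hand interactions (a grab check, then recognition by the axis of movement falling within a value range), consider the sketch below. It is not Algorithm 1 itself: the `HandSample` structure, every threshold, and the mapping of axes to SWIPE, TOUCH, PUSH, and PUNCH are assumptions made for this example, and no real sensor API is called.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class HandSample:
    """One sensed hand reading (hypothetical structure, not a sensor API)."""
    grab_strength: float                   # 0.0 (open hand) .. 1.0 (fist)
    velocity: Tuple[float, float, float]   # movement along x, y, z


# Assumed thresholds, chosen only to make the example concrete.
GRAB_MIN = 0.9      # at or above this the hand counts as a fist
MOVE_MIN = 200.0    # below this speed no motion is recognized
PUNCH_MIN = 800.0   # fast movement along z is treated as PUNCH, slower as PUSH


def classify(sample: HandSample) -> Optional[str]:
    """Map one sample to a single hand interaction name, or None."""
    if sample.grab_strength >= GRAB_MIN:
        return "GRAB"
    vx, vy, vz = sample.velocity
    speeds = {"x": abs(vx), "y": abs(vy), "z": abs(vz)}
    axis = max(speeds, key=speeds.get)     # dominant axis of movement
    if speeds[axis] < MOVE_MIN:
        return None
    if axis == "x":
        return "SWIPE"                     # assumption: sideways movement
    if axis == "y":
        return "TOUCH"                     # assumption: vertical movement
    return "PUNCH" if speeds["z"] >= PUNCH_MIN else "PUSH"


print(classify(HandSample(0.0, (0.0, 0.0, -300.0))))
```

In this sketch the grab check takes priority over movement, so a moving fist is reported as GRAB; a real recognizer would need to decide how such overlaps are resolved.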

Table 3 shows an example of combining the single motions shown in Table 2 to create complex hand interactions. Complex hand interactions such as a driving motion, a motion for viewing a panorama image with the user's hand, or clapping may be generated. The driving motion is a composite motion created by combining the GRAB and the PUSH single motions. In this paper, we composed a driving interaction using the authoring tool as an example. When a driving interaction occurs in conjunction with a 360 VR image, the user uses both hands to experience driving, so it becomes a complex hand interaction. The complex hand interactions used in the driving scenario are composed of the single hand interactions described in Table 2. When one clenches both hands into fists, the video is played, and when one opens both hands, the video stops. To steer to the left or right, both hands should remain clenched and change their relative vertical height. This operation can be confirmed in the experimental result image.
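The driving behavior described above can be summarized as a small decision function. The following is a sketch under stated assumptions rather than the system's actual code: the function name `drive_command`, the dead zone of 30 units, and the steering-wheel convention (left hand higher than right means turning right) are all hypothetical.

```python
def drive_command(left_grab: bool, right_grab: bool,
                  left_y: float, right_y: float,
                  dead_zone: float = 30.0) -> str:
    """Return "start", "stop", "left", or "right" for one two-hand reading.

    left_y / right_y are the vertical heights of the two hands.
    """
    # Simplification: the video stops as soon as either hand opens,
    # whereas the text describes opening both hands.
    if not (left_grab and right_grab):
        return "stop"
    diff = left_y - right_y
    # Assumed steering-wheel convention: raising the left hand relative to
    # the right hand corresponds to turning the wheel clockwise (right).
    if diff > dead_zone:
        return "right"
    if diff < -dead_zone:
        return "left"
    return "start"


print(drive_command(True, True, 200.0, 200.0))
print(drive_command(True, True, 260.0, 180.0))
```

The dead zone keeps small, unintentional height differences between the hands from being interpreted as steering.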

The complex hand interaction operates by reading the hand interaction metadata defined by the authoring tool. When the video is played, the metadata are first used to determine whether the interaction is a complex hand interaction or a single hand interaction. The hand interaction recognition process during video playback is described in Algorithm 2. First, it is determined whether the user's action is a one-hand or a two-hand action. After the information of both hands is recognized, the Start ComplexHandInteraction() function for complex hand interaction recognition operates. The ComplexHandInteractionCheck() function receives the interaction metadata, the hand type, and the frame input through the Leap Motion sensor as inputs. This function checks the Leap Motion information, the hand interaction information in the interaction metadata, and the hand information for each frame.

A complex hand interaction can be created in the scenario editor by selecting the complex hand induction authoring tool menu. Algorithm 2 is a general loop on how to open and initialize an edited complex hand interaction in the player software.

Figure 7 shows the operation of complex hand interactions, namely the Driving, Panorama, and Clap interactions. For example, the Driving interaction uses two hands to steer left and right using the difference in height between the two hands. The actual operation results of the Driving interaction are shown on the test result screen in the experimental results section.

| Algorithm 2 Setting for complex hand interaction. |

procedure Motion2() ▹ The complex hand interaction function
    while do ▹ Motion standby
        if then
            if then
                if then ; end if
            end if ▹ complex hand interaction processing
        end if
    end while
    return ▹ complex hand interaction Finish
end procedure

|
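The dispatch step described for playback (decide from the metadata and the number of tracked hands whether a complex or a single hand interaction applies, then scan frames until a complex interaction can start) might look like the sketch below. It is a simplified illustration, not Algorithm 2: the function names are hypothetical, and each frame is reduced to a plain count of tracked hands.

```python
from typing import Iterable, Optional


def interaction_kind(meta: dict, hands_in_frame: int) -> str:
    """Classify one frame as "complex", "single", or "none"."""
    # A complex interaction needs a complex mode in the metadata and two hands.
    if meta.get("complex hand interaction mode") and hands_in_frame == 2:
        return "complex"
    if meta.get("single hand interaction mode") and hands_in_frame >= 1:
        return "single"
    return "none"


def first_complex_frame(meta: dict, hand_counts: Iterable[int]) -> Optional[int]:
    """Standby loop: return the index of the first frame showing both hands."""
    for index, count in enumerate(hand_counts):
        if interaction_kind(meta, count) == "complex":
            return index
    return None


meta = {
    "single hand interaction mode": ["GRAB", "PUSH"],
    "complex hand interaction mode": "drive",
}
print(first_complex_frame(meta, [0, 1, 1, 2, 2]))
```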

4. Experimental Results

This complex hand interaction tool has been developed to work with scenario editors. As shown in Figure 8, it starts when one selects the complex hand induction authoring tool menu in the scenario editor. The single motions are then applied to the frames in the video by the motion workflow editor. In the motion workflow, one can see the resulting screen, where the single motions are connected to each other to create the complex motion. Each single motion may have different attributes for operation time, waiting time, and overlay text. Each single motion corresponds to a function that checks the hand motion to be performed by the user through the operation window of that single motion. In the development environment of the complex motion editor, the user hand recognition algorithm was developed in JavaScript, and PHP and a MySQL database were used to store the motion information. The web service was developed to be served by the Apache web server.

Figure 9 shows the result of the operation of the Driving interaction, a complex hand interaction. A Driving interaction is composed of start, stop, right, and left operations. When a user encounters a Driving interaction while watching a video, the IMP switches the video player to a 360 VR video player. The user can then experience the Driving interaction using the start, stop, right, and left operations.

Figure 10 shows a kiosk product to which the complex motion developed with the proposed ComplexHI is applied. It also shows a screen where the user experiences a Driving and a Panorama interaction while watching a 360 VR video played on the kiosk. The 360 VR video was recorded beforehand with a 360-degree camera while driving a car through the driving scene. Because the complex motion provides a non-contact interaction, the user can enjoy the interactive content without any screen touch or sensor contact.

We performed two user tests using the well-known PSSUQ [15] scale: one for users of the editor and the other for end users. The PSSUQ consists of 19 post-test survey items that yield three metrics (System Quality/Information Quality/Interface Quality) for rating the usefulness of a product, as shown in Table 4.

Table 5 shows the reliability analysis of the PSSUQ. We used all 19 items in the survey and surveyed seven participants who used both the authoring tool to write the interactive media content and the end product to which the complex hand interaction was applied. As shown in Table 6, the average PSSUQ scores for the authoring tool and the content experience are 2.64 and 3.15, respectively, on a scale from 1 to 7, where a smaller score indicates higher satisfaction. Interestingly, the authoring tool receives a lower (better) PSSUQ score than the end product. This is because, for the end product, the gestures have to be learned by the end user, which is not an easy task. Nevertheless, the relatively low PSSUQ average of the authoring tool supports the usefulness of the proposed authoring tool system.
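For readers unfamiliar with how a PSSUQ average is obtained, the sketch below computes the overall score and three subscale scores for one respondent as arithmetic means of the 1 to 7 item ratings. The item-to-subscale grouping (items 1 to 8, 9 to 15, and 16 to 18) is the grouping commonly cited for the 19-item PSSUQ and is an assumption here, since the paper's own grouping is given in Table 4. The example responses are invented for illustration and are not data from this study.

```python
from statistics import mean
from typing import Dict, Sequence

# Assumed grouping of the 19 items into the three subscales (1-based).
SUBSCALES = {
    "system": range(1, 9),         # items 1-8
    "information": range(9, 16),   # items 9-15
    "interface": range(16, 19),    # items 16-18
}


def pssuq_scores(responses: Sequence[int]) -> Dict[str, float]:
    """Return overall and subscale means for one respondent (lower is better)."""
    if len(responses) != 19:
        raise ValueError("the 19-item PSSUQ expects exactly 19 responses")
    scores = {"overall": mean(responses)}
    for name, items in SUBSCALES.items():
        scores[name] = mean(responses[i - 1] for i in items)
    return scores


# Invented example responses, for illustration only.
example = [2] * 8 + [3] * 7 + [4] * 3 + [2]
scores = pssuq_scores(example)
print(scores)
```

Averaging such per-respondent scores over all participants gives a group-level figure of the kind reported in Table 6.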

5. Comparison with Other Interactive Content Authoring Tools

In this section, we compare the proposed content authoring tool with the existing interactive media authoring platforms shown in Table 7. Existing interactive media authoring platforms provide interaction editing functions, video upload and editing, and scenario editing functions that enable scenario branching in a web environment. RaptMedia [6] provides the ability to branch scenarios using videos, photos, and text. Eko [7] provides story branching, object tracking, and user interaction for product sales. Racontr [8] is a cloud-based real-time interactive media authoring tool that provides VR functions. Meanwhile, WireWax [9] automatically detects faces while a video is being uploaded, so that users can label them easily. Furthermore, it lets a link be inserted into an object so that viewers can connect to the corresponding website and purchase products.

The proposed authoring tool contains all the features provided by existing interactive media authoring platforms. In addition, the proposed system can also compose diverse two-hand interactions with a hand motion control device. In other words, by providing a hand interaction function using a user device, it is possible to create a scene-specific hand interaction with composite motion according to the scenario.

6. Conclusions

These days, with the advance of digital broadcasting, the media environment gives users an opportunity to enjoy differentiated content more actively through user-media interactions based on computer technology. In this paper, we first proposed an interactive media player that can recognize the user's motion and control the video in a web service environment without installing any specific program. Then, we proposed and developed an interactive media authoring tool with which immersive interactive media can be produced and enjoyed. The authoring tool can create complex two-hand interactions based on single one-hand actions, as the hand motions can be composed and edited through simple interactions with the authoring system. To our knowledge, this is the first time that an authoring system has been proposed to author complex two-hand motions from single hand motions, as most authoring tools author interactions by programming. The complex hand interaction authoring tool makes it possible to apply complex hand motions to diverse content without the need for programming. With the proposed system, it is possible to create a wide range of custom or composite motions. It is expected that, in the future, the proposed system will allow individual creators to produce their own composite motions and gain competitiveness through differentiated composite motion production. Furthermore, it is anticipated that the proposed system can be applied to areas such as tourism and education, as the authoring tool would be useful for easily producing entertaining and informative interactive educational and advertising content. In order to develop complex hand interactions effectively in the future, algorithms for controlling objects through user interaction will be studied. In addition, research will be conducted on automatically recognizing user devices in conjunction with multiple contactless devices.

Author Contributions

Conceptualization, B.D.S. and H.C.; methodology, B.D.S. and S.-H.K.; software, B.D.S.; validation, B.D.S.; formal analysis, B.D.S. and H.C.; investigation, B.D.S.; resources, S.-H.K.; writing—original draft preparation, B.D.S.; writing—review and editing, B.D.S. and H.C.; visualization, S.-H.K.; supervision, B.D.S.; project administration, S.-H.K. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by an Electronics and Telecommunications Research Institute (ETRI) grant funded by the Korean government [22ZH1200, The research of the basic media-contents technologies].

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

1. Peter, M.; Claudia, C. A Privacy Framework for Games Interactive Media. IEEE Games Entertain. Media Conf. (GEM) 2018, 11, 31–66.

2. Guleryuz, O.G.; Kaeser-Chen, C. Fast Lifting for 3D Hand Pose Estimation in AR/VR Applications. In Proceedings of the 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018.

3. Nooruddin, N.; Dembani, R.; Maitlo, N. HGR: Hand-Gesture-Recognition Based Text Input Method for AR/VR Wearable Devices. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020.

4. Zhou, Y.; Habermann, M.; Xu, W.; Habibie, I.; Theobalt, C.; Xu, F. Monocular Real-time Hand Shape and Motion Capture using Multi-modal Data. In Proceedings of the Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

5. Netflix (Interactive Content). Available online: http://www.netflix.com (accessed on 1 July 2022).

6. RaptMedia (Interactive Video Platform). Available online: http://www.raptmedia.com (accessed on 1 July 2022).

7. Eko (Interactive Video Platform). Available online: https://www.eko.com/ (accessed on 1 July 2022).

8. Racontr (Interactive Media Platform). Available online: https://www.racontr.com/ (accessed on 1 July 2022).

9. Wirewax (Interactive Video Platform). Available online: http://www.wirewax.com/ (accessed on 1 July 2022).

10. Lu, Y.; Gao, B.; Long, J.; Weng, J. Hand motion with eyes-free interaction for authentication in virtual reality. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; pp. 715–716.

11. Nizam, S.; Abidin, R.; Hashim, N.; Lam, M.; Arshad, H.; Majid, N. A review of multimodal interaction technique in augmented reality environment. Int. J. Adv. Sci. Eng. Inf. Technol. 2018, 8, 1460–1469.

12. Ababsa, F.; He, J.; Chardonnet, J.-R. Combining hololens and leap-motion for free hand-based 3D interaction in MR environments. In AVR 2020; De Paolis, L.T., Bourdot, P., Eds.; LNCS; Springer: Cham, Switzerland, 2020; Volume 12242, pp. 315–327.

13. Bachmann, D.; Weichert, F.; Rinkenauer, G. Review of three-dimensional human-computer interaction with focus on the leap motion controller. Sensors 2018, 18, 2194.

14. Yoo, M.; Na, Y.; Song, H.; Kim, G.; Yun, J.; Kim, S.; Moon, C.; Jo, K. Motion estimation and hand gesture recognition-based human–UAV interaction approach in real time. Sensors 2022, 22, 2513.

15. Lewis, J.R. Psychometric Evaluation of the PSSUQ Using Data from Five Years of Usability Studies. Int. J. Hum.-Comput. Interact. 2002, 14, 463–488.

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).