Multi-Class Pixel Certainty Active Learning Model for Classification of Land Cover Classes Using Hyperspectral Imagery

Abstract

1. Introduction

- Robustness to the changes in image representation;

- Little or no difference in classifier behavior when objects and pixels are manipulated.

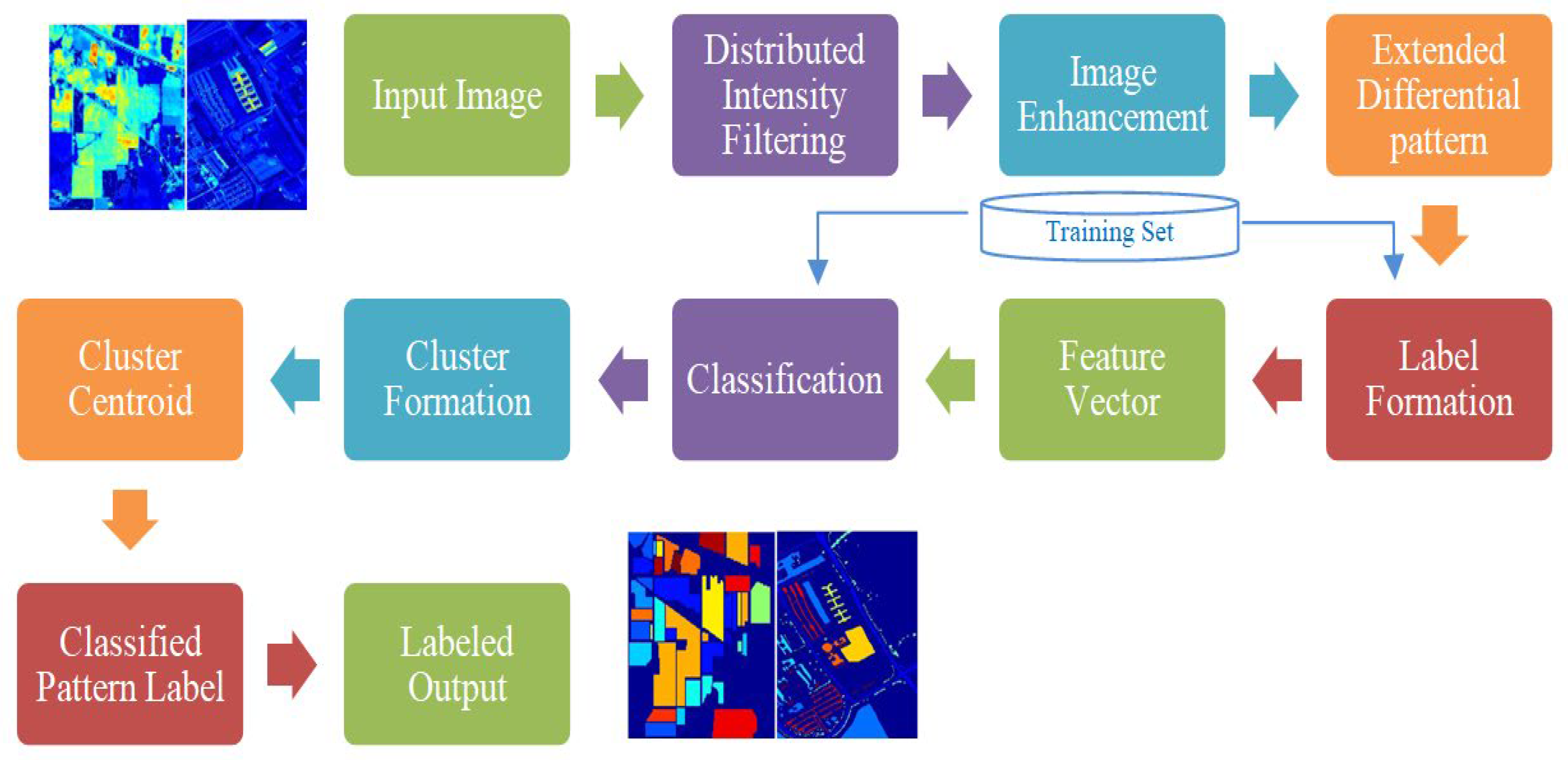

- The use of distributed intensity filtering (DIF) and histogram equalization (HE) reduces noise and improves image quality [10], ensuring the accuracy of pixels.

- Texture-pattern extraction based on an extended differential pattern (EDP) supports the fusion and classification of labels when spectral bands are merged.

- Applying PCAL to the EDP-based features yields labeled output corresponding to the cluster index value. This facilitates the inclusion of contextual and positional information and improves robustness to noise variations in pixels.

2. Related Work

3. Pixel Certainty Active Learning

- Distributed intensity filtering (DIF);

- Extended differential pattern (EDP);

- Pixel-certainty active learning (PCAL).

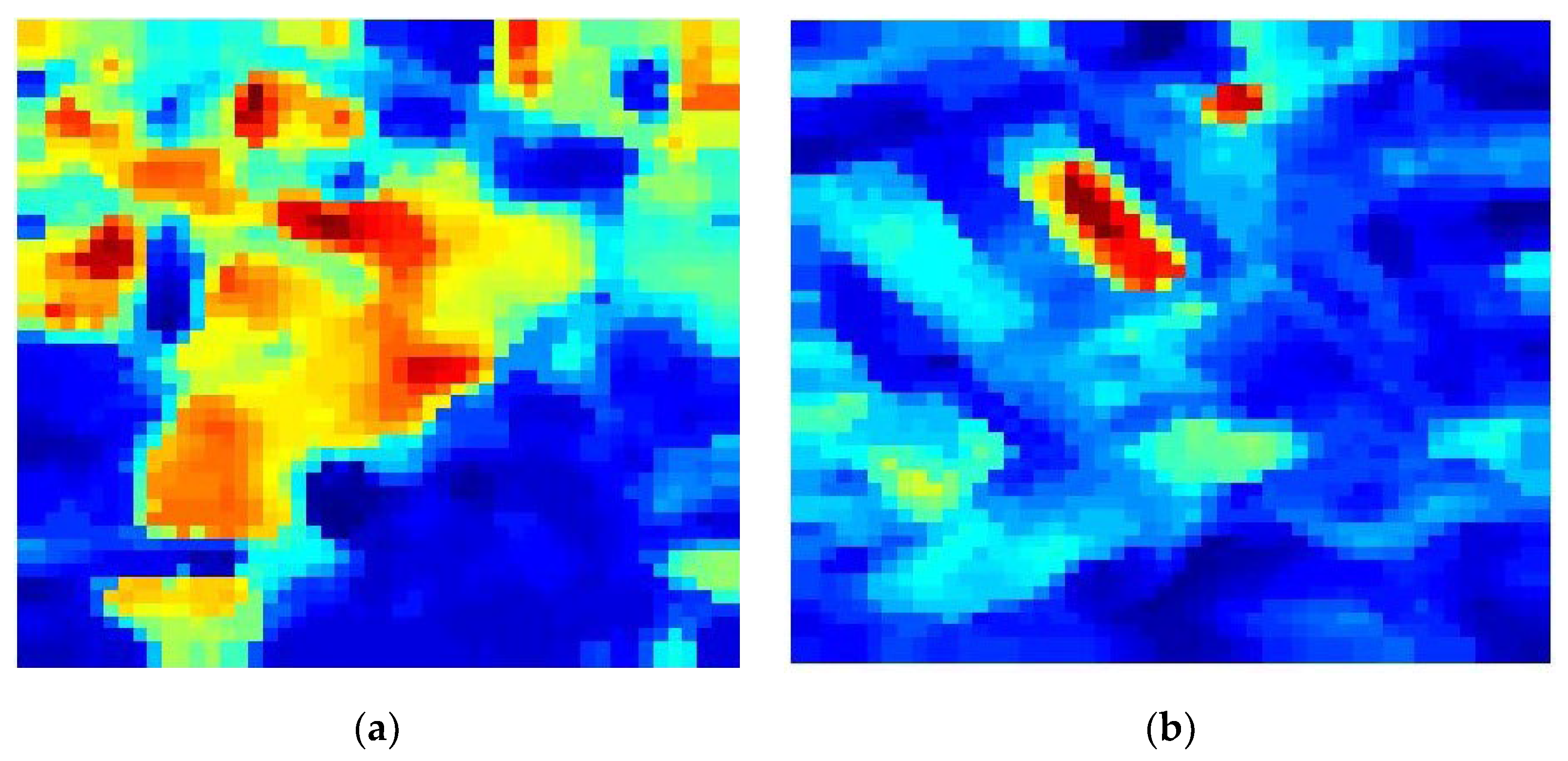

3.1. Distributed Intensity Filtering

- Locate the neighborhood around the point to be examined.

- Using the center value, examine the pixel intensities of the neighborhood.

- Substitute the analyzed result from the previous step for the original pixel value.
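The three steps above can be illustrated with a minimal Python sketch of a neighborhood filter. The statistic used here (the median of a square window), the `radius` parameter, and the function name `neighborhood_filter` are assumptions for illustration only; the steps do not specify how the neighborhood is analyzed, so this is not necessarily the DIF operator itself.

```python
import numpy as np

def neighborhood_filter(image, radius=1):
    """Replace each pixel with the median of its square neighborhood.

    ASSUMPTION: the median stands in for the unspecified analysis step.
    """
    # Replicate border pixels so every pixel has a full neighborhood.
    padded = np.pad(image, radius, mode="edge")
    out = np.empty_like(image, dtype=float)
    rows, cols = image.shape
    size = 2 * radius + 1
    for i in range(rows):
        for j in range(cols):
            # Step 1: locate the neighborhood around pixel (i, j).
            window = padded[i:i + size, j:j + size]
            # Steps 2-3: analyze the intensities and substitute the result.
            out[i, j] = np.median(window)
    return out

# A single band with one noisy pixel in the center (hypothetical values).
band = np.array([[10, 10, 10],
                 [10, 200, 10],
                 [10, 10, 10]], dtype=float)
filtered = neighborhood_filter(band)
```

In this toy band, the isolated bright pixel is replaced by the value of its surroundings, which is the kind of noise suppression the filtering stage is meant to provide.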

3.2. Extended Differential Pattern

| Algorithm 1. Extended Differential Pattern |

| Input: Enhanced image ‘’ |

| Output: Texture pattern ‘’ |

| S-1: Initialize the 5 × 5 window matrix |

| S-2: Project window over the enhanced image () |

| For ( to row_size-2) |

| For (j = 3 to Column_size-2) |

| S-3: Compute the median value for the window |

| If (, i−1,j) ≥ && (i−1,j+1) ≥ (1) = 1; |

| Else if (i−1,j) < && (i−1,j+1) ≥ (2) = 2; |

| Else if (i−1,j) < && (i−1,j+1) < (3) = 3; |

| Else if (i−1,j) ≥ && (i−1,j+1) < (4) = 4; |

| End if |

| S-5: Compute the magnitude value from the newly formed window by using Equation (4) |

| S-6: Compute the patterns |

| S-7: For (i = 2 to Row_size − 1) |

| For (j = 2 to Column_size − 1) |

| Assign the original image to the temporary variable |

| S-8: Check the condition |

| S-9: Compute the patterns |

| End Loop j |

| End Loop i |

| S-10: Perform the bitwise OR operation between two patterns |
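The windowing and four-way comparison in Algorithm 1 can be sketched in Python as follows. This covers only steps S-1 to S-3 and the conditional block. It assumes that the two neighbors at (i−1, j) and (i−1, j+1) are compared against the median of the 5 × 5 window, since the comparison operands are missing from the listing. The function name `quadrant_codes` is hypothetical, and the magnitude and bitwise OR steps are not reproduced.

```python
import numpy as np

def quadrant_codes(image):
    """Assign a code 1-4 to each interior pixel of a 2-D image.

    ASSUMPTION: both neighbors are compared with the 5x5 window median.
    """
    rows, cols = image.shape
    codes = np.zeros((rows, cols), dtype=np.uint8)
    # 0-based equivalent of "3 to size-2" in the 1-based listing.
    for i in range(2, rows - 2):
        for j in range(2, cols - 2):
            median = np.median(image[i - 2:i + 3, j - 2:j + 3])
            a = image[i - 1, j] >= median       # neighbor directly above
            b = image[i - 1, j + 1] >= median   # neighbor above and to the right
            if a and b:
                codes[i, j] = 1
            elif not a and b:
                codes[i, j] = 2
            elif not a and not b:
                codes[i, j] = 3
            else:
                codes[i, j] = 4
    return codes

# Hypothetical 5x5 patch: only the center pixel has a full window.
patch = np.arange(25, dtype=float).reshape(5, 5)
codes = quadrant_codes(patch)
```

Border pixels without a full 5 × 5 window are left at 0 in this sketch; how the original method treats them is not stated.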

3.3. Active Learning

Pixel-Certainty Active Learning

| PCAL Algorithm |

| Input: Image Pattern |

| Output: Clustering Output (C) |

| Step_1: Initialize the cluster for output (C) and the variable (m) to store the minimum index |

| Step_2: Select the sample from the patterns |

| Step_3: Compute the distance d among samples |

| Step_4: Extract the minimum index score corresponding to the minimum distance, ω = min(d) |

| Step_5: Construct the array() for minimum index, distance values |

| Step_8: Replace the index with the best index ω and best = ω. |

| Step_9: Update the distance, index and cluster values |

| Step_10: Update the Distance function by using the following equation |
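The distance and minimum-index steps of the PCAL listing (Step_2 to Step_4) can be illustrated with a short Python sketch. The Euclidean distance, the function name `assign_to_nearest`, and the toy feature vectors are assumptions, because the listing does not define the distance function; the update steps (Step_8 to Step_10) are not reproduced.

```python
import numpy as np

def assign_to_nearest(patterns, sample_idx):
    """Label each pattern with the index of its closest selected sample.

    ASSUMPTION: Euclidean distance stands in for the unspecified distance d.
    """
    samples = patterns[sample_idx]
    # d[p, s] is the distance between pattern p and selected sample s.
    d = np.linalg.norm(patterns[:, None, :] - samples[None, :, :], axis=2)
    omega = d.argmin(axis=1)   # index of the minimum distance per pattern
    best = d.min(axis=1)       # the minimum distance itself
    return omega, best

# Hypothetical 2-D feature vectors forming two well-separated groups.
patterns = np.array([[0.0, 0.0],
                     [0.1, 0.0],
                     [5.0, 5.0],
                     [5.2, 5.0]])
clusters, distances = assign_to_nearest(patterns, sample_idx=[0, 2])
```

Here `clusters` plays the role of the clustering output (C) and `distances` the array of minimum distance values from Step_5.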

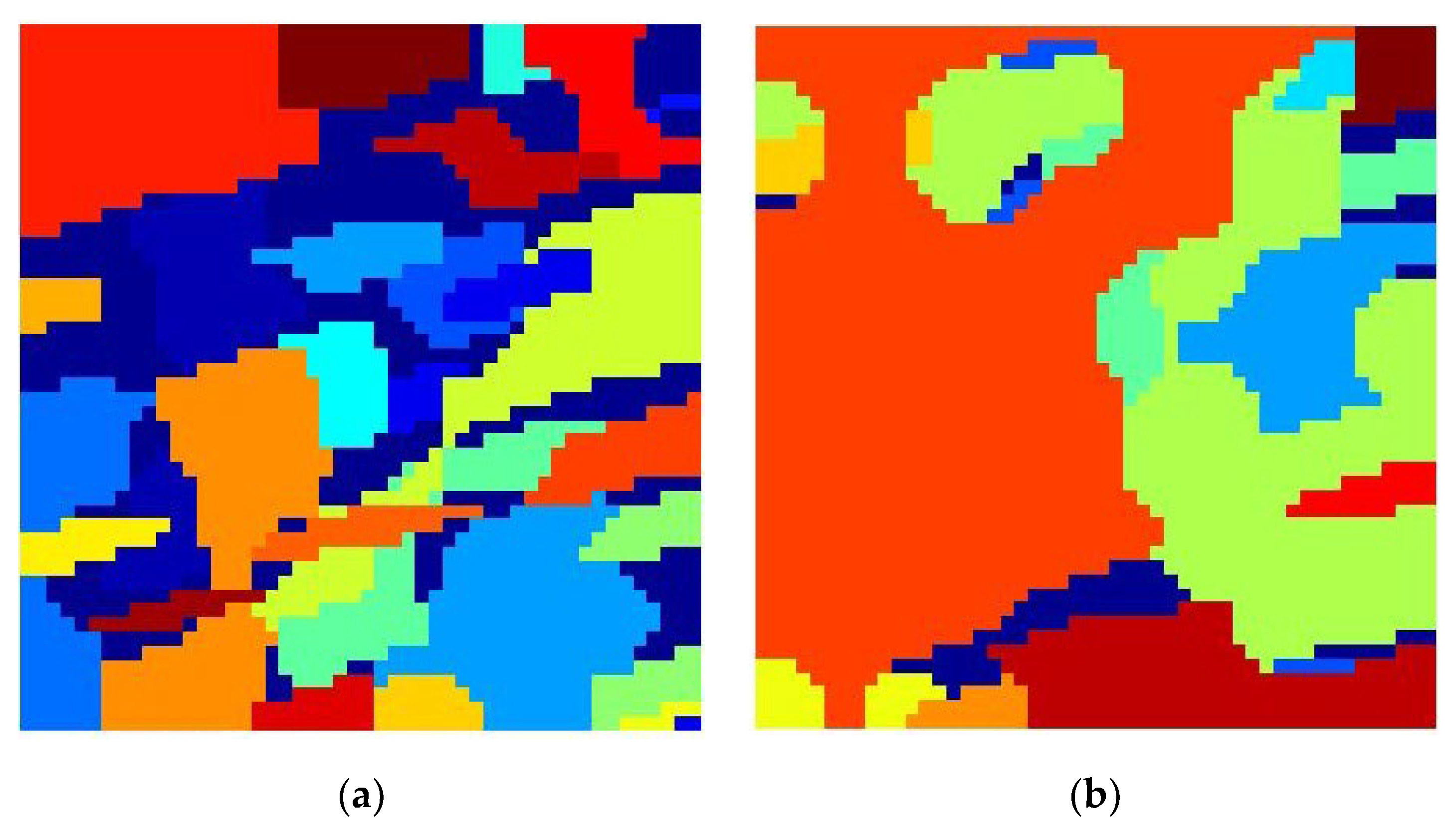

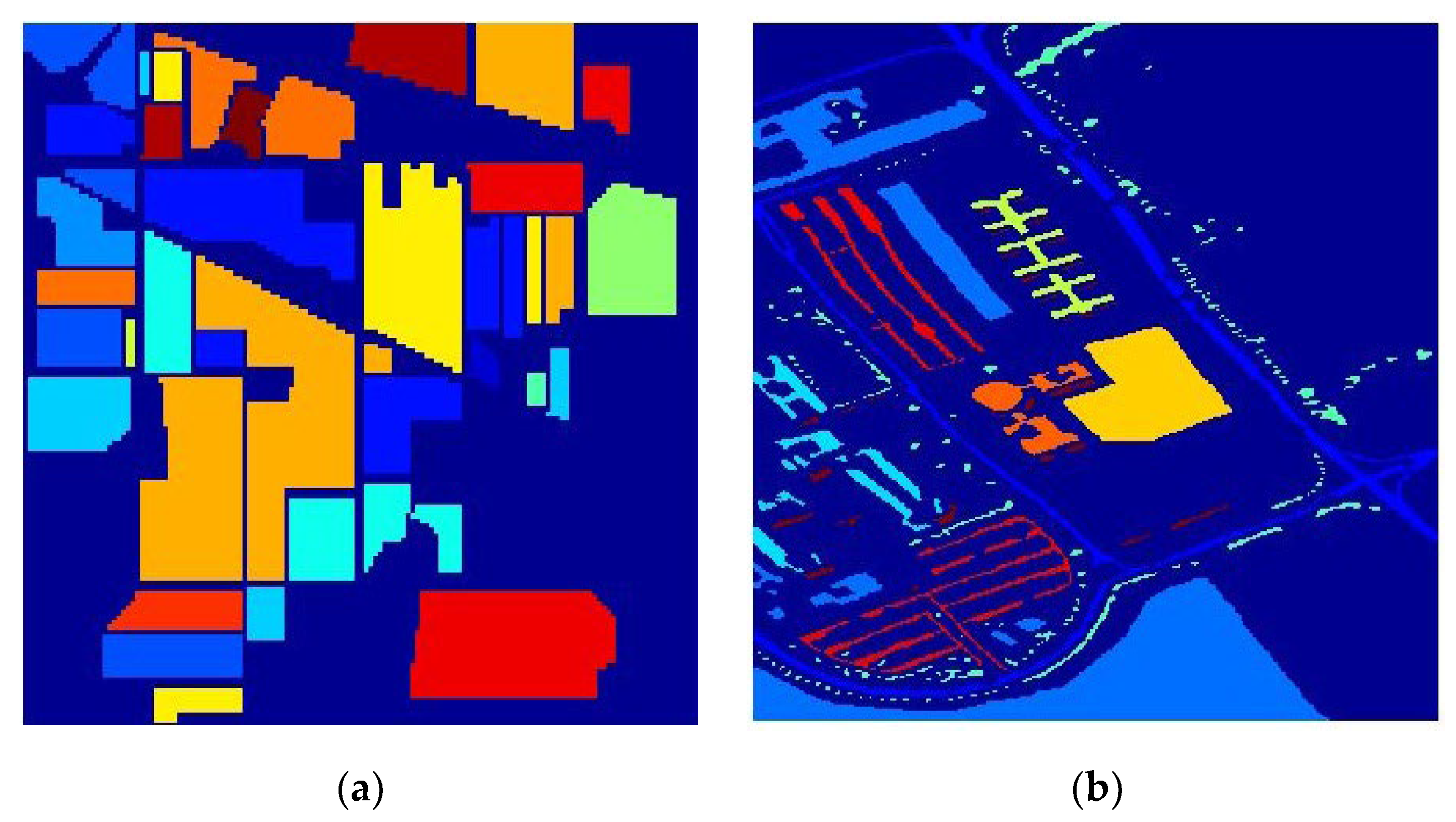

4. Performance Analysis

4.1. Classification Accuracy and Kappa Coefficient Analysis

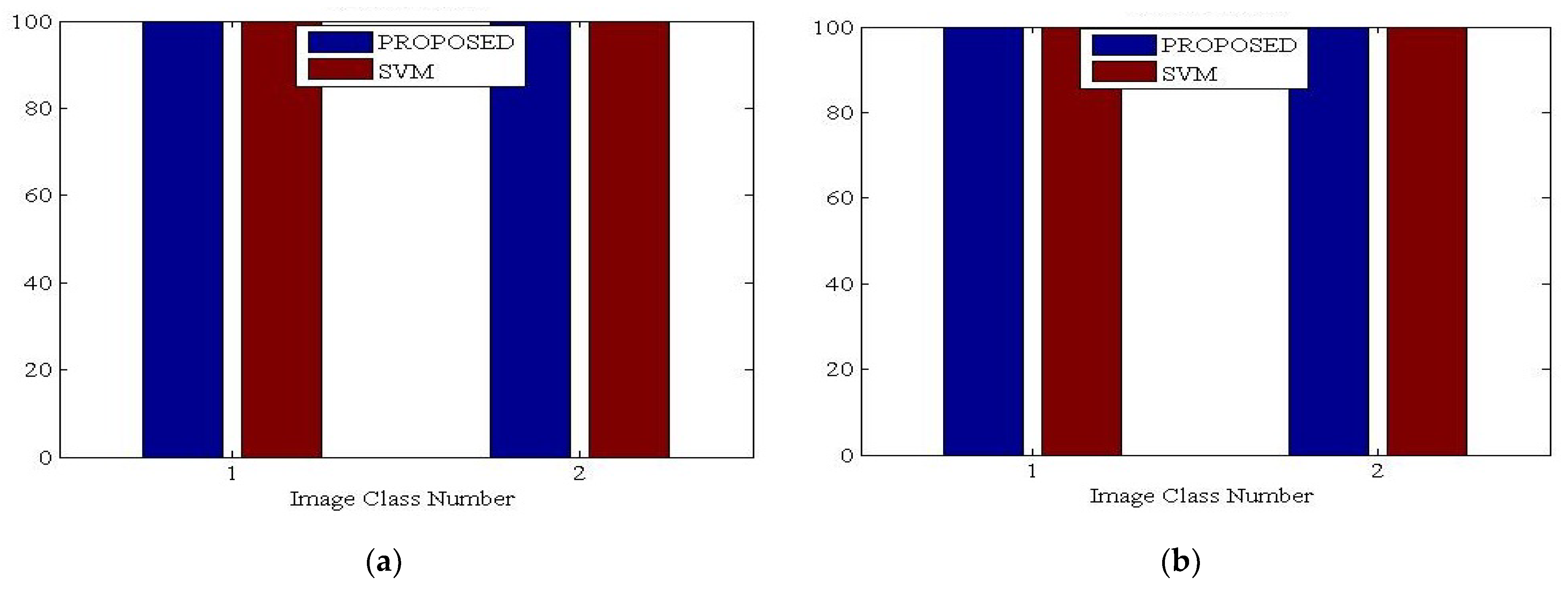
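As a reference point for the accuracy and kappa values discussed in this subsection, the following Python sketch computes overall accuracy and Cohen's kappa from a confusion matrix. The confusion matrix is hypothetical, and the article does not state that this exact formulation was used; it is simply the standard definition.

```python
import numpy as np

def overall_accuracy_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix."""
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    # Observed agreement: fraction of samples on the diagonal.
    observed = np.trace(confusion) / total
    # Chance agreement: product of row and column marginals, summed over classes.
    expected = (confusion.sum(axis=0) * confusion.sum(axis=1)).sum() / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa

# Hypothetical two-class confusion matrix (rows: reference, columns: predicted).
cm = [[45, 5],
      [10, 40]]
oa, kappa = overall_accuracy_and_kappa(cm)
```

Kappa discounts the agreement expected by chance, which is why it is lower than overall accuracy for the same confusion matrix.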

4.2. Acceptance/Rejection Rate Analysis

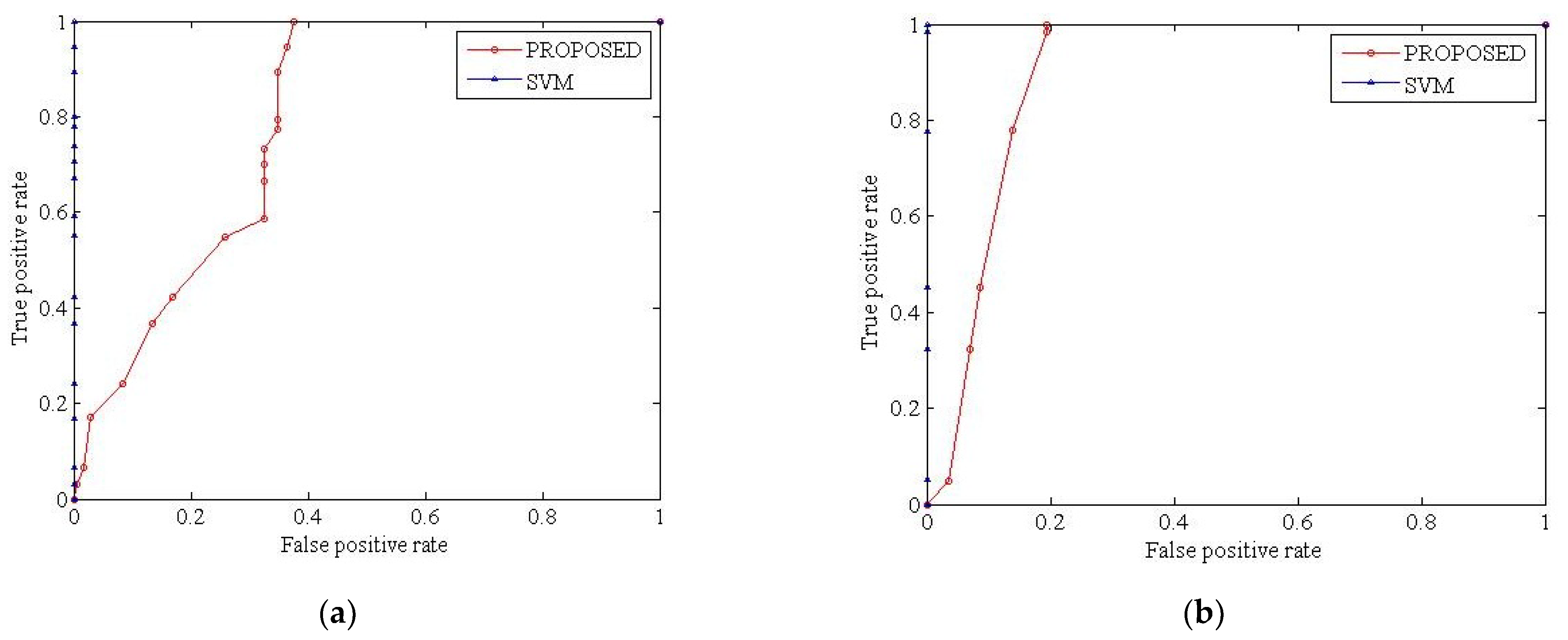

4.3. ROC Analysis

4.4. Overall Accuracy Analysis

4.5. Accuracy Analysis with Existing AL Approaches

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Pradhan, S.R.M.K.; Sinha, B.L. Extended differential pattern-based large scale live active learning model for classification of remote sensing data. Int. J. Chem. Stud. 2019, 7, 1610–1620.

- Haq, M.A. Intelligent sustainable agricultural water practice using multi sensor spatiotemporal evolution. Environ. Technol. 2021, 1–14.

- Benediktsson, J.A.; Chanussot, J.; Moon, W.M. Very High-Resolution Remote Sensing: Challenges and Opportunities [Point of View]. Proc. IEEE 2012, 100, 1907–1910.

- Camps-Valls, G.; Tuia, D.; Bruzzone, L.; Benediktsson, J.A. Advances in Hyperspectral Image Classification: Earth Monitoring with Statistical Learning Methods. IEEE Signal Process. Mag. 2014, 31, 45–54.

- Fauvel, M.; Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J.; Tilton, J.C. Advances in spectral-spatial classification of hyperspectral images. Proc. IEEE 2013, 101, 652–675.

- Persello, C.; Boularias, A.; Dalponte, M.; Gobakken, T.; Naesset, E.; Schölkopf, B. Cost-Sensitive Active Learning With Lookahead: Optimizing Field Surveys for Remote Sensing Data Classification. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6652–6664.

- Zhang, Y.; Yang, H.L.; Prasad, S.; Pasolli, E.; Jung, J.; Crawford, M. Ensemble Multiple Kernel Active Learning for Classification of Multisource Remote Sensing Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 8, 845–858.

- Li, X.; Guo, Y. Adaptive active learning for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 859–866.

- Pasolli, E.; Melgani, F.; Tuia, D.; Pacifici, F.; Emery, W.J. SVM Active Learning Approach for Image Classification Using Spatial Information. IEEE Trans. Geosci. Remote Sens. 2013, 52, 2217–2233.

- Haq, M.A. Planetscope Nanosatellites Image Classification Using Machine Learning. Comput. Syst. Sci. Eng. 2022, 42, 1031–1046.

- Jia, S.; Ji, Z.; Qian, Y.; Shen, L. Unsupervised Band Selection for Hyperspectral Imagery Classification without Manual Band Removal. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 531–543.

- Bioucas-Dias, J.M.; Plaza, A.; Dobigeon, N.; Parente, M.; Du, Q.; Gader, P.; Chanussot, J. Hyperspectral unmixing overview: Geometrical, statistical, and sparse regression-based approaches. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 354–379.

- Dopido, I.; Villa, A.; Plaza, A.; Gamba, P. A Quantitative and Comparative Assessment of Unmixing-Based Feature Extraction Techniques for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 421–435.

- Srinivas, U.; Chen, Y.; Monga, V.; Nasrabadi, N.M.; Tran, T.D. Exploiting Sparsity in Hyperspectral Image Classification via Graphical Models. IEEE Geosci. Remote Sens. Lett. 2012, 10, 505–509.

- Huang, X.; Guan, X.; Benediktsson, J.A.; Zhang, L.; Li, J.; Plaza, A.; Dalla Mura, M. Multiple Morphological Profiles from Multicomponent-Base Images for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4653–4669.

- Kang, X.; Li, S.; Fang, L.; Benediktsson, J.A. Intrinsic Image Decomposition for Feature Extraction of Hyperspectral Images. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2241–2253.

- Li, J.; Huang, X.; Gamba, P.; Bioucas-Dias, J.M.B.; Zhang, L.; Benediktsson, J.A.; Plaza, A. Multiple Feature Learning for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1592–1606.

- Liu, T.; Gu, Y.; Jia, X.; Benediktsson, J.A.; Chanussot, J. Class-Specific Sparse Multiple Kernel Learning for Spectral–Spatial Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7351–7365.

- Haq, Q.S.U.; Tao, L.; Sun, F.; Yang, S. A Fast and Robust Sparse Approach for Hyperspectral Data Classification Using a Few Labeled Samples. IEEE Trans. Geosci. Remote Sens. 2011, 50, 2287–2302.

- Zhang, X.; Song, Q.; Gao, Z.; Zheng, Y.; Weng, P.; Jiao, L.C. Spectral–Spatial Feature Learning Using Cluster-Based Group Sparse Coding for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4142–4159.

- Rodriguez-Galiano, V.F.; Chica-Olmo, M.; Abarca-Hernandez, F.; Atkinson, P.M.; Jeganathan, C. Random Forest classification of Mediterranean land cover using multi-seasonal imagery and multi-seasonal texture. Remote Sens. Environ. 2012, 121, 93–107.

- Yadav, C.S.; Sharan, A. Feature Learning Using Random Forest and Binary Logistic Regression for ATDS. In Applications of Machine Learning; Springer: Berlin/Heidelberg, Germany, 2020; pp. 341–352.

- Xia, J.; Chanussot, J.; Du, P.; He, X. Spectral–Spatial Classification for Hyperspectral Data Using Rotation Forests with Local Feature Extraction and Markov Random Fields. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2532–2546.

- Persello, C.; Bruzzone, L. Active Learning for Domain Adaptation in the Supervised Classification of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4468–4483.

- Polewski, P.; Yao, W.; Heurich, M.; Krzystek, P.; Stilla, U. Active learning approach to detecting standing dead trees from ALS point clouds combined with aerial infrared imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 10–18.

- Gao, L.; Li, J.; Khodadadzadeh, M.; Plaza, A.; Zhang, B.; He, Z.; Yan, H. Subspace-Based Support Vector Machines for Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2014, 12, 349–353.

- Moser, G.; Serpico, S.B. Combining Support Vector Machines and Markov Random Fields in an Integrated Framework for Contextual Image Classification. IEEE Trans. Geosci. Remote Sens. 2012, 51, 2734–2752.

- Zhou, X.; Prasad, S.; Crawford, M.M. Wavelet-Domain Multiview Active Learning for Spatial-Spectral Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4047–4059.

- Haq, M.A. CDLSTM: A Novel Model for Climate Change Forecasting. Comput. Mater. Contin. 2022, 71, 2363–2381.

- Cui, Y.; Wang, J.; Liu, S.; Wang, L. Hyperspectral image feature reduction based on Tabu Search Algorithm. J. Inf. Hiding Multim. Signal Process. 2015, 6, 154–162.

- Zhang, E.; Jiao, L.; Zhang, X.; Liu, H.; Wang, S. Class-Level Joint Sparse Representation for Multifeature-Based Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4160–4177.

- Chunsen, Z.; Yiwei, Z.; Chenyi, F. Spectral–Spatial Classification of Hyperspectral Images Using Probabilistic Weighted Strategy for Multifeature Fusion. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1562–1566.

- Li, J.; Bioucas-Dias, J.M.; Plaza, A. Spectral–Spatial Classification of Hyperspectral Data Using Loopy Belief Propagation and Active Learning. IEEE Trans. Geosci. Remote Sens. 2013, 51, 844–856.

- Ayerdi, B.; Romay, M.G. Hyperspectral Image Analysis by Spectral–Spatial Processing and Anticipative Hybrid Extreme Rotation Forest Classification. IEEE Trans. Geosci. Remote Sens. 2015, 54, 2627–2639.

- Wan, L.; Tang, K.; Li, M.; Zhong, Y.; Qin, A.K. Collaborative Active and Semisupervised Learning for Hyperspectral Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2384–2396.

- Demir, B.; Persello, C.; Bruzzone, L. Batch-Mode Active-Learning Methods for the Interactive Classification of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1014–1031.

- Di, W.; Crawford, M.M. Active Learning via Multi-View and Local Proximity Co-Regularization for Hyperspectral Image Classification. IEEE J. Sel. Top. Signal Process. 2011, 5, 618–628.

- Sun, S.; Zhong, P.; Xiao, H.; Wang, R. Active Learning With Gaussian Process Classifier for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2015, 53, 1746–1760.

- Shrivastava, V.K.; Pradhan, M.K. Rice plant disease classification using color features: A machine learning paradigm. J. Plant Pathol. 2021, 103, 17–26.

- Pradhan, M.K.; Minz, S.; Shrivastava, V.K. A Kernel-Based Extreme Learning Machine Framework for Classification of Hyperspectral Images Using Active Learning. J. Indian Soc. Remote Sens. 2019, 47, 1693–1705.

- Pradhan, M.K.; Minz, S.; Shrivastava, V.K. Entropy Query by Bagging-Based Active Learning Approach in the Extreme Learning Machine Framework for Hyperspectral Image Classification. Curr. Sci. 2020, 119, 934–943.

- Shrivastava, V.K.; Pradhan, M.K.; Minz, S.; Thakur, M.P. Rice plant disease classification using transfer learning of deep convolutional neural network. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 3, 631–635.

- Shrivastava, V.K.; Pradhan, M.K.; Thakur, M.P. Application of Pre-Trained Deep Convolutional Neural Networks for Rice Plant Disease Classification. In Proceedings of the 2021 International Conference on Artificial Intelligence and Smart Systems (ICAIS), Coimbatore, India, 25–27 March 2021; pp. 1023–1030.

- Almulihi, A.; Alharithi, F.; Bourouis, S.; Alroobaea, R.; Pawar, Y.; Bouguila, N. Oil Spill Detection in SAR Images Using Online Extended Variational Learning of Dirichlet Process Mixtures of Gamma Distributions. Remote Sens. 2021, 13, 2991.

- Alam, M.S.; Islam, M.N.; Bal, A.; Karim, M.A. Hyperspectral target detection using Gaussian filter and post-processing. Opt. Lasers Eng. 2008, 46, 817–822.

- Wang, Q.; Chen, M.; Zhang, J.; Kang, S.; Wang, Y. Improved Active Deep Learning for Semi-Supervised Classification of Hyperspectral Image. Remote Sens. 2021, 14, 171.

- HSI dataset: KSC and BOT. [Online]. 2017. Available online: http://www.ehu.eus/ccwintco/index.php/Hyperspectral_Remote_sensing_Scenes (accessed on 22 September 2017).

| S.No | Variable | Parameter |

|---|---|---|

| 1 | α | Distance Function |

| 2 | β | Accumulation Array |

| 3 | | Total Sum Distance |

| 4 | | Index |

| 5 | λ | Best Total Sum Distance |

| 6 | | Summed |

| 7 | μ | Best Summed |

| 8 | | Emptyerror |

| Class | Train | Test |

|---|---|---|

| Asphalt | 310 | 6206 |

| Meadows | 806 | 16,123 |

| Gravel | 94 | 1880 |

| Trees | 146 | 2933 |

| Metal | 67 | 1345 |

| Bare Soil | 251 | 5029 |

| Bitumen | 66 | 1330 |

| Bricks | 184 | 3682 |

| Shadow | 47 | 947 |

| Total | 1971 | 39,475 |

| Class | Train | Test |

|---|---|---|

| Oats | 10 | 20 |

| Grass-mowed | 13 | 26 |

| Alfalfa | 27 | 54 |

| Bldg-grass-drives | 50 | 380 |

| Corn | 50 | 234 |

| Corn-Min | 50 | 834 |

| Corn-notill | 50 | 1434 |

| Grass/Pasture | 50 | 497 |

| Grass/Trees | 50 | 747 |

| Hay-windrowed | 50 | 489 |

| Soybeans-clean | 50 | 614 |

| Soybeans-Min | 50 | 2468 |

| Soybeans-notill | 50 | 968 |

| Stone-steel-towers | 50 | 95 |

| Wheat | 50 | 212 |

| Woods | 50 | 1294 |

| Total | 700 | 10,366 |

| CLASS | SVM-DMP [31] | SRC-DMP [31] | JSRC-DMP [31] | Raw [32] | MNF [32] | VS-SVM [32] | EDP-AL | PCAL |

|---|---|---|---|---|---|---|---|---|

| 1 | 82.75 | 83.14 | 85.1 | 82.93 | 68.85 | 100 | 97.92 | 97.6 |

| 2 | 83.48 | 87.85 | 90.92 | 60.66 | 73.99 | 94.26 | 97.8 | 99.6 |

| 3 | 87.83 | 89.18 | 86.74 | 41.07 | 53.99 | 91.39 | 99.96 | 100 |

| 4 | 91.35 | 88.92 | 87.34 | 31.82 | 55.76 | 82.65 | 99.96 | 99.92 |

| 5 | 92.22 | 93.41 | 91.36 | 59.13 | 80.39 | 96.77 | 100 | 99.76 |

| 6 | 96.11 | 94.36 | 92.98 | 88.29 | 96.3 | 99.59 | 100 | 100 |

| 7 | 92.5 | 97.08 | 81.67 | 96.3 | 100 | 100 | 100 | 99.96 |

| 8 | 97.16 | 97.13 | 95.54 | 97.1 | 99.35 | 100 | 99.96 | 99.44 |

| 9 | 51.58 | 56.32 | 48.95 | 63.64 | 100 | 100 | 100 | 100 |

| 10 | 71.64 | 83.48 | 86.83 | 61.32 | 61.83 | 88.54 | 100 | 99.72 |

| 11 | 90.22 | 90.51 | 96.17 | 78.29 | 83.24 | 97.42 | 100 | 100 |

| 12 | 73.46 | 78.78 | 79.78 | 45.29 | 56.86 | 97.93 | 100 | 99.84 |

| 13 | 97.61 | 97.91 | 98.61 | 88.44 | 97.14 | 99.66 | 100 | 100 |

| 14 | 97.99 | 98.19 | 98.9 | 89.99 | 93.34 | 100 | 100 | 99.8 |

| 15 | 94.93 | 96.45 | 88.53 | 56.28 | 70.36 | 94.88 | 100 | 99.76 |

| 16 | 78.11 | 79.56 | 74.67 | 98.89 | 95.7 | 97.84 | 100 | 99.96 |

| Kappa Coeff | 86.65 | 89.21 | 90.71 | 62.5 | 72.92 | 95.16 | 96.42 | 97.1 |

| CLASS | SVM-DMP [31] | SRC-DMP [31] | JSRC-DMP [31] | RAW [32] | MNF [32] | VS-SVM [32] | EDP-AL | PCAL |

|---|---|---|---|---|---|---|---|---|

| 1 | 93.77 | 84.41 | 87.95 | 81.99 | 84.86 | 92.12 | 99.68 | 98.2 |

| 2 | 97.35 | 97.09 | 97.89 | 94.22 | 84.5 | 99.56 | 98.4 | 100 |

| 3 | 65.04 | 56.76 | 61.9 | 68.11 | 74.32 | 85.65 | 98.72 | 99.48 |

| 4 | 93.7 | 90.64 | 93.75 | 79.92 | 75.06 | 98.24 | 97.97 | 99.52 |

| 5 | 72.91 | 83.9 | 89.9 | 97.94 | 99.55 | 99.7 | 97.49 | 99.84 |

| 6 | 81.84 | 64.12 | 71.66 | 65.43 | 78.58 | 94.43 | 97.01 | 99.68 |

| 7 | 65.28 | 75.05 | 77.43 | 67.85 | 82.72 | 90.45 | 96.53 | 100 |

| 8 | 89.35 | 72.21 | 79.21 | 67.79 | 78.9 | 92.34 | 96.05 | 100 |

| 9 | 69.03 | 84.02 | 89.21 | 100 | 100 | 100 | 95.57 | 100 |

| Kappa Coeff | 86.7 | 80.28 | 84.65 | 76.76 | 77.89 | 94.72 | 95.87 | 97.72 |

| Methodology (training percentage for IP and PU) | Overall accuracy (%), Indian Pines (IP) | Overall accuracy (%), Pavia University (PU) |

|---|---|---|

| MPM-LMP [33] 10.00% (IP), 0.68% (PU) | 94.76 | 85.78 |

| AHERF [34] 2.50% (IP), 3.00% (PU) | 93.67 | 98.09 |

| LORSAL-MILL [33] 10.00% (IP), 0.68% (PU) | 92.72 | 85.57 |

| AHERF [34] 3.00% (IP), 2.50% (PU) | 93.58 | 97.17 |

| LORSAL [33] 10.00% (IP), 0.68% (PU) | 82.6 | 85.42 |

| SVM [33] 10.00% (IP), 0.68% (PU) | 80.56 | 80.99 |

| AHERF [34] 1.50% (IP), 0.50% (PU) | 87.93 | 87.81 |

| PCAL | 97.6 | 98.48 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yadav, C.S.; Pradhan, M.K.; Gangadharan, S.M.P.; Chaudhary, J.K.; Singh, J.; Khan, A.A.; Haq, M.A.; Alhussen, A.; Wechtaisong, C.; Imran, H.; et al. Multi-Class Pixel Certainty Active Learning Model for Classification of Land Cover Classes Using Hyperspectral Imagery. Electronics 2022, 11, 2799. https://doi.org/10.3390/electronics11172799
