A Reinforcement Learning-Based Routing for Real-Time Multimedia Traffic Transmission over Software-Defined Networking

Abstract

1. Introduction

2. Related Work

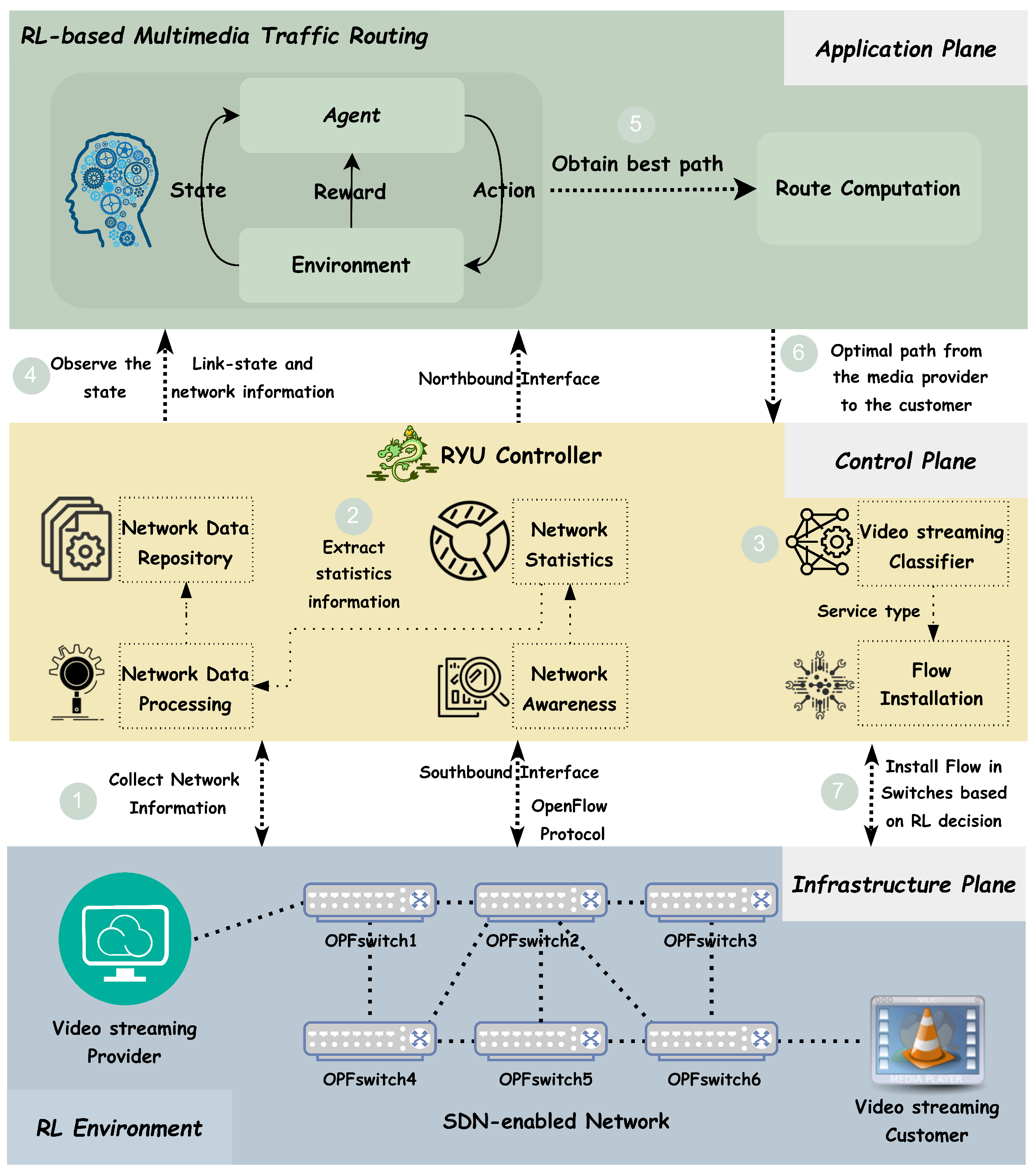

3. RL-Based Multimedia Traffic Routing Architecture

3.1. Architecture and Components

3.1.1. Infrastructure Plane

3.1.2. Control Plane

3.1.3. Application Plane

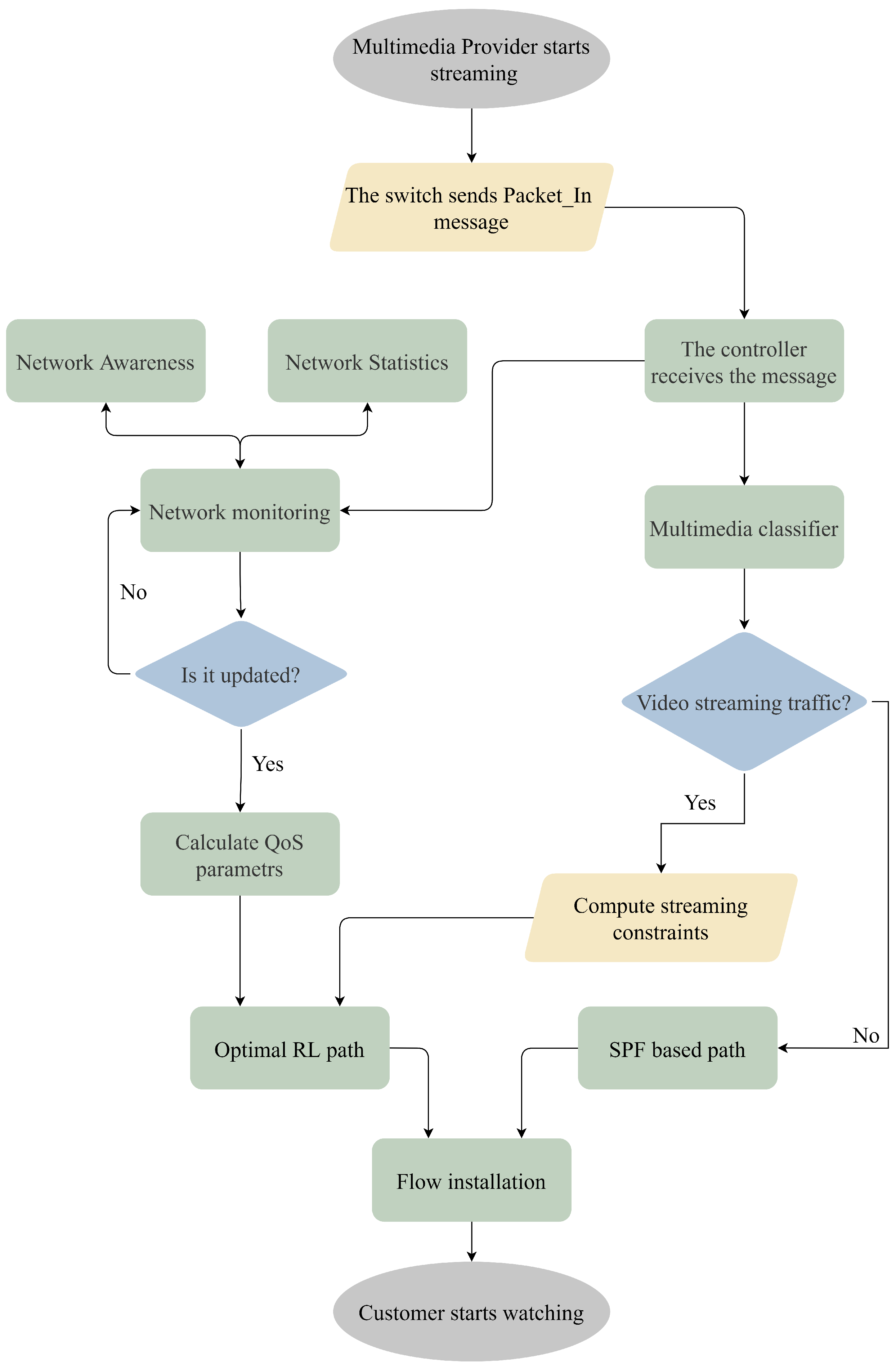

3.2. Process Description

4. RL-Based Decision Making Solution

4.1. Problem Domain

4.2. RL-Based Solution

4.2.1. State Space

4.2.2. Action Space

4.2.3. Exploration-Exploitation Strategy

4.2.4. Reward Function
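As an illustration only, a QoS-oriented reward for choosing a link could combine min-max-normalized link metrics. The sketch below assumes three per-link metrics (available bandwidth, delay, and packet loss) and placeholder weights. The helper names `min_max` and `link_reward`, the weights, and the bounds are all hypothetical and are not taken from this work.

```python
def min_max(value, lo, hi):
    """Scale value into [0, 1] given observed bounds; 0.0 if the range is empty."""
    if hi == lo:
        return 0.0
    return (value - lo) / (hi - lo)


def link_reward(bandwidth, delay, loss, bounds, weights=(0.5, 0.3, 0.2)):
    """Hypothetical QoS reward for one candidate link.

    bounds: {"bandwidth": (min, max), "delay": (min, max), "loss": (min, max)}
    weights: illustrative placeholders, not tuned or published values.
    Higher bandwidth raises the reward; higher delay and loss lower it.
    """
    w_bw, w_delay, w_loss = weights
    return (w_bw * min_max(bandwidth, *bounds["bandwidth"])
            - w_delay * min_max(delay, *bounds["delay"])
            - w_loss * min_max(loss, *bounds["loss"]))


# Hypothetical bounds observed across all candidate links.
bounds = {"bandwidth": (0.0, 100.0), "delay": (1.0, 21.0), "loss": (0.0, 0.1)}
print(link_reward(100.0, 1.0, 0.0, bounds))   # best observed link: 0.5
print(link_reward(0.0, 21.0, 0.1, bounds))    # worst observed link: -0.5
```

Normalizing first keeps metrics with very different units (Mbit/s, ms, loss ratio) on a common [0, 1] scale, so the weights alone express their relative importance.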

4.3. RL-Based Multimedia Traffic Routing Algorithm

Algorithm 1: Q-Learning-based Multimedia Traffic Routing
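A minimal tabular Q-learning sketch of hop-by-hop route selection follows. It assumes that a state is the switch currently holding the flow, an action is the choice of next-hop neighbour, exploration is ε-greedy, and each link already carries a scalar reward (here simply a negative link cost). The function names, the arrival bonus, the toy topology, and all parameter values are hypothetical illustrations, not the settings of Algorithm 1.

```python
import random
from collections import defaultdict


def q_learning_route(graph, src, dst, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.2, max_steps=50, arrival_bonus=10.0, seed=0):
    """Learn Q-values for forwarding from src to dst (hypothetical sketch).

    graph maps each node to {neighbour: link_reward}; link rewards are
    assumed to be precomputed scalars (here, negative link costs).
    """
    rng = random.Random(seed)
    q = defaultdict(float)  # (current node, next hop) -> estimated return

    for _ in range(episodes):
        node = src
        for _ in range(max_steps):
            neighbours = list(graph[node])
            # Epsilon-greedy: explore with probability epsilon, else exploit.
            if rng.random() < epsilon:
                nxt = rng.choice(neighbours)
            else:
                nxt = max(neighbours, key=lambda n: q[(node, n)])

            reward = graph[node][nxt]
            if nxt == dst:
                # Destination is terminal: no future value to bootstrap from.
                target = reward + arrival_bonus
            else:
                best_next = max(q[(nxt, n)] for n in graph[nxt])
                target = reward + gamma * best_next

            # Standard Q-learning temporal-difference update.
            q[(node, nxt)] += alpha * (target - q[(node, nxt)])
            node = nxt
            if node == dst:
                break
    return q


def greedy_path(graph, q, src, dst, max_hops=50):
    """Follow the highest-valued next hop until dst (or a hop limit)."""
    path, node = [src], src
    while node != dst and len(path) <= max_hops:
        node = max(graph[node], key=lambda n: q[(node, n)])
        path.append(node)
    return path


# Toy four-switch topology: the A-C link is costly, so A-B-D should win.
graph = {
    "A": {"B": -1.0, "C": -5.0},
    "B": {"A": -1.0, "D": -1.0},
    "C": {"A": -5.0, "D": -1.0},
    "D": {"B": -1.0, "C": -1.0},
}
q = q_learning_route(graph, "A", "D")
print(greedy_path(graph, q, "A", "D"))
```

Treating the destination as terminal keeps the learned values bounded. An SDN controller could then install the greedy path as flow rules; that step is not shown here.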

5. Evaluation

5.1. Test-Bed Preparation

5.2. QoE Metrics Measurements

5.3. Learning Parameters Settings

5.4. Evaluation Scenarios

6. Results and Discussions

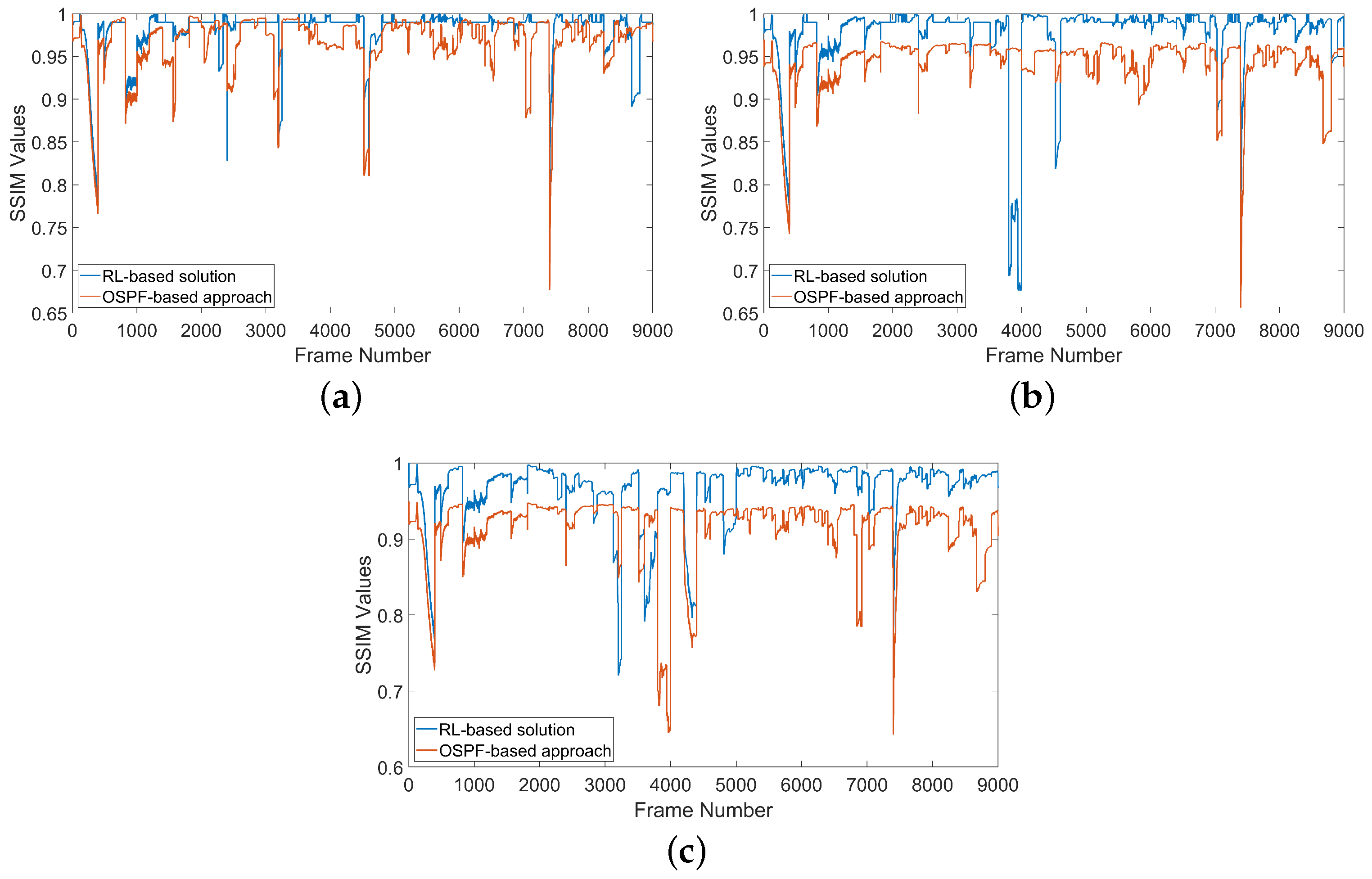

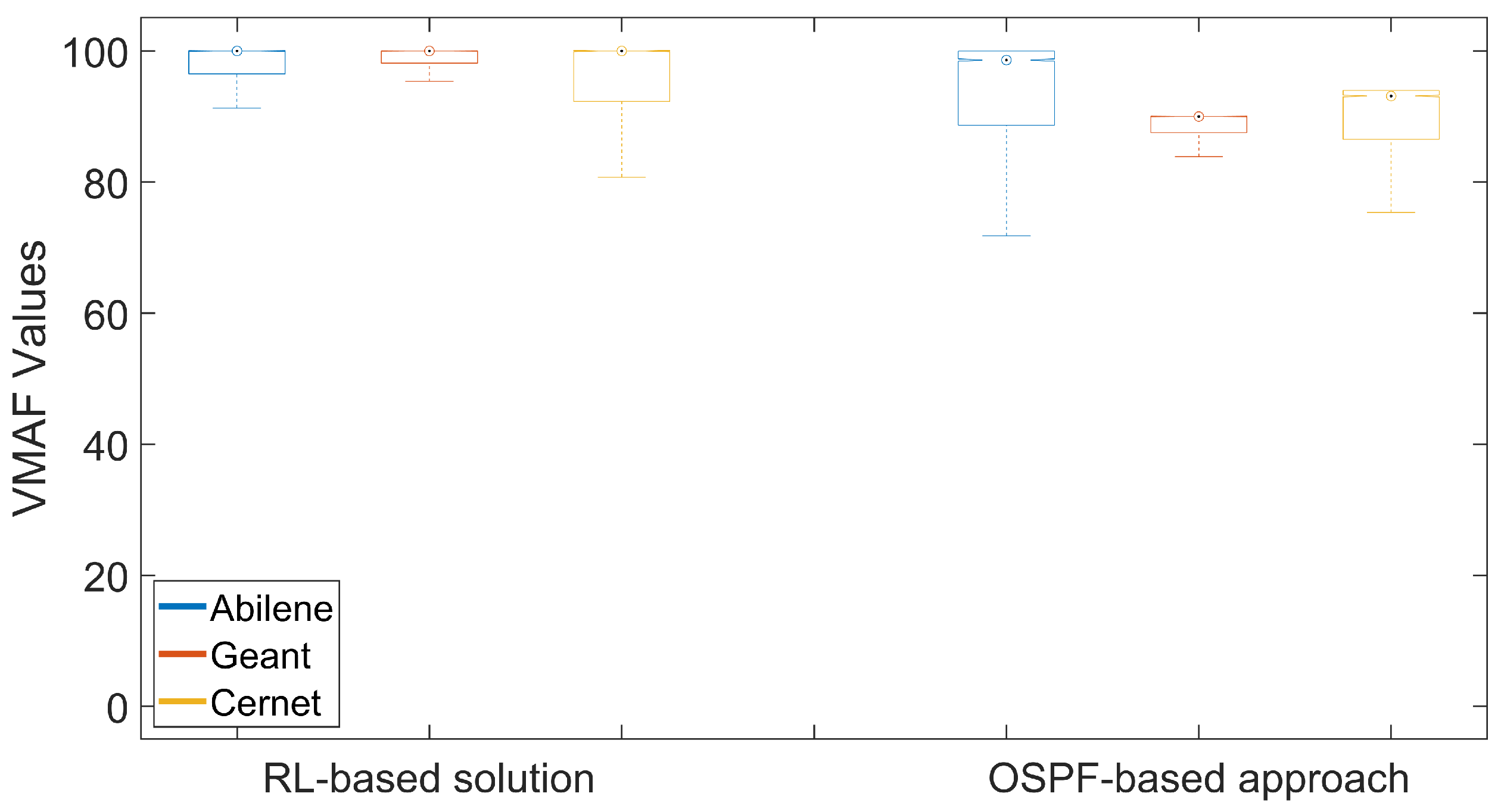

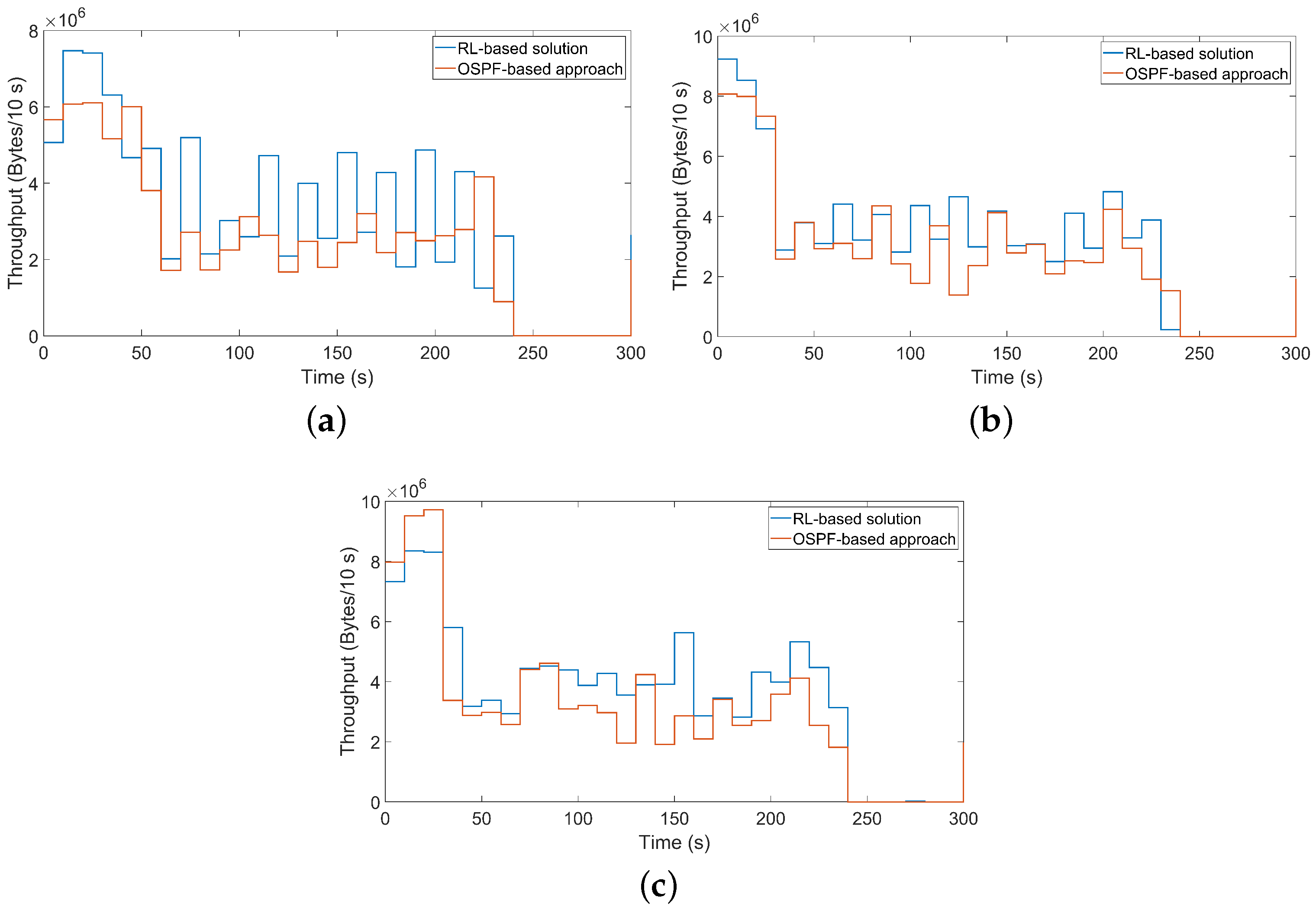

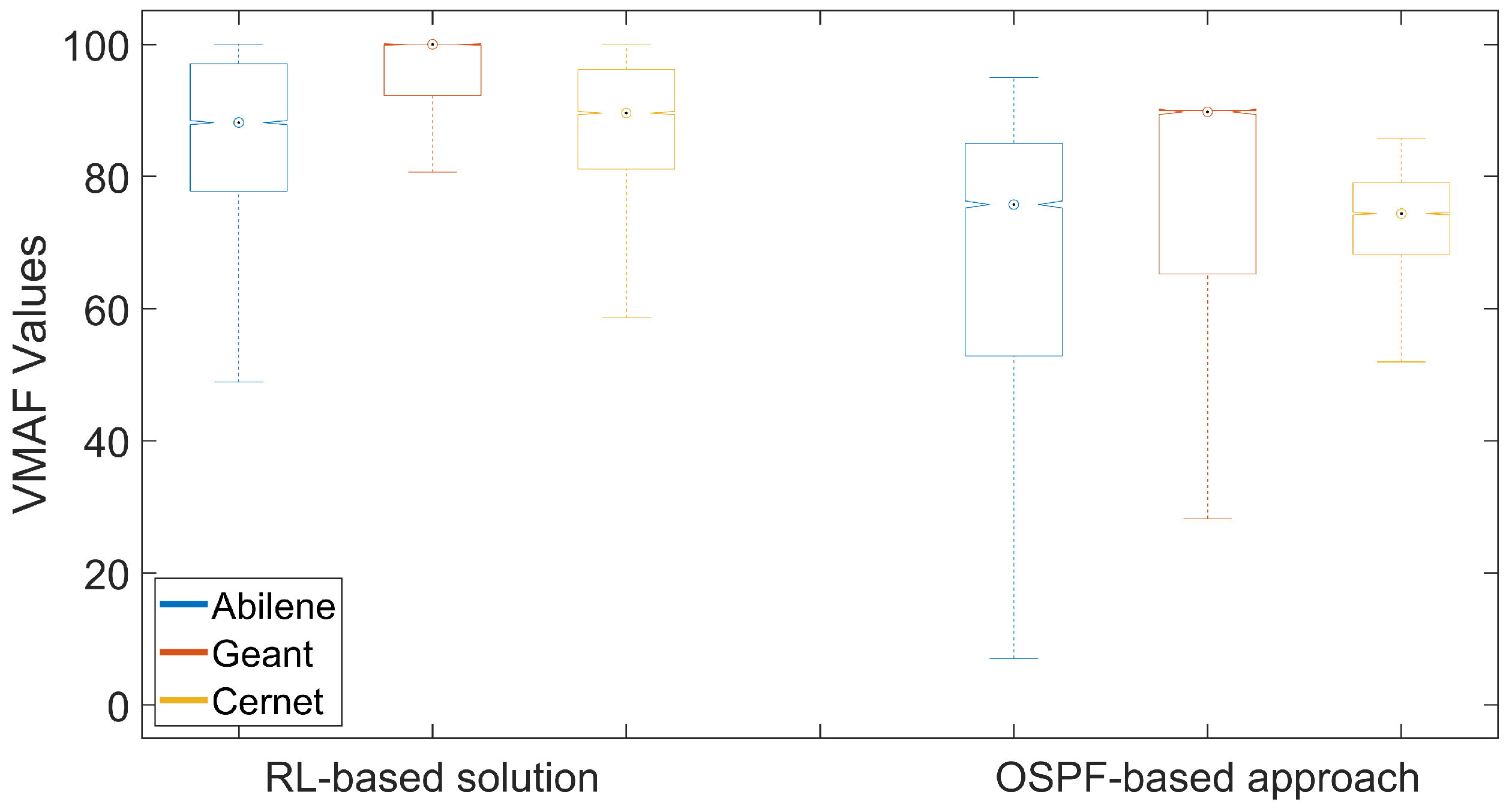

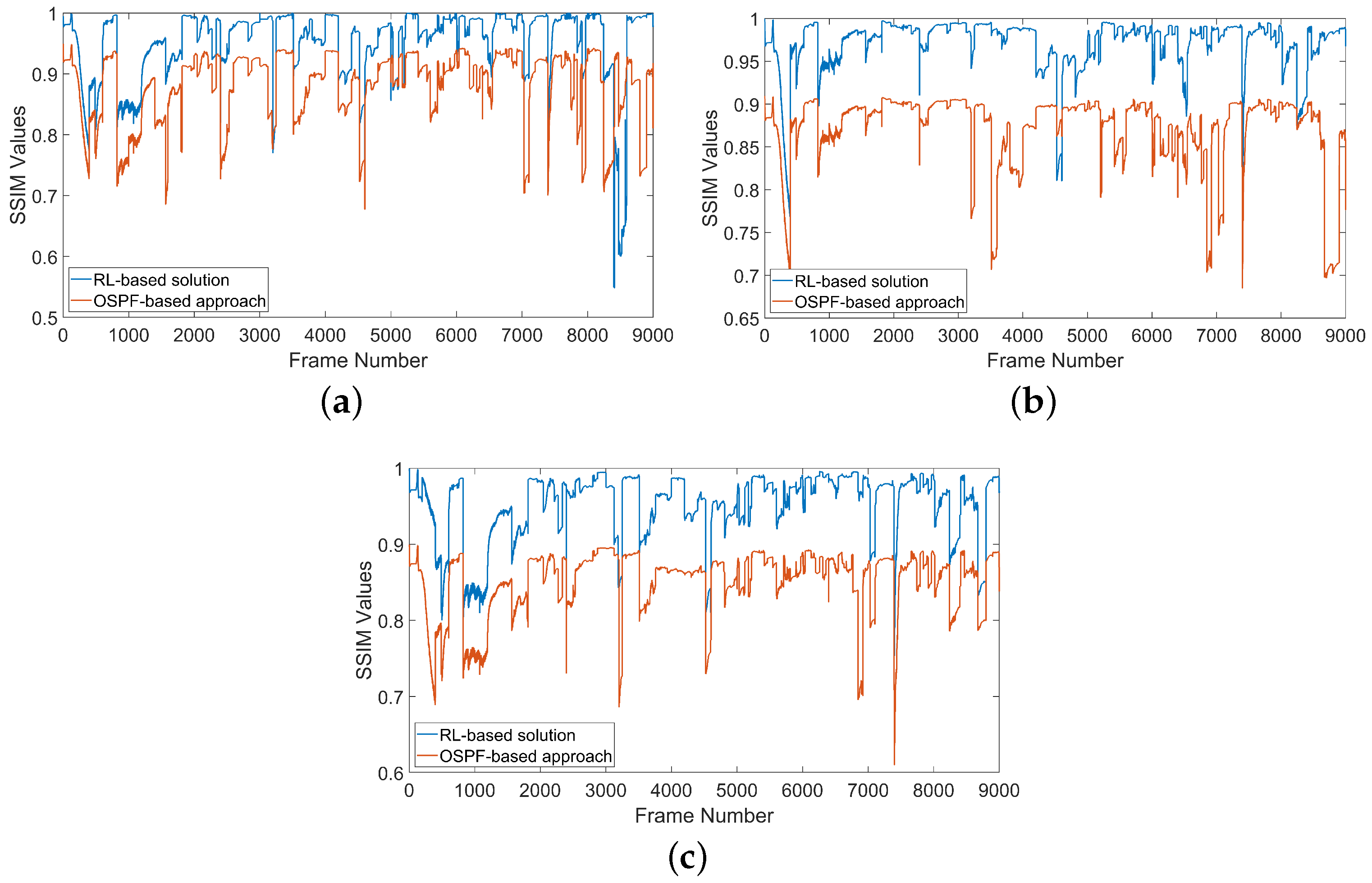

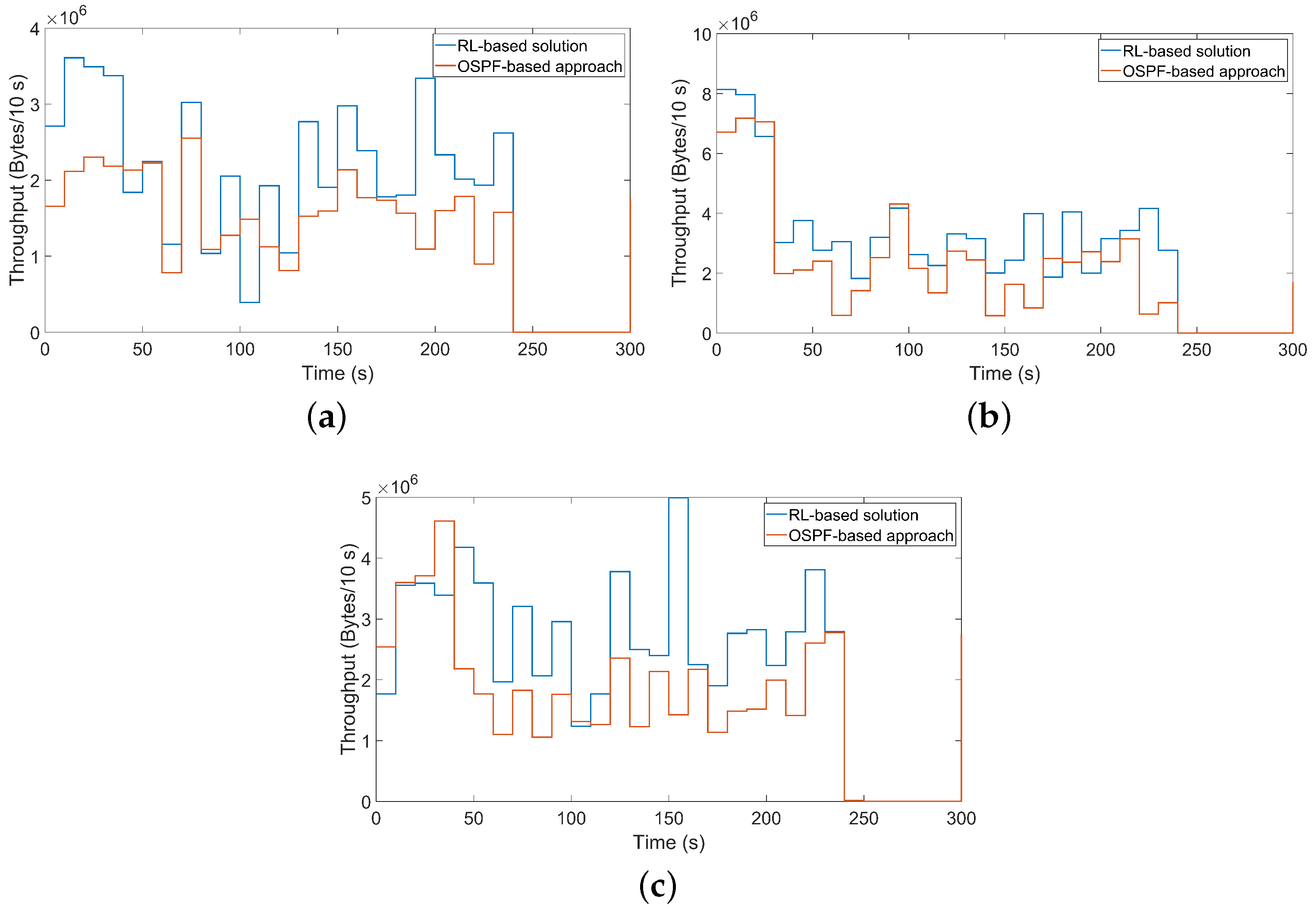

6.1. The Impact of Low Traffic Load on Client Satisfaction

6.2. The Impact of High Traffic Load on Client Satisfaction

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Nodes | Links |
|---|---|---|
| Cernet (large-scale topology) | 36 | 48 |
| Geant (middle-scale topology) | 23 | 37 |
| Abilene (small-scale topology) | 12 | 20 |
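The relative density of the three topologies can be compared through their average node degree, 2 × links / nodes. This assumes the link counts in the table are undirected links between the listed nodes; the dictionary and function names are illustrative.

```python
# (nodes, links) per topology, copied from the table.
TOPOLOGIES = {"Cernet": (36, 48), "Geant": (23, 37), "Abilene": (12, 20)}


def average_degree(nodes, links):
    """Mean degree of an undirected graph: every link contributes 2 endpoints."""
    return 2 * links / nodes


for name, (nodes, links) in TOPOLOGIES.items():
    print(f"{name}: average degree {average_degree(nodes, links):.2f}")
```

This gives about 2.67 for Cernet, 3.22 for Geant, and 3.33 for Abilene, so the largest topology is also the most sparsely connected of the three.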

| MOS | VMAF | SSIM |
|---|---|---|
| 5 (Excellent) | 80–100 | >0.99 |
| 4 (Good) | 60–79 | ≥0.95 & <0.99 |
| 3 (Fair) | 40–59 | ≥0.88 & <0.95 |
| 2 (Poor) | 20–39 | ≥0.5 & <0.88 |
| 1 (Bad) | <20 | <0.5 |
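The MOS bands in the table can be applied mechanically to measured scores. In this sketch the helper `to_mos` is hypothetical. Fractional VMAF scores that fall between the listed integer bands (for example 79.5) are assigned to the lower band, and an SSIM of exactly 0.99, which the table's `>0.99` and `<0.99` bounds leave unassigned, is treated as Excellent; both choices are assumptions.

```python
# Lower band edges from the MOS table; a score at or above an edge gets that MOS.
VMAF_BANDS = ((80.0, 5), (60.0, 4), (40.0, 3), (20.0, 2))
SSIM_BANDS = ((0.99, 5), (0.95, 4), (0.88, 3), (0.5, 2))


def to_mos(score, bands):
    """Return the MOS of the first band whose lower edge the score reaches, else 1 (Bad)."""
    for lower_edge, mos in bands:
        if score >= lower_edge:
            return mos
    return 1


print(to_mos(85.0, VMAF_BANDS))   # 5 (Excellent)
print(to_mos(0.93, SSIM_BANDS))   # 3 (Fair)
```

Checking the bands from the highest edge downwards means each score matches exactly one MOS level without needing explicit upper bounds.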

| Approach | Metric / topology (client–server pair) | Abilene (H5–H1) | Abilene (H10–H4) | Geant (H15–H6) | Geant (H18–H9) | Cernet (H7–H35) | Cernet (H22–H19) |
|---|---|---|---|---|---|---|---|
| OSPF-based approach | Packets dropped (out of 127,551) | 39 | 73 | 84 | 117 | 128 | 57 |
| OSPF-based approach | Average packet jitter (ms) | 0.008 | 0.017 | 0.013 | 0.015 | 0.022 | 0.004 |
| RL-based solution | Packets dropped (out of 127,551) | 45 | 41 | 29 | 1 | 28 | 51 |
| RL-based solution | Average packet jitter (ms) | 0.010 | 0.015 | 0.018 | 0.017 | 0.008 | 0.019 |
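Raw drop counts are easier to compare as loss ratios. The sketch below converts the counts in the table (each out of 127,551 packets per client–server session, as the row labels state) into percentages; the variable and function names are illustrative.

```python
SENT = 127_551  # packets per client-server session, from the table
PAIRS = ["H5–H1", "H10–H4", "H15–H6", "H18–H9", "H7–H35", "H22–H19"]
DROPPED = {
    "OSPF-based": [39, 73, 84, 117, 128, 57],
    "RL-based": [45, 41, 29, 1, 28, 51],
}


def loss_percent(dropped, sent=SENT):
    """Packet loss ratio as a percentage of packets sent."""
    return 100.0 * dropped / sent


for approach, counts in DROPPED.items():
    per_pair = ", ".join(f"{p}: {loss_percent(c):.3f}%" for p, c in zip(PAIRS, counts))
    print(f"{approach} ({sum(counts)} dropped in total): {per_pair}")
```

Summed over the six sessions, OSPF drops 498 packets and the RL-based solution 195, and no session loses more than about 0.1% of its packets under either approach. Per pair the comparison is mixed: for H5–H1 the RL-based solution drops 45 packets against 39.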

| Approach | Metric / topology (client–server pair) | Abilene (H5–H1) | Abilene (H10–H4) | Geant (H15–H6) | Geant (H18–H9) | Cernet (H7–H35) | Cernet (H22–H19) |
|---|---|---|---|---|---|---|---|
| OSPF-based approach | Packets dropped (out of 178,572) | 1176 | 193 | 1264 | 1809 | 196 | 641 |
| OSPF-based approach | Average packet jitter (ms) | 0.009 | 0.010 | 0.017 | 0.011 | 0.017 | 0.011 |
| RL-based solution | Packets dropped (out of 178,572) | 826 | 123 | 100 | 122 | 146 | 297 |
| RL-based solution | Average packet jitter (ms) | 0.022 | 0.026 | 0.025 | 0.007 | 0.008 | 0.008 |
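For the sessions with 178,572 packets each, the relative reduction in dropped packets, 100 × (OSPF − RL) / OSPF, summarizes the comparison per client–server pair. The counts are copied from the table; the names below are illustrative.

```python
PAIRS = ["H5–H1", "H10–H4", "H15–H6", "H18–H9", "H7–H35", "H22–H19"]
OSPF_DROPPED = [1176, 193, 1264, 1809, 196, 641]  # out of 178,572 per session
RL_DROPPED = [826, 123, 100, 122, 146, 297]


def relative_reduction(baseline, candidate):
    """Percent of the baseline's dropped packets avoided (negative if candidate is worse)."""
    return 100.0 * (baseline - candidate) / baseline


for pair, b, c in zip(PAIRS, OSPF_DROPPED, RL_DROPPED):
    print(f"{pair}: {relative_reduction(b, c):.1f}% fewer drops")
overall = relative_reduction(sum(OSPF_DROPPED), sum(RL_DROPPED))
print(f"Overall: {overall:.1f}% fewer drops")
```

Computed this way, the RL-based solution drops fewer packets for every pair, from about 25.5% (H7–H35) to about 93.3% (H18–H9) fewer, and about 69.4% fewer overall. The jitter rows do not follow the same pattern: the RL-based solution has higher average jitter for H5–H1, H10–H4, and H15–H6 and lower for the other three pairs.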

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al Jameel, M.; Kanakis, T.; Turner, S.; Al-Sherbaz, A.; Bhaya, W.S. A Reinforcement Learning-Based Routing for Real-Time Multimedia Traffic Transmission over Software-Defined Networking. Electronics 2022, 11, 2441. https://doi.org/10.3390/electronics11152441

Al Jameel M, Kanakis T, Turner S, Al-Sherbaz A, Bhaya WS. A Reinforcement Learning-Based Routing for Real-Time Multimedia Traffic Transmission over Software-Defined Networking. Electronics. 2022; 11(15):2441. https://doi.org/10.3390/electronics11152441

Chicago/Turabian Style: Al Jameel, Mohammed, Triantafyllos Kanakis, Scott Turner, Ali Al-Sherbaz, and Wesam S. Bhaya. 2022. "A Reinforcement Learning-Based Routing for Real-Time Multimedia Traffic Transmission over Software-Defined Networking" Electronics 11, no. 15: 2441. https://doi.org/10.3390/electronics11152441

APA Style: Al Jameel, M., Kanakis, T., Turner, S., Al-Sherbaz, A., & Bhaya, W. S. (2022). A Reinforcement Learning-Based Routing for Real-Time Multimedia Traffic Transmission over Software-Defined Networking. Electronics, 11(15), 2441. https://doi.org/10.3390/electronics11152441