Planning Collision-Free Robot Motions in a Human–Robot Shared Workspace via Mixed Reality and Sensor-Fusion Skeleton Tracking

Abstract

1. Introduction

2. Problem Statement and Contributions

3. Materials and Methods

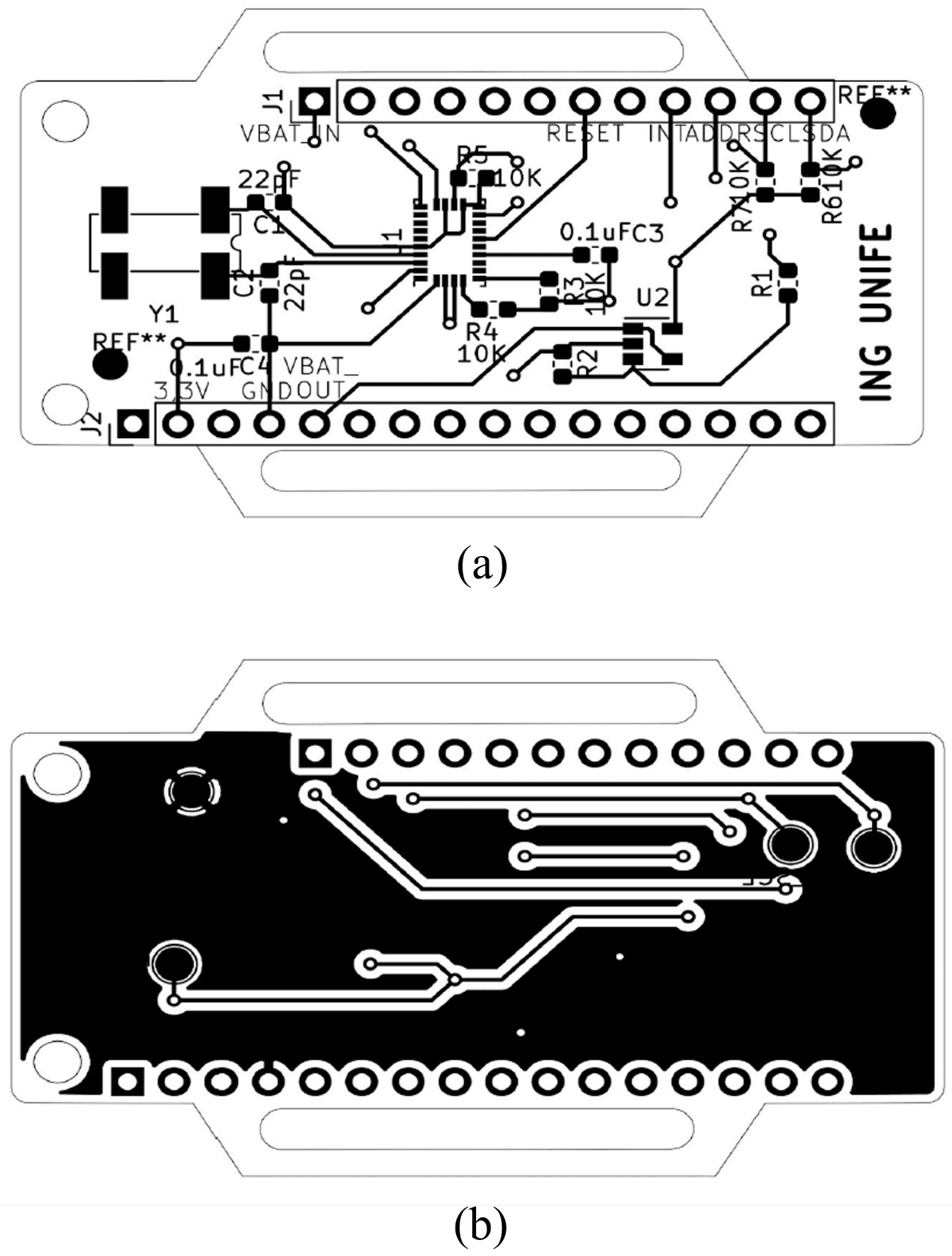

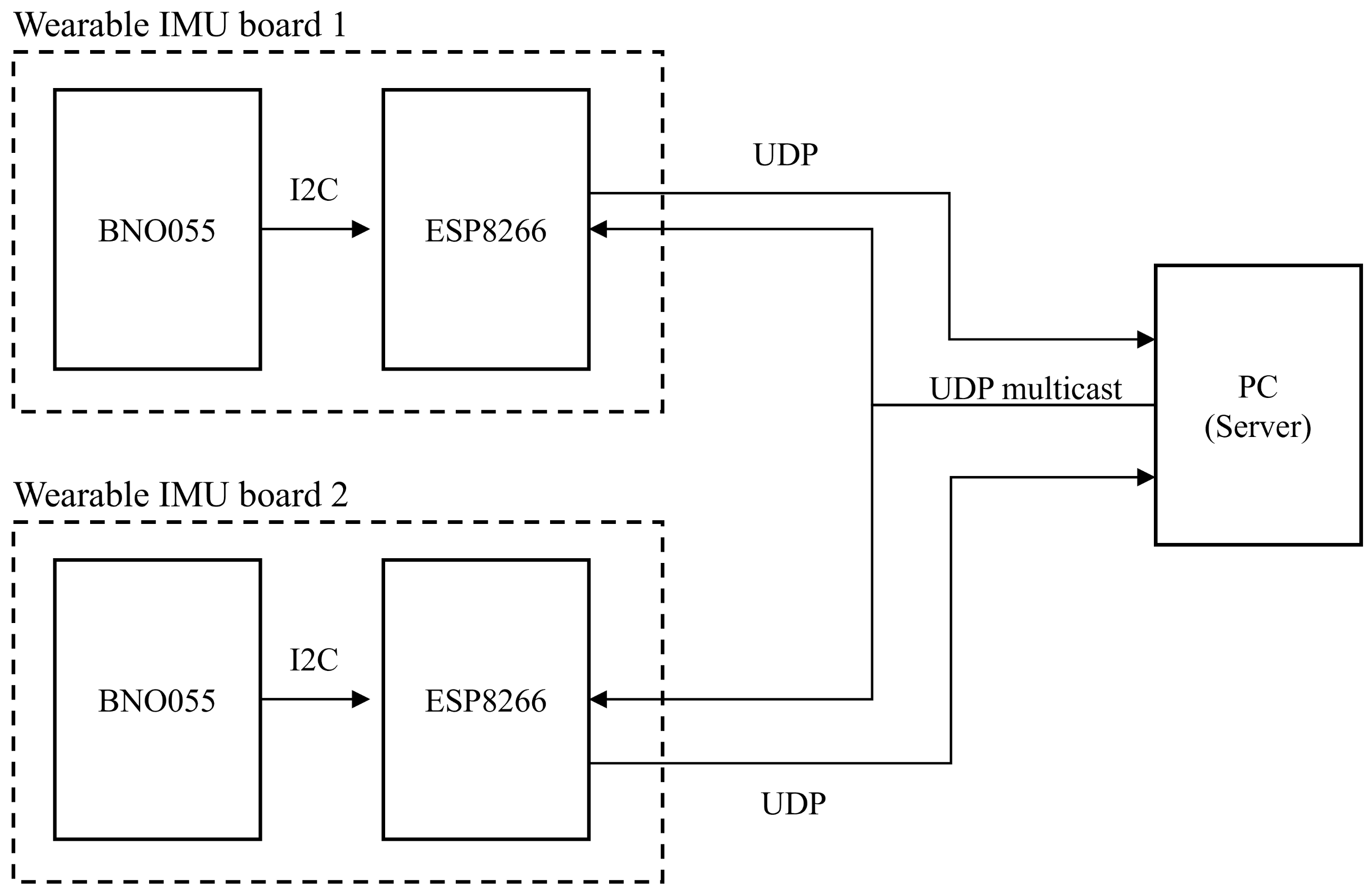

3.1. Custom-Made Wearable Devices

3.2. Sensor-Fusion Algorithm

Alternative Formulations

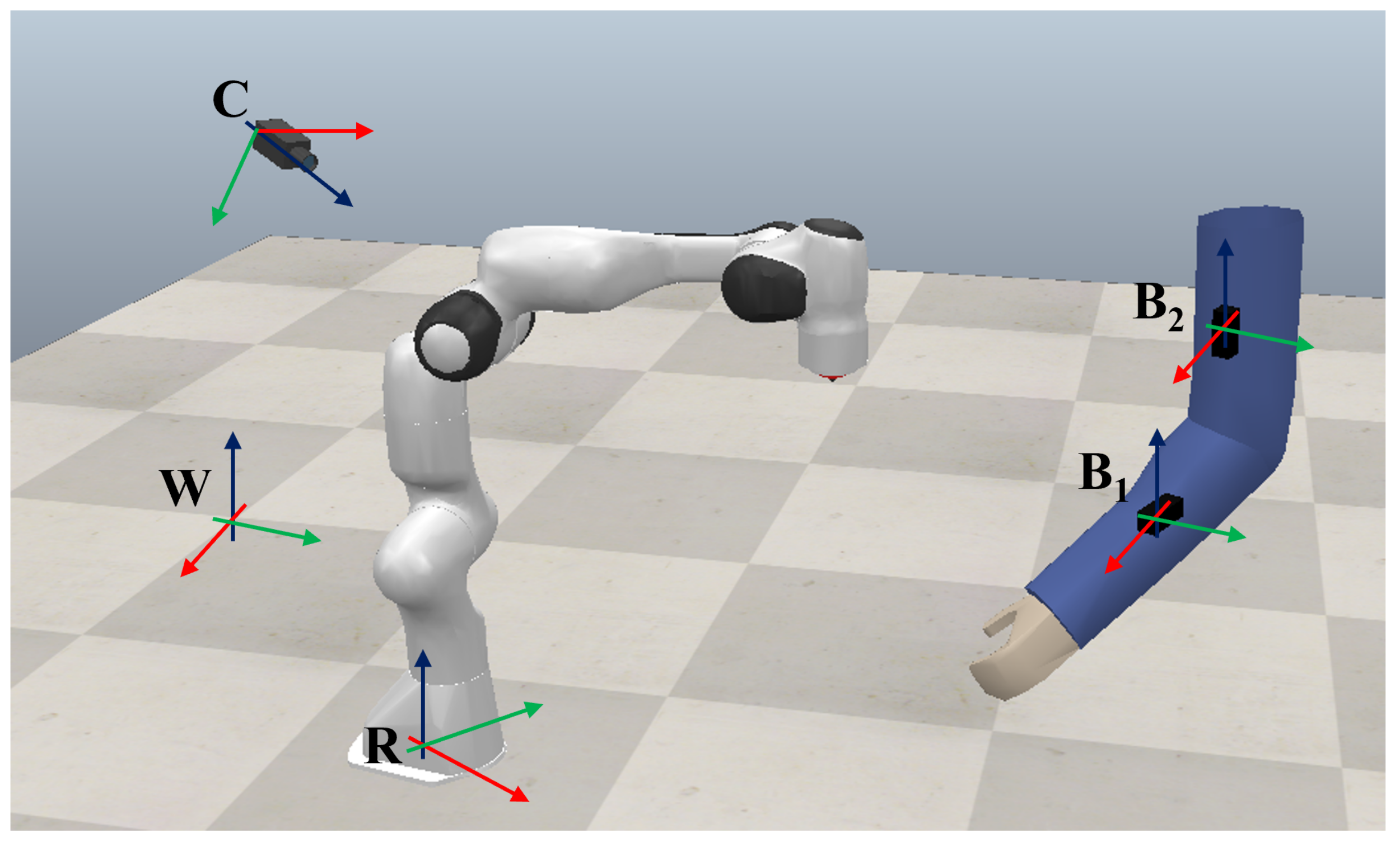

3.3. Mixed-Reality Motion Planner

The simulation framework, CoppeliaSim (formerly V-REP [23]), provides:

- 3D and kinematic robot models, including a dedicated plugin for inverse kinematics calculations;

- Lua and C++ scripting APIs to create custom scripts for the scene objects; and

- remote interfacing, including a ROS plugin to connect the simulation to ROS publishers and subscribers.

4. Experiments

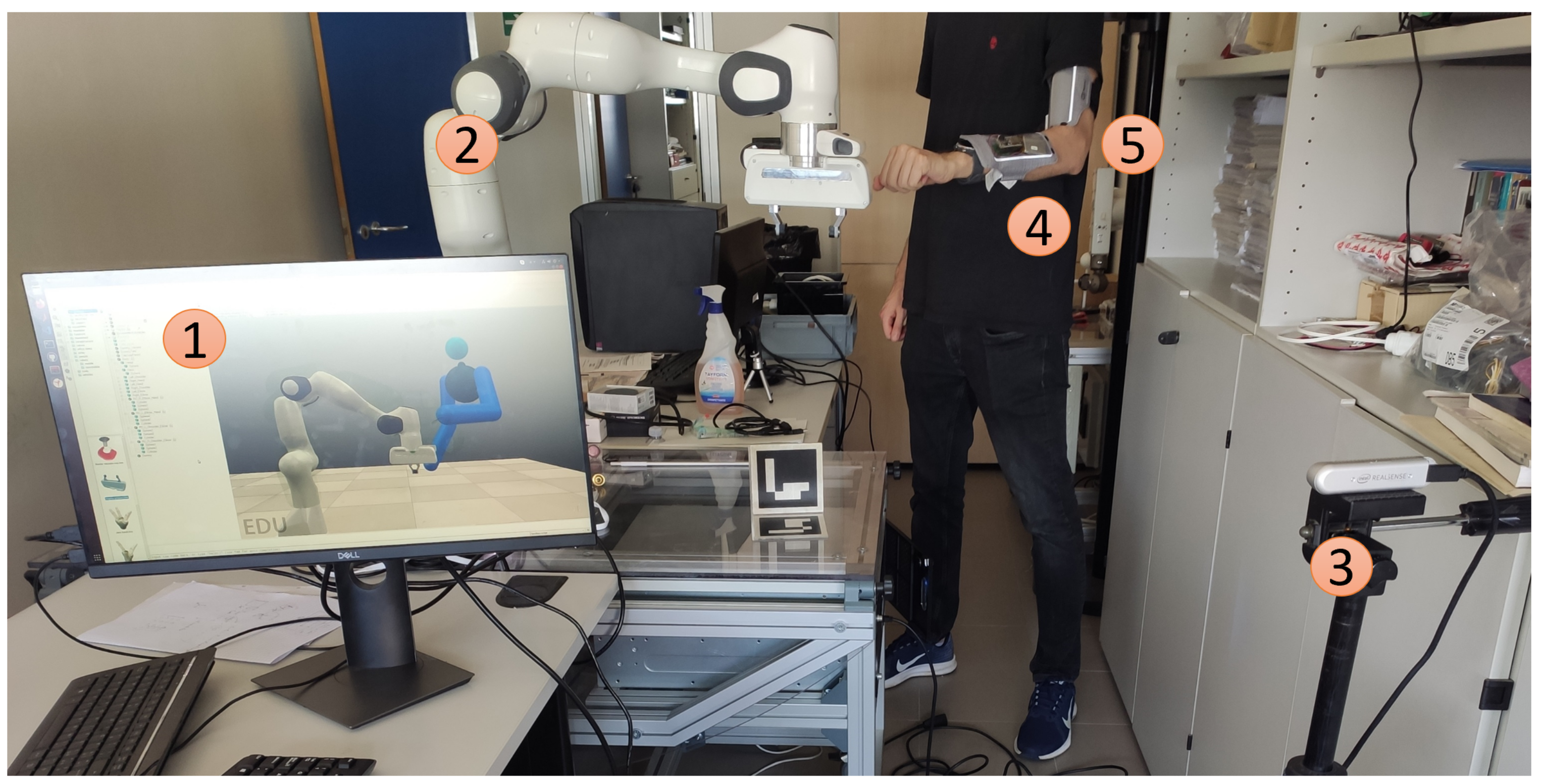

4.1. Experimental Setup

4.2. Workcell Registration

- The transformation matrix from frame R to frame W can be found using the three-point calibration method, as described, e.g., in [28].
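The following is a minimal Python sketch of one common three-point variant, given for illustration only and not necessarily the exact procedure of [28]. It assumes that the three points are the origin of W, a point on the positive x-axis of W, and a point in the xy-plane of W on the positive y side, all measured in R coordinates (for instance, by touching them with the robot tool tip). The function names are hypothetical. The points define the pose of W expressed in R, and inverting that rigid transform gives the matrix that maps R coordinates into W coordinates.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical helper names; the point convention (origin, +x axis, xy-plane)
# is an assumption made for this sketch.
Vec3 = Tuple[float, float, float]
Mat4 = List[List[float]]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(a: Sequence[float]) -> Vec3:
    n = math.sqrt(_dot(a, a))
    if n < 1e-9:
        raise ValueError("degenerate calibration points (coincident or collinear)")
    return (a[0] / n, a[1] / n, a[2] / n)


def frame_from_three_points(p_origin: Sequence[float], p_x: Sequence[float],
                            p_xy: Sequence[float]) -> Mat4:
    """Homogeneous pose of the calibrated frame W expressed in the measuring frame R."""
    x = _normalize(_sub(p_x, p_origin))                 # x axis: origin -> p_x
    z = _normalize(_cross(x, _sub(p_xy, p_origin)))    # normal to the xy-plane
    y = _cross(z, x)                                   # unit length, completes the right-handed frame
    return [[x[0], y[0], z[0], p_origin[0]],
            [x[1], y[1], z[1], p_origin[1]],
            [x[2], y[2], z[2], p_origin[2]],
            [0.0, 0.0, 0.0, 1.0]]


def invert_rigid(T: Mat4) -> Mat4:
    """Inverse of a rigid transform: [R t; 0 1]^-1 = [R^T, -R^T t; 0 1]."""
    rt = [[T[j][i] for j in range(3)] for i in range(3)]  # rotation transposed
    t = [T[i][3] for i in range(3)]
    ti = [-_dot(rt[i], t) for i in range(3)]
    return [rt[i] + [ti[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def transform_point(T: Mat4, p: Sequence[float]) -> Tuple[float, ...]:
    return tuple(_dot(T[i][:3], p) + T[i][3] for i in range(3))


# Usage: W has its origin at (1, 2, 0) in R, its x axis along +y of R.
T_R_W = frame_from_three_points((1.0, 2.0, 0.0), (1.0, 3.0, 0.0), (0.0, 2.0, 0.0))
T_W_R = invert_rigid(T_R_W)                      # maps R coordinates to W coordinates
print(transform_point(T_W_R, (1.0, 3.0, 0.0)))   # the point on W's x axis
```

Because the three points fix the frame only up to measurement error, they should be well separated: nearly collinear points make the cross product small and the estimated axes sensitive to noise, which is why the sketch rejects degenerate inputs.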

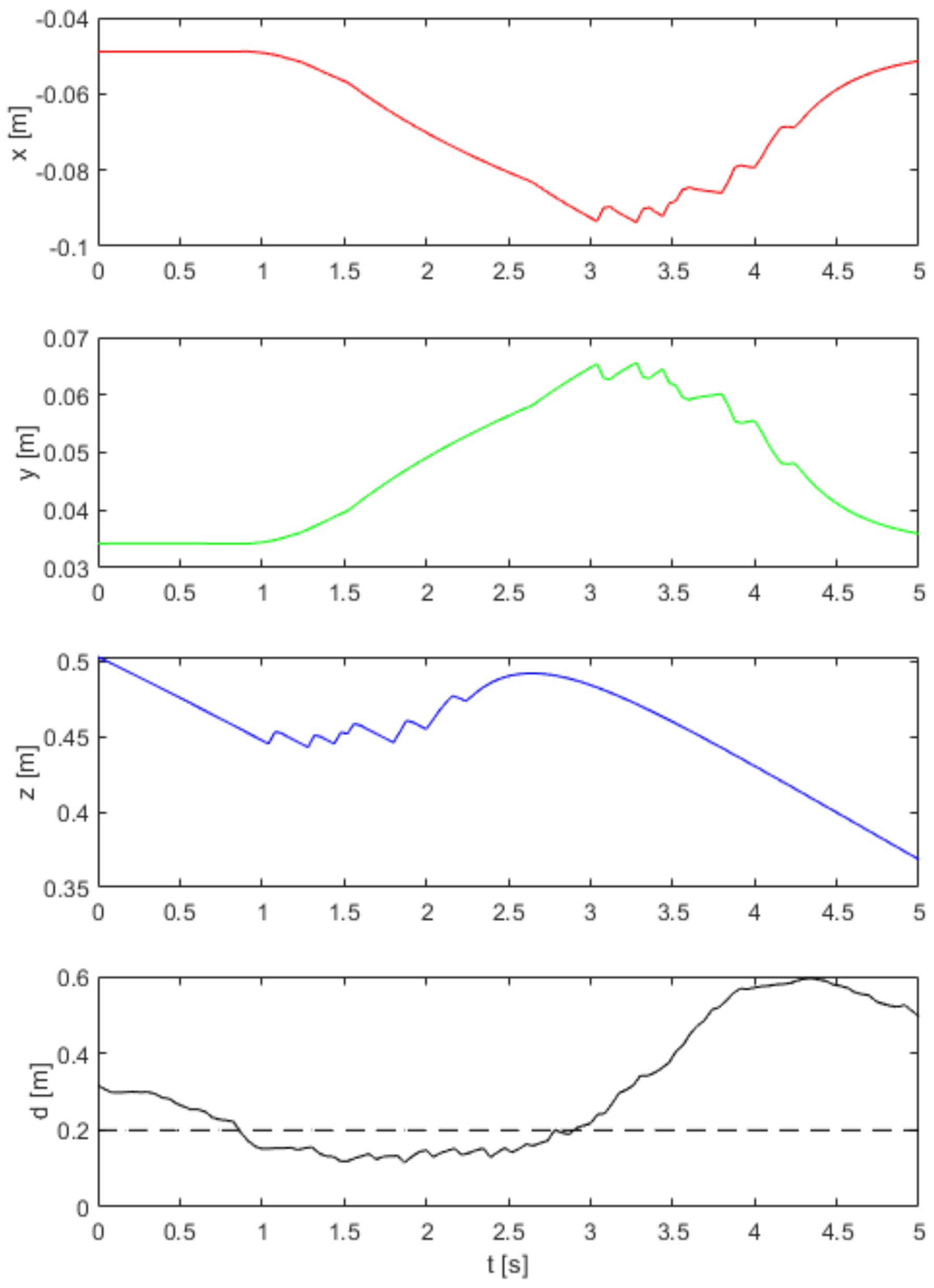

4.3. Results

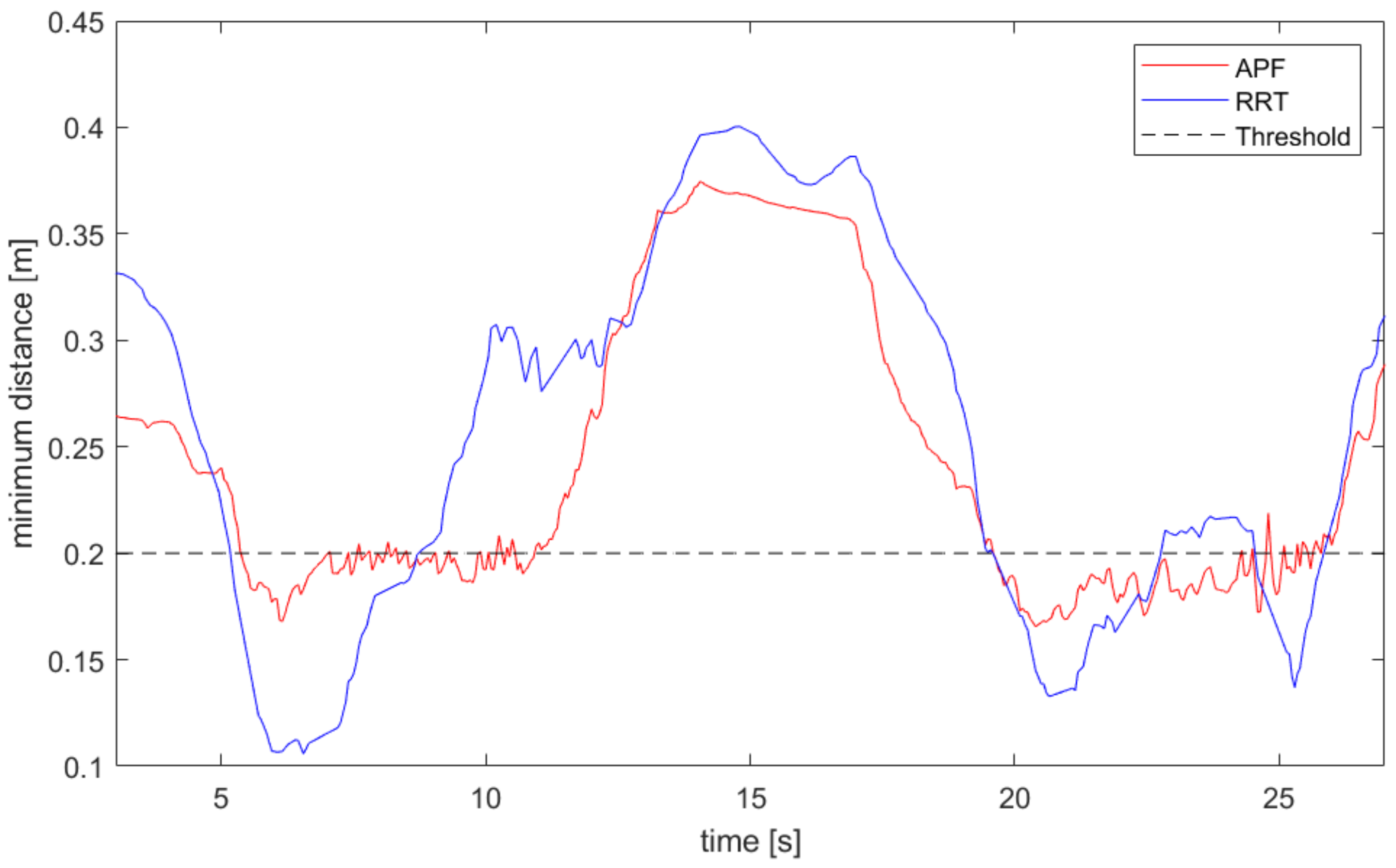

5. Comparative Analyses

5.1. Comparison among Alternative Kalman Filter Formulations

5.2. Comparison between Different Planning Approaches

1. Find a collision-free robot configuration for the target end-effector pose;

2. Plan collision-free robot motions from the current configuration to the goal configuration, using the RRT-Connect algorithm provided by CoppeliaSim's wrapper of the Open Motion Planning Library (OMPL) [29]; and

3. Execute the motion. If the robot has been moving for at least a minimum time and the minimum distance between the human and the robot falls below the distance threshold:
   - a. Stop the robot;
   - b. Restart the procedure from point 1.
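The stop-and-replan procedure can be sketched as a simple control loop. This is a minimal Python sketch, not the CoppeliaSim/OMPL implementation: `solve_goal`, `plan`, and `min_distance` are hypothetical callables standing in for the collision-free goal search, the RRT-Connect planner, and the human–robot distance computation; the configuration is reduced to a single float; and the replanning trigger is assumed to be a human–robot distance below a safety threshold.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Sketch only: all callables are hypothetical stand-ins for IK, OMPL RRT-Connect
# and the skeleton-robot distance; a 1-D configuration keeps the example small.
Config = float


@dataclass
class ReplanParams:
    t_min: float        # minimum motion time before a stop may be triggered [s]
    d_threshold: float  # human-robot distance threshold [m]
    dt: float           # control period [s]
    max_replans: int = 10


def run_with_replanning(
    q_start: Config,
    solve_goal: Callable[[], Config],                # step 1
    plan: Callable[[Config, Config], List[Config]],  # step 2
    min_distance: Callable[[Config, float], float],  # distance at (q, t)
    params: ReplanParams,
) -> Tuple[Config, int]:
    """Return the final configuration and the number of replans performed."""
    q, t, replans = q_start, 0.0, 0
    while True:
        q_goal = solve_goal()           # 1. collision-free goal configuration
        path = plan(q, q_goal)          # 2. collision-free path to the goal
        moving_time = 0.0
        interrupted = False
        for q_next in path:             # 3. execute the motion step by step
            q = q_next
            t += params.dt
            moving_time += params.dt
            if (moving_time >= params.t_min
                    and min_distance(q, t) < params.d_threshold):
                interrupted = True      # 3a. stop the robot
                break
        if not interrupted:
            return q, replans
        replans += 1                    # 3b. restart from step 1
        if replans > params.max_replans:
            raise RuntimeError("too many replans: the human stays too close")


def linear_plan(q0: Config, q1: Config, n: int = 10) -> List[Config]:
    # Hypothetical planner: n equally spaced waypoints from q0 to q1.
    return [q0 + (q1 - q0) * k / n for k in range(1, n + 1)]


def distance(q: Config, t: float) -> float:
    # Hypothetical human who comes close to the robot only around t = 0.5 s.
    return 0.2 if 0.45 < t < 0.55 else 1.0


q_final, n_replans = run_with_replanning(
    0.0, lambda: 1.0, linear_plan, distance,
    ReplanParams(t_min=0.3, d_threshold=0.5, dt=0.1))
print(q_final, n_replans)
```

With the hypothetical human above, the robot stops once around t = 0.5 s and reaches the goal on the second plan. The minimum-time condition presumably serves to keep the loop from stopping and replanning at every control cycle immediately after a new motion starts; the `max_replans` guard is an added assumption that bounds the loop when the human never moves away.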

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

2. Aivaliotis, P.; Aivaliotis, S.; Gkournelos, C.; Kokkalis, K.; Michalos, G.; Makris, S. Power and force limiting on industrial robots for human–robot collaboration. Robot. Comput.-Integr. Manuf. 2019, 59, 346–360.

3. Farsoni, S.; Ferraguti, F.; Bonfè, M. Safety-oriented robot payload identification using collision-free path planning and decoupling motions. Robot. Comput.-Integr. Manuf. 2019, 59, 189–200.

4. Szafir, D. Mediating human–robot interactions with virtual, augmented, and mixed reality. In Proceedings of the International Conference on Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2019; pp. 124–149.

5. Badia, S.B.i.; Silva, P.A.; Branco, D.; Pinto, A.; Carvalho, C.; Menezes, P.; Almeida, J.; Pilacinski, A. Virtual Reality for Safe Testing and Development in Collaborative Robotics: Challenges and Perspectives. Electronics 2022, 11, 1726.

6. Hönig, W.; Milanes, C.; Scaria, L.; Phan, T.; Bolas, M.; Ayanian, N. Mixed reality for robotics. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5382–5387.

7. Andersen, R.S.; Madsen, O.; Moeslund, T.B.; Amor, H.B. Projecting robot intentions into human environments. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 294–301.

8. Vogel, C.; Walter, C.; Elkmann, N. A projection-based sensor system for safe physical human–robot collaboration. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 5359–5364.

9. Casalino, A.; Guzman, S.; Zanchettin, A.M.; Rocco, P. Human pose estimation in presence of occlusion using depth camera sensors, in human–robot coexistence scenarios. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–7.

10. Nguyen, M.H.; Hsiao, C.C.; Cheng, W.H.; Huang, C.C. Practical 3D human skeleton tracking based on multi-view and multi-Kinect fusion. Multimed. Syst. 2022, 28, 529–552.

11. Servi, M.; Mussi, E.; Profili, A.; Furferi, R.; Volpe, Y.; Governi, L.; Buonamici, F. Metrological Characterization and Comparison of D415, D455, L515 RealSense Devices in the Close Range. Sensors 2021, 21, 7770.

12. Longo, U.G.; De Salvatore, S.; Sassi, M.; Carnevale, A.; De Luca, G.; Denaro, V. Motion Tracking Algorithms Based on Wearable Inertial Sensor: A Focus on Shoulder. Electronics 2022, 11, 1741.

13. Farsoni, S.; Bonfè, M.; Astolfi, L. A low-cost high-fidelity ultrasound simulator with the inertial tracking of the probe pose. Control Eng. Pract. 2017, 59, 183–193.

14. Gultekin, H.; Dalgıç, Ö.O.; Akturk, M.S. Pure cycles in two-machine dual-gripper robotic cells. Robot. Comput.-Integr. Manuf. 2017, 48, 121–131.

15. Foumani, M.; Gunawan, I.; Smith-Miles, K.; Ibrahim, M.Y. Notes on feasibility and optimality conditions of small-scale multifunction robotic cell scheduling problems with pickup restrictions. IEEE Trans. Ind. Inform. 2014, 11, 821–829.

16. Bosch Sensortec. Intelligent 9-Axis Absolute Orientation Sensor. BNO055 Datasheet. 2014. Available online: https://www.bosch-sensortec.com (accessed on 25 June 2022).

17. Mesquita, J.; Guimarães, D.; Pereira, C.; Santos, F.; Almeida, L. Assessing the ESP8266 WiFi module for the Internet of Things. In Proceedings of the 2018 IEEE 23rd International Conference on Emerging Technologies and Factory Automation (ETFA), Torino, Italy, 4–7 September 2018; Volume 1, pp. 784–791.

18. Assa, A.; Janabi-Sharifi, F. A Kalman Filter-Based Framework for Enhanced Sensor Fusion. IEEE Sens. J. 2015, 15, 3281–3292.

19. Farsoni, S.; Landi, C.T.; Ferraguti, F.; Secchi, C.; Bonfè, M. Real-time identification of robot payload using a multirate quaternion-based Kalman filter and recursive total least-squares. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 2103–2109.

20. Wan, E.; Van Der Merwe, R. The unscented Kalman filter for nonlinear estimation. In Proceedings of the IEEE Adaptive Systems for Signal Processing, Communications, and Control Symposium, Lake Louise, AB, Canada, 1–4 October 2000; pp. 153–158.

21. Zhao, F.; Van Wachem, B. A novel Quaternion integration approach for describing the behaviour of non-spherical particles. Acta Mech. 2013, 224, 3091–3109.

22. Siciliano, B.; Sciavicco, L.; Villani, L.; Oriolo, G. Robotics: Modelling, Planning and Control; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

23. Rohmer, E.; Singh, S.P.N.; Freese, M. V-REP: A versatile and scalable robot simulation framework. In Proceedings of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, Tokyo, Japan, 3–7 November 2013; pp. 1321–1326.

24. Sozzi, A.; Bonfè, M.; Farsoni, S.; De Rossi, G.; Muradore, R. Dynamic motion planning for autonomous assistive surgical robots. Electronics 2019, 8, 957.

25. Abdi, M.I.I.; Khan, M.U.; Güneş, A.; Mishra, D. Escaping Local Minima in Path Planning Using a Robust Bacterial Foraging Algorithm. Appl. Sci. 2020, 10, 7905.

26. Garrido-Jurado, S.; Muñoz-Salinas, R.; Madrid-Cuevas, F.J.; Marín-Jiménez, M.J. Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recognit. 2014, 47, 2280–2292.

27. Ferraguti, F.; Minelli, M.; Farsoni, S.; Bazzani, S.; Bonfè, M.; Vandanjon, A.; Puliatti, S.; Bianchi, G.; Secchi, C. Augmented reality and robotic-assistance for percutaneous nephrolithotomy. IEEE Robot. Autom. Lett. 2020, 5, 4556–4563.

28. Zhang, W.; Ma, X.; Cui, L.; Chen, Q. 3 points calibration method of part coordinates for arc welding robot. In Proceedings of the International Conference on Intelligent Robotics and Applications, Wuhan, China, 15–17 October 2008; Springer: Berlin/Heidelberg, Germany, 2008; pp. 216–224.

29. Sucan, I.A.; Moll, M.; Kavraki, L.E. The open motion planning library. IEEE Robot. Autom. Mag. 2012, 19, 72–82.

| Symbol | Quantity | Manufacturer | Features |
|---|---|---|---|
| Y1 | 1 | IQD Frequency Products | Crystal SMD, 32.768 kHz, 12.5 pF |
| C1, C2 | 2 | Generic | 22.0 pF, 50 V |
| C3, C4 | 2 | Generic | 100.0 nF, 50 V |
| R3, R4, R5, R6, R7 | 5 | Generic | 10 kΩ, 50 V |
| U1 | 1 | Bosch Sensortec | BNO055, IMU Accel/Gyro/Mag I2C |
| U2 | 1 | Microchip | General-purpose amplifier |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farsoni, S.; Rizzi, J.; Ufondu, G.N.; Bonfè, M. Planning Collision-Free Robot Motions in a Human–Robot Shared Workspace via Mixed Reality and Sensor-Fusion Skeleton Tracking. Electronics 2022, 11, 2407. https://doi.org/10.3390/electronics11152407
