Abstract

This research develops an effective single-image super-resolution (SR) method that increases the resolution of scanned text or document images and improves their readability. To this end, we introduce a new semantic loss and propose a semantic SR method that guides an SR network to learn implicit text-specific semantic priors through self-distillation. Experiments on the enhanced deep SR (EDSR) model, one of the most popular SR networks, confirmed that the semantic loss can further improve the quality of text SR images. Although the improvement varied with image resolution and dataset, introducing the semantic loss increased the peak signal-to-noise ratio (PSNR) by up to 0.3 dB. The proposed method also outperformed an existing GAN-based semantic SR method.

1. Introduction

Single-image super-resolution, hereafter simply super-resolution (SR), is the task of generating a high-resolution (HR) image from a given low-resolution (LR) image and is used in various applications such as surveillance, microscopy, medical imaging, and real-time virtual reality streaming. It is inherently ill-posed because multiple HR images can produce the same LR image. Therefore, traditional SR methods use image priors such as sparsity [1] and edges [2] to constrain the solution space.

Following the success of convolutional neural networks (CNN) [3] in various types of computer vision tasks, recent SR studies have attempted to use various types of CNNs, and the resulting learning-based SR methods outperform traditional ones in accuracy and efficiency [4].

Digitizing books or documents of historical or personal importance ensures that a person or organization has access to unique and valuable content for far longer than anticipated. However, as books and documents fade and become damaged over time, it is necessary to improve the readability of digitized (or scanned) text or document images using SR methods. Effective SR methods are also required to improve the accuracy of text or character recognition. Therefore, we aim to develop a CNN-based SR method for enhancing text or document images in this study.

Because most CNN-based SR methods are designed to generalize across image types, they are not optimized for specific image categories, which makes them less effective for SR of text or document images. Although SR models could be trained using only text or document images, such models over-fit (as shown later). Therefore, our goal requires an SR method that is specialized for text or document images and free of over-fitting.

Several SR studies have attempted to increase the resolution of text or document images using CNNs, and some of them have shown that the quality of SR images can be further improved by using text-specific semantic priors [5,6,7,8,9]. However, they require specialized CNN models such as generative adversarial networks (GANs), long short-term memory (LSTM) networks, or transformers to obtain the priors. Additionally, most of them are applicable only to text images and not to images of other categories.

We hypothesize that, although text images are semantically the same, they can have slightly different local contexts or textures, and that the SR process for text images can be improved by reducing this difference. Therefore, we propose a semantic SR method that introduces a semantic loss (quantifying the difference between images belonging to the same category), which guides an SR-CNN model to learn text-specific semantic priors via self-distillation and to upscale text images more accurately. The proposed method does not require any specialized CNN models and can be applied to any existing SR model.

Note that our method can be applied to images of categories other than text (such as faces) without modification. However, this study focuses on super-resolving text images.

The primary contributions of this study, which focuses on developing an effective text SR method, are as follows:

- We introduce a semantic loss to measure the difference between text SR images.

- We propose a semantic SR method using self-distillation that encourages text SR images to be semantically similar by minimizing the semantic loss.

- The proposed method can be applied to any existing SR model without modifying its network structure and can be used as is for SR of images from other categories.

- The performance of the proposed method is validated on different text image datasets in various aspects. The experiments show that the proposed method outperforms a GAN-based semantic SR method.

2. Related Work

2.1. Self-Distillation

Knowledge distillation is the process of training a student network to find a better solution using additional supervision from a pretrained teacher model. It is commonly used for network compression. Self-distillation is a knowledge distillation technique that efficiently optimizes a single network using consistent distributions of data representations, without the assistance of other models [10]. Therefore, it can be used as a regularization technique for matching or distilling the predictive distribution of the network between different samples of the same label [11].

In this study, we use self-distillation to optimize a single network based on the semantic similarity between the network outputs of text images belonging to the same category.

2.2. Enhanced Deep Super-Resolution Network (EDSR)

SR models mainly learn the residuals between LR and HR images; therefore, residual network design is of high importance. Because a residual neural network (ResNet) designed to solve high-level problems such as image classification is not optimal for low-level problems such as SR, EDSR modified ResNet by removing unnecessary layers (e.g., batch normalization layers) and stacked the resulting blocks much deeper, significantly improving SR performance [12]. Deeper and wider models have been proposed since EDSR was introduced, but EDSR is still widely used as a baseline model in many current SR methods owing to its high performance and effectiveness [13,14].

In this study, we also use EDSR as a baseline model for building our semantic SR model.

2.3. Deep Learning-Based Text Image Super-Resolution

Existing text image SR methods have primarily been used as a preprocessing step before scene text recognition. Dong et al. [15] used SRCNN [16] as the backbone for their SR. Pandey et al. [17] proposed three network architectures for SR of binary document images, employing transposed convolution, parallel convolution, and sub-pixel convolution layers, respectively. Tran et al. [18] used the Laplacian-pyramid framework to progressively upsample LR text images. However, these methods treat text images as natural scene images and ignore the categorical (or semantic) information of text; thus, their generic SR frameworks are unsuitable for text image SR.

A few works have attempted to use text-specific categorical (or semantic) information to perform text image SR. Xu et al. [5] proposed a multi-class GAN with multiple discriminators, one of which was trained to distinguish between fake and real text images, thereby learning an implicit text-specific prior. Wang et al. [6] proposed a modification of SRResNet [7] with bi-directional LSTMs to capture sequential information in text images. Chen et al. [8] proposed a transformer-based framework containing a self-attention module to extract sequential information, as well as a position-aware module and a content-aware module to highlight each character’s position and content, respectively. The same authors also focused on the internal stroke-level structures of characters in text images: they designed rules for decomposing English characters and digits at the stroke level and proposed using a pretrained text recognizer to provide stroke-level attention maps as positional cues [19]. Ma et al. [9] guided the recovery of HR text images by introducing an explicit text prior, namely the character probability sequence obtained from a text recognition model. To consider the detailed structural formation of characters, Nakaune et al. [20] introduced a skeleton loss that measures the differences between character skeletons, which were generated from input images by a skeletonization network.

In this study, we calculate a semantic loss and use self-distillation to learn an implicit text-specific prior from a training set of text images. Our method requires no special types of CNNs or additional recognition models.

2.4. Semantic Super-Resolution

Semantic SR methods attempt to incorporate contextual or semantic information about the scene into the SR process, resulting in more accurate SR results.

In traditional exemplar-based SR, Sun et al. [21] proposed context-constrained SR by constructing a training set of texturally similar HR/LR image segment pairs. Timofte et al. [22] investigated semantic priors by training specialized models for each semantic category separately and showed that semantic information could help guide the SR process to enhance local image details. Xu et al. [5] attempted to enforce category-specific priors in a CNN. The prior was obtained at the image level using the multi-class GAN, in which two discriminators learned category-specific information of face and text images, respectively. To account for multiple categorical priors coexisting in an image, Wang et al. [23] used a segmentation network to obtain the semantic categorical prior at the pixel level and then proposed a spatial feature transform layer to efficiently incorporate the category-specific information. Chen et al. [24] applied mini-batch K-means to feature vectors of HR images to obtain a semantic-aware texture prior for blind SR.

In this study, the text-specific semantic prior is obtained at the image level and incorporated into the deep learning-based SR process via self-distillation.

3. Proposed Method

Inspired by the work [11] that improved the generalization ability of CNN models for image classification via class-wise self-distillation, we attempt to distill category-specific semantic information between different SR images of the same category (“text” in our study) while training an SR-CNN model. To this end, we propose a semantic loss that requires the SR results of images in the same category to be semantically or contextually similar. Formally, given an LR image $x$ and another randomly sampled LR image $x'$ belonging to the same category, the semantic loss is defined as follows:

$$\mathcal{L}_s = \frac{1}{W_\phi H_\phi} \sum_{i=1}^{W_\phi} \sum_{j=1}^{H_\phi} \left( \phi(\hat{y})_{i,j} - \phi(\hat{y}')_{i,j} \right)^2, \tag{1}$$

where $\hat{y} = f(x)$ and $\hat{y}' = f(x')$ are the outputs of the SR backbone network $f$, $\phi(\cdot)$ denotes the feature map obtained from the last max-pooling layer of the pretrained VGG-19 network [25] for a given input, and $W_\phi$ and $H_\phi$ are the feature map dimensions. Unlike perceptual SR methods [7], which commonly use feature maps taken before a max-pooling layer, we use the feature maps after the last max-pooling layer because they performed better in our preliminary experiments; we also considered multi-scale feature maps derived from layers of different depths, but they were ineffective. Because the VGG-19 network was trained for image classification, it can return feature maps that represent images of the same category similarly.
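As an illustration, the semantic loss in Equation (1) can be sketched in Python with NumPy. This is a minimal sketch, assuming the squared-difference form above with differences summed over all 512 channels at each spatial position; the name `semantic_loss` is hypothetical, and feature extraction is not shown (in TensorFlow, `tf.keras.applications.VGG19(include_top=False, weights="imagenet")` returns the output of its last max-pooling layer, after the usual VGG preprocessing of the inputs).

```python
import numpy as np


def semantic_loss(feat_a: np.ndarray, feat_b: np.ndarray) -> float:
    """Semantic loss between two feature maps of shape (H_phi, W_phi, C).

    Assumes the squared-difference form of Equation (1): squared differences
    are summed over all positions and channels, then divided by W_phi * H_phi.
    """
    if feat_a.shape != feat_b.shape:
        raise ValueError("feature maps must have the same shape")
    h_phi, w_phi = feat_a.shape[:2]
    diff = feat_a.astype(np.float64) - feat_b.astype(np.float64)
    return float(np.sum(diff ** 2) / (w_phi * h_phi))


# Example: VGG-19 features of two 96 x 96 SR patches have shape (3, 3, 512).
rng = np.random.default_rng(0)
phi_a = rng.standard_normal((3, 3, 512))
phi_b = rng.standard_normal((3, 3, 512))
print(semantic_loss(phi_a, phi_a))        # 0.0 for identical feature maps
print(semantic_loss(phi_a, phi_b) > 0.0)  # True
```

Normalizing by the number of spatial positions only (not by the channel depth) follows the $1/(W_\phi H_\phi)$ factor in Equation (1); dividing by the depth as well would only rescale the loss and could be absorbed into $\lambda$.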

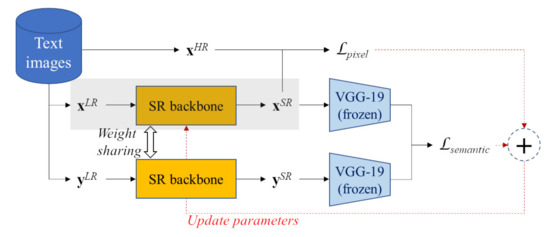

In this study, we use one of the SR-CNN models as the backbone network without making any structural change (see Figure 1). We minimize the loss when training the network:

where is a semantic loss weight that we set to 0.006 in our experiments (The smaller , the less the semantic information was learned. However, a large failed to recover local detailed textures and degraded the SR image quality. This will be shown in the experimental results.). is the pixel loss and is defined as:

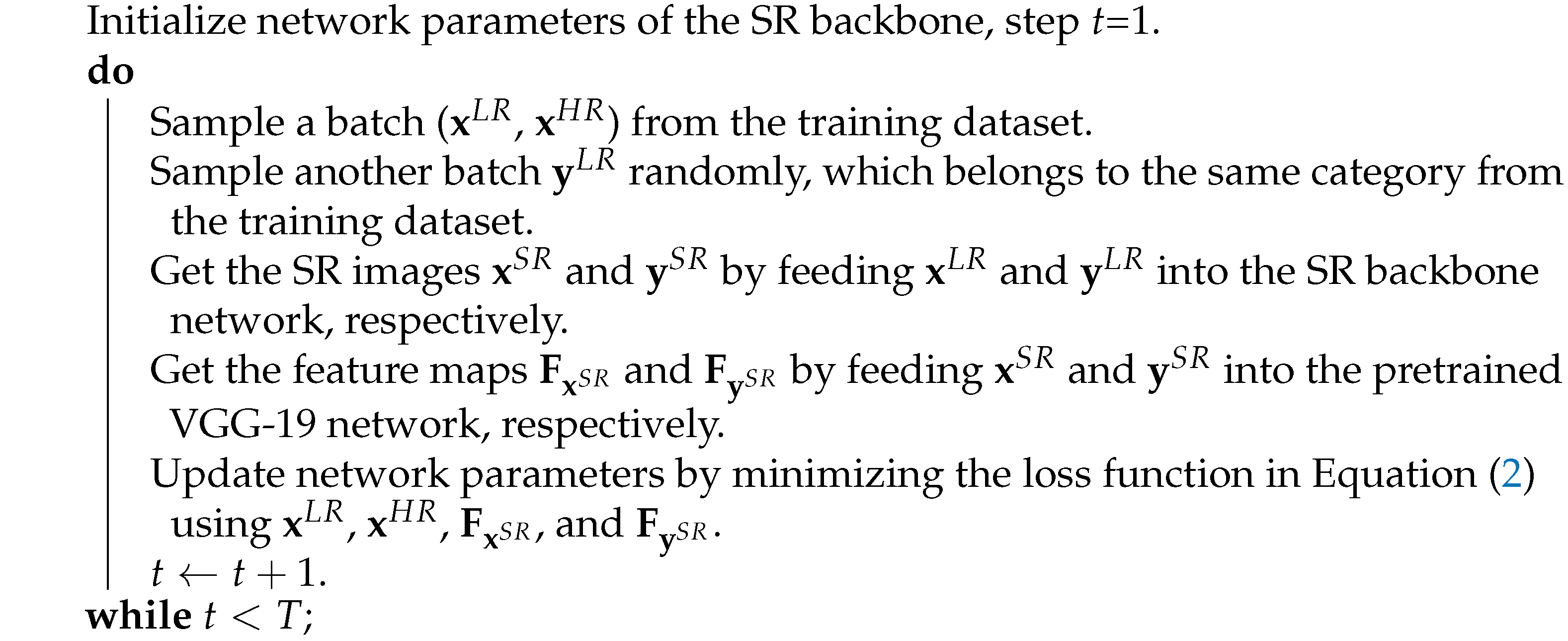

where W and H are the image dimensions. The training procedure is summarized in Algorithm 1. Only the shaded part in Figure 1 is active during inference, so there is no computational overhead for semantic SR.

| Algorithm 1: Semantic SR via self-distillation |


Figure 1.

Process flow of the proposed method.
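One loss evaluation following Equations (2) and (3) can be sketched as below (Python, NumPy). It rests on stated assumptions: the pixel loss is taken as a mean absolute error that also averages over color channels, the semantic term is passed in precomputed, and `sample_partners` is a hypothetical helper that pairs each training image with a random other image of the same category, as the semantic loss requires. With a single “text” category, any other image in the batch qualifies. This illustrates the idea and is not the exact procedure of Algorithm 1.

```python
import numpy as np

LAMBDA_S = 0.006  # semantic loss weight used in the experiments


def pixel_loss(hr: np.ndarray, sr: np.ndarray) -> float:
    """Assumed L1 pixel loss: mean absolute error over all pixels and channels."""
    return float(np.mean(np.abs(hr.astype(np.float64) - sr.astype(np.float64))))


def total_loss(hr: np.ndarray, sr: np.ndarray, sem_loss: float,
               lam: float = LAMBDA_S) -> float:
    """Equation (2): pixel loss plus the weighted semantic loss."""
    return pixel_loss(hr, sr) + lam * sem_loss


def sample_partners(labels, rng: np.random.Generator) -> np.ndarray:
    """For each sample i, pick a random index j != i with the same category label."""
    labels = np.asarray(labels)
    idx = np.arange(len(labels))
    partners = np.empty(len(labels), dtype=np.int64)
    for i in idx:
        candidates = idx[(labels == labels[i]) & (idx != i)]
        if candidates.size == 0:
            raise ValueError(f"sample {i} has no partner in its category")
        partners[i] = rng.choice(candidates)
    return partners


rng = np.random.default_rng(0)
print(sample_partners(["text"] * 4, rng))  # each index maps to a different index
print(total_loss(np.zeros((2, 2)), np.ones((2, 2)), sem_loss=10.0))
```

In a full training loop, the SR outputs of each image and its partner would both be passed through the fixed VGG-19 feature extractor, and the resulting semantic loss would be back-propagated together with the pixel loss into the backbone only.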

4. Experimental Results and Discussion

4.1. Setup

We used the EDSR model with 16 residual blocks [12] as the backbone in our experiments and modified the open-source code [26], which is implemented with the TensorFlow library. The network was trained from scratch (with weights initialized using the Xavier method) using the Adam optimizer with learning rate $\eta$ and momentum terms $\beta_1$ and $\beta_2$, an upscale factor of 4, batch size $B$, and $T$ = 100,000 total steps on a single RTX 3090 GPU. Finally, the weights with the highest PSNR were saved.
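The Xavier (Glorot) initialization mentioned above can be illustrated as follows. This sketch assumes the uniform variant, which is the default kernel initializer of Keras convolution layers, and a 3 × 3 convolution with 64 input and 64 output channels, the feature width of the EDSR baseline with 16 residual blocks [12]; the function name `glorot_uniform_conv` is hypothetical.

```python
import math

import numpy as np


def glorot_uniform_conv(k: int, in_ch: int, out_ch: int, rng: np.random.Generator):
    """Xavier/Glorot uniform initialization for a k x k convolution kernel.

    Weights are drawn from U(-limit, limit) with limit = sqrt(6 / (fan_in + fan_out)),
    where fan_in and fan_out include the receptive-field size k * k.
    """
    fan_in = k * k * in_ch
    fan_out = k * k * out_ch
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    weights = rng.uniform(-limit, limit, size=(k, k, in_ch, out_ch))
    return weights, limit


w, limit = glorot_uniform_conv(3, 64, 64, np.random.default_rng(0))
print(w.shape, round(limit, 4))  # (3, 3, 64, 64) 0.0722
```

Scaling the bound by both fan-in and fan-out keeps activation and gradient variances roughly constant across layers, which matters when training a deep residual backbone from scratch.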

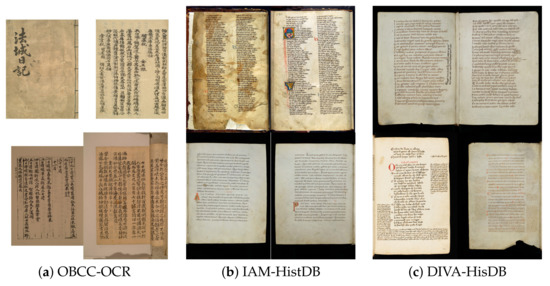

We used two training datasets, Old book Chinese character OCR (OBCC-OCR) [27] and DIV2K [28], with 1108 and 1000 images, respectively, and two test datasets, IAM-HistDB [29] and DIVA-HisDB [30], with 127 and 120 images, respectively. The OBCC-OCR, IAM-HistDB, and DIVA-HisDB datasets contain only scanned text images of handwritten or printed historical manuscripts written in various languages, including Chinese, German, English, and Latin (see Figure 2). The DIV2K dataset, which consists of images of various categories, was used to show the usefulness of semantic SR. Ten percent of the images in the OBCC-OCR and DIV2K datasets were used for validation, and another 10% were used for testing. The original images were used as HR images and were downscaled using bicubic interpolation with antialiasing to create LR images. For training, the HR images were randomly cropped into 96 × 96 patches, which were then rotated and flipped. The cropping size had little influence on the experimental results. In Equation (1), the feature map dimensions were $W_\phi = H_\phi = 3$ (a 96 × 96 input is halved by each of the five max-pooling layers of VGG-19), and the depth of the feature maps was 512.
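The patch preparation described above can be sketched with NumPy as follows. The sketch assumes rotations by multiples of 90° and a horizontal flip (the text says only “rotated and flipped”), an LR patch of 96 / 4 = 24 pixels, and aligned crops at the ×4 scale; the helper names are hypothetical. Creating the LR images themselves is not shown; bicubic downscaling with antialiasing could be done, for example, with `tf.image.resize(hr, size, method="bicubic", antialias=True)`.

```python
import numpy as np

SCALE = 4        # upscale factor (e.g., 50 x 75 LR -> 200 x 300 SR)
HR_PATCH = 96    # HR training patch size
VGG19_POOLS = 5  # number of 2 x 2 max-pooling layers in VGG-19


def random_patch_pair(hr, lr, rng, hr_patch=HR_PATCH, scale=SCALE):
    """Crop spatially aligned HR/LR patches, then apply the same random
    90-degree rotation and horizontal flip to both."""
    lr_patch = hr_patch // scale
    top = int(rng.integers(0, lr.shape[0] - lr_patch + 1))
    left = int(rng.integers(0, lr.shape[1] - lr_patch + 1))
    lr_crop = lr[top:top + lr_patch, left:left + lr_patch]
    hr_crop = hr[top * scale:(top + lr_patch) * scale,
                 left * scale:(left + lr_patch) * scale]
    k = int(rng.integers(0, 4))  # number of 90-degree rotations
    lr_crop, hr_crop = np.rot90(lr_crop, k), np.rot90(hr_crop, k)
    if rng.random() < 0.5:
        lr_crop, hr_crop = np.fliplr(lr_crop), np.fliplr(hr_crop)
    return hr_crop, lr_crop


def vgg19_pooled_size(size, pools=VGG19_POOLS):
    """Spatial size after VGG-19's max-pooling layers (each halves it, rounding down)."""
    for _ in range(pools):
        size //= 2
    return size


rng = np.random.default_rng(0)
lr = rng.random((50, 75, 3))
hr = lr.repeat(SCALE, axis=0).repeat(SCALE, axis=1)  # stand-in HR image for the demo
hr_crop, lr_crop = random_patch_pair(hr, lr, rng)
print(hr_crop.shape, lr_crop.shape, vgg19_pooled_size(HR_PATCH))
# (96, 96, 3) (24, 24, 3) 3
```

The demo uses a nearest-neighbor “HR” stand-in only so that the alignment of the two crops is easy to check; in the actual setup, the HR image is the original and the LR image is its downscaled version.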

Figure 2.

Sample images from the text image datasets used in our experiments.

For performance analysis, we computed the peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) to evaluate the quality of SR images. PSNR and SSIM values were averaged for all images in each test dataset in the tables below.
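For reference, PSNR and its per-dataset average can be computed as sketched below (Python, NumPy). The sketch assumes 8-bit images (peak value 255) and computes the mean squared error over all pixels and channels; the text does not state whether PSNR was measured on RGB or on the luminance channel, or whether image borders were cropped, so these choices are assumptions. SSIM is not reimplemented here; library routines such as `tf.image.ssim` or `skimage.metrics.structural_similarity` could be used instead.

```python
import math

import numpy as np


def psnr(hr: np.ndarray, sr: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    # Cast to float so that uint8 subtraction cannot wrap around.
    mse = np.mean((hr.astype(np.float64) - sr.astype(np.float64)) ** 2)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


def mean_psnr(pairs) -> float:
    """Average PSNR over (HR, SR) image pairs of one test dataset."""
    values = [psnr(hr, sr) for hr, sr in pairs]
    return sum(values) / len(values)


hr = np.zeros((4, 4), dtype=np.uint8)
sr = np.full((4, 4), 10, dtype=np.uint8)
print(round(psnr(hr, sr), 2))  # MSE = 100 -> 28.13
```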

4.2. Results and Discussion

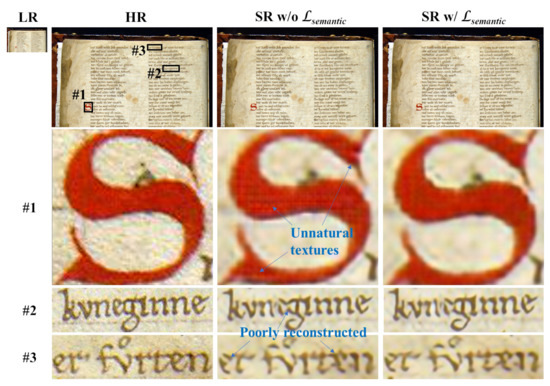

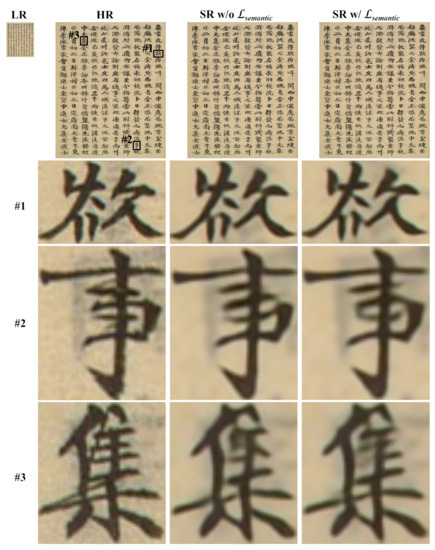

Table 1, where the EDSR model was trained on the OBCC-OCR dataset, shows that the semantic loss improved the quality of text SR images. The improvement was small (there was little difference in text readability between the SR images generated with and without the semantic loss) but consistent. As shown in Figure 3 and Figure 4, without the semantic loss, text-unrelated textures may appear inside the letters, or the letters may be more severely distorted, reducing text readability. This indicates that the semantic loss provided the SR network with text-specific semantic information, learned from other images in the same category, that is useful for upscaling text images. However, when the EDSR model was trained on the DIV2K dataset (Table 2), the quality of the SR images deteriorated with the semantic loss. This is because the training images do not belong to the same semantic category, so the semantic loss incorrectly forces SR images of different categories to be semantically similar.

Table 1.

Quality improvement of SR images with the semantic loss when training using the text image dataset (OBCC-OCR). The values to the left and right of ‘/’ represent the PSNR and SSIM, respectively.

Figure 3.

Visual comparison (case 1) of text SR images with and without the semantic loss when training using OBCC-OCR. The images were enlarged to improve visibility. Without the semantic loss, the letters either have unnatural and unpleasant textures or are poorly reconstructed.

Figure 4.

Visual comparison (case 2) of text SR images with and without the semantic loss when training using OBCC-OCR. The images were enlarged to improve visibility. The letters are better reconstructed with the semantic loss.

Table 2.

Quality improvement of SR images with the semantic loss when training using the non-text image dataset (DIV2K). The values to the left and right of ‘/’ represent the PSNR and SSIM, respectively.

The SR results for IAM-HistDB and DIVA-HisDB were better when the model was trained on DIV2K without the semantic loss than when it was trained on OBCC-OCR. This is because, without the semantic loss, a model trained on OBCC-OCR over-fits to that dataset, rendering it ineffective for the other text image datasets even though they are semantically equivalent. Furthermore, the OBCC-OCR images lack the rich and varied textures of the DIV2K images, and textures absent from the OBCC-OCR images can be recovered more effectively when training on DIV2K (OBCC-OCR, IAM-HistDB, and DIVA-HisDB are all text image datasets, but they can differ locally or partially in texture). The same holds for the DIV2K SR results.

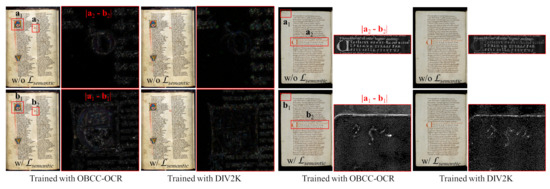

In most results, the visual enhancement of the SR images with the semantic loss could not be clearly observed with the naked eye, as shown in Figure 3 and Figure 4. To indirectly visualize the enhancement, we computed the difference between SR images with and without semantic loss, as shown in Figure 5. The difference images represent the additional information obtained by adopting semantic loss. They were bright in the text regions as expected, but this held true even when the DIV2K training dataset was used. However, we could deduce that the text-specific information was not correctly learned when using the DIV2K training dataset because the difference was unclear in some text regions, especially colored or light text regions. The difference was more noticeable when using the OBCC-OCR training dataset, indicating that the text-specific information was well-learned, improving the quality of text SR images.

Figure 5.

Visual comparison of text SR images produced with the semantic loss when training using OBCC-OCR versus DIV2K. The difference images were boosted equally to improve visibility.
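The difference images in Figure 5 can be approximated as sketched below (Python, NumPy): the absolute per-pixel difference between the SR images obtained with and without the semantic loss, multiplied by the same gain for every image and clipped for display. The gain of 10 and the function name `boosted_difference` are hypothetical, since the boost factor is not stated.

```python
import numpy as np


def boosted_difference(sr_with: np.ndarray, sr_without: np.ndarray,
                       gain: float = 10.0) -> np.ndarray:
    """Absolute difference between two 8-bit SR images, amplified by a common
    gain and clipped to [0, 255] for display."""
    # Convert to float first so that uint8 subtraction cannot wrap around.
    diff = np.abs(sr_with.astype(np.float64) - sr_without.astype(np.float64))
    return np.clip(diff * gain, 0, 255).astype(np.uint8)


a = np.array([[100, 110, 200]], dtype=np.uint8)
b = np.array([[100, 100, 0]], dtype=np.uint8)
print(boosted_difference(a, b).tolist())  # [[0, 100, 255]]
```

Using one fixed gain for all difference images, rather than normalizing each image to its own maximum, keeps the brightness of the OBCC-OCR and DIV2K difference images directly comparable.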

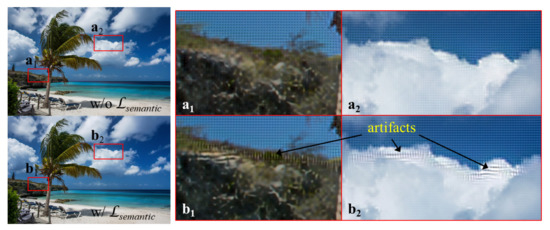

Figure 6 shows the SR results of non-text images in DIV2K when the OBCC-OCR training dataset was used. Overall, the image quality was poor because OBCC-OCR lacks the image textures present in these images. Furthermore, we found that the network trained with the semantic loss produced visual artifacts in the non-text SR images even though the PSNR and SSIM values in Table 1 increased. This is understandable, given that the proposed method optimizes the SR process for text images and may therefore degrade the SR results of non-text images.

Figure 6.

Visual comparison of non-text SR images with and without the semantic loss when training using OBCC-OCR.

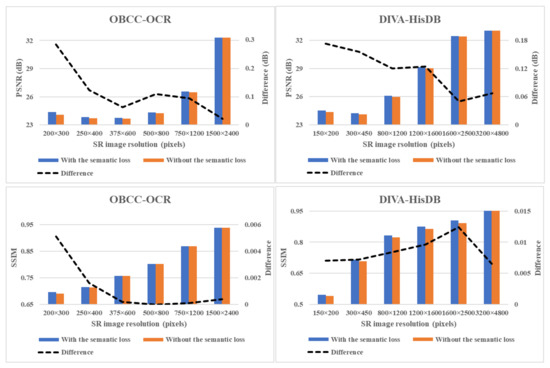

As shown in Figure 7, the effect of the semantic loss was more visible at lower image resolutions. In the OBCC-OCR results, introducing the semantic loss increased the PSNR by 0.3 dB when producing 200 × 300 SR images from 50 × 75 LR images, but by only 0.03 dB when producing 1500 × 2400 SR images from 375 × 600 LR images. This may be because, at lower resolutions, relatively more pixel information than semantic information is lost, so the learned semantic prior contributes more.
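To put these gains in perspective, a PSNR difference can be converted into a relative change in mean squared error (MSE). For a single image with a fixed peak value MAX, the relation below holds exactly; because the reported PSNR values are averaged over images, it is only an approximation for the dataset averages.

```latex
\mathrm{PSNR} = 10\log_{10}\frac{\mathrm{MAX}^2}{\mathrm{MSE}}
\;\Rightarrow\;
\Delta\mathrm{PSNR} = \mathrm{PSNR}_{\text{w/}} - \mathrm{PSNR}_{\text{w/o}}
  = 10\log_{10}\frac{\mathrm{MSE}_{\text{w/o}}}{\mathrm{MSE}_{\text{w/}}}
\;\Rightarrow\;
\frac{\mathrm{MSE}_{\text{w/}}}{\mathrm{MSE}_{\text{w/o}}} = 10^{-\Delta\mathrm{PSNR}/10}

\Delta\mathrm{PSNR} = 0.3\,\mathrm{dB}:\quad 10^{-0.03} \approx 0.933
\qquad
\Delta\mathrm{PSNR} = 0.03\,\mathrm{dB}:\quad 10^{-0.003} \approx 0.993
```

Thus, a 0.3 dB gain corresponds to roughly 6.7% lower MSE, whereas a 0.03 dB gain corresponds to roughly 0.7% lower MSE.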

Figure 7.

Differences in PSNR and SSIM with and without semantic loss, depending on SR image resolution.

Because the proposed method’s performance depends on the weight $\lambda$ in Equation (2), we set $\lambda$ to 0.006 in our experiments, as mentioned above. As shown in Table 3, both smaller and larger values of $\lambda$ resulted in lower PSNR and SSIM values, and for one of the tested values, the PSNR and SSIM values were even lower than without the semantic loss. These results suggest that a small $\lambda$ makes it difficult to learn semantic information, whereas a large $\lambda$ prevents the recovery of local details at the pixel level.

Table 3.

Effect of the semantic loss weight $\lambda$ in Equation (2) on the performance of the proposed method. The values to the left and right of ‘/’ represent the PSNR and SSIM, respectively.

Finally, we compared the proposed method with the multi-class GAN-based method [5]. To ensure fairness, the EDSR model was used as the generator in the GAN-based method, a single discriminator was trained for text images, and the hyperparameters common to both methods (such as the learning rate, momentum terms, and batch size) were set to the same values. (We did not fine-tune the hyperparameters for each method; they were set similarly to most SR studies, fine-tuning them does not significantly affect the performance of either method, and it is not the main concern of this study.) In the GAN-based method, weighting factors , , , and margin were set to 1, , 0.1, and 1, respectively, as given in [5]. This comparison shows which approach better guides the EDSR model in learning text-related semantic information. As shown in Table 4, the proposed method outperformed the GAN-based method when different datasets were used in the training and test phases. For the DIVA-HisDB dataset, the GAN-based method performed even worse than the naive EDSR model (i.e., “w/o $\mathcal{L}_s$”, the model trained without the semantic loss, in Table 1). However, for the OBCC-OCR dataset, the GAN-based method outperformed the proposed method. This suggests that the GAN-based method over-fits to the training dataset and is ineffective at extracting and learning semantic information from it.

Table 4.

Performance comparison between the proposed and multi-class GAN-based methods. The values to the left and right of ‘/’ represent the PSNR and SSIM, respectively.

4.3. Limitations

The proposed method requires a training dataset of semantically similar images; that is, images in the same semantic category should not have significantly different contexts or textures, because such differences degrade the proposed method’s performance. This makes it difficult to build a suitable training dataset, and it may also explain why the proposed method’s improvements in PSNR and SSIM were not statistically significant in our experiments.

5. Conclusions and Future Work

In this study, we proposed a semantic SR method for more effectively upscaling text images using the baseline SR-CNN model. The method used a semantic loss to measure the semantic difference between the SR results of text images, which led the baseline SR model to learn an implicit text-specific semantic prior via self-distillation without modification of its network structure. The prior was then shown to improve the SR results of text images (up to 0.3 dB in PSNR), and the proposed method outperformed the GAN-based semantic SR method.

However, the training dataset used in our experiments lacks sufficient texture variety, so details of text images with different textures were not fully recovered. In future work, we will therefore expand our text training dataset to include text images with more diverse textures and analyze how well our semantic SR method upscales text images with various textures. We also plan to apply our semantic SR method to images from other categories, such as faces and medical images.

Funding

This work was supported by the National Research Foundation of Korea (NRF) Grant by the Korean Government through the MSIT under Grant 2021R1F1A1045749.

Acknowledgments

This research utilized “Old book Chinese character OCR” dataset built with the support of the National Information Society Agency and with the fund of the Ministry of Science and ICT of South Korea. The dataset can be downloaded from aihub.or.kr.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SR | Super-resolution |
| HR | High-resolution |
| LR | Low-resolution |
| CNN | Convolutional neural network |
| GAN | Generative adversarial network |
| LSTM | Long short-term memory |
| EDSR | Enhanced deep super-resolution network |
| ResNet | Residual neural network |
| PSNR | Peak signal-to-noise ratio |
| SSIM | Structural similarity index measure |

References

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image Super-Resolution via Sparse Representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Tai, Y.W.; Liu, S.; Brown, M.S.; Lin, S.C.F. Super resolution using edge prior and single image detail synthesis. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2400–2407.

- Yamashita, R.; Nishio, M.; Do, R.; Togashi, K. Convolutional neural networks: An overview and application in radiology. Insights Into Imaging 2018, 9, 611–629.

- Wang, Z.; Chen, J.; Hoi, S.C.H. Deep Learning for Image Super-Resolution: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 3365–3387.

- Xu, X.; Sun, D.; Pan, J.; Zhang, Y.; Pfister, H.; Yang, M.H. Learning to Super-Resolve Blurry Face and Text Images. In Proceedings of the IEEE International Conference on Computer Vision (ICCV) 2017, Venice, Italy, 22–29 October 2017; pp. 251–260.

- Wang, W.; Xie, E.; Liu, X.; Wang, W.; Liang, D.; Shen, C.; Bai, X. Scene Text Image Super-Resolution in the Wild. In Proceedings of the European Conference on Computer Vision 2020, Glasgow, UK, 23–28 August 2020; pp. 650–666.

- Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017, Honolulu, HI, USA, 22–25 July 2017; pp. 105–114.

- Chen, J.; Li, B.; Xue, X. Scene Text Telescope: Text-Focused Scene Image Super-Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) 2021, Virtual, 19–25 June 2021; pp. 12021–12030.

- Ma, J.; Guo, S.; Zhang, L. Text Prior Guided Scene Text Image Super-resolution. arXiv 2021, arXiv:2106.15368.

- Xu, T.B.; Liu, C.L. Data-Distortion Guided Self-Distillation for Deep Neural Networks. Proc. AAAI Conf. Artif. Intell. 2019, 33, 5565–5572.

- Yun, S.; Park, J.; Lee, K.; Shin, J. Regularizing Class-Wise Predictions via Self-Knowledge Distillation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 13873–13882.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140.

- Luo, Z.; Huang, Y.; Li, S.; Wang, L.; Tan, T. Learning the Degradation Distribution for Blind Image Super-Resolution. arXiv 2022, arXiv:2203.04962.

- Zhang, Y.; Wang, H.; Qin, C.; Fu, Y. Learning Efficient Image Super-Resolution Networks via Structure-Regularized Pruning. In Proceedings of the Tenth International Conference on Learning Representations 2022, Virtual, 25 April 2022.

- Dong, C.; Zhu, X.; Deng, Y.; Loy, C.C.; Qiao, Y. Boosting Optical Character Recognition: A Super-Resolution Approach. arXiv 2015, arXiv:1506.02211.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision 2014, Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Pandey, R.K.; Vignesh, K.; Ramakrishnan, A.G.; Chandrahasa, B. Binary Document Image Super Resolution for Improved Readability and OCR Performance. arXiv 2018, arXiv:1812.02475.

- Tran, H.T.M.; Ho-Phuoc, T. Deep Laplacian Pyramid Network for Text Images Super-Resolution. arXiv 2018, arXiv:1811.10449.

- Chen, J.; Yu, H.; Ma, J.; Li, B.; Xue, X. Text Gestalt: Stroke-Aware Scene Text Image Super-Resolution. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; pp. 285–293.

- Nakaune, S.; Iizuka, S.; Fukui, K. Skeleton-aware Text Image Super-Resolution. In Proceedings of the 32nd British Machine Vision Conference, Online, 22–25 November 2021.

- Sun, J.; Zhu, J.; Tappen, M. Context-Constrained Hallucination for Image Super-Resolution. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 231–238.

- Timofte, R.; De Smet, V.; Van Gool, L. Semantic super-resolution: When and where is it useful? Comput. Vis. Image Underst. 2015, 142, 1–12.

- Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 606–615.

- Chen, C.; Shi, X.; Qin, Y.; Li, X.; Han, X.; Yang, T.; Guo, S. Blind Image Super Resolution with Semantic-Aware Quantized Texture Prior. arXiv 2022, arXiv:2202.13142.

- Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. In Proceedings of the 3rd International Conference on Learning Representations 2015, San Diego, CA, USA, 7–9 May 2015.

- Available online: https://github.com/krasserm/super-resolution (accessed on 13 December 2021).

- Available online: https://aihub.or.kr/aidata/30753 (accessed on 13 December 2021).

- Agustsson, E.; Timofte, R. NTIRE 2017 Challenge on Single Image Super-Resolution: Dataset and Study. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1122–1131.

- Available online: https://fki.tic.heia-fr.ch/databases (accessed on 7 July 2022).

- Available online: https://www.unifr.ch/inf/diva/en/research/software-data/diva-hisdb.html (accessed on 7 July 2022).

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).