High-Performance Enhancement of SWIR Images

Abstract

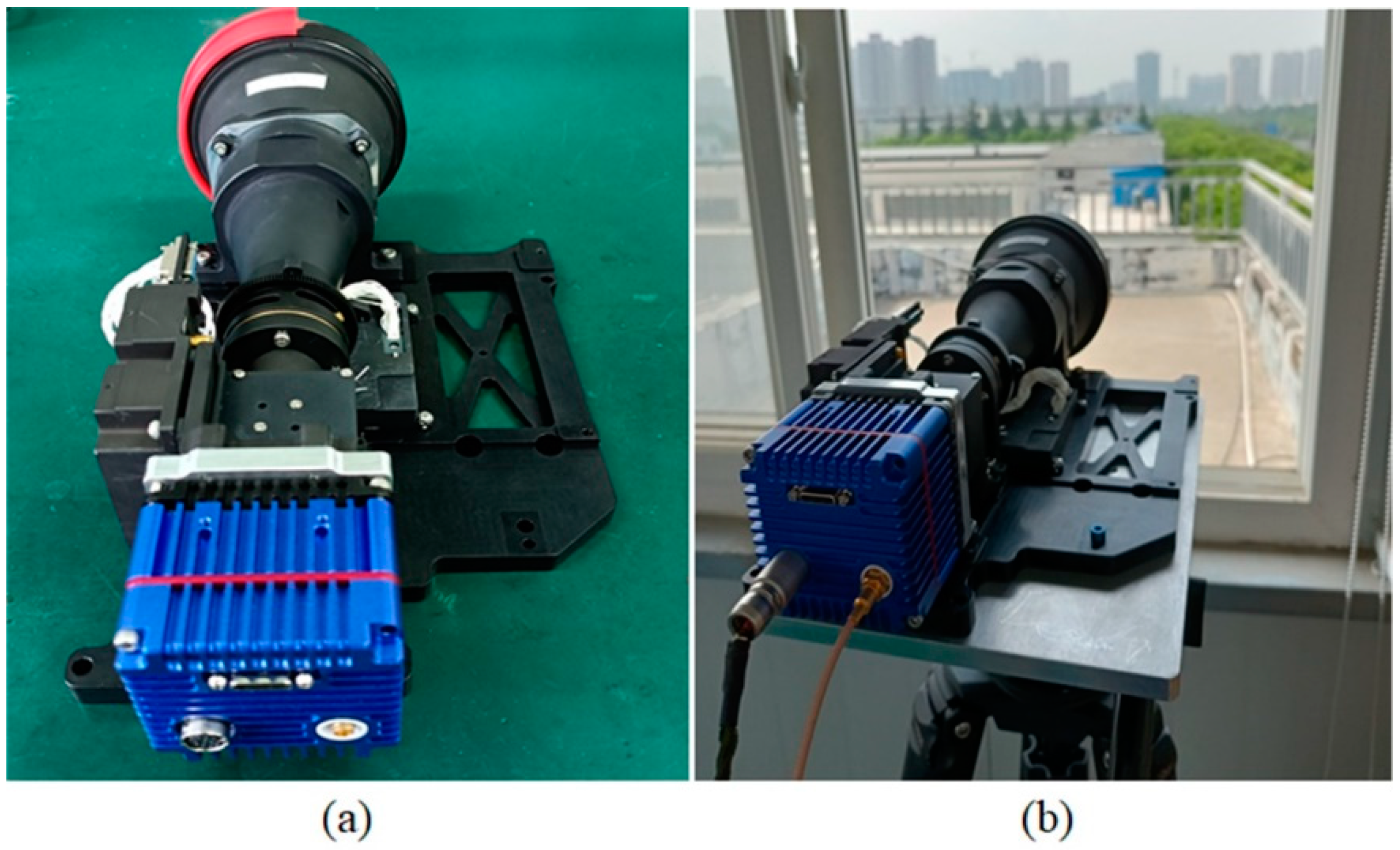

1. Introduction

2. Related Work

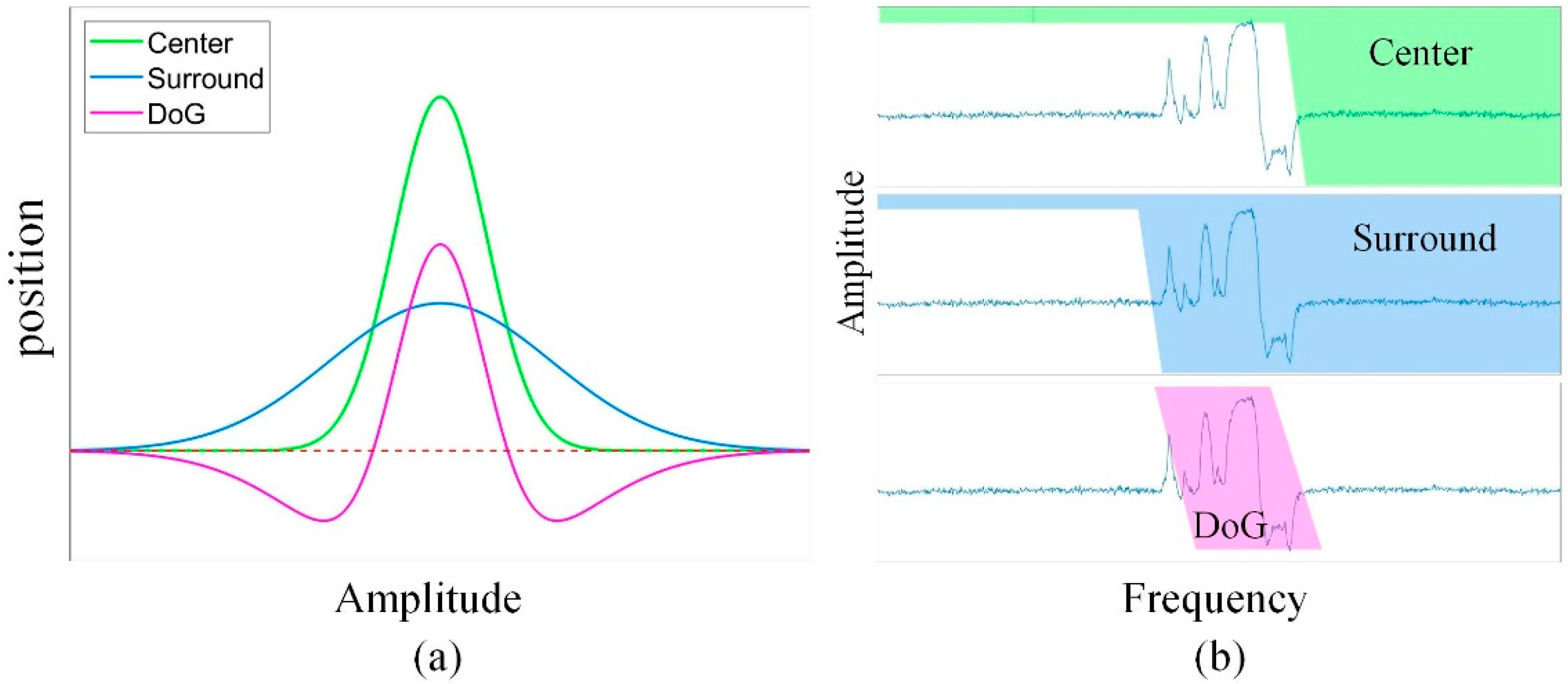

2.1. Difference of Gaussian Filter
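
The difference-of-Gaussians (DoG) filter subtracts a strongly blurred copy of an image from a lightly blurred copy. Flat regions cancel out, while edges and fine detail survive. The dependency-free sketch below illustrates the generic technique only. The helper names, the 3σ kernel truncation, the edge replication at the borders and the σ values in the demo are assumptions of this illustration, not the configuration used in this paper.

```python
import math
from typing import List

Image = List[List[float]]


def gaussian_kernel_1d(sigma: float) -> List[float]:
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma (assumed truncation)."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(x * x) / (2.0 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur(image: Image, sigma: float) -> Image:
    """Separable Gaussian blur; border pixels are replicated (assumed border rule)."""
    kernel = gaussian_kernel_1d(sigma)
    r = len(kernel) // 2
    h, w = len(image), len(image[0])
    # Horizontal pass: clamp column indices so edge pixels are repeated.
    tmp = [
        [sum(kernel[k + r] * row[min(max(x + k, 0), w - 1)] for k in range(-r, r + 1)) for x in range(w)]
        for row in image
    ]
    # Vertical pass over the horizontally blurred rows.
    return [
        [sum(kernel[k + r] * tmp[min(max(y + k, 0), h - 1)][x] for k in range(-r, r + 1)) for x in range(w)]
        for y in range(h)
    ]


def difference_of_gaussians(image: Image, sigma_small: float, sigma_large: float) -> Image:
    """DoG = blur(I, sigma_small) - blur(I, sigma_large): a band-pass edge/detail response."""
    fine = blur(image, sigma_small)
    coarse = blur(image, sigma_large)
    return [[a - b for a, b in zip(rf, rc)] for rf, rc in zip(fine, coarse)]


if __name__ == "__main__":
    # Synthetic vertical step edge: dark left half, bright right half.
    step = [[0.0] * 10 + [100.0] * 10 for _ in range(5)]
    dog = difference_of_gaussians(step, 1.0, 2.0)
    print([round(v, 2) for v in dog[2]])
```

On the step edge, the response is zero far from the edge. It changes sign across the edge, negative on the dark side and positive on the bright side. DoG-based edge detectors exploit this zero-crossing behavior.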

2.2. Plateau Equalization
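
Plateau equalization clips every histogram bin at a plateau value before building the cumulative histogram that serves as the grey-level mapping. This stops a large uniform background from claiming most of the display range. The sketch below shows the generic idea under these assumptions:

- integer raw pixels;
- a single fixed plateau;
- output scaled to 8 bits.

It is not the paper's own equalization and remapping stage, and how the plateau is chosen is left open.

```python
from typing import List, Sequence, Tuple


def plateau_equalization(
    pixels: Sequence[int], plateau: int, in_levels: int, out_max: int = 255
) -> Tuple[List[int], List[int]]:
    """Map raw grey levels to [0, out_max] using a plateau-clipped histogram.

    pixels    -- flattened raw image, each value in [0, in_levels)
    plateau   -- upper limit applied to every histogram bin (must be >= 1)
    in_levels -- number of raw grey levels (e.g. 2**14 for 14-bit data; assumed)
    """
    if not pixels:
        raise ValueError("empty image")
    if plateau < 1:
        raise ValueError("plateau must be at least 1")
    hist = [0] * in_levels
    for p in pixels:
        hist[p] += 1
    # Clip each bin so dominant grey levels cannot monopolize the output range.
    clipped = [min(count, plateau) for count in hist]
    # Cumulative histogram of the clipped bins.
    cdf, running = [], 0
    for count in clipped:
        running += count
        cdf.append(running)
    total = cdf[-1]
    # Integer-rounded out_max * cdf / total gives the look-up table.
    lut = [(out_max * c + total // 2) // total for c in cdf]
    return [lut[p] for p in pixels], lut


if __name__ == "__main__":
    raw = [0] * 90 + [1] * 5 + [2] * 5  # dominant background plus two minority levels
    _, lut_pe = plateau_equalization(raw, plateau=10, in_levels=3)
    _, lut_he = plateau_equalization(raw, plateau=10**9, in_levels=3)
    print("plateau:", lut_pe)
    print("plain HE:", lut_he)
```

With the plateau at 10, the three levels are spread almost evenly as 128, 191 and 255. With a plateau so large that nothing is clipped, the method reduces to ordinary histogram equalization. In that case the background bin pushes all levels into the narrow range 230 to 255 (230, 242, 255).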

3. Principle of the Proposed Method

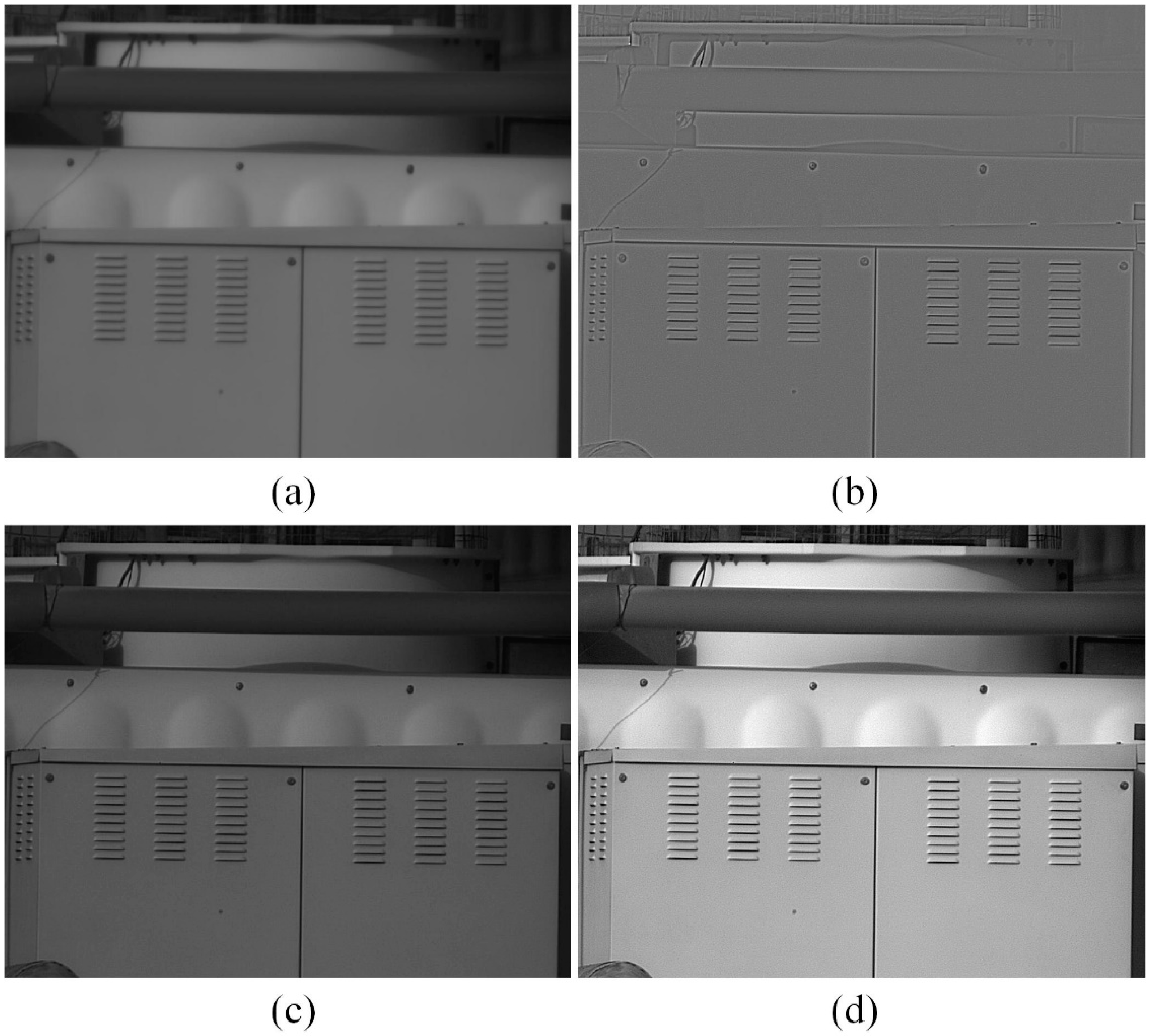

3.1. Enhancement via Fast Edge Extraction

3.2. Equalization and Remapping

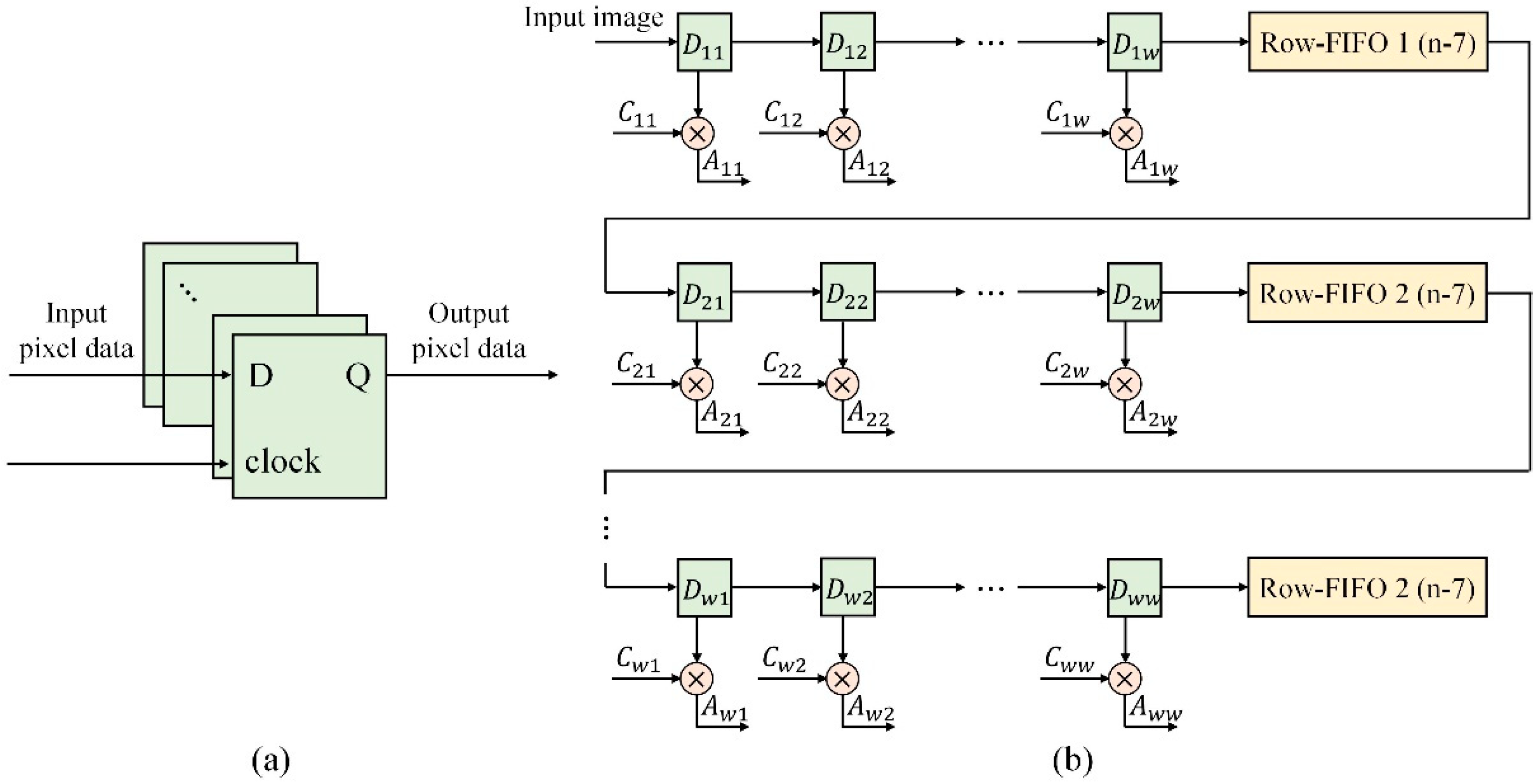

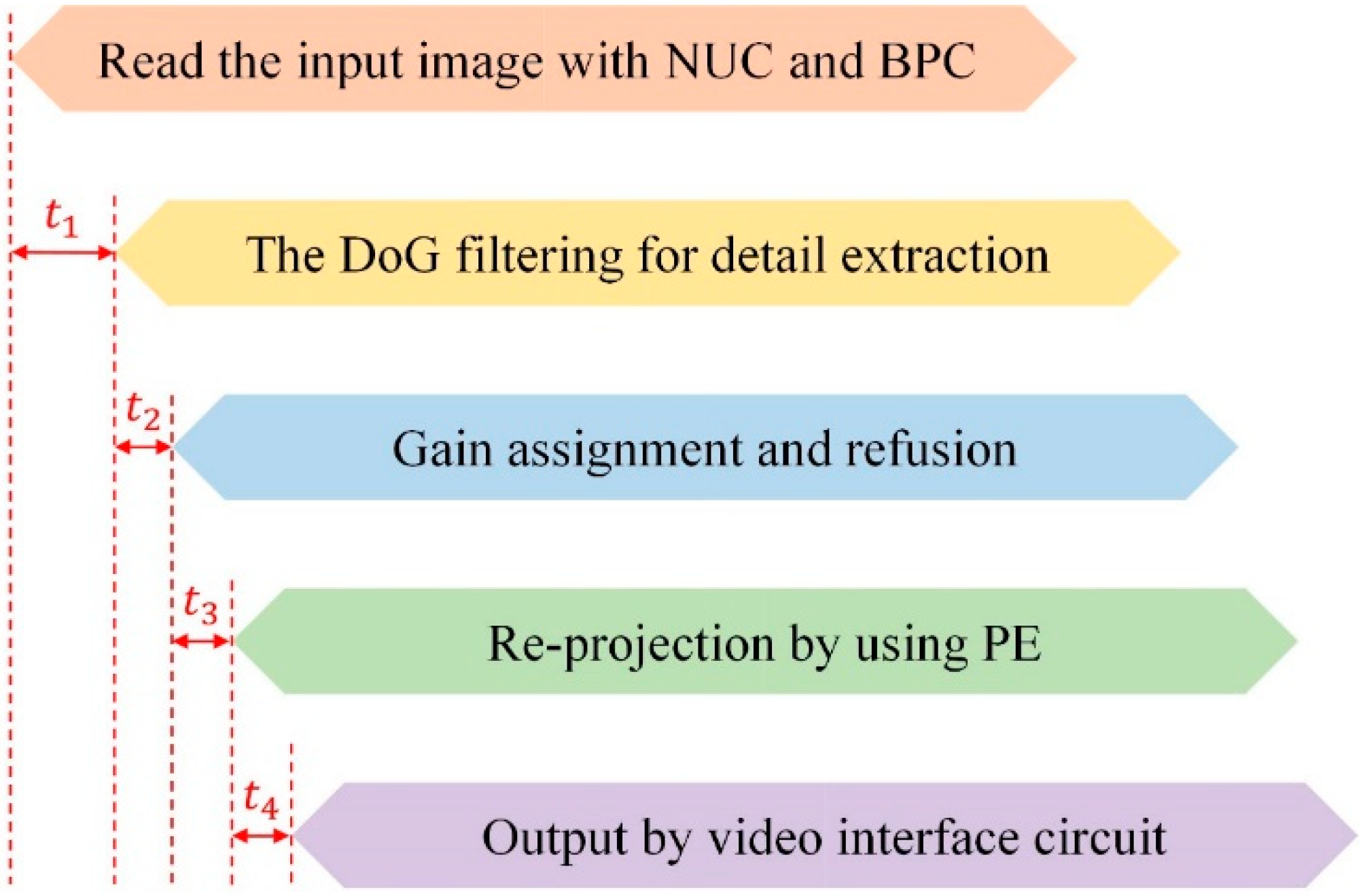

3.3. Guidance for Implementation on FPGA

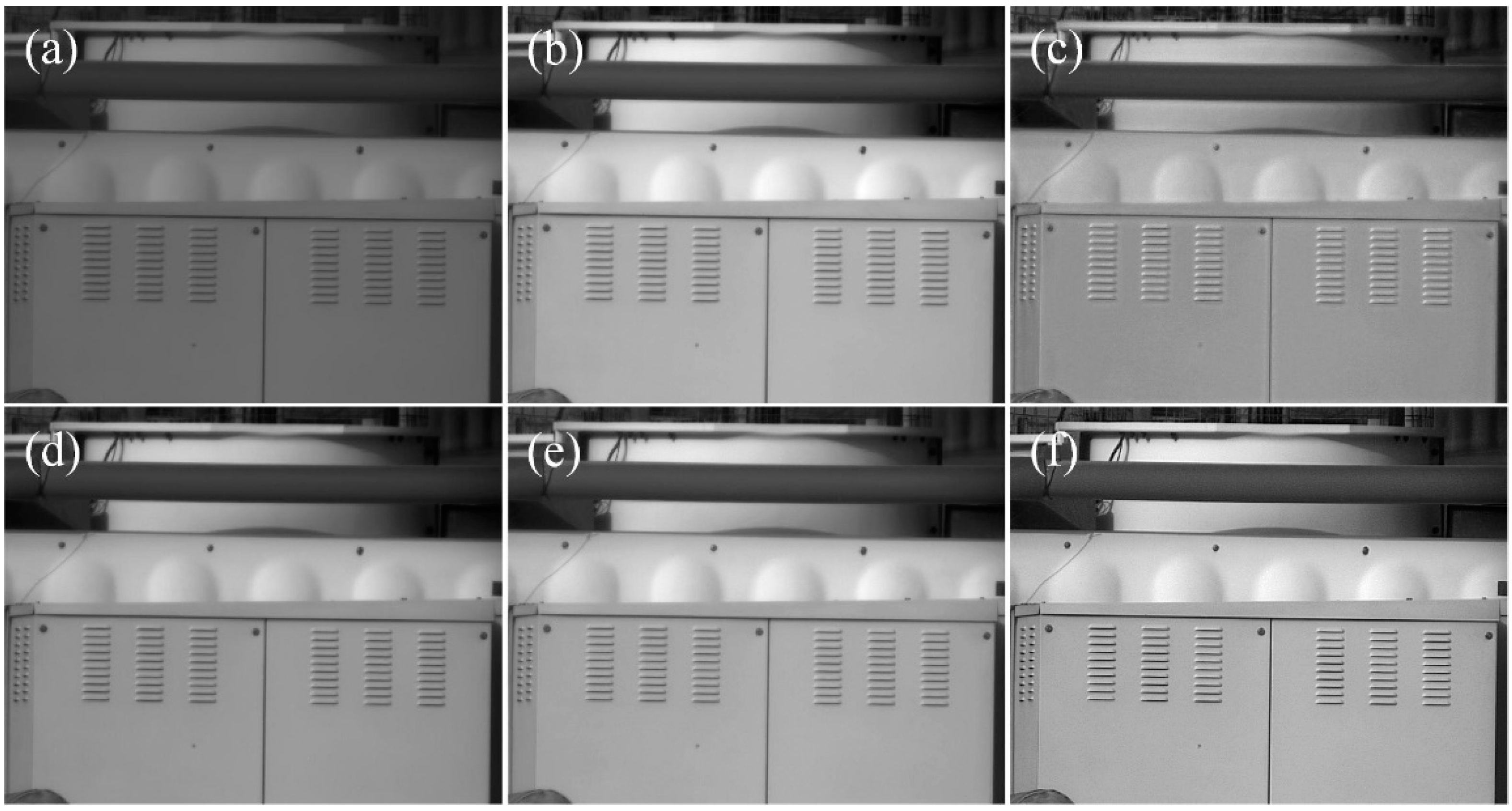

4. Experimental Results Comparison and Discussions

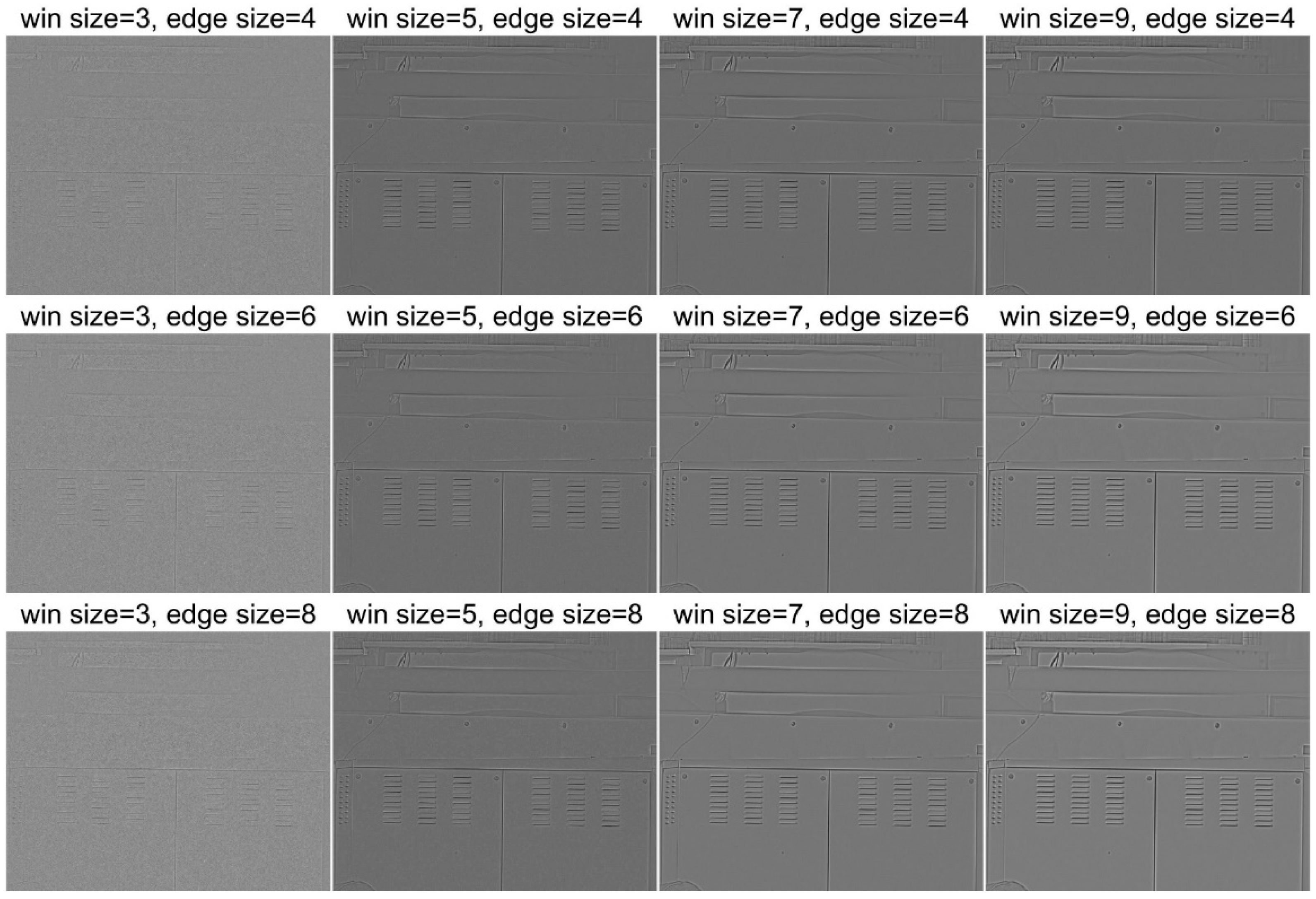

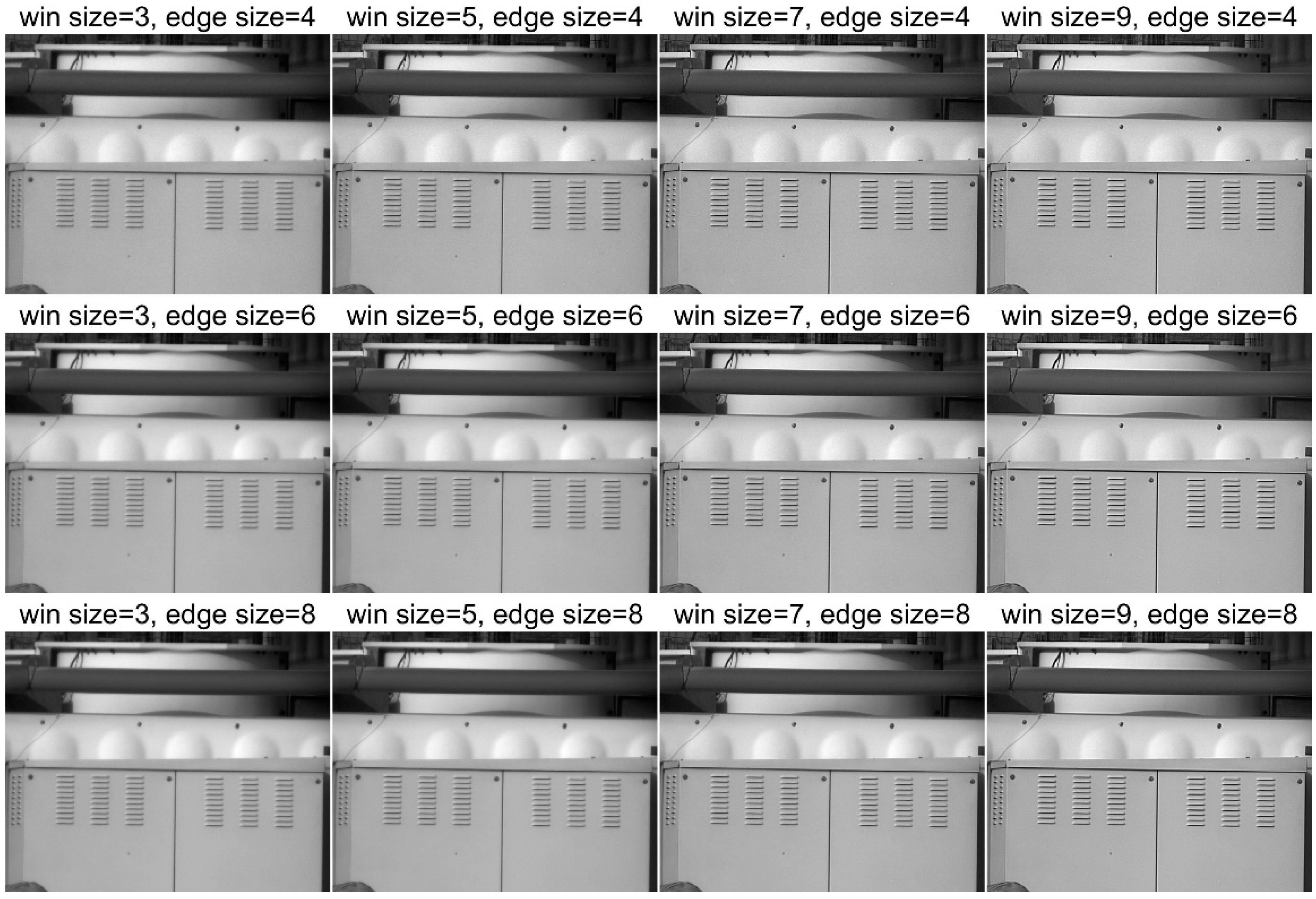

4.1. Performance Comparison of Different Parameters

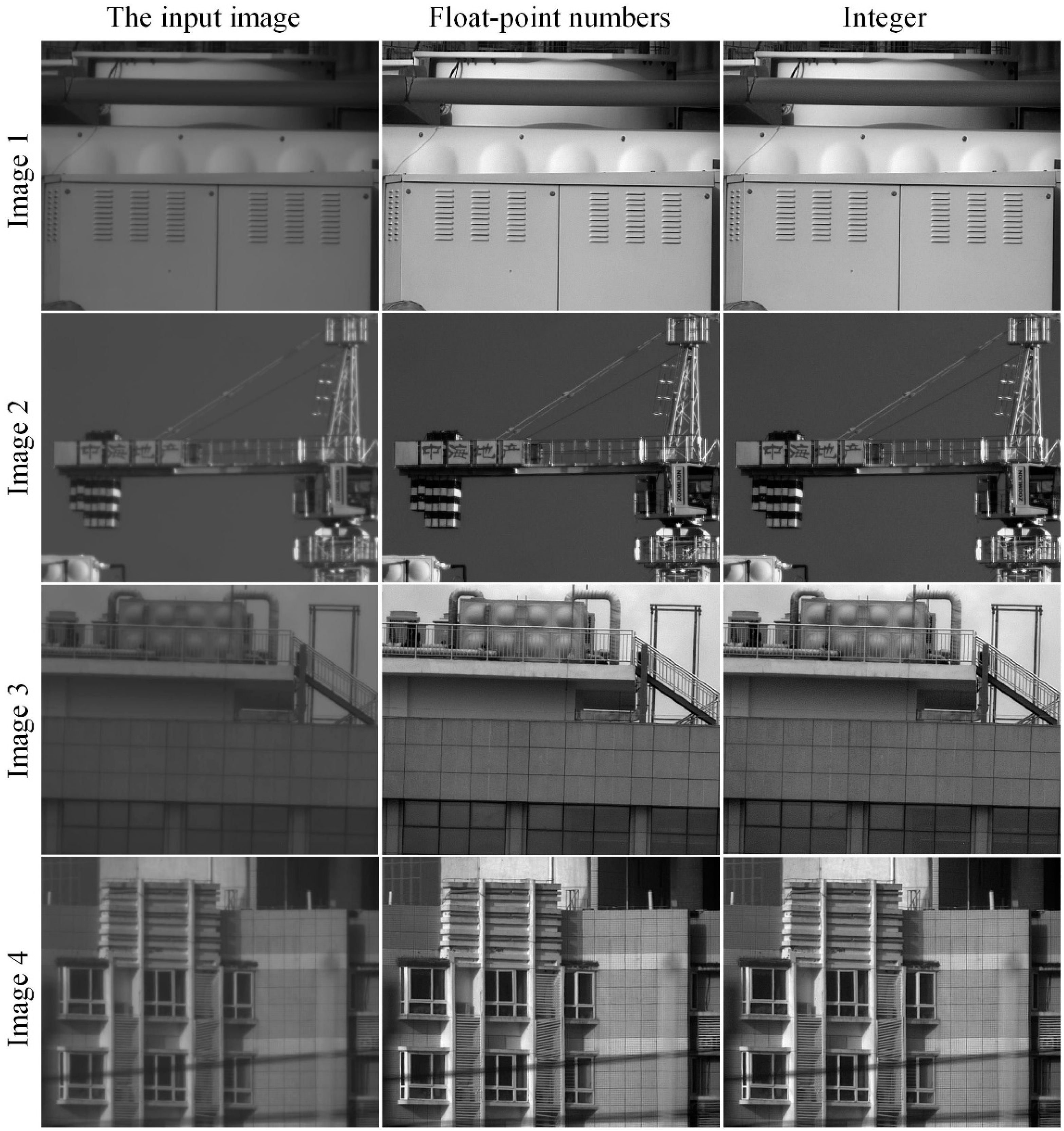

4.2. Performance between CPU and FPGA

4.3. Performance among Different Methods

4.4. Limitations

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Samples | Resolution | Capture Condition | Image Characteristics |
|---|---|---|---|
| Image 1 | 1280 × 1024 | Outdoor/noon/low integration time | Electric box at a distance of 400 m |
| Image 2 | 1280 × 1024 | Outdoor/afternoon/long integration time | Tower cranes around 4.5 km away |
| Image 3 | 1280 × 1024 | Outdoor/afternoon/medium integration time | Building with fence around 1 km away |
| Image 4 | 1280 × 1024 | Outdoor/noon/medium integration time | Building around 1 km away |

|  | CPU | FPGA |
|---|---|---|
| Image 1 | 25.4599 | 25.0362 |
| Image 2 | 23.1785 | 23.9471 |
| Image 3 | 31.7146 | 33.2149 |
| Image 4 | 37.6565 | 37.5983 |
| Mean value | 29.5024 | 29.9491 |

|  | LUT | Slices | FF | BRAM | DSP Slices |
|---|---|---|---|---|---|
| Hardware use | 946 | 311 | 982 | 6 | 31 |
| Total resources of the FPGA | 101,440 | 15,850 | 126,800 | 135 | 420 |
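
The hardware-usage table lists absolute counts. Expressing them as a share of the device makes the footprint easier to judge. The short calculation below only divides the two rows of that table. The names `USED`, `AVAILABLE` and `utilization_percent` are illustrative.

```python
from typing import Dict

# Counts copied from the hardware-usage table above.
USED = {"LUT": 946, "Slices": 311, "FF": 982, "BRAM": 6, "DSP Slices": 31}
AVAILABLE = {"LUT": 101_440, "Slices": 15_850, "FF": 126_800, "BRAM": 135, "DSP Slices": 420}


def utilization_percent(used: Dict[str, int], available: Dict[str, int]) -> Dict[str, float]:
    """Percentage of each FPGA resource type consumed by the design."""
    return {name: 100.0 * used[name] / available[name] for name in used}


if __name__ == "__main__":
    for name, pct in utilization_percent(USED, AVAILABLE).items():
        print(f"{name:<11} {pct:5.2f} %")
```

Every resource type stays below 8 % of the device. DSP slices (about 7.4 %) and BRAM (about 4.4 %) take the largest shares, while LUTs, slices and flip-flops each use under 2 %.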

|  | PE | BF&DDE | GF&DDE | FGF&PE | Proposed |
|---|---|---|---|---|---|
| Image 1 | 13.5015 | 18.5207 | 11.4644 | 15.2999 | 25.0362 |
| Image 2 | 13.1319 | 19.3775 | 11.6492 | 13.0507 | 23.9471 |
| Image 3 | 15.7237 | 18.5607 | 10.9612 | 16.3301 | 32.2149 |
| Image 4 | 18.3325 | 24.8741 | 15.3778 | 20.0062 | 37.5983 |
| Mean value | 15.1724 | 20.3333 | 12.3632 | 16.1717 | 29.6991 |

|  | PE | BF&DDE | GF&DDE | FGF&PE | Proposed |
|---|---|---|---|---|---|
| Image 1 | 14.3 | 207.6 | 252.1 | 106.3 | 18.9 |
| Image 2 | 14.5 | 202.3 | 249.6 | 104.1 | 20.0 |
| Image 3 | 14.6 | 201.4 | 261.4 | 101.2 | 19.7 |
| Image 4 | 14.6 | 196.4 | 247.6 | 102.5 | 20.6 |
| Mean value | 14.5 | 201.9 | 252.7 | 103.5 | 19.8 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, S.; Xiong, Z.; Li, R.; Chen, Y.; Wang, H. High-Performance Enhancement of SWIR Images. Electronics 2022, 11, 2001. https://doi.org/10.3390/electronics11132001