1. Introduction

In recent years, communication technology has developed rapidly, and communication equipment has become increasingly miniaturized, portable, and intelligent. The electromagnetic environment is growing ever more complex, and radio spectrum resources are becoming increasingly scarce. To improve spectrum utilization and alleviate spectrum scarcity, spectrum sharing and dynamic spectrum access have been successively introduced [1]. However, dynamic spectrum access is exposed to a variety of attacks. Spectrum anomaly detection has therefore attracted wide attention and has gradually become a hot topic in cognitive radio. It is an essential component of cognitive radio and spectrum monitoring, enabling the detection of anomalies such as malicious interference, user conflicts, and illegal occupancy in order to improve communication quality [2]. Spectrum anomaly detection research is also essential for radio equipment management and electromagnetic environment situation assessment [3].

Anomaly detection algorithms are generally divided into two types: the first extracts appropriate features to represent events, and the second builds a model that can evaluate whether an event is anomalous [4]. Traditional electromagnetic spectrum anomaly detection algorithms are based on feature extraction. Kullback–Leibler divergence [5], the Brier score [6], compressed sensing [7], random forests [8], hidden Markov models [9], the fractional Fourier transform [10], and machine learning [11] have all been applied to anomaly detection with some success. With the continuous development of artificial intelligence, deep learning has demonstrated powerful data analysis capability, and researchers have applied it to anomaly detection. In these approaches, a deep network encodes the data and reduces its dimensionality to extract features, and a clustering method then performs anomaly detection on those features [12,13]. This feature-extraction-based approach has some anomaly detection capability, but its results depend heavily on the expressiveness of the features and on the output of the clustering algorithm. In addition, biased samples in the training data can bias the clustering algorithm and degrade the anomaly detection results.

Rather than relying on hand-selected features of the data, this paper focuses on building a network model that performs anomaly detection directly. Existing algorithms of this kind discriminate anomalies by reconstruction error or prediction error and have achieved some success. SAIFE is a spectrum anomaly detection algorithm based on an adversarial autoencoder (AAE) [14]; it detects anomalies by evaluating the reconstruction error of the received spectrum data. LSTM networks can detect a variety of abnormal states in the LTE signal spectrum by predicting spectral data, and can detect anomalies across frequency bands through transfer learning [15]. To address abnormal spectrum states in satellite communication, LSTM networks have been used to detect multivariable and subtle abnormal states on the basis of spectrum prediction [16]. Combining an encoder with a GAN yields another anomaly detection network: compared with the conventional model, the added encoder module enables detection based on reconstruction error, but the network complexity is high [17]. With small samples, ACGAN can expand the training set to achieve anomaly detection of spectral data [18]. In general, reconstruction-based anomaly detection algorithms still perform feature selection, extraction, and dimensionality reduction through a deep network, but they remain affected by biased data and are insensitive to low-power spectrum anomalies. In contrast, prediction-based spectral anomaly detection algorithms not only exploit the temporal characteristics of the data but also, given high prediction accuracy, detect low-power anomalies [19,20,21]. However, existing anomaly detection algorithms, whether reconstruction- or prediction-based, do not take full advantage of historical data. They use historical spectral data to build the dataset required for deep learning and train the deep model on data taken from a sliding window, but this approach ignores the spatial and temporal correlation of spectrum data. In fact, most spectrum-using devices are affected by time variation, and the signal intensity in some frequency bands varies periodically with a period of one day. This paper therefore feeds historical data processed at different time scales into the deep model, which achieves higher spectrum prediction accuracy and thus improves the anomaly detection performance of the model.

Researchers have obtained some results on how to feed historical data at different scales into the network and then extract and fuse the resulting features. Existing algorithms [22,23] feed historical data processed at three time scales into different branches of the network in parallel and obtain the prediction through feature fusion. However, feeding historical data from different time scales into the deep model in parallel can introduce redundant data and degrade prediction performance. The parallel input mode also requires many repeated feature extraction modules, which significantly increases the number of algorithm parameters. Moreover, to feed historical data on time scales of days and hours into the deep network, the data must be averaged over time. After this processing, the short-term variations in the data become blurred while the trend becomes more obvious. Because spectrum data are strongly correlated over short periods, predicting the spectrum at the next moment relies mainly on spectrum data from a short preceding period; the historical data processed at larger scales should therefore assist the short-period data in improving prediction performance.

To make full use of multi-timescale historical data and achieve efficient anomaly detection of spectrum data, this paper proposes a spectrum anomaly detection framework based on spatio-temporal network prediction. First, we analyze the spectrum data, construct historical data on time scales of days and hours, and use the sliding window method to build the dataset. Then, we build a deep network capable of fusing temporal features at multiple time scales: it uses an LSTM module to mine the temporal features of the multi-timescale data and combines them with the short-period spectral features extracted by a convolutional network to predict the spectrum. Finally, we analyze and select an identification function that compares the predicted and received data to detect anomalies. Experiments on several frequency bands show that the proposed anomaly detection algorithm based on spatio-temporal network prediction outperforms existing algorithms.

The main contributions are as follows:

We analyze historical spectrum data to establish the periodicity of most frequency bands and the length of the period. The data are processed on time scales of days and hours, and the dataset is generated using a sliding window approach.

We design a spectrum prediction network that makes full use of multi-timescale historical information. The network contains depth feature extraction modules and a multi-timescale feature extraction module, which together effectively extract the features of spectrum data at different time scales.

A comparative analysis of identification functions and anomaly detection experiments in multiple frequency bands verifies the soundness of the proposed anomaly detection framework.

The rest of this paper is organized as follows. In Section 2, the problem of anomaly detection based on spectrum prediction is stated. In Section 3, a spectrum prediction model capable of fusing multi-timescale data is proposed, and each feature extraction module is explained in detail. In Section 4, experimental results are given and the performance is evaluated. Conclusions and suggestions for future work are presented in Section 5.

2. Problem Description of Anomaly Detection Based on Spectrum Prediction

The main task of spectrum anomaly detection is to examine the received spectrum data and determine whether it contains anomalous signals or interference. To describe the anomaly detection problem in detail, we first model the received signal as shown in Equation (1):

$$
y(n) = \begin{cases} s(n) + w(n), & \mathcal{H}_0 \\ s(n) + a(n) + w(n), & \mathcal{H}_1 \end{cases} \qquad n = 1, 2, \dots, N \tag{1}
$$

where $\mathcal{H}_0$ and $\mathcal{H}_1$ denote normal and abnormal data, respectively, $y(n)$ is the received signal, $s(n)$ is the normal user signal, $a(n)$ is the abnormal interference signal, $w(n)$ is noise, and $N$ is the number of received signal samples.

Time-domain variation is an important characteristic of spectrum usage, so we adopt the short-time Fourier transform (STFT) to obtain the time-frequency representation of the received signal, as shown in Equation (2):

$$
Y(t, m) = \sum_{n=1}^{N} y(n)\, g^{*}(n - t)\, e^{-j 2\pi m n / F} \tag{2}
$$

where $g(\cdot)$ is a window function of a particular type and length, $^{*}$ denotes the complex conjugate, $F$ is the sampling rate, and $m$ is the frequency, which depends on $F$. Unlike traditional algorithms, which require complex statistical models and high computational complexity, a deep-learning-based anomaly detection model can learn the data distribution from the source dataset and identify an anomaly when the test data deviate from that distribution.
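As a minimal NumPy sketch of how Equations (1) and (2) turn a sampled signal into the F × T power matrix used below, the block simulates a received signal under both hypotheses and computes a windowed-FFT spectrogram. The Hann window, frame length, hop size, and the 100 Hz and 300 Hz test tones are illustrative assumptions, not the paper's settings. The window is applied in local frame time, which changes only the phase of Equation (2), not the power.

```python
import numpy as np

def stft_power_db(y, frame_len=64, hop=32):
    """Power spectrogram (dB) via a discrete STFT, sketching Equation (2).

    Returns a matrix whose rows are frequency bins and whose columns are
    time frames, i.e. the F x T layout used in the text.
    """
    g = np.hanning(frame_len)                              # window function g(.)
    n_frames = 1 + (len(y) - frame_len) // hop
    frames = np.stack([y[i * hop:i * hop + frame_len] * g
                       for i in range(n_frames)], axis=1)  # (frame_len, T)
    Y = np.fft.rfft(frames, axis=0)                        # one-sided spectrum per frame
    return 10 * np.log10(np.abs(Y) ** 2 + 1e-12)           # power in dB

# Usage: simulate Equation (1) with hypothetical signal parameters.
rng = np.random.default_rng(0)
fs = 1000.0                                    # sampling rate F (Hz)
n = np.arange(4096)
s = np.cos(2 * np.pi * 100 * n / fs)           # normal user signal s(n)
a = 0.5 * np.cos(2 * np.pi * 300 * n / fs)     # tone interference a(n)
w = 0.1 * rng.standard_normal(n.size)          # noise w(n)
S0 = stft_power_db(s + w)       # received signal under H0
S1 = stft_power_db(s + a + w)   # received signal under H1
```

With a 64-point frame the bin spacing is fs/64 ≈ 15.6 Hz, so the interference appears as a raised row near bin 19 of `S1` while the rest of the matrix is almost unchanged.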

We implement anomaly detection through spectrum prediction, so the problem can be described further. Assume that the spectrum data of the received signal consist of measurements at $F$ frequency points over $T$ time slots. In simplified notation, the time-frequency sequence can be written as a matrix

$$
\mathbf{X} = \begin{bmatrix} \mathbf{x}^{1} \\ \mathbf{x}^{2} \\ \vdots \\ \mathbf{x}^{F} \end{bmatrix} = \left[\mathbf{x}_{1}, \mathbf{x}_{2}, \dots, \mathbf{x}_{T}\right] \in \mathbb{R}^{F \times T},
$$

where $\mathbf{x}^{k} = [x^{k}_{1}, x^{k}_{2}, \dots, x^{k}_{T}]$, a row of the matrix, is the data collected at frequency point $k$; $\mathbf{x}_{t} = [x^{1}_{t}, x^{2}_{t}, \dots, x^{F}_{t}]^{\mathsf{T}}$, a column of the matrix, is the data at time $t$; and $(\cdot)^{\mathsf{T}}$ denotes the matrix transpose. The time series at frequency point $k$ is therefore $\mathbf{x}^{k}$, and the data at all frequency points at time $t$ is $\mathbf{x}_{t}$.

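Conventional spectrum prediction uses spectrum data from a short period to predict the data of several future time slots. If the current moment is $t$ and the frequency point is $k$, the data $\mathbf{x}^{k}_{t-s+1:t}$ of the previous $s$ time slots are used to predict the data $\hat{\mathbf{x}}^{k}_{t+1:t+p}$ of the next $p$ time slots, as shown in Formula (3):

$$
\hat{\mathbf{x}}^{k}_{t+1:t+p} = f\left(\mathbf{x}^{k}_{t-s+1:t}\right) \tag{3}
$$

where $f(\cdot)$ is the prediction model.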
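The sliding-window construction behind Formula (3) can be sketched as follows. This is a minimal NumPy illustration, assuming the spectrum is stored as an F × T array with frequency points as rows; it builds all frequency points at once, so row k of each pair is the per-frequency series used in the formula. The function name is hypothetical.

```python
import numpy as np

def sliding_window_pairs(X, s, p):
    """Split an F x T spectrum matrix into (input, target) training pairs.

    Each input holds s consecutive time slots (columns) and each target the
    p slots that immediately follow, as in Formula (3); row k of a pair is
    the series of frequency point k.
    """
    _, T = X.shape
    inputs, targets = [], []
    for t in range(s, T - p + 1):          # t = index of the first predicted slot
        inputs.append(X[:, t - s:t])       # the s most recent slots
        targets.append(X[:, t:t + p])      # the next p slots
    return np.stack(inputs), np.stack(targets)

# Usage on a toy 4 x 10 matrix (4 frequency points, 10 time slots).
X = np.arange(40, dtype=float).reshape(4, 10)
inp, tgt = sliding_window_pairs(X, s=3, p=2)
```

A 10-slot matrix with s = 3 and p = 2 yields 10 − 3 − 2 + 1 = 6 overlapping pairs.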

This paper proposes a prediction method based on a spatio-temporal network, which processes historical data at multiple time scales and combines it with spectral data from a short time period to further improve prediction performance. Denoting the historical data processed at different scales as $\mathbf{X}_{M}$, the prediction model in this paper can be described as:

$$
\hat{\mathbf{x}}^{k}_{t+1:t+p} = f\left(\mathbf{x}^{k}_{t-s+1:t}, \mathbf{X}_{M}\right) \tag{4}
$$

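If $\mathbf{X}$ denotes the actually received data, $\hat{\mathbf{X}}$ the model-predicted data, $D$ the identification function, and $\gamma$ the detection threshold, the anomaly detection process can be described as follows:

$$
D\left(\mathbf{X}, \hat{\mathbf{X}}\right) \underset{\mathcal{H}_0}{\overset{\mathcal{H}_1}{\gtrless}} \gamma \tag{5}
$$

That is, the spectrum is declared anomalous ($\mathcal{H}_1$) when the identification function exceeds the threshold and normal ($\mathcal{H}_0$) otherwise.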
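The decision rule in Equation (5) can be sketched in a few lines. As a hypothetical choice for illustration only (the paper analyzes the identification function separately), D is taken here as the mean squared error between the received and predicted matrices; any other distance can be passed in through the `D` argument.

```python
import numpy as np

def identification_mse(X, X_hat):
    """Hypothetical identification function D: mean squared error."""
    return float(np.mean((np.asarray(X) - np.asarray(X_hat)) ** 2))

def is_anomalous(X, X_hat, gamma, D=identification_mse):
    """Equation (5): True means H1 (anomaly), False means H0 (normal)."""
    return D(X, X_hat) > gamma

# Usage with toy matrices: small prediction error vs. an injected spike.
X_hat = np.zeros((4, 2))
normal = X_hat + 0.1
spiky = normal.copy()
spiky[2, 1] = 3.0
```

Here `is_anomalous(normal, X_hat, gamma=0.05)` returns `False` (MSE 0.01), while the single spike in `spiky` raises the MSE above 1 and flips the decision to `True`.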

3. Spectrum Anomaly Detection Framework

In this paper, we use deep learning to model spectrum prediction. Most existing prediction-based anomaly detection algorithms exploit the temporal characteristics of spectrum data by building networks from LSTM modules, and they can improve prediction through appropriate structural modifications. Other algorithms treat the time-frequency data as images and use convolutional networks to extract deep features. However, both structures have limitations. An LSTM module that relies on temporal features cannot process multidimensional data in parallel, which limits its feature extraction capability, so its performance is poor when the data contain many frequency points and span a long time period. A convolutional model can quickly extract deep features, but when the spectrum data are highly volatile it struggles to capture how the data change, and its prediction performance drops significantly.

In addition, existing algorithms make poor use of historical data and do not adequately explore how spectrum data change on large time scales. We therefore analyze historical spectrum data to establish the change trends of the spectrum under different time scales. Then, drawing on classical network structures such as U-Net [24], we propose a network structure that combines convolutional and LSTM modules. The network consists of two deep feature extraction modules with convolution kernels of different sizes and one multi-timescale feature extraction module, giving it strong feature extraction and fusion capability. Finally, the historical data are processed on scales of days and hours, respectively, and fed into the network together with the short-period spectrum data to obtain the prediction. Anomalies are detected from the difference between the predicted spectrum data and the received data.

3.1. Correlation Analysis of Spectrum Data

First, we briefly describe the data, the simulated anomalies, and the experimental conditions used in this paper. The spectrum data used in the experiments come from two sources: publicly available spectrum data and spectrum data collected with a software radio device. The publicly available data were obtained from RWTH Aachen University, whose researchers conducted rigorous and comprehensive spectrum measurements at two monitoring sites in Aachen, Germany, and one monitoring site in Ma, the Netherlands. The monitored frequency range spans 20 MHz to 6 GHz and comprises four sub-bands, each with a bandwidth of 1.5 GHz and a frequency resolution of 200 kHz. The sweep interval of the measurements is 1.8 s. The monitoring system obtains power spectral density (PSD) values for 1000 frequency points every half hour, and the longest continuous monitoring period is about 14 days [25]. The data cover several commonly used frequency bands, such as GSM900 uplink (UL), GSM900 downlink (DL), and TV, and a total of 6 datasets are generated from the commonly used service bands. In addition, data from multiple frequency bands were collected and processed separately using a software-defined radio (SDR) in Hefei, China, in February 2022. The SDR acquisition lasted only a few days, which is insufficient to support the multi-timescale prediction and anomaly detection framework; however, because these data fluctuate and change rapidly, this dataset serves as an auxiliary check of the model performance. The datasets generated from the active service bands in the two types of spectrum data are listed in Table 1.

Spectral data can be regarded as a special kind of multidimensional time series. Unlike conventional multidimensional time series, spectrum data contain many frequency points, and these frequency points are strongly correlated with one another. Prediction-based spectrum anomaly detection also differs from general time series anomaly detection: as the research status and problem description above show, its inputs and outputs are matrices constructed from time-frequency data, which differ fundamentally in structure from those of time series anomaly detection. Nevertheless, spectrum data share some characteristics of regular time series. Data such as traffic flow, weather conditions, air quality, and room occupancy are influenced by human behaviour or environmental characteristics and are periodic on some time scale. Likewise, most frequency bands are periodic because the behaviour of the spectrum-using devices or the primary users of the band is periodic. We use the Pearson correlation coefficient [26] to analyze the correlation of frequency band data in the time domain; it is calculated as shown in Equation (6).

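$$
\rho_{X,Y} = \frac{\operatorname{cov}(X, Y)}{\sigma_X \sigma_Y} = \frac{E\left[(X - \mu_X)(Y - \mu_Y)\right]}{\sigma_X \sigma_Y} \tag{6}
$$

where $X$ and $Y$ represent two random variables, $\mu_X$ and $\mu_Y$ represent their sample means, $\sigma_X$ and $\sigma_Y$ represent their sample standard deviations, and $\operatorname{cov}(\cdot)$ and $E[\cdot]$ represent the covariance and expectation operators.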
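Equation (6) and the slot-to-slot correlation maps discussed next can be sketched with NumPy. The assumption here is that the maps in Figures 1b and 2b correlate pairs of time slots (columns of the F × T matrix); `np.corrcoef` with `rowvar=False` computes exactly that matrix, and the hand-written `pearson` function mirrors Equation (6) term by term.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation coefficient, Equation (6)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    cov = np.mean((x - x.mean()) * (y - y.mean()))   # cov(X, Y)
    return float(cov / (x.std() * y.std()))          # divided by sigma_X * sigma_Y

def slot_correlation_matrix(X):
    """T x T matrix of Pearson coefficients between the columns (time slots)
    of an F x T spectrum matrix (assumed layout of Figures 1b and 2b)."""
    return np.corrcoef(X, rowvar=False)
```

Using population standard deviations together with a population covariance gives the same coefficient as the sample versions, because the normalization factors cancel.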

Taking GSM1800 uplink and GSM1800 downlink as examples, we analyze the periodicity of the spectrum data using detrending and the Pearson correlation coefficient; the results are shown in Figure 1 and Figure 2. In Figure 1b and Figure 2b, approximately 800 sampled time points represent the spectrum variation within one day. Continuous block-shaped regions appear in both Figure 1b and Figure 2b, indicating correlated variation of the data on a daily scale. Both frequency bands also contain many points with correlations close to 1, and some points remain highly correlated even though they are far apart in time. This indicates that historical spectrum data exhibit complex similarities and correlations on different time scales. In Figure 1c and Figure 2c, a clear daily cycle appears after detrending. The energy of the GSM1800 downlink band varies considerably, over a range of about 5 dBm. The signal strength rises and then falls over the course of the day, which is consistent with the habits of cell phone users. The above analysis shows that some frequency bands change periodically and that historical data are highly correlated with the current spectrum data. In addition, processing historical data at different time scales reveals the temporal and magnitude patterns of spectrum changes. These correlations and change patterns give the deep model more information, improving its prediction of the current spectrum state and thus its anomaly detection performance.
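The detrend-then-correlate analysis can be sketched on a synthetic series. This is a minimal illustration, assuming a linear trend and a hypothetical sampling rate of 48 samples per day; the synthetic daily cycle, drift, and noise stand in for real band power and are not the paper's data.

```python
import numpy as np

def detrend_linear(x):
    """Remove the least-squares straight-line trend from a 1-D series."""
    x = np.asarray(x, dtype=float)
    t = np.arange(x.size)
    slope, intercept = np.polyfit(t, x, 1)
    return x - (slope * t + intercept)

def lag_correlation(x, lag):
    """Pearson correlation between x[t] and x[t + lag]."""
    return float(np.corrcoef(x[:-lag], x[lag:])[0, 1])

# Usage on a synthetic band power series: 14 days at a hypothetical
# 48 samples per day, with a daily cycle, a slow drift, and noise.
rng = np.random.default_rng(0)
per_day = 48
t = np.arange(14 * per_day)
x = 5 * np.sin(2 * np.pi * t / per_day) + 0.01 * t + 0.5 * rng.standard_normal(t.size)
d = detrend_linear(x)
one_day = lag_correlation(d, per_day)         # near +1: one-day period
half_day = lag_correlation(d, per_day // 2)   # near -1: opposite phase
```

A strong positive correlation at a one-day lag, together with a strong negative one at half a day, is the numerical signature of the daily cycle visible in the detrended curves.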

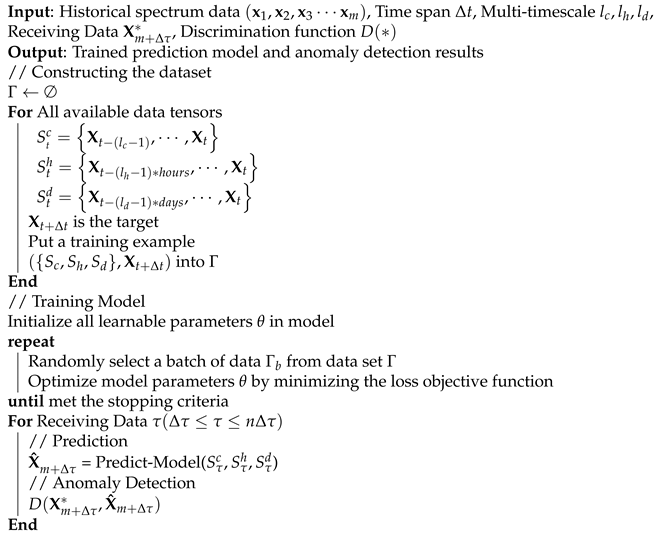

3.2. Algorithmic Framework

The overall framework of the proposed spectrum anomaly detection algorithm is shown in Figure 3. It can be divided into two parts: spectrum prediction and anomaly detection. First, we process the received historical data and extract the spectrum data at the short-term, hourly, and daily scales. Then, these data are fed into a spectrum prediction network containing different feature extraction modules to predict the spectrum. Finally, the identification function calculates the difference between the predicted data and the actual received data; when the difference exceeds a preset threshold, the spectrum is considered anomalous.

Following the previous description, the spectral history data at multiple time scales are represented as matrices. We use $\mathbf{X}_{S}$ to denote the spectrum data of a short period, $\mathbf{X}_{H}$ the spectrum data at the hourly scale, and $\mathbf{X}_{D}$ the spectrum data at the daily scale. The data are processed with sliding windows, and the continuous matrix is converted into tensor form to generate the training dataset. The deep network for spectrum prediction in this paper consists of depth feature extraction modules and a multi-scale feature extraction module, which extract different features of the data; the extracted features are then selected and fused to predict the spectrum data. Using $f_{D}(\cdot)$ and $f_{M}(\cdot)$ as the representation functions of the depth feature extraction module and the multi-scale feature extraction module, respectively, feature fusion and spectrum prediction can be expressed as Equation (7):

$$
\hat{\mathbf{X}} = f_{D}\left(\mathbf{X}_{S}; \theta\right) + f_{M}\left(\mathbf{X}_{C}; \theta\right) \tag{7}
$$

where $\theta$ denotes the parameters optimized during learning and $\mathbf{X}_{C}$ denotes the combination of data at the different time scales. The spectrum prediction process is shown in Algorithm 1.

Algorithm 1. Spectrum anomaly detection algorithm using deep learning.

![Electronics 11 01770 i001]()

3.3. Feature Extraction Module

3.3.1. Depth Feature Extraction Module

If the time-frequency data matrix is treated as an image, the spectral data prediction problem becomes an image prediction problem. Convolutional neural networks (CNNs) have powerful image processing capability and can effectively extract deep information from time-frequency data. A CNN is a class of feedforward neural network that performs convolution and has a deep structure with powerful representation learning capability. Through convolution, a CNN can change the dimensionality of the data and extract high-dimensional features of the input signal, which capture the latent patterns of data change better than the original signal does. The size of the convolution kernel determines the dimensionality of the extracted features, and stacking convolutional layers yields deeper features.

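The basic convolution operation and the Rectified Linear Unit (ReLU) activation function are given in Equations (8) and (9):

$$
\mathbf{Y} = f\left(\mathbf{X} \otimes \mathbf{W}\right) \tag{8}
$$

$$
f(x) = \max(0, x) = \begin{cases} x, & x > 0 \\ 0, & x \le 0 \end{cases} \tag{9}
$$

where $f$ denotes the activation function, $\mathbf{X}$ denotes the input matrix, $\mathbf{W}$ is the convolution kernel matrix, $\otimes$ denotes the convolution operation, and $\mathbf{Y}$ is the output feature map. For an input $x$, the output of ReLU equals the input when $x > 0$ and is 0 when $x \le 0$.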
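Equations (8) and (9) can be sketched directly in NumPy. The sketch assumes "valid" padding and stride 1, and, like CNN layers in deep learning frameworks, it slides the kernel without flipping it (cross-correlation); it is an illustration of the operation, not the paper's Keras layer.

```python
import numpy as np

def relu(x):
    """Equation (9): element-wise max(0, x)."""
    return np.maximum(0, x)

def conv_relu(X, W):
    """Equation (8) with 'valid' padding and stride 1.

    Slides W over X without flipping it (cross-correlation, as CNN layers
    do), sums the element-wise products, then applies ReLU.
    """
    kh, kw = W.shape
    out = np.empty((X.shape[0] - kh + 1, X.shape[1] - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(X[i:i + kh, j:j + kw] * W)
    return relu(out)

# Usage: a 2 x 2 kernel (the size used in depth feature module 1) on a 3 x 3 input.
X = np.arange(9, dtype=float).reshape(3, 3)
Y_up = conv_relu(X, np.array([[-1.0, 0.0], [0.0, 1.0]]))    # positive responses kept
Y_down = conv_relu(X, np.array([[1.0, 0.0], [0.0, -1.0]]))  # negative responses zeroed
```

Each 2 × 2 kernel here measures the diagonal change between neighbouring cells; ReLU keeps the response in one direction and suppresses it in the other.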

A depth feature extraction module is therefore constructed from convolutional layers; its structure is shown in Figure 4. The module uses convolution and pooling operations to progressively increase the data dimension and obtain deep features. A merging operation combines feature maps of the same dimensionality from before and after the convolutional transformation, which avoids the information loss caused by pooling. Compared with the traditional U-Net structure, this module has fewer layers and a simpler structure. The overall framework of the proposed algorithm includes two depth feature extraction modules. In depth feature module 1, the convolution kernel size is (2, 2); in depth feature module 2, the kernel size shrinks from (7, 7) to (3, 3) over the convolution stages and returns to (7, 7) over the deconvolution stages. Kernels of different sizes give the depth modules different receptive fields and therefore different deep features: the small kernels in module 1 capture the association between adjacent data, while the large kernels in module 2 enlarge the receptive field to capture features among spectral data within a wider region.
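The effect of kernel size on the receptive field can be quantified with the standard recursion r_l = r_{l-1} + (k_l − 1)·j_{l-1}, j_l = j_{l-1}·s_l. The two layer stacks below are hypothetical encoder halves (stride-1 convolutions interleaved with 2 × 2, stride-2 pooling); the paper does not give these exact sequences, so the numbers only illustrate the trend.

```python
def receptive_field(layers):
    """Receptive field, in input cells along one axis, of stacked layers.

    layers: list of (kernel_size, stride) pairs, applied in order.
    r is the receptive field and j the cumulative stride (jump).
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j
        j *= s
    return r

# Hypothetical stacks: conv (stride 1) and 2 x 2 pooling (stride 2).
module1 = [(2, 1), (2, 2), (2, 1), (2, 2), (2, 1)]  # 2 x 2 kernels throughout
module2 = [(7, 1), (2, 2), (5, 1), (2, 2), (3, 1)]  # kernels shrinking 7 -> 3
rf1 = receptive_field(module1)
rf2 = receptive_field(module2)
```

With the same depth, each output cell of the 2 × 2 stack sees 11 input cells per axis, while the 7 → 3 stack sees 26, which is why module 2 captures features over a wider time-frequency region.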

3.3.2. Multi-Timescale Feature Extraction Module

Convolutional modules have strong feature extraction ability, but spectrum data have a particular time-frequency correlation, so further improving the network also requires extracting the temporal characteristics of the data. In addition, how to use multi-timescale data to enhance the algorithm is one of the key issues addressed in this paper. Most existing algorithms construct a base model, feed the spectrum data of each scale into a separate copy of the base model arranged in parallel, and finally merge the features of the different branches to predict the spectrum.

With this approach, adding multi-timescale historical data greatly increases the number of model parameters, since every time scale needs its own branch, and it also tends to produce redundant feature information. We instead construct a multi-timescale feature extraction module that extracts temporal features from multi-timescale data by jointly using CNN and LSTM modules.
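A rough parameter count illustrates the cost of parallel branches. The three-layer base model, its channel widths, and the "stack the scales as input channels" alternative are hypothetical choices for illustration, not the paper's architecture; the point is only that one branch per time scale multiplies the parameter count by the number of scales, whereas a shared module grows only in its first layer.

```python
def conv_params(k, c_in, c_out):
    """Weights plus biases of a k x k 2-D convolutional layer."""
    return k * k * c_in * c_out + c_out

def base_model_params(c_in, channels=(32, 64, 64), k=3):
    """Parameters of a hypothetical three-layer convolutional base model."""
    total, prev = 0, c_in
    for c in channels:
        total += conv_params(k, prev, c)
        prev = c
    return total

n_scales = 3                                     # short-term, hourly, daily
parallel = n_scales * base_model_params(c_in=1)  # one branch per time scale
shared = base_model_params(c_in=n_scales)        # scales stacked as channels
```

In this toy setting the parallel design needs 167,232 parameters against 56,320 for the shared one.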

An LSTM network is a special kind of recurrent neural network that alleviates the vanishing and exploding gradient problems during training; for long sequences in particular, it has clear performance advantages over the basic RNN. An LSTM contains three gates, the input gate, the forget gate, and the output gate, which control the transmission of the cell state, retaining information that needs to be remembered for a long time and discarding unimportant information. A single LSTM module is shown in Figure 5, where red denotes the forget gate, green the input gate, and blue the output gate.

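The forget factor $f_t$ of the forget gate determines how much of the previous cell state $c_{t-1}$ is preserved in the current cell state $c_t$; the input gate $i_t$ determines how much of the current network input $x_t$ is preserved in the cell state $c_t$; and the output gate $o_t$ determines how much of $c_t$ is passed to the current LSTM output $h_t$, as given in Equation (10):

$$
\begin{aligned}
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned} \tag{10}
$$

where $W_f$, $W_i$, $W_c$, and $W_o$ are weights; $b_f$, $b_i$, $b_c$, and $b_o$ are bias terms; $\sigma$ is the gate activation function of the network (the sigmoid); $\tilde{c}_t$ is the temporary cell state; $x_t$ is the current input; $c_{t-1}$ is the cell state of the previous cell; $h_{t-1}$ is the output of the previous cell; and $\odot$ denotes element-wise multiplication. The LSTM can efficiently learn the intrinsic patterns of the data to predict the spectrum.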
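One time step of Equation (10) can be written out in NumPy. This is a minimal sketch, assuming the four gate weight matrices are stacked into a single `W` in the order forget, input, candidate, output, and that every gate sees the concatenation [h_{t-1}, x_t]; framework implementations such as Keras use their own internal gate ordering.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step following Equation (10).

    W (4H x (H + D)) maps [h_{t-1}, x_t] to the stacked pre-activations of
    the forget gate, input gate, candidate state, and output gate.
    """
    H = h_prev.size
    z = W @ np.concatenate([h_prev, x_t]) + b
    f_t = sigmoid(z[0:H])                # forget gate
    i_t = sigmoid(z[H:2 * H])            # input gate
    c_tilde = np.tanh(z[2 * H:3 * H])    # temporary (candidate) cell state
    o_t = sigmoid(z[3 * H:4 * H])        # output gate
    c_t = f_t * c_prev + i_t * c_tilde   # new cell state
    h_t = o_t * np.tanh(c_t)             # new output
    return h_t, c_t

# Usage: run a hypothetical 4-unit cell over 5 spectrum columns of 3 bins.
rng = np.random.default_rng(0)
H, D = 4, 3
W = 0.1 * rng.standard_normal((4 * H, H + D))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.standard_normal((5, D)):
    h, c = lstm_step(x_t, h, c, W, b)
```

With all-zero weights every gate outputs 0.5, so the cell keeps half of its previous state and adds nothing new, a quick sanity check of the update rule.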

In spectrum prediction, the spectrum data received over a short period provide the vast majority of the key feature information, and the features extracted from multi-timescale historical data are an effective supplement that enhances the algorithm's performance. Based on this idea, we construct a multi-scale feature extraction module consisting of convolutional layers and an LSTM network, a typical 'CNN+LSTM' structure. The parallel multi-timescale spectral data are fed into three consecutive convolutional layers that change their dimensionality; convolving the data from different time scales together helps discover correlations among the data in a high-dimensional space and select the more effective features from the multi-timescale data. The LSTM network then extracts temporal features from the high-dimensional output of the convolutional layers.

The network thus extracts several kinds of features from the spectrum data through the depth feature extraction modules and the multi-timescale feature extraction module. Finally, the outputs of the feature extraction modules are fused by summation to complete the spectrum prediction.

4. Experiment

4.1. Dataset and Anomaly Simulation

In practice, many situations can cause anomalies in the electromagnetic spectrum, such as occupancy by illegal users and interference from non-cooperative signals. In addition, interference between electromagnetic equipment components and temperature variations can affect the received electromagnetic data [27,28]. Most anomaly detection studies have difficulty obtaining standard anomaly data because anomalies occur rarely and at random, which limits experimental validation. To solve this problem, we artificially add anomalies to clean electromagnetic spectrum data. Following [13,16], we generate four spectrum anomalies: (i) tone anomaly: continuous interference consisting of one or more sinusoids with random center frequencies; (ii) pulse anomaly: a time-pulsed signal with variable start and end times; (iii) chirp anomaly: a chirp signal with variable center frequency and sweep rate; and (iv) wpulse anomaly: a wideband signal that pulses over the whole frequency spectrum. To test the generality of the algorithm, we increase the variety of anomalous states: in addition to the four anomalies above, any two of them are randomly selected and mixed, bringing the number of anomalous states to 10. Figure 6 shows simulated spectrum anomalies using TV band (614–698 MHz) data as an example; the horizontal axis is frequency and the vertical axis is the spectrum sample index. The insertion locations, durations, and other parameters of the four basic disturbances are random, which makes anomaly detection more difficult.
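The anomaly simulation can be sketched on a spectrogram matrix with rows as time samples and columns as frequency bins, matching the axes of Figure 6. The shapes chosen below (a constant-bin tone, a 3-bin burst, a linear bin sweep, a full-band burst), the added power level, and the random ranges are hypothetical stand-ins for the signal-level anomalies of [13,16]; the pairing step reproduces the count of 4 + C(4, 2) = 10 anomalous states.

```python
import itertools
import numpy as np

def add_anomaly(P, kind, rng, power=10.0):
    """Return a copy of the T x F linear-power spectrogram P (rows = time
    samples, columns = frequency bins) with one simulated anomaly added."""
    P = P.copy()
    T, F = P.shape
    t0 = int(rng.integers(0, T // 2))          # random start row
    t1 = int(rng.integers(t0 + 1, T + 1))      # random end row (exclusive)
    if kind == "tone":      # continuous sinusoid(s): fixed bins, all rows
        bins = rng.choice(F, size=int(rng.integers(1, 4)), replace=False)
        P[:, bins] += power
    elif kind == "pulse":   # narrowband burst between t0 and t1
        f = int(rng.integers(0, F - 2))
        P[t0:t1, f:f + 3] += power
    elif kind == "chirp":   # frequency sweeps at a random rate
        f0, rate = int(rng.integers(0, F)), int(rng.integers(1, 4))
        rows = np.arange(t0, t1)
        P[rows, (f0 + rate * (rows - t0)) % F] += power
    elif kind == "wpulse":  # wideband burst over the whole band
        P[t0:t1, :] += power
    else:
        raise ValueError(f"unknown anomaly type: {kind}")
    return P

BASE = ("tone", "pulse", "chirp", "wpulse")
# Four single anomalies plus every pair of them: 4 + C(4, 2) = 10 types.
ANOMALY_TYPES = [(k,) for k in BASE] + list(itertools.combinations(BASE, 2))

def simulate(P, types, rng):
    """Apply every anomaly in `types` (one entry of ANOMALY_TYPES) to P."""
    for kind in types:
        P = add_anomaly(P, kind, rng)
    return P
```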

It should be noted that there are many types of electromagnetic spectrum anomalies, and we have simulated only some of them. Detection relies on the difference between the actual spectrum and the predicted spectrum. Therefore, in principle, any anomaly, whether or not it is among the simulated types, is classified as an anomaly as long as the difference between the actual and predicted spectrum exceeds the threshold.

4.2. Prediction Performance Comparison

In this paper, we construct a network model that fuses deep features to predict spectrum data efficiently, and on this basis we detect spectrum anomalies. We validate the algorithm in several frequency bands and demonstrate its superior performance mainly through the following three experiments.

Since the prediction performance of a model directly affects anomaly detection, experiments are conducted in multiple frequency bands of the dataset to verify the prediction performance of each model. The comparison models fall into two categories. The first category does not use multi-timescale data and comprises RNN, U-Net [24], LSTM [25], ConvLSTM [29], CNN, and three variant models that do not use multi-timescale data: STS-PredNets-C [22], DTS-Resnet-C [23], and Proposed-C (proposed in this paper). The second category uses multi-timescale data and comprises STS-PredNets [22], DTS-Resnet [23], the proposed algorithm, and two of its variants, Proposed-OD (day scale only) and Proposed-OH (hour scale only).

The first category of models does not process data at different time scales: the model input is the historical data of the most recent $m$ time slots, and the output is the forecast for the next $n$ time slots. The second category uses multi-timescale data as input. For a sample generated at the current moment, the input contains the most recent period of historical data (the same as for the first category), the mean-processed historical data of the last three days, and the mean-processed historical data of the last three hours. Here, $m$ denotes the number of historical time slots, $n$ the number of predicted time slots, and $k$ the number of frequency points. In this experiment, we set , , and .
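One way to build such a multi-timescale sample is sketched below. The source does not spell out the "mean processing", so this sketch assumes (hypothetically) that each of the last three hours and each of the last three days is averaged over its slots into one column; `slots_per_hour` and `slots_per_day` are illustrative parameters, set here for half-hour slots.

```python
import numpy as np

def multiscale_sample(X, t, m, slots_per_hour, slots_per_day, n_hours=3, n_days=3):
    """Build one multi-timescale input from an F x T spectrum matrix X,
    using only the columns before index t.

    Returns X_S (F x m), the m most recent slots; X_H (F x n_hours), the
    mean of each of the last n_hours hours; and X_D (F x n_days), the mean
    of each of the last n_days days. Columns are ordered oldest first.
    """
    if t < max(m, n_hours * slots_per_hour, n_days * slots_per_day):
        raise ValueError("not enough history before t")
    X_S = X[:, t - m:t]
    X_H = np.stack([X[:, t - (h + 1) * slots_per_hour:t - h * slots_per_hour].mean(axis=1)
                    for h in reversed(range(n_hours))], axis=1)
    X_D = np.stack([X[:, t - (d + 1) * slots_per_day:t - d * slots_per_day].mean(axis=1)
                    for d in reversed(range(n_days))], axis=1)
    return X_S, X_H, X_D

# Usage: toy matrix whose value equals its column index, half-hour slots.
X = np.tile(np.arange(200, dtype=float), (2, 1))
X_S, X_H, X_D = multiscale_sample(X, t=150, m=5, slots_per_hour=2, slots_per_day=48)
```

Because averaging smooths each hour or day into a single column, `X_H` and `X_D` carry the trend information while `X_S` keeps the short-term detail, in line with the motivation given in the Introduction.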

In the experiments, the RNN and LSTM models each use 256 hidden units, and both contain three core recurrent modules and three fully connected layers. The CNN contains five convolutional layers with 256 channels each. All networks are implemented in Python with the Keras deep learning framework. Training minimizes the loss function with the Adam optimizer rather than stochastic gradient descent (SGD), using the mean square error (MSE) as the loss function:

$$
\mathrm{MSE} = \frac{1}{L}\sum_{i=1}^{L}\left(x_i - \hat{x}_i\right)^2
$$

where $x_i$ and $\hat{x}_i$ are the true and predicted values and $L$ is the number of predicted values.

Mean absolute error (MAE) and root mean square error (RMSE) are used to evaluate the prediction results; smaller MAE and RMSE values indicate better prediction performance.
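For reference, the three error measures can be computed as below; this is a plain NumPy sketch of the standard definitions, independent of the Keras implementation used in the experiments.

```python
import numpy as np

def mse(y, y_hat):
    """Mean square error (the training loss)."""
    return float(np.mean((np.asarray(y, dtype=float) - np.asarray(y_hat, dtype=float)) ** 2))

def mae(y, y_hat):
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y, dtype=float) - np.asarray(y_hat, dtype=float))))

def rmse(y, y_hat):
    """Root mean square error, in the same units as the data."""
    return float(np.sqrt(mse(y, y_hat)))

# Usage: one large error vs. uniform small errors with the same RMSE.
y = [1.0, 2.0, 3.0, 4.0]
one_big = [1.0, 2.0, 3.0, 6.0]   # errors 0, 0, 0, 2
uniform = [2.0, 3.0, 4.0, 5.0]   # errors 1, 1, 1, 1
```

Both predictions have an RMSE of 1.0, but the MAE is 0.5 for `one_big` and 1.0 for `uniform`: RMSE weights a single large error more heavily, which is why the two metrics are reported together.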

Table 2 shows the prediction results of the first type of model on the publicly available Aachen spectrum dataset, and Table 3 shows the corresponding results on the SDR acquisition dataset. The prediction results of the second type of model on the Aachen open spectrum dataset are shown in Table 4. To make model performance easy to compare, the best metric for each frequency band is shown in bold.

Table 2 shows that the Proposed-C model achieves the smallest MAE and RMSE values in five bands, showing the most robust prediction ability. The data distribution of the GSM1800UL band is simpler, so the prediction performance of the models differs little there, and the LSTM model has the smallest RMSE value. We also found that, except in the ISM band, the convolution-based models (CNN and U-Net) outperform the recurrent neural network-based models on average. The strong-signal spectrum in the Aachen open dataset fluctuates less, so the deep features extracted by convolution are more expressive than the time-series features.

In Table 3, Proposed-C achieves the best performance in all four frequency bands, with an average improvement of about 4.1% in MAE and 4.4% in RMSE over the best baseline. On this dataset, the models based on recurrent neural network structures outperform those based on convolutional modules: the data collected by the SDR are more volatile and more strongly correlated in time, so time-series features are more critical to the prediction results. Among the three variants that do not use multi-timescale data, the variant of the proposed model outperforms the other two. In particular, the poor prediction performance of STS-PredNets-C is attributed to its structure being poorly suited to single-timescale spectral input. Proposed-C shows strong prediction performance on both datasets because its structure fuses the deep convolutional features with the temporal features, which makes it more robust.

Analysis of the results in Table 2 and Table 4 shows that adding multi-timescale data effectively improves the prediction performance of all three compared algorithms. In Table 4, the prediction performance of the proposed model is better than that of the other two algorithms. The difference is most apparent in the GSM900 DL band, where the algorithm in this paper reduces MAE by 5.12% and 8.55%, and RMSE by 2.4% and 4.2%, compared to DTS-Resnet and STS-PredNets, respectively. In addition, comparing the results in Table 2 and Table 4, we find that adding multi-timescale data reduces the MAE of the Proposed model by about 2.3% and its RMSE by about 1.8% on average. These results demonstrate the effectiveness of multi-timescale data. From another perspective, multi-timescale information enriches the structure of the model and adds information sources, enabling the model to learn the features of the data more effectively and thereby improving prediction performance.
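The percentage reductions quoted above are presumably relative reductions of an error metric with respect to a baseline model; the formula is not stated in the text, so the sketch below is an assumption. It shows the per-band arithmetic and one way of averaging across bands.

```python
from statistics import fmean


def relative_reduction(baseline, proposed):
    """Percentage by which `proposed` lowers an error metric relative to `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline error must be positive")
    return 100.0 * (baseline - proposed) / baseline


def mean_relative_reduction(baselines, proposed):
    """Average of per-band reductions, e.g. one MAE value per frequency band."""
    if len(baselines) != len(proposed):
        raise ValueError("need one value per band for both models")
    return fmean([relative_reduction(b, p) for b, p in zip(baselines, proposed)])
```

Averaging per-band percentages gives each band equal weight; computing the reduction of the band-averaged error instead would weight bands with large errors more heavily, so the two can differ.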

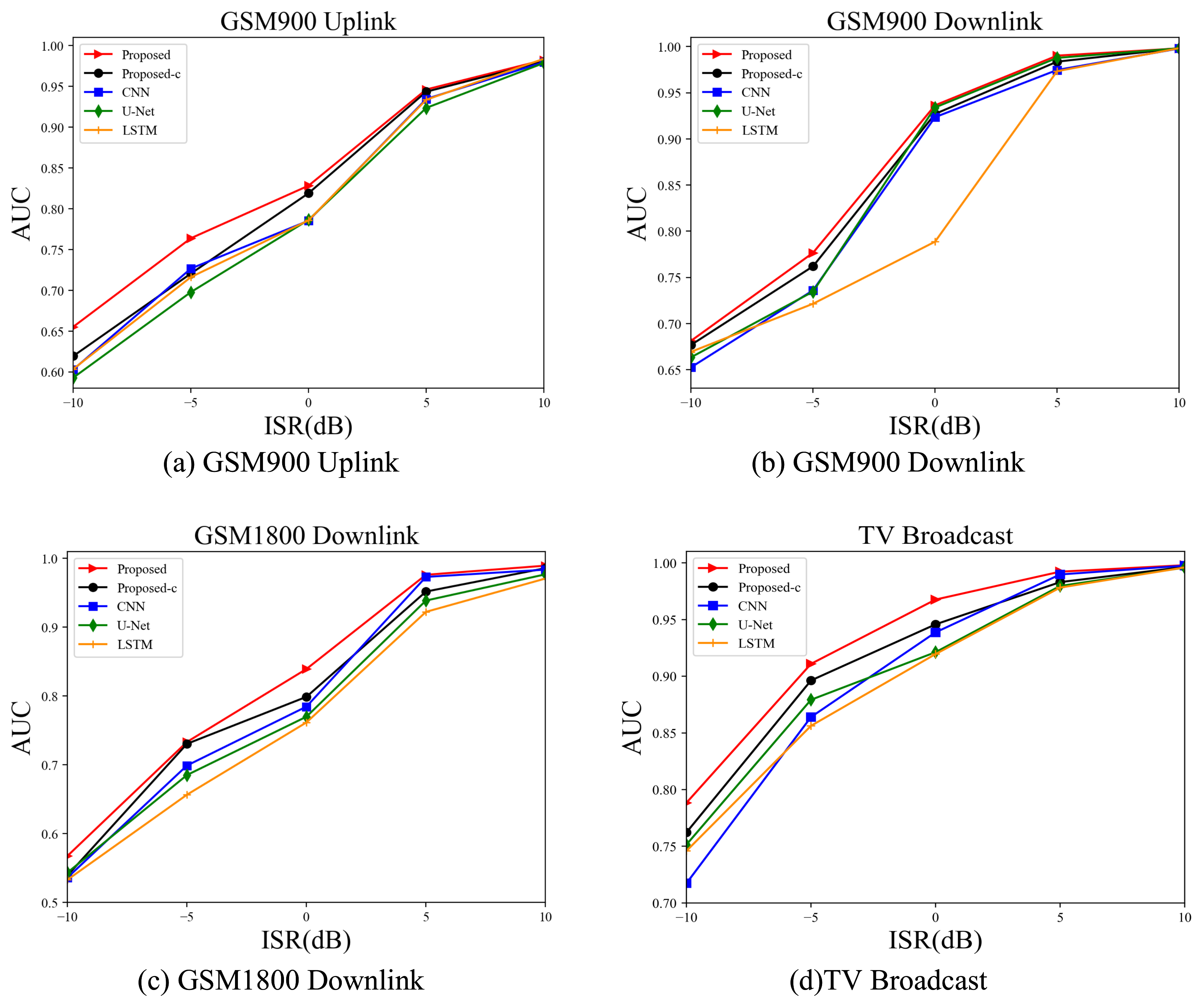

4.3. Effect of Discriminant Function on Anomaly Detection

Analysis of the anomaly detection framework shows that its performance is jointly determined by the prediction model and the discriminant function. Conventional prediction-based anomaly detection algorithms use MSE as the discriminant function. This metric describes the overall error between the predicted data and the actual received data well and yields relatively good anomaly detection performance. However, an anomaly detection model using MSE as the discriminant function is often not sensitive enough to subtle anomalies. Especially when the power of the anomalous signal is small, the error introduced by the anomalous data may be masked by the prediction error, making the MSE-based model less effective. To further improve the model's performance, this paper compares multiple discriminant functions to find one better suited to spectrum anomaly detection. The compared discriminant functions include MSE, MAE, dynamic time warping (DTW) [30], Ramp-score [31], and the Hausdorff distance. DTW measures the similarity of two sequences while allowing local shifts in time, the Ramp-score can effectively find anomalies in continuous sequences, and the Hausdorff distance describes the distance between two sequences viewed as point sets in space.

Since lowering the detection threshold increases the false alarm rate as well as the detection rate, this paper uses the receiver operating characteristic (ROC) curve and the area under the curve (AUC) as metrics to evaluate the anomaly detection performance of the model. The closer the ROC curve is to the upper-left corner, the better the detection and the larger the AUC. A model with perfect detection has an ROC curve that reaches the point (0, 1), and its AUC is 1. Experiments are conducted with the Proposed model and CNN while varying the interference-to-signal power ratio (ISR) over [−10 dB, 10 dB].
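The ROC curve is traced by sweeping the detection threshold over the discriminant scores and recording the false positive rate and true positive rate at each setting; the AUC is then the area under those points. A minimal, dependency-free sketch, assuming labels of 1 for anomalous and 0 for normal samples and that a sample is flagged when its score is at least the threshold:

```python
def roc_curve(scores, labels):
    """ROC points (FPR, TPR), sweeping the threshold over every distinct score."""
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need both normal and anomalous samples")
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and y == 0)
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve by the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area
```

If every anomalous sample scores higher than every normal one, the curve passes through (0, 1) and the AUC is 1; scores that carry no information give an AUC near 0.5.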

Figure 7 shows the AUC of each discriminant function as the ISR varies. Panels (a), (b), and (c) show the results of the algorithm in this paper for the GSM900UL, GSM900DL, and TV bands, respectively, and panels (d), (e), and (f) show the corresponding results for the CNN model.

Figure 7 shows that using DTW as the discriminant function gives the best anomaly detection capability, with the largest AUC value. The advantage of DTW is most pronounced in the TV broadcast band at an ISR of −10 dB, where its AUC value is about 8% higher than that of the second-best discriminant function, the Hausdorff distance. When the ISR is 10 dB, the differences between the AUC values of the discriminant functions in the three frequency bands are insignificant, because the higher anomalous signal power makes the actual spectrum data containing anomalies differ more from the predicted data, so the anomalies are easier to detect. Overall, DTW shows excellent anomaly detection performance across the frequency bands. This experiment shows that, among several commonly used discriminant functions, DTW is the most suitable for the spectrum anomaly detection problem studied in this paper. Therefore, DTW is used as the discriminant function for anomaly detection in all models in the subsequent experiments.

4.4. Anomaly Detection Performance Comparison

The anomaly detection performance under ISR ∈ [−10 dB, 10 dB] is investigated by varying the intensity of the anomalous data. Experiments are conducted in the GSM900 UL, GSM900 DL, GSM1800 DL, and TV bands. The comparison models include LSTM, CNN, U-Net, Proposed-C, and Proposed, and Figure 8 shows the variation of the AUC value with ISR for each model.
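Varying the ISR amounts to rescaling the interference so that 10·log10(P_I / P_S) hits a target value in dB. The sketch below assumes linear-amplitude (real or complex) samples and measures both powers over the samples passed in; how the paper defines the power window (for example, only over the anomaly's band and duration) is not stated here, so that choice is an assumption, as are the function names.

```python
import math


def mean_power(x):
    """Average power of real or complex linear-amplitude samples."""
    return sum(abs(v) ** 2 for v in x) / len(x)


def isr_db(signal, interference):
    """Interference-to-signal power ratio in dB."""
    return 10 * math.log10(mean_power(interference) / mean_power(signal))


def scale_to_isr(signal, interference, target_db):
    """Rescale `interference` so that its ISR against `signal` equals `target_db`."""
    p_s, p_i = mean_power(signal), mean_power(interference)
    if p_s == 0 or p_i == 0:
        raise ValueError("signal and interference must have nonzero power")
    # Power scales with the square of amplitude, hence the square root.
    gain = math.sqrt(p_s * 10 ** (target_db / 10) / p_i)
    return [gain * v for v in interference]
```

An anomalous received sample could then be formed as `[s + v for s, v in zip(signal, scale_to_isr(signal, interference, -10.0))]`, with the target swept over [−10 dB, 10 dB].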

Figure 8 shows that as the ISR increases with the intensity of the anomalous data, the anomaly detection accuracy of each algorithm increases. The duration, bandwidth, and frequency location of the anomalous interference all affect the anomaly detection performance. At high ISR, the anomaly persists for a long time with high energy, so the anomalous interference changes the spectrum data significantly and is easier to detect. As the ISR decreases, the anomaly duration becomes shorter and its energy decreases, and part of the anomalous interference may be buried in the strong signal spectrum. Short-duration and narrow-bandwidth anomalous interference make the difference between the actual and predicted spectra very small and hard to detect, which is the main reason for the degradation of anomaly detection performance.

The anomaly detection performance of a model is directly related to its prediction performance. The experiments above verified that the multi-timescale prediction model proposed in this paper achieves higher prediction accuracy than the other models, and correspondingly it shows superior anomaly detection performance. Taking the GSM900UL band as an example, at ISR = −10 dB the anomaly detection performance of the Proposed model is about 8% higher than that of the CNN model and about 5.7% higher than that of the LSTM model, which illustrates the impact of prediction performance on the detection results. However, at ISR = 10 dB the anomaly detection performance of all models is similar, and almost all of them achieve perfect anomaly detection. The gain in anomaly detection accuracy brought by better prediction accuracy is therefore more pronounced when the ISR is negative.

In addition, comparing the anomaly detection performance of Proposed and Proposed-C shows that including multi-timescale data improves the anomaly detection performance of the model. The improvement is most evident at low ISR: at ISR = −10 dB, it is about 5.8% in the GSM900UL band and 5% in the GSM1800DL band. Compared with the existing models, the algorithm in this paper effectively uses multi-timescale information to improve prediction performance, which makes it more effective at anomaly detection and less affected by ISR variation.

Through three experiments, this paper examines the prediction performance of the models, the choice of a suitable discriminant function, and the anomaly detection performance of the models. The experimental results verify the effectiveness and rationality of the proposed anomaly detection framework. In terms of network structure, the model fuses and selects among multiple features, which improves its performance, and it makes effective use of historical data at different time scales, which enhances anomaly detection performance.