DC-STGCN: Dual-Channel Based Graph Convolutional Networks for Network Traffic Forecasting

Abstract

1. Introduction

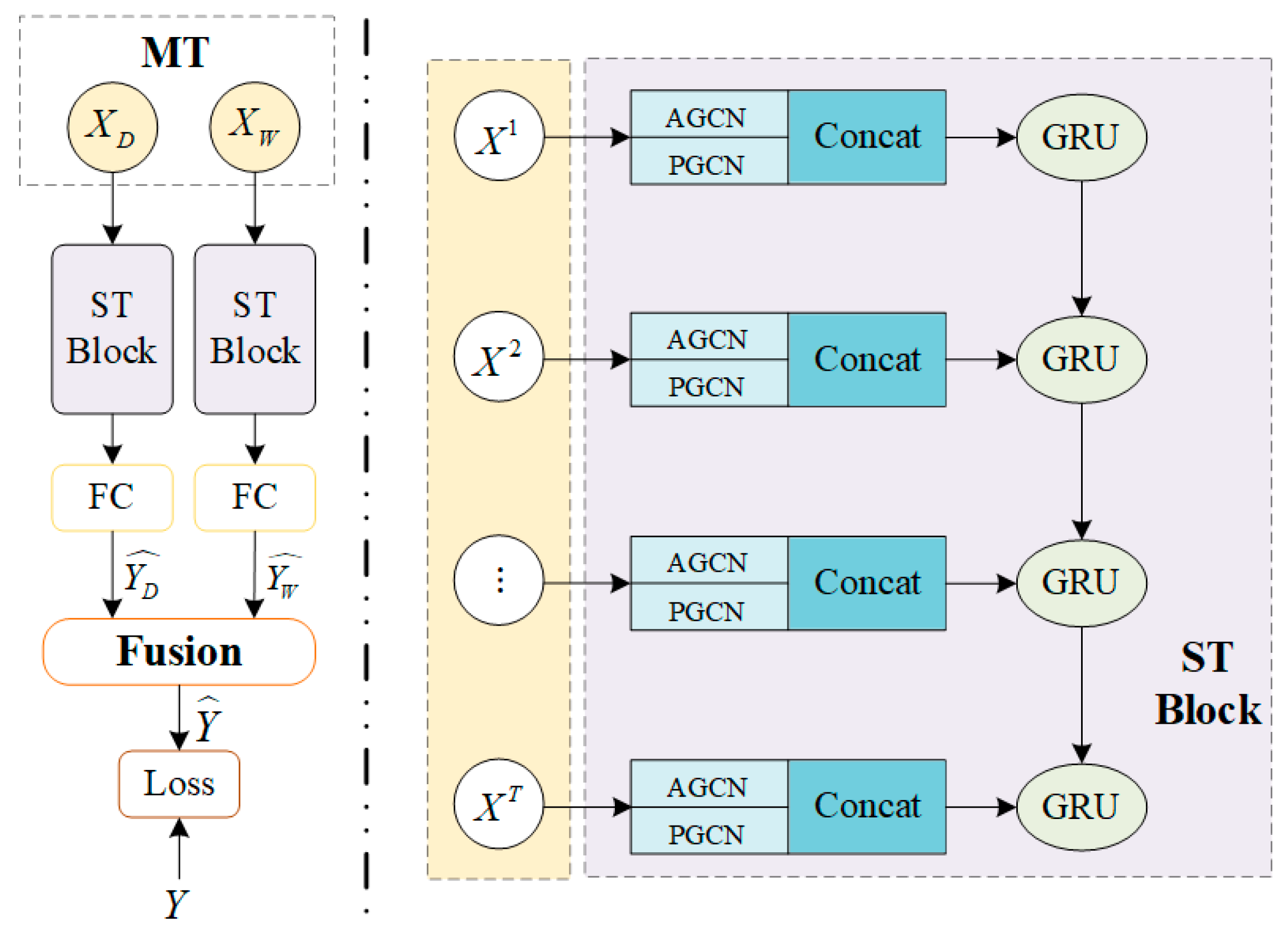

- A multitemporal input component is designed, consisting of two independent time components that model the daily and weekly correlations of the network traffic.

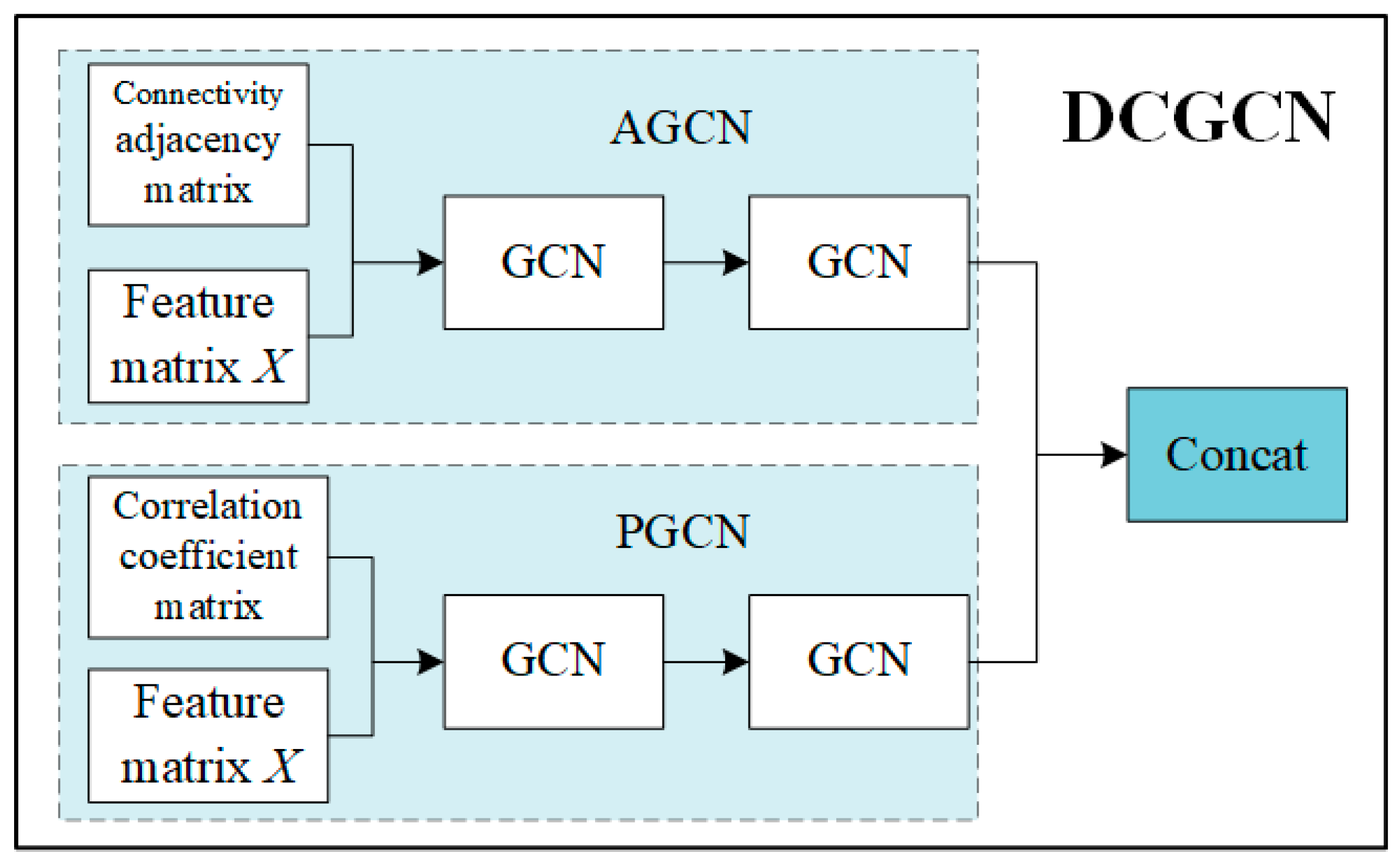

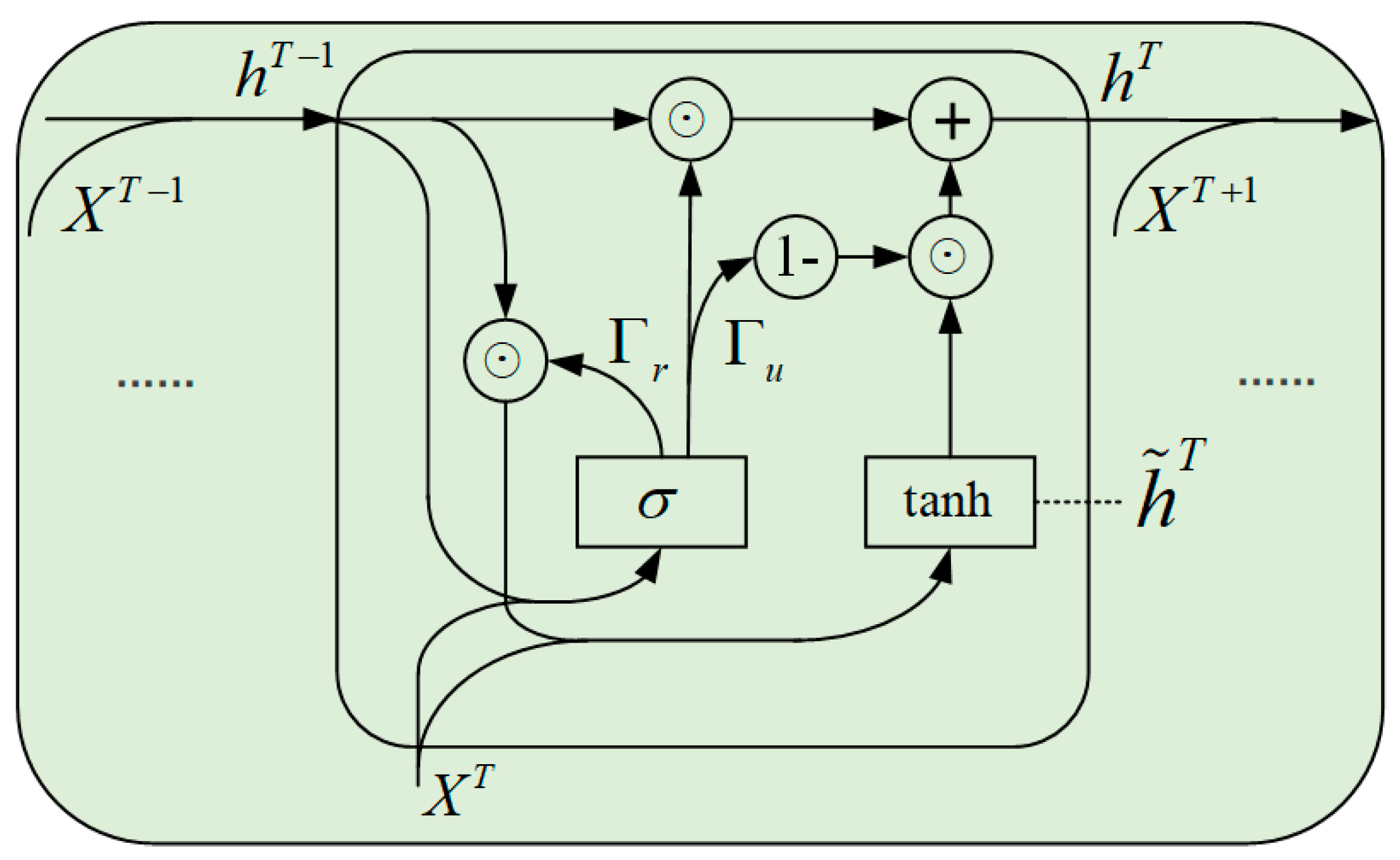

- Each component contains a spatial-temporal feature extraction module consisting of a DCGCN and a GRU. The DCGCN can effectively extract spatial features between the network nodes, and the GRU captures the temporal characteristics of the time series.

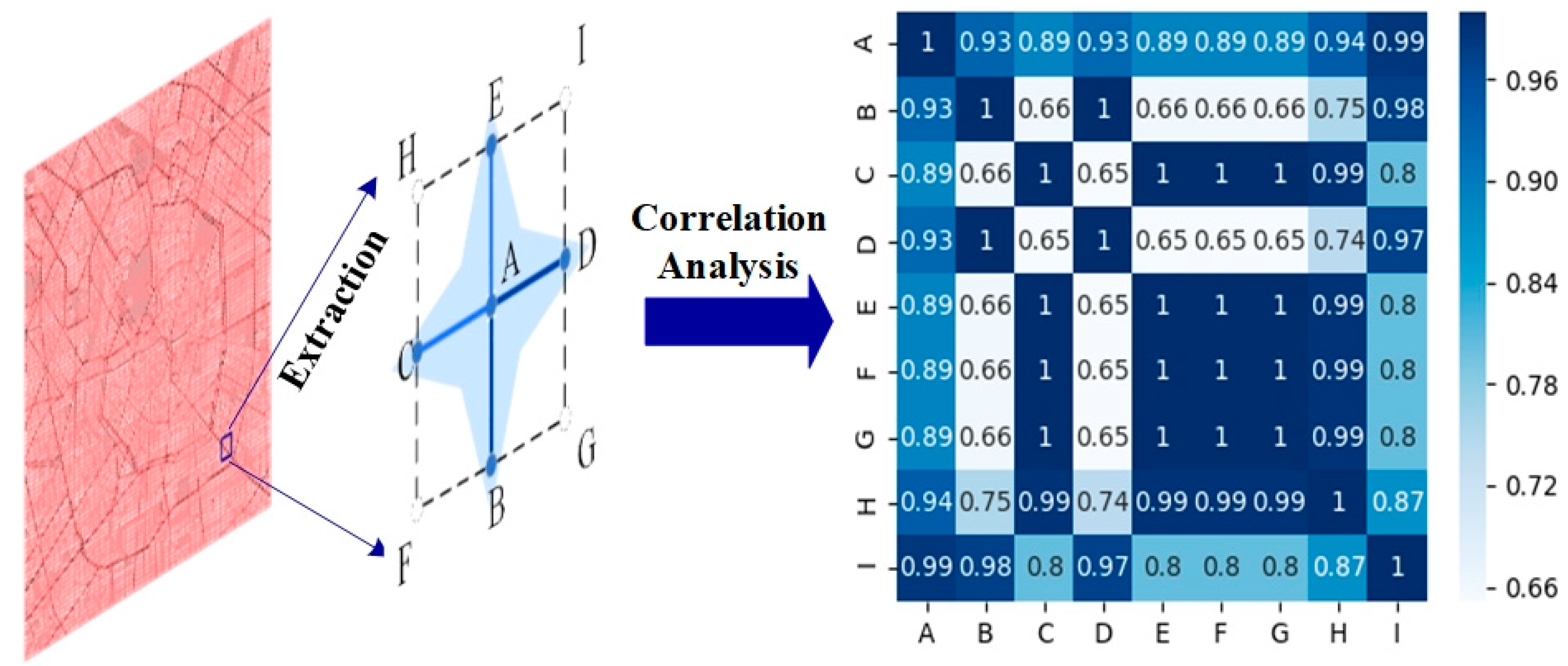

- The proposed DCGCN consists of an adjacency feature extraction module (AGCN) and a correlation feature extraction module (PGCN). These enable DCGCN to capture connectivity between the nodes as well as near-correlation.

- The DC-STGCN model is trained several times on the network traffic dataset of the city of Milan. The results show that, compared with several existing baselines, DC-STGCN achieves the lowest prediction error and the highest prediction accuracy and correlation coefficient, and that it is capable of long-term prediction.
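
The dual-channel idea in the contributions above can be sketched roughly as follows. This is a minimal, parameter-free illustration rather than the authors' implementation. It assumes that the adjacency channel uses the symmetric normalisation of Kipf and Welling, that the correlation channel links nodes whose traffic histories have a Pearson correlation above a hypothetical `threshold`, and that the two channel outputs are fused by summation. All function names, the threshold, and the toy data are illustrative.

```python
import math


def normalize_adjacency(adj):
    """Return D^-1/2 (A + I) D^-1/2 for a non-negative adjacency matrix."""
    n = len(adj)
    a_tilde = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    deg = [sum(row) for row in a_tilde]  # >= 1 because of the self-loops
    return [[a_tilde[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences (0.0 if one is constant)."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx > 0 and sy > 0 else 0.0


def correlation_graph(series, threshold=0.5):
    """ASSUMPTION: link two nodes when their histories correlate at or above threshold."""
    n = len(series)
    return [[1.0 if i != j and pearson(series[i], series[j]) >= threshold else 0.0
             for j in range(n)] for i in range(n)]


def propagate(norm_adj, features):
    """One propagation step without learned weights: norm_adj @ features."""
    return [[sum(norm_adj[i][k] * features[k][f] for k in range(len(features)))
             for f in range(len(features[0]))] for i in range(len(norm_adj))]


def dual_channel(adj, series, features, threshold=0.5):
    """ASSUMPTION: fuse the adjacency and correlation channels by summation."""
    a_out = propagate(normalize_adjacency(adj), features)
    p_out = propagate(
        normalize_adjacency(correlation_graph(series, threshold)), features)
    return [[a + p for a, p in zip(ra, rp)] for ra, rp in zip(a_out, p_out)]


# Toy example: three nodes on a path 0-1-2, four past traffic readings per node.
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
series = [[1, 2, 3, 4], [4, 3, 2, 1], [2, 4, 6, 8]]
features = [[1.0], [2.0], [3.0]]
fused = dual_channel(adj, series, features)
print(fused)
```

In this toy graph, nodes 0 and 2 are not physically connected but their traffic histories rise together, so only the correlation channel passes information between them. In a trained model each channel would also carry learned weight matrices and a nonlinearity.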

2. Related Work

3. Methods

3.1. Problem Definition

3.1.1. Traffic Network

3.1.2. Traffic Prediction

3.2. Spatial Feature Modeling

3.3. Temporal Feature Modeling

3.4. DC-STGCN Model

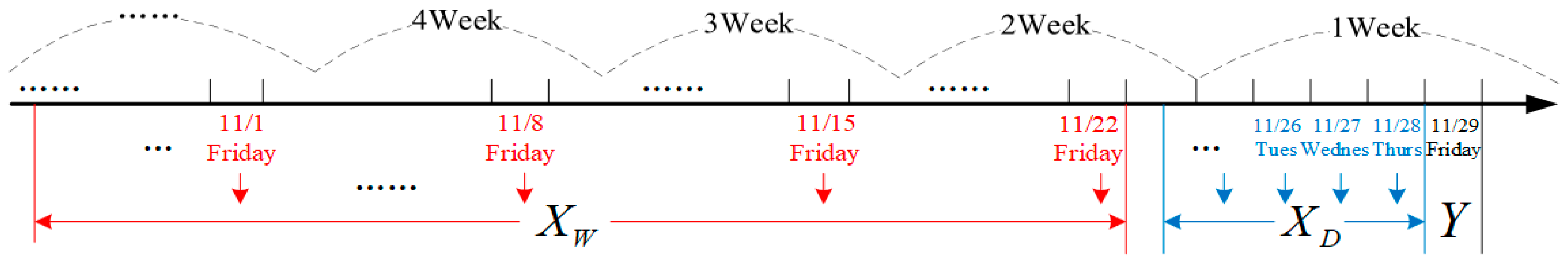

3.4.1. Multitime Component Input

3.4.2. Spatial-Temporal Feature Extraction Unit

3.4.3. Feature Fusion

4. Experimental Implementation and Analysis

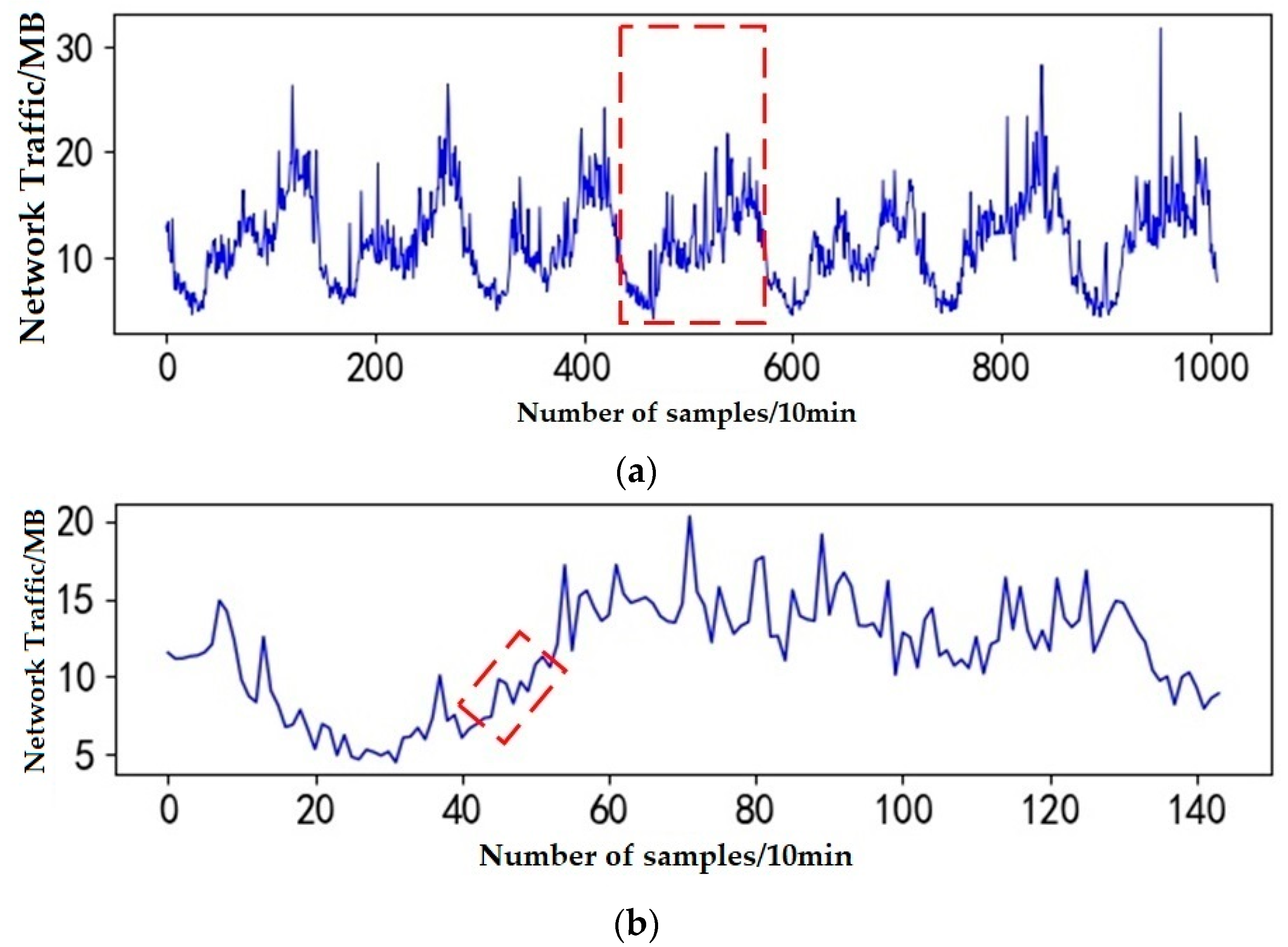

4.1. Data Description

4.2. Evaluation Indicators

- (1) Root Mean Square Error (RMSE), which reflects the prediction error of the model. The error value lies in the range $[0, +\infty)$; the closer the error is to zero, the better the model. The formula is as follows:

  $$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}$$

- (2) Accuracy, which reflects how closely the model's predictions match the actual values. Its range is $[0, 1]$; the closer the value is to 1, the better the model.

- (3) Coefficient of Determination ($R^2$ score): the value of $R^2$ indicates how well the model fits the data. The evaluation criterion is the same as for the accuracy:

  $$R^2 = 1 - \frac{\sum_{t=1}^{n}\left(y_t - \hat{y}_t\right)^2}{\sum_{t=1}^{n}\left(y_t - \bar{y}\right)^2}$$

  where $y_t$ and $\hat{y}_t$ denote the actual and predicted values at time $t$, respectively, $\bar{y}$ denotes the mean value of the data samples, and $n$ is the number of samples.
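
The three indicators can be computed along the following lines. This is a sketch under stated assumptions: RMSE and $R^2$ use their standard definitions, while the accuracy function assumes the form $1 - \lVert Y - \hat{Y} \rVert_F / \lVert Y \rVert_F$ used in the T-GCN work [4], which may differ from the exact definition intended here. The function names and sample values are illustrative.

```python
import math


def rmse(actual, predicted):
    """Root mean square error over n paired samples."""
    n = len(actual)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)) / n)


def accuracy(actual, predicted):
    """ASSUMPTION: 1 - ||Y - Y_hat|| / ||Y||, following the T-GCN convention."""
    err = math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)))
    norm = math.sqrt(sum(y ** 2 for y in actual))
    return 1.0 - err / norm


def r2_score(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean = sum(actual) / len(actual)
    ss_res = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    ss_tot = sum((y - mean) ** 2 for y in actual)
    return 1.0 - ss_res / ss_tot


# Made-up values, purely for illustration.
actual = [1.0, 2.0, 3.0, 4.0]
predicted = [2.0, 2.0, 3.0, 3.0]
print(rmse(actual, predicted), accuracy(actual, predicted), r2_score(actual, predicted))
```

A perfect prediction gives an RMSE of 0 and an accuracy and $R^2$ of 1, which matches the evaluation criteria stated above.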

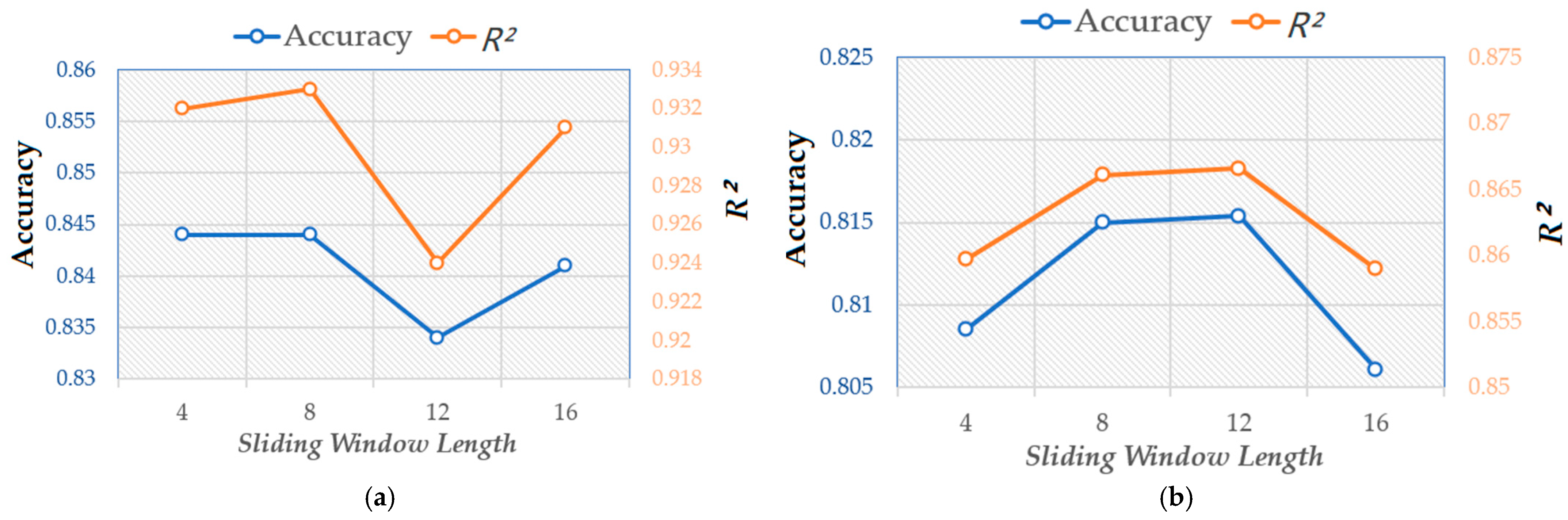

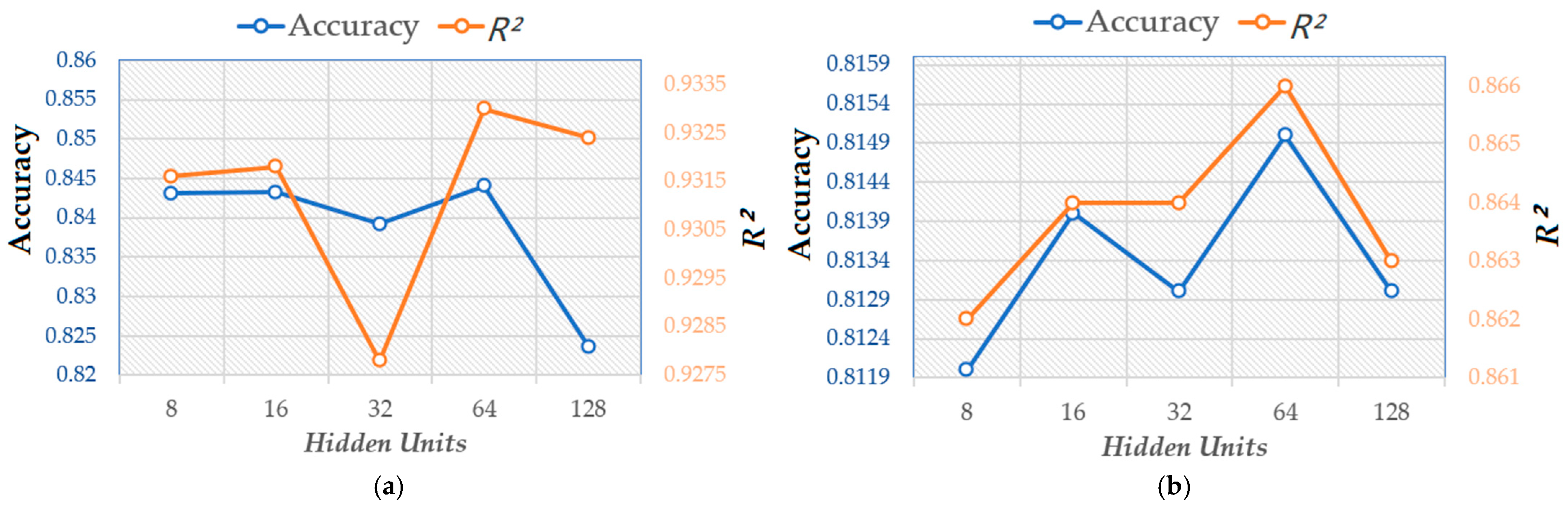

4.3. Parameter Design

4.4. Experimental Results

- (1)

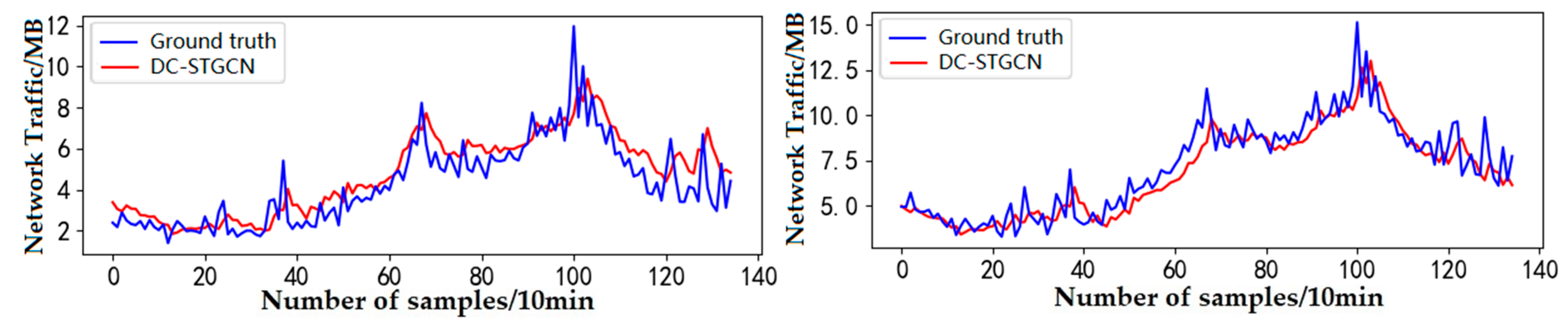

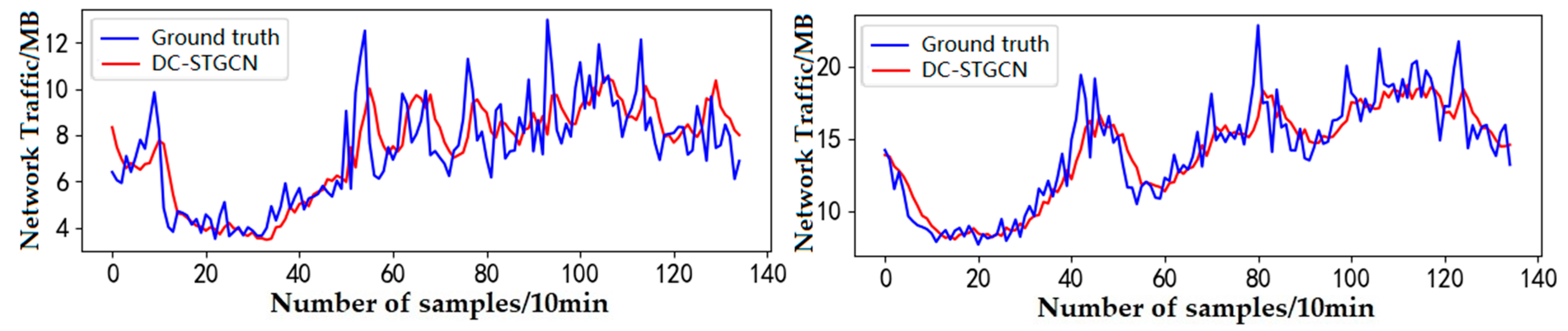

- The DC-STGCN model had the best forecast error, forecast precision, and correlation coefficient. For example, for a forecast step of 10 min on the working day dataset, the accuracy and values for DC-STGCN were, respectively, 3.2% and 3.5% higher than that of the HA model, and the RMSE was reduced by 0.558. Compared to the ARIMA model, the RMSE and accuracy of DC-STGCN were, respectively, 1.719 lower and 21.0% higher. While the accuracy and of DC-STGCN were improved by 2.9% and 3.2% compared to the SVR, the prediction was poorer as the SVR used a linear kernel function. It can be further seen that the neural network-based models, both DC-STGCN and GRU, outperformed the other models. This is because of the poor fit provided by the HA and ARIMA to such a long series of unsteady data, whereas the neural network models fitted the nonlinear data much better.

- (2)

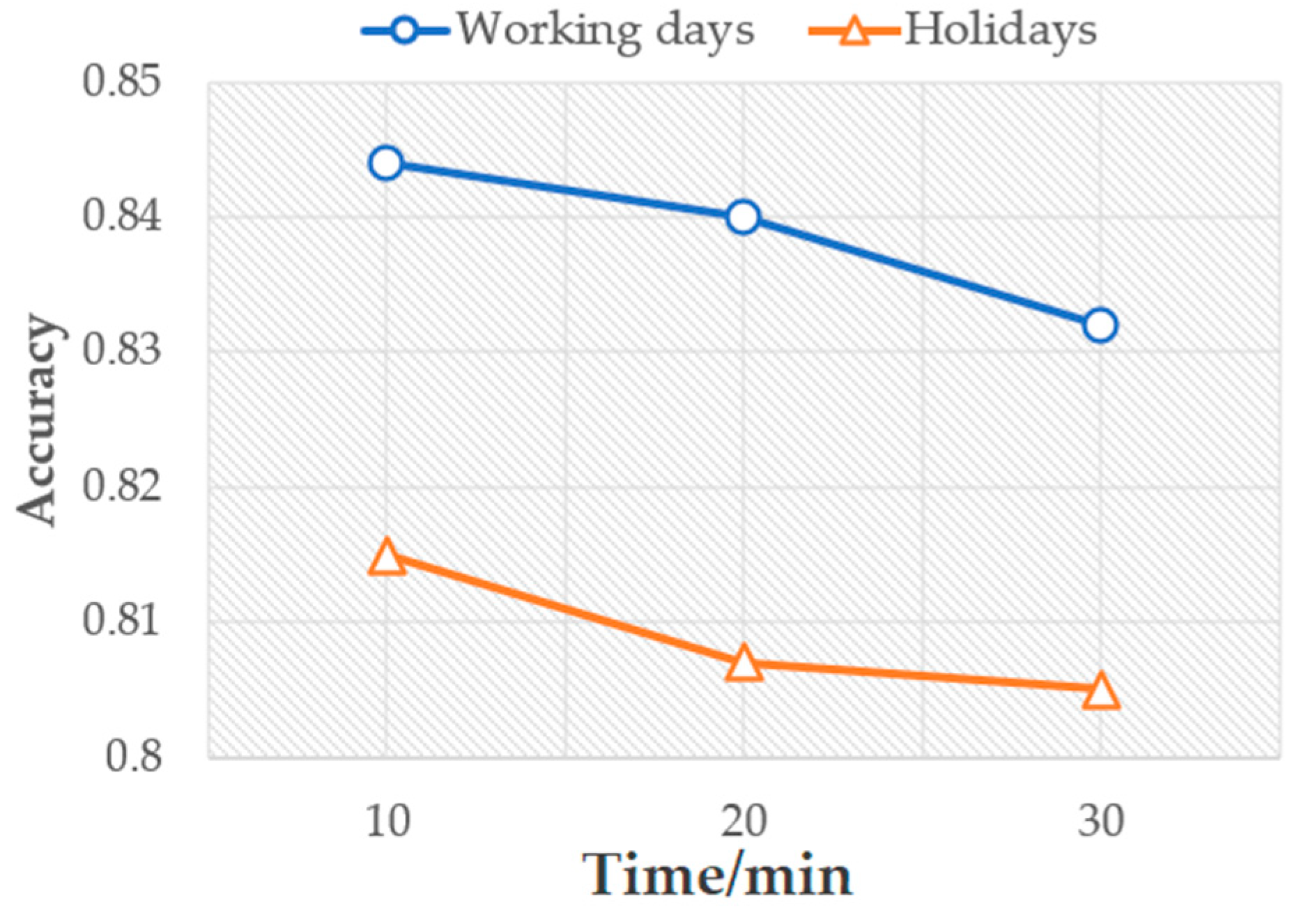

- The DC-STGCN model had long-term forecasting capability. By increasing the prediction time, the prediction performance of the DC-STGCN model was decreased. Nevertheless, the DC-STGCN model still had the best prediction performance comparing with the other models. Figure 10 shows the change in accuracy with increasing forecast time for the DC-STGCN model on both working day and holiday datasets. The accuracy decreased with the forecast time, but the downward trend was rather smooth. Therefore, the DC-STGCN model was less affected by forecast time and had stable long-term forecasting capability.

- (3) Network traffic is cyclical as well as self-similar [11], and the traffic at the current moment is affected by the traffic at previous moments. Therefore, in this paper, we used two multitime (MT) components at different scales to capture the effects of the daily and weekly periodicity of traffic. In contrast, the model proposed by He et al. [5] did not take this flow characteristic into account; therefore, the DC-STGCN could extract richer temporal features. Meanwhile, the results in Table 2 suggest that the DC-STGCN model predicted better than the T-GCN model proposed by Zhao et al. [4].

- (4) Comparing the prediction results on the two datasets, the DC-STGCN model predicted network traffic more accurately on the working day dataset than on the holiday dataset. This is because holiday traffic peaks are higher than weekday peaks and, unlike the more regular weekday traffic, holiday traffic is more random and therefore harder to predict.
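
The differences quoted in the observations above can be re-derived from the Table 2 entries. The short check below uses only values copied from that table; the helper names are illustrative. Accuracy and $R^2$ gains are expressed in percentage points, on the assumption that the quoted percentages are absolute differences.

```python
# (RMSE, accuracy, R^2) at the 10 min step on the working day dataset, from Table 2.
working_day_10min = {
    "HA": (3.790, 0.812, 0.898),
    "ARIMA": (4.951, 0.634, None),  # R^2 is marked "*" in the table
    "SVR": (3.728, 0.815, 0.901),
    "DC-STGCN": (3.232, 0.844, 0.933),
}

# DC-STGCN accuracy at the 10, 20 and 30 min steps, from Table 2.
dc_accuracy = {
    "Working Day": (0.844, 0.840, 0.832),
    "Holiday": (0.815, 0.807, 0.805),
}


def gains(baseline, model="DC-STGCN", table=working_day_10min):
    """Return (RMSE reduction, accuracy gain in points, R^2 gain in points)."""
    b_rmse, b_acc, b_r2 = table[baseline]
    m_rmse, m_acc, m_r2 = table[model]
    r2_gain = None if b_r2 is None else round((m_r2 - b_r2) * 100, 1)
    return round(b_rmse - m_rmse, 3), round((m_acc - b_acc) * 100, 1), r2_gain


def accuracy_drop(dataset):
    """Accuracy lost between the 10 min and 30 min forecast steps."""
    values = dc_accuracy[dataset]
    return round(values[0] - values[-1], 3)


for name in ("HA", "ARIMA", "SVR"):
    print(name, gains(name))
for dataset in dc_accuracy:
    print(dataset, accuracy_drop(dataset))
```

The accuracy drop between the 10 min and 30 min steps is small on both datasets, which is consistent with the smooth downward trend described in observation (2).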

4.5. Ablation Studies

4.6. Model Interpretation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Feng, J.; Chen, X.; Gao, R.; Zeng, M.; Li, Y. DeepTP: An End-to-End Neural Network for Mobile Cellular Traffic Prediction. IEEE Netw. 2018, 32, 108–115.

2. Andreoletti, D.; Troia, S.; Musumeci, F.; Giordano, S.; Maier, G.; Tornatore, M. Network Traffic Prediction based on Diffusion Convolutional Recurrent Neural Networks. In Proceedings of the IEEE INFOCOM 2019 - IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), Paris, France, 29 April–2 May 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 246–251.

3. Yang, L.; Gu, X.; Shi, H. A Noval Satellite Network Traffic Prediction Method Based on GCN-GRU; WCSP: Nanjing, China, 2020; pp. 718–723.

4. Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858.

5. He, K.; Huang, Y.; Chen, X.; Zhou, Z.; Yu, S. Graph Attention Spatial-Temporal Network for Deep Learning Based Mobile Traffic Prediction. In Proceedings of the 2019 IEEE Global Communications Conference (GLOBECOM), Waikoloa, HI, USA, 9–13 December 2019; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2019; pp. 1–6.

6. Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention Based Spatial-Temporal Graph Convolutional Networks for Traffic Flow Forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence; Association for the Advancement of Artificial Intelligence (AAAI): Menlo Park, CA, USA, 2019; Volume 33, pp. 922–929.

7. Ahmed, M.S.; Cook, A.R. Analysis of Freeway Traffic Time-Series Data by Using Box-Jenkins Techniques. Transportation Research Record: Thousand Oaks, CA, USA, 1979.

8. Laner, M.; Svoboda, P.; Rupp, M. Parsimonious Fitting of Long-Range Dependent Network Traffic Using ARMA Models. IEEE Commun. Lett. 2013, 17, 2368–2371.

9. Madan, R.; Mangipudi, P.S. Predicting Computer Network Traffic: A Time Series Forecasting Approach Using DWT, ARIMA and RNN. In Proceedings of the 2018 Eleventh International Conference on Contemporary Computing (IC3), Noida, India, 2–4 August 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 1–5.

10. Qian, Y.; Xia, J.; Fu, K.; Zhang, R. Network traffic forecasting by support vector machines based on empirical mode decomposition denoising. In Proceedings of the 2012 2nd International Conference on Consumer Electronics, Communications and Networks (CECNet), Yichang, China, 21–23 April 2012; pp. 3327–3330.

11. Bie, Y.; Wang, L.; Tian, Y.; Hu, Z. A Combined Forecasting Model for Satellite Network Self-Similar Traffic. IEEE Access 2019, 7, 152004–152013.

12. Ramakrishnan, N.; Soni, T. Network Traffic Prediction Using Recurrent Neural Networks. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 187–193.

13. Tudose, A.M.; Sidea, D.O.; Picioroaga, I.I.; Boicea, V.A.; Bulac, C. A CNN Based Model for Short-Term Load Forecasting: A Real Case Study on the Romanian Power System. In Proceedings of the 2020 55th International Universities Power Engineering Conference (UPEC), Torino, Italy, 1–4 September 2020; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 1–6.

14. Zhang, Q.; Jin, Q.; Chang, J.; Xiang, S.; Pan, C. Kernel-Weighted Graph Convolutional Network: A Deep Learning Approach for Traffic Forecasting. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 1018–1023.

15. Nie, L.; Jiang, D.; Guo, L.; Yu, S.; Song, H. Traffic Matrix Prediction and Estimation Based on Deep Learning for Data Center Networks. J. Netw. Comput. Appl. 2016, 76, 16–22.

16. Sebastian, K.; Gao, H.; Xing, X. Utilizing an Ensemble STL Decomposition and GRU Model for Base Station Traffic Forecasting. In Proceedings of the 2020 59th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Chiang Mai, Thailand, 23–26 September 2020; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 314–319.

17. Li, T.; Hua, M.; Wu, X. A Hybrid CNN-LSTM Model for Forecasting Particulate Matter (PM2.5). IEEE Access 2020, 8, 26933–26940.

18. Bruna, J.; Zaremba, W.; Szlam, A.; Lecun, Y. Spectral Networks and locally connected networks on graphs. arXiv 2013, arXiv:1312.6203.

19. Gao, J.; Zhang, T.; Xu, C. I Know the Relationships: Zero-Shot Action Recognition via Two-Stream Graph Convolutional Networks and Knowledge Graphs. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Association for the Advancement of Artificial Intelligence (AAAI): Menlo Park, CA, USA, 2019; Volume 33, pp. 8303–8311.

20. Zhu, J.W.; Song, Y.J.; Zhao, L.; Li, H.F. A3T-GCN: Attention Temporal Graph Convolutional Network for Traffic Forecasting. arXiv 2020, arXiv:2006.11583.

21. Lu, B.; Gan, X.; Jin, H.; Fu, L.; Zhang, H. Spatiotemporal Adaptive Gated Graph Convolution Network for Urban Traffic Flow Forecasting. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management, Galway, Ireland, 19–23 October 2020; ACM: New York, NY, USA, 2020; pp. 1025–1034.

22. Ye, J.; Zhao, J.; Ye, K.; Xu, C. Multi-STGCnet: A Graph Convolution Based Spatial-Temporal Framework for Subway Passenger Flow Forecasting. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2020; pp. 1–8.

23. Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering. In Proceedings of the NIPS’16 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 3844–3852.

24. Kipf, T.N.; Welling, M. Semi-Supervised Classification with Graph Convolutional Networks. arXiv 2017, arXiv:1609.02907.

25. Zhang, J.; Chi, Y.; Xiao, L. Solar Power Generation Forecast Based on LSTM. In Proceedings of the 2018 IEEE 9th International Conference on Software Engineering and Service Science (ICSESS), Beijing, China, 23–25 November 2018; Institute of Electrical and Electronics Engineers (IEEE): Piscataway, NJ, USA, 2018; pp. 869–872.

26. Barlacchi, G.; De Nadai, M.; Larcher, R.; Casella, A.; Chitic, C.; Torrisi, G.; Antonelli, F.; Vespignani, A.; Pentland, A.P.; Lepri, B. A multi-source dataset of urban life in the city of Milan and the Province of Trentino. Sci. Data 2015, 2, 150055.

| Sliding Window Length | RMSE (10 min) | Accuracy (10 min) | $R^2$ (10 min) |
|---|---|---|---|
| 4 | 5.5251 | 0.7113 | 0.8054 |
| 6 | 5.4431 | 0.7166 | 0.8133 |
| 8 | 5.4145 | 0.7174 | 0.8141 |
| 10 | 5.4495 | 0.7162 | 0.8135 |
| 12 | 5.4609 | 0.7158 | 0.8135 |
| 14 | 5.4908 | 0.7140 | 0.8118 |
| 16 | 5.4959 | 0.7137 | 0.8113 |
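
One way to read the sliding-window table above is sketched below. The sketch assumes that the sliding window length is the number of consecutive past observations fed to the model for each prediction, which the table itself does not state. The `make_windows` helper and its `horizon` parameter are hypothetical; the RMSE values are copied from the table.

```python
def make_windows(series, window, horizon=1):
    """Split a series into (input window, target) pairs.

    ASSUMPTION: `window` past observations predict the value `horizon`
    steps after the end of the window.
    """
    samples = []
    for start in range(len(series) - window - horizon + 1):
        inputs = series[start:start + window]
        target = series[start + window + horizon - 1]
        samples.append((inputs, target))
    return samples


# RMSE at the 10 min step for each sliding window length, from the table.
rmse_by_window = {
    4: 5.5251, 6: 5.4431, 8: 5.4145, 10: 5.4495,
    12: 5.4609, 14: 5.4908, 16: 5.4959,
}

# The window length with the lowest reported RMSE.
best_window = min(rmse_by_window, key=rmse_by_window.get)

toy_series = list(range(10))
print(best_window, make_windows(toy_series, best_window))
```

In the reported values, the error falls as the window grows from 4 to 8 and then rises again, so a window of 8 gives the lowest RMSE among the lengths tested.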

| Dataset | Model | RMSE (10 min) | Accuracy (10 min) | $R^2$ (10 min) | RMSE (20 min) | Accuracy (20 min) | $R^2$ (20 min) | RMSE (30 min) | Accuracy (30 min) | $R^2$ (30 min) |
|---|---|---|---|---|---|---|---|---|---|---|
| Working Day | HA | 3.790 | 0.812 | 0.898 | 3.790 | 0.812 | 0.898 | 3.790 | 0.812 | 0.898 |
| Working Day | ARIMA | 4.951 | 0.634 | * | 4.997 | 0.631 | * | 5.022 | 0.629 | * |
| Working Day | SVR | 3.728 | 0.815 | 0.901 | 3.888 | 0.813 | 0.899 | 4.085 | 0.804 | 0.889 |
| Working Day | GRU | 3.703 | 0.822 | 0.909 | 3.694 | 0.822 | 0.908 | 3.850 | 0.816 | 0.901 |
| Working Day | T-GCN [2] | 3.441 | 0.824 | 0.911 | 3.593 | 0.824 | 0.910 | 3.734 | 0.819 | 0.902 |
| Working Day | [3] | - | - | - | - | - | - | - | - | - |
| Working Day | DC-STGCN | 3.232 | 0.844 | 0.933 | 3.394 | 0.840 | 0.920 | 3.621 | 0.832 | 0.909 |
| Holiday | HA | 4.190 | 0.790 | 0.835 | 4.190 | 0.790 | 0.835 | 4.190 | 0.790 | 0.835 |
| Holiday | ARIMA | 5.094 | 0.635 | * | 5.094 | 0.635 | * | 5.116 | 0.634 | * |
| Holiday | SVR | 4.243 | 0.788 | 0.835 | 4.326 | 0.780 | 0.831 | 4.333 | 0.780 | 0.821 |
| Holiday | GRU | 4.115 | 0.799 | 0.841 | 4.129 | 0.799 | 0.840 | 5.406 | 0.751 | 0.811 |
| Holiday | T-GCN [2] | 3.827 | 0.805 | 0.855 | 4.121 | 0.794 | 0.835 | 4.130 | 0.789 | 0.831 |
| Holiday | DC-STGCN | 3.791 | 0.815 | 0.866 | 3.881 | 0.807 | 0.854 | 3.910 | 0.805 | 0.852 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Pan, C.; Zhu, J.; Kong, Z.; Shi, H.; Yang, W. DC-STGCN: Dual-Channel Based Graph Convolutional Networks for Network Traffic Forecasting. Electronics 2021, 10, 1014. https://doi.org/10.3390/electronics10091014
