Abstract

Pruning and quantization are two commonly used approaches to accelerating the LSTM (Long Short-Term Memory) model. However, traditional linear quantization usually suffers from vanishing gradients, and existing pruning methods produce either undesired irregular sparsity or a large indexing overhead. To alleviate the vanishing-gradient problem, this work proposes a normalized linear quantization approach, which first normalizes operands regionally and then quantizes them in a local min-max range. To overcome irregular sparsity and large indexing overhead, this work adopts permuted block diagonal mask matrices to generate the sparse model. Because the sparse model is highly regular, the position of each non-zero weight can be obtained by a simple calculation, which avoids the large indexing overhead. Based on the sparse LSTM model generated from the permuted block diagonal mask matrices, this paper also proposes a high energy-efficiency accelerator, PermLSTM, that comprehensively exploits the sparsity of weights, activations, and products in the matrix–vector multiplications, resulting in a 55.1% reduction in power consumption. The accelerator has been realized on Arria-10 FPGAs running at 150 MHz and achieved ∼ energy efficiency compared with the other FPGA-based LSTM accelerators previously reported.

1. Introduction

LSTM and GRU (Gated Recurrent Unit) are the two most popular variants of the RNN and have been widely applied to sequence processing tasks [1,2,3,4]. Despite their superior capability in handling the dynamic temporal behavior of data sequences, both architectures are difficult to implement in hardware because of their very large number of parameters and the resulting high computational complexity. In this work, we explore how to minimize the inference cost of the sparse LSTM model pruned by permuted block diagonal mask matrices, the so-called PermLSTM, aiming for high processing performance as well as high energy efficiency. The design methods derived from this study, which focuses primarily on LSTM, are also applicable to the GRU, since both networks share a common architecture except that a GRU has two gates (reset and update gates), whereas an LSTM has three gates (input, output, and forget gates).

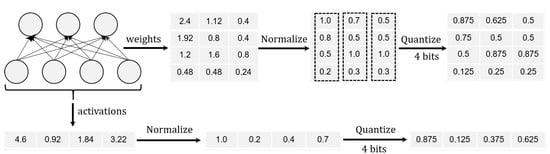

As suggested in Table 1 and Table 2, accessing the off-chip memory dissipates far more power than accessing the on-chip memory, and floating-point operations are extremely power hungry. Therefore, by compressing the network model in an appropriate way, the power dissipation can be reduced without necessarily degrading the whole system. This paper presents a hardware-friendly pruning approach that uses the permuted block diagonal mask matrix to generate a regular sparse structure. Unlike previous approaches, our method eliminates the indexing overhead of tracking non-zero values, which lessens the computational load of the hardware implementation. Quantizing weights and activations to fixed-point numbers also compresses the model. However, linear quantization without normalization usually suffers from non-convergence problems caused by the saturation operations involved. To mitigate this problem, this work proposes a linear quantization with local normalization that maps the operands evenly over the range [−1, 1]. Note that, to reduce the impact of normalization on model accuracy as much as possible, this work does not normalize the operands globally but adopts a more refined approach: the operands are first grouped according to the output result, and the weights and activations are then normalized within each group. Thus, all the complex and energy-consuming floating-point operations are avoided with a negligible accuracy loss. The entire compression flow is shown in Figure 1.

Table 1.

Breakdown of the energy consumption for multiply-accumulate operations [5]. Adapted with permission from ref. [5]. Copyright 2014 IEEE.

Table 2.

Breakdown of the energy consumption for memory access [5]. Adapted with permission from ref. [5]. Copyright 2014 IEEE.

Figure 1.

The entire compression flow.

Implementing the activation functions in LSTM is also cumbersome. In previous works, the activation function is often calculated using a lookup table, a piecewise linear approximation, or curve fitting, which can deteriorate network performance. As described in detail below, we found that properly matching the piecewise linear approximation to the precision of the quantized activation helps alleviate these shortcomings, which stem from an unreconciled mismatch between the approximation error and the operand precision. These considerations have been incorporated into our design to boost the energy efficiency.

In addition to the optimization effort at the algorithmic level, we also investigate the design of an efficient processing unit that fully exploits the sparsity of the model. Most previous works have focused only on exploiting the sparsity of the weights and the activations. We further extend this sparsity exploitation to the products, which can also be sparse. As long as either a weight or an activation is zero, the resulting product is zero. Therefore, the products have a degree of sparsity equal to or even greater than that of the weights. By tapping into this property, we can effectively reduce the number of additions that follow. Provided that no new auxiliary circuitry is added, the power dissipation of the whole system then decreases. Since all the weights are pruned using the permuted block diagonal mask matrices, the position of a non-zero weight can be easily located, which makes it possible to execute only the valid calculations on the products.

The main contributions of the work are highlighted below:

- A hardware-friendly compression algorithm that combines both linear quantization with local normalization and structured pruning has been proposed. In comparison to the existing pruning approaches, it excels in dealing with processing irregularity and also in eliminating excessive indexing overhead. Similarly, in comparison to the existing quantization approaches, it not only solved the problem of vanishing gradient, but also adopted a more refined normalization approach to minimize the impact of normalization on model accuracy.

- The discrepancy arising from approximating the sigmoid function with the piecewise linear function has been defined and evaluated. Furthermore, an analysis is made to guide the selection of the bit-width of operands, in a way to facilitate the hardware implementation with minimum resources, as well as to retain the model accuracy.

- An energy-efficient accelerator architecture, PermLSTM, has been designed and implemented, to validate the proposed architecture which comprehensively exploits all sorts of the sparsity existing in the network.

The remainder of this paper is organized as follows: Section 2 gives a brief introduction on LSTM, followed by a quick overview on the existing pruning and quantization approaches. In Section 3, the proposed compression algorithm involving new strategies regarding structured pruning and linear quantization with local normalization is presented. Section 4 discusses our simplification effort in executing the activation functions. Section 5 further discusses design implementation for the proposed accelerator architecture. The experimental results are presented and compared in Section 6. Finally, conclusions are drawn in Section 7.

2. Background

2.1. Long Short-Term Memory

LSTM was first published by S. Hochreiter and J. Schmidhuber in 1997 [6] and later elaborated by F. Gers in 2002 [7]. For an LSTM, the input is characterized as a sequence of data that is: . Regarding a constituent unit in LSTM, the cell states and the internal gate can be calculated by the following formulas:

The superscripts $i$, $f$, and $o$ and the subscript $t$ denote the input gate, the forget gate, the output gate, and the time step, respectively. Given the input $x_t$, the cell state $c_t$, and the hidden state $h_t$, each gate has one weight matrix that multiplies the input data, one weight matrix that multiplies the hidden state, and a bias vector. The function $\sigma$ is the logistic sigmoid. The symbol ⨀ denotes element-wise multiplication.
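For reference, a standard LSTM cell consistent with the description above can be written as follows. The symbol names $W_x^{k}$, $W_h^{k}$, and $b^{k}$ (with $k \in \{i, f, o, c\}$) and the candidate state $g_t$ are assumptions chosen to match the surrounding prose, in which Equations (1)–(4) hold the matrix–vector products; the notation of the original typeset equations may differ.

```latex
\begin{align}
i_t &= \sigma\left(W_x^{i} x_t + W_h^{i} h_{t-1} + b^{i}\right) \\
f_t &= \sigma\left(W_x^{f} x_t + W_h^{f} h_{t-1} + b^{f}\right) \\
o_t &= \sigma\left(W_x^{o} x_t + W_h^{o} h_{t-1} + b^{o}\right) \\
g_t &= \tanh\left(W_x^{c} x_t + W_h^{c} h_{t-1} + b^{c}\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot g_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{align}
```

In this form, the first four equations carry all the matrix–vector multiplications, while the last two involve only element-wise operations.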

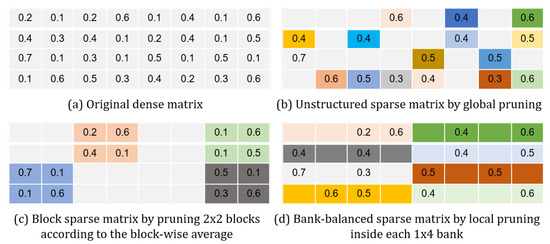

2.2. Model Compression

Because some redundancy always exists in neural networks [8,9], pruning has proven effective for model compression without necessarily degrading performance. Unfortunately, the existing weight pruning approaches all have drawbacks. Song. H. and Frederick. T. et al. perform weight pruning by following the “smaller-norm-less-important” criterion, which suggests that weights with smaller norms can be pruned safely because of their insignificance [10,11,12,13]. Xin. D. and Pavlo. M. et al. heuristically search for trivial weights to eliminate [14,15]. As illustrated in Figure 2b, these two approaches tend to generate undesired irregular sparsity, which makes the hardware design less efficient. Sharan. N. proposed a coarse-grained pruning method that prunes the weights block by block, which delivers regular sparsity but at the expense of model accuracy (see Figure 2c) [16]. Referring to Figure 2d, Shi. C. divides each weight matrix row into several equally sized banks (marked with different colors) and prunes each bank separately at a fine-grained level [17]. This way, the same degree of sparsity is obtained across all the weight matrices. Seyed. G. proposed a similar solution, which prunes the model to obtain a row-balanced sparse matrix [18]. However, in these solutions the address of each non-zero weight is unpredictable and requires separate memory for storage.

Figure 2.

The existing pruning methods illustrated by examples.

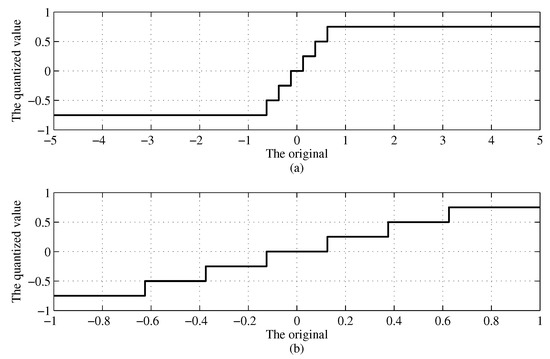

Quantization is another effective means of network compression. Itay. H. quantizes all the weights to binary values, namely −1 and 1 [19]. To improve the representation precision, Chenzhuo. Z. quantizes all the weights to ternary values, which is still insufficient for some complicated tasks [20]. Shuchang. Z. devised approaches for quantizing the weights and the activations to arbitrary bit-widths [21]. In their approaches, an operand is quantized only within a fixed range and is saturated outside it. As shown in Figure 3a, for operands far greater than 1, even if the straight-through estimator [21] is applied to the gradient, this truncation can still nullify some of the gradients according to our experimental results presented in Table 3, which makes the network hard to converge. As a consequence, this type of quantization does not perform well on certain tasks. To rectify this, Jungwook. C. proposed limiting activations to the range $[0, \alpha]$, where $\alpha$ is a trainable parameter [22]. However, this approach applies only to the quantization of the activations, not the weights.

Figure 3.

(a) The linear quantization without normalization. (b) The linear quantization with local normalization.

Table 3.

The perplexity of a model using two compression approaches with different bit-width.

2.3. Hardware Acceleration

There are also many optimizations at the hardware level. Elham. A. uses an approximate multiplier to replace the DSP block, thereby reducing the logic complexity and power consumption of the entire system [23,24]. Jo. J. measures how similar the inputs of two adjacent LSTM cells are, then disables the highly similar LSTM operations and directly reuses the prior results to reduce the computational cost [25]. Patrick. J. uses a shift-based arithmetic unit to perform multiplications at an arbitrary bit-width, aiming to make full use of the properties offered by low bit-width operands [26]. Bank-Tavakoli. E. optimized the pipeline of the datapath so that operations can be executed in parallel [27]. However, these works stop short of exploiting the model sparsity. Jorge. A. utilized only the sparsity of the weights during multiply-accumulate operations [28]. Shen. H. took advantage of the sparsity of both the weights and the activations [29,30]. In fact, a significant degree of sparsity also exists in the products, but, so far, no work has utilized it.

3. Hardware-Friendly Compression Algorithm

Pruning the network with permuted block diagonal mask matrices not only produces regular sparsity but also eliminates the hardware overhead otherwise required to store the indices of non-zero weights [31]. Quantization is another effective way of compressing the network. As mentioned earlier, the traditional linear quantization (TLQ) approach usually suffers from a non-convergence problem arising from saturation. The normalized linear quantization (NLQ) approach can, to a large extent, remove this limitation [32]. Accordingly, the entire training process goes through two phases. First, after being pruned, the model is trained until it converges. Second, the model is quantized and then retrained until it converges once again.

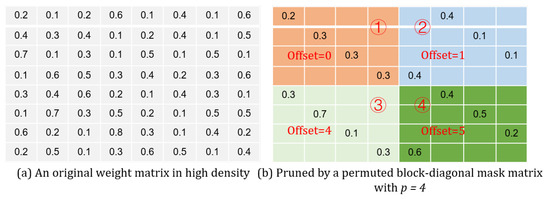

3.1. Pruning the Network Using the Permuted Block Diagonal Mask Matrices

The permuted block diagonal mask matrix is defined as follows: for an m-by-n weight matrix , we have a mask matrix , which contains numbers of p-by-p sub-matrices. The elements of the mask matrix are defined as follows:

where , , and p is the rank of the sub-matrices. The numbers of sub-matrices represent all the permuted block diagonal mask matrices used. Then, the pruned weight matrix can be obtained below:

Here, we give an example to illustrate how the network is pruned by the permuted mask matrix. For an 8-by-8 weight matrix as shown in Figure 4a, the rank of a mask sub-matrix is set to 4. According to the definition of the given earlier, when the row index i and column index j, respectively range from 0 to 3, the is 0. When and , the becomes 1. When and , the becomes 4. When and , the becomes 5. Substituting those four values in (7), we will have the first and the third mask sub-matrices being of the identity matrix, and the second and the fourth mask sub-matrices can be acquired by cyclically right-shifting the first and the third mask sub-matrices, respectively. As a result, the pruned weight matrix is given in Figure 4b.

Figure 4.

(a) An original weight matrix in high density. (b) Pruned by a permuted block-diagonal mask matrix with .
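The construction of the mask can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code: the function name `permuted_block_diagonal_mask` is hypothetical, and the per-block shift rule `k = (i // p) * p + (j // p)` is an assumption inferred from the shift values 0, 1, 4, and 5 quoted in the 8-by-8 example (the opposite ordering of block rows and block columns would fit those values equally well).

```python
import numpy as np

def permuted_block_diagonal_mask(m, n, p):
    """Build an m-by-n 0/1 mask made of p-by-p cyclically shifted identity blocks."""
    if m % p or n % p:
        raise ValueError("m and n must be multiples of p")
    i = np.arange(m)[:, None]          # row indices, shape (m, 1)
    j = np.arange(n)[None, :]          # column indices, shape (1, n)
    # ASSUMPTION: per-block shift inferred from the worked example (0, 1, 4, 5).
    k = (i // p) * p + (j // p)
    # An entry survives when its shifted row position matches its column position.
    return ((i + k) % p == j % p).astype(np.int8)

mask = permuted_block_diagonal_mask(8, 8, 4)
weights = np.arange(1, 65, dtype=np.float32).reshape(8, 8)
pruned = weights * mask                # element-wise product zeroes the masked weights
print(mask)
```

Under this assumption, the top-left block is the identity and the block to its right is the identity cyclically shifted right by one position, so every row and every column of each block keeps exactly one weight.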

3.2. Linear Quantization with Local Normalization

As shown in Figure 3a, any operand whose value is less than 1 is quantized, and any other operand is saturated. The associated quantization error grows as the operand becomes much greater than 1, which causes the gradient to vanish during training. To circumvent this problem, we map all the operands into the range [−1, 1] prior to the quantization [32], as shown in Figure 3b. Instead of simply normalizing all the operands globally [33], the weights and the activations are normalized separately in a more refined way.

For weights in LSTM, , where is the number of input channels, and is the number of output channels. Considering , it corresponds to the jth element in the activation vector and is evaluated at the (t + 1)th time step, having its associated calculations only related to those weights in the jth column of the weight matrix. Thus, the weights can be normalized per output channel. i.e.,

For activations in LSTM, we have . As, at the (t + 1)th time step, each element in the activation vector is iterated from all the elements in the last activation vector (i.e., at time step t), the normalized activations, , can then be calculated below:

where i is ranging across all the hidden nodes with respect to a cell. The normalized weights and the activations are then subject to a linear quantization, as defined below:

An example showing a complete normalize-then-quantize process regarding the weights and the activations is depicted in Figure 5.

Figure 5.

A normalized linear quantization flow regarding weights and activations.
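The normalize-then-quantize flow can be illustrated with a short NumPy sketch. It rests on several assumptions that are not confirmed by the text: normalization divides by the maximum magnitude (per output channel for weights, over all hidden nodes for activations), the quantizer is a symmetric signed grid with 2^(bits−1) − 1 positive levels, and the names `tlq`, `nlq_weights`, and `nlq_activations` are hypothetical.

```python
import numpy as np

def quantize_unit_range(x, bits):
    """Uniformly quantize values to a signed `bits`-bit grid on [-1, 1]."""
    levels = 2 ** (bits - 1) - 1       # ASSUMPTION: symmetric signed grid
    return np.round(np.clip(x, -1.0, 1.0) * levels) / levels

def tlq(x, bits):
    """Linear quantization without normalization: out-of-range values saturate."""
    return quantize_unit_range(x, bits)

def nlq_weights(w, bits, eps=1e-12):
    """Normalize each output channel (column) by its max magnitude, then quantize."""
    scale = np.max(np.abs(w), axis=0, keepdims=True) + eps
    return quantize_unit_range(w / scale, bits), scale

def nlq_activations(h, bits, eps=1e-12):
    """Normalize the activation vector by its max magnitude, then quantize."""
    scale = np.max(np.abs(h)) + eps
    return quantize_unit_range(h / scale, bits), scale

w = np.array([[4.0, 0.2], [-2.0, 0.1]])
print(tlq(w, 4))                       # entries beyond +/-1 saturate
wq, scale = nlq_weights(w, 4)
print(wq * scale)                      # de-normalized values stay close to w
```

With this sketch, the saturation in `tlq` discards the magnitude of every operand beyond ±1, whereas the normalized variants keep the error of each channel within half a quantization step times its scale.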

3.3. Experimental Setup

We implemented our compression algorithm in TensorFlow. Two datasets, Penn Treebank (PTB) [34] and TIMIT [35], are used to validate our compression flow. Note that TIMIT requires pre-processing to extract the Mel-frequency cepstral coefficients (MFCC) and obtain the 40-dimensional input feature data. When evaluated on PTB, the model has two LSTM layers with 200 hidden nodes and one fully connected layer. GradientDescentOptimizer is chosen as the optimizer, and the learning rate is set to 1. When evaluated on TIMIT, the model has five LSTM layers and a fully connected layer, with 512 hidden nodes in each LSTM layer. Adam is chosen as the optimizer, and the learning rate is set to 1 .

3.4. Comparison with Bank-Balanced Sparsity

Both the perplexity and the phone error rate are chosen as two separate performance metrics to determine the quality of the model, as defined below:

in which represents an actual probability distribution and is a probability distribution being estimated by the model. A low perplexity should indicate that the associated probability distribution is good at predicting some specific sample:

where is the number of the phonemes misidentified by the model, and is the number of the total phonemes.
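Both metrics can be written compactly. The sketch below assumes that perplexity is 2 raised to the cross-entropy (in bits) between the actual and the estimated distributions, and that the phone error rate is the percentage of misidentified phonemes; the function and argument names are illustrative only.

```python
import math

def perplexity(p, q):
    """Return 2 ** H(p, q), with H the cross-entropy in bits between p and q."""
    cross_entropy = -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)
    return 2.0 ** cross_entropy

def phone_error_rate(num_misidentified, num_phonemes):
    """Return the share of misidentified phonemes as a percentage."""
    return 100.0 * num_misidentified / num_phonemes

uniform = [0.25, 0.25, 0.25, 0.25]
print(perplexity(uniform, uniform))    # a uniform guess over 4 symbols
print(phone_error_rate(5, 200))
```

A model that spreads its probability uniformly over four symbols has a perplexity of 4, which matches the intuition that perplexity counts the effective number of equally likely choices.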

After the permuted block diagonal mask matrix (with a rank of p) is applied, the resulting model sparsity can be estimated as $1 - 1/p$. In our experiment, p is set to {2, 4, 6, 8, 10}, and hence the corresponding sparsity is {50%, 75%, 83.3%, 87.5%, 90%}.
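As a quick arithmetic check of these sparsity figures, the snippet below assumes that each p-by-p block keeps exactly p of its p² entries, so the fraction removed is 1 − 1/p. The helper name `mask_sparsity` is hypothetical.

```python
def mask_sparsity(p):
    """Fraction of zeroed weights when each p-by-p block keeps p of its p*p entries."""
    return 1.0 - 1.0 / p

sparsities = {p: round(100 * mask_sparsity(p), 1) for p in (2, 4, 6, 8, 10)}
print(sparsities)  # {2: 50.0, 4: 75.0, 6: 83.3, 8: 87.5, 10: 90.0}
```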

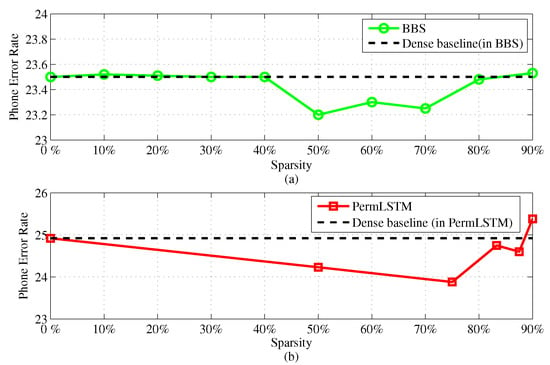

Figure 6 shows the phone error rate versus the sparsity for both BBS (Bank-Balanced Sparsity) and PermLSTM on the same TIMIT dataset. When the sparsity is less than 87.5%, both BBS and PermLSTM exhibit almost no accuracy loss. As the sparsity increases to 90%, the relative deviation from the baseline is 0.4% for PermLSTM and 0.1% for BBS, both within an acceptable margin.

Figure 6.

Phone error rate vs. sparsity on the TIMIT dataset. (a) Evaluated on Bank-Balanced Sparsity (BBS). (b) Evaluated on PermLSTM.

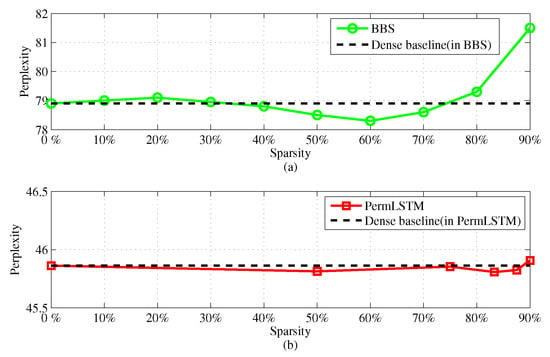

For the PTB dataset, the trend curves regarding the perplexity versus the sparsity are depicted in Figure 7, again for both the BBS and the PermLSTM cases. It can be seen that, as the sparsity is increased above 80%, BBS begins to show a dramatic deterioration to the model accuracy. Specifically, at a 90% sparsity, BBS has an absolute deviation of 2.6 in terms of a worse perplexity. In contrast, the absolute deviation for PermLSTM is merely 0.05.

Figure 7.

Perplexity vs. sparsity on the Penn Treebank (PTB) dataset. (a) Evaluated on BBS. (b) Evaluated on PermLSTM.

3.5. Comparison with the Traditional Linear Quantization

With PTB, we also study the impact on perplexity of the traditional linear quantization (TLQ), i.e., the linear quantization without normalization, and of the linear quantization with local normalization (NLQ). In the experiment, the rank of the block-diagonal mask matrix is 4. Table 3 gives the perplexity results in both cases for a selection of bit-width settings.

As shown in Table 3, for (bitsW, bitsA) = (4, 7), the network perplexity of our Prune&NLQ is 45.926, which is fairly close to the full-precision value of 45.861. However, with the same quantization arrangement for the weight and the activation, Prune&TLQ has a perplexity of 115.276, about 2.5 times the full-precision result. Note that, in the case of TLQ, the gradients start to vanish as the perplexity drops to about 115, and the perplexity consequently stops decreasing. In the case of NLQ, however, the gradients are well preserved throughout training, which allows the perplexity to keep declining until it reaches a level similar to that of the full-precision network.

Evidently, the traditional linear quantization suffers from saturation when the weights and the activations are updated. This limitation not only produces large errors in magnitude but also reduces the gradient to zero in the calculation. With Prune&NLQ, however, both the weights and the activations are evenly spread over the range [−1, 1], which mitigates this loss in precision, especially for large-value operands.

4. Simplification of Activation Functions

The sigmoid and tanh functions are the two activation functions usually used in LSTM. They involve exponentiation and division, and thus require many hardware resources to implement. There are three common computation approaches: (1) look-up table [33]; (2) curve fitting with polynomials [36]; (3) piecewise linear approximation (PLA) [37]. The computation based on a look-up table usually requires a memory of several to ten kilobytes, which is more energy-intensive. Curve fitting suffers from high computational complexity. Although the piecewise linear approximation is much simpler to compute, the associated deviation may become unacceptable and degrade the network performance. That problem is fundamentally caused by a mismatch between the approximated activation function and its operand precision.

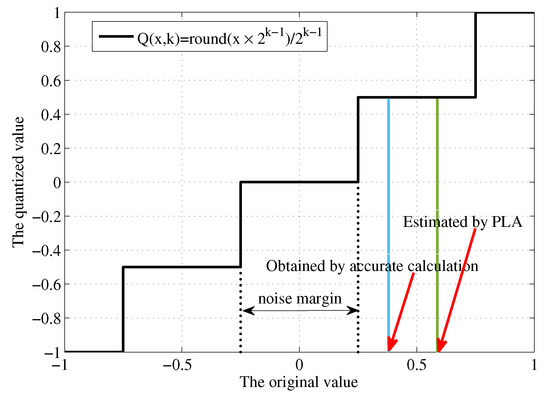

In our design flow, all the operands are also approximated by quantization, which, in turn, helps alleviate the mismatch just mentioned. As suggested in Figure 8, the noise margin of a quantized operand may reconcile the deviations (errors) introduced by the PLA of the sigmoid or tanh functions.

Figure 8.

Illustration of an approximation error being reconciled by the noise margin.

Theoretically, the noise margin corresponds to , where k is the bit-width of the activation. The value of k should be selected so that the noise margin is on par with the deviation range; in that case, the model accuracy is not compromised. Bear in mind that, if the bit-width of an operand is too small, the model training is likely to have difficulty converging.

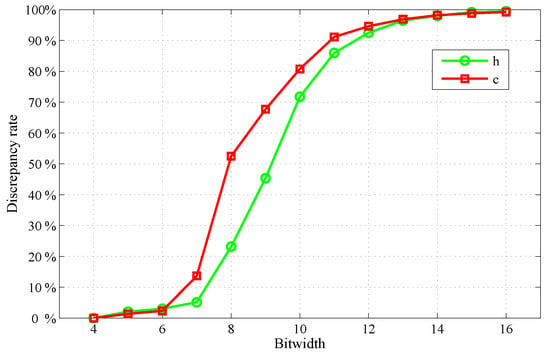

To reveal how well the PLA-approximated activation function matches its quantized operand, we carried out a simple analysis. For an LSTM cell with 256 hidden nodes, the hidden state and the cell state are calculated twice, i.e., with and without PLA applied to the activation function. Wherever an error caused by PLA is greater than the noise margin of the chosen bit-width (k), a discrepancy is recorded. We count all the discrepancies over 50 randomly generated pairs of weight and input, both quantized with bit-widths varying from 4 to 16. Note that this comparison goes through all 256 outputs for each weight-input pair. The discrepancy rates are then estimated and depicted in Figure 9. A detailed procedure can be found in Algorithm 1. The two PLA functions for the sigmoid and the hyperbolic tangent are specified below.

Algorithm 1. Pseudocode for calculating the discrepancy rate between the PLA-approximated activation function and its quantized operand.
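The counting procedure can be sketched as follows. This is not a reproduction of Algorithm 1: the well-known PLAN piecewise linear sigmoid stands in for the paper's own PLA functions, the noise margin is assumed to be 2^(−k) for a k-bit operand, and the pre-activations are drawn uniformly at random instead of being computed from quantized weight-input pairs.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def pla_sigmoid(x):
    """PLAN piecewise linear sigmoid (a stand-in, not the paper's own PLA)."""
    a = np.abs(x)
    y = np.where(a >= 5.0, 1.0,
        np.where(a >= 2.375, 0.03125 * a + 0.84375,
        np.where(a >= 1.0, 0.125 * a + 0.625, 0.25 * a + 0.5)))
    return np.where(x >= 0, y, 1.0 - y)    # use the symmetry sigmoid(-x) = 1 - sigmoid(x)

def discrepancy_rate(bits, trials=50, hidden=256, seed=0):
    """Share of outputs whose PLA error exceeds the assumed noise margin 2**-bits."""
    rng = np.random.default_rng(seed)
    margin = 2.0 ** -bits                  # ASSUMPTION: margin of a k-bit operand
    pre_act = rng.uniform(-8.0, 8.0, size=(trials, hidden))  # stand-in pre-activations
    error = np.abs(pla_sigmoid(pre_act) - sigmoid(pre_act))
    return float(np.mean(error > margin))

rates = {k: discrepancy_rate(k) for k in range(4, 17)}
print(rates)
```

Because the margin shrinks as the bit-width grows while the approximation error stays fixed, the rate in this sketch can only rise with the bit-width, which is the qualitative trend described for Figure 9.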

Figure 9.

The discrepancy rate vs. the bit-width, to characterize mismatch between the PLAed activation function and its quantized operand.

From Figure 9, one can see that a bit-width of 4∼7 for the weight and the input is sufficiently accurate with respect to the above PLA functions: the discrepancy rates are well below 20%, that is, more than 80% of the approximate results are consistent with the exact results. In addition, because a larger bit-width gives a smaller noise margin, larger bit-widths (8∼16) exhibit even higher discrepancy rates, which implies a strong influence from the precision mismatch. As mentioned before, if the bit-width of an operand is too small, the model training is likely to have difficulty converging. Therefore, trading off the difficulty of model training against the error introduced by the approximation, the most suitable bit-width is 7.

5. Design of Hardware Architecture

In this section, we will discuss the design of the hardware architecture for the proposed LSTM accelerator, by addressing some challenging issues regarding an optimization towards high operational efficiency in terms of energy and resources.

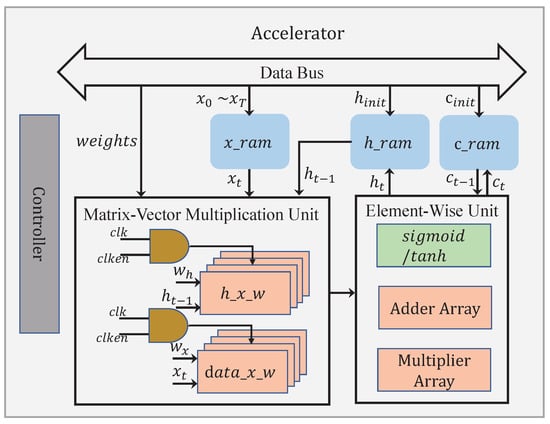

5.1. System Overview

An architecture diagram for the proposed accelerator is shown in Figure 10. The accelerator has two main functional blocks, namely the matrix–vector multiplication unit and the element-wise unit, containing all the basic operations required by the LSTM. The matrix–vector multiplication unit is primarily responsible for the computations regarding and defined in Equations (1)–(4), where . As and are usually differing in dimension, the run times required to complete these two categories of multiplications are different. By exploiting the waiting intervals for the sake of energy saving, our architecture has two separate modules, i.e., and , dedicated to accomplishing and , respectively. When , will be read from at a later time, in a way to align the finishing time for both matrix–vector multiplications. During the time before the next is read from , the module is idle, with its clock disabled. When , it should be the other way around. This time, reading from is being delayed until a later time, in the meantime setting the module at idle. By doing so, a big chunk of the total dynamic power consumption can be effectively cut off. For example, in a typical word sequence generation case, the difference between m (e.g., 27) and n (e.g., 256 or 512) could be quite large, benefiting the energy savings to a large extent. Note that the element-wise unit performs the element-wise addition, the element-wise multiplication, and the activation function.

Figure 10.

The overall architecture of the proposed accelerator.

5.2. Matrix–Vector Multiplication

All the matrix–vector multiplications are regarded as the most computationally intensive during the LSTM inference. Therefore, an effort is directed towards possible reductions in such operations, by tapping into the enhanced sparsity in the weight, the activation, and the product after the compression. However, solutions to a complete treatment were seldom discussed in the prior works.

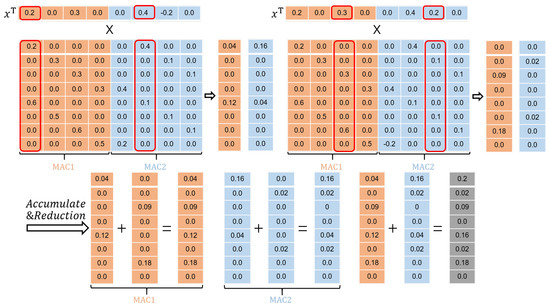

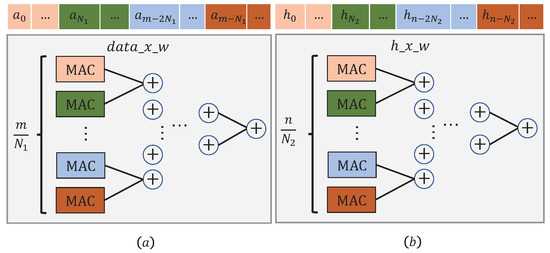

As for the activation, the accelerator has been designed to calculate the matrix–vector multiplications in a column-wise fashion, as depicted in Figure 11. As long as an activation is zero, the subsequent multiplications involving and will be bypassed. Here, represents all the weights from a corresponding column. Figure 12 illustrates the processing flow executed in module . Along the way, m activations are being fed into over clock cycles.

Figure 11.

A column-wise processing procedure.

Figure 12.

The processing flow of (a) Module ; (b) Module .

Note that the MAC (Multiply and accumulate) unit marked in a color should coordinate those data slices marked with the same color. A similar procedure can also be applied to the module .
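The column-wise procedure with activation zero-skipping can be modelled in software as below. This is a behavioural sketch only; the function name `columnwise_matvec` is hypothetical and the code does not model the clocking or the parallel MAC array.

```python
import numpy as np

def columnwise_matvec(weights, activations):
    """Accumulate y = W @ x one column at a time, skipping zero activations."""
    y = np.zeros(weights.shape[0], dtype=weights.dtype)
    columns_used = 0
    for j, a in enumerate(activations):
        if a == 0:                 # zero activation: the whole column is bypassed
            continue
        y += weights[:, j] * a     # one column of weights scaled by one activation
        columns_used += 1
    return y, columns_used

rng = np.random.default_rng(1)
W = rng.normal(size=(6, 8))
x = np.array([0.0, 1.5, 0.0, -2.0, 0.0, 0.0, 0.25, 0.0])
y, used = columnwise_matvec(W, x)
print(used, np.allclose(y, W @ x))
```

Processing by columns is what makes the skip cheap: a single zero test on one activation removes an entire column of multiplications.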

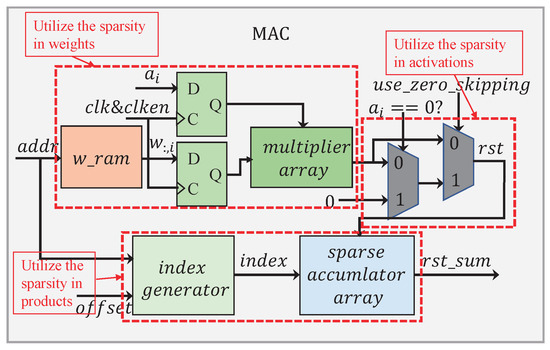

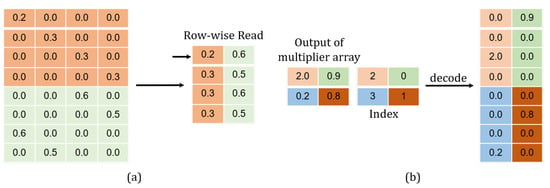

The MAC submodule has the processing flow given in Figure 13. To reduce the memory footprint and the number of multiplications, we developed a memory-access strategy that fully caters for the weight sparsity. As illustrated in Figure 14a, only the non-zero weights in the same column of the matrix are stored along a single row of the memory, so that they can be read in one clock cycle.

Figure 13.

The processing flow of Module multiply and accumulate (MAC).

Figure 14.

Process involving (a) data allocation into a weight memory; (b) data decoding into a recovered form.

In Figure 13, two 2:1 multiplexers are employed to dynamically configure the circuit according to the sparsity of the activation. When , the first multiplexer selects zero as its output. At the same time, the clock to the weight and activation registers is disabled. As a result, all the operations in the multiplier array are shut down. This saves a great deal of power, considering that the activation is highly sparse. When , the result calculated by the multiplier array passes straight through the first multiplexer. The second multiplexer switches between the zero-skipping and the non-zero-skipping modes, simply by controlling the signal , to further cut down the power consumption.

The sparsity in the product should also be taken into account. In order to have only the non-zero products sent for accumulation, their positions in the matrix need to be recorded. As long as either the multiplier or the multiplicand is zero, their product must be zero. In a way, the positions regarding these non-zero products are directly related to the corresponding non-zero weights. According to (7), the coordinates for the non-zero values in a weight matrix should observe the following relations:

In (17), the term calculates the longitude coordinate for all the non-zero weights in a relevant sub-matrix. Since the non-zero values belonging to the same column in the weight matrix are stored along a specific row in the memory , j is then regarded as this row’s address (). As for the offset, it can be decided just by a calculation of , considering that . The module takes such a differentiation route to determine all the locations regarding the non-zero products, e.g.,

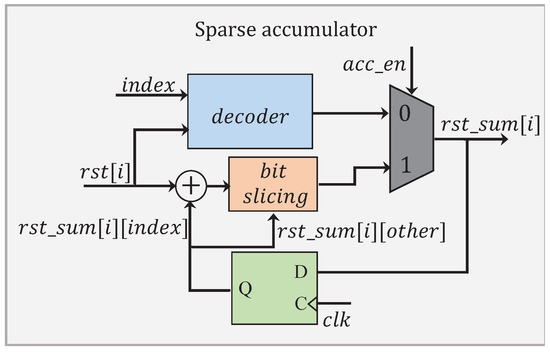

The module has a processing flow as depicted in Figure 15. When an accumulation is not required, the signal is set to 0. This allows for performing only the decoding operation, which has been illustrated in Figure 14b. The non-zero product is decoded in a format of . A total of p accumulated results are individually concatenated into bits in the representation. When the accumulation is required, the signal is set to 1. Instead of performing all the p additions, only those additions involving and the corresponding contents in are processed. The intermediate results obtained are later stitched up with the others in to complete a full accumulation.

Figure 15.

Block diagram of the sparsity-aware accumulator.
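The index-free addressing and the sparsity-aware accumulation can be illustrated together in software. The sketch assumes the same per-block shift rule inferred from the 8-by-8 example in Section 3.1, and all function names are hypothetical; it models the data flow, not the hardware timing or the bit-level concatenation.

```python
import numpy as np

def block_shift(block_row, block_col, p):
    # ASSUMPTION: shift rule inferred from the 8-by-8 example in Section 3.1.
    return (block_row * p + block_col) % p

def nonzero_rows(j, m, p):
    """Row indices of the m/p non-zero weights in column j, one per block row."""
    local_col, block_col = j % p, j // p
    return [r * p + (local_col - block_shift(r, block_col, p)) % p
            for r in range(m // p)]

def compress(pruned, p):
    """Store only the non-zero weights of column j in memory row j."""
    m, n = pruned.shape
    return np.array([[pruned[i, j] for i in nonzero_rows(j, m, p)]
                     for j in range(n)])

def sparse_matvec(memory, x, m, p):
    """Column-wise product that accumulates only the non-zero products."""
    y = np.zeros(m)
    for j, a in enumerate(x):
        if a == 0:                 # activation sparsity: skip the column
            continue
        for w, i in zip(memory[j], nonzero_rows(j, m, p)):
            y[i] += w * a          # m/p additions per column instead of m
    return y
```

Since the row positions are recomputed from the column index alone, no index memory is needed, and each processed column triggers only m/p additions.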

5.3. Operations Reduction Analysis

A theoretical analysis has been carried out on the reduced operation effect brought by our proposed design approach. For a weight matrix , it is pruned individually by mask matrices in p-by-p dimensions. Hence, the ratio in terms of the multiplication reduction by treating the weight sparsity can be estimated as follows:

Furthermore, suppose the non-zero activation ratio is . In addition, all the weights taking part in the multiplication have been pruned. Then, the multiplication reduction ratio obtained by also exploiting the sparsity of the activation can be estimated by:

For the product, the sparsity-aware processing reduces only the number of additions, in the case where the model is pruned through the permuted block diagonal mask matrices and the matrix–vector multiplication is executed column-wise (see Figure 11). In each column, there are non-zero products. Thus, the number of additions saved by exploiting the product sparsity can be calculated as follows:

6. Experimental Results and Comparisons

Experiments are performed to evaluate the PermLSTM accelerator design. The results are then compared with those of other state-of-the-art LSTM accelerators.

6.1. Experimental Setup

In our experiments, the Arria 10 (10AX115U4F45I3SG) FPGA (Intel, Santa Clara, CA, USA) is chosen as the test platform. The design, coded in Verilog HDL, is synthesized in the Quartus II 15.0 environment and operates at a frequency of 150 MHz. The Quartus PowerPlay (a dedicated Power Analyzer tool) is used to estimate the post-placement power consumption of the device. The following operating conditions are assumed: (1) 25 °C ambient temperature; (2) 12.5% toggle rate for the I/O signals; (3) the real-time toggle rate applied to the core signals; (4) 0.5 static probability in BRAMs.

6.2. Breakdown of the Energy- and Resource-Saving Rates

The energy and resource savings vary with the weight, activation, and product sparsity; each contribution is measured and discussed below. An original matrix–vector multiplication of is referred to as a benchmark, where and . The number of MACs in module is set to 15, which implies that each MAC should perform 15 multiply-accumulate operations concurrently. The power dissipation and the resource utilization are examined under the following conditions: (1) none of the sparsity is considered (the baseline); (2) only the weight sparsity is considered; (3) both the weight and the activation sparsity are considered; (4) all of the sparsities involving the weight, the activation, and the product are considered. The measurement results are presented in Table 4.

Table 4.

The breakdown of both energy and resources saved by utilizing three types of sparsity.

In the experiments, , and p take the values of 120, 120, and 4, respectively. According to (19), the weight-sparsity related multiplication reduction rate () is 0.25 (1/4), which means 75% of the operations have now been cut out. Hence, the number of the DSP Blocks needed can be drastically reduced from 900 to 225. This gives rise to an effective power reduction by 718 mW.

Assume that the sparsity ratio of the activation () is 50%. According to (20), the activation-sparsity related multiplication reduction rate () is 0.125 (1/8). This further reduces the number of multiplications. As a result, the power consumption decreases by a further 188 mW.

In addition, assume the sparsity of the product to be the same as that of the weight. According to (21), the number of reduced additions () is 5400. Overall, this comprehensive weight/activation/product sparsity-aware design delivers the lowest power consumption (871.63 mW) and the least resource utilization (minimum physical footprint in terms of logic fabrics and DSP blocks) by a large margin.
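The figures quoted in this subsection (0.25, 0.125, and 5400) can be reproduced with a short calculation. The sketch below is an assumption about the form of (19)–(21), not a transcription of them: it takes the weight-related ratio as 1/p, the activation-related ratio as the non-zero activation ratio divided by p, and the saved additions as the per-column saving multiplied by the number of non-zero activations. The function and parameter names are hypothetical.

```python
from fractions import Fraction


def weight_mult_ratio(p):
    """Fraction of multiplications left after pruning with p-by-p masks (assumed: 1/p)."""
    return Fraction(1, p)


def activation_mult_ratio(p, nonzero_activation_ratio):
    """Fraction left when zero activations are skipped as well (assumed: ratio / p)."""
    return Fraction(1, p) * nonzero_activation_ratio


def additions_saved(n_rows, n_cols, p, nonzero_activation_ratio):
    """Additions avoided by accumulating only non-zero products (assumed reading).

    Each processed column has n_rows / p non-zero products, so n_rows - n_rows / p
    accumulations are avoided per column; only columns with a non-zero
    activation are processed at all.
    """
    saved_per_column = n_rows - n_rows // p
    processed_columns = nonzero_activation_ratio * n_cols
    return saved_per_column * processed_columns


# Values used in the experiment: 120-by-120 weight matrix, p = 4, 50% activation sparsity.
r_weight = weight_mult_ratio(4)                                 # 1/4
r_activation = activation_mult_ratio(4, Fraction(1, 2))         # 1/8
n_saved = additions_saved(120, 120, 4, Fraction(1, 2))          # 90 * 60
print(float(r_weight), float(r_activation), int(n_saved))
```

Under these assumptions, the count of 5400 corresponds to 90 avoided accumulations in each of the 60 columns that carry a non-zero activation.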

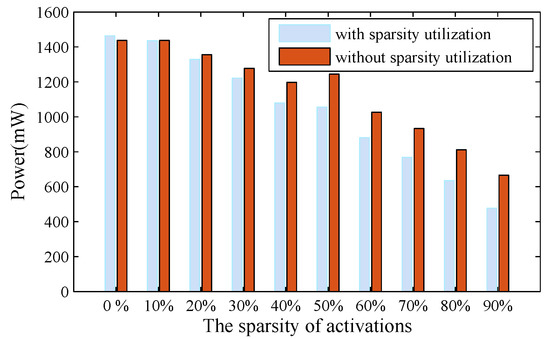

6.3. Influence by Different Levels of the Sparsity in Activation

Whereas the weight sparsity is fixed once the training is finished, the activation sparsity changes at run time, which affects the achievable power saving. The activation sparsity level is varied from 0% to 90%, and the power dissipation is measured in two conditions, i.e., with and without the activation sparsity being exploited. The results are shown in Figure 16. As a general trend, the power decreases rapidly as the activation sparsity increases in both conditions. However, the advantage of the sparsity-aware design over the non-sparsity-aware design is wider at high activation sparsity than at low activation sparsity. For an activation sparsity below 10%, the power dissipations in the two cases are almost equal.

Figure 16.

Power consumption vs. different levels of the sparsity in the activation.
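The widening gap can be related to the number of multiplications that are actually executed. The following sketch counts operations only and is not a power model; it assumes the 120-by-120, p = 4 benchmark of Section 6.2 and that skipping zero activations removes a proportional share of the remaining multiplications. The function name and its parameters are hypothetical.

```python
def executed_multiplications(n_rows, n_cols, p, sparsity_pct, skip_zero_activations):
    """Multiplications executed for one matrix-vector product (operation count only)."""
    nonzero_weights = n_rows * n_cols // p   # weights left after pruning
    if not skip_zero_activations:
        return nonzero_weights               # every remaining weight is multiplied
    return nonzero_weights * (100 - sparsity_pct) // 100


levels = list(range(0, 100, 10))             # activation sparsity from 0% to 90%
without_skip = [executed_multiplications(120, 120, 4, s, False) for s in levels]
with_skip = [executed_multiplications(120, 120, 4, s, True) for s in levels]
gap = [a - b for a, b in zip(without_skip, with_skip)]

for s, a, b in zip(levels, without_skip, with_skip):
    print(f"{s:2d}%  without skipping: {a}  with skipping: {b}")
```

The gap in executed multiplications grows linearly with the activation sparsity, which is consistent with the trend described above. The count stays constant when zero activations are not skipped, so it does not capture the fall in measured power that Figure 16 reports for that case.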

6.4. Comparison with State-of-the-Art LSTM Accelerators

The following design parameters have been specified in our implementation: (1) the rank for the block-diagonal matrix is 4; (2) the bit-width for the weight is 4; (3) the bit-width for the activation is 7. Furthermore, there are 200 hidden nodes. Then, 25 in module are employed, with each performing 25 multiply-accumulate operations concurrently. Table 5 summarizes the resources allocated in our design, based on the Quartus post-fitting reports. Because the low bit-width multipliers can be implemented with LUTs, no DSP blocks are required.

Table 5.

The resource utilization of the PermLSTM accelerator.

For the performance, we compared the proposed LSTM accelerator with five state-of-the-art LSTM accelerators, i.e., BBS [17], FINN-L [38], BRDS [18], DeltaRNN [39], and E-LSTM [40], all based on FPGAs. The results have been summarized in Table 6. To be fair, we choose the data of FINN-L with the bit-width setting being 4-4-4 (see note 1 in Table 6).

Table 6.

Comparison with Other FPGA-Based Long Short-Term Memory (LSTM) Accelerators.

Thanks to the coordinated simplification approach towards network compression, the proposed LSTM accelerator design achieves a throughput of 2.22 TOPS and an energy efficiency of 389.5 GOPS/W. Such processing performance demonstrates the ∼ increase in throughput and the ∼ improvement in energy efficiency, in comparison to some other state-of-the-art works.

7. Conclusions

In this paper, we presented a novel pruning and quantization approach. By using the permuted block diagonal mask matrices to prune the model, we addressed the problems of structural irregularity and large indexing overhead. By normalizing the operands locally prior to the quantization, the problem of vanishing gradients is alleviated. Tapping into the high sparsity created by the proposed compression approach, an energy-efficient accelerator architecture called PermLSTM has been designed. In addition, based on our analysis of the effect of the operands' quantization error on the activation function, a piecewise linear approximation is applied and tailored to match the activation bit-width selected after quantization. The design has been implemented on an Arria-10 FPGA. According to the experimental results, PermLSTM achieves a throughput of 2.22 TOPS and an energy efficiency of 389.5 GOPS/W, demonstrating superior processing performance in comparison to prior works.

Author Contributions

Conceptualization, Y.Z. and H.Y.; methodology, Y.Z. and H.Y.; software, Y.Z.; validation, Y.Z., H.Y., Y.J., and Z.H.; formal analysis, Y.Z., H.Y., Y.J., and Z.H.; investigation, Y.Z. and H.Y.; resources, H.Y. and Y.J.; data curation, Y.J. and Z.H.; writing—original draft preparation, Y.Z. and H.Y.; writing—review and editing, Y.Z. and H.Y.; visualization, Y.Z.; supervision, H.Y.; project administration, H.Y.; funding acquisition, H.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported jointly by the National Natural Science Foundation of China under Grants 61876172 and 61704173 and the Major Program of Beijing Science and Technology under Grant Z171100000117019. Part of the research activities were also conducted at Shandong Industrial Institute of Integrated Circuits Technology, China.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Hinton, G.; Deng, L.; Yu, D.; Dahl, G.; Mohamed, A.; Jaitly, N.; Senior, A.; Vanhoucke, V.; Nguyen, P.; Sainath, T.N.; et al. Deep Neural Networks for Acoustic Modeling in Speech Recognition: The Shared Views of Four Research Groups. IEEE Signal Process. Mag. 2012, 29, 82–97.

2. Dzmitry, B.; Kyunghyun, C.; Yoshua, B. Neural Machine Translation by Jointly Learning to Align and Translate. In Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

3. Rueckert, E.; Nakatenus, M.; Tosatto, S.; Peters, J. Learning inverse dynamics models in O(n) time with lstm networks. In Proceedings of the 17th IEEE-RAS International Conference on Humanoid Robotics, Birmingham, UK, 15–17 November 2017.

4. Khan, M.; Kim, J. Toward Developing Efficient Conv-AE-Based Intrusion Detection System Using Heterogeneous Dataset. Electronics 2020, 9, 1771.

5. Mark, H. Computing’s energy problem (and what we can do about it). In Proceedings of the 2014 IEEE International Conference on Solid-State Circuits Conference, Digest of Technical Papers, San Francisco, CA, USA, 9–13 February 2014.

6. Sepp, H.; Jürgen, S. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

7. Felix, A.G.; Nicol, N.; Jürgen, S. Learning Precise Timing with LSTM Recurrent Networks. J. Mach. Learn. Res. 2002, 3, 115–143.

8. Yu, C.; Felix, X.; Rogério, S.; Sanjiv, K.; Alok, N.; Shi, C. An Exploration of Parameter Redundancy in Deep Networks with Circulant Projections. In Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015.

9. Hao, Z.; Jose, M.; Fatih, P. Less Is More: Towards Compact CNNs. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

10. Song, H.; Huizi, M.; William, J. Deep Compression: Compressing Deep Neural Network with Pruning, Trained Quantization and Huffman Coding. In Proceedings of the 4th International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

11. Frederick, T.; Greg, M. CLIP-Q: Deep Network Compression Learning by In-Parallel Pruning-Quantization. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

12. Junki, P.; Wooseok, Y.; Daehyun, A.; Jaeha, K.; JaeJoon, K. Balancing Computation Loads and Optimizing Input Vector Loading in LSTM Accelerators. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2019, 39, 1889–1901.

13. Min, L.; Linpeng, L.; Hai, W.; Yan, L.; Hongbo, Q.; Wei, Z. Optimized Compression for Implementing Convolutional Neural Networks on FPGA. Electronics 2019, 8, 295.

14. Xin, D.; Shangyu, C.; Sinno, J. Learning to Prune Deep Neural Networks via Layer-wise Optimal Brain Surgeon. In Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.

15. Pavlo, M.; Arun, M.; Stephen, T.; Iuri, F.; Jan, K. Importance Estimation for Neural Network Pruning. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019.

16. Sharan, N.; Eric, U.; Gregory, F. Block-Sparse Recurrent Neural Networks. arXiv 2017, arXiv:1711.02782.

17. Shi, C.; Chen, Z.; Zhu, Y.; Wencong, X.; Lan, N.; De, Z.; Yun, L.; Ming, W.; Lin, Z. Efficient and Effective Sparse LSTM on FPGA with Bank-Balanced Sparsity. In Proceedings of the 2019 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Seaside, CA, USA, 24–26 February 2019.

18. Seyed, G.; Erfan, T.; Mehdi, K.; Ali, K.; Massoud, P. BRDS: An FPGA-based LSTM Accelerator with Row-Balanced Dual-Ratio Sparsification. arXiv 2021, arXiv:2101.02667.

19. Itay, H.; Matthieu, C.; Daniel, S.; Ran, E.; Yoshua, B. Binarized Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 29: Annual Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

20. Chenzhuo, Z.; Song, H.; Huizi, M.; William, J. Trained Ternary Quantization. In Proceedings of the 5th International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

21. Shuchang, Z.; Zekun, N.; Xinyu, Z.; He, W.; Yuxin, W.; Yuheng, Z. DoReFa-Net: Training Low Bitwidth Convolutional Neural Networks with Low Bitwidth Gradients. arXiv 2016, arXiv:1606.06160.

22. Jungwook, C.; Zhuo, W.; Swagath, V.; Pierce, I.; Vijayalakshmi, S.; Kailash, G. PACT: Parameterized Clipping Activation for Quantized Neural Networks. arXiv 2018, arXiv:1805.06085.

23. Elham, A.; Sarma, B.K. Vrudhula. An Energy-Efficient Reconfigurable LSTM Accelerator for Natural Language Processing. In Proceedings of the International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019.

24. Elham, A.; Sarma, V. ELSA: A Throughput-Optimized Design of an LSTM Accelerator for Energy-Constrained Devices. ACM Trans. Embed. Comput. Syst. 2020, 19, 3:1–3:21.

25. Jo, J.; Kung, J.; Lee, Y. Approximate LSTM Computing for Energy-Efficient Speech Recognition. Electronics 2020, 9, 2004.

26. Patrick, J.; Jorge, A.; Tayler, H.; Tor, M.; Andreas, M. Stripes: Bit-serial deep neural network computing. In Proceedings of the 49th Annual IEEE/ACM International Symposium on Microarchitecture, Taipei, Taiwan, 15–19 October 2016.

27. Bank-Tavakoli, E.; Seyed, G.; Mehdi, K.; Ali, K.; Massoud, P. Polar: A pipelined/overlapped fpga-based lstm accelerator. IEEE Trans. Very Large Scale Integr. Syst. 2019, 28, 838–842.

28. Jorge, A.; Alberto, D.; Patrick, J.; Sayeh, S.; Gerard, O.; Roman, G.; Andreas, M. Bit-pragmatic deep neural network computing. In Proceedings of the 50th Annual IEEE/ACM International Symposium on Microarchitecture, Cambridge, MA, USA, 14–18 October 2017.

29. Shen, H.; Kun, C.; Chih, L.; Hsuan, C.; Bo, T. Design of a Sparsity-Aware Reconfigurable Deep Learning Accelerator Supporting Various Types of Operations. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 376–387.

30. Shen, H.; Hsuan, C. Sparsity-Aware Deep Learning Accelerator Design Supporting CNN and LSTM Operations. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Sevilla, Spain, 10–21 October 2020.

31. Chunhua, D.; Siyu, L.; Yi, X.; Keshab, K.; Xuehai, Q.; Bo, Y. PermDNN: Efficient Compressed DNN Architecture with Permuted Diagonal Matrices. In Proceedings of the 51st Annual IEEE/ACM International Symposium on Microarchitecture, Fukuoka, Japan, 20–24 October 2018.

32. Yong, Z.; Haigang, Y.; Zhihong, H.; Tianli, L.; Yiping, J. A High Energy-Efficiency FPGA-Based LSTM Accelerator Architecture Design by Structured Pruning and Normalized Linear Quantization. In Proceedings of the International Conference on Field-Programmable Technology, Tianjin, China, 9–13 December 2019.

33. Song, H.; Junlong, K.; Huizi, M.; Yiming, H.; Xin, L.; Yubin, L. ESE: Efficient Speech Recognition Engine with Sparse LSTM on FPGA. In Proceedings of the 2017 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 22–24 February 2017.

34. Mitchell, P.; Beatrice, S.; Mary, A. Building a Large Annotated Corpus of English: The Penn Treebank. Comput. Linguist. 1993, 19, 313–330.

35. Garofolo, J.; Lamel, L.; Fisher, W.; Fiscus, J.; Pallett, D.; Dahlgren, N.; Zue, V. TIMIT Acoustic-phonetic Continuous Speech Corpus. Linguist. Data Consort. 1992, 11.

36. Kewei, C.; Leilei, H.; Minjiang, L.; Xiaoyang, Z.; Yibo, F. A Compact and Configurable Long Short-Term Memory Neural Network Hardware Architecture. In Proceedings of the 2018 IEEE International Conference on Image Processing, Athens, Greece, 7–10 October 2018.

37. Juan, S.; Marian, V. Laika: A 5uW Programmable LSTM Accelerator for Always-on Keyword Spotting in 65 nm CMOS. In Proceedings of the 44th IEEE European Solid State Circuits Conference, Dresden, Germany, 3–6 September 2018.

38. Vladimir, R.; Alessandro, P.; Muhammad, M.; Giulio, G.; Norbert, W.; Michaela, B. FINN-L: Library Extensions and Design Trade-Off Analysis for Variable Precision LSTM Networks on FPGAs. In Proceedings of the 28th International Conference on Field Programmable Logic and Applications, Dublin, Ireland, 27–31 August 2018.

39. Chang, G.; Daniel, N.; Enea, C.; ShihChii, L.; Tobi, D. DeltaRNN: A Power-efficient Recurrent Neural Network Accelerator. In Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays, Monterey, CA, USA, 25–27 February 2018.

40. Wang, M.; Wang, Z.; Lu, J.; Lin, J.; Wang, Z. E-LSTM: An Efficient Hardware Architecture for Long Short-Term Memory. IEEE J. Emerg. Sel. Top. Circuits Syst. 2019, 9, 280–291.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).