1. Introduction

Image denoising is a fundamental and classic topic in image processing. Because of varying environments and sensor noise, a captured image usually contains noise, and transmission and storage may degrade it further [1]. Image denoising is therefore an important and indispensable part of many high-level vision tasks [2,3,4]. Additive white Gaussian noise (AWGN) is the most representative of all kinds of noise, and we make the common assumption that images are degraded by AWGN. An image degraded by AWGN can be modeled as $y = x + v$, where $y$ is the observed degraded image, $x$ is the noiseless clean image, and $v$ is the AWGN with zero mean and standard deviation $\sigma$. The image denoising problem is to restore the noiseless clean image $x$ from the observed image $y$.
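As a minimal sketch of this degradation model, the snippet below simulates the observation as the clean image plus zero-mean Gaussian noise using NumPy. The function name `add_awgn`, the constant test image and the noise level of 25 are illustrative assumptions, not values from the paper.

```python
import numpy as np

def add_awgn(x, sigma, rng):
    """Return (y, v) with y = x + v, where v is zero-mean Gaussian noise of std sigma."""
    v = rng.normal(loc=0.0, scale=sigma, size=x.shape)
    return x + v, v

rng = np.random.default_rng(0)
x = np.full((64, 64), 128.0)             # hypothetical constant "clean" image
y, v = add_awgn(x, sigma=25.0, rng=rng)  # sigma = 25 is an arbitrary example level

# If the noise were estimated perfectly, the clean image would follow as x = y - v.
x_restored = y - v
```

In practice the noise is unknown, so a denoiser has to estimate either the clean image or the noise from the observation alone.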

Recently, a large number of methods have been proposed for image denoising [5,6,7,8,9]. A direct way to restore the image is to estimate the noise $v$, after which the noiseless clean image is obtained as $x = y - v$. For a long time, before convolutional neural networks became popular, accurately estimating the noise was difficult and almost impossible. In [10], a deep convolutional residual network was proposed to learn the noise, and it achieved results superior to many typical denoising methods.

The bilateral filter [11] is a widely used denoising method because of its adaptability and good performance, but its performance decreases rapidly at high noise levels. An improvement is the non-local means (NLM) denoising method [12], which rests on the assumption that natural scenes tend to repeat themselves at the same and at different scales. NLM performs better than the bilateral filter, but its hyper-parameters depend on the noise standard deviation and are difficult to tune; an improper choice causes it to lose edges or leave noise. Ville uses Stein's unbiased risk estimate to monitor the mean squared error and avoid tuning the hyper-parameters [13]. BM3D [14] represents the peak of the improved NLM methods and is a benchmark for image denoising methods.

Transform domain denoising is another popular kind of denoising method [15,16]. It maps a noisy image to a transform domain and removes noise by adjusting the coefficients. Fourier transform denoising (FTD) is a typical transform domain method [17]. It transforms a noisy image to the frequency domain, removes the frequencies associated with noise, and recovers the image by the inverse Fourier transform. The difficulty for FTD is deciding whether high-frequency information is noise or image features. Wavelet domain denoising is a development of the Fourier approach: it maps an image to the wavelet domain, treats the wavelet coefficients of larger amplitude as information, and removes noise by clipping the coefficients of smaller amplitude [18,19]. Rajwade [20] used singular value decomposition for image denoising; noise is assumed to correspond to the smaller singular values and is removed by discarding them. Sparse and redundant representation is another popular transform domain method, which trains a redundant dictionary from the noisy image and obtains the restored image by optimizing an objective function with a sparsity prior on the coefficients [9]. Protter [21] generalized the sparse representation methods to image sequence denoising. Later, sparse representation methods were combined with non-local means to achieve better performance [22].

There are also many other denoising methods, such as total variation [23,24] and statistical neighborhood approaches [25]. Most of the above methods are model-based: they rely on prior knowledge and are realized by optimization. These methods share three difficulties: balancing noise removal against detail preservation, choosing the prior knowledge, and searching for the optimal solution.

An alternative is discriminative learning, which learns the mapping from noisy images to the corresponding noiseless clean ones. Burger [26] proposed a plain neural network for image denoising, which achieves performance comparable to BM3D. Zhang et al. [10] proposed a fully convolutional network for image denoising. By learning the residue of an image, it not only removes AWGN but also works on other image processing tasks, such as image super-resolution and image deblocking. However, these discriminative learning methods require a separate model to be trained for each noise level, which is very inconvenient. To tackle this problem, Isogawa [3] proposed a novel activation function with a varying threshold, so that noisy images with different noise levels are restored by a single network. Zhang et al. [27] trained the model on down-sampled sub-images and took the noisy image together with a noise level map as the input, so that noisy images at different noise levels can be handled by a single network. Lefkimmiatis [28] integrated non-local self-similarity into a convolutional neural network (CNN) and obtained results competitive with many state-of-the-art methods.

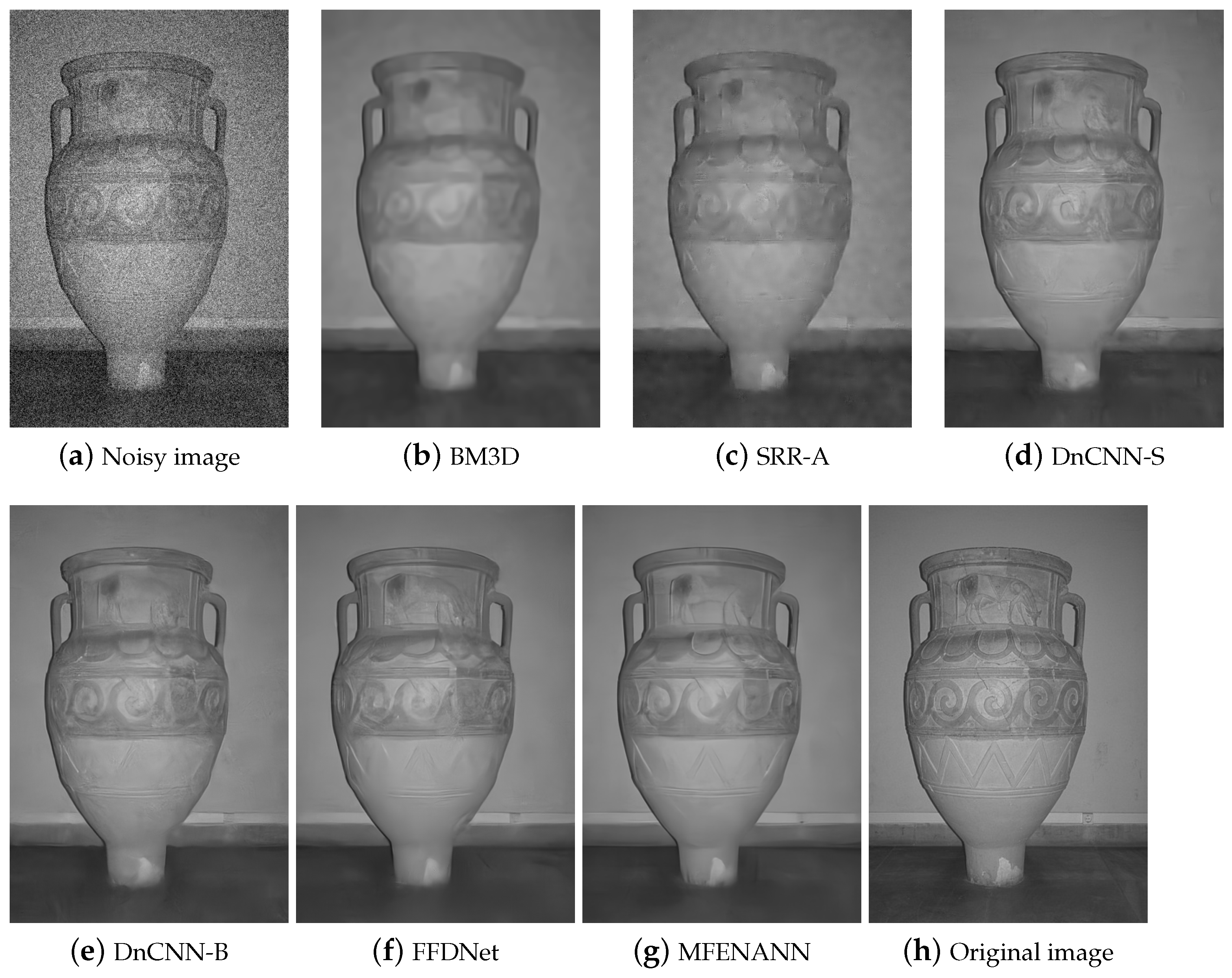

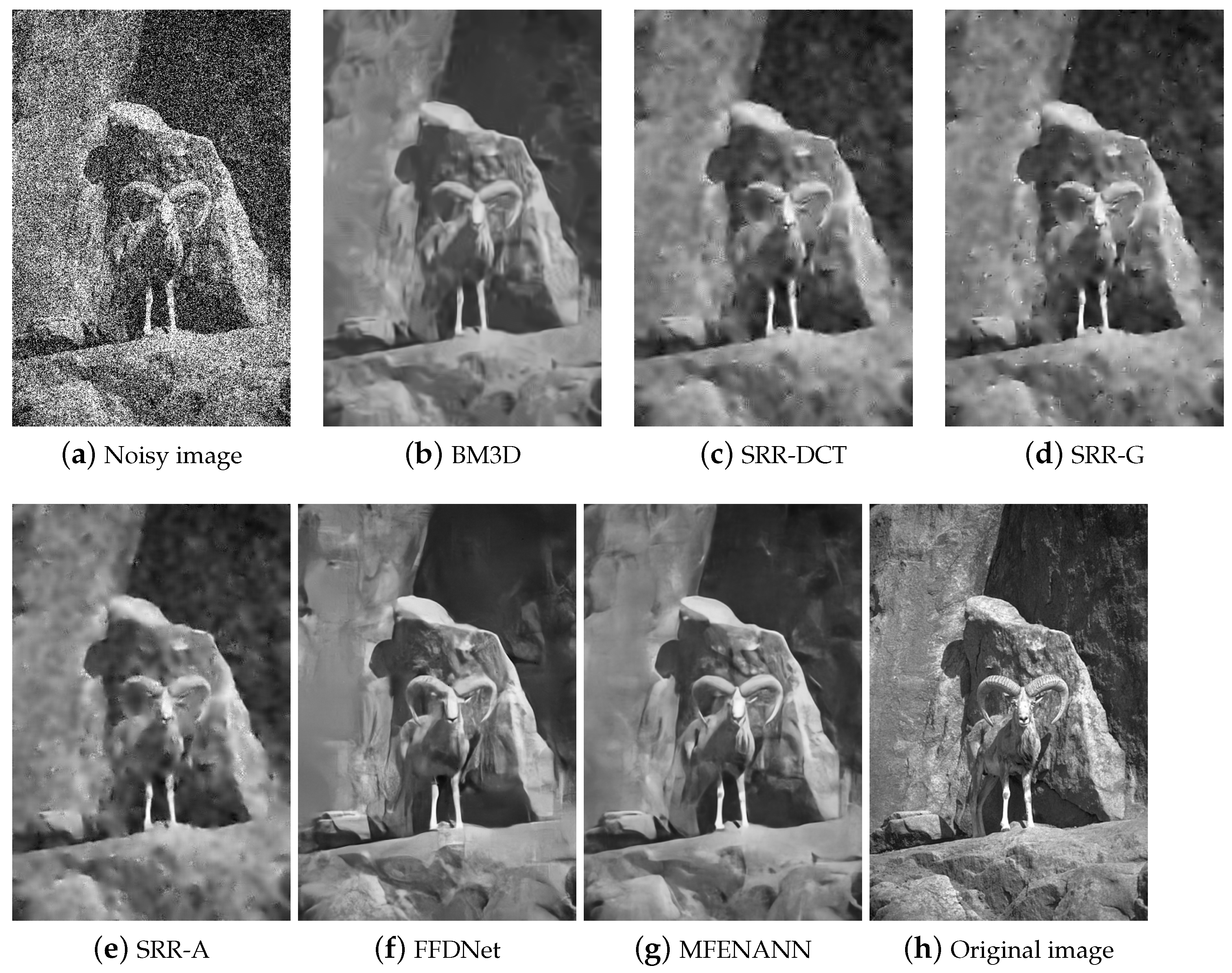

Although CNNs achieve excellent denoising results, most CNN-based methods use convolution kernels of a single size, so features at distinct scales are neglected. In addition, all feature channels are treated equally, and the relationships between channels are not considered. In this paper, we propose a multi-scale feature extraction-based normalized attention neural network (MFENANN) for image denoising. In MFENANN, we define a feature extraction block which extracts and combines features of the noisy image at scales of $1 \times 1$, $3 \times 3$ and $5 \times 5$. Moreover, we introduce 1D normalization techniques into a normalized attention network (NAN), which smooths the optimization landscape in training and refines the relationship between channels. Furthermore, we introduce the NAN to MFENANN for denoising, in which every channel is weighted by its own gain. We train the network on down-sampled images, which enlarges the receptive field and reduces the number of calculations in training. A residual network is used to avoid losing shallow features. Finally, the network learns the residue of the noisy image, and the noiseless clean image is obtained as the difference between the noisy image and the residue.

The contributions of the paper are summarized as follows: (1) We define a feature extraction block that extracts and combines features of the noisy image at different scales, so that the feature maps contain more detailed information about the original image and the network is better able to preserve details. (2) We propose a normalized attention network to learn the relationship between channels, which smooths the optimization landscape and speeds up convergence when training an attention model. (3) We introduce NAN to image denoising, in which each channel receives its own gain and channels play different roles in the subsequent convolution, which improves denoising performance.

3. Proposed MFENANN for Image Denoising

In this section, we detail the MFENANN proposed for image denoising. In MFENANN, we define a simple multi-scale feature extraction block which extracts features at different scales from the noisy image with convolution kernels of different sizes. Moreover, we propose a normalized attention network to learn the relationship between channels, which improves SENet by adding 1D normalization techniques. In addition, we introduce the normalized attention network to CNN denoising, in which each channel receives its own gain and channels play different roles in the subsequent convolution. We also define ResNet blocks for MFENANN, which effectively integrate shallow and deep features and avoid the vanishing gradient problem. In the training phase, for a batch size of $N$, we randomly generate $N$ values in the noise level range as the noise standard deviations. We expand each standard deviation into a tensor with the same height and width as the input image to form the noise level map, and add noise of that level to the corresponding image in the batch. In the testing phase, if the noise level is known, we expand it in the same way into a noise level map with the same height and width as the input image.
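The training-time noise generation described above can be sketched as follows. This is an illustration under stated assumptions: the levels are drawn uniformly (the text only says they are generated randomly within the noise level range), the range of 0 to 75 is a hypothetical example, and `make_noisy_batch` is a name introduced here.

```python
import numpy as np

def make_noisy_batch(clean, sigma_range, rng):
    """clean: (N, C, H, W) batch of clean images.

    Returns the noisy batch, one constant (N, 1, H, W) noise level map per
    image, and the N sampled standard deviations.
    """
    n, c, h, w = clean.shape
    # One noise level per image; uniform sampling is an assumption.
    sigmas = rng.uniform(sigma_range[0], sigma_range[1], size=n)
    scale = sigmas[:, None, None, None]
    # Expand each scalar level into a constant H x W map.
    level_maps = np.broadcast_to(scale, (n, 1, h, w)).copy()
    # Add zero-mean Gaussian noise of the matching level to each image.
    noisy = clean + rng.standard_normal(clean.shape) * scale
    return noisy, level_maps, sigmas

rng = np.random.default_rng(1)
clean = rng.uniform(0.0, 255.0, size=(4, 1, 8, 8))   # hypothetical batch, N = 4
noisy, level_maps, sigmas = make_noisy_batch(clean, (0.0, 75.0), rng)
```

At test time the same expansion applies to a single known noise level instead of sampled ones.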

3.1. Network Architecture

Figure 1 shows the architecture of the proposed MFENANN. We down-sample the noisy image by interlaced sampling and concatenate the down-sampled sub-images and the noise level map as the input of the network. Suppose the size of the noisy image is $W \times H \times C$, where $W$ is the width of the image, $H$ is the height of the image and $C$ is the number of channels. The size of the input of MFENANN is then $\frac{W}{2} \times \frac{H}{2} \times 5$ for a grayscale image and $\frac{W}{2} \times \frac{H}{2} \times 15$ for a color image. The multi-scale feature extraction block (MFEBlk) is used to extract and combine features at distinct scales for the subsequent convolution. The Relu function has the form $\mathrm{Relu}(x) = \max(0, x)$. MFEBlk is detailed in Figure 2. ResNetBlock is the ResNet block we define, which is detailed in Figure 3. NAN is the normalized attention block, which is detailed in Figure 4. MFENANN contains five ResNetBlocks and four NAN blocks, and has 21 layers in total. The output of the network is the residue rather than the clean image. The clean image is obtained as follows:

$$\hat{X} = Y - R$$

where $\hat{X}$ is the restored image, $Y$ is the observed noisy image, and $R$ is the learned residue.
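The input construction can be illustrated with a short NumPy sketch. It assumes a sampling factor of 2 and a noise level map with as many channels as the image, which is one layout that reproduces the 5 and 15 input channels stated for grayscale and color images; the function names are introduced here for illustration.

```python
import numpy as np

def interlaced_downsample(img):
    """img: (C, H, W) with even H and W -> (4C, H/2, W/2) interlaced sub-images."""
    subs = [img[:, i::2, j::2] for i in (0, 1) for j in (0, 1)]
    return np.concatenate(subs, axis=0)

def interlaced_upsample(subs):
    """Inverse of interlaced_downsample: (4C, h, w) -> (C, 2h, 2w)."""
    c4, h2, w2 = subs.shape
    c = c4 // 4
    out = np.empty((c, 2 * h2, 2 * w2), dtype=subs.dtype)
    offsets = [(i, j) for i in (0, 1) for j in (0, 1)]
    for idx, (i, j) in enumerate(offsets):
        out[:, i::2, j::2] = subs[idx * c:(idx + 1) * c]
    return out

def build_input(noisy, sigma):
    """Concatenate the sub-images with a constant noise level map (assumed C channels)."""
    c, h, w = noisy.shape
    level_map = np.full((c, h // 2, w // 2), sigma, dtype=noisy.dtype)
    return np.concatenate([interlaced_downsample(noisy), level_map], axis=0)

gray = np.arange(48, dtype=np.float64).reshape(1, 8, 6)
color = np.arange(144, dtype=np.float64).reshape(3, 8, 6)
gray_in = build_input(gray, 25.0)     # 4 sub-images + 1 map channel
color_in = build_input(color, 25.0)   # 12 sub-images + 3 map channels
```

Interlaced sampling only rearranges pixels, so no image content is lost: applying `interlaced_upsample` to the sub-images returns the original image.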

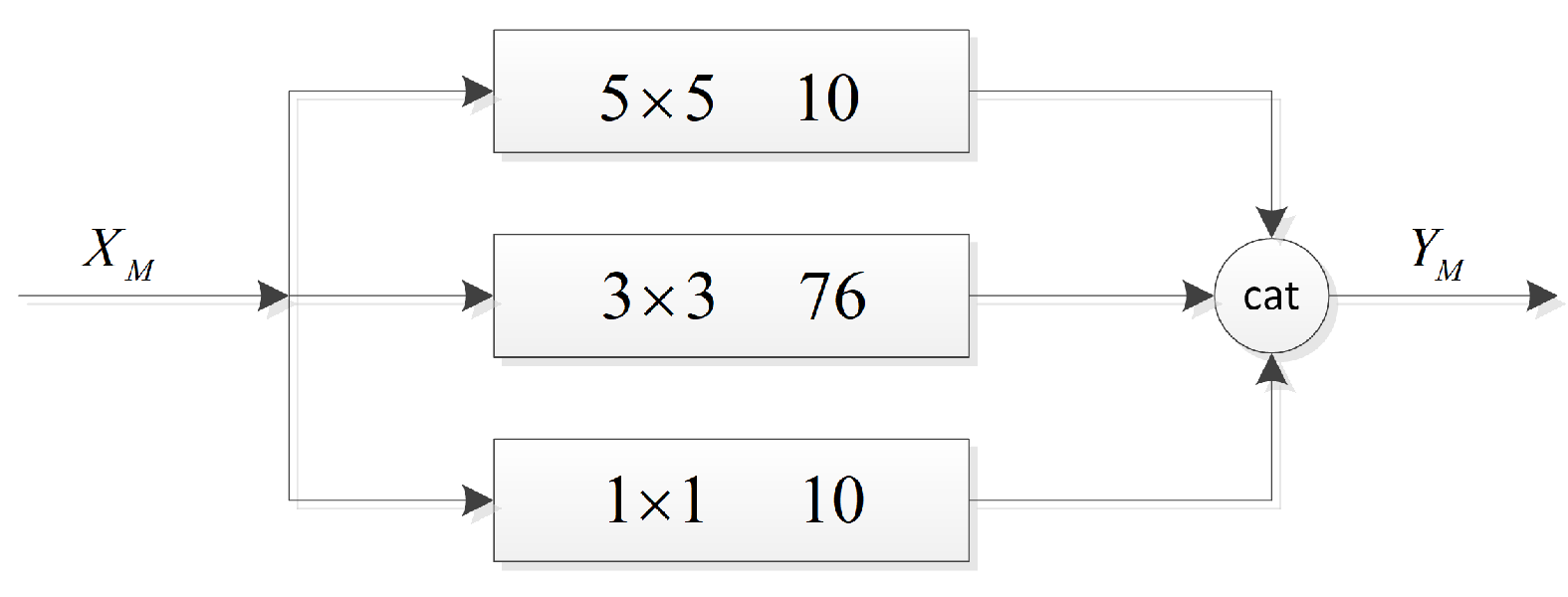

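Figure 2 shows the architecture of MFEBlk. $X$ is the input of MFEBlk; $X$ has 5 channels for a grayscale image and 15 channels for a color image. In MFEBlk, we define three kinds of convolutions: $1 \times 1$ convolution, $3 \times 3$ convolution and $5 \times 5$ convolution, with 10, 76 and 10 kernels, respectively. Zero-padding is used so that every feature map keeps the same spatial size. $F$ is the concatenation of the feature maps produced by the convolutions at the different scales. The mathematical model of the MFEBlk is defined as follows:

$$F = \mathrm{Concat}\left(\mathrm{Conv}^{1 \times 1}_{10}(X),\ \mathrm{Conv}^{3 \times 3}_{76}(X),\ \mathrm{Conv}^{5 \times 5}_{10}(X)\right)$$

where $\mathrm{Conv}^{1 \times 1}_{10}$, $\mathrm{Conv}^{3 \times 3}_{76}$ and $\mathrm{Conv}^{5 \times 5}_{10}$ are convolutions with kernel sizes of $1 \times 1$, $3 \times 3$ and $5 \times 5$, respectively. The subscripts 10, 76 and 10 are the numbers of convolution kernels. Experimentally, we find that this choice of kernel counts balances computational cost and performance. $\mathrm{Concat}$ is a function that concatenates the channels of feature maps. $F$ is the output, which has 96 channels.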
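A minimal NumPy sketch of such a multi-scale block is given below. It assumes kernel sizes of 1×1, 3×3 and 5×5 (the usual Inception-style choice) with 10, 76 and 10 kernels, stride 1, zero padding and no bias terms; the random weights only stand in for trained ones, and the helper names are introduced here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_same(x, weight):
    """Zero-padded, stride-1 convolution (cross-correlation, as in CNN frameworks).

    x: (C_in, H, W); weight: (C_out, C_in, k, k) with odd k -> (C_out, H, W).
    """
    k = weight.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))                # zero padding
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))  # (C_in, H, W, k, k)
    return np.einsum("chwij,ocij->ohw", windows, weight)

def mfe_block(x, w1, w3, w5):
    """Concatenate the feature maps of the three branches along the channel axis."""
    return np.concatenate([conv2d_same(x, w) for w in (w1, w3, w5)], axis=0)

rng = np.random.default_rng(0)
c_in = 5                                        # grayscale network input
x = rng.standard_normal((c_in, 16, 16))
w1 = rng.standard_normal((10, c_in, 1, 1)) * 0.1
w3 = rng.standard_normal((76, c_in, 3, 3)) * 0.1
w5 = rng.standard_normal((10, c_in, 5, 5)) * 0.1
f = mfe_block(x, w1, w3, w5)                    # 10 + 76 + 10 = 96 channels
```

Because every branch is zero-padded to the input size, the three outputs can be concatenated directly without cropping.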

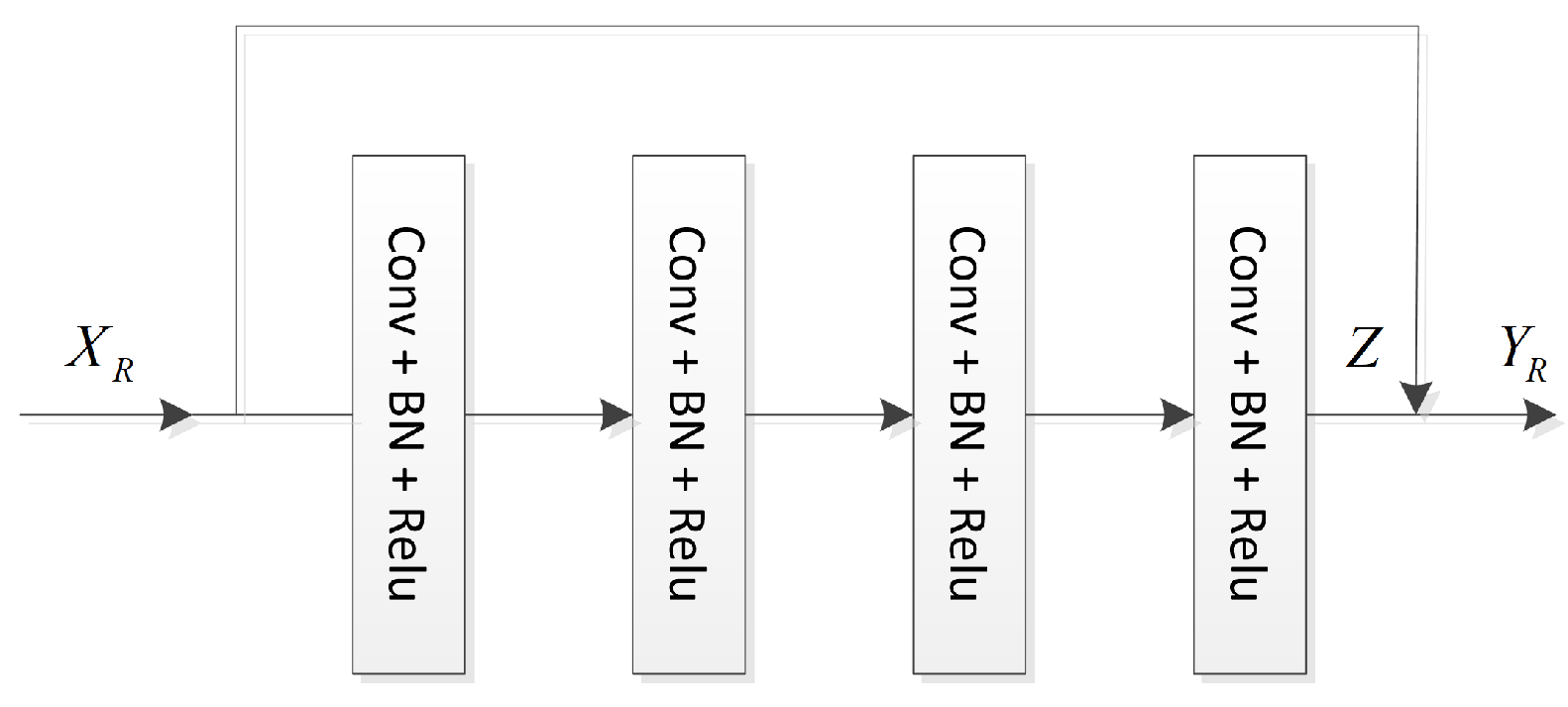

Figure 3 shows the architecture of ResNetBlock. ResNetBlock has four "Conv+BN+Relu" blocks. Each "Conv+BN+Relu" block includes a $3 \times 3$ convolution layer, a BN layer and a Relu activation function. $Z$ is the output of the fourth "Conv+BN+Relu" block, which has 96 channels. The output $X_{out}$ is the sum of the input $X_{in}$ and the output $Z$:

$$X_{out} = X_{in} + Z \tag{4}$$

Equation (4) can be rewritten as $Z = X_{out} - X_{in}$, so learning $Z$ is residual learning. Learning the residues reduces the computational cost of training and avoids the vanishing gradient problem. A NAN block is applied between two consecutive ResNetBlocks, so that each channel of the ResNetBlock output receives its own gain. The architecture of the NAN block is detailed in the next section.
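The skip connection can be sketched in a few lines. The `branch` argument is a hypothetical stand-in for the four "Conv+BN+Relu" stages, which are not implemented here; only the addition of the block input to the branch output is illustrated.

```python
import numpy as np

def resnet_block(x_in, branch):
    """Return x_in + Z, where Z = branch(x_in) is the learned residual."""
    z = branch(x_in)
    if z.shape != x_in.shape:
        raise ValueError("the branch must preserve the feature-map shape")
    return x_in + z

x_in = np.random.default_rng(0).standard_normal((96, 8, 8))
branch = lambda x: 0.1 * np.tanh(x)   # hypothetical stand-in for the four stages
x_out = resnet_block(x_in, branch)
```

If the branch outputs zeros, the block reduces to the identity, which is why shallow features can pass through unchanged.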

3.2. NAN

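Figure 4 shows the architecture of the NAN block. Assume the input $U$ has 96 channels. The squeeze operation adopts an average pooling function, which squeezes each channel to a single point. For each channel $U_m$ of spatial size $h \times w$, the squeeze operation is described as follows:

$$z_m = \frac{1}{h \times w} \sum_{i=1}^{h} \sum_{j=1}^{w} U_m(i, j)$$

where $U_m(i, j)$ is the amplitude at position $(i, j)$ and $z_m$ is the mean value of channel $U_m$. FC is a fully connected layer with 96 neurons. The 1DBN and Relu layers avoid the vanishing gradient problem and accelerate convergence. Suppose the batch size is $k$, so that each training step contains $k$ samples. The 1DBN is described as follows:

$$\mu_B = \frac{1}{k} \sum_{i=1}^{k} a_i, \qquad \sigma_B^2 = \frac{1}{k} \sum_{i=1}^{k} (a_i - \mu_B)^2, \qquad b_i = \gamma \, \frac{a_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}} + \beta$$

where $a_i$ and $b_i$ are the input and output vectors of the BN block, $\mu_B$ and $\sigma_B^2$ are the element-wise mean and variance over the batch, $\epsilon$ is a small constant for numerical stability, and $\gamma$ and $\beta$ are variables which are updated during back propagation. In the last layer, the sigmoid function maps its input to the range 0–1. We denote the output of the sigmoid function by $s$; $s$ is a 96-dimensional vector which can be written as $s = [s_1, s_2, \ldots, s_n]$, where $n$ is 96. For each channel, the scale operation is described as follows:

$$\tilde{U}_m = s_m \cdot U_m \tag{8}$$

where $U_m$ and $\tilde{U}_m$ are the $m$th channels of $U$ and $\tilde{U}$, respectively, and $s_m$ is the $m$th element of $s$. From Equation (8), every channel in $U$ is weighted by the corresponding element of $s$.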
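The squeeze, 1D batch normalization and scale steps can be sketched with NumPy as below. The exact layer order of Figure 4 is not reproduced in the text, so the sequence used here (FC, 1DBN, Relu, FC, sigmoid, modeled on SENet with 96 neurons per layer) is an assumption, as are the function names and the random stand-in weights.

```python
import numpy as np

def batchnorm1d(a, gamma, beta, eps=1e-5):
    """Training-mode 1D batch normalization. a: (k, n), a batch of k vectors."""
    mu = a.mean(axis=0)
    var = a.var(axis=0)                      # biased variance over the batch
    return gamma * (a - mu) / np.sqrt(var + eps) + beta

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def nan_block(u, w1, b1, gamma, beta, w2, b2):
    """u: (k, n, h, w) feature maps. Returns the rescaled maps and the gains s."""
    z = u.mean(axis=(2, 3))                            # squeeze: (k, n) channel means
    a = z @ w1.T + b1                                  # first FC layer (assumed)
    a = np.maximum(batchnorm1d(a, gamma, beta), 0.0)   # 1DBN followed by Relu
    s = sigmoid(a @ w2.T + b2)                         # second FC (assumed) + sigmoid
    return u * s[:, :, None, None], s                  # scale: one gain per channel

rng = np.random.default_rng(0)
k, n = 4, 96
u = rng.standard_normal((k, n, 8, 8))
w1 = rng.standard_normal((n, n)) * 0.1                 # stand-in weights
w2 = rng.standard_normal((n, n)) * 0.1
out, s = nan_block(u, w1, np.zeros(n), np.ones(n), np.zeros(n), w2, np.zeros(n))
```

Each sample in the batch gets its own 96 gains, so the weighting adapts to the content of the feature maps rather than being fixed after training.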

3.3. Role of MFEBlk

Inspired by Inception [40], we propose the MFEBlk to extract features at distinct scales using kernels of different sizes. For the image denoising problem, we want the restored image to retain as many features as possible; thus, in the first layer, we use $1 \times 1$, $3 \times 3$ and $5 \times 5$ convolution kernels to extract features at distinct scales from the noisy image. A larger kernel size generally requires more calculations; therefore, we use fewer $5 \times 5$ and more $3 \times 3$ convolution kernels to balance feature extraction against the number of calculations. The $1 \times 1$ convolution kernels are used to increase nonlinearity and reduce the number of parameters and calculations [46,47].
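The cost argument can be made concrete with a small worked calculation. The sketch assumes kernel sizes of 1×1, 3×3 and 5×5 with 10, 76 and 10 kernels, a 5-channel grayscale input, one bias per kernel, and an arbitrary 64×64 feature-map size; these figures are illustrative and are not the complexity numbers reported in the paper.

```python
def conv_cost(c_in, c_out, k, h, w):
    """Parameters (weights + biases) and multiply-accumulates of a k x k
    convolution with stride 1 and zero padding on an h x w feature map."""
    params = k * k * c_in * c_out + c_out
    macs = k * k * c_in * c_out * h * w
    return params, macs

c_in, h, w = 5, 64, 64                 # 64 x 64 is an arbitrary example size
branches = {1: 10, 3: 76, 5: 10}       # kernel size -> number of kernels

costs = {k: conv_cost(c_in, n, k, h, w) for k, n in branches.items()}
mfe_params = sum(p for p, _ in costs.values())
mfe_macs = sum(m for _, m in costs.values())

# Reference points: the same 96 output channels with a single kernel size.
all_5x5_params, all_5x5_macs = conv_cost(c_in, 96, 5, h, w)
all_3x3_params, all_3x3_macs = conv_cost(c_in, 96, 3, h, w)
```

Under these assumptions the mixed block has 4816 parameters, compared with 12,096 for 96 kernels of size 5×5 and 4416 for 96 kernels of size 3×3, so the multi-scale features cost only slightly more than a plain 3×3 layer.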

3.4. Complexity Analysis

For MFENANN, the introduction of the MFEBlk, the skip connections in the ResNetBlocks and the NANs increases the network complexity. Because the number of floating-point operations (FLOPs) depends on the input image size, we use one grayscale image and one color image of fixed size to compute the increase in parameters and calculations caused by the MFEBlk, skip connections and NANs. Compared with a plain neural network with the same number of layers, the number of model parameters increases by 0.113 M for both the grayscale and the color image, and the number of calculations increases by 0.013 GFLOPs and 0.099 GFLOPs, respectively. Thus the MFEBlk, skip connections and NANs add only a small number of parameters and calculations. In addition, for a grayscale image of fixed size, the number of calculations of MFENANN is 9.06 GFLOPs lower than that of DnCNN and 19.42 GFLOPs higher than that of FFDNet.