1. Introduction

Over the past decade, soft robots have shown an increasing potential to dramatically expand the capabilities of the field of robotics. Currently, however, most demonstrations have been limited to precisely that, potential. For soft robots to emerge into human-populated environments and to perform useful real-world tasks, advances are required in sensors able to quickly provide robust state information, both as individual sensors and as integrated sensing systems. Soft robots hold the potential for unprecedented levels of interaction with the surrounding environment impossible with traditional rigid-linked robots. This innate ability to yield to the environment and to sense and learn from that interaction is one of the biggest potential advantages of soft robots. By embracing this ability to interact, soft robots can fundamentally change human-robot interaction and allow safe collaboration in the home and workplace. To achieve this leap forward in state awareness and embodied intelligence, a rethinking of soft sensing is necessary.

With this novel approach to robotics and interaction come novel challenges. The number of sensors needed to understand the state of a soft robot dramatically exceeds the number needed to determine the pose of a traditional rigid-link robot. Traditional robotic manipulation techniques, including D-H parameters, quaternions, and Product of Exponentials, assume rigid links connected by single (often rotational or prismatic) degrees of freedom [1]. Thus, one could define the entire range of possible poses of a traditional six-link robot using only six sensors. (Additional sensors would likely be included, but for other applications such as monitoring temperature and voltage.) Arguably, defining the pose of a single soft actuator, capable of yielding to the environment and several modes of self-motion, could require more than six sensors. Traditional robots typically contact the external world via manipulators (often end-effectors), with a limited number of small contact points or contact regions, often modeled using friction cone techniques [2] requiring a single multi-axis strain gauge or even a single-axis pressure sensor. Contact with components other than predefined manipulators is unusual, and unplanned contact is avoided at all costs. Some of the main advantages of soft robots are their ability to distribute forces across broad regions of an actuator and that unplanned interactions are often of minor concern. Thus, where a traditional robot may require fewer than 10 sensors to determine pose and interaction, a soft robot could easily require over a hundred. Several groups have tried machine vision methods to alleviate the rapidly growing burden of so many sensors, using cameras and motion capture to directly measure soft robot pose [3,4,5]. While this is effective in some applications, and the work is quite compelling, this technique is ill-suited to applications with a likelihood of obstructed views (such as reaching into boxes to retrieve objects in order fulfillment or laparoscopic surgery). Real-time processing of video to interpret complex 3D motions of an underactuated robot is also an extremely challenging task.

Many technologies have been presented to achieve myriad sensing modes in soft robots. Soft sensors (sensors composed of compliant materials, gels, liquids, or a combination of these housed inside a soft robotics component) have been developed using conductive grease [6], capacitive liquid [7], resistive ionic gels [8], waveguides [9], and many demonstrations with liquid metals [10,11,12], primarily focusing on a eutectic of gallium and indium (EGaIn) [13]. These many sensor technologies can measure changes in length [14], bending [15], pressure [16], and even temperature in the distal end of a soft finger [17]. There has been work on mixed-mode sensing models [7], including Park et al. [18], able to sense pressure and two modes of stretch, all in one sensor. Other sensing techniques used in soft robotics have involved bonding traditional bend sensors to a soft actuator [19] and embedded magnets and Hall effect sensors [20]. There has been work in optical methods including the SOFTcell project by Bajcsy and Fearing [21], in which tactile response was determined through optical analysis of a deformed membrane, and video tracking of markers adhered to or embedded in soft components [5].

While these studies present compelling sensors, further development is necessary in utilizing a suite of sensors to increase overall state awareness. In both traditional and soft robotics, many have studied proprioceptive sensor systems, robot skin, and bioinspired sensing. Discussion of these broad fields can be found in reviews of various subspecialties [22,23,24,25,26]. The value of multi-sensor systems to perceive different proprioceptive or exteroceptive phenomena is widely appreciated. However, as the number of sensors is increased, the computation, data acquisition, and signal processing load drastically increase as well. Each sensor requires electronic circuitry, wiring, and a dedicated channel to a data acquisition system or an analog-to-digital converter (ADC), followed by signal processing and computation. A sensor-skin with a grid of ten-by-ten sensors would be a relatively modest requirement for many perception applications. Using discrete nodes would require 100 dedicated sensors. Multiplexing by separating signals into 10 horizontal and 10 vertical sensors reduces the load to 20 separate sensors (with related disadvantages), which is still a considerable burden for a single sensor-skin device.
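The channel counts in this example follow from simple arithmetic, sketched below in Python. The function names are illustrative only and are not part of the presented system.

```python
def discrete_channels(rows: int, cols: int) -> int:
    """One dedicated sensor (and acquisition channel) per grid node."""
    return rows * cols


def row_column_channels(rows: int, cols: int) -> int:
    """Row/column multiplexing: one sensor per row plus one per column."""
    return rows + cols


if __name__ == "__main__":
    # The ten-by-ten sensor-skin discussed in the text.
    print(discrete_channels(10, 10))    # 100
    print(row_column_channels(10, 10))  # 20
```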

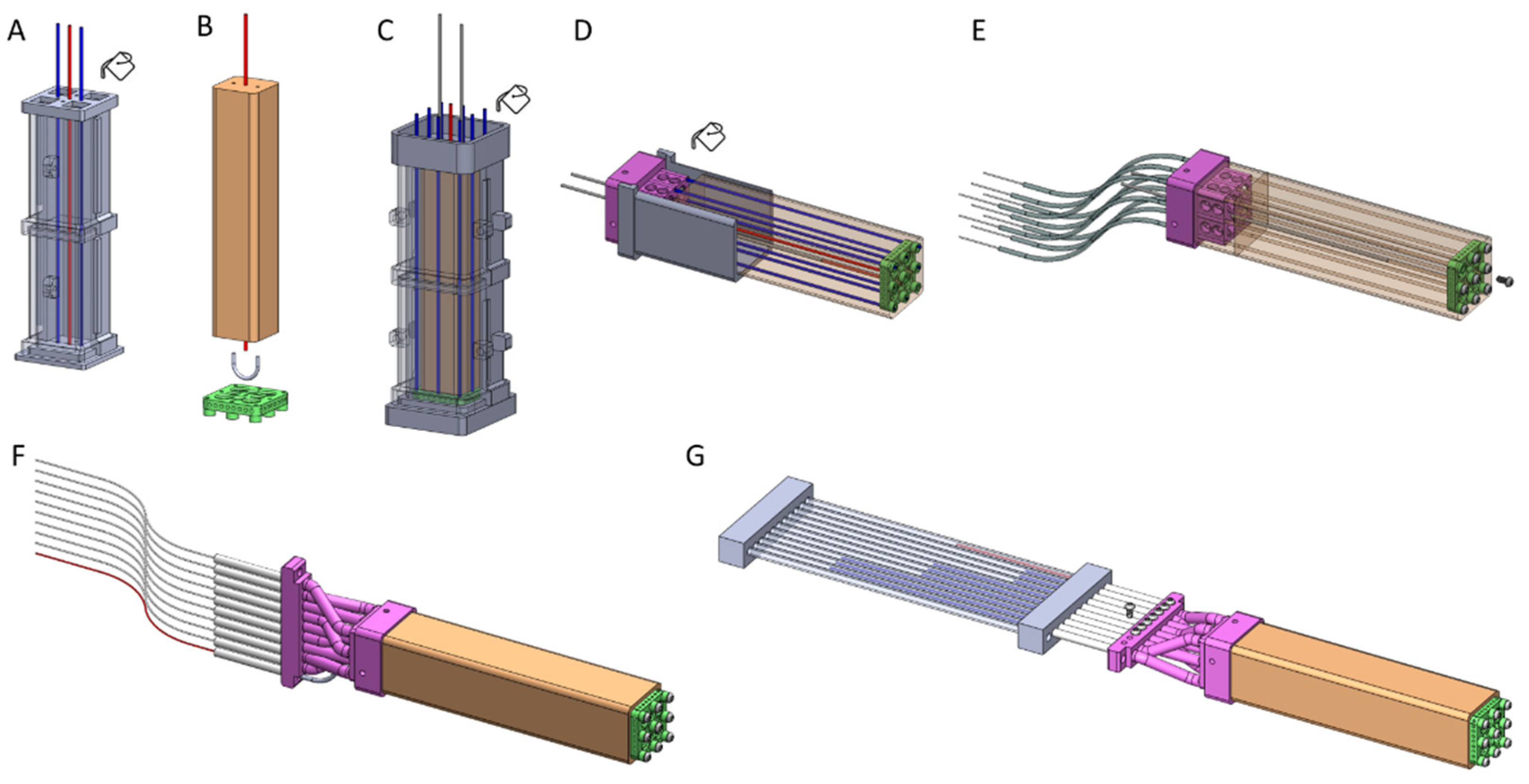

So far, soft sensors have primarily been developed as individual, standalone units. To scale the system from one to five sensors, one simply fabricates and integrates five sensors and five sets of required electronics, which interface to five ADCs and send five signals to a microcontroller. This work focuses instead on passive sensors that present position and pressure data to a digital camera for real-time or offline data processing. Digital cameras able to record at multi-megapixel resolution are readily available at low cost and are already present on many robot platforms. With our method, a single camera can record and interpret data from many deformation and pressure sensors, providing a platform for state perception and embodied intelligence research. This camera does not record the elastomeric finger itself. Rather, it records the remotely located display assembly (

Figure 1A,B), where it tracks the motion of fiber-based displacement sensors and microfluidic pressure sensors. Recording the sensor states rather than the elastomeric finger itself presents several advantages. Firstly, no clear line of sight is needed. During typical robotic tasks, portions of a finger would often become obstructed when environmental objects or the robot itself come between the finger and the camera. Additionally, by remotely recording the display assembly, all aspects of recording (color, contrast, lighting) can be controlled to values optimum for marker tracking, impossible in real-world robotic applications. Finally, by tracking only monochromatic markers moving in well-defined horizontal or vertical paths in a controlled environment (no unanticipated glare/obstructions), extremely simplified vision algorithms can be used, allowing much faster processing. We present three techniques in which digital cameras record markers from fiber-based deformation sensors and microfluidic pressure sensors inside an elastomeric finger-like structure. We present an elastomeric finger with embedded fiber sensors and two modes of microfluidic pressure sensor (

Figure 1A,B). We present this system’s ability to quantify elongation along and twist about a longitudinal axis, and bending about the two orthogonal axes perpendicular to the longitudinal axis (

Figure 1C). We also present the technique’s ability to quantify overall pressure via an integrated microfluidic sensor (

Figure 1D) and local contact pressure via a surface-mounted microfluidic sensor (

Figure 1E). These sensors (specifically designed to be used in groups) can be designed into soft robotic actuators, and leverage the framework provided from beam theory and classical mechanics of materials to sense the state of each actuated unit. In addition, we present a Cephalopod chromatophore-inspired color-cell pressure sensor that detects changes in local pressure through the deformation of colored liquid cells (

Figure 1F). Chromatophores have been widely studied for decades [

27,

28,

29], and in recent years have become the inspiration for biomimicry and biomimetic work by the soft robotics community [

30] using bulk deformation of a matrix to modulate appearance and spell out a word [

31] or disrupting part of a surface using dielectric elastomers [

32]. In this work, however, we flip the concept, using the color cell as a passive pressure sensor to estimate externally applied force, not as an active device, mechanically distorted to modulate appearance.

While the simple sensor designs presented here have value individually, the key contribution of this work is that the sensors are fundamentally designed to be used in groups and leverage the concepts from beam theory and mechanics of materials to infer system state from a strategically located system of sensors. Intended to be built into a soft robot at the system level, a properly configured array of these deformation and pressure sensors can give state awareness far beyond that of individual sensors. The remainder of this paper is organized as follows:

Section 2 presents the methods used, beginning with a conceptual overview and review of beam theory/mechanics of materials on which these techniques are based, followed by design and fabrication, visual algorithms, and characterization methods.

Section 3 presents results, divided into fiber-based deformation sensors, microfluidic pressure sensors, and color cell pressure sensors.

Section 4 presents a discussion on the work and how it relates to the field. Where relevant, sections are further subdivided into fiber-based deformation sensor, integrated microfluidic pressure sensor, surface-mount pressure sensor, and color cell pressure sensor sections.

2. Method

We present an elastomeric finger containing nine fiber-based displacement sensors, one integrated microfluidic pressure sensor, and the ability to be configured with one or more surface-mounted microfluidic pressure sensors. These are all monitored with a single digital camera. Simultaneous analysis of these sensors allows us to determine the state of the finger. In the fiber-based deformation sensor method (

Figure 1A–C), we embedded a 3 × 3 matrix of fiber-based displacement sensors into the elastomeric finger. Each displacement sensor is composed of two main parts: fiber and tube. The fiber is a flexible but inextensible/incompressible nylon fiber. The tube is a flexible elastomeric void built into the bulk matrix of the finger. Fibers are fixed at the distal end of the finger and routed through tubes along the length of the finger and out to a display assembly (

Figure 1B). A short length (marker) of each fiber inside the display assembly is painted black. This contrasts with the white background allowing a digital camera to record the relative motion of the marker. When the elastomeric finger is distorted (bent, twisted, stretched), each tube changes shape and is stretched or compressed based on the overall mechanics of the mode of distortion. The fiber (free to slide along the length of the tube) slides within the display assembly, where the marker position and motion are recorded by a digital camera. This is similar to the Bowden cable assembly, which transmits force in many bicycle handbrakes. In our device, however, the passive sensor is distorted based on external actuation and used to sense displacement, the reverse of Bowden cables. Multiple cable-based sensors are embedded along the dorsal, ventral, and medial surfaces of the soft robotic sensor (3 × 3 grid at the end of the finger in

Figure 1A,B). Comparing relative motion between this grid of sensors allows differentiation between bending, stretching, and twist.

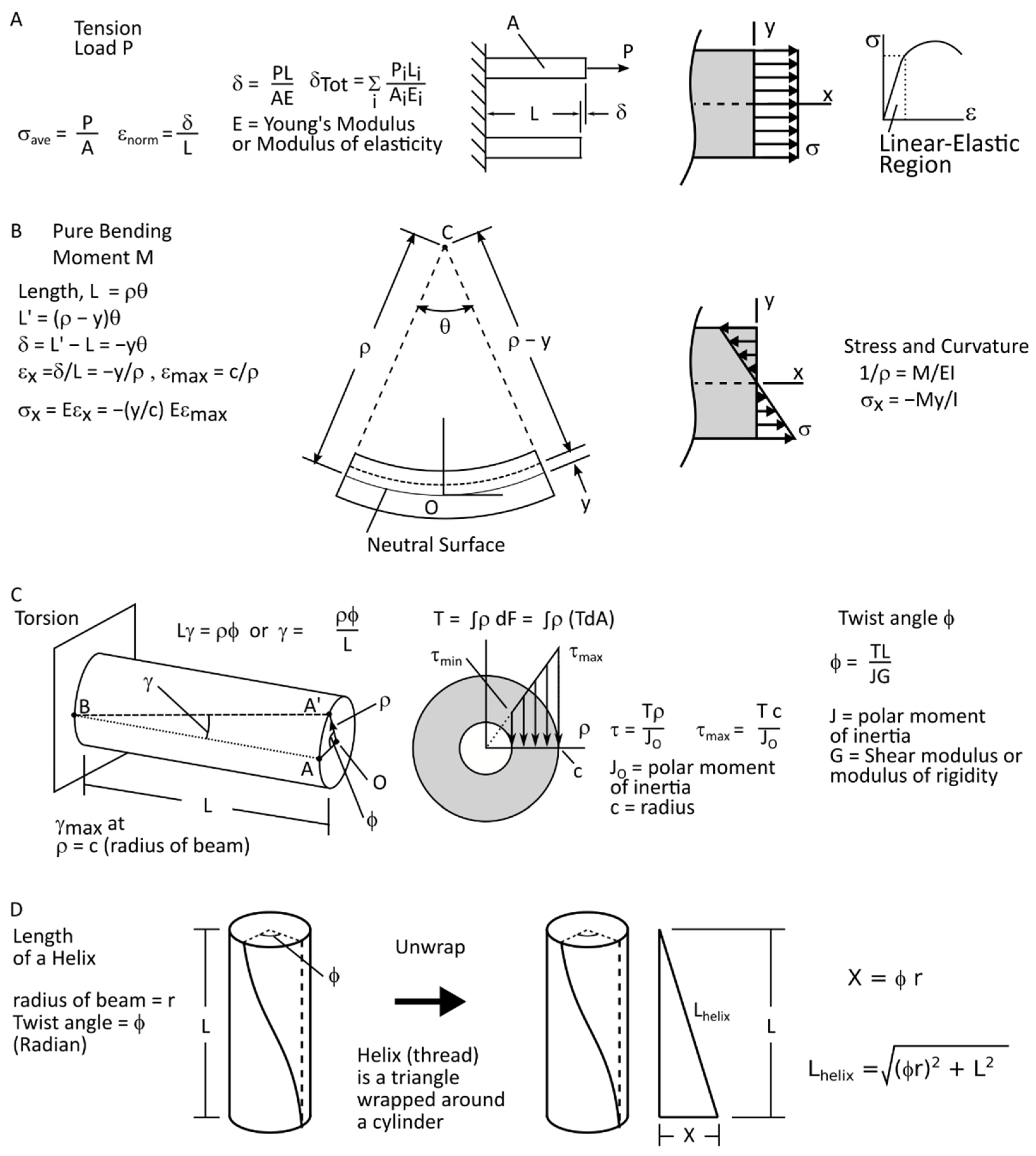

From Euler-Bernoulli beam theory and classical mechanics of materials, we know that beams experience stress and tension/compression throughout their cross-sections based on the mode of the applied loading (bending, tension/compression, twist, combined loading) [

33]. Here we briefly summarize some primary beam deformation modes (

Figure 2A) with more detail on derivation in

Appendix A. For a detailed analysis of beam theory or mechanics of materials, many excellent texts are available [

33,

34,

35,

36].

First exploring simple elongation, we find that deformation is uniform across the cross-section and proportional to the load applied (Figure 2A). Deformation follows the equation,

$$\delta = \frac{PL}{AE}$$

where $\delta$ is total displacement, $P$ is applied load, $L$ is total beam length, $A$ is cross-section area, and $E$ is Young’s modulus.

In bending, material closer to the center of curvature (smaller bend radius) experiences compression, material farther from the center of curvature (larger bend radius) experiences tension, and material along the neutral axis experiences neither tension nor compression. Within the linear elastic range, stress from bending (Figure 2A) follows the equation,

$$\sigma = -\frac{My}{I}$$

where $\sigma$ is tensile or compressive stress, $M$ is applied bending moment, $y$ is the distance from the neutral surface (positive toward the center of curvature), and $I$ is the second moment of area. The negative sign indicates compression toward the center of bending. Strain follows the equation,

$$\varepsilon = -\frac{y}{\rho}$$

where $\varepsilon$ is the strain along the beam axis, $y$ is the distance from the neutral surface (positive toward the center of curvature), and $\rho$ is the radius of curvature of the bent beam. The negative sign indicates shortening toward the center of curvature.

Shearing stress due to torsion (Figure 2A) follows the equation,

$$\tau = \frac{Tr}{J}$$

where $\tau$ is shear stress, $T$ is applied torque, $r$ is distance from the axis of rotation, and $J$ is polar moment of inertia. The angle of twist follows the equation,

$$\phi = \frac{TL}{JG}$$

where $\phi$ is the total twist of the beam, $L$ is beam length, $J$ is the polar moment of inertia, and $G$ is the shear modulus. We can find the change in length of a line (straight initially, helical after twist) parallel to the axis of the beam, a distance $r$ from the twist axis. Initially of length $L$, the line becomes a helix after the beam twists by an angle $\phi$ about its central axis. The helix length is found from the formula,

$$L_h = \sqrt{L^2 + (r\phi)^2}$$

where $L_h$ is the length of the helix, $\phi$ is the angle of twist found above, $L$ is the beam length, and $r$ is the distance from the twist axis (see Appendix A for derivation). Thus, we find the change in length of a fiber parallel to the longitudinal axis as,

$$\Delta L = L_h - L = \sqrt{L^2 + (r\phi)^2} - L$$

where $\Delta L$ is the change in length. With $\phi$ and $L$ constant for any given beam and loading condition, we see that $\Delta L$ increases as $r$ increases. Thus, the farther an element is from the axis of rotation, the more it will increase in length when experiencing a twist. Fibers in the corners of a square cross-section will therefore experience more displacement than fibers at the center of the square faces, and a fiber along the central twist axis ($r = 0$) will not elongate at all.

While these formulae hold for beams within the linear elastic region, the principles (while not necessarily the magnitudes) remain true in the large deformation regime. See

Appendix A for details on the mechanics of materials described here, further figures on bending modes, and sign conventions [

33].
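The relations summarized above can be collected into a small forward model that predicts the length change of a fiber from its position in the cross-section. The following Python sketch is illustrative only: the function names, the grid spacing, and the numerical values are assumptions chosen for demonstration, not parameters of the fabricated finger, and the formulas are the small-deformation ones, so magnitudes should not be trusted in the large-deformation regime.

```python
import math


def elongation_change(load, length, area, youngs_modulus):
    """Uniform length change under axial load: delta = P*L / (A*E)."""
    return load * length / (area * youngs_modulus)


def bending_change(y, length, radius_of_curvature):
    """Length change of a fiber offset y from the neutral surface.

    Strain is -y/rho (y positive toward the center of curvature),
    so over a length L the fiber changes by -y*L/rho.
    """
    return -y * length / radius_of_curvature


def twist_change(r, length, twist_angle):
    """Length change of a fiber at radius r: sqrt(L^2 + (r*phi)^2) - L."""
    return math.hypot(length, r * twist_angle) - length


def grid_offsets(spacing):
    """(x, y) offsets of a 3 x 3 fiber grid centered on the beam axis.

    Row 0 is the top row; y is measured upward, which matches the
    bending sign convention when the finger bends upward.
    """
    return [[(col * spacing, row * spacing) for col in (-1, 0, 1)]
            for row in (1, 0, -1)]


if __name__ == "__main__":
    # Hypothetical values: 100 mm finger, 8 mm fiber spacing, 90 degree twist.
    L, s, phi = 100.0, 8.0, math.radians(90)
    for row in grid_offsets(s):
        print([round(twist_change(math.hypot(x, y), L, phi), 3) for x, y in row])
```

Printing the twist response of the grid shows the pattern described in the text: the largest change at the corners, a smaller change at the face centers, and none on the central axis.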

While each fiber sensor gives us local deformation information, the true value of these devices comes when used in groups. Thus, they must be readily integrated at scale without undue hardware requirements. Traditional sensors require individual hardware for each sensor (

Figure 2B top). These fiber sensors, however, require only the passive fiber components, and a single camera for all fibers (

Figure 2B bottom). Then a single signal can be sent to the PC for video processing. Since video data consists of black markers moving horizontally across a white background, processing complexity is greatly reduced. For example, we select fiber sensors in the lower right and upper left corners of the finger (1 and 9 in front-view,

Figure 2C). When the finger is bent upwards, 9 will indicate compression, but 1 will indicate tension. In elongation or twist, both will indicate tension. However, fibers 6 and 4 will indicate tension equal to 1 and 9 in extension, but less in twist. Expanding this example to the range of extension, bending, and twist scenarios, we configure a 3 × 3 matrix of fiber sensors, across the cross-section of the finger (as shown in

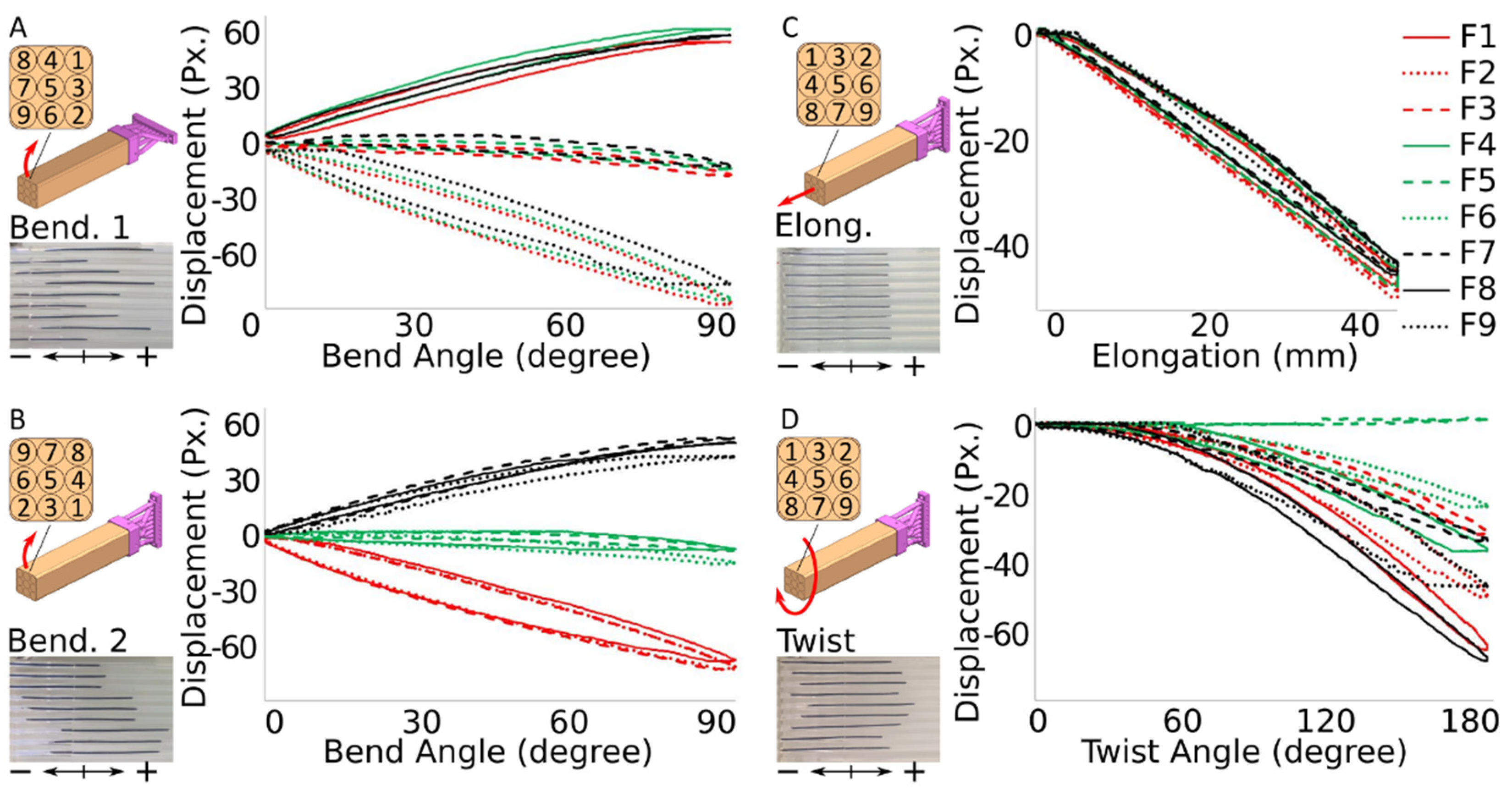

Figure 2C). The combination of displacements allows us to interpret the deformation mode of the overall finger. For example, if the top three sensors are in compression, the middle three show no deformation, and the bottom three show tension, we can infer that the finger is being bent upwards. Only primary deformation modes are presented here. Mixed-mode deformations (combinations such as bend and twist) are left for future exploration. We present offline marker tracking and characterization of these sensors in the deformation modes discussed above, as well as real-time marker tracking, which we envision as a path toward real-time control of soft robot actuators.
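The inference rule described above (comparing the signs and relative magnitudes of the nine displacements) can be sketched as a simple rule-based classifier. This is a minimal illustration under stated assumptions, not the processing used in this work: the grid is indexed by row and column rather than by the fiber numbers of Figure 2C, the tolerance `tol` is a hypothetical noise threshold, the left/right labels assume the finger bends toward the compressed side, and only the primary modes are recognized.

```python
def classify_mode(grid, tol=0.05):
    """Classify a primary deformation mode from a 3 x 3 grid of fiber
    length changes (row 0 = top row, positive = tension)."""

    def sign(v):
        # Values within the tolerance band are treated as "no deformation".
        return 0 if abs(v) <= tol else (1 if v > 0 else -1)

    row_signs = [{sign(v) for v in row} for row in grid]
    col_signs = [{sign(v) for v in col} for col in zip(*grid)]

    # Bending: one side compressed, neutral layer unchanged, other side tensioned.
    if row_signs == [{-1}, {0}, {1}]:
        return "bend up"
    if row_signs == [{1}, {0}, {-1}]:
        return "bend down"
    if col_signs == [{-1}, {0}, {1}]:
        return "bend left"
    if col_signs == [{1}, {0}, {-1}]:
        return "bend right"

    flat = [v for row in grid for v in row]
    # Elongation: all fibers in (approximately) equal tension.
    if all(sign(v) == 1 for v in flat) and max(flat) - min(flat) <= tol:
        return "elongation"

    # Twist: no change on the central axis, corners stretch more than face centers.
    center = grid[1][1]
    corners = [grid[0][0], grid[0][2], grid[2][0], grid[2][2]]
    edges = [grid[0][1], grid[1][0], grid[1][2], grid[2][1]]
    if (sign(center) == 0 and all(sign(v) == 1 for v in corners + edges)
            and min(corners) > max(edges)):
        return "twist"

    return "unknown or mixed"


if __name__ == "__main__":
    bend_up = [[-1.0, -1.0, -1.0], [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]]
    print(classify_mode(bend_up))
```

A mixed-mode input (for example, bend combined with twist) falls through to the final label, consistent with mixed modes being left for future exploration.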

In the integrated microfluidic pressure sensor method (

Figure 1D), we embed microfluidic channels into the elastomeric finger to sense the overall pressure exerted on the finger. This sensor consists of a sensing part and a transmission part, both filled with colored liquid. The sensing part is compressible and embedded along the length of the square column-shaped elastomeric finger. The transmission part consists of a flexible, incompressible tube routed through the display assembly. When force is applied to the sensing part, the chamber is compressed, reducing the volume of the sensor part. This forces the incompressible colored liquid out of the sensing part, through the transmission part, and across a display tube in the display assembly.
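As a rough idealization (not taken from the characterization in this work), if the liquid is incompressible and the transmission tube does not expand, the volume squeezed out of the sensing part equals the volume that advances along the display tube. With $\Delta V$ the assumed volume reduction of the sensing chamber and $d$ the assumed inner diameter of the display tube (cross-sectional area $A_d$), the liquid front would advance by

$$\Delta x = \frac{\Delta V}{A_d} = \frac{4\,\Delta V}{\pi d^2}$$

Under these assumptions, a narrower display tube gives a larger travel of the liquid front for the same compression, which suggests one way the visual sensitivity of such a sensor could be tuned.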

We also present a surface-mount pressure sensor (

Figure 1E), which can be bonded (singly or in batches) to the surface of the finger or any similar elastomeric device. This pressure sensor can be installed at any location on a multitude of elastomeric actuators and robotic systems. We present characterization data on one sensor to demonstrate its utility, not an exhaustive study of possible configurations or applications. Both microfluidic methods transmit to the same display assembly used to record fiber position; thus, a single digital camera can capture data from fiber-based deformation sensors as well as microfluidic pressure sensors. The configuration we present records 11 sensors (nine fiber, one integrated microfluidic, and one surface-mount microfluidic) captured by one digital camera, as that was sufficient for this proof of concept. An effort to minimize scale could greatly increase the number of discrete sensors possible with one camera.

Lastly, we present a color cell pressure sensor inspired by chromatophores in cephalopods [

27]. Rather than mimicking this clever technique, we draw upon it for our inspiration [

37] and flip the application from active modulation for camouflage to a passive sensor. Spherical cells of colored liquid are embedded in an elastomeric substrate. When the substrate undergoes external pressure, local deformation causes the spherical cells to deform, and become disk-like. Viewed from an axis normal to the disk plane, this causes the disks to appear larger than the original spheres. Thus, the applied force can be determined from the diameter of the disk.

2.1. Design and Fabrication

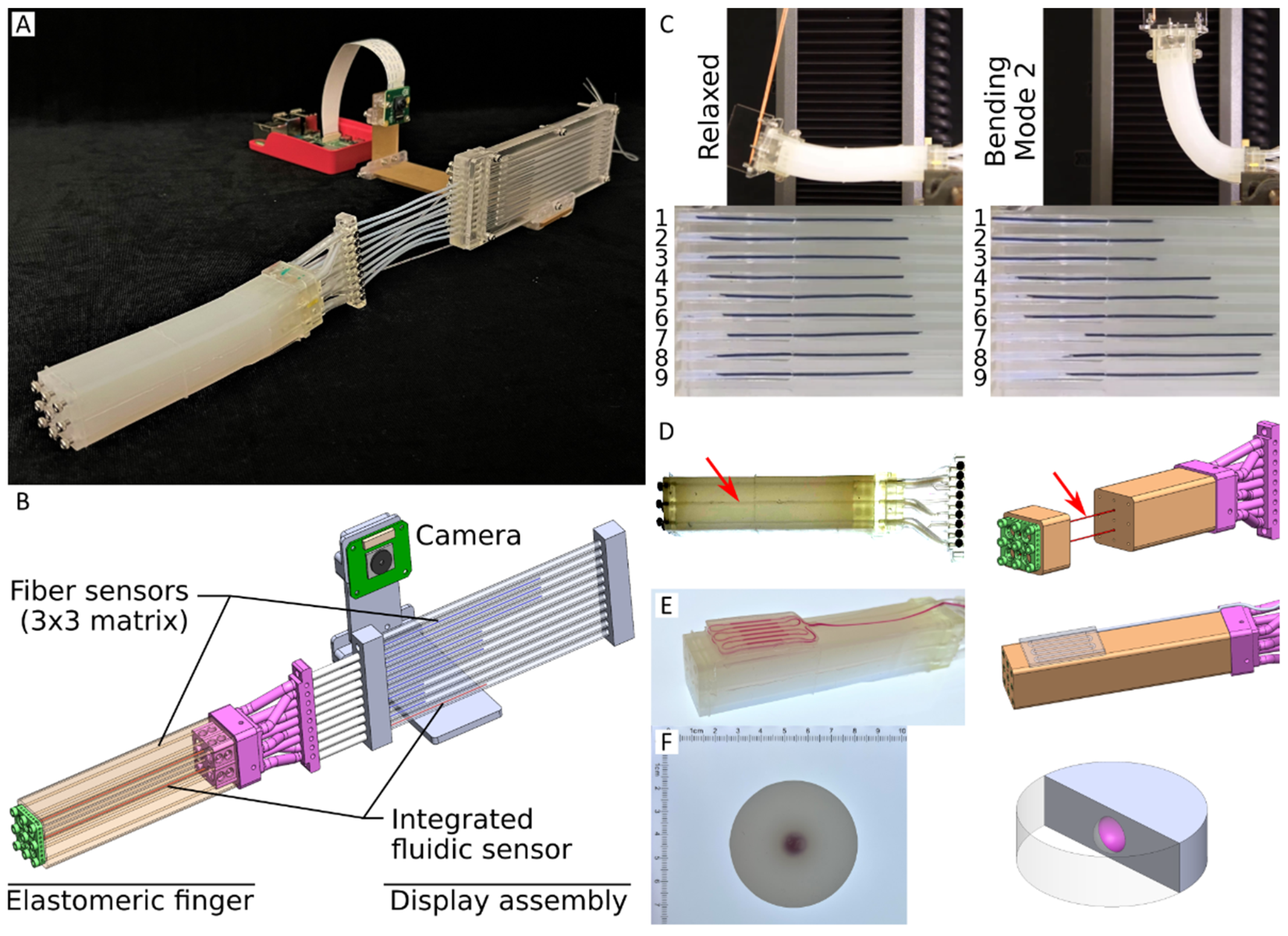

Fiber-based deformation sensor: (Since the elastomeric finger is fabricated as one device, the integrated microfluidic pressure sensor will be described in this section) Similar in concept to many soft robots, we fabricate our square column-shaped soft sensor (elastomeric finger) using multiple molding steps. The fabrication process is summarized in

Figure 3A, with a detailed description in

Appendix C. The assembly is fabricated using three molding steps and a final integration step. Mold 1. We used three plastic bars (diameter 0.9 mm) to create the center cable chamber and two microfluidic chambers. The matrix material of the finger is a readily available elastomer, Ecoflex 00-30 (Smooth-On, Inc., Macungie, PA, USA), cast in molds printed on a 3D printer (Form 3, Formlabs, Somerville, MA, USA). Mold 2. Retaining the center plastic bar in the mold, we demolded the two other plastic bars. We used silicone tubing (inner diameter 0.5 mm, outer diameter 1 mm) to connect the microfluidic chambers at the top holes and extend the bottom holes. Then, we attached the top carrier to the center plastic bar and aligned it with the other eight plastic bars in the second mold. The top carrier embedded in the soft sensor provides a surface to fix the cables. Mold 3. We demolded the soft sensor from the second mold, keeping all the plastic bars and the two silicone tubes inside the sensor, and then aligned them to the base holder. After alignment, we secured the sensor in the final mold and connected the finger to the solid base holder once the elastomer cured. Integration. We inserted the high-strength fiber cables (monofilament nylon thread, diameter 0.5 mm) into the soft sensor chambers, fixed them using screws on the top carrier, and injected the colored liquid into the microfluidic chambers.

Microfluidic pressure sensor: The design of the integrated microfluidic pressure sensor is described above, in the fiber-based deformation sensor section, because it must be fabricated concurrently into one integrated unit. We present the integrated pressure sensor in the finger motif; it can, however, be designed into most actuator systems that use a matrix of molded elastomer. While this integrated sensor provides a useful measure of the overall pressure on the soft finger, we developed a surface-mount microfluidic pressure sensor to expand sensing capabilities (

Figure 3B). Similar in form to several existing surface-mount sensors, our technique uses the displacement of liquid rather than change in resistance in an ionogel [

8,

15] or liquid metal [

18]. This surface-mount pressure sensor is similar in concept to a microfluidic embodiment of the Skinflow work by Hauser, Rossiter, et al. [

38]. This surface mount sensor can be fabricated from elastomers of various durometers in different thicknesses to modulate sensitivity, and it can be mounted (singly or in groups) at various locations along the elastomeric finger or other actuators. Fluid displacement data can be interpreted in the same camera frame as the fiber-based actuator described above, thus expanding the sensing modes possible with this overall vision-based system.

Color cell pressure sensor: The final sensor technology presented here derives its inspiration from the chromatophores used by many cephalopods and some other animals to change their color and appearance. Chromatophore cells filled with pigment appear as small dark dots. To change perceived color, radial muscle fibers stretch the chromatophore cell from roughly spherical to a wide-thin disk shape of the same volume. Thus, when viewed from an axis normal to the disk-plane, the appearance changes from a small, dark dot in a near-transparent matrix to a larger colored disk. An array of these chromatophores in various colors allows the animal to present a variety of appearances. While cephalopods use their chromatophore cells to actively modulate their appearance, we invert this technique, using passive cells as sensors. Fabricated into an elastomeric matrix, external pressure causes these spherical cells to deform into disks in a plane normal to the applied force. When viewed from an axis normal to the disk plane, the diameter of the disk increases with applied force.
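The constant-volume argument can be made concrete with a simple idealization, offered here as an assumption rather than as the model used in this work: treat the undeformed cell as a sphere of diameter $d_0$ and the compressed cell as an oblate spheroid of equatorial diameter $D$ and thickness $t$ along the loading direction. Equating the two volumes gives

$$\frac{\pi d_0^3}{6} = \frac{\pi D^2 t}{6} \quad\Longrightarrow\quad D = d_0\sqrt{\frac{d_0}{t}}$$

so the diameter seen by the camera grows as the cell is flattened ($t < d_0$). Relating $t$ to the applied force would additionally require the stiffness of the surrounding elastomer, which is why the sensor is characterized experimentally.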

2.2. Vision Algorithms

An algorithm was designed to process two different possible image stream inputs: a real-time camera stream, or a previously recorded video. For the real-time processing, we used a video stream from a Raspberry Pi Camera Module 2, with the constraint of the camera being aligned such that the painted filaments are approximately parallel to the horizontal axis. The videos recorded on a separate device were filmed with the same constraint. To address alignment issues across multiple runs, boundaries are digitally positioned around each of the channels with the filaments in the camera frame (current frame for live stream, first frame for recorded videos) before beginning the algorithm.

The OpenCV Python library for image processing is used to facilitate detection in each frame. Each frame is first cropped to include only the boundaries and then converted to grayscale to accentuate differences between light and dark colors and to eliminate possible noise from reflection. Every pixel value within the frame is then scaled up to further accentuate the difference between the white background and the black filaments. The Canny edge detector is then used to determine the edges of the filaments, and the probabilistic Hough line transform (`HoughLinesP` in OpenCV) returns the start and endpoint pixel coordinates of each line edge. The algorithm then iterates over each detected line, and the endpoint furthest to the right within each boundary is recorded as a pixel location in a CSV file. Further details are presented in

Appendix B.

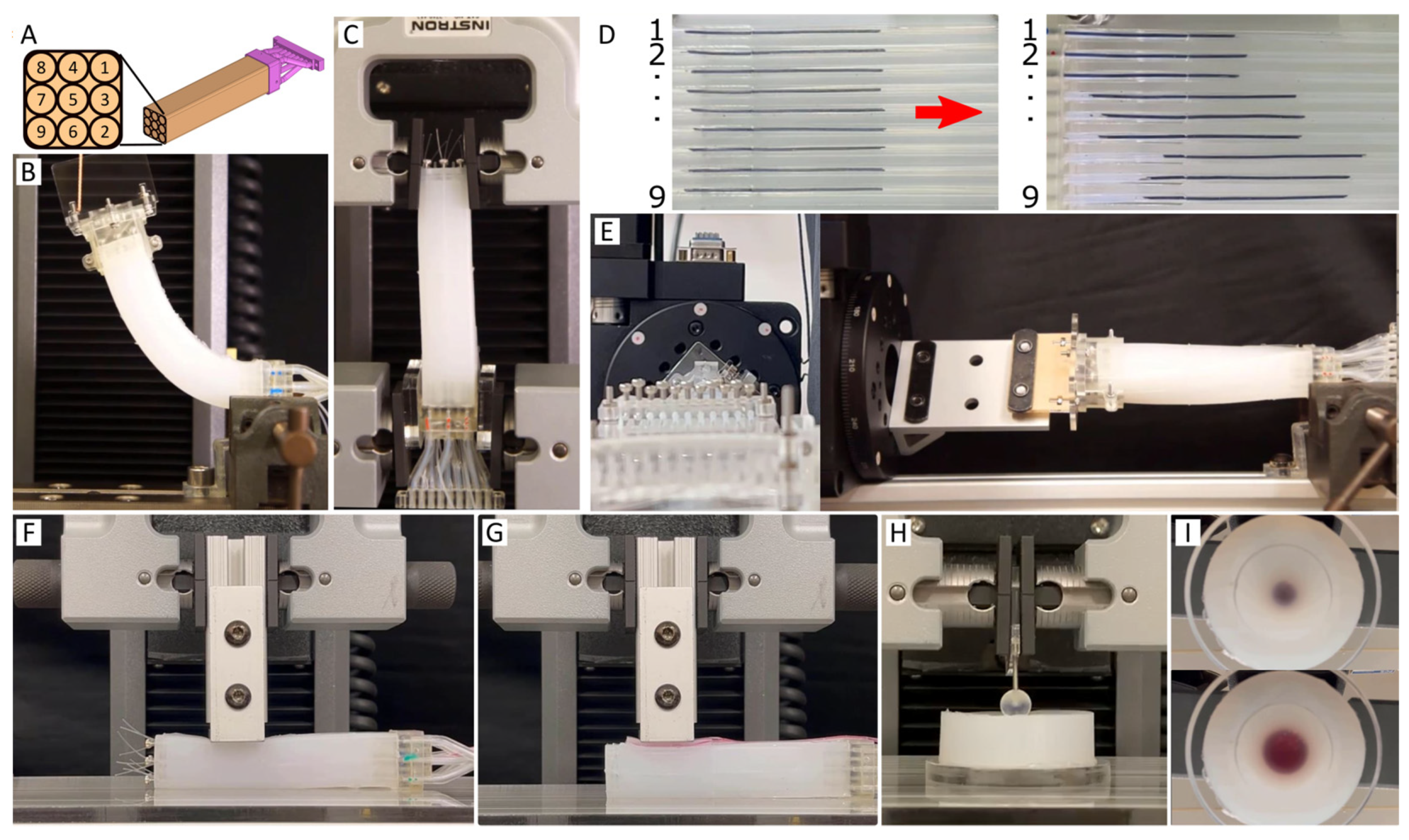

2.3. Sensor Characterization

We evaluated the elastomeric finger containing fiber-based displacement sensors and fluid-based pressure sensor in each actuation mode individually, with the understanding that mixed-mode sensing (elongation and twist combined, or bending along a non-primary axis) will be the goal of future development using the real-time vision algorithms described in

Section 2.2. For the fiber-based sensor, separate characterization fixtures were employed for each mode of evaluation (bending, elongation, twist) as shown in

Figure 4A, each mounted to an Instron 5943 tensile tester (Instron Co., Norwood, MA, USA). The same apparatus was used for bending 1 and bending 2, offset by 90° as illustrated in

Figure 4A–E. Integrated microfluidic pressure sensor characterization (

Figure 4F) and surface mount microfluidic pressure sensor characterization (

Figure 4G) were performed on the same fixture, and chromatophore-inspired sensor characterization (

Figure 4H,I) was performed on a separate fixture. Each test was performed four times to monitor repeatability.

4. Discussion

We presented vision-based methods of sensing deformation and pressure in soft robots, each including only passive components inside the soft robot. First, we presented a fiber-based deformation sensor wherein local material displacement in a soft robot was transmitted to a remote display assembly and tracked by a digital camera. Next, we presented two fluidic sensors, wherein pressure in a soft robot displaces liquid inside a microfluidic channel, and that displacement is transmitted back to the aforementioned display assembly. We presented an integrated microfluidic pressure sensor, by which the overall pressure state inside the body of a soft robot is tracked. Next, we presented a surface-mount pressure sensor to track contacts locally on the surface of a soft robot. Finally, we presented a color-cell pressure sensor. With this sensor, we flip the idea of a chromatophore (with which cephalopods actively stretch color cells from spheres into disks to modulate appearance for camouflage and other applications). In our application, the passive spherical color cell is embedded in an elastomeric matrix. When an external force is applied to the elastomer, the color cell is compressed along the direction of the force and expands radially in the plane normal to it. We characterized the radial expansion vs. applied force for one sample configuration.

We presented an elastomeric finger with nine embedded fiber deformation sensors, one integrated pressure sensor, and one surface-mounted pressure sensor. We characterized the fiber sensors in two orthogonal directions of bending, twist about the finger’s primary axis, and extension. All modes of deformation followed the responses expected from mechanics of materials and beam theory. The integrated microfluidic pressure sensor demonstrated a highly repeatable response to externally applied pressure, with no saturation detected at 7 N externally applied force. The surface-mounted pressure sensor (to sense contact locally) sensed much smaller applied forces (0.05–0.3 N) but saturated when as little as 2 N force was applied. As a contact sensor, early detection is more useful than high saturation levels. These encouraging results on a single elastomeric finger will provide a foundation upon which sensorized actuators will be developed based on actuator designs from our previous work [

15,

39].

While the very simple sensor designs presented here have value individually, the key contribution of this work is that the sensors are fundamentally designed to be used in groups. Intended to be designed into a soft robot at the system level, a properly configured array of these deformation and pressure sensors can give state awareness far beyond that of individual sensors. Most sensors used in soft robots (and many sensors in general) vary in resistance or capacitance in response to a change in a physical parameter such as length, bend angle, or contact pressure. Each sensor requires wiring, electronic circuitry, and a dedicated input to a data acquisition system before the resulting signal is sent to a computer. Five sensors require five times the infrastructure. With our presented method, a digital camera records the movement of markers on fiber sensors and colored liquid in microfluidic channels. Thus, dozens of markers and fluid channels can be monitored almost as easily as one. Other camera-based soft robot state-estimation systems exist, but they primarily record the pose of the robot directly, thus requiring specific lighting conditions and unobstructed line-of-sight access to all parts of the robot.

The elastomeric finger we presented used nine fiber sensors to determine its pose, and two fluidic sensors to determine overall and local pressure states. By configuring fibers in a 3 × 3 matrix, we used the theories put forth in classical Mechanics of Materials (See

Appendix A) to determine pose during states of bending in both primary planes, twist about the primary axis, and elongation along the primary axis. While the presented work was on a finger designed specifically to illustrate adherence to classical mechanics of materials theory, this state estimation could be applied to a range of soft actuators and soft robots in general. As stated above, we plan to use this technique in an actuator design similar to our previous soft finger [

15,

39] with a roughly square cross-section. These fiber and fluidic sensors could be used in many soft robots with actuators having rectangular, round, or trapezoidal cross-sections, with sensors placed according to beam theory for that cross-section. Because of their innate under-actuation and deformability, soft robots require far more sensors than traditional robots to define their pose with reasonable accuracy. One can readily imagine a soft robot requiring nine sensors (3 × 3 matrix) for each actuator to estimate its pose. Thus, a three-fingered gripper would require 27 sensors, a simple quadruped would require 36, and a more complex robot would require many more. The circuitry and wiring required for this many discrete electrical sensors would quickly become burdensome. With our method, passive sensors are all routed back to one central display assembly and recorded by one digital camera. While we present 11 sensors in the display assembly, this number was chosen as it was the number required to characterize the soft finger (nine deformation and two pressure sensors). With our method, any upgrading (to increase sampling frequency or resolution) would be confined to the camera system, whereas upgrading dozens of electrical sensors would be a sizeable task. With our method, many fibers could be routed back to one remote display assembly, where a single digital camera could track the motion of all markers in a controlled environment, optimally lit for contrast and marker tracking.