Edge Network Optimization Based on AI Techniques: A Survey

Abstract

1. Introduction

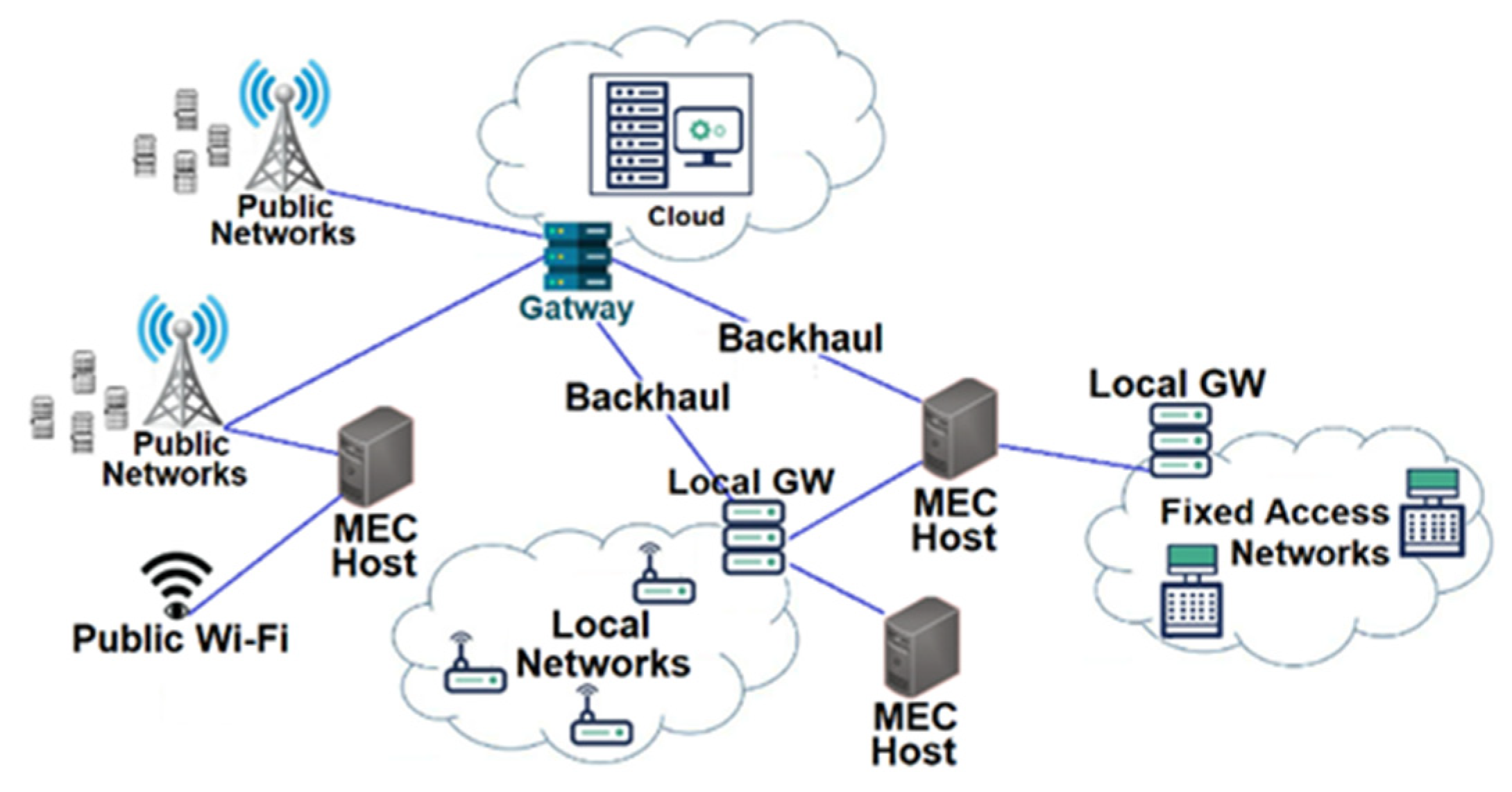

2. Network Concept

2.1. Cloud-Based Network

2.2. Edge-Based Network

- Network bandwidth is insufficient or too unreliable to send data to the cloud.

- There are security and privacy concerns about sending information over public networks or storing it in the cloud. With edge computation, data are stored locally.

- Some applications require fast data sampling or must calculate results with minimal delay.

- Cloud computing power is almost unlimited. Any tool can be used at any time for analysis.

- In some applications, environmental constraints raise the cost of edge computing and make cloud computing more cost-effective.

- The dataset may be large. The large number of applications in the cloud and the availability of other data can help applications start self-learning, which can lead to better results.

- Results may need to be widely distributed and viewed across different operating systems. The cloud space can be accessed from several points and from several devices.

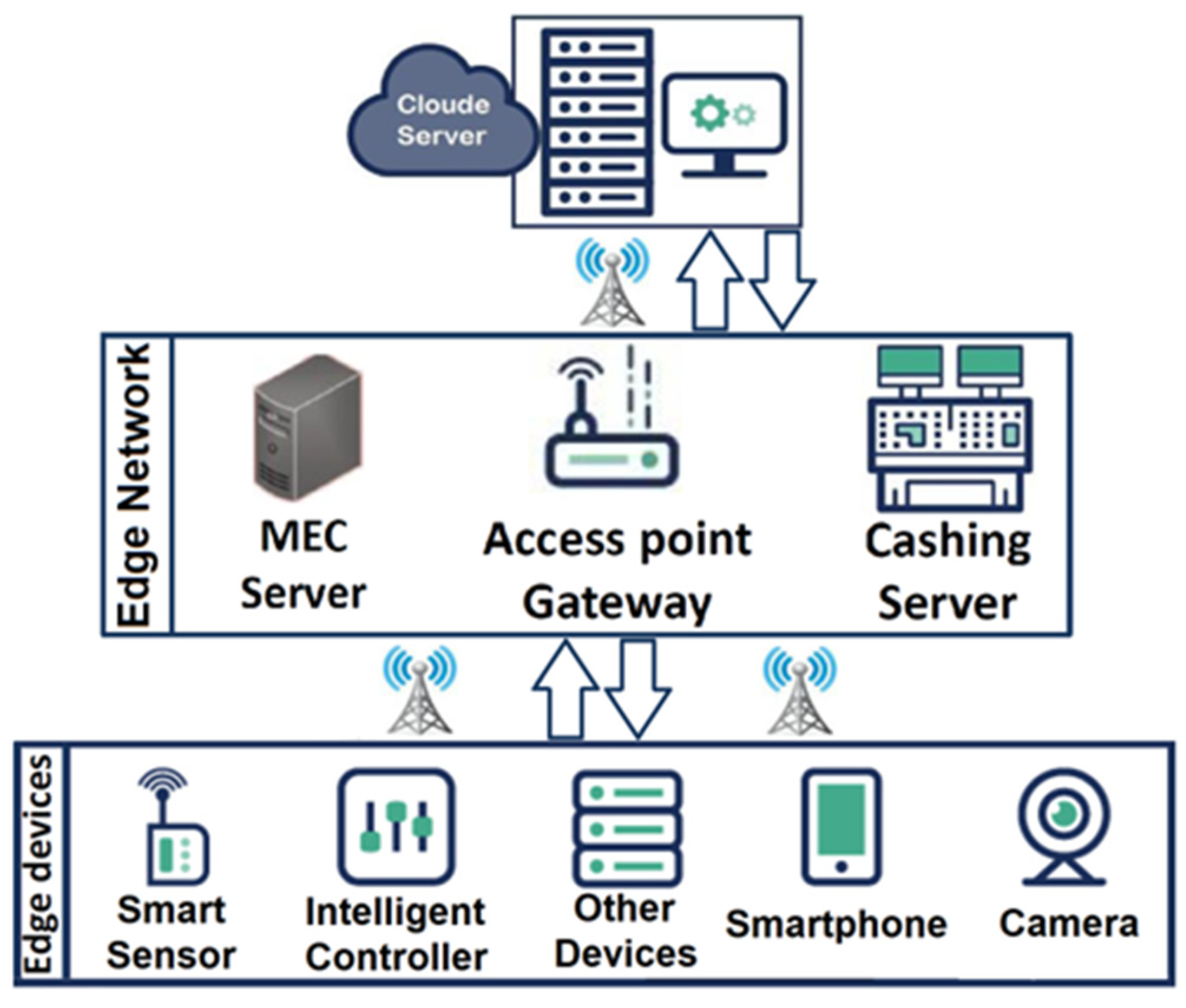

2.3. Edge-Based IoT Network

- Improved performance: IoT performance improves because larger volumes of data from more IoT devices can be collected quickly and with minimal delay.

- Greater compliance: Making IoT devices comply with existing standards and protocols is complex. Network edge infrastructure can perform this adaptation, which makes it much easier to integrate IoT devices.

- Data privacy and security: Edge technology distributes data, computation, and execution across a wide range of devices and data centers, making it difficult to disable the entire network. A major concern is that IoT edge processing devices can become a gateway for cyberattacks, in which malware or other network attacks enter through a weak point. Although this danger is real, the distributed nature of the edge processing structure makes it easier to implement security protocols that quarantine infected parts without disabling the entire network. Because most data are processed on local devices instead of being transferred to a central database, edge processing reduces the amount of data that can be compromised. Eavesdropping on data in transit remains possible, but even if a device is attacked, only locally collected information is exposed [20].

- Reduced operating costs: When computation and processing for IoT are performed at the network edge, as shown in Figure 2, users do not incur extra costs for transferring data to a cloud service and processing it there. Edge technology is not only a way to collect data for transfer to the cloud system; it also processes, analyzes, and acts on the data collected at the edge within milliseconds, because the distance between the IoT devices and the data processing location is reduced. There are many edge-based IoT applications, such as agricultural applications, wearables, smart homes, energy applications, healthcare applications, transportation applications, industrial automation, surveillance cameras, and smart grids. All of these IoT applications require data to be processed and analyzed with minimal delay.
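
As a rough illustration of why a shorter distance lowers delay, the sketch below estimates round-trip propagation delay only. The signal speed in optical fibre (about 2 × 10^8 m/s, roughly two-thirds of the speed of light in vacuum) is a standard approximation; the two distances are hypothetical values chosen for illustration and are not taken from the survey. Queuing, transmission, and processing delays are ignored.

```python
# Approximate signal speed in optical fibre (about two-thirds of c).
FIBER_SPEED_M_PER_S = 2.0e8


def round_trip_propagation_ms(distance_km: float) -> float:
    """Round-trip propagation delay in milliseconds over a fibre link."""
    one_way_seconds = distance_km * 1000.0 / FIBER_SPEED_M_PER_S
    return 2.0 * one_way_seconds * 1000.0


# Hypothetical distances (assumptions for illustration only).
EDGE_NODE_KM = 1.0        # nearby edge server
CLOUD_REGION_KM = 1500.0  # distant cloud data center

if __name__ == "__main__":
    for name, km in [("edge", EDGE_NODE_KM), ("cloud", CLOUD_REGION_KM)]:
        print(f"{name}: {round_trip_propagation_ms(km):.3f} ms round trip")
```

Propagation is only one component of end-to-end delay, so the gap measured in a real deployment depends on the network path and the load on each server.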

3. Optimization Concept

3.1. Machine Learning

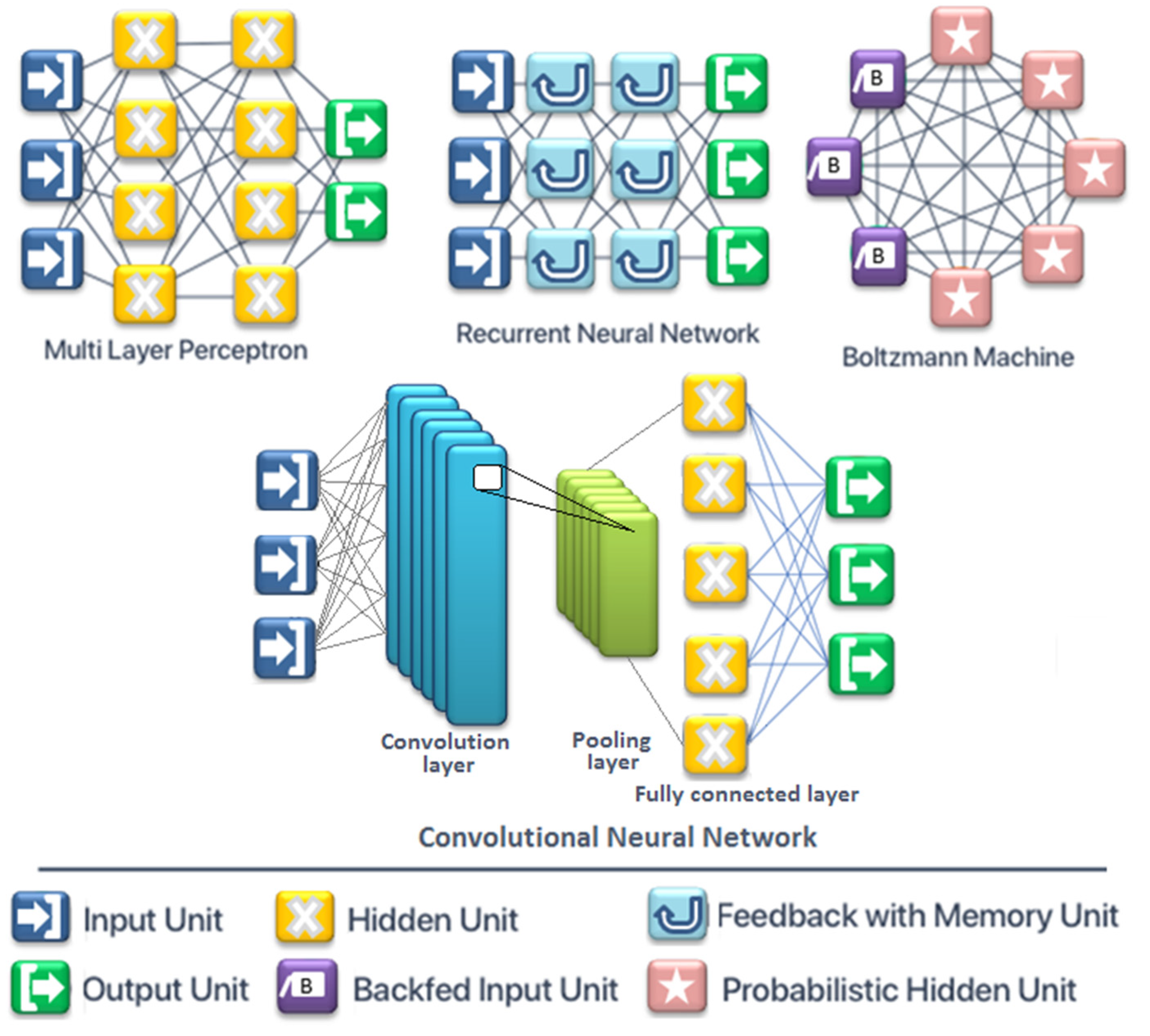

3.2. Artificial Neural Network

3.3. Federated Learning

3.4. Reinforcement Learning

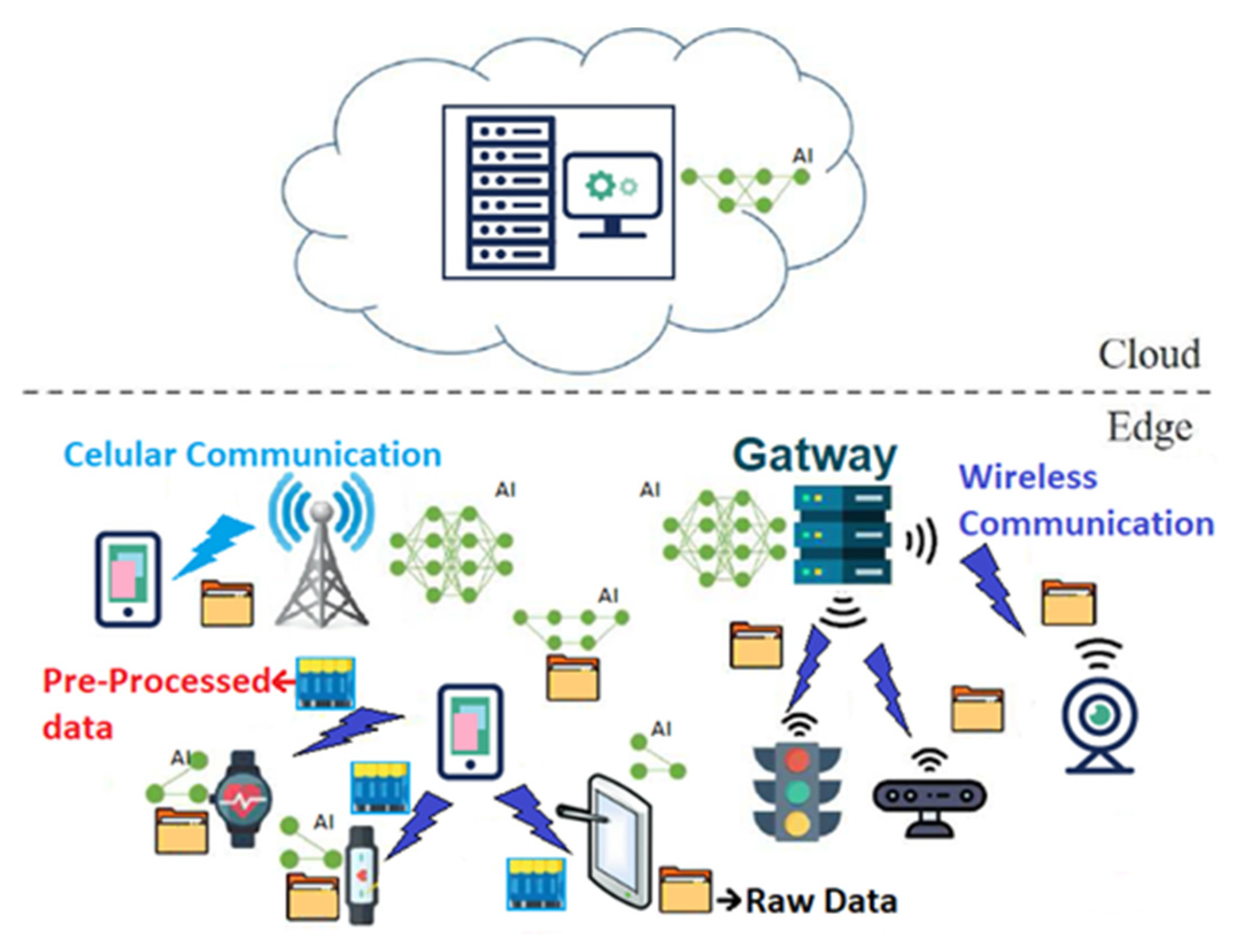

4. Artificial Intelligence at the Network Edge

- On each device, local model training is repeated several times using the local dataset.

- All local models are aggregated on one server into a global model.

- The models on all devices are synchronized with this global model.
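
The three steps above can be sketched as a small federated averaging loop. This is a minimal illustration, not the method of any surveyed paper: the one-dimensional linear model, the synthetic noise-free data, the learning rate, and all function names (`local_train`, `aggregate`, `federated_averaging`, `make_device_data`) are assumptions made for the example. Aggregation here is a dataset-size-weighted average in the style of FedAvg.

```python
import random


def local_train(weights, data, lr=0.1, epochs=5):
    """Step 1: repeat local training several times on one device's data.

    The model is a 1-D linear regressor y = w * x + b trained with SGD.
    """
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return (w, b)


def aggregate(local_models, sizes):
    """Step 2: the server averages local models, weighted by dataset size."""
    total = sum(sizes)
    w = sum(m[0] * n for m, n in zip(local_models, sizes)) / total
    b = sum(m[1] * n for m, n in zip(local_models, sizes)) / total
    return (w, b)


def federated_averaging(device_data, rounds=20):
    """Run several rounds of local training followed by aggregation."""
    global_model = (0.0, 0.0)
    sizes = [len(d) for d in device_data]
    for _ in range(rounds):
        # Step 3 (synchronization): every device starts the round from
        # the current global model before training locally.
        local_models = [local_train(global_model, d) for d in device_data]
        global_model = aggregate(local_models, sizes)
    return global_model


def make_device_data(n, seed):
    """Hypothetical local dataset: noise-free samples of y = 2x + 1."""
    rng = random.Random(seed)
    return [(x, 2.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(n))]


# Three simulated devices with different amounts of local data.
devices = [make_device_data(n, seed) for seed, n in enumerate([20, 30, 50])]
w, b = federated_averaging(devices)

if __name__ == "__main__":
    print(f"global model: w={w:.4f}, b={b:.4f}")
```

Only model parameters travel between the devices and the server in this sketch; the raw local samples never leave `local_train`, which is the property that makes the approach attractive for privacy-sensitive edge deployments.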

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Debauche, O.; Mahmoudi, S.; Mahmoudi, S.A.; Manneback, P.; Lebeau, F. A new edge architecture for ai-iot services deployment. Procedia Comput. Sci. 2020, 175, 10–19.

- Murshed, M.G.; Murphy, C.; Hou, D.; Khan, N.; Ananthanarayanan, G.; Hussain, F. Machine learning at the network edge: A survey. arXiv 2019, arXiv:1908.00080.

- Yu, W.; Liang, F.; He, X.; Hatcher, W.G.; Lu, C.; Lin, J.; Yang, X. A survey on the edge computing for the Internet of Things. IEEE Access 2017, 6, 6900–6919.

- Chang, Z.; Liu, S.; Xiong, X.; Cai, Z.; Tu, G. A Survey of Recent Advances in Edge-Computing-Powered Artificial Intelligence of Things. IEEE Internet Things J. 2021, 8, 13849–13875.

- Ling, L.; Xiaozhen, M.; Yulan, H. CDN cloud: A novel scheme for combining CDN and cloud computing. In Proceedings of the 2nd International Conference on Measurement, Information and Control, Harbin, China, 16–18 August 2013; pp. 16–18.

- Lin, C.F.; Leu, M.C.; Chang, C.W.; Yuan, S.M. The study and methods for cloud based CDN. In Proceedings of the International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery, Beijing, China, 10–12 October 2011; pp. 469–475.

- Rahman, M.; Iqbal, S.; Gao, J. Load balancer as a service in cloud computing. In Proceedings of the IEEE 8th International Symposium on Service Oriented System Engineering, Oxford, UK, 7–11 April 2014.

- Feng, T.; Bi, J.; Hu, H.; Cao, H. Networking as a service: A cloud-based network architecture. J. Netw. 2011, 6, 1084.

- Wu, J.; Ping, L.; Ge, X.; Wang, Y.; Fu, J. Cloud storage as the infrastructure of cloud computing. In Proceedings of the 2010 International Conference on Intelligent Computing and Cognitive Informatics, Kuala Lumpur, Malaysia, 22–23 June 2010; pp. 380–383.

- Lu, G.; Zeng, W.H. Cloud computing survey. In Applied Mechanics and Materials; Trans Tech Publications Ltd.: Bäch, Switzerland, 2014; Volume 530, pp. 650–661.

- Moghe, U.; Lakkadwala, P.; Mishra, D.K. Cloud computing: Survey of different utilization techniques. In Proceedings of the 2012 CSI Sixth International Conference on Software Engineering (CONSEG), Madhya Pradesh, India, 5–7 September 2012; pp. 1–4.

- Zhao, Y.; Wang, W.; Li, Y.; Meixner, C.C.; Tornatore, M.; Zhang, J. Edge computing and networking: A survey on infrastructures and applications. IEEE Access 2019, 7, 101213–101230.

- Shi, W.; Cao, J.; Zhang, Q.; Li, Y.; Xu, L. Edge computing: Vision and challenges. IEEE Internet Things J. 2016, 3, 637–646.

- Sonmez, C.; Ozgovde, A.; Ersoy, C. Edgecloudsim: An environment for performance evaluation of edge computing systems. Trans. Emerg. Telecommun. Technol. 2018, 29, e3493.

- Rajavel, R.; Ravichandran, S.K.; Harimoorthy, K.; Nagappan, P.; Gobichettipalayam, K.R. IoT-based smart healthcare video surveillance system using edge computing. J. Ambient. Intell. Humaniz. Comput. 2021, 1–3. Available online: https://link.springer.com/article/10.1007/s12652-021-03157-1 (accessed on 16 November 2021).

- Dillon, T.; Wu, C.; Chang, E. Cloud computing: Issues and challenges. In Proceedings of the 2010 24th IEEE International Conference on Advanced Information Networking and Applications, Perth, Australia, 20–23 April 2010; pp. 27–33.

- Avram, M.G. Advantages and challenges of adopting cloud computing from an enterprise perspective. Procedia Technol. 2014, 12, 529–534.

- Čolaković, M.; Hadžialić, M. Internet of Things (IoT): A review of enabling technologies, challenges, and open research issues. Comput. Netw. 2018, 144, 17–39.

- Stuurman, K.; Kamara, I. IoT Standardization-The Approach in the Field of Data Protection as a Model for Ensuring Compliance of IoT Applications? In Proceedings of the IEEE 4th International Conference on Future Internet of Things and Cloud Workshops (FiCloudW), Vienna, Austria, 20–24 August 2016; pp. 22–24.

- Sha, K.; Yang, T.A.; Wei, W.; Davari, S. A survey of edge computing-based designs for iot security. Digit. Commun. Netw. 2020, 6, 195–202.

- El Naqa, I.; Murphy, M.J. What is machine learning? In Machine Learning in Radiation Oncology; Springer: Cham, Switzerland, 2015; pp. 3–11.

- Amutha, J.; Sharma, S.; Sharma, S.K. Strategies based on various aspects of clustering in wireless sensor networks using classical, optimization and machine learning techniques: Review, taxonomy, research findings, challenges and future directions. Comput. Sci. Rev. 2021, 40, 100376.

- Yao, X. Evolving artificial neural networks. Proc. IEEE 1999, 87, 1423–1447.

- Yang, Q.; Liu, Y.; Cheng, Y.; Kang, Y.; Chen, T.; Yu, H. Federated learning. Synth. Lect. Artif. Intell. Mach. Learn. 2019, 13, 1–207.

- Hu, K.; Li, Y.; Xia, M.; Wu, J.; Lu, M.; Zhang, S.; Weng, L. Federated Learning: A Distributed Shared Machine Learning Method. Complexity 2021, 8261663.

- Zhu, G.; Wang, Y.; Huang, K. Broadband analog aggregation for low-latency federated edge learning. IEEE Trans. Wirel. Commun. 2019, 19, 491–506.

- Lin, F.P.C.; Brinton, C.G.; Michelusi, N. Federated Learning with Communication Delay in Edge Networks. arXiv 2020, arXiv:2008.09323.

- Saputra, Y.M.; Hoang, D.T.; Nguyen, D.N.; Dutkiewicz, E.; Niyato, D.; Kim, D.I. Distributed deep learning at the edge: A novel proactive and cooperative caching framework for mobile edge networks. IEEE Wirel. Commun. Lett. 2019, 8, 1220–1223.

- Chang, Z.; Lei, L.; Zhou, Z.; Mao, S.; Ristaniemi, T. Learn to cache: Machine learning for network edge caching in the big data era. IEEE Wirel. Commun. 2018, 25, 28–35.

- Zhu, H.; Cao, Y.; Wang, W.; Jiang, T.; Jin, S. Deep reinforcement learning for mobile edge caching: Review, new features, and open issues. IEEE Netw. 2018, 32, 50–57.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction. Robotica 1999, 17, 229–235.

- Li, J.; Gao, H.; Lv, T.; Lu, Y. Deep reinforcement learning based computation offloading and resource allocation for MEC. In Proceedings of the 2018 IEEE Wireless Communications and Networking Conference (WCNC), Barcelona, Spain, 15–18 April 2018; pp. 1–6.

- Zhang, W.; Zhang, Z.; Zeadally, S.; Chao, H.C.; Leung, V.C. MASM: A multiple-algorithm service model for energy-delay optimization in edge artificial intelligence. IEEE Trans. Ind. Inform. 2019, 15, 4216–4224.

- Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-edge ai: Intelligentizing mobile edge computing, caching and communication by federated learning. IEEE Netw. 2019, 33, 156–165.

- Wang, X.; Wang, C.; Li, X.; Leung, V.C.M.; Taleb, T. Federated deep reinforcement learning for internet of things with decentralized cooperative edge caching. IEEE Internet Things J. 2020, 7, 9441–9455.

- Ren, J.; Wang, H.; Hou, T.; Zheng, S.; Tang, C. Federated learning-based computation offloading optimization in edge computing-supported internet of things. IEEE Access 2019, 7, 69194–69201.

- Luo, B.; Li, X.; Wang, S.; Huang, J.; Tassiulas, L. Cost-Effective Federated Learning in Mobile Edge Networks. arXiv 2021, arXiv:2109.05411.

- Zhou, Z.; Yang, S.; Pu, L.; Yu, S. CEFL: Online admission control, data scheduling, and accuracy tuning for cost-efficient federated learning across edge nodes. IEEE Internet Things J. 2020, 7, 9341–9356.

- Wei, Y.; Wang, Z.; Guo, D.; Yu, F.R. Deep Q-learning based computation offloading strategy for mobile edge computing. Comput. Mater. Contin. 2019, 59, 89–104.

- Huang, S.A.; Yang, C.H. A hardware-efficient ADMM-based SVM training algorithm for edge computing. arXiv 2019, arXiv:1907.09916.

- Agarap, A.F. An architecture combining convolutional neural network (CNN) and support vector machine (SVM) for image classification. arXiv 2017, arXiv:1712.03541.

- Nikouei, S.Y.; Chen, Y.; Song, S.; Xu, R.; Choi, B.Y.; Faughnan, T. Smart surveillance as an edge network service: From harr-cascade, svm to a lightweight cnn. In Proceedings of the 2018 IEEE 4th International Conference on Collaboration and Internet Computing (CIC), Philadelphia, PA, USA, 18–20 October 2018; pp. 256–265.

- Gong, C.; Lin, F.; Gong, X.; Lu, Y. Intelligent cooperative edge computing in internet of things. IEEE Internet Things J. 2020, 7, 9372–9382.

- Zhou, C.; Liu, Q.; Zeng, R. Novel defense schemes for artificial intelligence deployed in edge computing environment. Wirel. Commun. Mob. Comput. 2020, 8832697.

- Xiao, L.; Wan, X.; Dai, C.; Du, X.; Chen, X.; Guizani, M. Security in mobile edge caching with reinforcement learning. IEEE Wirel. Commun. 2018, 25, 116–122.

- Kaviani, S.; Sohn, I. Influence of random topology in artificial neural networks: A Survey. ICT Express 2020, 6, 145–150.

| Algorithm | Network Structure | Metrics | Dataset | Details | Ref. |
|---|---|---|---|---|---|
| FL | Edge network | Delay | MNIST | The accuracy of the BAA and OFDMA schemes is the same. | [27] |
| FL | Edge network | Delay | NIST | If α ∈ (0, 1], the convergence rate of FedDelAvg is the same as that of FedAvg. | [28] |
| DNN | Mobile edge network | Delay, cache hit rate | MovieLens 1M dataset | Using DL raises privacy concerns. | [29] |
| DNN | Mobile edge network | User satisfaction, storage capability, energy efficiency, delay | Alexanderplatz | Increasing the training set size from 500 to 5000 raises the prediction accuracy by more than 40%. | [30] |
| DQN | Mobile edge caching | QoE, cache hit rate | MovieLens | The improvement in offloaded traffic with the A3C method is about twice that of the baseline method. | [31] |
| QL and DQN | Mobile edge computing | Energy efficiency, delay | N/A | When the MEC server capacity exceeds 8 GHz, the sum cost of all algorithms except full offloading is constant. | [32] |
| Tide ebb | Edge network | Energy efficiency, overhead, QoS, delay | N/A | This algorithm optimizes the workload assignment weights (WAWs) in addition to the joint computing talent of virtual machines. | [33] |
| DQN and DDQN | Mobile edge computing, caching | Cache hit rate, utility | N/A | FedAvg is used to address the non-IID and unbalanced-data problems in FL. | [34] |
| DDQN | IoT | Cache hit rate, average delay, convergence | MSN dataset from Xender | FADE has low efficiency in reducing delay. | [35] |
| DDQN | IoT | Training complexity | N/A | - | [36] |
| FL | Mobile edge network | Energy efficiency, overhead, accuracy, delay | MNIST, EMNIST | The algorithm is a sampling-based control algorithm. | [37] |
| DNN | IoT | Energy efficiency, overhead, queue congestion, accuracy, delay | CIFAR-10, MFLOPS | Lyapunov optimization is used. | [38] |
| QL and DNN | Mobile edge computing | Energy efficiency, overhead, delay | N/A | λ m(t) is important to a delay-sensitive user; λ m(e) is important to a user in a low-battery state. | [39] |
| SVM | Edge devices | Accuracy, energy efficiency, delay | CHB-MIT Scalp Epilepsy, Wisconsin Breast Cancer | The dimension of the kernel matrix is reduced by the Nyström method. | [40] |
| SVM and CNN | Edge network | Energy efficiency, overhead, accuracy, delay | VOC12, VOC07 | The proposed L-CNN algorithm enforces security and privacy policies. | [42] |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pooyandeh, M.; Sohn, I. Edge Network Optimization Based on AI Techniques: A Survey. Electronics 2021, 10, 2830. https://doi.org/10.3390/electronics10222830